Lecture Notes for Section 7.3


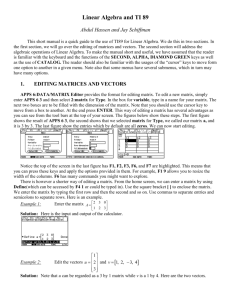

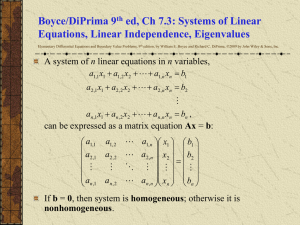

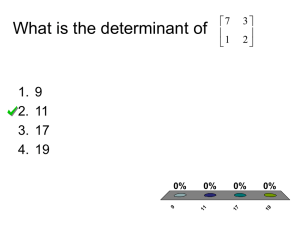

ODE Lecture Notes Section 7.3

Section 7.3: Linear Algebraic Equations; Linear Independence, Eigenvalues, Eigenvectors

Big Idea: x

Big Skill: x

Systems of Linear Algebraic Equations

A system of n linear equations in n variables can be written in matrix form as follows:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1\\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2\\
&\;\;\vdots\\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n
\end{aligned}
\qquad\Longleftrightarrow\qquad
\begin{pmatrix}
a_{11} & a_{12} & \cdots & a_{1n}\\
a_{21} & a_{22} & \cdots & a_{2n}\\
\vdots & \vdots & \ddots & \vdots\\
a_{n1} & a_{n2} & \cdots & a_{nn}
\end{pmatrix}
\begin{pmatrix} x_1\\ x_2\\ \vdots\\ x_n \end{pmatrix}
=
\begin{pmatrix} b_1\\ b_2\\ \vdots\\ b_n \end{pmatrix},
\qquad\text{i.e.,}\quad A\mathbf{x} = \mathbf{B}.
$$

If $\mathbf{B} = \mathbf{0}$, then the system is homogeneous; otherwise, it is nonhomogeneous.

If $A$ is nonsingular (i.e., $\det(A) \neq 0$), then $A^{-1}$ exists, and the unique solution to the system is

$$\mathbf{x} = A^{-1}\mathbf{B}.$$

A picture for the 3 × 3 system

$$
\begin{aligned}
x + y + z &= -2\\
x + 2y - 3z &= 12\\
-2x + 2y - z &= 9
\end{aligned}
$$

shows three planes meeting in a single point. Solution: (−1, 2, −3).

If $A$ is singular, then either there are no solutions, or there are an infinite number of solutions. Example pictures for 3 × 3 systems are:

Three parallel planes: no solution. The system is $z = 0$, $z = 2$, $z = 4$.
Note: Inconsistent systems yield false equations (like 0 = 2 or 0 = 4) when you try to solve them.

[Figure: three planes that intersect pairwise in three parallel lines; no solution.]
Note: Inconsistent systems yield a false equation (like 0 = −6) when you try to solve them.

[Figure: all three planes intersect along the same line; infinite number of solutions.]
Note: This type of dependent system yields one equation of 0 = 0 after row operations.

All three planes are the same: infinite number of solutions. The system is $x + y + z = 1$, $2x + 2y + 2z = 2$, $3x + 3y + 3z = 3$.
Note: This type of dependent system yields two equations of 0 = 0 after row operations.

If $A$ is singular, then the homogeneous system $A\mathbf{x} = \mathbf{0}$ has infinitely many solutions (in addition to the trivial solution $\mathbf{x} = \mathbf{0}$).

If $A$ is singular, then the nonhomogeneous system $A\mathbf{x} = \mathbf{B}$ has infinitely many solutions when $(\mathbf{B}, \mathbf{y}) = 0$ for all vectors $\mathbf{y}$ satisfying $A^*\mathbf{y} = \mathbf{0}$ (recall that $A^*$ is the adjoint, i.e., the conjugate transpose, of $A$). If this condition fails, the system has no solution.
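A short numerical sketch of both cases, assuming NumPy is available. The helper names `null_space` and `solvable` are illustrative, not from the notes. `solvable` checks the stated condition directly: it builds an orthonormal basis for the solutions of $A^*\mathbf{y} = \mathbf{0}$ from the SVD and tests whether $\mathbf{B}$ is orthogonal to every basis vector, up to a floating-point tolerance (an assumption of this sketch). The nonsingular case uses the 3 × 3 system $x + y + z = -2$, $x + 2y - 3z = 12$, $-2x + 2y - z = 9$, and the singular case uses the three parallel planes.

```python
import numpy as np

def null_space(M, tol=1e-10):
    """Orthonormal basis (as columns) for the null space of M, computed via the SVD."""
    _, s, vh = np.linalg.svd(M)          # full_matrices=True by default
    rank = int(np.sum(s > tol))
    return vh[rank:].conj().T            # rows of vh past the rank span the null space

def solvable(A, B, tol=1e-10):
    """True if (B, y) = 0 for every y with A* y = 0, i.e. A x = B has a solution."""
    Y = null_space(A.conj().T, tol)      # A* is the conjugate transpose of A
    return bool(np.all(np.abs(Y.conj().T @ B) < tol))

# Nonsingular case: det(A) != 0, so the unique solution is x = A^{-1} B.
A = np.array([[1.0, 1.0, 1.0],
              [1.0, 2.0, -3.0],
              [-2.0, 2.0, -1.0]])
B = np.array([-2.0, 12.0, 9.0])
print(np.linalg.det(A))                  # about 17, so A is nonsingular
x = np.linalg.solve(A, B)                # solves A x = B without forming inv(A)
print(x)                                 # approximately [-1.  2. -3.]

# Singular case: the three parallel planes z = 0, z = 2, z = 4.
P = np.array([[0.0, 0.0, 1.0]] * 3)
print(solvable(P, np.array([0.0, 2.0, 4.0])))  # False: no solution
print(solvable(P, np.array([1.0, 1.0, 1.0])))  # True: z = 1 three times, x and y free
```

`np.linalg.solve` factors $A$ rather than computing $A^{-1}$ explicitly; that is the usual numerical practice even though the formula is written as $A^{-1}\mathbf{B}$.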
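When $A\mathbf{x} = \mathbf{B}$ has infinitely many solutions, they always take the form $\mathbf{x} = \mathbf{x}^{(0)} + \boldsymbol{\xi}$, where $\mathbf{x}^{(0)}$ is a particular solution of $A\mathbf{x} = \mathbf{B}$ and $\boldsymbol{\xi}$ is the general solution of the corresponding homogeneous system $A\boldsymbol{\xi} = \mathbf{0}$.

In practice, linear systems are solved by performing Gaussian elimination on the augmented matrix $(A \mid \mathbf{B})$.

Practice:

1. Solve $\begin{cases} x + y + z = 6\\ 3x + 2y + z = 5\\ x + 3y + 2z = 14 \end{cases}$ using an augmented matrix.

2. Solve $\begin{cases} x + 3z = 3\\ 3y - 4z = 5\\ 3x + 2y = 6 \end{cases}$ using an augmented matrix.

3. Solve $\begin{cases} 2x + 2y + 3z = 6\\ 4x + 3y + 2z = 0\\ 2x + 3y + 7z = 1 \end{cases}$ using an augmented matrix.

4. Solve $\begin{cases} x + y + z = 1\\ x + 2y - 3z = 4\\ 3x + 2y + 7z = 0 \end{cases}$ using an augmented matrix.

Linear Independence

A set of $k$ vectors $\mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(k)}$ is said to be linearly dependent if there exist $k$ complex numbers $c_1, \ldots, c_k$, not all zero, such that

$$c_1\mathbf{x}^{(1)} + \cdots + c_k\mathbf{x}^{(k)} = \mathbf{0}.$$

This term is used because if not all the constants are zero, then one of the vectors depends on one or more of the other vectors: for any $i$ with $c_i \neq 0$,

$$c_i\mathbf{x}^{(i)} = -\left(c_1\mathbf{x}^{(1)} + \cdots + c_{i-1}\mathbf{x}^{(i-1)} + c_{i+1}\mathbf{x}^{(i+1)} + \cdots + c_k\mathbf{x}^{(k)}\right),$$

so $\mathbf{x}^{(i)}$ can be written in terms of the other vectors.

Practice:

5. Show that the vectors
$$\mathbf{x}^{(1)} = \begin{pmatrix} 1\\ 0\\ 1 \end{pmatrix},\quad \mathbf{x}^{(2)} = \begin{pmatrix} 0\\ 1\\ 0 \end{pmatrix},\quad\text{and}\quad \mathbf{x}^{(3)} = \begin{pmatrix} 1\\ 1\\ 1 \end{pmatrix}$$
are linearly dependent.

On the other hand, if the only values of $c_1, \ldots, c_k$ that make $c_1\mathbf{x}^{(1)} + \cdots + c_k\mathbf{x}^{(k)} = \mathbf{0}$ true are $c_1 = c_2 = \cdots = c_k = 0$, then the vectors $\mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(k)}$ are said to be linearly independent.

The test for linear dependence or independence can be represented with matrix arithmetic. Consider $n$ vectors with $n$ components. Let $x_{ij}$ be the $i$th component of vector $\mathbf{x}^{(j)}$, and let $X = (x_{ij})$. Then

$$
c_1\mathbf{x}^{(1)} + \cdots + c_n\mathbf{x}^{(n)}
= \begin{pmatrix} x_{11}c_1 + \cdots + x_{1n}c_n\\ \vdots\\ x_{n1}c_1 + \cdots + x_{nn}c_n \end{pmatrix}
= \begin{pmatrix} x_{11} & \cdots & x_{1n}\\ \vdots & \ddots & \vdots\\ x_{n1} & \cdots & x_{nn} \end{pmatrix}
\begin{pmatrix} c_1\\ \vdots\\ c_n \end{pmatrix}
= X\mathbf{c} = \mathbf{0}.
$$

If $\det(X) \neq 0$, then the only solution is $\mathbf{c} = \mathbf{0}$, and thus the vectors are linearly independent. If $\det(X) = 0$, then there are nonzero values of $c_i$, and thus the vectors are linearly dependent.

Practice:

6. Determine the dependence or the independence of the vectors
$$\mathbf{x}^{(1)} = \begin{pmatrix} 1\\ 2\\ -1 \end{pmatrix},\quad \mathbf{x}^{(2)} = \begin{pmatrix} 2\\ 1\\ 3 \end{pmatrix},\quad\text{and}\quad \mathbf{x}^{(3)} = \begin{pmatrix} -4\\ 1\\ -11 \end{pmatrix}.$$

Note: Frequently, the columns of a matrix $A$ are thought of as vectors.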
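Gaussian elimination on the augmented matrix, and the determinant test for independence, can be sketched in plain Python with exact `Fraction` arithmetic, so that "is this entry zero?" is never distorted by rounding. The functions `rref`, `classify`, and `det` are hypothetical helpers written for illustration. `classify` reads the reduced matrix the way the plane pictures suggest: a pivot in the $\mathbf{B}$ column means a false equation (0 = 1), and a missing pivot for some variable means free variables and infinitely many solutions. The examples are the 3 × 3 system with solution (−1, 2, −3), the three parallel planes, and the vectors of Practice 5.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan elimination in exact arithmetic; returns (reduced rows, pivot columns)."""
    M = [[Fraction(v) for v in row] for row in rows]
    n_rows, n_cols = len(M), len(M[0])
    pivots, r = [], 0
    for c in range(n_cols):
        # look for a nonzero entry in column c at or below row r
        p = next((i for i in range(r, n_rows) if M[i][c] != 0), None)
        if p is None:
            continue                                   # no pivot in this column
        M[r], M[p] = M[p], M[r]                        # swap the pivot row into place
        pivot = M[r][c]
        M[r] = [v / pivot for v in M[r]]               # scale the pivot to 1
        for i in range(n_rows):                        # clear column c in every other row
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
        if r == n_rows:
            break
    return M, pivots

def classify(aug):
    """Classify the augmented matrix (A | B) as 'unique', 'none', or 'infinite'."""
    R, pivots = rref(aug)
    n_vars = len(aug[0]) - 1
    if n_vars in pivots:              # some row reduced to 0 = 1: inconsistent
        return "none", R
    if len(pivots) == n_vars:         # a pivot for every variable
        return "unique", R
    return "infinite", R              # consistent, with free variables

def det(rows):
    """Determinant by reduction to upper-triangular form, tracking row swaps."""
    M = [[Fraction(v) for v in row] for row in rows]
    n, d = len(M), Fraction(1)
    for c in range(n):
        p = next((i for i in range(c, n) if M[i][c] != 0), None)
        if p is None:
            return Fraction(0)        # no pivot in this column: det is 0
        if p != c:
            M[c], M[p] = M[p], M[c]
            d = -d                    # each row swap flips the sign
        d *= M[c][c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[c])]
    return d

kind, R = classify([[1, 1, 1, -2], [1, 2, -3, 12], [-2, 2, -1, 9]])
print(kind, [str(row[-1]) for row in R])                       # unique ['-1', '2', '-3']
print(classify([[0, 0, 1, 0], [0, 0, 1, 2], [0, 0, 1, 4]])[0])  # none
# Practice 5: the columns of X are x(1), x(2), x(3)
print(det([[1, 0, 1], [0, 1, 1], [1, 0, 1]]))                   # 0, so the vectors are dependent
```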
The columns of an $n \times n$ matrix $A$ are linearly independent iff $\det(A) \neq 0$. If $C = AB$, where $A$ and $B$ are $n \times n$, it happens to be true that $\det(C) = \det(A)\det(B)$. Thus, if the columns of $A$ and $B$ are linearly independent, then so are the columns of $C$.

Eigenvalues and Eigenvectors

The equation $A\mathbf{x} = \mathbf{y}$ is a linear transformation that maps a given vector $\mathbf{x}$ onto a new vector $\mathbf{y}$. Special vectors that map onto multiples of themselves are very important in many applications, because those vectors tend to correspond to "preferred modes" of the behavior that the transformation represents. Such (nonzero) vectors are called eigenvectors ("eigen" is German for "own" or "proper," so these are "proper" vectors), and the multiple for a given eigenvector is called its eigenvalue.

To find eigenvalues and eigenvectors, we start with the definition

$$A\mathbf{x} = \lambda\mathbf{x},$$

which can be written as

$$(A - \lambda I)\mathbf{x} = \mathbf{0},$$

which has nonzero solutions iff

$$\det(A - \lambda I) = 0.$$

The values of $\lambda$ that satisfy the above determinant equation are the eigenvalues, and those eigenvalues can then be plugged back into the defining equation $A\mathbf{x} = \lambda\mathbf{x}$ to find the eigenvectors. You will see that eigenvectors are only determined up to an arbitrary nonzero factor; choosing the factor is called normalizing the vector. The most common choice is the factor that gives the eigenvector a length of 1.

Practice:

7. Find the eigenvalues and eigenvectors of $A = \begin{pmatrix} 3 & -1\\ 4 & -2 \end{pmatrix}$.

8. Find the eigenvalues and eigenvectors of $A = \begin{pmatrix} 1 & 0 & 0\\ 2 & 1 & -2\\ 3 & 2 & 1 \end{pmatrix}$.

Notes: In these examples, you can see that finding the eigenvalues of an $n \times n$ matrix involves solving a polynomial equation of degree $n$, which means that an $n \times n$ matrix always has $n$ eigenvalues, counted with multiplicity and allowing complex values. Also, in these two examples, all the eigenvalues were distinct. However, that is not always the case. If a given eigenvalue appears $m$ times as a root of the polynomial equation, then that eigenvalue is said to have algebraic multiplicity $m$. Every eigenvalue has $q$ linearly independent eigenvectors, where $1 \le q \le m$.
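The two steps (characteristic polynomial, then eigenvectors) can be checked numerically with NumPy, assuming it is installed; `eigenpairs` is an illustrative helper name. Note that `np.poly` returns the coefficients of $\det(\lambda I - A)$, which differs from $\det(A - \lambda I)$ by the factor $(-1)^n$ and therefore has the same roots, and that `np.linalg.eig` returns eigenvectors already scaled to length 1, which matches the most common normalization. This can serve as a check on hand computations for Practice 7 and 8.

```python
import numpy as np

def eigenpairs(A):
    """Return (characteristic-polynomial coefficients, eigenvalues, unit eigenvectors)."""
    A = np.asarray(A, dtype=float)
    coeffs = np.poly(A)          # coefficients of det(lambda*I - A), highest power first
    lam, V = np.linalg.eig(A)    # column V[:, k] pairs with lam[k] and has length 1
    return coeffs, lam, V

# Practice 7
coeffs, lam, V = eigenpairs([[3, -1], [4, -2]])
print(coeffs)                    # lambda^2 - lambda - 2, approximately [ 1. -1. -2.]
print(np.roots(coeffs))          # its roots, 2 and -1 (order may vary)
for k in range(len(lam)):
    v = V[:, k]
    # rescale so the first entry is 1; compare with (1, 1) for 2 and (1, 4) for -1
    print(lam[k], v / v[0])

# Practice 8: one real eigenvalue and a complex-conjugate pair
_, lam8, V8 = eigenpairs([[1, 0, 0], [2, 1, -2], [3, 2, 1]])
print(sorted(lam8, key=lambda z: z.imag))   # approximately 1-2j, 1, 1+2j
```

Rescaling by `v[0]` only undoes the length-1 normalization so the output can be compared with hand-computed eigenvectors; any nonzero multiple is equally valid.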
The number $q$ of linearly independent eigenvectors belonging to an eigenvalue is called its geometric multiplicity. Thus, if each eigenvalue of $A$ is simple (has algebraic multiplicity $m = 1$), then each eigenvalue also has geometric multiplicity $q = 1$.

If $\lambda_1$ and $\lambda_2$ are distinct eigenvalues of a matrix $A$, then their corresponding eigenvectors are linearly independent. So, if all the eigenvalues of an $n \times n$ matrix are simple, then its $n$ eigenvectors are linearly independent. However, if there are repeated eigenvalues, then there may be fewer than $n$ linearly independent eigenvectors, which will pose complications later on when solving systems of differential equations (which we won't have time to get to…).

Practice:

9. Find the eigenvalues and eigenvectors of $A = \begin{pmatrix} 0 & 1 & 1\\ 1 & 0 & 1\\ 1 & 1 & 0 \end{pmatrix}$.

Notes: In this example, $A$ was symmetric ($A^T = A$), and we see that even though there was a repeated eigenvalue, there were still three linearly independent eigenvectors. Real symmetric matrices are a special case of Hermitian, or self-adjoint, matrices: $A^* = A$, i.e., $a_{ji} = \overline{a_{ij}}$. Hermitian matrices have the following properties:

All eigenvalues are real.
There are always $n$ linearly independent eigenvectors, regardless of multiplicities.
Eigenvectors corresponding to distinct eigenvalues are orthogonal.
If an eigenvalue has algebraic multiplicity $m$, then it is always possible to choose $m$ mutually orthogonal eigenvectors for it.
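The multiplicity statements can be checked numerically, assuming NumPy. `multiplicities` is a hypothetical helper: it counts computed eigenvalues within a tolerance of $\lambda$ for the algebraic multiplicity and uses $q = n - \operatorname{rank}(A - \lambda I)$, the dimension of the null space, for the geometric multiplicity. The tolerance `1e-6` is an arbitrary choice for this sketch; rank decisions in floating point always depend on such a threshold. The matrix `J` is a hypothetical example, not from the notes, of a repeated eigenvalue with only one independent eigenvector, shown for contrast with the symmetric matrix of Practice 9.

```python
import numpy as np

def multiplicities(A, lam, tol=1e-6):
    """Return (algebraic, geometric) multiplicity of eigenvalue lam; a numerical sketch."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    # algebraic: how many computed eigenvalues coincide with lam (up to tol)
    alg = int(np.sum(np.abs(np.linalg.eigvals(A) - lam) < tol))
    # geometric: dimension of the null space of A - lam*I, i.e. n - rank
    geo = n - int(np.linalg.matrix_rank(A - lam * np.eye(n), tol=tol))
    return alg, geo

# Practice 9: a real symmetric (hence Hermitian) matrix with a repeated eigenvalue
S = np.array([[0.0, 1.0, 1.0],
              [1.0, 0.0, 1.0],
              [1.0, 1.0, 0.0]])
w, Q = np.linalg.eigh(S)                  # eigh assumes Hermitian input; w is real, ascending
print(w)                                  # approximately [-1. -1.  2.]
print(np.allclose(Q.T @ Q, np.eye(3)))    # True: the eigenvectors returned are orthonormal
print(multiplicities(S, -1.0))            # (2, 2): repeated, yet two independent eigenvectors

# Hypothetical non-symmetric contrast: a repeated eigenvalue with one eigenvector
J = np.array([[1.0, 1.0],
              [0.0, 1.0]])
print(multiplicities(J, 1.0))             # (2, 1): fewer than n independent eigenvectors
```

`np.linalg.eigh` is used for `S` because it exploits the Hermitian structure and returns an orthonormal set of eigenvectors even for the repeated eigenvalue, as the listed properties guarantee.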