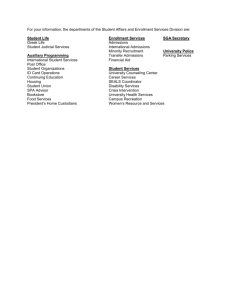

ADMISSIONS - Berkeley Law

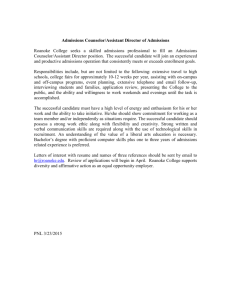
