classII_IV

Optimization – Class III

Simplex method

Example:

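$$\max\; z = 300x_1 + 250x_2$$

Subject to:

$$\begin{aligned}
2x_1 + x_2 &\le 40\\
x_1 + 3x_2 &\le 45\\
x_1 &\le 12\\
x_1,\, x_2 &\ge 0
\end{aligned}$$

After adding the slack variables $s_1, s_2, s_3$, the same problem reads:

$$\max\; z = 300x_1 + 250x_2 + 0s_1 + 0s_2 + 0s_3$$

Subject to:

$$\begin{aligned}
2x_1 + x_2 + s_1 &= 40\\
x_1 + 3x_2 + s_2 &= 45\\
x_1 + s_3 &= 12\\
x_1,\, x_2,\, s_1,\, s_2,\, s_3 &\ge 0
\end{aligned}$$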

Definitions:
o With the exception of the objective variable $\zeta$, the variables that appear on the left of a dictionary (i.e., the variables that we have been referring to as the dependent variables) are called basic variables.
o Those on the right (i.e., the independent variables) are called nonbasic variables.
o The solutions we have obtained by setting the nonbasic variables to zero are called basic feasible solutions.

General task
o Maximize:

$$\sum_{j=1}^{n} c_j x_j$$

o subject to:

$$\sum_{j=1}^{n} a_{ij} x_j \le b_i,\quad i = 1,\dots,m;\qquad x_j \ge 0,\quad j = 1,\dots,n$$

Definition
o Slack variables:

$$w_i = b_i - \sum_{j=1}^{n} a_{ij} x_j,\quad i = 1,\dots,m$$

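Other notation

$$(x_1, \dots, x_n, w_1, \dots, w_m) = (x_1, \dots, x_n, x_{n+1}, \dots, x_{n+m})$$

With this renaming, the initial dictionary is:

$$\begin{aligned}
\zeta &= \sum_{j=1}^{n} c_j x_j\\
x_{n+i} &= b_i - \sum_{j=1}^{n} a_{ij} x_j,\quad i = 1,\dots,m\\
x_i &\ge 0,\quad i = 1,\dots,n+m
\end{aligned}$$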

Each dictionary has $m$ basic variables and $n$ nonbasic variables. Let $B$ denote the collection of indices from $\{1, 2, \dots, n+m\}$ corresponding to the basic variables, and let $N$ denote the indices corresponding to the nonbasic variables.

Initially, we have $N = \{1, 2, \dots, n\}$ and $B = \{n+1, n+2, \dots, n+m\}$.

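In the following steps, we have (bars denote the coefficients of the current dictionary):

$$\begin{aligned}
\zeta &= \bar\zeta + \sum_{j \in N} \bar c_j x_j\\
x_i &= \bar b_i - \sum_{j \in N} \bar a_{ij} x_j,\quad i \in B
\end{aligned}$$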

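When pivoting, we must increase $\zeta$, so we choose the entering index $k$ with $\bar c_k$ maximal and positive (bars denote the coefficients of the current dictionary). We must also ensure that all $x_i$ stay nonnegative, so the leaving index is chosen such that

$$\bar b_i - \bar a_{ik} x_k \ge 0 \quad\text{for all } i \in B.$$

In other words:

$$x_k = \min_{i \in B:\ \bar a_{ik} > 0} \frac{\bar b_i}{\bar a_{ik}}
\qquad\text{or}\qquad
x_k = \left( \max_{i \in B} \frac{\bar a_{ik}}{\bar b_i} \right)^{-1}$$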

Identify the Incoming and Outgoing Variables

Incoming Variable
o An incoming variable is currently a nonbasic variable (its current value is zero) and will be changed to a basic variable (introduced into the solution).
o For maximization problems, the incoming variable is the variable with the largest positive value (coefficient) in the $c_j - z_j$ row.
o For minimization problems, the incoming variable is the variable with the most negative value in the $c_j - z_j$ row.

Outgoing Variable
o An outgoing variable is currently a basic variable that is the first to be reduced to zero when the value of the incoming variable is increased; it will be changed to a nonbasic variable (removed from the solution).
o To determine the outgoing variable, compute the ratio of the Quantity to the coefficient of the incoming variable for each basis row, considering only rows in which that coefficient is positive.
o For both maximization and minimization problems, the outgoing variable is the basic variable with the smallest ratio.
o The coefficient of the incoming variable in the outgoing row is called the pivot element.

Summary: The Simplex Procedure
o Step 1: Standardize the problem.
o Step 2: Generate an Initial Solution.
o Step 3: Test for Optimality. If the solution is optimal, go to Step 6. Otherwise, go to Step 4.
o Step 4: Identify the Incoming and Outgoing Variables.
o Step 5: Generate an Improved Solution. Go to Step 3.
o Step 6: Check for other Optimal Solutions.
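The procedure above can be sketched in a few lines of Python. This is only a minimal illustration, not a production solver: it assumes all right-hand sides are nonnegative (so the initial dictionary is feasible), uses the largest-coefficient rule for the incoming variable, and has no protection against cycling. The names `pivot` and `simplex` and the dictionary layout (row 0 is the objective, column 0 holds the constants) are choices made for this sketch.

```python
from fractions import Fraction


def pivot(D, basic, nonbasic, r, c):
    """Exchange the basic variable of row r with the nonbasic variable of column c."""
    p = D[r][c]
    new_r = [-v / p for v in D[r]]   # solve row r for the incoming variable
    new_r[c] = 1 / p                 # coefficient of the outgoing variable
    for i in range(len(D)):
        if i != r:
            f = D[i][c]
            D[i] = [D[i][j] + f * new_r[j] for j in range(len(new_r))]
            D[i][c] = f * new_r[c]
    D[r] = new_r
    basic[r - 1], nonbasic[c - 1] = nonbasic[c - 1], basic[r - 1]


def simplex(c, A, b):
    """Maximize c.x subject to A x <= b, x >= 0, assuming every b_i >= 0."""
    m, n = len(A), len(c)
    # Row 0 is the objective; row i reads w_i = b_i - sum_j a_ij x_j.
    D = [[Fraction(0)] + [Fraction(v) for v in c]]
    for i in range(m):
        D.append([Fraction(b[i])] + [Fraction(-a) for a in A[i]])
    nonbasic = list(range(n))          # indices 0..n-1: original variables
    basic = list(range(n, n + m))      # indices n..n+m-1: slack variables
    while True:
        # Incoming variable: largest positive objective coefficient.
        col = max(range(1, n + 1), key=lambda j: D[0][j])
        if D[0][col] <= 0:
            break                      # optimality test passed
        # Outgoing variable: smallest ratio among rows that decrease.
        rows = [i for i in range(1, m + 1) if D[i][col] < 0]
        if not rows:
            raise ValueError("problem is unbounded")
        row = min(rows, key=lambda i: D[i][0] / -D[i][col])
        pivot(D, basic, nonbasic, row, col)
    x = [Fraction(0)] * (n + m)
    for i, var in enumerate(basic):
        x[var] = D[i + 1][0]
    return D[0][0], x[:n]


# Data of the opening example: max 300 x1 + 250 x2.
value, x = simplex([300, 250], [[2, 1], [1, 3], [1, 0]], [40, 45, 12])
print(value, x)
```

On the data of the opening example this reaches the optimum $z = 6350$ at $(x_1, x_2) = (12, 11)$ after two pivots. Exact fractions are used so that the ratio test is not affected by rounding.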

Initialization

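If some $b_i$ is negative, the initial dictionary is not feasible. Using the notation

$$(x_1, \dots, x_n, w_1, \dots, w_m) = (x_1, \dots, x_n, x_{n+1}, \dots, x_{n+m}),$$

we replace the objective $\sum_{j=1}^{n} c_j x_j$ by $-x_0$, where $x_0$ is a new auxiliary variable, and first solve the auxiliary problem:

$$\begin{aligned}
\text{maximize}\quad & -x_0\\
\text{subject to}\quad & \sum_{j=1}^{n} a_{ij} x_j - x_0 \le b_i,\quad i = 1,\dots,m\\
& x_j \ge 0,\quad j = 0,\dots,n
\end{aligned}$$

The original problem is feasible if and only if the auxiliary problem has optimal value 0.

Example:

$$\begin{aligned}
\text{maximize}\quad & -2x_1 - x_2\\
\text{subject to}\quad & -x_1 + x_2 \le -1\\
& -x_1 - 2x_2 \le -2\\
& x_2 \le 1\\
& x_1,\, x_2 \ge 0.
\end{aligned}$$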

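Unboundedness

Example:

$$\begin{aligned}
\zeta &= 5 + x_3 - x_1\\
x_2 &= 5 + 2x_3 - 3x_1\\
x_4 &= 7 - 4x_1\\
x_5 &= x_1.
\end{aligned}$$

Here the entering variable is $x_3$, and no basic variable decreases as $x_3$ grows, so $\zeta$ can be made arbitrarily large.

If $\max_{i} \bar a_{ik} / \bar b_i$ is non-positive for the entering index $k$, the problem is unbounded.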
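The unboundedness check is just the ratio test failing to find a leaving row. A minimal sketch follows, assuming each dictionary row is written as $x_i = \text{constant}_i + \text{coef}_i \cdot x_k$ with all other nonbasic variables held at zero; the function name `ratio_test` and the sample numbers are illustrative.

```python
from fractions import Fraction


def ratio_test(constants, column):
    """Return the index of the leaving row, or None if the entering
    variable can grow without bound.

    Row i is read as x_i = constants[i] + column[i] * x_k, so only rows
    with a negative coefficient limit the increase of x_k.
    """
    best_limit, best_row = None, None
    for i, (const, coef) in enumerate(zip(constants, column)):
        if coef < 0:
            limit = Fraction(const) / -coef   # x_i hits zero at this value of x_k
            if best_limit is None or limit < best_limit:
                best_limit, best_row = limit, i
    return best_row


# Illustrative dictionary rows: x2 = 5 + 2 x3 - 3 x1, x4 = 7 - 4 x1, x5 = x1.
constants = [5, 7, 0]
print(ratio_test(constants, [2, 0, 0]))    # entering x3: no row limits it
print(ratio_test(constants, [-3, -4, 1]))  # entering x1: limits 5/3 and 7/4
```

With $x_3$ entering, no coefficient is negative, so the function returns `None` and the problem is unbounded. With $x_1$ entering (shown only for comparison), the first row gives the tightest limit.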

THEOREM 3.4. For an arbitrary linear program in standard form, the following statements are true:
o (1) If there is no optimal solution, then the problem is either infeasible or unbounded.
o (2) If a feasible solution exists, then a basic feasible solution exists (one in which the nonbasic variables are zero).
o (3) If an optimal solution exists, then a basic optimal solution exists.

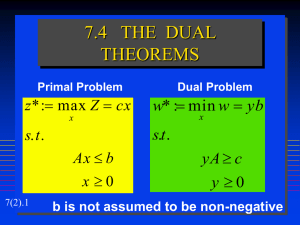

Dual problem

Example

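$$\begin{aligned}
\text{maximize}\quad & 4x_1 + x_2 + 3x_3\\
\text{subject to}\quad & x_1 + 4x_2 \le 1 \qquad (a)\\
& 3x_1 - x_2 + x_3 \le 3 \qquad (b)\\
& x_1,\, x_2,\, x_3 \ge 0
\end{aligned}$$

Every feasible solution gives a lower bound on the optimal value $\zeta^*$: $(x_1, x_2, x_3) = (1, 0, 0)$ gives $\zeta^* \ge 4$, and $(x_1, x_2, x_3) = (0, 0, 3)$ gives $\zeta^* \ge 9$.

For an upper bound, take $2 \cdot (a) + 3 \cdot (b)$:

$$11x_1 + 5x_2 + 3x_3 \le 11$$

Each coefficient on the left is at least the corresponding objective coefficient, so $\zeta^* \le 11$.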

Example continuation

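Multiply the first constraint by $y_1 \ge 0$ and the second by $y_2 \ge 0$, and add:

$$y_1 (x_1 + 4x_2) + y_2 (3x_1 - x_2 + x_3) \le y_1 + 3y_2$$

$$(y_1 + 3y_2)\,x_1 + (4y_1 - y_2)\,x_2 + y_2\,x_3 \le y_1 + 3y_2$$

If each coefficient on the left is at least the corresponding objective coefficient,

$$\begin{aligned}
y_1 + 3y_2 &\ge 4\\
4y_1 - y_2 &\ge 1\\
y_2 &\ge 3,
\end{aligned}$$

then

$$4x_1 + x_2 + 3x_3 \le y_1 + 3y_2.$$

The best upper bound is obtained from:

$$\begin{aligned}
\text{minimize}\quad & y_1 + 3y_2\\
\text{subject to}\quad & y_1 + 3y_2 \ge 4\\
& 4y_1 - y_2 \ge 1\\
& y_2 \ge 3\\
& y_1,\, y_2 \ge 0.
\end{aligned}$$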

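In general, the primal problem
o Maximize:

$$\sum_{j=1}^{n} c_j x_j$$

o subject to:

$$\sum_{j=1}^{n} a_{ij} x_j \le b_i,\quad i = 1,\dots,m;\qquad x_j \ge 0,\quad j = 1,\dots,n$$

Becomes:
o Minimize:

$$\sum_{i=1}^{m} b_i y_i$$

o subject to:

$$\sum_{i=1}^{m} y_i a_{ij} \ge c_j,\quad j = 1,\dots,n;\qquad y_i \ge 0,\quad i = 1,\dots,m$$

Written in standard (maximization) form, the dual is:
o $-$maximize:

$$\sum_{i=1}^{m} (-b_i)\, y_i$$

o subject to:

$$\sum_{i=1}^{m} y_i (-a_{ij}) \le (-c_j),\quad j = 1,\dots,n;\qquad y_i \ge 0,\quad i = 1,\dots,m$$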

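Weak Duality Theorem: If $(x_1, x_2, \dots, x_n)$ is feasible for the primal and $(y_1, y_2, \dots, y_m)$ is feasible for the dual, then

$$\sum_{j=1}^{n} c_j x_j \le \sum_{i=1}^{m} b_i y_i.$$

Proof

$$\sum_{j=1}^{n} c_j x_j
\le \sum_{j=1}^{n} \left( \sum_{i=1}^{m} y_i a_{ij} \right) x_j
= \sum_{i=1}^{m} \left( \sum_{j=1}^{n} a_{ij} x_j \right) y_i
\le \sum_{i=1}^{m} b_i y_i$$

The first inequality uses $x_j \ge 0$ and dual feasibility; the second uses $y_i \ge 0$ and primal feasibility.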

Strong Duality Theorem: If the primal problem has an optimal solution, $x^* = (x_1^*, x_2^*, \dots, x_n^*)$, then the dual also has an optimal solution, $y^* = (y_1^*, y_2^*, \dots, y_m^*)$, such that:

$$\sum_{j=1}^{n} c_j x_j^* = \sum_{i=1}^{m} b_i y_i^*$$
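Both theorems can be checked numerically on a small instance. The sketch below uses the data of the dual-problem example as read from this section (maximize $4x_1 + x_2 + 3x_3$ subject to $x_1 + 4x_2 \le 1$ and $3x_1 - x_2 + x_3 \le 3$); the helper names and the particular points tested are illustrative.

```python
from fractions import Fraction as F

# Primal: maximize c.x subject to A x <= b, x >= 0 (data assumed from the example).
c = [4, 1, 3]
A = [[1, 4, 0],
     [3, -1, 1]]
b = [1, 3]


def primal_feasible(x):
    return all(xj >= 0 for xj in x) and all(
        sum(a * xj for a, xj in zip(row, x)) <= bi for row, bi in zip(A, b))


def dual_feasible(y):
    return all(yi >= 0 for yi in y) and all(
        sum(y[i] * A[i][j] for i in range(len(A))) >= c[j] for j in range(len(c)))


def primal_value(x):
    return sum(cj * xj for cj, xj in zip(c, x))


def dual_value(y):
    return sum(bi * yi for bi, yi in zip(b, y))


x_feas = [0, 0, 3]               # a feasible but non-optimal primal point
x_star = [0, F(1, 4), F(13, 4)]  # candidate primal optimum
y_star = [1, 3]                  # candidate dual optimum

assert primal_feasible(x_feas) and primal_feasible(x_star) and dual_feasible(y_star)
print(primal_value(x_feas), "<=", dual_value(y_star))   # weak duality
print(primal_value(x_star), "==", dual_value(y_star))   # no duality gap
```

The feasible point $(0, 0, 3)$ has value 9, below the dual bound 10, while $x^* = (0, 1/4, 13/4)$ and $y^* = (1, 3)$ both give 10. By weak duality, equal values certify that both points are optimal.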

Note that the numbers in the dual dictionary are simply the negatives of the numbers in the primal dictionary, arranged with the rows and columns interchanged. For this to hold, the objective function must be included in the array form, as its first row.

That is, the dual dictionary is the negative transpose of the primal dictionary.

This property is preserved from one dictionary to the next. Let’s observe the effect of one pivot. To keep notations uncluttered, we shall consider only four generic entries in a table of coefficients: the pivot element, which we shall denote by $a$; one other element on the pivot element’s row, call it $b$; one other in its column, call it $c$; and a fourth element, denoted $d$, chosen to make these four entries into a rectangle.
o The pivot element gets replaced by its reciprocal;
o Elements in the pivot row get negated and divided by the pivot element;
o Elements in the pivot column get divided by the pivot element; and
o All other elements, such as $d$, get decreased by $bc/a$.
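The four update rules can be applied mechanically to a whole table, which makes it easy to confirm on an example that pivoting commutes with taking the negative transpose. The following is a small sketch; the table `M` is an arbitrary illustrative dictionary (first row is the objective, first column the constants), and the function names are not from the source.

```python
from fractions import Fraction


def pivot(M, r, c):
    """Apply the four pivot rules to table M with pivot element M[r][c]."""
    a = Fraction(M[r][c])
    rows, cols = len(M), len(M[0])
    # All other elements d are decreased by b*c/a.
    out = [[M[i][j] - M[i][c] * M[r][j] / a for j in range(cols)]
           for i in range(rows)]
    for j in range(cols):          # pivot row: negated and divided by a
        out[r][j] = -M[r][j] / a
    for i in range(rows):          # pivot column: divided by a
        out[i][c] = M[i][c] / a
    out[r][c] = 1 / a              # pivot element: reciprocal
    return out


def neg_transpose(M):
    return [[-M[i][j] for i in range(len(M))] for j in range(len(M[0]))]


M = [[0, -1, -1],
     [4, 2, 1],
     [-8, 2, -4],
     [-7, 1, -3]]

primal_then_dual = neg_transpose(pivot(M, 2, 1))
dual_then_primal = pivot(neg_transpose(M), 1, 2)   # same element, transposed position
print(primal_then_dual == dual_then_primal)
```

Pivoting the primal table at position $(r, c)$ and then taking the negative transpose gives the same table as pivoting the negative transpose at position $(c, r)$.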

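In the dual dictionary, the constant terms are the negatives of the objective coefficients of the primal dictionary.

Thus feasibility of the dual dictionary corresponds to the optimality condition of the primal dictionary.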

Duality theory is often useful in that it provides a certificate of optimality.

The strong duality theorem tells us that, whenever the primal problem has an optimal solution, the dual problem has one also and there is no duality gap. But what if the primal problem does not have an optimal solution? For example, suppose that it is unbounded. The unboundedness of the primal together with the weak duality theorem tells us immediately that the dual problem must be infeasible. Similarly, if the dual problem is unbounded, then the primal problem must be infeasible. It is natural to hope that these three cases are the only possibilities, because if they were we could then think of the strong duality theorem holding globally. That is, even if, say, the primal is unbounded, the fact that then the dual is infeasible is like saying that the primal and dual have a zero duality gap sitting out at $+\infty$. Similarly, an infeasible primal together with an unbounded dual could be viewed as a pair in which the gap is zero and sits at $-\infty$. But it turns out that there is a fourth possibility that sometimes occurs: it can happen that both the primal and the dual problems are infeasible. For example, consider the following problem.

Example

$$\begin{aligned}
\text{maximize}\quad & 2x_1 - x_2\\
\text{subject to}\quad & x_1 - x_2 \le 1\\
& -x_1 + x_2 \le -2\\
& x_1,\, x_2 \ge 0.
\end{aligned}$$

Complementary Slackness Theorem: Suppose that $x = (x_1, x_2, \dots, x_n)$ is primal feasible and that $y = (y_1, y_2, \dots, y_m)$ is dual feasible. Let $(w_1, w_2, \dots, w_m)$ denote the corresponding primal slack variables, and let $(z_1, z_2, \dots, z_n)$ denote the corresponding dual slack variables. Then $x$ and $y$ are optimal for their respective problems if and only if:

$$x_j z_j = 0,\ \text{for } j = 1,\dots,n; \qquad w_i y_i = 0,\ \text{for } i = 1,\dots,m$$

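Proof

$$\sum_{j=1}^{n} c_j x_j
\le \sum_{j=1}^{n} \left( \sum_{i=1}^{m} y_i a_{ij} \right) x_j
= \sum_{i=1}^{m} \left( \sum_{j=1}^{n} a_{ij} x_j \right) y_i
\le \sum_{i=1}^{m} b_i y_i$$

The first inequality is an equality if and only if, for every $j$, either

$$x_j = 0 \qquad\text{or}\qquad c_j = \sum_{i=1}^{m} y_i a_{ij}.$$

Since the dual slack variables are defined by

$$\sum_{i=1}^{m} y_i a_{ij} - z_j = c_j, \qquad\text{i.e.}\qquad z_j = \sum_{i=1}^{m} y_i a_{ij} - c_j \ge 0,$$

this condition is exactly $x_j z_j = 0$ for all $j$. In the same way, the second inequality is an equality if and only if $w_i y_i = 0$ for all $i$. By the duality theorems, $x$ and $y$ are optimal if and only if both inequalities hold with equality.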
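The complementary slackness conditions are straightforward to test for a given pair of feasible points. The sketch below again assumes the data of the dual-problem example (maximize $4x_1 + x_2 + 3x_3$ subject to $x_1 + 4x_2 \le 1$, $3x_1 - x_2 + x_3 \le 3$); the function names are illustrative.

```python
from fractions import Fraction as F

# Data assumed from the dual-problem example.
c = [4, 1, 3]
A = [[1, 4, 0],
     [3, -1, 1]]
b = [1, 3]


def primal_slack(x):
    """w_i = b_i - sum_j a_ij x_j"""
    return [bi - sum(a * xj for a, xj in zip(row, x)) for row, bi in zip(A, b)]


def dual_slack(y):
    """z_j = sum_i y_i a_ij - c_j"""
    return [sum(y[i] * A[i][j] for i in range(len(A))) - c[j]
            for j in range(len(c))]


def complementary(x, y):
    """True if x_j z_j = 0 for all j and w_i y_i = 0 for all i."""
    w, z = primal_slack(x), dual_slack(y)
    return (all(xj * zj == 0 for xj, zj in zip(x, z))
            and all(wi * yi == 0 for wi, yi in zip(w, y)))


y = [1, 3]
print(complementary([0, F(1, 4), F(13, 4)], y))  # optimal pair
print(complementary([0, 0, 3], y))               # feasible, but x is not optimal
```

For the optimal pair the dual slacks are $z = (6, 0, 0)$ and the primal slacks are $w = (0, 0)$, so every product vanishes. For the non-optimal point $(0, 0, 3)$, the first constraint has slack $w_1 = 1$ while $y_1 = 1$, and the condition fails.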

The Dual Simplex Method

One can actually apply the simplex method to the dual problem without ever writing down the dual problem or its dictionaries. Instead, the so-called dual simplex method is seen simply as a new way of picking the entering and leaving variables in a sequence of primal dictionaries.

Example:

$$\begin{aligned}
\zeta &= -x_1 - x_2\\
x_3 &= 4 + 2x_1 + x_2\\
x_4 &= -8 + 2x_1 - 4x_2\\
x_5 &= -7 + x_1 - 3x_2
\end{aligned}$$

Solve the dual, perform the corresponding pivots on the primal as well, and obtain an optimal solution.

First step:

Second step

If the dual is feasible, which means that all the coefficients of the nonbasic variables in the primal objective function are nonpositive, we proceed as follows.
o First we select the leaving variable by picking the basic variable whose constant term in the dictionary is the most negative (if there are none, then the current dictionary is optimal).
o Then we pick the entering variable by scanning across this row of the dictionary and comparing ratios of the coefficients in this row to the corresponding coefficients in the objective row, looking for the largest negated ratio, just as we did in the primal simplex method.
o Once the entering and leaving variables are identified, we pivot to the next dictionary and continue from there.
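These steps can be sketched directly on a primal dictionary. The code below is a minimal illustration under stated assumptions: rows are stored as $x_{\text{basic}} = \text{constant} + \sum \text{coef} \cdot x_{\text{nonbasic}}$, row 0 is the objective, and all objective coefficients are nonpositive at the start. The entering rule is written as a minimum over $-\bar c_j / \text{coef}$ for positive row coefficients, which is one equivalent way of stating the ratio comparison. The sample dictionary is illustrative.

```python
from fractions import Fraction


def pivot(D, r, c):
    """In-place pivot: the basic variable of row r leaves, column c enters."""
    p = D[r][c]
    new_r = [-v / p for v in D[r]]
    new_r[c] = 1 / p
    for i in range(len(D)):
        if i != r:
            f = D[i][c]
            D[i] = [D[i][j] + f * new_r[j] for j in range(len(new_r))]
            D[i][c] = f * new_r[c]
    D[r] = new_r


def dual_simplex(D):
    """Run dual simplex pivots; return the final dictionary and the pivots made."""
    D = [[Fraction(v) for v in row] for row in D]
    steps = []
    while True:
        # Leaving variable: most negative constant term.
        r = min(range(1, len(D)), key=lambda i: D[i][0])
        if D[r][0] >= 0:
            return D, steps            # primal feasible, hence optimal
        # Entering variable: only positive coefficients can raise x_r.
        cols = [j for j in range(1, len(D[0])) if D[r][j] > 0]
        if not cols:
            raise ValueError("primal problem is infeasible")
        c = min(cols, key=lambda j: -D[0][j] / D[r][j])
        steps.append((r, c))
        pivot(D, r, c)


# Illustrative dictionary: zeta = -x1 - x2, with three basic rows.
D0 = [[0, -1, -1],
      [4, 2, 1],
      [-8, 2, -4],
      [-7, 1, -3]]
final, steps = dual_simplex(D0)
print(final[0][0], steps)
```

On this dictionary two pivots are performed, at (row 2, column 1) and then (row 3, column 1), and the final objective value is $-7$ with all constant terms nonnegative.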

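If the dual is infeasible, it is enough to change the objective function temporarily: replace it with one whose coefficients are all nonpositive, run the dual simplex method until the dictionary is primal feasible, then restore the original objective and continue with the primal simplex method.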

Equalities in the constraints

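$$\text{maximize } c^T x \quad\text{subject to } Ax = b,\ x \ge 0$$

is equivalent to

$$\text{maximize } c^T x \quad\text{subject to } Ax \le b,\ Ax \ge b,\ x \ge 0$$

that is,

$$\text{maximize } c^T x \quad\text{subject to } Ax \le b,\ -Ax \le -b,\ x \ge 0.$$

Let's associate $y^+$ with the first set of constraints and $y^-$ with the second set. The dual is

$$\text{minimize } b^T y^+ - b^T y^- \quad\text{subject to } A^T y^+ - A^T y^- \ge c,\quad y^+,\, y^- \ge 0.$$

Setting $y = y^+ - y^-$:

$$\text{minimize } b^T y \quad\text{subject to } A^T y \ge c, \quad y \text{ unrestricted in sign.}$$

In other words, equality constraints in the primal lead to unconstrained (free) variables in the dual.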

Matrix notation

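$$\text{maximize } c^T x \quad\text{subject to } Ax = b,\ x \ge 0$$

where the slack variables are included in $x$:

$$A_{m \times (n+m)} =
\begin{bmatrix}
a_{11} & \cdots & a_{1n} & 1 & & \\
\vdots & & \vdots & & \ddots & \\
a_{m1} & \cdots & a_{mn} & & & 1
\end{bmatrix},\quad
b = \begin{bmatrix} b_1\\ \vdots\\ b_m \end{bmatrix},\quad
c = \begin{bmatrix} c_1\\ \vdots\\ c_n\\ 0\\ \vdots\\ 0 \end{bmatrix},\quad
x = \begin{bmatrix} x_1\\ \vdots\\ x_n\\ x_{n+1}\\ \vdots\\ x_{n+m} \end{bmatrix}
  = \begin{bmatrix} x_1\\ \vdots\\ x_n\\ w_1\\ \vdots\\ w_m \end{bmatrix}$$

After rearranging columns so that the nonbasic ones come first:

$$A = [\,N \;\; B\,],\qquad
x = \begin{bmatrix} x_N\\ x_B \end{bmatrix},\qquad
c = \begin{bmatrix} c_N\\ c_B \end{bmatrix}$$

$$Ax = N x_N + B x_B = b$$

$$c^T x = c_N^T x_N + c_B^T x_B$$

Solving the constraints for the basic variables:

$$x_B = B^{-1} b - B^{-1} N x_N$$

Substituting into the objective:

$$\begin{aligned}
\zeta = c^T x &= c_N^T x_N + c_B^T x_B\\
&= c_N^T x_N + c_B^T \left( B^{-1} b - B^{-1} N x_N \right)\\
&= c_B^T B^{-1} b - \left( (B^{-1} N)^T c_B - c_N \right)^T x_N
\end{aligned}$$

In the optimal solution:

$$x_N^* = 0,\qquad \zeta^* = c_B^T B^{-1} b,\qquad x_B^* = B^{-1} b$$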
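The matrix formulas can be evaluated directly for a given basis. The sketch below computes $x_B^* = B^{-1} b$, $\zeta^* = c_B^T B^{-1} b$ and the reduced quantities $(B^{-1}N)^T c_B - c_N$ using exact Gauss-Jordan elimination. The data are those of the opening example with slack columns appended, and the basis $\{x_1, x_2, s_1\}$ is an assumption chosen for illustration; `solve` and `basic_solution` are hypothetical helper names.

```python
from fractions import Fraction


def solve(B, rhs):
    """Solve B u = rhs by Gauss-Jordan elimination with exact fractions."""
    n = len(B)
    M = [[Fraction(v) for v in B[i]] + [Fraction(rhs[i])] for i in range(n)]
    for col in range(n):
        piv = next(i for i in range(col, n) if M[i][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for i in range(n):
            if i != col and M[i][col] != 0:
                f = M[i][col]
                M[i] = [vi - f * vc for vi, vc in zip(M[i], M[col])]
    return [M[i][n] for i in range(n)]


def basic_solution(A, b, c, basis):
    """Return (x_B, zeta, z_N) for the given list of basic column indices."""
    m = len(A)
    nonbasis = [j for j in range(len(c)) if j not in basis]
    B = [[A[i][j] for j in basis] for i in range(m)]
    x_B = solve(B, b)                                  # B^{-1} b
    zeta = sum(c[j] * v for j, v in zip(basis, x_B))   # c_B^T B^{-1} b
    z_N = []
    for j in nonbasis:
        col = solve(B, [A[i][j] for i in range(m)])    # column of B^{-1} N
        z_N.append(sum(c[k] * u for k, u in zip(basis, col)) - c[j])
    return x_B, zeta, z_N


# Opening example with slack columns s1, s2, s3 appended.
A = [[2, 1, 1, 0, 0],
     [1, 3, 0, 1, 0],
     [1, 0, 0, 0, 1]]
b = [40, 45, 12]
c = [300, 250, 0, 0, 0]
x_B, zeta, z_N = basic_solution(A, b, c, basis=[0, 1, 2])
print(x_B, zeta, z_N)
```

For this basis, $x_B^* = (12, 11, 5)$ and $\zeta^* = 6350$. The quantities $(B^{-1}N)^T c_B - c_N = (250/3,\ 650/3)$ are both nonnegative, so every objective coefficient in the dictionary is nonpositive and the basis is optimal.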

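Dual problem

$$\text{maximize } -\sum_{i=1}^{m} b_i y_i = -b^T y
\quad\text{subject to}\quad \sum_{i=1}^{m} y_i (-a_{ij}) \le (-c_j)$$

Adding dual slack variables and collecting all $n+m$ dual variables in one vector $z$ (so that $y_i = z_{n+i}$), the constraints read

$$A^T y - z = c,\qquad y,\, z \ge 0.$$

Splitting the rows according to the partition $A = [\,N \;\; B\,]$:

$$N^T y - z_N = c_N,\qquad B^T y - z_B = c_B$$

$$y = (B^T)^{-1} (c_B + z_B)$$

For comparison, the primal dictionary is

$$\begin{aligned}
\zeta &= c_B^T B^{-1} b - \left( (B^{-1} N)^T c_B - c_N \right)^T x_N\\
x_B &= B^{-1} b - B^{-1} N x_N
\end{aligned}$$

with

$$c_N - (B^{-1} N)^T c_B = [\bar c_j],\qquad B^{-1} b = [\bar b_i],\qquad B^{-1} N = [\bar a_{ij}],\qquad \bar\zeta = c_B^T B^{-1} b.$$

The dual dictionary, with objective $-\xi = -b^T y$, is

$$\begin{aligned}
-\xi &= -c_B^T B^{-1} b - (B^{-1} b)^T z_B\\
z_N &= (B^{-1} N)^T c_B - c_N + (B^{-1} N)^T z_B
\end{aligned}$$

In the optimal solution:

$$z_B^* = 0,\qquad -\xi^* = -c_B^T B^{-1} b,\qquad z_N^* = (B^{-1} N)^T c_B - c_N$$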

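Back to the primal problem, we get:

$$\begin{aligned}
\zeta &= \zeta^* - (z_N^*)^T x_N\\
x_B &= x_B^* - B^{-1} N x_N
\end{aligned}$$

And the dual is:

$$\begin{aligned}
-\xi &= -\zeta^* - (x_B^*)^T z_B\\
z_N &= z_N^* + (B^{-1} N)^T z_B.
\end{aligned}$$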

The (primal) simplex method can be described briefly as follows. The starting assumptions are that we are given
o A partition of the $n+m$ indices into a collection $B$ of $m$ basic indices and a collection $N$ of $n$ nonbasic ones, with the property that the basis matrix $B$ is invertible,
o An associated current primal solution $x_B^* \ge 0$ (and $x_N^* = 0$),
o An associated current dual solution $z_N^*$ (with $z_B^* = 0$).

The simplex method then produces a sequence of steps to “adjacent” bases such that the current value $\zeta^*$ of the objective function increases at each step (or, at least, would increase if the step size were positive), updating $x_B^*$ and $z_N^*$ along the way.

Two bases are said to be adjacent to each other if they differ in only one index. That is, given a basis $B$, an adjacent basis is determined by removing one basic index and replacing it with a nonbasic index.

Check for Optimality. If $z_N^* \ge 0$, stop.

Select Entering Variable. Pick an index $j \in N$ for which $z_j^* < 0$. Variable $x_j$ is the entering variable.

……

Problems in general form

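Maximize $c^T x$, subject to:

$$a \le Ax \le b;\qquad l \le x \le u$$

Define slack variables:

$$w = Ax;\qquad a \le w \le b;\qquad l \le x \le u$$

If setting every nonbasic variable to its lower bound gives a feasible dictionary, simply apply the simplex method, letting variables move from their lower bound toward their upper bound, until no further improvement is possible.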

Dual of general form

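Maximize $c^T x$, subject to:

$$Ax \le b,\quad -Ax \le -a;\qquad x \le u,\quad -x \le -l$$

Adding slack variables:

$$Ax + f = b,\quad -Ax + p = -a;\qquad x + t = u,\quad -x + g = -l;\qquad f,\, p,\, t,\, g \ge 0$$

The dual is:

Minimize:

$$b^T v - a^T q + u^T s - l^T h$$

Subject to:

$$A^T v - A^T q + s - h = c;\qquad v,\, q,\, s,\, h \ge 0$$

Complementary slackness:

$$f_i v_i = p_i q_i = 0,\qquad t_j s_j = g_j h_j = 0$$

The pairs $v, q$ and $s, h$ must be complementary, so in each pair only one can be non-zero. Otherwise we could reduce both by the same amount, which leaves the constraint unchanged and does not increase the objective function (since $a \le b$ and $l \le u$).

Writing $y = v - q$ and $z = h - s$, so that $y^+ = v$, $y^- = q$, $z^+ = h$, $z^- = s$:

Minimize

$$b^T y^+ - a^T y^- + u^T z^- - l^T z^+$$

Subject to:

$$A^T y - z = c$$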

Example:

Put all non-basic variables to zero and move in both positive and negative directions