6 RELATIVE FREQUENCIES AND EXCHANGEABILITY


2. LEARNING PROBABILITIES FROM RELATIVE FREQUENCIES

Roger M Cooke

ShortCourse on Expert Judgment

National Aerospace Institute

April 15-16, 2008

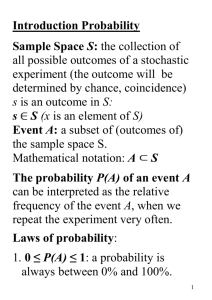

1. Introduction

We focus on learning from observed relative frequencies. The following notation will

be used throughout.

$1_1, \ldots, 1_n, \ldots$: indicator functions for the events $A_1, \ldots, A_n, \ldots$;

$\{0,1\}^n = 2^n$: the set of sequences of length $n$ containing only 0's and 1's.

For $Q \in 2^n$, $\#Q$ = the number of 1's in $Q$.

$w_i^{(n)}$: the probability that exactly $i$ of $A_1, \ldots, A_n$ occur.

$S_n = \{p \in \mathbb{R}^n \mid p_i \ge 0,\ \sum_i p_i = 1\}$

$\binom{n}{j} = n!/(j!\,(n-j)!)$

For any set $A$ (finite or infinite), $A!$ = the set of permutations of the elements of $A$.

2. The Frequentist Account

The frequentist interprets probability as limiting relative frequency. It is useful to

rehearse the frequentist account of learning from experience. The

weak law of large numbers reads:

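Let $X_i$, $i = 1, 2, \ldots$ be independent and identically distributed variables taking values in $\{0,1\}$, and let $P\{X_i = 1\} = p$. Then for all $\varepsilon > 0$,

$$P\left\{\left|\frac{1}{n}\sum_{i=1}^{n} X_i - p\right| > \varepsilon\right\} \to 0 \quad \text{as } n \to \infty.$$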


The frequentist learning model works as follows. Suppose we have a variable X

taking values in {0,1} and we wish to know the probability that X = 1. If we can find

a sequence of independent variables Xi with the same distribution as X, then we can

observe a large number n of these variables. The relative frequency of "1"'s in

X1,...Xn is then unlikely to differ greatly from the

probability that X = 1. Two features of this account are noteworthy:

1. Probability exists. The person reasoning as above believes that there is some

objective quantity "p".

2. p is initially unknown, but can be measured to arbitrary precision, in a

probabilistic sense, by observing relative frequencies in large, finite

sequences of observations.
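As a rough numerical illustration of the weak law quoted above, the following Python sketch computes the deviation probability exactly from the binomial distribution, without simulation. The function name `deviation_probability` and the values p = 0.3 and ε = 0.05 are assumptions chosen for illustration only; they do not come from the course material.

```python
from math import comb


def deviation_probability(n: int, p: float, eps: float) -> float:
    """Exact P{|S_n/n - p| > eps} when S_n is the sum of n i.i.d. 0/1 variables
    with P{X_i = 1} = p, i.e. S_n is Binomial(n, p)."""
    total = 0.0
    for k in range(n + 1):
        # Add the binomial probability of k ones when k/n lies outside the eps-band.
        if abs(k / n - p) > eps:
            total += comb(n, k) * p**k * (1 - p) ** (n - k)
    return total


if __name__ == "__main__":
    # Illustrative (assumed) parameters: p = 0.3, eps = 0.05.
    for n in (10, 100, 1000):
        print(n, deviation_probability(n, p=0.3, eps=0.05))
```

The printed probabilities shrink as n grows, which is what the weak law asserts. The sketch still presupposes that p exists and that the variables are independent, exactly the assumptions the frequentist account relies on.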

Note: Expressed in this way, we have to know that the variables Xi are independent

and identically distributed, which we could never know before measuring their

probabilities. This circularity in the naïve frequentist account is removed in more

sophisticated treatments, where probability is defined as limiting relative frequency

in a random sequence, and “random” is defined without reference to probability.

3. The Subjectivist Account

The subjectivist cannot accept this account of learning. "p" is not an objective

quantity, but a subjective degree of belief. It cannot be "observed" in the sense

implied by the frequentist account. It is a fact, nevertheless, that people attach

importance to relative frequencies. The subjectivist must offer an explanation of this

fact.

Expected Relative Frequency

Theorem 1: Let $p_i = p(A_i) = p(1_i = 1)$. Then the average of the probabilities $p_i$ equals the expected relative frequency of occurrence of $A_1, \ldots, A_n$, or

$$\frac{1}{n}\sum_{i=1}^{n} p_i = \frac{1}{n}\sum_{i=0}^{n} i\, w_i^{(n)}.$$


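Proof: The right hand side is the expectation of the relative frequency $(1/n)\sum_i 1_i$, since $\sum_i 1_i$ takes the value $i$ with probability $w_i^{(n)}$. Rewriting this expectation by linearity, we have:

$$E\left[\frac{1}{n}\sum_{i=1}^{n} 1_i\right] = \frac{1}{n}\sum_{i=1}^{n} E(1_i) = \frac{1}{n}\sum_{i=1}^{n} p_i.$$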

Note that this theorem makes no independence assumptions. It also says nothing

about changing our beliefs in the light of observations.
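To make the point about independence concrete, the sketch below checks Theorem 1 on a small joint distribution over sequences of length 3 whose coordinates are dependent. The distribution (weights 1 to 8, normalized) and the function names are hypothetical choices for illustration; any joint distribution on sequences of 0's and 1's would do.

```python
from fractions import Fraction
from itertools import product


def average_marginal(p, n):
    """(1/n) * sum_i p(A_i), where p(A_i) is the probability that entry i is 1."""
    marginals = [sum(pr for q, pr in p.items() if q[i] == 1) for i in range(n)]
    return sum(marginals, Fraction(0)) / n


def expected_relative_frequency(p, n):
    """(1/n) * sum_i i * w_i, where w_i is the probability of exactly i ones."""
    w = [Fraction(0)] * (n + 1)
    for q, pr in p.items():
        w[sum(q)] += pr
    return sum(i * w[i] for i in range(n + 1)) / n


# Hypothetical joint distribution on {0,1}^3: weights 1..8, normalized.
# It is not a product measure, so the three indicators are dependent.
n = 3
sequences = list(product((0, 1), repeat=n))
weights = range(1, len(sequences) + 1)
p = {q: Fraction(wt, sum(weights)) for q, wt in zip(sequences, weights)}

if __name__ == "__main__":
    print(average_marginal(p, n), expected_relative_frequency(p, n))
```

Exact rational arithmetic (`Fraction`) is used so the two sides can be compared for equality without rounding concerns.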

Exchangeability

According to De Finetti, exchangeability provides the characterization of those belief

states in which we learn from relative frequencies.

Definition: The events $A_1, \ldots, A_n$ are exchangeable with respect to a probability measure $p$ if for all $Q, Q' \in 2^n$ with $\#Q = \#Q'$,

$$p\big((1_1, \ldots, 1_n) = Q\big) = p\big((1_1, \ldots, 1_n) = Q'\big).$$

An infinite sequence of indicators is exchangeable with respect to $p$ if every finite subsequence is exchangeable with respect to $p$.
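The definition can be checked mechanically for small n: group the sequences by their number of 1's and verify that each group carries a single probability value. The sketch below does this for two hypothetical examples: drawing without replacement from an urn, and a "sticky" sequence in which each entry tends to repeat the previous one. All function names and parameter values are assumptions for illustration.

```python
from fractions import Fraction
from itertools import product


def is_exchangeable(p):
    """True if all sequences with the same number of 1's have the same probability."""
    values_by_count = {}
    for q, pr in p.items():
        values_by_count.setdefault(sum(q), set()).add(pr)
    return all(len(values) == 1 for values in values_by_count.values())


def urn_measure(n, black, total):
    """Probability of each 0/1 sequence of n draws without replacement
    (1 = black) from an urn with `black` black balls among `total`; needs n <= total."""
    p = {}
    for q in product((0, 1), repeat=n):
        b, w, pr = black, total - black, Fraction(1)
        for x in q:
            if x == 1:
                pr *= Fraction(b, b + w)
                b -= 1
            else:
                pr *= Fraction(w, b + w)
                w -= 1
        p[q] = pr
    return p


def sticky_measure(n, stay):
    """Hypothetical dependent example: the first entry is fair, and each later
    entry repeats the previous one with probability `stay`."""
    p = {}
    for q in product((0, 1), repeat=n):
        pr = Fraction(1, 2)
        for a, b in zip(q, q[1:]):
            pr *= stay if a == b else 1 - stay
        p[q] = pr
    return p


if __name__ == "__main__":
    print(is_exchangeable(urn_measure(3, black=2, total=5)))
    print(is_exchangeable(sticky_measure(3, Fraction(3, 4))))
```

Under these assumptions the urn measure passes the check while the sticky measure fails it, because in the latter the order of the outcomes matters and not only their count.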

Exercise: Suppose the events $A_1, \ldots, A_n$ are independent and identically distributed. Are they exchangeable? What is $w_r^{(n)}$?

Exercise: If $A_1, \ldots, A_n$ are exchangeable and $\#Q = r$, then:

$$p(Q) = w_r^{(n)} \Big/ \binom{n}{r}.$$

After observing a sequence of outcomes $Q \in 2^n$, our probability for $A_{n+1}$ is no longer $p(A_{n+1})$ but $p(A_{n+1} \mid Q)$. We wish to calculate this quantity on the assumption that $A_1, \ldots, A_{n+1}$ are exchangeable with respect to $p$. Suppose $\#Q = r$. Then

$$p(A_{n+1} \mid Q) = \frac{p(A_{n+1} \cap Q)}{p(Q)} = \frac{w_{r+1}^{(n+1)} \big/ \binom{n+1}{r+1}}{w_r^{(n)} \big/ \binom{n}{r}} = \frac{w_{r+1}^{(n+1)}}{w_r^{(n)}} \times \frac{r+1}{n+1}.$$

To reduce this, note that

$$\frac{w_r^{(n)}}{\binom{n}{r}} = \frac{w_{r+1}^{(n+1)}}{\binom{n+1}{r+1}} + \frac{w_r^{(n+1)}}{\binom{n+1}{r}}.$$

Putting $s = n - r$ and simplifying:

$$w_r^{(n)} = \frac{w_{r+1}^{(n+1)}\,(r+1) + w_r^{(n+1)}\,(s+1)}{n+1}.$$

Since $r + 1 = n + 2 - (s+1)$, this yields:

$$
\begin{aligned}
p(A_{n+1} \mid Q) &= \frac{w_{r+1}^{(n+1)}\,(r+1)}{w_r^{(n+1)}\,(s+1) + w_{r+1}^{(n+1)}\,(r+1)} \\
&= \frac{r+1}{(r+1) + (s+1)\left[w_r^{(n+1)} / w_{r+1}^{(n+1)}\right]} \\
&= \frac{r+1}{(n+2) + (s+1)\left[w_r^{(n+1)} / w_{r+1}^{(n+1)} - 1\right]}.
\end{aligned}
$$


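If the probability of $r$ occurrences in $A_1, \ldots, A_{n+1}$ is close to the probability of $r+1$ such occurrences, then the ratio $w_r^{(n+1)} / w_{r+1}^{(n+1)}$ is close to one, the term in brackets in the last expression is close to zero, and

$$p(A_{n+1} \mid Q) \approx \frac{r+1}{n+2}.$$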
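The predictive formula derived above depends on the observations only through r, and on the prior beliefs only through the count probabilities for n+1 events. A minimal sketch, assuming these count probabilities are supplied as a list indexed by j = 0, ..., n+1 (the function names and the two example belief states are illustrative assumptions, not part of the notes):

```python
from fractions import Fraction
from math import comb


def predictive(w_next, r, n):
    """p(A_{n+1} | Q) for #Q = r, where w_next[j] is the probability that
    exactly j of A_1, ..., A_{n+1} occur (j = 0, ..., n+1)."""
    s = n - r
    numerator = (r + 1) * w_next[r + 1]
    denominator = (s + 1) * w_next[r] + numerator
    return numerator / denominator


def uniform_counts(n):
    """Assumed belief state: every count 0..n+1 equally probable."""
    return [Fraction(1, n + 2)] * (n + 2)


def binomial_counts(n, theta):
    """Assumed belief state: independent events, each with probability theta."""
    return [comb(n + 1, j) * theta**j * (1 - theta) ** (n + 1 - j) for j in range(n + 2)]


if __name__ == "__main__":
    n, r = 10, 3
    print(predictive(uniform_counts(n), r, n))
    print(predictive(binomial_counts(n, Fraction(1, 4)), r, n))
```

With all counts equally probable the bracketed term vanishes and the result is exactly (r+1)/(n+2); with independent events the predictive probability stays at theta whatever has been observed.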

We have proved the following result.

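Theorem: Let $A_1, A_2, \ldots$ be exchangeable, and suppose there is a constant $K$ such that for all $n$:

$$\max_{r = 0, \ldots, n} \left| \frac{w_{r+1}^{(n+1)}}{w_r^{(n)}} - 1 \right| < \frac{K}{n};$$

then $\max_{Q \in 2^{\omega}} \left| p(A_{n+1} \mid Q_n) - \#Q_n / n \right| \to 0$ as $n \to \infty$, where $Q_n$ denotes the first $n$ entries of $Q$.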

Notice that this theorem does NOT say that the relative frequency of 1's converges to

some limit as n goes to infinity.

Exercise: Consider an urn with $n$ ($n$ even) black and white balls drawn without replacement. Let $A_i$ denote the event that the $i$-th ball is black. Suppose the $A_i$'s are exchangeable. Consider three cases: (1) we are told that there are exactly $n/2$ black balls in the urn; (2) we are told that there are either $n/2 - 1$ or $n/2 + 1$ black balls in the urn, each option having probability 1/2; and (3) we are told that for $r = 0, \ldots, n$ the urn contains $r$ black balls with probability $2^{-n}\binom{n}{r}$.

• Calculate $p(A_n)$ in all cases.

• Calculate the possible values of $p(A_n \mid Q_{n-1})$ in all cases.

(hint: think before calculating)

4. DeFinetti's representation theorem

Instead of speaking of exchangeable sequences A1,...An, we may just as well speak of

exchangeable measures on the field of events generated by A1,...An.


DeFinetti's theorem states that an exchangeable measure can be uniquely represented as a probabilistic mixture of certain "extreme" measures. Any measure can be represented as a mixture of other measures via conditionalization. For example, if $\{B_i\}_{i=1,\ldots,n}$ is a partition, then

$$p(A) = \sum_{i} p(A \mid B_i)\, p(B_i)$$

represents the measure $p(\cdot)$ as a mixture of the measures $p_i = p(\cdot \mid B_i)$, with mixing weights $p(B_i)$. Exchangeability is preserved under mixing (see exercise); hence if events are exchangeable under each $p_i$, then they are exchangeable under $p$ as well. The question of representing exchangeable sequences can be translated into the question of representing exchangeable probability measures.

DeFinetti's representation theorem for finite exchangeable sequences of events: (1) Any exchangeable measure on $\mathcal{P}(2^n)$ is uniquely determined by the probabilities $w_j^{(n)}$, $j = 0, \ldots, n$, and (2) any $q \in S_{n+1}$ determines an exchangeable measure by setting $w_j^{(n)} = q_{j+1}$, $j = 0, \ldots, n$.

Proof: (1) Partition $2^n$ into the sets $B_i = \{Q \in 2^n \mid \#Q = i\}$, $i = 0, \ldots, n$. For any exchangeable probability measure $p$ on $\mathcal{P}(2^n)$,

$$p = \sum_{i=0}^{n} p(\cdot \mid B_i)\, p(B_i).$$

By exchangeability, $p(\cdot \mid B_i)$ is uniform on $B_i$, and $p(B_i) = w_i^{(n)}$. Hence if $p'$ is another exchangeable measure and $p'(B_i) = p(B_i)$ for all $i$, then $p' = p$.

(2) For fixed $i$, the probability $\mu_i$ on $\mathcal{P}(2^n)$ which assigns $B_i$ probability one and for which $\mu_i(\cdot \mid B_i)$ is uniform on $B_i$ is exchangeable; and for any $q \in S_{n+1}$, the measure $p = \sum_{i=0}^{n} \mu_i\, q_{i+1}$ is also exchangeable (see exercise).
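Part (2) of the proof is constructive, and the construction is short enough to sketch: spread each weight evenly over the sequences with the corresponding number of 1's. The function names and the example weights below are hypothetical; note that the Python list `q` is 0-indexed, so `q[j]` plays the role of $q_{j+1}$ in the theorem.

```python
from fractions import Fraction
from itertools import product
from math import comb


def exchangeable_from_weights(q):
    """Build the exchangeable measure on sequences of length n = len(q) - 1
    whose probability of exactly j ones is q[j] (q[j] stands for q_{j+1})."""
    n = len(q) - 1
    return {seq: q[sum(seq)] / comb(n, sum(seq)) for seq in product((0, 1), repeat=n)}


def count_probabilities(p, n):
    """Recover w_j = probability of exactly j ones, j = 0..n, from a measure p."""
    w = [Fraction(0)] * (n + 1)
    for seq, pr in p.items():
        w[sum(seq)] += pr
    return w


# Hypothetical point of the simplex S_4 (n = 3).
q = [Fraction(1, 10), Fraction(2, 10), Fraction(3, 10), Fraction(4, 10)]
p = exchangeable_from_weights(q)

if __name__ == "__main__":
    print(count_probabilities(p, 3) == q)
```

Going from `q` to `p` and back recovers `q`, which mirrors the uniqueness claim in part (1): an exchangeable measure carries no information beyond its count probabilities.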

Consider drawings from an urn containing $r$ black and $n - r$ white balls. The probability of drawing $j$ black and $m - j$ white balls, with $m < n$, $j < r$, is given by the hypergeometric distribution:

$$w_j^{(m)} = \frac{\binom{m}{j}\binom{n-m}{r-j}}{\binom{n}{r}}.$$


If $m = n$, then $w_j^{(m)} = 1$ if $j = r$ and $= 0$ otherwise. The probability on $\mathcal{P}(2^n)$ induced by drawing from such an urn is exchangeable and corresponds to the measure $\mu_r$ in the above proof. Hence any exchangeable measure can be represented uniquely as a mixture of urn drawing measures. The probability $p(B_i)$ corresponds to the probability of "drawing" an urn with $i$ black balls, $i = 0, \ldots, n$; and the probabilities $p(\cdot \mid B_i)$ correspond to drawings from the urn with $i$ black balls.

DeFinetti's representation for infinite sequences of exchangeable events

For large $n$, drawing a few balls without replacement from an urn with $r$ black and $n - r$ white balls is "almost" like flipping a coin with probability $r/n$ of "black" and $(n-r)/n$ of "white". One can prove, letting $n \to \infty$ while keeping the ratio $r/n$ constant, that the hypergeometric distribution converges to the binomial distribution, that is

$$w_j^{(m)} \to \binom{m}{j}\left(\frac{r}{n}\right)^{j}\left(\frac{n-r}{n}\right)^{m-j}.$$
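The convergence can be observed numerically. The sketch below evaluates the hypergeometric probabilities in the form given earlier and compares them with the binomial limit for a growing urn with a fixed ratio of black balls. The choices m = 5 and r/n = 3/10, and the function names, are assumptions made for illustration.

```python
from math import comb


def hypergeometric(j, m, r, n):
    """Probability of j black balls in m draws without replacement from an urn
    with r black and n - r white balls."""
    if j > r or m - j > n - r:
        return 0.0  # more black (or white) balls requested than the urn holds
    return comb(m, j) * comb(n - m, r - j) / comb(n, r)


def binomial(j, m, theta):
    """Probability of j successes in m independent trials with success probability theta."""
    return comb(m, j) * theta**j * (1 - theta) ** (m - j)


def max_gap(m, r, n):
    """Largest absolute difference between the two distributions over j = 0..m."""
    theta = r / n
    return max(abs(hypergeometric(j, m, r, n) - binomial(j, m, theta)) for j in range(m + 1))


if __name__ == "__main__":
    # Assumed illustration: m = 5 draws, ratio r/n fixed at 3/10.
    for n in (10, 100, 1000):
        print(n, max_gap(5, 3 * n // 10, n))
```

The gap shrinks as the urn grows, which is the sense in which drawing a few balls from a large urn is "almost" like coin flipping.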

For infinite sequences of exchangeable events, DeFinetti's theorem states that any exchangeable probability measure may be uniquely represented as a mixture of binomial measures. For $Q \in 2^n$, $\#Q = r$, the probability of $Q$ under the binomial measure with parameter $q \in (0,1)$ is

$$P(Q) = q^{r}(1-q)^{n-r}.$$

Taking mixtures of such measures corresponds to integrating the above over $q \in (0,1)$, with respect to some measure $dp(q)$ on the interval $(0,1)$. Every exchangeable probability corresponds to a unique measure $dp(q)$, and we may write:

$$P(Q) = \int_{[0,1]} q^{\#Q}(1-q)^{n-\#Q}\, dp(q)$$

where $Q$ is a sequence of $r$ 1's and $n - r$ 0's. This notation is a bit ambiguous, as the $P$ on the left hand side is a probability on $2^{\omega}$; the $p$ under the integral is a probability measure on $(0,1)$. The quantity $dp(q)$ may be thought of as the probability of "drawing" a binomial probability with parameter $q$.


The key to applying DeFinetti's theorem for infinite exchangeable sequences lies in

finding a class of measures dp(q) for which the above integral can be

evaluated easily.

5. The subjectivist resolution of the Ellsberg Paradox

The following "paradox" was proposed by Daniel Ellsberg to argue that people's

degrees of belief cannot be represented as (subjective) probability measures.

Consider two cases:

Case 1: A subject is offered the opportunity to bet on the outcomes of tosses with a

coin which the subject believes to be fair (P(heads) = 0.5, tosses independent).

Case 2: A subject is offered the opportunity to bet on the outcomes of tosses of a coin

about which he believes that the probability of heads could with equal probability be

any number $r \in (0,1)$.

On the subjectivist account the subject's beliefs are modeled, for $Q \in 2^n$, by:

$$p(Q) = \int q^{\#Q}(1-q)^{n-\#Q}\, dp(q).$$

In case 1, the subject is certain that $q = 0.5$, hence his beliefs are modeled by setting $dp(q) = \delta(q - 0.5)$, where $\delta(x)$ is the "Dirac delta measure" assigning unit mass to the point $x = 0$; hence for any function $F(q)$,

$$\int F(q)\, \delta(q - \theta) = F(\theta).$$

In case 2, $dp(q) = dq$. Hence

$$p(\text{heads on one toss}) = \int_0^1 q\, dq = 0.5.$$

The "paradox" is that subjects in case 1 will accept even money bets on heads,

whereas in case 2 they supposedly will not, even though the subjective probabilities

are the same in both cases. Hence there must be a difference in the beliefs in these

two cases, even though P(heads on one toss) is the same.

On the subjectivist account the difference in these two cases only appears when we

consider the subject's willingness to revise his/her betting rates on the basis of

observations of outcomes of previous tosses.

Exercise: Let $H_i$ denote the event "heads on toss $i$".

(i) Suppose that the subject believes the tosses are independent with $P(H_i) = r$, $i = 1, 2, \ldots$. Show that $P(H_2 \mid H_1) = r$.

(ii) Suppose that $dp(q)$ is not concentrated at one point; use Jensen's inequality to show that $P(H_2 \mid H_1) > P(H_1)$.

(iii) Suppose $dp(q) = dq$; use the Beta integral

$$\int_0^1 q^{r}(1-q)^{n-r}\, dq = \frac{r!\,(n-r)!}{(n+1)!}$$

to derive Laplace's rule:

$$P(H_{n+1} \mid r \text{ heads on } n \text{ tosses}) = \frac{r+1}{n+2}.$$

In case (ii) the subject's beliefs are modeled as a mixture of "independent coin

tosses", with mixing measure dp(q); the mixture of independent measures is not

independent; hence the subject in case (ii) exhibits willingness to learn from previous

experience whereas the subject in case 1 does not learn from experience.
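The contrast between the two Ellsberg cases can be made numerical. The sketch below encodes the probability of a particular toss sequence under each belief state, using the point mass at 1/2 for case 1 and the Beta integral from the exercise for case 2, and then forms the conditional probability of heads on the next toss as a ratio of two such sequence probabilities. The function names are hypothetical, and the sketch is an illustration under these assumptions, not a solution method prescribed by the notes.

```python
from fractions import Fraction
from math import factorial


def p_sequence_fair(r, n):
    """Case 1: dp(q) concentrated at q = 1/2; any sequence of n tosses has probability (1/2)^n."""
    return Fraction(1, 2) ** n


def p_sequence_uniform(r, n):
    """Case 2: dp(q) = dq; probability of a given sequence with r heads in n tosses,
    from the Beta integral r!(n-r)!/(n+1)!."""
    return Fraction(factorial(r) * factorial(n - r), factorial(n + 1))


def p_next_heads(p_sequence, r, n):
    """P(heads on toss n+1 | a given sequence with r heads in n tosses)."""
    return p_sequence(r + 1, n + 1) / p_sequence(r, n)


if __name__ == "__main__":
    for name, model in (("case 1", p_sequence_fair), ("case 2", p_sequence_uniform)):
        before = p_next_heads(model, 0, 0)  # no tosses observed yet
        after = p_next_heads(model, 1, 1)   # one toss observed, it was heads
        print(name, before, after)
```

Before any toss both subjects assign probability 1/2 to heads. After one observed head the case 1 subject still assigns 1/2, while the case 2 subject has moved to 2/3: the difference in belief shows up only in how betting rates are revised.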
