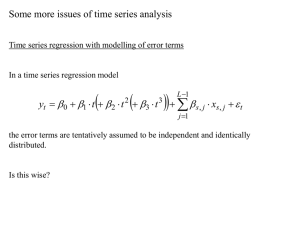

Models with Trends and Nonstationary Time Series

Ref: Enders Chapter 4, Favero Chapter 2, Cochrane Chapter 10.

The general solution to a stochastic linear difference equation has three parts:

$$y_t = \text{trend} + \text{stationary component} + \text{noise}$$

The noise component: ARCH and GARCH approaches model this variance (volatility) component.

The stationary component: AR(p), MA(q), ARMA(p,q) models. These require the roots of the characteristic equation to lie within the unit circle (or the roots of the inverse characteristic equation to lie outside the unit circle).

Here we examine the trend component.

Trend = deterministic trend + stochastic trend

Deterministic trend: a nonrandom trend, constant or accelerating.

Stochastic trend: random. It can be due to any shock, such as technology, oil prices, policy, etc.

Until the 1960s researchers modeled time series as covariance stationary. Problem: this assumption did not describe macroeconomic time series, which generally grow over time.

Originally, some proposed dealing with growing series by taking the log of Y and assuming that the DGP could be described by

$$\log(Y_t) = y_t = bt + z_t,$$

where z was assumed covariance stationary with $E(z)=0$, which led to an expected growth rate of b in the series: $E(\Delta y_t) = b$. The series y were said to be trend stationary.

Box and Jenkins first proposed the idea that, instead of treating the macro series as covariance stationary around a deterministic trend, we should accept that they are not covariance stationary and first difference them to make them covariance stationary. If

$$y_t = b + y_{t-1} + u_t,$$

then a stationary model for $\Delta y$ would be

$$\Delta y_t = b + u_t.$$

They then modeled u as a covariance stationary ARMA(p,q) process, and thus y is an ARIMA(p,1,q) process.

Reminder: "I" stands for integrated process and "1" shows that the process needs to be differenced once to be stationary: integrated of degree 1 = I(1). A covariance stationary series is I(0).
If a time series needs to be differenced d times to become stationary, it is integrated of degree d, I(d). The series can then be represented by an autoregressive integrated moving average process of order (p,d,q), an ARIMA(p,d,q). Usually d=1 is sufficient. In economics d=2 is the most we would need to difference. For example: the change in inflation (the price level differenced twice).

Box and Jenkins showed that many time series could be successfully modeled this way. Nelson and Plosser (1982) later tested and confirmed that they could not reject nonstationarity in most macroeconomic and financial series. They suggested technology shocks as an explanation for this finding, but others later interpreted it as evidence of rigidities. Since this finding, which established that most macroeconomic and financial series can be described well by an ARIMA process, nonstationarity has been part of macroeconometrics.

Why is it important to recognize this? Most macro variables are very persistent (nonstationary), but standard inference techniques are unreliable with nonstationary data. Dickey and Fuller: OLS estimates are biased towards stationarity, suggesting that series that looked stationary in OLS regressions could in fact be generated by random walks. This finding made most of the conclusions in the macro literature wrong or at least undependable.

In this lesson, we will look at:
Trend stationary models
Random walk models
Stochastic trend models
Trends and univariate decompositions

When a process is unit root nonstationary, it has a stochastic trend. If linear combinations of two or more nonstationary processes do not have stochastic trends, these variables are cointegrated.

I. Trend Stationary and Difference Stationary Models

Consider the process

$$x_t = \delta + \rho x_{t-1} + e_t$$

where e is white noise ($e_t \sim \text{n.i.d.}(0,\sigma^2)$). If $|\rho|<1$ we can write $(1-\rho L)x_t = \delta + e_t$, hence

$$x_t = \sum_{i=0}^{\infty}\rho^i(\delta + e_{t-i}),$$

a stationary process.
If $\rho = 1$, then $(1-L)x_t = \delta + e_t$: there is a unit root in the AR part of the series and we have to solve the equation recursively. If $x_0 \neq 0$, recursive substitution back to t=0 gives the solution

$$x_t = \sum_{i=0}^{t-1}(\delta + e_{t-i}) + x_0.$$

Rewriting:

$$x_t = \underbrace{\delta t}_{\text{deterministic trend}} + \underbrace{\sum_{i=0}^{t-1}e_{t-i} + x_0}_{\text{stochastic trend}} \qquad (2)$$

If there are no shocks, the intercept is $x_0$. Suppose there is a shock at time $t-i$ (e.g. an oil-price shock): it shifts the intercept by $e_{t-i}$ and the effect is permanent (with coefficient 1). This is a stochastic trend, since each shock affects the mean randomly. The model behaves very differently from the traditional covariance stationary models, where the effect of shocks dies out over time.

[Figure: GDP against time; a shock $e_{t-i}$ at time $t-i$ permanently shifts the intercept away from $x_0$.]

Trend Stationary (TS): $x_t$ has a linear time trend, $x_t = bt + e_t$; LR var$(\Delta x_t) = 0$.

Difference Stationary (DS): if $\Delta x_t \sim I(0)$ then $x_t$ is I(1), or unit root nonstationary; $\Delta x_t$ can have non-zero serial correlation (special case: random walk); LR var$(\Delta x_t) \neq 0$.

Look at special cases of $x_t = \delta + \rho x_{t-1} + e_t$.

1. Difference Stationary models (DS)

(i) Random walk: AR(1) model with $\delta = 0$, $\rho = 1$ (a unit root process)

$$x_t = x_{t-1} + e_t$$

Note: if e is a martingale difference sequence rather than an i.i.d.$(0,\sigma^2)$ process, x is a martingale process.

$\Delta x_t = e_t$: the process is stationary in its first difference. The solution to the difference equation is

$$x_t = \sum_{i=0}^{t-1}e_{t-i} + x_0 \quad \text{if } x_0 \neq 0.$$

Properties of $x_t$:

$E(x_t) = E(x_{t-s}) = x_0$, or $E(x_t) = E(x_{t-s}) = 0$ if $x_0 = 0$. The mean of a random walk is constant.

Taking $x_0 = 0$,

$$\gamma(0) = Var(x_t) = E(x_t^2) = E\left(\sum_{i=0}^{t-1}e_{t-i}\right)^2 = E\sum_{i=0}^{t-1}e_{t-i}^2 = t\sigma^2$$

and

$$Var(x_{t-s}) = E(x_{t-s}^2) = E\left(\sum_{i=0}^{t-s-1}e_{t-s-i}\right)^2 = (t-s)\sigma^2.$$

$\lim_{t\to\infty}E(x_t^2) = \infty$. The variance explodes as t grows. The RW is nonstationary.

Covariances and correlation coefficient:

$$\gamma(s) = Cov(x_t, x_{t-s}) = E[(x_t - E(x_t))(x_{t-s} - E(x_{t-s}))] = (t-s)\sigma^2$$

$$\frac{\gamma(1)}{\gamma(0)} = \frac{t-1}{t}, \qquad \frac{\gamma(2)}{\gamma(0)} = \frac{t-2}{t}, \qquad \frac{\gamma(s)}{\gamma(0)} = \frac{t-s}{t}$$

As $s \to 0$ the ratio $\gamma(s)/\gamma(0) \to 1$, and as $s \to t$ it goes to 0. So the correlation coefficient dies out, but it takes a long time.
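The random-walk moments above can be checked by simulation. The following is a minimal Python sketch, not part of the course's EViews material: the number of paths, the horizon, the seed and the lag `s` are arbitrary illustrative choices, and NumPy is assumed to be available. It estimates $Var(x_t)$ across many simulated paths and compares it with $t\sigma^2$, and estimates $\gamma(s)/\gamma(0)$ to compare with $(t-s)/t$.

```python
import numpy as np

# Illustrative settings (assumptions, not taken from the notes)
n_paths, T, sigma = 20000, 100, 1.0
rng = np.random.default_rng(0)

# Each row is one random walk with x_0 = 0: x_t is the sum of the first t shocks
e = rng.normal(0.0, sigma, size=(n_paths, T))
x = e.cumsum(axis=1)

# Cross-sectional variance at each date; theory says Var(x_t) = t * sigma^2
var_t = x.var(axis=0)

# Covariance between x_T and x_{T-s}; theory says (T - s) * sigma^2
s = 20
cov_s = np.mean(x[:, T - 1] * x[:, T - 1 - s])
ratio = cov_s / var_t[T - 1]  # should be close to (T - s) / T

print("Var(x_50), Var(x_100):", var_t[49], var_t[99])
print("gamma(s)/gamma(0):", ratio, "theory:", (T - s) / T)
```

The variance grows roughly linearly in t, which is the time dependence that makes the random walk nonstationary.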
It may be difficult to distinguish the ACF of a stationary AR(1) from that of a random walk, especially if the autocorrelation coefficient is large. So the ACF is not a useful tool for deciding whether a process is nonstationary.

Variance and covariance are time dependent, thus the random walk process is nonstationary and needs to be first differenced to become stationary: it is called an I(1), or difference stationary (DS), process.

This means that stochastic shocks have a nondecaying effect on the level of the series. They never disappear, nor do they die away slowly over time.

(ii) Random walk with drift: AR(1) model with $\delta \neq 0$, $\rho = 1$

$$x_t = \delta + x_{t-1} + e_t$$

$\Delta x_t = \delta + e_t$: the process is stationary in its first difference. We saw that the solution to this difference equation is

$$x_t = \sum_{i=0}^{t-1}e_{t-i} + x_0 + \delta t.$$

Thus x has a linear trend when we have a RW with drift. This process is the sum of two nonstationary processes:

$$x_t = z_t + (x_0 + \delta t)$$

where
$x_0 + \delta t$ = linear (deterministic) trend
$z_t = \sum_{i=0}^{t-1}e_{t-i}$ = stochastic trend (random walk without drift)

As t grows, the linear trend will dominate the random walk.

Since $\Delta z_t = e_t$, the first difference of x is stationary: $\Delta x_t = \delta + e_t$ has constant mean ($\delta$), constant variance ($Var(\Delta x_t) = \sigma^2$) and zero covariances.

A RW with drift is also called a difference stationary (DS) model.

(iii) ARIMA(p,1,q) model

$$A(L)x_t = B(L)e_t$$

If A(L) has a unit root and B(L) has all roots outside the unit circle, we can write the model as

$$(1-L)A^*(L)x_t = B(L)e_t$$

where the roots of the polynomial $A^*(L)$ all lie outside the unit circle and it is of order p-1:

$$A^*(L)\Delta x_t = B(L)e_t$$

Now the $\Delta x_t$ sequence is stationary, since the roots of $A^*(L)$ all lie outside the unit circle.

With an ARIMA(p,d,q), we can difference d times and the resulting sequence will be stationary as well.

First-differencing is used to make a nonstationary series stationary. It removes both the deterministic and the stochastic trends. But as we will see in the topic on cointegration, this makes the researcher lose valuable long-run information.

2. Trend-Stationary (TS) processes:

$$x_t = \alpha + \delta t + u_t,$$

where u is a white-noise process. Here nonstationarity is removed by regressing the series on the deterministic trend. The process fluctuates around a trend, but it has no memory and the variation is predictable. Ex: log(GNP) can be stationary around a linear trend.

If you difference this process, the resulting series is not well-behaved.

1. First-differencing a TS process

$$\Delta x_t = \delta + u_t - u_{t-1} = \delta + (1-L)u_t$$

We are introducing a unit root in the MA component. Thus $\Delta x_t$ becomes noninvertible.

2. Subtracting the deterministic trend: "detrending"

Subtract the estimated values of x, $\hat{x}_t = \hat{\alpha} + \hat{\delta}t$, from the observed series. If nonstationarity is due only to the deterministic trend, the resulting series $\hat{u}_t = x_t - \hat{x}_t$ will be a stationary process and can thus be modeled, for example, as an ARMA(p,q) process.

More generally, the trend function can be a polynomial with the degree determined by the AIC or SBC:

$$x_t = \sum_{i=0}^{n}\delta_i t^i + u_t$$

Similarly, detrending a DS model is not appropriate: you end up fitting a deterministic trend on top of the existing stochastic trend, which detrending does not remove.

Warning: the problem with detrending is that it may be seriously misleading. A RW generates a lot of low-frequency fluctuations. In a short sample, a drift may be wrongly interpreted as a trend or a broken trend. If you fit a trend to a series whose true representation is a RW, you are estimating the wrong model. This is the problem with "technical analysis" in the stock or FX markets (head-and-shoulder patterns).

Illustration

Eviews graphs: TREND.PRG, BeveridgeNelson.WF

Generate three series: one DT (with deterministic trend) with $\alpha = 0$, $\delta = 0.1$, and two with stochastic trends (ST1, ST2), which differ only in that their error terms are different draws from the same distribution. The STs are RWs with a drift $\delta = 0.1$.
    smpl 1 1
    genr ST1=0
    genr ST2=0
    smpl 2 200
    series ST1=0.1+ST1(-1)+nrnd
    series ST2=0.1+ST2(-1)+nrnd
    series DT=0.1*@trend+nrnd

[Figure: DT, ST1 and ST2, observations 1 to 200.]

Unlike for the series DT, detrending the series ST1 and ST2 will not make them stationary.

    series dtdet=dt-0.1*@trend
    series st1det=st1-0.1*@trend
    series st2det=st2-0.1*@trend
    plot dtdet st1det st2det

[Figure: DTDET, ST1DET and ST2DET, observations 1 to 200.]

Try now first-differencing. All series become stationary.

    series ddt=dt-dt(-1)
    series dst1=st1-st1(-1)
    series dst2=st2-st2(-1)
    plot ddt dst1 dst2

[Figure: DDT, DST1 and DST2, observations 1 to 200.]

The TS model is a special case of the DS model:

Consider a general form of a DS model:

$$(1-L)x_t = \delta + a(L)e_t$$

(the random walk with drift is the simplified version where a(L)=1), and a general form of a TS model:

$$x_t = \delta t + b(L)e_t, \quad \text{or} \quad (1-L)x_t = \delta + (1-L)b(L)e_t = \delta + a(L)e_t$$

where $a(L) = (1-L)b(L)$, so a(L) has a unit root.

** The main difference between the two models is that the MA part of the TS model has a unit root.

Both the DS (integrated variable) model and the TS (deterministic trend) model exhibit systematic variations.

Differences
TS models: the variation is predictable and can be removed by removing the trend.
DS models: the variation is not predictable and cannot be removed by detrending.

Alternative Representation of an AR(p) process

This section shows that any polynomial $\phi(L)$ of the process $\phi(L)x_t$ can be written

$$\phi(L) = \phi(1) + (1-L)\phi^*(L) \qquad (3)$$

where

$$\phi^*(L) = \sum_{i=1}^{p}\phi_i L^0 + \sum_{i=2}^{p}\phi_i L + \sum_{i=3}^{p}\phi_i L^2 + \dots = \sum_{j=0}^{p-1}\sum_{i=j+1}^{p}\phi_i L^j.$$

This is a useful representation that will be used to derive the Beveridge-Nelson decomposition.

Consider a polynomial

$$\phi(L) = 1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_{p-1}L^{p-1} - \phi_p L^p \qquad (4)$$

Define $\phi(1) = 1 - \phi_1 - \phi_2 - \dots - \phi_{p-1} - \phi_p$ and $\phi^*_j = \phi_{j+1} + \phi_{j+2} + \dots + \phi_p$ for j = 0, 1, 2, ..., p-1.

The polynomial (3) is equivalent to

$$\phi(1) + \phi^*(L)(1-L) = \phi(1) + (\phi^*_0 + \phi^*_1 L + \phi^*_2 L^2 + \dots + \phi^*_{p-1}L^{p-1})(1-L), \qquad (5)$$

because

$$= \phi(1) + \phi^*_0 - \phi^*_0 L + \phi^*_1 L - \phi^*_1 L^2 + \phi^*_2 L^2 - \phi^*_2 L^3 + \dots + \phi^*_{p-1}L^{p-1} - \phi^*_{p-1}L^p$$

$$= \phi(1) + \phi^*_0 + (\phi^*_1 - \phi^*_0)L + (\phi^*_2 - \phi^*_1)L^2 + (\phi^*_3 - \phi^*_2)L^3 + \dots + (\phi^*_{p-1} - \phi^*_{p-2})L^{p-1} - \phi^*_{p-1}L^p$$

Replace $\phi(1)$ and $\phi^*_i$:

$$(1 - \phi_1 - \phi_2 - \dots - \phi_p) + (\phi_1 + \phi_2 + \dots + \phi_p) + [(\phi_2 + \phi_3 + \dots + \phi_p) - (\phi_1 + \phi_2 + \dots + \phi_p)]L + [(\phi_3 + \phi_4 + \dots + \phi_p) - (\phi_2 + \phi_3 + \dots + \phi_p)]L^2 + \dots + [\phi_p - (\phi_{p-1} + \phi_p)]L^{p-1} - \phi_p L^p$$

$$= 1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_{p-1}L^{p-1} - \phi_p L^p = \phi(L)$$

Thus: $\phi(L) = \phi(1) + (1-L)\phi^*(L)$.

A more elegant way of showing the same thing (Favero): C(L) = C(1) + (1-L)C*(L)

Consider C(L), a polynomial of order q. Define a polynomial D(L) such that D(L) = C(L) - C(1), also of order q since C(1) is a constant. Thus D(1) = 0, meaning that 1 is a root of D(L), and

D(L) = C*(L)(1-L) = C(L) - C(1), thus C(L) = C(1) + (1-L)C*(L).

II. Decomposition of Univariate Time-series

Ref: Favero Ch.2, Enders Ch.4, Pagan online lecture notes #4

The idea is that it is informative to decompose a nonstationary sequence into its permanent and temporary (stationary) components.

Beveridge and Nelson (1981, JME) expressed an ARIMA(p,1,q) model in terms of random walk + drift + stationary components. They showed how to recover the trend and the stationary components from the data.

This idea goes back to measuring the "output gap" used to assess the business cycle or estimate the Phillips curve. Also, if you assume, as in Blanchard and Quah, that demand shocks affect output temporarily while supply shocks affect it permanently, you can infer the demand shocks from the temporary component. Overall, however, this is not a good way to approach the question, since the temporary vs. permanent components should be model determined. Moreover, trend extraction approaches rest on problematic assumptions, since the decompositions they deliver are not unique.

Consider again equation (2)

$$x_t = \delta t + \left(\sum_{i=0}^{t-1}e_{t-i} + x_0\right) \qquad (2)$$

with x = deterministic trend due to drift + (difference stationary process). The BN decomposition further decomposes the second term on the RHS into a stationary component and a random walk component.

Deriving the temporary vs. permanent effects (Favero p.51):

Consider the first difference of an integrated process:

$$\Delta x_t = \delta + C(L)e_t \qquad (6)$$

where $e_t \sim \text{i.i.d.}(0,\sigma^2)$ and C(L) is a polynomial of order q.

Define a polynomial D(L) such that D(L) = C(L) - C(1), also of order q since C(1) is a constant. D(1) = 0, meaning that 1 is a root of D(L), and hence we can express D(L) as

D(L) = C*(L)(1-L)

Since D(L) = C(L) - C(1), also C(L) = C(1) + C*(L)(1-L).

Using this result, we can rewrite (6), substituting for C(L):

$$\Delta x_t = \delta + C(1)e_t + C^*(L)\Delta e_t. \qquad (7)$$

Integrating this equation (i.e., dividing both sides by (1-L)) we get

$$x_t = [\delta t + C(1)z_t] + C^*(L)e_t = TR_t + C_t$$

where z is a process such that $\Delta z_t = e_t$, thus

$$TR_t = TR_{t-1} + \delta + C(1)e_t$$

TR = deterministic trend + stochastic trend = permanent (random walk) component;
C = cyclical component = temporary (stationary) component.

Alternative interpretation of the decomposition (Pagan, online lecture notes):

Rewrite (7) as

$$(1-L)x_t = \delta + C(1)e_t + C^*(L)(1-L)e_t$$

$$x_t = (1-L)^{-1}[\delta + C(1)e_t + C^*(L)(1-L)e_t] = (1 + L + L^2 + \dots)[\delta + C(1)e_t] + C^*(L)e_t$$

$$x_t = \left[\delta t + C(1)\sum_{j=0}^{t}e_{t-j}\right] + C^*(L)e_t = P_t \text{ (permanent)} + T_t \text{ (temporary)}$$

The term in the summation is an integrated series, which reflects permanent shocks: P is an I(1) process and T is an I(0) process, since $\lim_{j\to\infty}C^*_j = 0$.

This is what is called the

1. Beveridge and Nelson decomposition:

Any time series $x_t$ that is I(1) can be represented as

$$(1-L)x_t = \delta + C(L)\varepsilon_t \qquad (6)$$

with $\varepsilon_t \sim$ i.i.d. and C(L) a polynomial of order q, and we can write

$$x_t = C_t + TR_t$$

where
$C_t$ = temporary (cyclical) component = $C^*(L)\varepsilon_t$
$TR_t$ = permanent (trend) component = deterministic trend + stochastic trend = $TR_{t-1} + \delta + C(1)\varepsilon_t$ $\left(= \delta t + C(1)\sum_j \varepsilon_{t-j}\right)$.

To see this, apply C(L) = C(1) + (1-L)C*(L) from (3) to the polynomial C(L) in (6). The equation $\Delta x_t = \delta + C(L)\varepsilon_t$ can be written as

$$(1-L)x_t = \delta + C(1)\varepsilon_t + (1-L)C^*(L)\varepsilon_t = (1-L)TR_t + (1-L)C_t, \quad \text{so} \quad x_t = TR_t + C_t.$$

Two features of the B&N decomposition:
1. The shocks to the permanent component, $C(1)\varepsilon_t$, are white noise.
2. The shocks to the permanent and temporary components are perfectly negatively correlated.
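The identity C(L) = C(1) + (1-L)C*(L) and the resulting split $x_t = TR_t + C_t$ can be checked numerically when C(L) is a finite-order MA polynomial. The sketch below is a minimal Python illustration, not the course's EViews program: the function names `bn_polynomials` and `bn_decompose`, the example coefficients and the seed are hypothetical, pre-sample shocks are assumed to be zero, and C(L) must have order q of at least 1. For a finite polynomial the coefficients of C*(L) are $c^*_j = -(c_{j+1} + \dots + c_q)$.

```python
import numpy as np

def bn_polynomials(c):
    """Return C(1) and the coefficients of C*(L) for C(L) = c[0] + c[1] L + ... + c[q] L^q."""
    c = np.asarray(c, dtype=float)
    c1 = c.sum()
    tail_sums = np.cumsum(c[::-1])[::-1]   # tail_sums[j] = c[j] + ... + c[q]
    c_star = -tail_sums[1:]                # c*_j = -(c[j+1] + ... + c[q])
    return c1, c_star

def bn_decompose(e, c, delta=0.0):
    """Build x from Delta x_t = delta + C(L) e_t (zero pre-sample shocks) and split it into trend and cycle."""
    e = np.asarray(e, dtype=float)
    T = e.size
    c1, c_star = bn_polynomials(c)
    dx = delta + np.convolve(e, c)[:T]                        # Delta x_t
    x = np.cumsum(dx)                                         # level, starting from x_0 = 0
    trend = delta * np.arange(1, T + 1) + c1 * np.cumsum(e)   # TR_t = TR_{t-1} + delta + C(1) e_t
    cycle = np.convolve(e, c_star)[:T]                        # C_t = C*(L) e_t
    return x, trend, cycle

# Hypothetical example: ARIMA(0,1,1) with theta = 0.5 and drift 0.1
rng = np.random.default_rng(1)
e = rng.standard_normal(200)
x, trend, cycle = bn_decompose(e, c=[1.0, 0.5], delta=0.1)
c1, c_star = bn_polynomials([1.0, 0.5])
print(c1, c_star)
```

In this MA(1) case the cycle is $-0.5e_t$ and the trend innovation is $1.5e_t$, so the two innovations are perfectly negatively correlated, as stated above.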
Examples: find the cyclical and trend components in

1. An ARIMA(0,1,1) process $\Delta x_t = \varepsilon_t + \theta\varepsilon_{t-1}$ with $0 < \theta < 1$

The BN decomposition gives $x_t = C_t + TR_t$, with $C_t = C^*(L)\varepsilon_t$ and $(1-L)TR_t = C(1)\varepsilon_t$.

From the example, we have $C(L) = 1 + \theta L$ and $C(1) = 1 + \theta$.

From C(L) = C(1) + (1-L)C*(L):

$$C^*(L) = \frac{C(L) - C(1)}{1-L} = \frac{\theta L - \theta}{1-L} = -\theta$$

Thus $x_t = -\theta\varepsilon_t + (1+\theta)z_t$, where $z_t = \dfrac{\varepsilon_t}{1-L}$ is a process for which $\Delta z_t = \varepsilon_t$.

2. An ARIMA(1,1,1) process $\Delta x_t = \rho\Delta x_{t-1} + \varepsilon_t + \theta\varepsilon_{t-1}$ with $0 < \rho < 1$

Rewrite the process as

$$\Delta x_t(1-\rho L) = (1+\theta L)\varepsilon_t, \qquad \Delta x_t = \frac{(1+\theta L)\varepsilon_t}{1-\rho L}$$

Thus

$$C(L) = \frac{1+\theta L}{1-\rho L}, \qquad C(1) = \frac{1+\theta}{1-\rho}$$

and

$$C^*(L) = \frac{C(L) - C(1)}{1-L} = -\frac{\theta+\rho}{(1-\rho)(1-\rho L)}$$

Hence

$$x_t = C^*(L)\varepsilon_t + \frac{C(1)\varepsilon_t}{1-L} = -\frac{\theta+\rho}{(1-\rho)(1-\rho L)}\varepsilon_t + \frac{1+\theta}{1-\rho}z_t.$$

The longer the memory (the higher rho), the bigger the effect of the stochastic trend on the process.

3. An AR(1) process: $\Delta x_t = \rho\Delta x_{t-1} + \varepsilon_t$

HW2: Find the transitory and the permanent components. Show that the longer the memory, the higher the effect of the permanent component on the process. When the transitory component is measured by the Beveridge-Nelson decomposition and the growth rate is AR(1), show that the transitory component is proportional to the growth rate in x.

Procedure to use the BN technique in empirical studies:

Step 1: Estimate the first difference of the series and identify the best ARIMA model of the sequence. Recover the error term and the parameters needed for TR: the constant and C(1).

Step 2: Given an initial value for TR, compute the permanent component TR of the sequence.

Step 3: Compute the cyclical component by subtracting the permanent component from the observed values in each period.

Numerical Example

1. Favero p.52

Suppose you estimated an ARIMA(1,1,1) model (an ARMA(2,1) in levels with one unit root) and got the following regression result:

$$x_t = x_{t-1} + 0.6x_{t-1} - 0.6x_{t-2} + u_t + 0.5u_{t-1}$$

How can we generate the permanent and the cyclical (transitory) components of this process? For this, we need to calculate the parameters in C(1) and TR, and compute C*(L).
Rewriting the model with lag operators, you transform it into an ARMA(1,1) model for the first difference:

$$(1-L)x_t = 0.6L(1-L)x_t + (1+0.5L)u_t$$

$$(1-L)x_t = \frac{1+0.5L}{1-0.6L}u_t$$

Using the expression in (6), $(1-L)x_t = \delta + C(L)\varepsilon_t$, we have $\delta = 0$,

$$C(L) = \frac{1+0.5L}{1-0.6L} \quad \text{and} \quad C(1) = \frac{1.5}{0.4}$$

$$C^*(L) = \frac{C(L) - C(1)}{1-L} = \frac{\dfrac{1+0.5L}{1-0.6L} - \dfrac{1.5}{0.4}}{1-L} = \frac{-\dfrac{1.1 - 1.1L}{0.4(1-0.6L)}}{1-L} = -\frac{1.1}{0.4(1-0.6L)}$$

Thus the BN decomposition of the x process is

$$x_t = C_t + TR_t = C^*(L)u_t + \frac{\delta + C(1)u_t}{1-L} = -\frac{1.1}{0.4(1-0.6L)}u_t + \frac{1.5}{0.4}z_t$$

where $z_t = \dfrac{u_t}{1-L}$ and, with $\delta = 0$, $TR_t = TR_{t-1} + \dfrac{1.5}{0.4}u_t$.

We can compute the permanent and the cyclical (transitory) components (tr and cycle, respectively) in Eviews as follows. Use bn.prg and bn.wf.

    smpl 1 2
    genr x=0
    smpl 1 200
    genr u=nrnd
    smpl 3 200
    series x=x(-1)+0.6*x(-1)-0.6*x(-2)+u+0.5*u(-1)
    smpl 1 2
    genr TR=0
    smpl 3 200
    series TR=TR(-1)+(1.5/0.4)*u
    genr CYCLE=X-TR
    plot cycle TR x

[Figure: X, TR and CYCLE, observations 1 to 200.]

2. REER_Enders: Canadian real exchange rate.

Workfile: reer_enders.wf
Program: bn.prg

Consider an ARIMA(1,1,1) model $x_t = 108 + 0.98x_{t-1} + u_t + 0.39u_{t-1}$. The model has an unstable AR root. So fit the ARMA model $\Delta x_t = -0.186 + u_t + 0.37u_{t-1}$ (eqreer).

Dependent Variable: CANADA-CANADA(-1)

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -0.186171 | 0.343883 | -0.541381 | 0.5896 |
| MA(1) | 0.370357 | 0.099292 | 3.729962 | 0.0003 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.114123 | Mean dependent var | -0.186517 |
| Adjusted R-squared | 0.103941 | S.D. dependent var | 2.506556 |
| S.E. of regression | 2.372716 | Akaike info criterion | 4.588163 |
| Sum squared resid | 489.7908 | Schwarz criterion | 4.644087 |
| Log likelihood | -202.1733 | F-statistic | 11.20779 |
| Durbin-Watson stat | 2.020327 | Prob(F-statistic) | 0.001205 |

Inverted MA Roots: -.37

The root is -0.37, which is inside the unit circle, thus the ARMA model is invertible.

The residuals are nearly white noise with no serial correlation: DW=2 (we can use it because there is no lagged dependent variable on the RHS), and all autocorrelations and partial autocorrelations are close to zero.
Date: 10/03/07 Time: 09:26
Sample: 1980Q2 2002Q2
Included observations: 89
Q-statistic probabilities adjusted for 1 ARMA term(s)

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | -0.013 | -0.013 | 0.0154 | |
| 2 | 0.036 | 0.036 | 0.1344 | 0.714 |
| 3 | 0.147 | 0.148 | 2.1585 | 0.340 |
| 4 | 0.033 | 0.037 | 2.2604 | 0.520 |
| 5 | 0.053 | 0.045 | 2.5293 | 0.639 |
| 6 | 0.041 | 0.020 | 2.6949 | 0.747 |
| 7 | -0.010 | -0.022 | 2.7040 | 0.845 |
| 8 | 0.113 | 0.097 | 3.9707 | 0.783 |
| 9 | 0.028 | 0.023 | 4.0524 | 0.852 |
| 10 | -0.188 | -0.201 | 7.6886 | 0.566 |
| 11 | -0.099 | -0.152 | 8.7160 | 0.559 |
| 12 | -0.062 | -0.078 | 9.1245 | 0.610 |
| 13 | -0.239 | -0.213 | 15.210 | 0.230 |
| 14 | 0.037 | 0.063 | 15.358 | 0.286 |
| 15 | -0.193 | -0.153 | 19.447 | 0.149 |
| 16 | -0.100 | -0.055 | 20.562 | 0.151 |
| 17 | -0.071 | -0.074 | 21.134 | 0.173 |
| 18 | 0.025 | 0.148 | 21.204 | 0.217 |
| 19 | -0.093 | -0.001 | 22.196 | 0.223 |
| 20 | -0.063 | -0.037 | 22.663 | 0.252 |

Compute the cyclical and permanent components:

$$\Delta x_t = -0.186 + (1+0.37L)u_t$$

$C(L) = 1 + 0.37L$, $C(1) = 1.37$, $\delta = -0.186$.

Thus

$$C^*(L) = \frac{1 + 0.37L - 1.37}{1-L} = \frac{0.37L - 0.37}{1-L} = -0.37$$

and therefore

$C_t = C^*(L)u_t = -0.37u_t$ (the cyclical, or transitory, component).

$(1-L)TR_t = \delta + C(1)u_t = -0.186 + 1.37u_t$ (the permanent component).

$$TR_t = TR_{t-1} + \delta + C(1)u_t = TR_{t-1} - 0.186 + 1.37u_t$$

Then we can rewrite x in terms of its cyclical and permanent components as

$$x_t = (-0.37u_t) + (-0.186t + 1.37z_t)$$

EVIEWS: Generate the permanent and the transitory components of the x process.

The decomposition of a series into a random walk and a stationary component is not unique. BN is one way of doing it, but there are other ways, such as the Hodrick-Prescott filter, as we will see below.
BN requires (i) the correlation between the innovations in the trend and the cyclical components to be -1, and (ii) var($\Delta$TR) > var($\Delta$x): the permanent component is more volatile than the series, because the negative correlation between the trend and the cyclical component smooths out the x sequence.

We can see this using Example 1, where we got

$$x_t = -\frac{1.1}{0.4(1-0.6L)}u_t + \frac{1.5}{0.4}z_t, \qquad z_t = \frac{u_t}{1-L}, \qquad TR_t = TR_{t-1} + \frac{1.5}{0.4}u_t$$

The innovation in $C_t$ is $-(1.1/0.4)u_t$ and the innovation in $TR_t$ is $(1.5/0.4)u_t$. Both are multiples of the same shock with opposite signs, so their correlation is

$$\frac{E\left[\left(-\frac{1.1}{0.4}u_t\right)\left(\frac{1.5}{0.4}u_t\right)\right]}{\sqrt{E\left[\frac{1.1}{0.4}u_t\right]^2 E\left[\frac{1.5}{0.4}u_t\right]^2}} = -1$$

For the variances, since $E u_{t-i}u_{t-j} = 0$ for $i \neq j$,

$$Var(\Delta TR_t) = E\left[\frac{1.5}{0.4}u_t\right]^2 = \left(\frac{1.5}{0.4}\right)^2\sigma_u^2 \approx 14\sigma_u^2,$$

while $\Delta x_t = \dfrac{1+0.5L}{1-0.6L}u_t$ is an ARMA(1,1) process with variance

$$Var(\Delta x_t) = \frac{1 + 2(0.6)(0.5) + 0.5^2}{1 - 0.6^2}\sigma_u^2 \approx 2.9\sigma_u^2.$$

Hence $\sigma^2_{\Delta TR} > \sigma^2_{\Delta x}$.

If the correlation between the innovations in the trend and the stationary component is not -1 but rather 0 (they are uncorrelated), and you nevertheless use a BN decomposition, you impose an incorrect partitioning of the variances on the data. But there is no way to learn the identification from the data when you use a univariate decomposition.

2. Hodrick-Prescott Decomposition (Filter)

This is another way of decomposing a series into its trend (permanent) component, TR, and its cyclical (temporary) component, x-TR. It has vast applications in the real business cycle literature.

The idea is to minimize variations around the trend (permanent component):

$$\min_{\{TR_t\}} \sum_{t=1}^{T}(x_t - TR_t)^2 + \lambda\sum_{t=2}^{T-1}[(TR_{t+1} - TR_t) - (TR_t - TR_{t-1})]^2$$

The minimization is done subject to a penalty, represented by $\lambda = \sigma_C^2/\sigma_x^2$, which penalizes variability in the growth component (TR) series. The larger the value of $\lambda$, the smoother the solution series TR.

See Hodrick and Prescott (JMCB 1997) for an application to growth in GNP and the components of aggregate demand (all variables are in natural logs, so the change in TR corresponds to a growth rate).
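The HP minimization problem has a closed-form solution, which makes the role of $\lambda$ easy to see. The following is a minimal Python sketch under stated assumptions: it uses dense NumPy matrices (fine for a few hundred observations), the function name `hp_filter` is hypothetical, and the simulated series is only an illustration. Writing K for the (T-2) x T second-difference matrix, the first-order condition of the objective gives $TR = (I + \lambda K'K)^{-1}x$.

```python
import numpy as np

def hp_filter(x, lam=100.0):
    """Hodrick-Prescott trend and cycle via the first-order condition TR = (I + lam K'K)^{-1} x."""
    x = np.asarray(x, dtype=float)
    T = x.size
    K = np.zeros((T - 2, T))
    for t in range(T - 2):
        K[t, t:t + 3] = [1.0, -2.0, 1.0]   # row t takes the second difference of TR
    trend = np.linalg.solve(np.eye(T) + lam * K.T @ K, x)
    return trend, x - trend

def roughness(trend):
    """Sum of squared second differences, the quantity penalized by lambda."""
    return float(np.sum(np.diff(trend, n=2) ** 2))

# Illustrative series: a random walk with drift 0.1 (an assumption, not course data)
rng = np.random.default_rng(2)
x = np.cumsum(0.1 + rng.standard_normal(200))

trend_100, cycle_100 = hp_filter(x, lam=100.0)
trend_1600, cycle_1600 = hp_filter(x, lam=1600.0)
print(roughness(trend_100), roughness(trend_1600))
```

A series that is exactly linear in time has zero second differences, so the filter returns it unchanged as the trend; raising `lam` lowers the roughness of the extracted trend.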
Illustration

Workfile bn.wf

Select X, Proc, HP filter. Call the smoothed series hptrendx, with $\lambda = 100$.

Compare the results with BN: the HP trend is smoother than the BN trend. If you increase $\lambda$, the maximum volatility will be that of the actual series x.

[Figure: left panel, Hodrick-Prescott Filter (lambda=100): X, Trend, Cycle; right panel, BN decomposition: CYCLE, TR, X; observations 1 to 200.]

The two methods give different decompositions. Since we have no prior information on the relationship between innovations in the trend and the stationary components, the decomposition of the series into a permanent and a temporary component is not unique.

The advantage of the HP filter is that it applies the same trend to all the series you use. Therefore, it is often applied in business cycle analyses where most series share the same stochastic trend. The BN decomposition extracts a different trend from each series.

The disadvantage of the HP filter, though, is that since it smooths the trend, it may introduce spurious volatility into the stationary component of the series. Which method you want to use depends on the type of identification problem you are analyzing.

Theoretical models imply that several time series share common stochastic trends. In empirical methodology this translates into multivariate models. We then need to see whether we can group series by common stochastic trends and whether this makes the trend disappear: cointegration.

To consider whether different series with stochastic trends are cointegrated, we first need to look at unit roots and unit root tests.

III. Unit roots and spurious regressions

We mentioned that many economic time series are nonstationary. This includes most national income (NI) statistics, and goods and asset prices. Nonstationarity is usually due to a few causes, and it gets transmitted to all other variables.
Productivity shocks: affect output and the real side of the economy.

Monetary policy: with deviations of the money supply from its constant long-run growth, the log of the money supply is a random walk with drift,

$$\Delta\ln M_t = \delta + e_t,$$

and then all nominal variables and nominal prices become nonstationary.

Individual budget constraints:

$$a_t + c_t = y_t + (1+r_t)a_{t-1}$$

a = assets, c = consumption, y = income, r = interest rate.

Solving for a:

$$[1 - (1+r_t)L]a_t = y_t - c_t \quad \text{or} \quad [1 - \rho L]a_t = y_t - c_t$$

But $\rho \geq 1$ (root > 1), thus the system is unstable ($\rho = 1$ is a random walk), and a is I(d).

Implications of nonstationarity in economics and finance

1. Model building:

Nonstationary series are more volatile than stationary series.

Series with drift terms tend to be more volatile around strong trends. If we have nonstationary processes with drifts, the other series must also exhibit the same behavior for the estimates to be meaningful. For example, you cannot explain GDP with unemployment, since GDP has a trend while unemployment does not. If the dependent variable is nonstationary, at least a subset of the independent variables needs to be nonstationary as well.

2. Econometrics

If the data are nonstationary, the sampling distributions of the coefficient estimates may not be well approximated by the Normal distribution. This is particularly true if the series have a drift.

Suppose you regress one independent random walk process on another random walk process. You may end up with a high $R^2$ and significant estimates, but this result is called spurious, and it is meaningless since the two series have no relation (Granger and Newbold, 1974, J of Econometrics).

Consider:

$$x_t = \alpha + \beta z_t + e_t \qquad (8)$$

The traditional regression methodologies require $x_t \sim I(0)$, $z_t \sim I(0)$, and $e_t \sim \text{i.i.d.}(0,\sigma^2)$. If e is nonstationary, the estimates are meaningless, because shocks will have permanent effects and thus the regression will have permanent errors.

Suppose that x and z are random walks with $\delta = 0$:

$$x_t = x_{t-1} + \varepsilon_t, \qquad z_t = z_{t-1} + \nu_t.$$
Thus, setting $\alpha = 0$ and assuming the initial conditions are 0,

$$e_t = x_t - \beta z_t = \sum_{i=0}^{k}\varepsilon_{t-i} - \beta\sum_{i=0}^{k}\nu_{t-i}.$$

Note that the first moment of e is not constant. Instead $E_t e_{t+1} = e_t$, since

$$e_{t+1} = x_{t+1} - \beta z_{t+1} = x_t + \varepsilon_{t+1} - \beta z_t - \beta\nu_{t+1} = e_t + \varepsilon_{t+1} - \beta\nu_{t+1}.$$

Thus also $E_t e_{t+s} = e_t$. The second moment increases with t and is not constant either.

Hence the traditional statistical inference methods, such as OLS, are not valid. This problem does not disappear in large samples either, so asymptotic results are not applicable.

Illustration

USUK.wf

Run series LCUS on c LYUK (EqTable2_2)

Dependent Variable: LCUS
Method: Least Squares
Date: 01/02/07 Time: 16:17
Sample (adjusted): 1959Q2 1998Q1
Included observations: 156 after adjustments

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -5.612166 | 0.162908 | -34.44987 | 0.0000 |
| LYUK | 1.208546 | 0.014643 | 82.53580 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.977893 | Mean dependent var | 7.829120 |
| Adjusted R-squared | 0.977750 | S.D. dependent var | 0.351691 |
| S.E. of regression | 0.052460 | Akaike info criterion | -3.044783 |
| Sum squared resid | 0.423821 | Schwarz criterion | -3.005682 |
| Log likelihood | 239.4931 | F-statistic | 6812.158 |
| Durbin-Watson stat | 0.138834 | Prob(F-statistic) | 0.000000 |

High $R^2$ and t-statistics, but meaningless results: a spurious regression.

See if the series LCUS and LYUK are AR(1) (Eqlcus and eqlyuk).

Dependent Variable: LCUS

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 9.964851 | 0.595449 | 16.73503 | 0.0000 |
| AR(1) | 0.996284 | 0.001022 | 975.2605 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.999836 | Mean dependent var | 7.836301 |
| Adjusted R-squared | 0.999835 | S.D. dependent var | 0.355190 |
| S.E. of regression | 0.004563 | Akaike info criterion | -7.929080 |
| Sum squared resid | 0.003248 | Schwarz criterion | -7.890313 |
| Log likelihood | 628.3973 | F-statistic | 951133.0 |
| Durbin-Watson stat | 1.366495 | Prob(F-statistic) | 0.000000 |

Inverted AR Roots: 1.00

Dependent Variable: LYUK

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.049911 | 0.049873 | 1.000764 | 0.3185 |
| LYUK(-1) | 0.996123 | 0.004486 | 222.0758 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.996887 | Mean dependent var | 11.12186 |
| Adjusted R-squared | 0.996867 | S.D. dependent var | 0.287769 |
| S.E. of regression | 0.016108 | Akaike info criterion | -5.406311 |
| Sum squared resid | 0.039956 | Schwarz criterion | -5.367210 |
| Log likelihood | 423.6923 | F-statistic | 49317.67 |
| Durbin-Watson stat | 2.296729 | Prob(F-statistic) | 0.000000 |

In both equations $\hat{\rho} \approx 1$, and the error terms seem i.i.d. with zero mean and constant or finite variance, thus the equations are close to random walks.

[Figure: residuals RELCUS and RELYUK, 1960 to 2000.]

This leads to the solution to (8) with a stochastic trend. In (8) we are thus regressing a trending variable on another trending variable, hence $\hat{\beta}$ is high. The residuals from running LCUS on LYUK show that a stochastic trend is still present in the error term. So this is a spurious regression.

[Figure: Residual, Actual and Fitted series from the regression of LCUS on LYUK, 1960 to 1995.]

Several cases (Enders):

$x_t \sim I(0)$, $y_t \sim I(0)$: classical regression model appropriate.

$x_t \sim I(d)$, $y_t \sim I(k)$ (different orders of integration): classical regression model not appropriate. Spurious regression.

$x_t \sim I(d)$, $y_t \sim I(d)$, and the residual sequence has a stochastic trend: classical regression model not appropriate. Spurious regression.

$x_t \sim I(d)$, $y_t \sim I(d)$, and the residual sequence is stationary: the series are cointegrated.

It is important to check whether the series are nonstationary. Tests such as the Dickey-Fuller (DF) and Augmented Dickey-Fuller (ADF) tests have been widely used to check the stationarity of processes.

Dickey-Fuller (DF) test (1979)

$$\Delta x_t = \alpha + \delta t + \gamma x_{t-1} + \varepsilon_t$$

where $\varepsilon$ is NID.

But this test is valid only for AR(1) processes. If there is higher-order correlation, we need to use the ADF test.
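The DF regression is an ordinary least squares regression of the first difference on a constant, an optional trend and the lagged level; only the critical values are nonstandard. The sketch below is a minimal Python illustration, not the EViews routine: the function name `df_tstat`, the simulated series and the seed are hypothetical, and the statistic must be compared with Dickey-Fuller (or MacKinnon) critical values rather than Student's t.

```python
import numpy as np

def df_tstat(x, trend=False):
    """t-ratio on the lagged level in a regression of Delta x_t on a constant, optional trend, and x_{t-1}."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    cols = [np.ones(dx.size), x[:-1]]
    if trend:
        cols.insert(1, np.arange(1, dx.size + 1, dtype=float))
    X = np.column_stack(cols)                       # lagged level is always the last column
    beta, *_ = np.linalg.lstsq(X, dx, rcond=None)
    resid = dx - X @ beta
    s2 = resid @ resid / (dx.size - X.shape[1])     # residual variance
    cov = s2 * np.linalg.inv(X.T @ X)               # OLS covariance matrix
    return beta[-1] / np.sqrt(cov[-1, -1])

# Illustrative data (assumptions): a random walk and a stationary AR(1) with coefficient 0.5
rng = np.random.default_rng(3)
e = rng.standard_normal(500)
random_walk = np.cumsum(e)
ar1 = np.zeros(500)
for t in range(1, 500):
    ar1[t] = 0.5 * ar1[t - 1] + e[t]

t_rw = df_tstat(random_walk)
t_ar = df_tstat(ar1)
print(t_rw, t_ar)
```

For the stationary series the statistic is strongly negative, while for the random walk it typically stays above the critical value, so the unit root null is not rejected.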
Augmented Dickey-Fuller (ADF) or Said-Dickey test

Consider the following AR(p) equation:

$$x_t = \alpha + \delta t + \sum_{i=1}^{p}\beta_i x_{t-i} + e_t$$

Assume that there is at most one unit root, thus the process is unit root nonstationary.

We will reparameterize this equation. For this, consider an AR(2) process and reparameterize it (subtract $x_{t-1}$ from the LHS and RHS, then add and subtract $\beta_2 x_{t-1}$ on the RHS):

$$x_t - x_{t-1} = \alpha + \delta t - x_{t-1} + \beta_1 x_{t-1} + \beta_2 x_{t-2} + \beta_2 x_{t-1} - \beta_2 x_{t-1} + e_t$$

$$\Delta x_t = \alpha + \delta t - (1 - \beta_1 - \beta_2)x_{t-1} - \beta_2(x_{t-1} - x_{t-2}) + e_t$$

$$\Delta x_t = \alpha + \delta t + \gamma x_{t-1} - \beta_2\Delta x_{t-1} + e_t$$

And for AR(p):

$$\Delta x_t = \alpha + \delta t + \gamma x_{t-1} + \sum_{i=1}^{p-1}\theta_i\Delta x_{t-i} + e_t,$$

where $\gamma = -(1 - \beta_1 - \beta_2 - \dots - \beta_p)$ and $\theta_i = -(\beta_{i+1} + \beta_{i+2} + \dots + \beta_p)$ for i = 1, 2, 3, ..., p-1.

This is the augmented version of the DF test (for DF all $\theta_i = 0$). It is general enough to be asymptotically valid when there is an MA component, provided there are sufficient lagged difference terms.

Null hypothesis: $\gamma = 0$. Alternative: $\gamma < 0$.

If the null is not rejected, x is nonstationary. The test is evaluated with the t-ratio

$$t_\gamma = \frac{\hat{\gamma}}{se(\hat{\gamma})}.$$

(Note: for p=1, $\gamma = -(1 - \beta_1)$, and the test is equivalent to testing whether there is a stable root or not.)

This statistic does not follow the conventional Student's t-distribution. In both tests the critical values were calculated by Dickey and Fuller and depend on whether there is an intercept and/or deterministic trend, and on whether it is a DF or ADF test. Eviews uses the more recent MacKinnon estimates for critical values, which are valid for any sample size.

Intercept and deterministic time trend

The intercept and trend depend on the alternative hypothesis.

If the alternative is that the series is mean-zero stationary, there is no intercept. This is unlikely for macroeconomic series.

If the alternative is constant-mean stationary, include an intercept. This is a good assumption for time series that do not grow over time.

If the alternative is that the series is trend stationary, include a constant and a linear trend. This specification represents most macroeconomic time series that grow over time.

Choice of lag length

1. Sequential t/F-statistics approach: start from a large number of lags and re-estimate, reducing by one lag every time. Stop when the last lag is significant. Quarterly data: look at the t-statistic for the last lag and the F-statistic for the last quarter. Then check whether the error term is white noise.

2. Information criteria: AIC, SBC or HQC

** Note that the ADF test is not robust to the choice of p.

Phillips-Perron (PP) test (1988)

It uses a nonparametric method to correct for the serial correlation of $\varepsilon_t$. PP make the adjustment to both the DF and ADF tests and call their adjusted tests Z(t) and Z($\rho$), respectively. These have the same asymptotic distributions as the DF and ADF tests.
- Advantage: more powerful than ADF.
- Disadvantage: more severe size distortions than ADF tests (the size of the test in small samples is significantly different from the size obtained from asymptotic theory).

Kwiatkowski, Phillips, Schmidt and Shin (KPSS) test (1992)

Often we model variables assuming that they are stationary, yet the tests so far have the null of nonstationarity. The KPSS test is one of the first to set the null that the series is (trend) stationary. It uses LM statistics, and its critical values are based on the asymptotic results given in KPSS.

Procedure for unit root test:

1. Decide on the model: whether or not a constant/time trend is required. If there is no trend, the alternative hypothesis should be that the process is stationary with a non-zero mean and without trend. If there is a trend, the alternative is that the process is trend stationary with a non-zero constant.

2. Choose the maximum order of lags.

3. If the t-statistic is negative and greater than the critical value in absolute value, reject the null of a unit root.

Problem with the nonstationarity tests:

The power of the DF and ADF tests is very low, i.e. they fail to reject the null of nonstationarity too often (suggesting that there is a unit root when the series is in fact stationary).
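The low power of the DF test against persistent but stationary alternatives can be illustrated with a small Monte Carlo experiment. This is a minimal Python sketch under stated assumptions: the regression includes a constant only, the 5% critical value of -2.89 is the tabulated Dickey-Fuller value for roughly 100 observations with an intercept, and the function names, number of replications and seed are hypothetical choices.

```python
import numpy as np

def df_tstat(x):
    """DF t-ratio from a regression of Delta x_t on a constant and x_{t-1}."""
    dx = np.diff(x)
    X = np.column_stack([np.ones(dx.size), x[:-1]])
    beta, *_ = np.linalg.lstsq(X, dx, rcond=None)
    resid = dx - X @ beta
    s2 = resid @ resid / (dx.size - 2)
    se = np.sqrt(s2 * np.linalg.inv(X.T @ X)[1, 1])
    return beta[1] / se

def rejection_rate(rho, T=100, reps=500, crit=-2.89, seed=0):
    """Share of simulated AR(1) samples for which the unit root null is rejected."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(reps):
        e = rng.standard_normal(T)
        x = np.zeros(T)
        for t in range(1, T):
            x[t] = rho * x[t - 1] + e[t]
        rejections += int(df_tstat(x) < crit)
    return rejections / reps

size_unit_root = rejection_rate(1.0)     # true unit root: rejection rate should be near the nominal 5%
power_persistent = rejection_rate(0.95)  # stationary but persistent: null rejected only rarely
power_weak = rejection_rate(0.5)         # clearly stationary: null rejected almost always
print(size_unit_root, power_persistent, power_weak)
```

With rho = 0.95 the series is stationary, yet the test rejects the unit root null in only a minority of samples, which is the power problem described above.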