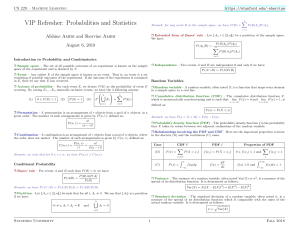

What do I need to know about probability

advertisement

What are the prerequisites for Math 53?

Any version of Linear Algebra and any version of Multivariate Calculus, plus some

familiarity with probability.

Math 63 (analysis) is NOT required. Economics is NOT required.

What’s that about probability?

Probability is not a formal prerequisite, but we’ll proceed on the assumption that

everyone is familiar with the basics. We’ll review each concept when it arises, but not

enough to let you catch up if you’re totally clueless at the start.

Stat 11, Econ 31, or AP statistics in high school would be enough. Those courses cover

mostly statistics, rather than probability. It is the probability part that we will need.

What should I read?

Here are some references. You can check them to see whether you know enough

probability already, or read them to learn the material. Pick your favorite book --- they

all cover the same material, so you don’t need to read them all!

Grinstead and Snell, Introduction to Probability, 2nd revised ed.,

Chapters 1-6 except sections 3.3 and 4.3.

Moore and McCabe, Introduction to the Practice of Statistics, 5th ed.:

Sections 1.1, 1.2, 1.3, 2.1, 2.2, 4.1 through 4.4. Be comfortable with the

use of the tables inside the front cover.

Ross, A First Course in Probability, 6th ed.:

Sections 2.1 through 2.5, 3.1, 3.2, 3.4, 4.1 through 4.5, 5.1 through 5.4, 6.1, 6.2,

maybe 7.3.

(I’ll revise this soon to add more references.)

Just tell me what I need to know.

OK, below is a rough essay on probability. If you know everything in this essay, you’re

in great shape. If you feel you would need review on a few points, we’ll do that in Math

53. If you think you know this material but the language seems strange, you’ll be fine. If

all of this is completely strange to you, you need to read some reference material before

we start.

1

Here’s the essay.

Events A, B, C… are subsets of a sample space, S. Events can be constructed by taking

unions, intersections, and complements of other events. A probability measure is a

function P that assigns “probabilities” P(A), P(B), P(C)… to events. All probabilities are

in the interval [0, 1], and they satisfy certain rules. For example, if the events

A1, A2, A3,… are pairwise disjoint --- that is, if Ai Aj = whenever i j --- then

P( A1 A2 A3 … ) = P(A1) + P(A2) + P(A3) + ….

Two events A and B are independent if P(AB) = P(A) P(B). More generally, a set of

events are independent if, whenever we partition them into two sets {Ai} and {Bj}, any

combination of the A’s is independent of any combination of the B’s.

One may also consider conditional probabilities. The notation P(A|B) means the

probability that we would assign to A, if we knew that event B had already occurred. We

could also define P(A|B) = P(A B) / P(B). It is generally true that

P(A B) = P(A) P(B|A) = P(B) P(A|B)

even if the events are not independent. In fact, A and B are independent precisely when

P(A|B) = P(A), and this is true precisely when P(B|A) = P(B).

A (real-valued) random variable is a function X:SR, for which we can define a

cumulative distribution function (cdf) as follows:

F(x) = P(X ≤ x) = P( { s S | X(s) ≤ x } ).

Then F satisfies

lim F ( x) 0, lim F ( x) 1, lim F ( x) F (a), F ( y) F ( x) when y x.

x

x

x a

Any function with these properties can be a cdf for a random variable. We have:

P( a ≤ x < b ) = F(b) – F(a).

Some random variables are continuous, which means that they have density functions. If

X has cdf F, and if F has a derivative f, then f is called the density function for X. Then f

satisfies f(x) ≥ 0 (always) and

P(a≤x≤b)=

b

a

f ( x)dx 1 , and we have

f ( x)dx .

We call f a density function even if F is not differentiable at countably many points, as

long as F is continuous everywhere. We get f from F by F’ = f, and we can reconstruct F

from f by

F(a) =

a

f ( x)dx .

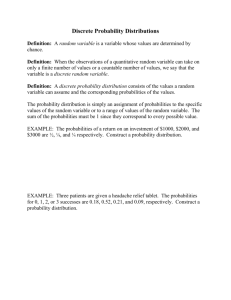

Some random variables are discrete, which means that all of the probability is

concentrated on countably many values x1, x2, x3…. If X is discrete, then it has a

probability mass function p given by

p(xi) = P(X = xi).

Now each p(xi) ≥ 0 and i 1 p( xi ) 1 . We also have

2

P ( a ≤ x ≤ b ) = p( xi )

where the sum is taken over all of the xi’s satisfying a ≤ xi ≤ b.

Two random variables X and Y have a joint distribution function FXY given by

FXY(x, y) = P ( X ≤ x and Y ≤ y ).

We say that these variables are independent if

FXY(x, y) = F(x) F(y)

for every x and y. Sometimes the random variables have a joint density function fXY, and

in that case

P x, y A f ( x, y)dxdy .

A

(We won’t use double integrals much in Math 53 --- maybe not at all. In fact, we won’t

use joint distributions much, except conceptually.)

The expected value of a random variable is, intuitively, the average of a large number of

independent draws from its distribution. Formally, it is given by

E( X ) =

xf ( x)dx

if X has a density function or

E( X ) = i 1 xp( xi )

if X has a probability mass function. Not every random variable has an expected value.

We can define a new random variable Y by expressing it as a function of X: say,

Y = h(X). Then we can find the expected value of Y by

E( Y ) = E( h(X) ) =

E( Y ) = E( h(X) ) =

or

h( x) f ( x)dx

i 1

h( x) p( xi ) .

The variance of a random variable is given by

Var( X ) = E ( (X – E(X))2 ) = E(X2) – E(X)2.

Not every random variable has a variance. When X has a variance, then it also has a

standard deviation given by

X = Var ( X ) .

The covariance of two random variables X and Y is given by

Cov(X, Y) = E(XY) – E(X)E(Y).

When two random variables are independent, their covariance is zero. Their correlation

coefficient is given by

Corr(X, Y) = Cov(X, Y) / (XY);

and this always satisfies -1 ≤ Corr(X, Y) ≤ +1.

A random variable has a uniform distribution on the interval [a, b] if its density function

is given by

3

f(x) = constant when a ≤ x ≤ b, and zero otherwise.

A random variable has an exponential distribution with mean if its density function is

f(x) = (1/) e-x/ when x ≥ 0, and zero otherwise.

A random variable has a normal distribution with parameters and if its density

function is

f ( x)

1

1 x

2

2

.

e

2

The graph of this function is the familiar “bell curve.” If X has a normal distribution,

then we can easily determine probabilities such as

P(a≤x≤b)

by consulting a normal distribution table.

We’ll review each of these concepts when they appear in Math 53, but we’ll expect

everyone to catch on fairly quickly.

(end)

4