problems

advertisement

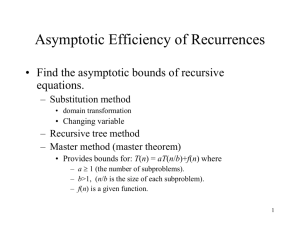

Algorithms Homework – Fall 2000 4.1-1 Show that the solution of T(n) = T( n/2 ) + 1 is O(lg n). By induction: Base case: n=2 T( n/2 ) + 1 c lg 2 T( 2/2 ) + 1 c lg 2 Let c = 2 1 + 1 2 lg 2 Inductive Hypothesis: Assume: T( n/2 ) + 1 c lg ( n/2 ) Inductive step: T(n) c (lg n – lg 2) + 1 = c lg n – c lg 2 + 1 c lg n 4.1-2 Show that the solution of T(n) = 2T( n/2 ) + n is (n lg n). Conclude that the solution is (n lg n). By induction: Base case: n=2 2T( 2/2 ) + 2 c2 lg 2 2 + 2 c2 lg 2 Let c = 1 2+22 Inductive Hypothesis: Assume: T( n/2 ) c n/2 lg ( n/2 ) Inductive step: T(n) c((n/2) – 1)(lg n – lg 2 – 1) = c(n/2 lg n – n/2 lg 2 – n/2 – lg n + lg 2 + 1) = c(n/2 lg n – n/2 – n/2 + 2) = c(n/2 lg n – n + 2) c(n/2 lg n) = c n lg n Since T(n) = O(n lg n) and T(n) = (n lg n) then T(n) = (n lg n) (from page 54) 4.1-3 Show that by making a different inductive hypothesis, we can overcome the difficulty with the boundary condition T( 1 ) = 1 for the recurrence (4.4) without adjusting the boundary condition for the inductive proof. See pp 55 - 57 4.1-4 Show that (n lg n) is the solution to the “exact” recurrence (4.2) for merge sort. T(n) = T( n/2 ) + T( n/2 ) + (n) = (n lg n) if and only if T(n) = T( n/2 ) + T( n/2 ) + (n) = O(n lg n) and T(n) = T( n/2 ) + T( n/2 ) + (n) = (n lg n) By induction: T(n) = T( n/2 ) + T( n/2 ) + (n) = O(n lg n) Base case: n=2 T(2/2) + T(2/2) + (2) c22 lg 2 1 + 1 + c12 c22 lg 2 c12 + 2 c22 Let c2 2 c1 and c2 2 Inductive Hypothesis: Assume true for n/2 T(n) 2(c1 n/2 lg n/2 ) + c2n c1n lg n/2 + c2n = c1n (lg n – lg 2) + c2n = c1n lg n – c1n + c2n c1n lg n, for c1 c2 Therefore: T(n) = T( n/2 ) + T( n/2 ) + (n) = O(n lg n) T(n) = T( n/2 ) + T( n/2 ) + (n) = (n lg n) n=2 T(2/2) + T(2/2) + (2) c22 lg 2 1 + 1 + c12 c22 lg 2 c12 + 2 c22 Let c2 = c1 Assume true for n/2 T(n) 2(c1 n/2 lg n/2 ) + c2n c1n lg n/2 + c2n = c1n (lg n – lg 2) + c2n = c1n lg n – c1n + c2n c1n lg n, for c1 c2 Therefore: T(n) = T( n/2 ) + T( n/2 ) + (n) = (n lg n) Also: Base case: 4.1-5 Show that the solution to T(n) = 2T( n/2 + 17) + n is O(n lg n). Guess: T(n) cn lg n – b, for b 0 T(n) (c n/2 lg n/2 + 17 – b) + (c n/2 lg n/2 + 17 – b) cn 2 lg (n/2) + 34 – 2b cn lg n + 34 – 2b cn lg n – b cn lg n = O(n lg n) 4.1-6 Solve the recurrence T(n) = 2T( n ) + 1 by making a change of variables. Do not worry about whether values are integral. Let m = n T(m2) = 2T(m) + 1 Let S(m) = T(m2) S(m) = 2T(m) + 1 S(m) = T(m) + T(m) + 1 Guess: 2T(m) + 1 = (m) O(m) and (m) By induction: Base case: m = 1, for O(m) 2 + 1 c(1) 3c Let c 3 Assume true for m/2: S(m) 2c m/2 + 1 – b, for b 0 = cm + 1 – b = cm – b cm = O(m) = O( n ) Also: Base case: m = 1, for (m) 2 + 1 c(1) 3c Let c 3 Assume true for m/2: S(m) 2c m/2 + 1 = cm + 1 cm = (m) = ( n ) Therefore, since T(n) = 2T( n ) + 1 = ( n ) and T(n) = 2T( n ) + 1 = O( n ) then T(n) = 2T( n ) + 1 = ( n ) 4.2-1 Determine a good asymptotic upper bound on the recurrence T(n) = 3T( n/2 ) + n by iteration. T(n) = 3T( n/2 ) + n = 3( n/2 + 3T( n/4 ) ) + n = 3( n/2 + 3T( n/4 + 3T( n/8 ) ) ) + n 3n/2 + 9n/4 + 27n/8 + … + 3lgn (1) + n (3 / 2) i + (nlg3) i 0 = O(n) + O(nlg3) = O(max(O(n), O(nlg3)) = O(nlg3) 4.3-1 Use the master method to give tight asymptotic bounds for the following recurrences. a. T(n) = 4T(n/2) + n. CASE 1 a = 4, b = 2, f(n) = n, nlogba = nlg4 = n2, f(n) = O(nlogba-) = O(nlg4-1) = O(n) T(n) = 4T(n/2) + n = (n2) b. =1 T(n) = 4T(n/2) + n2 CASE 2 a = 4, b = 2, f(n) = n2, nlogba = nlg4 = n2 f(n) = O(nlogba-) = O(nlg4) = O(n2) (nlogba lg n) = (n2 lg n ) T(n) = 4T(n/2) + n2 = (n2 lg n ) c. T(n) = 4T(n/3) + n3 CASE 3 a = 4, b = 2, f(n) = n3, nlogba = nlg4 = n2, f(n) = (nlogba+) = (nlg4+1) = (n3) and 4(n/2)3 cn3, for c 1 T(n) = 4T(n/3) + n3 = (n3) =1 4.3-2 The running time of an algorithm A is described by the recurrence T(n) = 7T(n/2) + n2. A competing algorithm A has a running time of T(n) = aT(n/4) + n2. What is the largest integer value for a such that A is asymptotically faster than A? 7T(n/2) + n2 a = 7, b = 2 f(n) = n2 nlogba = nlg7 aT(n/4) + n2 a = a, b = 4 f(n) = n2 nlogba = nlog4a log4a = lg 7 The largest integer value for a is 4 lg7 PROBLEMS 4-1 Recurrence examples Give asymptotic upper and lower bounds for T(n) in each of the following recurrences. Assume that T(n) is constant for n 2. Make your bounds as tight as possible, and justify your answers. a. T(n) = 2T(n/2) + n3 CASE 3 of Master Theorem a = 2, b = 2, f(n) = n3, nlogba = nlg2 = n n3 = (n1+1) = (n2) 2(n/2)3 cn3, c 1 c = ¼ T(n) = (n3) b. T(n) = T(9n/10) + n CASE 3 of Master Theorem a = 1, b = 10/9, f(n) = n, nlogba = nlog10/91 = n0 = 1 n = (n0+1) = (n) 9n/10 cn, c 1 c = 9/10 T(n) = (n) c. T(n) = 16T(n/4) + n2 CASE 2 of Master Theorem a = 16, b = 4, f(n) = n2, nlogba = nlg416 = n2 T(n) = (n2 lg n) d. T(n) = 7T(n/3) + n2 CASE 3 of Master Theorem a = 7, b = 3, f(n) = n2, nlogba = nlg37 n2 = (nlog37+c) 7(n/3)2 cn2, c 1 c = 7/9 T(n) = (n3) e. T(n) = 7T(n/2) + n2 CASE 1 of Master Theorem a = 7, b = 2, f(n) = n2, nlogba = nlg7 n2 = O(nlg7-c) T(n) = ( nlg7) f. T(n) = 2T(n/4) + n 4-3 Parameter-passing costs Throughout this book, we assume that parameter passing during processing calls takes constant time, even if an N-element array is being passed. This assumption is valid in most systems because a pointer to the array is passed, not the array itself. This problem examines the implications of three parameter-passing strategies: 1. An array is passed by pointer. Time = (1). 2. An array is passed by copying. Time = (N), where N is the size of the array. 3. An array is passed by copying only the subrange that might be accessed by the called procedure. Time = (p – q + 1) if the subarray A[p…q] is passed. a. Consider the recursive binary search algorithm for finding a number in a sorted array (see Exercise 1.3-5). Give recurrences for the worst-case running times of binary search when arrays are passed using each of the three methods above, and give good upper bounds on the solutions of the recurrences. Let N be the size of the original problem and n be the size of a subproblem. Binary search works by comparing the element for which you are searching to the element at index (p – r)/2 of a subarray of size n, where p is the first index of the subarray and r is the last index (integer division is used). Therefore, the array passed into binary search is continually divided in half and the appropriate half is searched recursively in this manner until a subarray of size 1 is reached. The division must continue until a subarray of size 1 is reached, because this is a worst-case scenario, which is the case when the element for which you are searching is not present. The first subarray searched is the entire original array of N elements. In each recurrence of binary search the size of the subarray is ½ of the previous recurrence. Therefore, the work to complete this is expressed as T(n/2). Strategy 1 – (Passed by pointer = (1)) T(n) = T(n/2) + (1) The array is passed by pointer, which is constant time. Therefore, the time involved in passing the array is (1). CASE 2 of Master’s Theorem a = 1, b = 2, nlogba = nlg1 = n0 = 1, f(n) = (1) T(n) = (f(n) lg n) = (lg n) Upper bound = O(lg n) Strategy 2 – (Passing by copying = (N)) T(n) = T(n/2) + (N) There are N elements copied to each recurrence of binary search. Therefore, the time involved in passing the array is (N). T(n) = T(n/2) + (N) n/2 + n/4 + 2N n/2 + n/4 + n/8 + 3N n/2 + n/4 + n/8 + n/16 + 4N … (the recurrence continues lg N times) lg n n/ 2 i + lg nN = (N lg N) i 1 Upper bound = O(N lg N) Strategy 3 – (Passing by copying subrange = (p – q + 1)) T(n) = T(n/2) + (n/2) = T(n/2) + (n) There are always half as many elements copied into the next recurrence as there are in the current subarray. Therefore, the time involved in passing the array is (n/2) = (n). CASE 3 of Master’s Theorem a = 1, b = 2, nlogba = nlg1 = n0 = 1, f(n) = (n/2) af(n/b) cf(n) c(n/2)/2 = cn/4 cn/2 T(n) = (n/2) = (n) Upper bound = O(n)