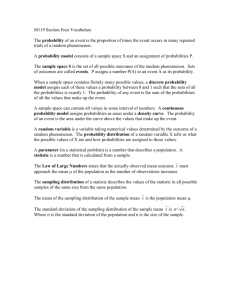

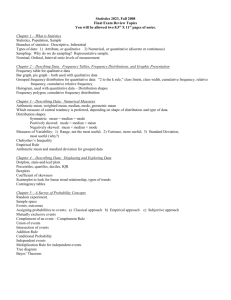

Study Guide for Statistics Test #1 (Chapters 1-4)

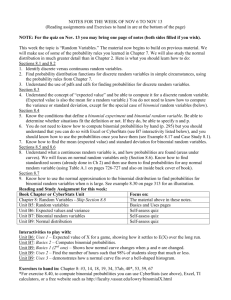

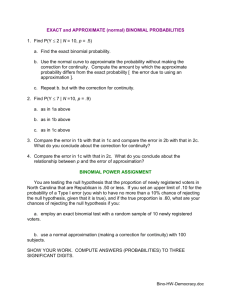

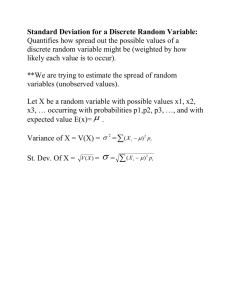

Study Guide for Statistics Test #2

The material you need to know for the test appears below, divided into concepts (know definitions, uses, examples, etc.) and computations (know how to do problems with real data). This list is not guaranteed to be exhaustive: You are responsible for all information presented in class, in the text, and from homework problems (by hand and using SPSS).

Study suggestions: (1) Review the text, notes, homework problems, handouts; (2) Do many practice problems (text, SMC library study guide, website); (3) Use this guide to check your understanding.

Chapter 5: Z-scores: Location of Scores and Standardized Distributions

I. Concepts
  1. Standardization: Reasons, functions, applications/uses
    (a) What do z-scores tell us about a score’s location in a distribution?
    (b) What’s typically considered common, typical, ordinary, and what’s usually considered deviant, abnormal, special, unusual, unique, etc.?
    (c) Comparing scores from different distributions, or on different tests/measures
    (d) Role in inferential statistics: How do z-scores help answer these questions: Does a given score (or sample) belong to the population in question? Is a particular score (or sample) representative of a population? Did the treatment have an effect?
  2. The relationship between raw scores and z-scores (including shape of their distributions, position in their respective distributions); rescaling, re-labeling
  3. Properties of z-scores (mean, standard deviation), and their distributions (shape)
II. Computations
  A. Calculate a z-score (given X, μ, and σ): z = (X - μ) / σ
    1. Don’t forget to include the sign (+/-)!
  B. Determine X, μ, or σ, given a z-score and the other two (e.g., X = μ + zσ)

Chapter 6: Probability: The Normal Distribution, Binomial Distribution, Inferential Statistics

I. Concepts
  A. Probability theory
    1. Properties of probability (e.g., range = 0 to 1; sum of all possible probabilities = 1)
    2. Assumption of random sampling (know definition and 2 requirements of this)
  B. Percentiles, quartiles, and percentile rank (the percentage of scores at or below a particular score)
  C. The normal curve, normally distributed data (e.g., think of Belleville example), and the unit normal table
    1. Properties of a normal curve, or normally distributed data: Bell-shaped, Unimodal, Symmetrical (unskewed), Mode=Median=Mean
    2. Proportions of area under any normal curve are always the same. This is what makes the normal curve useful in calculating probabilities (because probabilities are proportions).
    3. Body versus tail

Conley Stats Winter 2006

  D. Binomial data and the binomial distribution
    1. Notation: know what each of these symbols means/refers to: A, B, p, q, X, Y, n
    2. Properties of binomial data (exactly two categories; p(A) + p(B) = p + q = 1.00)
    3. Circumstances in which the normal distribution is an appropriate approximation or model for binomial data and probabilities (i.e., pn ≥ 10 and qn ≥ 10)
  E. Real limits: why and when do we use them? (more info below under computations)
    1. Each X value is actually an interval bounded by real limits. Real limits are determined by our unit of measurement.
    2. Calculating probability using the normal curve (and thus the unit normal table) involves calculating the relative area under the curve that corresponds to the X value(s) of interest. Thus, you need to make a 2-dimensional slice of area under the curve (see real limits handout). To calculate the probability of obtaining a single value, use the real limits of X (½ the unit of measurement on each side) to determine the width of that slice.
    3. Binomial data are discrete, but when certain conditions are satisfied, we can approximate binomial probability using the unit normal table and the normal curve, which is continuous. Thus, for any binomial probability calculation using the normal approximation, use real limits (e.g., HW problem 26 from chapter 6).
  F. How probability is related to inferential statistics
    1. How probability links samples and populations
    2. Given a sample (and its statistics), is it likely to have come from a particular population? If we determine it’s unlikely, what does that mean? What do we conclude? (Reject null hypothesis; Assume treatment had an effect; Assume sample differs from population.)
II. Computations
  A. Computing probability with simple frequencies (the “easy way”): If you know all possible outcomes, and have random sampling, you can construct a frequency distribution and/or histogram and compute p(X) = f(X)/N.
  B. Using the unit normal table (will be provided for the test) to go back and forth between:
    1. Z-scores (or raw scores, Xs, which you convert to Zs first), and
    2. Percentiles, Proportions, Probabilities, and/or Area under the curve (all interchangeable!)
      (a) Exactly at a particular X value: Use real limits to create a 2-dimensional “slice” with a width equal to one unit of measurement (so use real limits of ½ unit of measurement on each side of the score).
        (i) e.g., p(SAT=650): Calculate area for the slice from 645 to 655 (because the unit of measurement for SAT scores is 10)
        (ii) e.g., p(size 8 shoe): Calculate area for the slice from 7.75 to 8.25 (because the unit of measurement for shoe sizes is .5)
      (b) Above or below a particular X value:
        (i) If you don’t use real limits, technically the proportion/probability/area you calculate includes ½ of the values at that particular X value, as well as all of the values above it (or below it, as the case may be).
        (ii) So technically, to compute probability/proportions/area above (but not including) a value of X, use the upper real limit of X (X + ½ unit of measurement). To compute probability/proportions/area below (but not including) a value of X, use the lower real limit of X (X - ½ unit of measurement).
        (iii) To compute probability/proportions/area at or above (equal to or greater than) a value of X, use the lower real limit of X to include every score at X as well as everything above it. To compute probability/proportions/area at or below (equal to or lower than) a value of X, use the upper real limit of X to include every score at X as well as everything below it.
        (iv) Thus, using these rules, calculating p(SAT 650 or higher) is the same thing as calculating p(SAT above 640). Both would use the real limit of 645 (which divides the 640 interval from the 650 interval). Similarly, p(shoe size smaller than 8) = p(shoe size of 7.5 or lower); both use the real limit of 7.75.
      (c) Between two particular X values or z-scores, which has two possibilities:
        (i) If the two values are on opposite sides of the mean (e.g., finding the middle X% of a distribution): add the two proportions/probabilities/areas together
        (ii) If the two values are on the same side of the mean: subtract lower from higher
      (d) Bottom line: When you do any computation like this on the test, make it clear whether or not you’re using real limits, and why (what your rationale is for using them or not; what area you’re actually finding).
      (e) Note: If you’re given z-scores, real limits aren’t an issue. Real limits only apply to raw scores.
    3. IMPORTANT: For all of these problems, sketch a normal distribution, draw on μ, σ, and the X value(s) in question, and shade the area you’re covering! The unit normal table does not differentiate positive and negative z-scores, so you must look at your sketch to determine the sign (+/-) of z. Your sketch will help avoid other simple mistakes.
  C. Binomial probabilities
    1. Graphing binomial data: What are X and Y (what goes on the X- and Y-axes)? What’s the range of X and Y?
    2. Calculating μ, σ, and z-scores for binomial data. E.g., z = [X - pn] / √[npq]
    3. Calculating binomial probability with simple frequencies (the “easy” way): If the two events A and B have an equal likelihood and you can map out all possible outcomes in a frequency distribution or histogram (e.g., number of heads in 6 coin tosses), you can compute binomial probabilities as proportions from the binomial distribution itself. Otherwise, go to the next option:
    4. Using the normal curve and unit normal table to estimate binomial probabilities:
      (a) Keep in mind that binomial data are discrete and the normal distribution is continuous, so each value (number) on the X axis of a binomial distribution represents a segment that is 1 unit wide (including ½ unit above and ½ unit below the value, i.e., real limits).
      (b) To calculate binomial probabilities, you first find the interval width in question (see above), and then use the table to calculate the area between those values.
      (c) In sum, calculating binomial probabilities from the normal approximation method (the normal curve / unit normal table) involves using real limits! See pp. 187-190 for a review of these concepts.

Chapter 7: Probability and Samples: The Distribution of Sample Means

I. Concepts
  A. Sampling error: discrepancy, or amount of error, between a sample statistic and its corresponding population parameter
  B. Sampling distribution (and how it relates to the distribution of scores in a population and in a sample)
    1. A distribution of statistics (from entire samples), instead of a distribution of individual scores
    2. Contains all possible samples of a specific size (all possible samples with a given n), so it is usually theoretical, and used to make statistical prediction models, or to make a set of rules that relate samples to populations.
    3. The distribution of sample means is one example of a sampling distribution
      (a) Definition: the collection of sample means for all the possible random samples of a particular size (n) that can be obtained from a population.
      (b) Tends toward (approaches) a normal distribution under either of these conditions:
        (i) Population data (from which samples are drawn) are normally distributed
        (ii) Number of scores (n) in each sample (pre-determined for the given sampling distribution) is relatively large (benchmark: 30 or more).
        So watch out: If your population data are not normally distributed and your sample sizes are too small, you cannot use the unit normal table to calculate the probability of obtaining a particular sample mean (see Learning Check #3 on p. 213).
    4. Know general characteristics/properties of sampling distributions (p. 203)
  C. Central limit theorem: For any population with mean μ and standard deviation σ, the distribution of sample means for sample size n will have a mean of μ and a standard deviation of σ/√n, and will approach a normal distribution as n approaches infinity.
  D. Parameters of the distribution of sample means:
    1. μM (=μ): Expected value of M.
      (a) The mean of all possible sample means (of a given n).
      (b) Always equals μ (because the sample mean is an unbiased statistic; on average it produces a value that is exactly equal to the corresponding population parameter).
    2. σM (=σ/√n): Standard error of the mean, or standard error of M.
      (a) The standard deviation of a distribution of all possible sample means (of a given n). The standard distance between M and μ.
      (b) Measures exactly how much difference is expected on average between M and μ. In other words, it specifies precisely how well a sample mean estimates a population mean. It is a quantitative measure of the difference (or error) between sample means and the population mean.
      (c) Equals σ/√n (not σ) because standard error shrinks as sample size increases (in proportion to 1/√n).
      (d) When n=1, the sampling distribution equals the population distribution, so σM=σ.
  E. Law of large numbers: As sample size increases, standard error of the mean (error between M and μ) decreases.
    1. i.e., the larger the n, the more probable it is that the sample mean will be closer to the population mean, or the more accurately the sample mean represents the population mean.
    2. Know mathematical and conceptual reasons for this.
  F. Why do we use the distribution of sample means? What is its function?
    1. To determine margin of error in sampling.
    2. To answer probability questions about sample means.
    3. More specifically, to understand which samples are likely to be obtained and which samples are not. In other words, what’s considered an “extreme” or “rare” sample, compared to the expected (or null hypothesized) population.
    4. In sum, the distribution of sample means is used in inferential statistics and hypothesis testing.
II. Computations
  A. Given population standard deviation σ and sample size n, determine how much error one can expect, on average, between the sample mean and the population mean. Calculate standard error of the mean: σM = σ/√n.
  B. Z-score for a sample mean: z = [M - μ]/σM.
  C. Calculate probabilities or proportions of obtaining particular samples/sample means (just like chapter 6, but using sample means instead of X scores, and using chapter 7 formulas).
  D. Determine if a given sample (mean) is reasonable or expected (versus if it is considered “extreme” or unlikely), in light of a particular population it is supposed to come from. Use z-score boundaries of z>1.96 or z<-1.96, unless otherwise indicated (see example p. 220).

Relationships Between/Among Concepts: In general, the concepts and calculations in these chapters build on each other (and on previous chapters). Some themes, though, include: Relationship between samples and population. Probability, proportions, area, and z-scores (using the normal curve / unit normal table). Role in inferential statistics.
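For checking hand calculations, the computations listed for Chapters 5-7 can be sketched in Python roughly as follows. This is only an illustrative sketch, not part of the course materials: the function names are made up for this example, `normal_cdf` stands in for the unit normal table (it returns the proportion at or below z, computed with the standard library's `math.erf`), and the numbers in the demo (an SAT mean of 500 with σ = 100, a binomial with n = 100 and p = .5, a sample of n = 25 with M = 108 from a population with μ = 100 and σ = 20) are assumed values chosen for illustration.

```python
import math


def z_score(x, mu, sigma):
    """Chapter 5: z = (X - mu) / sigma. The sign tells you above/below the mean."""
    return (x - mu) / sigma


def raw_score(z, mu, sigma):
    """Chapter 5: X = mu + z * sigma."""
    return mu + z * sigma


def normal_cdf(z):
    """Proportion of a normal curve at or below z (a stand-in for the table)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def area_between(low, high, mu, sigma):
    """Chapter 6: proportion of a normal distribution between two raw scores."""
    return normal_cdf(z_score(high, mu, sigma)) - normal_cdf(z_score(low, mu, sigma))


def p_exact(x, unit, mu, sigma):
    """Chapter 6: p(X = x) as the slice between the real limits of x."""
    half = unit / 2
    return area_between(x - half, x + half, mu, sigma)


def binomial_params(n, p):
    """Chapter 6: mean pn and standard deviation sqrt(npq) for binomial data."""
    q = 1.0 - p
    return n * p, math.sqrt(n * p * q)


def normal_approx_ok(n, p):
    """Chapter 6: the normal approximation applies when pn >= 10 and qn >= 10."""
    return n * p >= 10 and n * (1.0 - p) >= 10


def standard_error(sigma, n):
    """Chapter 7: sigma_M = sigma / sqrt(n)."""
    return sigma / math.sqrt(n)


def z_for_sample_mean(m, mu, sigma, n):
    """Chapter 7: z = (M - mu) / sigma_M."""
    return (m - mu) / standard_error(sigma, n)


def is_extreme(z, boundary=1.96):
    """Chapter 7: a sample mean is 'extreme' if z > 1.96 or z < -1.96."""
    return abs(z) > boundary


if __name__ == "__main__":
    # Assumed SAT parameters (mu = 500, sigma = 100); unit of measurement is 10.
    print("z for SAT 650:", z_score(650, 500, 100))
    print("p(SAT = 650), slice 645 to 655:", p_exact(650, 10, 500, 100))

    # Assumed binomial example: n = 100 trials with p = .5.
    mu_b, sigma_b = binomial_params(100, 0.5)
    if normal_approx_ok(100, 0.5):
        # "60 or more" starts at the lower real limit of 60, which is 59.5.
        print("p(X >= 60):", 1.0 - normal_cdf(z_score(59.5, mu_b, sigma_b)))

    # Assumed sample: M = 108, n = 25, from a population with mu = 100, sigma = 20.
    z_m = z_for_sample_mean(108, 100, 20, 25)
    print("z for sample mean:", z_m, "extreme?", is_extreme(z_m))
```

Note that the real limits are applied to the raw scores before converting to z, matching the point above that real limits only apply to raw scores. On the test you would read the proportions from the unit normal table rather than compute them, so a sketch like this is useful mainly for verifying practice problems.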