ISMA Centre, University of Reading

advertisement

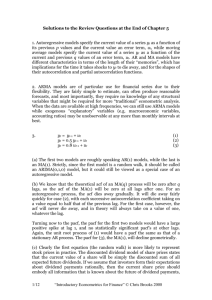

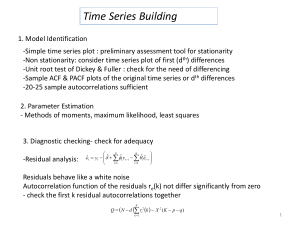

Solutions to the Review Questions at the End of Chapter 5

1. ARMA models are of particular use for financial series due to their flexibility. They are fairly simple to estimate, can often produce reasonable forecasts and, most importantly, require no knowledge of any structural variables that might be required for more “traditional” econometric analysis. When the data are available at high frequencies, we can still use ARMA models, whereas exogenous “explanatory” variables (e.g. macroeconomic variables, accounting ratios) may be unobservable at any more than monthly intervals at best.

2. The three models are

$$y_t = y_{t-1} + u_t \qquad (1)$$

$$y_t = 0.5\,y_{t-1} + u_t \qquad (2)$$

$$y_t = 0.8\,u_{t-1} + u_t \qquad (3)$$

(i) The first two models are, roughly speaking, AR(1) models, while the last is an MA(1). Strictly, since the first model is a random walk, it should be called an ARIMA(0,1,0) model, but it could still be viewed as a special case of an autoregressive model.

(ii) We know that the theoretical acf of an MA(q) process will be zero after q lags, so the acf of the MA(1) will be zero at all lags after one. For an autoregressive process, the acf dies away gradually. It will die away fairly quickly for case (2), with each successive autocorrelation coefficient taking on a value equal to half that of the previous lag. For the first case, however, the acf will never die away, and in theory will always take on a value of one, whatever the lag.

Turning now to the pacf, the pacf for the first two models would have a large positive spike at lag 1, and no statistically significant pacfs at other lags. In this respect, the unit root process of (1) would have the same pacf as a stationary AR process. The pacf for (3), the MA(1), will decline geometrically.

(iii) Clearly the first equation (the random walk) is more likely to represent stock prices in practice. The discounted dividend model of share prices states that the current value of a share will be simply the discounted sum of all expected future dividends.
If we assume that investors form their expectations about dividend payments rationally, then the current share price should embody all information that is known about the future of dividend payments, and hence today’s price should only differ from yesterday’s by the amount of unexpected news which influences dividend payments. Thus stock prices should follow a random walk. Note that we could apply a similar rational expectations and random walk model to many other kinds of financial series.

If the stock market really followed the process described by equations (2) or (3), then we could potentially make useful forecasts of the series using our model. In the latter case of the MA(1), we could only make one-step ahead forecasts since the “memory” of the model is only that length. In the case of equation (2), we could potentially make a lot of money by forming multiple step ahead forecasts and trading on the basis of these. Hence after a period, it is likely that other investors would spot this potential opportunity and the model would no longer be a useful description of the data.

“Introductory Econometrics for Finance” © Chris Brooks 2002

(iv) See the book for the algebra. This part of the question is really an extension of the others. Analysing the simplest case first, the MA(1), the “memory” of the process will only be one period, and therefore a given shock or “innovation”, $u_t$, will only persist in the series (i.e. be reflected in $y_t$) for one period. After that, the effect of a given shock would have completely worked through.

For the case of the AR(1) given in equation (2), a given shock, $u_t$, will persist indefinitely and will therefore influence the properties of $y_t$ for ever, but its effect upon $y_t$ will diminish exponentially as time goes on.

For the random walk in equation (1), the series $y_t$ could be written as an infinite sum of past shocks, and therefore the effect of a given shock will persist indefinitely, and its effect will not diminish over time.

3.
(i) Box and Jenkins were the first to consider ARMA modelling in this logical and coherent fashion. Their methodology consists of 3 steps:

Identification - determining the appropriate order of the model using graphical procedures (e.g. plots of autocorrelation functions).

Estimation - of the parameters of the model of size given in the first stage. This can be done using least squares or maximum likelihood, depending on the model.

Diagnostic checking - this step is to ensure that the model actually estimated is “adequate”. B & J suggest two methods for achieving this:

- Overfitting, which involves deliberately fitting a model larger than that suggested in step 1 and testing the hypothesis that all the additional coefficients can jointly be set to zero.
- Residual diagnostics. If the model estimated is a good description of the data, there should be no further linear dependence in the residuals of the estimated model. Therefore, we could calculate the residuals from the estimated model, and use the Ljung-Box test on them, or calculate their acf. If either of these reveals evidence of additional structure, then we assume that the estimated model is not an adequate description of the data.

If the model appears to be adequate, then it can be used for policy analysis and for constructing forecasts. If it is not adequate, then we must go back to stage 1 and start again!

(ii) The main problem with the B & J methodology is the inexactness of the identification stage. Autocorrelation functions and partial autocorrelations for actual data are very difficult to interpret accurately, rendering the whole procedure often little more than educated guesswork. A further problem concerns the diagnostic checking stage, which will only indicate when the proposed model is “too small” and would not inform on when the model proposed is “too large”.

(iii) We could use Akaike’s or Schwarz’s Bayesian information criteria.
Our objective would then be to fit the model order that minimises these. We can calculate the value of Akaike’s (AIC) and Schwarz’s (SBIC) Bayesian information criteria using the following respective formulae

$$AIC = \ln(\hat{\sigma}^2) + \frac{2k}{T}$$

$$SBIC = \ln(\hat{\sigma}^2) + \frac{k}{T}\ln(T)$$

where $\hat{\sigma}^2$ is the estimated residual variance, $k$ is the number of parameters and $T$ is the sample size. The information criteria trade off an increase in the number of parameters, and therefore an increase in the penalty term, against a fall in the RSS, implying a closer fit of the model to the data.

4. The best way to check for stationarity is to express the model as a lag polynomial in $y_t$.

$$y_t = 0.803\,y_{t-1} + 0.682\,y_{t-2} + u_t$$

Rewrite this as

$$y_t(1 - 0.803L - 0.682L^2) = u_t$$

We want to find the roots of the lag polynomial

$$(1 - 0.803L - 0.682L^2) = 0$$

and determine whether they are greater than one in absolute value. It is easier (in my opinion) to rewrite this formula (by multiplying through by $-1/0.682$, using $z$ for the characteristic equation and rearranging) as

$$z^2 + 1.177z - 1.466 = 0$$

Using the standard formula for obtaining the roots of a quadratic equation,

$$z = \frac{-1.177 \pm \sqrt{1.177^2 - 4 \times 1 \times (-1.466)}}{2} = 0.758 \text{ or } -1.934$$

Since ALL the roots must be greater than one in absolute value for the model to be stationary, and the root 0.758 is not, we conclude that the estimated model is not stationary in this case.

5. Using the formulae above, we end up with the following values for each criterion and for each model order (with an asterisk denoting the smallest value of the information criterion in each case).

| ARMA (p,q) model order | $\log(\hat{\sigma}^2)$ | AIC | SBIC |
|---|---|---|---|
| (0,0) | 0.932 | 0.942 | 0.944 |
| (1,0) | 0.864 | 0.884 | 0.887 |
| (0,1) | 0.902 | 0.922 | 0.925 |
| (1,1) | 0.836 | 0.866 | 0.870 |
| (2,1) | 0.801 | 0.841 | 0.847 |
| (1,2) | 0.821 | 0.861 | 0.867 |
| (2,2) | 0.789 | 0.839 | 0.846 |
| (3,2) | 0.773 | 0.833* | 0.842* |
| (2,3) | 0.782 | 0.842 | 0.851 |
| (3,3) | 0.764 | 0.834 | 0.844 |

The result is pretty clear: both SBIC and AIC say that the appropriate model is an ARMA(3,2).

6.
We could still perform the Ljung-Box test on the residuals of the estimated models to see if there was any linear dependence left unaccounted for by our postulated models. Another test of the models’ adequacy that we could use is to leave out some of the observations at the identification and estimation stage, and attempt to construct out of sample forecasts for these. For example, if we have 2000 observations, we may use only 1800 of them to identify and estimate the models, and leave the remaining 200 for construction of forecasts. We would then prefer the model that gave the most accurate forecasts.

7. This is not true in general. Yes, we do want to form a model which “fits” the data as well as possible. But in most financial series, there is a substantial amount of “noise”. This can be interpreted as a number of random events that are unlikely to be repeated in any forecastable way. We want to fit a model to the data which will be able to “generalise”. In other words, we want a model which fits to features of the data which will be replicated in future; we do not want to fit to sample-specific noise. This is why we need the concept of “parsimony” - fitting the smallest possible model to the data. Otherwise we may get a great fit to the data in sample, but any use of the model for forecasts could yield terrible results.

Another important point is that the larger the number of estimated parameters (i.e. the more variables we have), the smaller will be the number of degrees of freedom, and this will imply that coefficient standard errors will be larger than they would otherwise have been. This could lead to a loss of power in hypothesis tests, so that variables that would otherwise have been significant become insignificant.

8. (a) We class an autocorrelation coefficient or partial autocorrelation coefficient as significant if it exceeds $1.96 \times \frac{1}{\sqrt{T}} = 0.196$ in absolute value. Under this rule, the sample autocorrelation functions (sacfs) at lags 1 and 4 are significant, and the spacfs at lags 1, 2, 3, 4 and 5 are all significant. The data therefore look consistent with a first order moving average process, since all but the first acf are insignificant (the significant lag 4 acf is a typical wrinkle that one might expect with real data and should probably be ignored), and the pacf has a slowly declining structure.

(b) The formula for the Ljung-Box Q* test is given by

$$Q^* = T(T+2)\sum_{k=1}^{m}\frac{\hat{\tau}_k^2}{T-k} \sim \chi^2_m$$

using the standard notation. In this case, $T = 100$ and $m = 3$. The null hypothesis is $H_0$: $\tau_1 = 0$ and $\tau_2 = 0$ and $\tau_3 = 0$. The test statistic is calculated as

$$Q^* = 100 \times 102 \times \left[\frac{0.420^2}{100-1} + \frac{0.104^2}{100-2} + \frac{0.032^2}{100-3}\right] = 19.41$$

The 5% and 1% critical values for a $\chi^2$ distribution with 3 degrees of freedom are 7.81 and 11.3 respectively. Clearly, then, we would reject the null hypothesis that the first three autocorrelation coefficients are jointly zero.

9. (a) To solve this, we need the concept of a conditional expectation, i.e. $E_{t-1}(y_t \mid y_{t-1}, y_{t-2}, \ldots)$.

For example, in the context of an AR(1) model such as

$$y_t = a_0 + a_1y_{t-1} + u_t$$

if we are now at time $t-1$, and dropping the $t-1$ subscript on the expectations operator,

$$E(y_t) = a_0 + a_1y_{t-1}$$

$$E(y_{t+1}) = a_0 + a_1E(y_t) = a_0 + a_1(a_0 + a_1y_{t-1}) = a_0 + a_0a_1 + a_1^2y_{t-1}$$

$$E(y_{t+2}) = a_0 + a_1E(y_{t+1}) = a_0 + a_1(a_0 + a_1E(y_t)) = a_0 + a_0a_1 + a_1^2E(y_t) = a_0 + a_0a_1 + a_1^2(a_0 + a_1y_{t-1}) = a_0 + a_0a_1 + a_0a_1^2 + a_1^3y_{t-1}$$

etc. In forecast notation,

$$f_{t-1,1} = a_0 + a_1y_{t-1}$$

$$f_{t-1,2} = a_0 + a_1f_{t-1,1}$$

$$f_{t-1,3} = a_0 + a_1f_{t-1,2}$$

To forecast an MA model, consider, e.g.

$$y_t = u_t + b_1u_{t-1}$$

So

$$f_{t-1,1} = E(y_t \mid y_{t-1}, y_{t-2}, \ldots) = E(u_t + b_1u_{t-1}) = b_1u_{t-1}$$

But

$$f_{t-1,2} = E(y_{t+1} \mid y_{t-1}, y_{t-2}, \ldots) = E(u_{t+1} + b_1u_t) = 0$$
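The two recursions above can be sketched in a few lines of Python. This is only an illustration: the function names `ar1_forecasts` and `ma1_forecasts` and the parameter values used in the demonstration are hypothetical and are not taken from the question.

```python
def ar1_forecasts(a0, a1, y_last, steps):
    """Iterate f_s = a0 + a1 * f_(s-1), starting from the last observed value."""
    forecasts = []
    previous = y_last
    for _ in range(steps):
        previous = a0 + a1 * previous
        forecasts.append(previous)
    return forecasts


def ma1_forecasts(b1, u_last, steps):
    """Zero-mean MA(1): b1 * u_last one step ahead, zero at every longer horizon."""
    return [b1 * u_last if s == 0 else 0.0 for s in range(steps)]


# Hypothetical values, chosen only to show the shape of the forecast paths.
ar_path = ar1_forecasts(a0=0.1, a1=0.5, y_last=2.0, steps=3)
ma_path = ma1_forecasts(b1=0.8, u_last=-1.0, steps=3)
print("AR(1) forecasts:", ar_path)
print("MA(1) forecasts:", ma_path)
```

The AR(1) path decays towards the unconditional mean as the horizon grows, while the MA(1) path collapses to zero after a single step, which is the “memory” argument made in the text.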
Returning to the example model,

$$y_t = 0.036 + 0.69y_{t-1} + 0.42u_{t-1} + u_t$$

Suppose that we know the values at $t-1$, $t-2$, ... and we are trying to forecast $y_t$. Our forecast for $t$ is given by

$$f_{t-1,1} = E(y_t \mid y_{t-1}, y_{t-2}, \ldots) = E(0.036 + 0.69y_{t-1} + 0.42u_{t-1} + u_t) = 0.036 + 0.69 \times 3.4 + 0.42 \times (-1.3) = 1.836$$

$$f_{t-1,2} = E(y_{t+1} \mid y_{t-1}, y_{t-2}, \ldots) = E(0.036 + 0.69y_t + 0.42u_t + u_{t+1})$$

But we do not know $y_t$ or $u_t$ at time $t-1$. Replace $y_t$ with our forecast of $y_t$, which is $f_{t-1,1}$, and $u_t$ with its expected value of zero.

$$f_{t-1,2} = 0.036 + 0.69f_{t-1,1} = 0.036 + 0.69 \times 1.836 = 1.302$$

$$f_{t-1,3} = 0.036 + 0.69f_{t-1,2} = 0.036 + 0.69 \times 1.302 = 0.935$$

etc.

(b) Given the forecasts and the actual value, it is very easy to calculate the MSE by plugging the numbers in to the relevant formula, which in this case is

$$MSE = \frac{1}{N}\sum_{n=1}^{N}(x_{t-1+n} - f_{t-1,n})^2$$

if we are making $N$ forecasts, which are here numbered 1, 2, 3. Then the MSE is given by

$$MSE = \frac{1}{3}\left[(-0.032 - 1.836)^2 + (0.961 - 1.302)^2 + (0.203 - 0.935)^2\right] = \frac{1}{3}(3.489 + 0.116 + 0.536) = \frac{4.141}{3} = 1.380$$

Notice also that 84% of the total MSE is coming from the error in the first forecast. Thus error measures can be driven by one or two times when the model fits very badly. For example, if the forecast period includes a stock market crash, this can lead the mean squared error to be 100 times bigger than it would have been if the crash observations were not included. This point needs to be considered whenever forecasting models are evaluated. An idea of whether this is a problem in a given situation can be gained by plotting the forecast errors over time.

(c) This question is much simpler to answer than it looks! In fact, the inclusion of the smoothing coefficient is a “red herring” - i.e. a piece of misleading and useless information. The correct approach is to say that if we believe that the exponential smoothing model is appropriate, then all useful information will have already been used in the calculation of the current smoothed value (which will of course have used the smoothing coefficient in its calculation).
Thus the three forecasts are all 0.0305.

(d) The solution is to work out the mean squared error for the exponential smoothing model. The calculation is

$$MSE = \frac{1}{3}\left[(-0.032 - 0.0305)^2 + (0.961 - 0.0305)^2 + (0.203 - 0.0305)^2\right] = \frac{1}{3}(0.0039 + 0.8658 + 0.0298) = 0.2998$$

Therefore, we conclude that since the mean squared error is smaller for the exponential smoothing model than the Box Jenkins model, the former produces the more accurate forecasts. We should, however, bear in mind that the question of accuracy was determined using only 3 forecasts, which would be insufficient in a real application.

10. (a) The shapes of the acf and pacf are perhaps best summarised in a table:

| Process | acf | pacf |
|---|---|---|
| White noise | No significant coefficients | No significant coefficients |
| AR(2) | Geometrically declining or damped sinusoid acf | First 2 pacf coefficients significant, all others insignificant |
| MA(1) | First acf coefficient significant, all others insignificant | Geometrically declining or damped sinusoid pacf |
| ARMA(2,1) | Geometrically declining or damped sinusoid acf | Geometrically declining or damped sinusoid pacf |

A couple of further points are worth noting. First, it is not possible to tell what the signs of the coefficients for the acf or pacf would be for the last three processes, since that would depend on the signs of the coefficients of the processes. Second, for mixed processes, the AR part dominates from the point of view of acf calculation, while the MA part dominates for pacf calculation.

(b) The important point here is to focus on the MA part of the model and to ignore the AR dynamics. The characteristic equation would be

$$(1 + 0.42z) = 0$$

The root of this equation is $-1/0.42 = -2.38$, which lies outside the unit circle, and therefore the MA part of the model is invertible.

(c) Since no values for the series y or the lagged residuals are given, the answers should be stated in terms of y and of u.
Assuming that information is available up to and including time t, the 1-step ahead forecast would be for time t+1, the 2-step ahead for time t+2 and so on. A useful first step would be to write the model out for y at times t+1, t+2, t+3, t+4:

$$y_{t+1} = 0.036 + 0.69y_t + 0.42u_t + u_{t+1}$$

$$y_{t+2} = 0.036 + 0.69y_{t+1} + 0.42u_{t+1} + u_{t+2}$$

$$y_{t+3} = 0.036 + 0.69y_{t+2} + 0.42u_{t+2} + u_{t+3}$$

$$y_{t+4} = 0.036 + 0.69y_{t+3} + 0.42u_{t+3} + u_{t+4}$$

The 1-step ahead forecast would simply be the conditional expectation of y for time t+1 made at time t. Denoting the 1-step ahead forecast made at time t as $f_{t,1}$, the 2-step ahead forecast made at time t as $f_{t,2}$ and so on:

$$f_{t,1} = E(y_{t+1} \mid y_t, y_{t-1}, \ldots) = E_t[y_{t+1}] = E_t[0.036 + 0.69y_t + 0.42u_t + u_{t+1}] = 0.036 + 0.69y_t + 0.42u_t$$

since $E_t[u_{t+1}] = 0$. The 2-step ahead forecast would be given by

$$f_{t,2} = E(y_{t+2} \mid y_t, y_{t-1}, \ldots) = E_t[y_{t+2}] = E_t[0.036 + 0.69y_{t+1} + 0.42u_{t+1} + u_{t+2}] = 0.036 + 0.69f_{t,1}$$

since $E_t[u_{t+1}] = 0$ and $E_t[u_{t+2}] = 0$. Thus, beyond 1-step ahead, the MA(1) part of the model disappears from the forecast and only the autoregressive part remains. Although we do not know $y_{t+1}$, its expected value is the 1-step ahead forecast that was made at the first stage, $f_{t,1}$.

The 3-step ahead forecast would be given by

$$f_{t,3} = E(y_{t+3} \mid y_t, y_{t-1}, \ldots) = E_t[y_{t+3}] = E_t[0.036 + 0.69y_{t+2} + 0.42u_{t+2} + u_{t+3}] = 0.036 + 0.69f_{t,2}$$

and the 4-step ahead by

$$f_{t,4} = E(y_{t+4} \mid y_t, y_{t-1}, \ldots) = E_t[y_{t+4}] = E_t[0.036 + 0.69y_{t+3} + 0.42u_{t+3} + u_{t+4}] = 0.036 + 0.69f_{t,3}$$

(d) A number of methods for aggregating the forecast errors to produce a single forecast evaluation measure were suggested in the paper by Makridakis and Hibon (1995) and some discussion is presented in Sections 5.12.8 and 5.12.9. Any of the methods suggested there could be discussed.
A good answer would present an expression for the evaluation measures, with any notation introduced being carefully defined, together with a discussion of why the measure takes the form that it does and what the advantages and disadvantages of its use are compared with other methods.

(e) Moving average and ARMA models cannot be estimated using OLS; they are usually estimated by maximum likelihood. Autoregressive models can be estimated using OLS or maximum likelihood. Pure autoregressive models contain only lagged values of observed quantities on the RHS, and therefore the lags of the dependent variable can be used just like any other regressors. However, in the context of MA and mixed models, the lagged values of the error term that occur on the RHS are not known a priori. Hence, these quantities are replaced by the residuals, which are not available until after the model has been estimated. But equally, these residuals are required in order to be able to estimate the model parameters. Maximum likelihood essentially works around this by calculating the values of the coefficients and the residuals at the same time. Maximum likelihood involves selecting the most likely values of the parameters given the actual data sample, and given an assumed statistical distribution for the errors. This technique will be discussed in greater detail in the section on volatility modelling in Chapter 8.

11. (a) Some of the stylised differences between the typical characteristics of macroeconomic and financial data were presented in Section 1.2 of Chapter 1. In particular, one important difference is the frequency with which financial asset return time series and other quantities in finance can be recorded. This is of particular relevance for the models discussed in Chapter 5, since it is usually a requirement that all of the time series used in estimating a given model must be of the same frequency.
Thus, if, for example, we wanted to build a model for forecasting hourly changes in exchange rates, it would be difficult to set up a structural model containing macroeconomic explanatory variables since the macroeconomic variables are likely to be measured on a quarterly or at best monthly basis. This gives a motivation for using pure time-series approaches (e.g. ARMA models), rather than structural formulations with separate explanatory variables.

It is also often of particular interest to produce forecasts of financial variables in real time. Producing forecasts from pure time-series models is usually simply an exercise in iterating with conditional expectations. But producing forecasts from structural models is considerably more difficult, and would usually require the production of forecasts for the structural variables as well.

(b) A simple “rule of thumb” for determining whether autocorrelation coefficients and partial autocorrelation coefficients are statistically significant is to classify them as significant at the 5% level if they lie outside $\pm 1.96 \times \frac{1}{\sqrt{T}}$, where $T$ is the sample size. In this case, $T = 500$, so a particular coefficient would be deemed significant if it is larger than 0.088 or smaller than $-0.088$. On this basis, the autocorrelation coefficients at lags 1 and 5 and the partial autocorrelation coefficients at lags 1, 2, and 3 would be classed as significant.

The formulae for the Box-Pierce and the Ljung-Box test statistics are respectively

$$Q = T\sum_{k=1}^{m}\hat{\tau}_k^2 \quad \text{and} \quad Q^* = T(T+2)\sum_{k=1}^{m}\frac{\hat{\tau}_k^2}{T-k}.$$

In this instance, the statistics would be calculated respectively as

$$Q = 500 \times \left[0.307^2 + (-0.013)^2 + 0.086^2 + 0.031^2 + (-0.197)^2\right] = 70.79$$

and

$$Q^* = 500 \times 502 \times \left[\frac{0.307^2}{500-1} + \frac{(-0.013)^2}{500-2} + \frac{0.086^2}{500-3} + \frac{0.031^2}{500-4} + \frac{(-0.197)^2}{500-5}\right] = 71.39$$

The test statistics will both follow a $\chi^2$ distribution with 5 degrees of freedom (the number of autocorrelation coefficients being used in the test).
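As a quick check on the arithmetic, the two statistics and the significance band can be reproduced with a short Python sketch. The function names are illustrative; the negative signs on the lag 2 and lag 5 coefficients are an assumption read from the bracketing in the calculation, and they have no effect on Q or Q* because the coefficients are squared.

```python
def box_pierce(acf, T):
    """Box-Pierce Q: T times the sum of squared autocorrelations."""
    return T * sum(r * r for r in acf)


def ljung_box(acf, T):
    """Ljung-Box Q*: T(T+2) times the sum of r_k^2 / (T - k) for k = 1..m."""
    return T * (T + 2) * sum(r * r / (T - k) for k, r in enumerate(acf, start=1))


T = 500
# Sample autocorrelations at lags 1 to 5 (signs of lags 2 and 5 assumed negative).
acf = [0.307, -0.013, 0.086, 0.031, -0.197]

Q = box_pierce(acf, T)
Q_star = ljung_box(acf, T)

# Rule-of-thumb 5% band for an individual coefficient: +/- 1.96 / sqrt(T).
band = 1.96 / T ** 0.5
significant_lags = [k for k, r in enumerate(acf, start=1) if abs(r) > band]

print(f"Q = {Q:.2f}, Q* = {Q_star:.2f}")
print(f"band = +/-{band:.3f}, significant acf lags: {significant_lags}")
```

The two statistics are close here because T is large; in small samples the $(T+2)/(T-k)$ weighting makes Q* noticeably larger than Q.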
The critical values are 11.07 and 15.09 at 5% and 1% respectively. Clearly, the null hypothesis that the first 5 autocorrelation coefficients are jointly zero is resoundingly rejected.

(c) Setting aside the lag 5 autocorrelation coefficient, the pattern in the table is for the autocorrelation coefficient to only be significant at lag 1 and then to fall rapidly to values close to zero, while the partial autocorrelation coefficients appear to fall much more slowly as the lag length increases. These characteristics would lead us to think that an appropriate model for this series is an MA(1). Of course, the autocorrelation coefficient at lag 5 is an anomaly that does not fit in with the pattern of the rest of the coefficients. But such a result would be typical of a real data series (as opposed to a simulated data series that would have a much cleaner structure). This serves to illustrate that when econometrics is used for the analysis of real data, the data generating process was almost certainly not any of the models in the ARMA family. So all we are trying to do is to find a model that best describes the features of the data to hand. As one econometrician put it, all models are wrong, but some are useful!

(d) Forecasts from these models would be produced in the usual way. Using the same notation as above, and letting $f_{z,1}$ denote the forecast for time z+1 made for x at time z, etc:

Model A: MA(1)

$$\hat{x}_t = 0.38 + 0.10u_{t-1}$$

$$f_{z,1} = 0.38 + 0.10 \times (-0.02) = 0.378$$

$$f_{z,2} = f_{z,3} = f_{z,4} = 0.38$$

Note that the MA(1) model only has a memory of one period, so all forecasts further than one step ahead will be equal to the intercept.

Model B: AR(2)

$$\hat{x}_t = 0.63 + 0.17x_{t-1} - 0.09x_{t-2}$$

$$f_{z,1} = 0.63 + 0.17 \times 0.31 - 0.09 \times 0.02 = 0.681$$

$$f_{z,2} = 0.63 + 0.17 \times 0.681 - 0.09 \times 0.31 = 0.718$$

$$f_{z,3} = 0.63 + 0.17 \times 0.718 - 0.09 \times 0.681 = 0.690$$

$$f_{z,4} = 0.63 + 0.17 \times 0.690 - 0.09 \times 0.718 = 0.683$$

(e) The methods are overfitting and residual diagnostics.
Overfitting involves selecting a deliberately larger model than the proposed one, and examining the statistical significances of the additional parameters. If the additional parameters are statistically insignificant, then the originally postulated model is deemed acceptable. The larger model would usually involve the addition of one extra MA term and one extra AR term. Thus it would be sensible to try an ARMA(1,2) in the context of Model A, and an ARMA(3,1) in the context of Model B.

Residual diagnostics would involve examining the acf and pacf of the residuals from the estimated model. If the residuals showed any “action”, that is, if any of the acf or pacf coefficients showed statistical significance, this would suggest that the original model was inadequate. “Residual diagnostics” in the Box-Jenkins sense of the term involved only examining the acf and pacf, rather than the array of diagnostics considered in Chapter 4.

It is worth noting that these two model evaluation procedures would only indicate a model that was too small. If the model were too large, i.e. it had superfluous terms, these procedures would deem the model adequate.

(f) There are obviously several forecast accuracy measures that could be employed, including MSE, MAE, and the percentage of correct sign predictions. Assuming that MSE is used, the MSE for each model is

$$MSE(\text{Model A}) = \frac{1}{4}\left[(0.378 - 0.62)^2 + (0.38 - 0.19)^2 + (0.38 - (-0.32))^2 + (0.38 - 0.72)^2\right] = 0.175$$

$$MSE(\text{Model B}) = \frac{1}{4}\left[(0.681 - 0.62)^2 + (0.718 - 0.19)^2 + (0.690 - (-0.32))^2 + (0.683 - 0.72)^2\right] = 0.326$$

Therefore, since the mean squared error for Model A is smaller, it would be concluded that the moving average model is the more accurate of the two in this case.
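The comparison in (f) can be reproduced with a short Python sketch that also computes the other two measures mentioned there. The helper names are illustrative. The actual values are taken as 0.62, 0.19, -0.32 and 0.72; the negative sign on the third value is an assumption, chosen because it is the reading that reproduces the reported MSEs of 0.175 and 0.326.

```python
def mse(actual, forecast):
    """Mean squared error."""
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def pct_correct_sign(actual, forecast):
    """Percentage of forecasts with the same sign as the realised value."""
    hits = sum(1 for a, f in zip(actual, forecast) if a * f > 0)
    return 100.0 * hits / len(actual)


# Realised values for z+1..z+4 (third value assumed negative, see lead-in).
actual = [0.62, 0.19, -0.32, 0.72]
# Forecasts as rounded in the solution to part (d).
model_a = [0.378, 0.38, 0.38, 0.38]    # MA(1)
model_b = [0.681, 0.718, 0.690, 0.683]  # AR(2)

mse_a, mse_b = mse(actual, model_a), mse(actual, model_b)
for name, fc in (("Model A", model_a), ("Model B", model_b)):
    print(f"{name}: MSE = {mse(actual, fc):.3f}, MAE = {mae(actual, fc):.3f}, "
          f"correct sign = {pct_correct_sign(actual, fc):.0f}%")
```

With only four forecasts the ranking is dominated by the single negative observation, which echoes the earlier caution that a handful of forecasts would be insufficient in a real application.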