ANOVA, REGRESSION, CORRELATION

advertisement

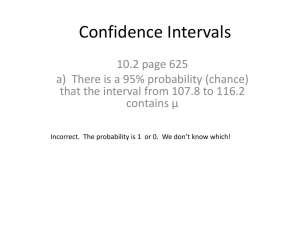

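252regr 2/26/07 (Open this document in 'Outline' view!) Roger Even Bove

G. LINEAR REGRESSION-Curve Fitting

1. Exact vs. Inexact Relations

2. The Ordinary Least Squares Formula

We wish to estimate the coefficients in $Y = \beta_0 + \beta_1 X + \varepsilon$. Our 'prediction' will be $\hat{Y} = b_0 + b_1 X$ and our error will be $e = Y - \hat{Y}$, so that $Y = b_0 + b_1 X + e$. (See the appendix for the derivation.)

$$b_1 = \frac{\sum XY - n\bar{X}\bar{Y}}{\sum X^2 - n\bar{X}^2}, \qquad b_0 = \bar{Y} - b_1\bar{X}$$

3. Example

| $i$ | $Y$ | $X$ | $XY$ | $X^2$ | $Y^2$ |
|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 0 |
| 2 | 2 | 1 | 2 | 1 | 4 |
| 3 | 1 | 2 | 2 | 4 | 1 |
| 4 | 3 | 1 | 3 | 1 | 9 |
| 5 | 1 | 0 | 0 | 0 | 1 |
| 6 | 3 | 3 | 9 | 9 | 9 |
| 7 | 4 | 4 | 16 | 16 | 16 |
| 8 | 2 | 2 | 4 | 4 | 4 |
| 9 | 1 | 2 | 2 | 4 | 1 |
| 10 | 2 | 1 | 2 | 1 | 4 |
| sum | 19 | 16 | 40 | 40 | 49 |

First copy $n = 10$, $\sum X = 16$, $\sum Y = 19$, $\sum XY = 40$, $\sum X^2 = 40$ and $\sum Y^2 = 49$. Then compute the means:

$$\bar{X} = \frac{\sum X}{n} = \frac{16}{10} = 1.60, \qquad \bar{Y} = \frac{\sum Y}{n} = \frac{19}{10} = 1.90$$

Use these to compute the 'spare parts':

$$SS_x = \sum X^2 - n\bar{X}^2 = 40 - 10(1.60)^2 = 14.40$$

$$SS_y = \sum Y^2 - n\bar{Y}^2 = 49 - 10(1.90)^2 = 12.90 = SST \quad \text{(Total Sum of Squares)}$$

$$S_{xy} = \sum XY - n\bar{X}\bar{Y} = 40 - 10(1.60)(1.90) = 9.60$$

Note that $SS_x$ and $SS_y$ must be positive, while $S_{xy}$ can be either positive or negative. We can now compute the coefficients:

$$b_1 = \frac{S_{xy}}{SS_x} = \frac{\sum XY - n\bar{X}\bar{Y}}{\sum X^2 - n\bar{X}^2} = \frac{9.60}{14.40} = 0.6667$$

$$b_0 = \bar{Y} - b_1\bar{X} = 1.90 - 0.6667(1.60) = 0.8333$$

So our regression equation is $\hat{Y} = 0.8333 + 0.6667X$, or $Y = 0.8333 + 0.6667X + e$.

4. $R^2$, the Coefficient of Determination

$SST = SSR + SSE$, where $SSR = b_1 S_{xy}$ is the Regression (Explained) Sum of Squares and $SSE = SST - SSR$ is the Error (Unexplained or Residual) Sum of Squares. $SSE$ is defined as $\sum (Y - \hat{Y})^2$, a formula that should never be used for computation.

$$R^2 = \frac{SSR}{SST} = \frac{b_1 S_{xy}}{SS_y} = \frac{S_{xy}^2}{SS_x\, SS_y} = \frac{(9.60)^2}{(14.40)(12.90)} = .4961$$

An alternate formula, if no spare parts have been computed, is

$$R^2 = \frac{b_0\sum Y + b_1\sum XY - n\bar{Y}^2}{\sum Y^2 - n\bar{Y}^2}$$

$R^2 = r^2$: the coefficient of determination is the square of the correlation. Note that $S_{xy}$, $b_1$ and $r$ all have the same sign.

H. LINEAR REGRESSION-Simple Regression

1. Fitting a Line

2. The Gauss-Markov Theorem

OLS is BLUE (the Best Linear Unbiased Estimator).

3. Standard Errors

The standard error is defined by

$$s_e^2 = \frac{\sum (Y - \hat{Y})^2}{n-2} = \frac{SSE}{n-2}$$

In terms of the spare parts,

$$s_e^2 = \frac{SSE}{n-2} = \frac{SST - SSR}{n-2} = \frac{SS_y - b_1 S_{xy}}{n-2} = \frac{SS_y - b_1^2\, SS_x}{n-2}$$

Or, if no spare parts are available,

$$s_e^2 = \frac{\sum Y^2 - b_0\sum Y - b_1\sum XY}{n-2}$$

Note also that if $R^2$ is available, $s_e^2 = \dfrac{SS_y(1 - R^2)}{n-2}$.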
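The hand calculations in G3, G4 and H3 can be checked with a short script. Below is a minimal Python sketch. The function name `simple_ols` and its dictionary keys are illustrative choices and do not come from the notes. The script computes:

* the column sums and the spare parts;
* $b_0$ and $b_1$;
* both $R^2$ formulas;
* all three forms of $s_e^2$.

It uses the definitional $\sum (Y - \hat{Y})^2$ only as a cross-check of $SST = SSR + SSE$.

```python
# Sketch: reproduce the hand calculations of G3, G4 and H3.
# Data copied from the example table (n = 10).
X = [0, 1, 2, 1, 0, 3, 4, 2, 2, 1]
Y = [0, 2, 1, 3, 1, 3, 4, 2, 1, 2]


def simple_ols(x, y):
    """Spare parts, OLS coefficients, R^2 and s_e^2 for a simple regression."""
    n = len(x)
    # Column sums, as in the 'sum' row of the table
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_x2 = sum(a * a for a in x)
    sum_y2 = sum(b * b for b in y)
    x_bar, y_bar = sum_x / n, sum_y / n

    # Spare parts
    ss_x = sum_x2 - n * x_bar ** 2
    ss_y = sum_y2 - n * y_bar ** 2          # SST
    s_xy = sum_xy - n * x_bar * y_bar

    # OLS coefficients
    b1 = s_xy / ss_x
    b0 = y_bar - b1 * x_bar

    # SST = SSR + SSE
    ssr = b1 * s_xy
    sse = ss_y - ssr
    r2 = s_xy ** 2 / (ss_x * ss_y)
    # Alternate R^2 formula that needs no spare parts
    r2_alt = (b0 * sum_y + b1 * sum_xy - n * y_bar ** 2) / (sum_y2 - n * y_bar ** 2)

    # Three equivalent forms of s_e^2
    s_e2 = sse / (n - 2)
    s_e2_raw = (sum_y2 - b0 * sum_y - b1 * sum_xy) / (n - 2)
    s_e2_r2 = ss_y * (1 - r2) / (n - 2)

    # Definitional SSE = sum (Y - Y-hat)^2, used here only as a cross-check
    sse_direct = sum((b - (b0 + b1 * a)) ** 2 for a, b in zip(x, y))

    return {
        "ss_x": ss_x, "ss_y": ss_y, "s_xy": s_xy, "b0": b0, "b1": b1,
        "ssr": ssr, "sse": sse, "sse_direct": sse_direct,
        "r2": r2, "r2_alt": r2_alt,
        "s_e2": s_e2, "s_e2_raw": s_e2_raw, "s_e2_r2": s_e2_r2,
    }


res = simple_ols(X, Y)
for key in ("ss_x", "ss_y", "s_xy", "b0", "b1", "r2", "s_e2"):
    print(f"{key:>4} = {res[key]:.4f}")
```

Up to rounding, the printed values should agree with the hand results: 14.40, 12.90, 9.60, 0.8333, 0.6667, .4961 and 0.8125.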
Using the data from G3 and our spare parts $SS_x = 14.40$, $SS_y = 12.90 = SST$ and $S_{xy} = 9.60$:

$$s_e^2 = \frac{\sum Y^2 - n\bar{Y}^2 - b_1\left(\sum XY - n\bar{X}\bar{Y}\right)}{n-2} = \frac{SS_y - b_1 S_{xy}}{n-2} = \frac{12.90 - 0.6667(9.60)}{8} = 0.8125$$

4. The Variance of $b_0$ and $b_1$

$$s_{b_0}^2 = s_e^2\left(\frac{1}{n} + \frac{\bar{X}^2}{SS_x}\right) \qquad \text{and} \qquad s_{b_1}^2 = s_e^2\left(\frac{1}{SS_x}\right)$$

I. LINEAR REGRESSION-Confidence Intervals and Tests

1. Confidence Intervals for $b_1$

$$\beta_1 = b_1 \pm t_{\alpha/2}\, s_{b_1}, \qquad df = n - 2$$

The interval can be made smaller by increasing either $n$ or the amount of variation in $x$.

2. Tests for $b_1$

To test $H_0: \beta_1 = \beta_{10}$ against $H_1: \beta_1 \neq \beta_{10}$, use $t = \dfrac{b_1 - \beta_{10}}{s_{b_1}}$. Remember that $\beta_{10}$ is most often zero. If the null hypothesis is false in that case, we say that $\beta_1$ is significant.

To continue the example in G3:

$$R^2 = \frac{S_{xy}^2}{SS_x\, SS_y} = \frac{(9.60)^2}{(14.40)(12.90)} = .4961$$

or

$$R^2 = \frac{b_0\sum Y + b_1\sum XY - n\bar{Y}^2}{SS_y} = \frac{0.8333(19) + 0.6667(40) - 10(1.90)^2}{12.90} = .4961$$

$SSR = b_1 S_{xy} = 0.6667(9.60) = 6.400$. We have already computed $s_e^2 = 0.8125$, which implies that

$$s_{b_1}^2 = s_e^2\left(\frac{1}{SS_x}\right) = \frac{0.8125}{14.40} = 0.0564 \qquad \text{and} \qquad s_{b_1} = \sqrt{0.0564} = 0.2374$$

The significance test is now $H_0: \beta_1 = 0$ against $H_1: \beta_1 \neq 0$, with $df = n - 2 = 10 - 2 = 8$. Assume that $\alpha = .05$. Then for a two-sided test $t^{n-2}_{\alpha/2} = t^{8}_{.025} = 2.306$, and we reject the null hypothesis if $t$ is below $-2.306$ or above $2.306$. Since

$$t = \frac{b_1 - 0}{s_{b_1}} = \frac{0.6667}{0.2374} = 2.809$$

is in the rejection region, we say that $\beta_1$ is significant. A further test, $t = (0.6667 - 1)/0.2374 = -1.404$, lies between $-2.306$ and $2.306$, so $\beta_1$ is not significantly different from 1. If we want a confidence interval,

$$\beta_1 = b_1 \pm t_{\alpha/2}\, s_{b_1} = 0.6667 \pm 2.306(0.2374) = 0.667 \pm 0.547$$

Note that this interval includes 1, but not zero.

Note that since $s_x^2 = \dfrac{SS_x}{n-1} = \dfrac{\sum X^2 - n\bar{X}^2}{n-1}$,

$$s_{b_1}^2 = \frac{s_e^2}{\sum X^2 - n\bar{X}^2} = \frac{s_e^2}{(n-1)\,s_x^2}$$

This indicates that both a large sample size, $n$, and a large variance of $x$ will tend to make $s_{b_1}^2$ smaller. That in turn decreases the size of a confidence interval for $\beta_1$ and increases the size (and significance) of the $t$-ratio. To put it more negatively, small amounts of variation in $x$ or small sample sizes will tend to produce values of $b_1$ that are not significant.
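The slope interval and tests of I1 and I2 can be reproduced with a minimal Python sketch. Some assumptions:

* The sketch starts from the G3 spare parts rather than the raw data.
* It hard-codes the table value $t^{8}_{.025} = 2.306$ used in the text. If SciPy is available, `scipy.stats.t.ppf(0.975, 8)` gives the same value to three decimals.
* The helper name `slope_test` is hypothetical.

The script does not round $s_{b_1}$ before dividing, so it prints $s_{b_1} \approx 0.2375$ and $t \approx 2.807$ instead of the text's 0.2374 and 2.809. The conclusions are unchanged.

```python
import math

# Spare parts and sample size from the G3 example.
N = 10
SS_X, SS_Y, S_XY = 14.40, 12.90, 9.60
B1 = S_XY / SS_X                      # slope, about 0.6667
S_E2 = (SS_Y - B1 * S_XY) / (N - 2)   # s_e^2, about 0.8125

# Two-sided critical value t_{.025} with n - 2 = 8 degrees of freedom,
# taken from the t table as in the text (not computed here).
T_CRIT = 2.306


def slope_test(b1, s_e2, ss_x, t_crit, beta1_null=0.0):
    """Return s_b1, the t-ratio for H0: beta1 = beta1_null, whether H0 is
    rejected, and the confidence interval b1 +/- t_crit * s_b1."""
    s_b1 = math.sqrt(s_e2 / ss_x)
    t_ratio = (b1 - beta1_null) / s_b1
    reject = abs(t_ratio) > t_crit
    half_width = t_crit * s_b1
    return s_b1, t_ratio, reject, (b1 - half_width, b1 + half_width)


s_b1, t0, reject0, ci = slope_test(B1, S_E2, SS_X, T_CRIT)
_, t1, reject1, _ = slope_test(B1, S_E2, SS_X, T_CRIT, beta1_null=1.0)

print(f"s_b1 = {s_b1:.4f}")
print(f"H0: beta1 = 0 -> t = {t0:.3f}, reject = {reject0}")
print(f"H0: beta1 = 1 -> t = {t1:.3f}, reject = {reject1}")
print(f"95% CI for beta1: ({ci[0]:.3f}, {ci[1]:.3f})")

# The same s_b1^2 written with the sample variance of x: s_e^2 / ((n - 1) s_x^2)
s_x2 = SS_X / (N - 1)
assert math.isclose(s_b1 ** 2, S_E2 / ((N - 1) * s_x2))
```

Changing `beta1_null` is all that separates the significance test from the "is $\beta_1$ different from 1?" test. The interval excludes zero and includes 1, as in the text.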
The common-sense interpretation of this result is that we need a lot of experience with what happens to $y$ when we vary $x$ before we can put any confidence in our estimate of the slope of the equation that relates them.

3. Confidence Intervals and Tests for $b_0$

We are now testing $H_0: \beta_0 = \beta_{00}$ against $H_1: \beta_0 \neq \beta_{00}$ with $t = \dfrac{b_0 - \beta_{00}}{s_{b_0}}$.

$$s_{b_0}^2 = s_e^2\left(\frac{1}{n} + \frac{\bar{X}^2}{SS_x}\right) = 0.8125\left(\frac{1}{10} + \frac{1.60^2}{14.40}\right) = 0.8125(0.2778) = 0.2256$$

So $s_{b_0} = \sqrt{0.2256} = 0.4749$. If we are testing $H_0: \beta_0 = 0$ against $H_1: \beta_0 \neq 0$,

$$t = \frac{b_0 - 0}{s_{b_0}} = \frac{0.8333}{0.4749} = 1.754$$

Since the rejection region is the same as in I2, we accept the null hypothesis and say that $\beta_0$ is not significant. A confidence interval would be

$$\beta_0 = b_0 \pm t_{\alpha/2}\, s_{b_0} = 0.8333 \pm 2.306(0.4749) = 0.833 \pm 1.095$$

A common way to summarize our results is

$$\hat{Y} = \underset{(0.4749)}{0.8333} + \underset{(0.2374)}{0.6667}\,X$$

The equation is written with the standard deviations of the coefficients below it. For a Minitab printout example of a simple regression problem, see 252regrex1.

4. Prediction and Confidence Intervals for $y$

The Confidence Interval is $\mu_{Y_0} = \hat{Y}_0 \pm t\, s_{\hat{Y}}$, where

$$s_{\hat{Y}}^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x}\right)$$

and the Prediction Interval is $Y_0 = \hat{Y}_0 \pm t\, s_Y$, where

$$s_Y^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x} + 1\right)$$

In these two formulas, for some specific $X_0$, $\hat{Y}_0 = b_0 + b_1 X_0$. For example, assume that $X_0 = 5$, so that for the results in G3, $\hat{Y}_0 = 0.8333 + 0.6667(5) = 4.168$. Then

$$s_{\hat{Y}}^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x}\right) = 0.8125\left(\frac{1}{10} + \frac{(5 - 1.60)^2}{14.40}\right) = 0.733$$

and $s_{\hat{Y}} = \sqrt{0.733} = 0.856$, so that the confidence interval is

$$\mu_{Y_0} = \hat{Y}_0 \pm t\, s_{\hat{Y}} = 4.168 \pm 2.306(0.856) = 4.168 \pm 1.974$$

This represents a confidence interval for the average value that $Y$ will take when $X = 5$. For the same data,

$$s_Y^2 = s_e^2\left(\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{SS_x} + 1\right) = 0.8125\left(\frac{1}{10} + \frac{(5 - 1.60)^2}{14.40} + 1\right) = 1.545$$

and $s_Y = \sqrt{1.545} = 1.243$, so that the prediction interval is

$$Y_0 = \hat{Y}_0 \pm t\, s_Y = 4.168 \pm 2.306(1.243) = 4.168 \pm 2.866$$

This is a confidence interval for the value that $Y$ will take in a particular instance when $X = 5$.

Ignore the remainder of this document unless you have had calculus!

Appendix to G2: Explanation of OLS Formula

Assume that we have three points: $(X_1, Y_1)$, $(X_2, Y_2)$ and $(X_3, Y_3)$.
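We wish to fit a regression line to these points, with the equation $\hat{Y} = b_0 + b_1 X$ and the characteristic that the sum of squares, $SS = \sum (Y - \hat{Y})^2$, is a minimum. If we imagine that there is a 'true' regression line $Y = \beta_0 + \beta_1 X + \varepsilon$, we can consider $b_0$ and $b_1$ to be estimates of $\beta_0$ and $\beta_1$. Let us make the definition $e = Y - \hat{Y}$. Note that if we substitute our equation for $\hat{Y}$, we find that $e = Y - \hat{Y} = Y - b_0 - b_1 X$, or $Y = b_0 + b_1 X + e$. This has two consequences. First, the sum of squares can be written as

$$SS = \sum (Y - \hat{Y})^2 = \sum (Y - b_0 - b_1 X)^2 = \sum e^2$$

Second, if we fit the line so that $\sum e = 0$ (that is, the mean of $Y$ and the mean of $\hat{Y}$ are the same), we have $\bar{Y} = b_0 + b_1\bar{X}$. Now if we subtract the equation for $\bar{Y}$ from the equation for $Y$, we find

$$Y - \bar{Y} = b_1(X - \bar{X}) + e$$

Now let us measure $X$ and $Y$ as deviations from the mean, replacing $X$ with $\tilde{X} = X - \bar{X}$ and $Y$ with $\tilde{Y} = Y - \bar{Y}$. This means that $\tilde{Y} = b_1\tilde{X} + e$, or $e = \tilde{Y} - b_1\tilde{X}$. If we substitute this expression in our sum of squares, we find that

$$SS = \sum e^2 = \sum \left(\tilde{Y} - b_1\tilde{X}\right)^2$$

Now write this expression out in terms of our three points and differentiate it to minimize $SS$ with respect to $b_1$. To do this, recall that $b_1$ is our unknown and that the $X$s and $Y$s are numbers (constants!). So $\frac{d}{db_1}\left(b_1\tilde{X}\tilde{Y}\right) = \tilde{X}\tilde{Y}$ and $\frac{d}{db_1}\left(b_1^2\tilde{X}^2\right) = 2b_1\tilde{X}^2$.

$$\begin{aligned}
SS = \sum e^2 &= \left(\tilde{Y}_1 - b_1\tilde{X}_1\right)^2 + \left(\tilde{Y}_2 - b_1\tilde{X}_2\right)^2 + \left(\tilde{Y}_3 - b_1\tilde{X}_3\right)^2 \\
&= \left(\tilde{Y}_1^2 - 2b_1\tilde{X}_1\tilde{Y}_1 + b_1^2\tilde{X}_1^2\right) + \left(\tilde{Y}_2^2 - 2b_1\tilde{X}_2\tilde{Y}_2 + b_1^2\tilde{X}_2^2\right) + \left(\tilde{Y}_3^2 - 2b_1\tilde{X}_3\tilde{Y}_3 + b_1^2\tilde{X}_3^2\right)
\end{aligned}$$

If we now take the derivative of this expression with respect to $b_1$ and set it equal to zero to find a minimum, we find that

$$\begin{aligned}
\frac{d}{db_1}SS &= \left(0 - 2\tilde{X}_1\tilde{Y}_1 + 2b_1\tilde{X}_1^2\right) + \left(0 - 2\tilde{X}_2\tilde{Y}_2 + 2b_1\tilde{X}_2^2\right) + \left(0 - 2\tilde{X}_3\tilde{Y}_3 + 2b_1\tilde{X}_3^2\right) \\
&= -2\sum\tilde{X}\tilde{Y} + 2b_1\sum\tilde{X}^2 = -2\left(\sum\tilde{X}\tilde{Y} - b_1\sum\tilde{X}^2\right) = 0
\end{aligned}$$

But if $-2\left(\sum\tilde{X}\tilde{Y} - b_1\sum\tilde{X}^2\right) = 0$, then $\sum\tilde{X}\tilde{Y} - b_1\sum\tilde{X}^2 = 0$, or $\sum\tilde{X}\tilde{Y} = b_1\sum\tilde{X}^2$. Solving for $b_1$, we find $b_1 = \dfrac{\sum\tilde{X}\tilde{Y}}{\sum\tilde{X}^2}$. But if we remember that $\tilde{X} = X - \bar{X}$ and $\tilde{Y} = Y - \bar{Y}$, we can write this as

$$b_1 = \frac{\sum (X - \bar{X})(Y - \bar{Y})}{\sum (X - \bar{X})^2} \qquad \text{or} \qquad b_1 = \frac{\sum XY - n\bar{X}\bar{Y}}{\sum X^2 - n\bar{X}^2}$$

Of course, we still need $b_0$, but remember that $\bar{Y} = b_0 + b_1\bar{X}$, so that $b_0 = \bar{Y} - b_1\bar{X}$.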
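The appendix argument can be checked numerically. The Python sketch below uses the ten observations of G3 rather than three points, since the algebra carries over term by term for any $n$. It does three things:

* evaluates $SS(b_1) = \sum(\tilde{Y} - b_1\tilde{X})^2$;
* compares the appendix's derivative with a finite-difference estimate;
* confirms that the deviation form and the computational form of $b_1$ agree.

The function names `ss` and `dss_db1` are illustrative only.

```python
# Numerical check of the appendix derivation on the G3 data.
X = [0, 1, 2, 1, 0, 3, 4, 2, 2, 1]
Y = [0, 2, 1, 3, 1, 3, 4, 2, 1, 2]
n = len(X)
x_bar, y_bar = sum(X) / n, sum(Y) / n

# Deviations from the means: X~ = X - X-bar, Y~ = Y - Y-bar
x_dev = [x - x_bar for x in X]
y_dev = [y - y_bar for y in Y]
sum_xy_dev = sum(a * b for a, b in zip(x_dev, y_dev))
sum_x2_dev = sum(a * a for a in x_dev)


def ss(b1):
    """SS = sum (Y~ - b1 X~)^2 as a function of a trial slope b1."""
    return sum((b - b1 * a) ** 2 for a, b in zip(x_dev, y_dev))


def dss_db1(b1):
    """Derivative from the appendix: -2 (sum X~Y~ - b1 sum X~^2)."""
    return -2 * (sum_xy_dev - b1 * sum_x2_dev)


# Setting the derivative to zero gives b1 = sum X~Y~ / sum X~^2 ...
b1_dev = sum_xy_dev / sum_x2_dev
# ... which should equal the computational formula used in G2.
b1_short = (sum(a * b for a, b in zip(X, Y)) - n * x_bar * y_bar) / (
    sum(a * a for a in X) - n * x_bar ** 2
)
b0 = y_bar - b1_dev * x_bar

# Central finite difference of SS at b1 = 0; exact up to rounding
# because SS is a quadratic in b1.
h = 1e-3
fd_at_zero = (ss(h) - ss(-h)) / (2 * h)

print(f"b1 (deviations) = {b1_dev:.4f}, b1 (shortcut) = {b1_short:.4f}, b0 = {b0:.4f}")
print(f"dSS/db1 at the solution = {dss_db1(b1_dev):.2e}")
print(f"finite difference at 0 = {fd_at_zero:.4f}, formula at 0 = {dss_db1(0.0):.4f}")
print(f"SS at b1 = {ss(b1_dev):.4f}; at b1 -/+ 0.1: {ss(b1_dev - 0.1):.4f}, {ss(b1_dev + 0.1):.4f}")
```

The minimum value of $SS$ is the residual sum of squares of the worked example, $SSE = 12.90 - 6.40 = 6.5$. Moving $b_1$ away from the solution in either direction increases $SS$.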