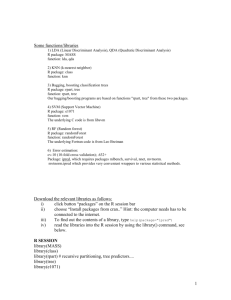

rpart - UCLA Human Genetics


Biostatistics 278, discussion 3:

R code for supervised learning: k-nearest neighbor predictors, linear discriminant analysis, rpart, error estimation

Steve Horvath

E-mail: shorvath@mednet.ucla.edu

http://www.ph.ucla.edu/biostat/people/horvath.htm

The notes are based in part on R code described in:

http://bioinformatics.med.yale.edu/proteomics/BioSupp1.html by the Yale NHLBI/Proteomics Center.

All analyses were done within the R statistical analysis software. R links: http://www.r-project.org/ for general information, and http://cran.r-project.org/ for downloading.

We will use the following functions/libraries

1) LDA (Linear Discriminant Analysis), QDA (Quadratic Discriminant Analysis)

R package: MASS

function: lda, qda

2) KNN (k-nearest neighbor)

R package: class

function: knn

3) Bagging, boosting classification trees

R package: rpart, tree

function: rpart, tree

Our bagging/boosting programs are based on functions "rpart, tree" from these two packages.

4) SVM (Support Vector Machine)

R package: e1071

function: svm

The underlying C code is from libsvm

5) RF (Random forest)

R package: randomForest

function: randomForest

The underlying Fortran code is from Leo Breiman

6) Error estimation:

cv-10 (10-fold cross-validation); .632+

Package: ipred, which requires packages mlbench, survival, nnet, mvtnorm.

ipred provides very convenient wrappers to various statistical methods.


Download the relevant libraries as follows:

i) Click the button “packages” on the R session bar.

ii) Choose “Install packages from cran..”. Hint: the computer has to be connected to the internet.

iii) To find out the contents of a library, type help(package="ipred")

iv) Read the libraries into the R session by using the library() command, see below.

R SESSION

library(MASS)

library(class)

library(rpart) # recursive partitioning, tree predictors....

library(tree)

library(e1071)

library(randomForest)

library(mlbench);library(survival); library(nnet); library(mvtnorm)

library(ipred)

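# The following function takes a table and computes the error rate.

# It assumes that the rows are predicted class outcomes while the columns are

# observed (test set) outcomes.

# Remove an earlier definition only if one exists (a bare rm() would warn otherwise).

if (exists("misclassification.rate")) rm(misclassification.rate)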

misclassification.rate=function(tab){

num1=sum(diag(tab))

denom1=sum(tab)

signif(1-num1/denom1,3)

}
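As a quick check of the function above, the following sketch applies it to a small hand-made table. The table entries are made up for illustration only, and the function is repeated so that the snippet runs on its own.

```r
# Same function as in the notes, repeated so this snippet is self-contained.
misclassification.rate <- function(tab) {
  num1 <- sum(diag(tab))   # correctly classified observations (the diagonal)
  denom1 <- sum(tab)       # all observations
  signif(1 - num1 / denom1, 3)
}

# Hypothetical 2x2 table: rows = predicted class, columns = observed class.
toy.tab <- matrix(c(40, 10,
                     5, 45), nrow = 2, byrow = TRUE,
                  dimnames = list(predicted = c("1", "2"),
                                  observed = c("1", "2")))
toy.err <- misclassification.rate(toy.tab)
print(toy.err)  # 15 of the 100 observations lie off the diagonal
```

Because the function only uses the diagonal, the rows and columns of the table must list the classes in the same order.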

# Chapter 1: Simulated data set with 50 observations.

# set a random seed for reproducing results later, any integer

set.seed(123)

#Binary outcome, 25 observations are class 1, 25 are class 2

no.obs=50

# class outcome

y=rep(c(1,2),c(no.obs/2,no.obs/2))

# the following covariate contains a signal

x1=y+0.8*rnorm(no.obs)

# the remaining covariates contain noise (random permutations of x1)

x2=sample(x1)

x3=sample(x1)

x4=sample(x1)

x5=sample(x1)

dat1=data.frame(y,x1,x2,x3,x4,x5)

dim(dat1)

names(dat1)


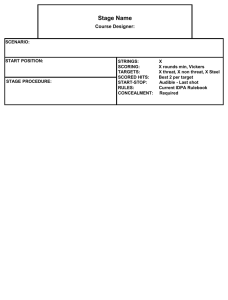

# RPART (tree analysis)

rp1=rpart(factor(y)~x1+x2+x3+x4+x5,data=dat1)

plot(rp1)

text(rp1)

[Figure: fitted tree with a single split, x1 < 1.421; the two leaves predict class 1 and class 2]

summary(rp1)

Call:

rpart(formula = factor(y) ~ x1 + x2 + x3 + x4 + x5, data = dat1)

n= 50

    CP nsplit rel error xerror      xstd
1 0.64      0      1.00   1.36 0.1319394
2 0.01      1      0.36   0.40 0.1131371

Node number 1: 50 observations,    complexity param=0.64
  predicted class=1  expected loss=0.5
    class counts:    25    25
   probabilities: 0.500 0.500
  left son=2 (24 obs) right son=3 (26 obs)
  Primary splits:
      x1 < 1.421257  to the left,  improve=10.2564100, (0 missing)
      x4 < 2.640618  to the left,  improve= 2.0764120, (0 missing)
      x3 < 0.525794  to the left,  improve= 0.7475083, (0 missing)
      x2 < 1.686658  to the left,  improve= 0.6493506, (0 missing)
      x5 < 1.089018  to the right, improve= 0.4010695, (0 missing)
  Surrogate splits:
      x4 < 1.964868  to the left,  agree=0.64, adj=0.250, (0 split)
      x2 < 0.7332517 to the left,  agree=0.60, adj=0.167, (0 split)
      x5 < 0.820739  to the left,  agree=0.58, adj=0.125, (0 split)
      x3 < 0.7332517 to the left,  agree=0.56, adj=0.083, (0 split)

Node number 2: 24 observations
  predicted class=1  expected loss=0.1666667
    class counts:    20     4
   probabilities: 0.833 0.167

Node number 3: 26 observations
  predicted class=2  expected loss=0.1923077
    class counts:     5    21
   probabilities: 0.192 0.808


# Let us now eliminate the signal variable!!!

# further we choose 4-fold cross-validation (xval=4) and a cost complexity parameter cp=0

rp1=rpart(factor(y)~x2+x3+x4+x5,control=rpart.control(xval=4, cp=0), data=dat1)

plot(rp1)

text(rp1)

[Figure: fitted tree with two splits, x4 < 2.641 at the root and x3 < 1.883 below it; the three leaves are labeled 2, 1, 2]

Note that the above tree overfits the data since x3 and x4 have nothing to do with y!

From the following output you can see that the cross-validated relative error rate is 1.28, i.e. it is worse than the naive predictor (stump tree) that assigns each observation to class 1.

summary(rp1)


Call:

rpart(formula = factor(y) ~ x2 + x3 + x4 + x5, data = dat1, control =

rpart.control(xval = 4,

cp = 0))

n= 50

    CP nsplit rel error xerror      xstd
1 0.20      0      1.00   1.12 0.1403994
2 0.12      1      0.80   1.24 0.1372880
3 0.00      2      0.68   1.28 0.1357645

ETC


# let us cross-tabulate learning set predictions versus true learning set outcomes:

tab1=table(predict(rp1,newdata=dat1,type="class"),dat1$y)

tab1

     1  2
  1 18 10
  2  7 15

misclassification.rate(tab1)

[1] 0.34

# Note the error rate is unrealistically low, given that the predictors have nothing to do

# with the outcome. This illustrates that the “resubstitution” error rate is biased.

#Let’s create a test set as follows

ytest=sample(1:2,100,replace=T)

x1test=ytest+0.8*rnorm(100)

dattest=data.frame(y=ytest, x1=sample(x1test), x2=sample(x1test),

x3=sample(x1test),x4=sample(x1test),x5=sample(x1test))

# Now let’s cross-tabulate the test set predictions with the test set outcomes:

tab1=table(predict(rp1,newdata=dattest,type="class"),dattest$y)

> tab1
     1  2
  1 34 26
  2 20 20

misclassification.rate(tab1)

[1] 0.46

# this test set error rate is realistic given that the predictor contained no information.
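When no separate test set is available, cross-validation gives a similar reality check. The sketch below is a hand-written K-fold loop for rpart on freshly simulated noise covariates; the seed, the number of folds K = 5 and the object names are assumptions made for this illustration, not part of the original analysis.

```r
library(rpart)

set.seed(1)  # arbitrary seed, only to make this sketch reproducible
n <- 50
y <- rep(c(1, 2), c(n / 2, n / 2))
x1 <- y + 0.8 * rnorm(n)
# Noise covariates: random permutations of x1, as in the notes.
noise <- data.frame(y = factor(y), x2 = sample(x1), x3 = sample(x1),
                    x4 = sample(x1), x5 = sample(x1))

K <- 5                                    # number of folds (assumed value)
fold <- sample(rep(1:K, length.out = n))  # random fold label for each row
pred <- factor(rep(NA, n), levels = levels(noise$y))
for (k in 1:K) {
  test <- fold == k
  # Fit on the other folds only, then predict the held-out fold.
  fit <- rpart(y ~ ., data = noise[!test, ],
               control = rpart.control(cp = 0, xval = 0))
  pred[test] <- predict(fit, newdata = noise[test, ], type = "class")
}
cv.err <- mean(pred != noise$y)
cv.err
```

Since every prediction is made by a tree that never saw the observation, the resulting error rate should be close to that of guessing rather than to the optimistic resubstitution value.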


#Linear Discriminant Analysis

dathelp=data.frame(x1,x2,x3,x4,x5)

lda1=lda(factor(y)~ . , data=dathelp ,CV=FALSE, method="moment")

> lda1
Call:
lda(factor(y) ~ ., data = dathelp, CV = FALSE, method = "moment")

Prior probabilities of groups:
  1   2
0.5 0.5

Group means:
         x1       x2       x3       x4       x5
1 0.9733358 1.474684 1.450246 1.405641 1.491884
2 2.0817099 1.580361 1.604800 1.649404 1.563162

Coefficients of linear discriminants:
          LD1
x1 1.31534493
x2 0.12657254
x3 0.16943895
x4 0.06726993
x5 0.07174623

# resubstitution error

tab1=table(predict(lda1)$class,y)

tab1

misclassification.rate(tab1)

> tab1
   y
     1  2
  1 19  6
  2  6 19
> misclassification.rate(tab1)
[1] 0.24

### leave one out cross-validation analysis

lda1=lda(factor(y)~.,data=dathelp,CV=TRUE, method="moment")

tab1=table(lda1$class,y)

> tab1
   y
     1  2
  1 18  7
  2  7 18
> misclassification.rate(tab1)
[1] 0.28


# Chapter 2: The Iris Data

data(iris)

### parameter values setup

cv.k = 10 ## 10-fold cross-validation

B = 100 ## using 100 Bootstrap samples in .632+ error estimation

C.svm = 10 ## Cost parameters for svm, needs to be tuned for different datasets

#Linear Discriminant Analysis

ip.lda <- function(object, newdata) predict(object, newdata = newdata)$class

# 10 fold cross-validation

errorest(Species ~ ., data=iris, model=lda,

estimator="cv",est.para=control.errorest(k=cv.k), predict=ip.lda)$err

[1] 0.02

# The above is the 10 fold cross validation error rate, which depends

# on how the observations are assigned to 10 random bins!
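To see how much the estimate depends on the random bins, one can repeat the 10-fold cross-validation several times. The sketch below does this by hand with lda from MASS rather than with errorest; the helper name cv.lda.once, the seed and the number of repetitions are assumptions chosen for illustration.

```r
library(MASS)
data(iris)

# One pass of K-fold cross-validation for LDA (hypothetical helper).
cv.lda.once <- function(data, K = 10) {
  n <- nrow(data)
  fold <- sample(rep(1:K, length.out = n))  # random assignment to K bins
  wrong <- 0
  for (k in 1:K) {
    test <- fold == k
    fit <- lda(Species ~ ., data = data[!test, ])
    pred <- predict(fit, newdata = data[test, ])$class
    wrong <- wrong + sum(pred != data$Species[test])
  }
  wrong / n  # fraction of held-out observations that were misclassified
}

set.seed(2)  # arbitrary seed
cv.runs <- replicate(20, cv.lda.once(iris))
range(cv.runs)  # spread of the estimate over 20 random binnings
```

Averaging over several repetitions is one common way to reduce this source of variability.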

# Bootstrap error estimator .632+

errorest(Species ~ ., data=iris, model=lda, estimator="632plus",

est.para=control.errorest(nboot=B), predict=ip.lda)$err

[1] 0.02315164

# The above is the bootstrap estimate of the error rate. Note that it is comparable to

# the cross-validation estimate of the error rate
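To make the idea behind the bootstrap estimator concrete, here is a minimal sketch of the plain .632 estimator, which is simpler than the .632+ variant that errorest computes. It combines the resubstitution error with the average error on the observations left out of each bootstrap sample, using weights 0.368 and 0.632. Averaging the out-of-sample error per bootstrap sample (rather than per observation), the seed and B = 50 are simplifying assumptions of this sketch.

```r
library(MASS)
data(iris)

set.seed(3)  # arbitrary seed
n <- nrow(iris)
B <- 50      # number of bootstrap samples (fewer than in the notes, for speed)

# Resubstitution error: fit and evaluate on the same data.
fit.all <- lda(Species ~ ., data = iris)
err.resub <- mean(predict(fit.all, iris)$class != iris$Species)

# Out-of-bootstrap error: evaluate each bootstrap fit on the left-out rows.
err.oob <- numeric(B)
for (b in 1:B) {
  idx <- sample(n, replace = TRUE)   # bootstrap sample of row indices
  out <- setdiff(1:n, idx)           # rows not drawn in this sample
  fit <- lda(Species ~ ., data = iris[idx, ])
  err.oob[b] <- mean(predict(fit, iris[out, ])$class != iris$Species[out])
}

# Plain .632 estimator: weighted average of the two error rates.
err.632 <- 0.368 * err.resub + 0.632 * mean(err.oob)
err.632
```

The .632+ estimator additionally adjusts the weights according to how strongly the predictor overfits, so its values need not match this sketch exactly.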

#Quadratic Discriminant Analysis

ip.qda <- function(object, newdata) predict(object, newdata = newdata)$class

# 10 fold cross-validation

errorest(Species ~ ., data=iris, model=qda, estimator="cv",

est.para=control.errorest(k=cv.k), predict=ip.qda)$err

[1] 0.02666667

# Bootstrap error estimator .632+

errorest(Species ~ ., data=iris, model=qda, estimator="632plus",

est.para=control.errorest(nboot=B), predict=ip.qda)$err

[1] 0.02373598

# Note that both error rate estimates are higher in QDA than in LDA


#k-nearest neighbor predictors#

#Currently, there is an error in the underlying wrapper code for "knn" in package ipred.

#The error is due to the name conflict of variable "k" used in the wrapper function

#"ipredknn" and the original function "knn".

# We need to change variable "k" to something else (here "kk") to avoid conflict.

bwpredict.knn <- function(object, newdata) predict.ipredknn(object, newdata,

type="class")

## 10 fold cross validation, 1 nearest neighbor

errorest(Species ~ ., data=iris, model=ipredknn, estimator="cv",

est.para=control.errorest(k=cv.k), predict=bwpredict.knn, kk=1)$err

[1] 0.03333333

## 10 fold cross validation, 3 nearest neighbors

errorest(Species ~ ., data=iris, model=ipredknn, estimator="cv",

est.para=control.errorest(k=cv.k), predict=bwpredict.knn, kk=3)$err

[1] 0.04

## .632+

errorest(Species ~ ., data=iris, model=ipredknn, estimator="632plus",

est.para=control.errorest(nboot=B), predict=bwpredict.knn, kk=1)$err

[1] 0.04141241

errorest(Species ~ ., data=iris, model=ipredknn, estimator="632plus",

est.para=control.errorest(nboot=B), predict=bwpredict.knn, kk=3)$err

[1] 0.03964991

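# Note that the k=3 nearest neighbor predictor has a lower .632+ error estimate than the

# k=1 NN predictor (0.0396 versus 0.0414) but a higher 10 fold cross-validation estimate

# (0.04 versus 0.033). The differences are small.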
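For comparison, the class package itself offers leave-one-out cross-validation for k-nearest neighbor predictors through knn.cv, which avoids the ipred wrapper altogether. The following sketch, with an arbitrary seed, computes the leave-one-out error for k = 1 and k = 3 on the iris data.

```r
library(class)
data(iris)

x <- iris[, 1:4]    # the four numeric measurements
cl <- iris$Species  # true class labels

set.seed(4)  # knn.cv breaks ties at random, so fix a seed
loo.err <- sapply(c(1, 3), function(k) {
  loo.pred <- knn.cv(train = x, cl = cl, k = k)  # leave-one-out predictions
  mean(loo.pred != cl)
})
names(loo.err) <- c("k=1", "k=3")
loo.err
```

Leave-one-out estimates need not agree with the 10-fold or .632+ values shown above, since each estimator resamples the data differently.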

# Random forest predictor

#out of bag error estimation

randomForest(Species ~ ., data=iris, mtry=2, ntree=B, keep.forest=FALSE)$err.rate[B]

[1] 0.04

## compare this to 10 fold cross-validation

errorest(Species ~ ., data=iris, model=randomForest, estimator = "cv",

est.para=control.errorest(k=cv.k), ntree=B, mtry=2)$err

[1] 0.05333333


# bagging rpart trees

# Use function "bagging" in package "ipred" which calls "rpart" for classification.

## The error returned is out-of-bag estimation.

bag1=bagging(Species ~ ., data=iris, nbagg=B, control=rpart.control(minsplit=2, cp=0,

xval=0), comb=NULL, coob=TRUE, ns=dim(iris)[1], keepX=TRUE)

> bag1

Bagging classification trees with 100 bootstrap replications

Call: bagging.data.frame(formula = Species ~ ., data = iris, nbagg = B,

control = rpart.control(minsplit = 2, cp = 0, xval = 0),

comb = NULL, coob = TRUE, ns = dim(iris)[1], keepX = TRUE)

Out-of-bag estimate of misclassification error:

0.06

# The following table lists the out-of-bag predictions versus the observed species

table(predict(bag1),iris$Species)

             setosa versicolor virginica
  setosa         50          0         0
  versicolor      0         46         5
  virginica       0          4        45

# Note that the OOB error rate is 0.06=9/150
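The arithmetic behind 9/150 can be checked directly from the table. The sketch below re-enters the counts shown above by hand (it does not refit the bagged trees) and counts the off-diagonal entries.

```r
# Counts copied from the out-of-bag table above: rows = predicted, columns = observed.
species <- c("setosa", "versicolor", "virginica")
oob.tab <- matrix(c(50,  0,  0,
                     0, 46,  5,
                     0,  4, 45), nrow = 3, byrow = TRUE,
                  dimnames = list(predicted = species, observed = species))

n.wrong <- sum(oob.tab) - sum(diag(oob.tab))  # off-diagonal entries: 5 + 4
oob.err <- n.wrong / sum(oob.tab)             # 9 / 150
c(n.wrong = n.wrong, oob.err = oob.err)
```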

#support vector machine (SVM)

## 10 fold cross-validation, note the misclassification cost

errorest(Species ~ ., data=iris, model=svm, estimator="cv", est.para=control.errorest(k =

cv.k), cost=C.svm)$error

[1] 0.03333333

## .632+

errorest(Species ~ ., data=iris, model=svm, estimator="632plus",

est.para=control.errorest(nboot = B), cost=C.svm)$error

[1] 0.03428103


Chapter 3: How to filter genes and use filtered genes in kNN predictors

Let’s first install bioconductor as follows.

1) Copy and paste the following functions into your R session.

getBioC <- function (libName = "default", relLevel = "release",
                     destdir, versForce=TRUE,
                     verbose = TRUE, bundle = TRUE,
                     force=TRUE, getAllDeps=TRUE, method="auto") {
    ## !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
    ## !!! Always change version number when updating this file
    getBioCVersion <- "1.2.52"
    writeLines(paste("Running getBioC version ",getBioCVersion,"....\n",
                     "If you encounter problems, first make sure that\n",
                     "you are running the latest version of getBioC()\n",
                     "which can be found at: www.bioconductor.org/getBioC.R",
                     "\n\n",
                     "Please direct any concerns or questions to",
                     " bioconductor@stat.math.ethz.ch.\n",sep=""))
    ## !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
    MINIMUMRel <- "1.8.1"
    MINIMUMDev <- "1.9.0"
    rInfo <- R.Version()
    rVers <- paste(rInfo$major,rInfo$minor,sep=".")
    ## !!! Again, using compareVersion() here as we
    ## !!! have not bootstrapped w/ reposTools for versionNumber
    ## !!! class
    MINIMUM <- switch(relLevel,
                      "devel"=MINIMUMDev,
                      MINIMUMRel)
    if (compareVersion(rVers,MINIMUM) < 0)
    {
        stop(paste("\nYou are currently running R version ",rVers,
                   ", however R version ",MINIMUM,
                   " is required.",sep=""))
    }
    ## Check the specified libName. If it is 'all' we want to warn
    ## that they're about ready to get a metric ton of packages.
    if (libName == "all") {
        ## Make sure they want to get all packages
        msg <- paste("\nYou are downloading all of the Bioconductor",
                     " packages and any dependencies.\n",
                     "Depending on your system this will be about ",
                     "60-65 packages and be quite large.\n",
                     "\nAre you sure that you want to do this?", sep="")
        out <- GBCuserQuery(msg,c("y","n"))
        if (out == "n") {
            cat("\nNot downloading. if you wish to see other download options,\n",
                "please go to the URL:",
                " http://www.bioconductor.org/faq.html#getBioC\n", sep="")
            return(invisible(NULL))
        }
    }

    curLibPaths <- .libPaths()
    on.exit(.libPaths(curLibPaths), add=TRUE)
    ## make sure to expand out the destdir param
    if (!missing(destdir))
        destdir <- path.expand(destdir)
    ## Check the specified relLevel
    validLevels <- c("release", "devel")
    if (!(relLevel %in% validLevels))
        stop(paste("Invalid relLevel parameter: ",relLevel,
                   ". Must be one of: ",
                   paste(validLevels, collapse=", "),
                   ".", sep=""))
    ## Stifle the "connected to www.... garbage output
    curNetOpt <- getOption("internet.info")
    on.exit(options(internet.info=curNetOpt), add=TRUE)
    options(internet.info=3)
    ## First check to make sure they have HTTP capability. If they do
    ## not, there is no point to this exercise.
    http <- as.logical(capabilities(what="http/ftp"))
    if (http == FALSE) {
        stop(paste("Your R is not currently configured to allow HTTP",
                   "\nconnections, which is required for getBioC to",
                   "work properly."))
    }
    ## find out where we think that bioC is
    bioCoption <- getOption("BIOC")
    if (is.null(bioCoption))
        bioCoption <- "http://www.bioconductor.org"
    ## Now check to see if we can connect to the BioC website
    biocURL <- url(paste(bioCoption,"/main.html",sep=""))
    options(show.error.messages=FALSE)
    test <- try(readLines(biocURL)[1])
    options(show.error.messages=TRUE)
    if (inherits(test,"try-error"))
        stop(paste("Your R can not connect to the Bioconductor",
                   "website, which is required for getBioc to",
                   "work properly. The most likely cause of this",
                   "is the internet configuration of R"))
    else
        close(biocURL)
    ## Get the destination directory
    if (missing(destdir)) {
        lP <- .libPaths()
        if (length(lP) == 1)
            destdir <- lP
        else {
            dDval <- menu(lP,
                          title="Please select an installation directory:")
            if (dDval == 0)
                stop("No installation directory selected")
            else
                destdir <- lP[dDval]
        }
    }
    else
        .libPaths(destdir)
    if (length(destdir) > 1)
        stop("Invalid destdir parameter, must be of length 1")
    PLATFORM <- .Platform$OS.type
    if (file.access(destdir,mode=0) < 0)
        stop(paste("Directory",destdir,"does not seem to exist.\n",
                   "Please check your 'destdir' parameter and try again."))
    if (file.access(destdir,mode=2) < 0)
        stop(paste("You do not have write access to",destdir,
                   "\nPlease check your permissions or provide",
                   "a different 'destdir' parameter"))
    messages <- paste("Your packages are up to date.",
                      "No downloading/installation will be performed.",
                      sep="\n")

    packs <- NULL
    ## Get the names of packages specified by the user
    if(bundle){
        for(i in libName){
            packs <- c(packs, getPackNames(i))
        }
    }else{
        packs <- libName
    }
    ## Download and install reposTools and Biobase first
    ## Get the package description file from Bioconductor
    getReposTools <- getReposTools(relLevel, PLATFORM, destdir, method=method,
                                   bioCoption=bioCoption)
    require(reposTools) || stop("Needs reposTools to continue")
    ## Get Repository entries from Bioconductor
    urlPath <- switch(PLATFORM,
                      "unix"= "/Source",
                      "/Win32")
    bioCRepURL <- getReposURL(relLevel,urlPath, bioCoption)
    bioCEntries <- getReposEntry(bioCRepURL)
    curOps <- getOption("repositories2")
    on.exit(options(repositories2=curOps), add=TRUE)
    repNames <- names(curOps)
    curOps <- gsub("http://www.bioconductor.org",bioCoption,curOps)
    names(curOps) <- repNames
    if (relLevel == "devel")
        optReps <- curOps[c("BIOCDevel","BIOCData",
                            "BIOCCourses","BIOCcdf",
                            "BIOCprobes", "CRAN",
                            "BIOCOmegahat")]
    else
        optReps <- curOps[c("BIOCRel1.3","BIOCData",
                            "BIOCCourses","BIOCcdf", "BIOCprobes",
                            "CRAN", "BIOCOmegahat")]
    options(repositories2=optReps)
    ## Sync lib list
    syncLocalLibList(destdir)
    reposToolsVersion <- package.description("reposTools",
                                             lib.loc=destdir,
                                             fields="Version")
    if (compareVersion(reposToolsVersion, "1.3.12") < 0) {
        ## This is the old style reposTools, need to do old style
        ## getBioC
        out <- install.packages2(packs, bioCEntries, lib=destdir,
                                 type = ifelse(PLATFORM == "unix", "Source",
                                 "Win32"), versForce=versForce, recurse=FALSE,
                                 getAllDeps=getAllDeps, method=method,
                                 force=force, searchOptions=TRUE)
    }

    else {
        ## 'packs' might be NULL, implying everything in the
        ## main repository (release/devel)
        if (is.null(packs))
            packs <- repPkgs(bioCEntries)
        ## Need to determine which 'packs' are already
        ## installed and which are not. Call install on the latter
        ## and update on the former.
        load.locLib(destdir)
        locPkgs <- unlist(lapply(locLibList, Package))
        havePkgs <- packs %in% locPkgs
        installPkgs <- packs[!havePkgs]
        updatePkgs <- packs[havePkgs]
        out <- new("pkgStatusList", statusList=list())
        if (length(updatePkgs) > 0) {
            updateList <- update.packages2(updatePkgs, bioCEntries,
                                           libs=destdir,
                                           type = ifelse(PLATFORM == "unix", "Source",
                                           "Win32"), versForce=versForce, recurse=FALSE,
                                           getAllDeps=getAllDeps, method=method,
                                           force=force, searchOptions=TRUE)
            statusList(out) <- updateList[[destdir]]
        }
        syncLocalLibList(destdir, quiet=TRUE)
        if (length(installPkgs) > 0)
            statusList(out) <- install.packages2(installPkgs, bioCEntries, lib=destdir,
                                           type = ifelse(PLATFORM == "unix", "Source",
                                           "Win32"), versForce=versForce,
                                           recurse=FALSE,
                                           getAllDeps=getAllDeps,
                                           method=method,
                                           force=force, searchOptions=TRUE)
    }
    if (length(updated(out)) == 0)
        print("All requested packages are up to date")
    else
        print(out)
    ## Windows doesn't currently have Rgraphviz or rhdf5
    if (PLATFORM != "windows") {
        if (libName %in% c("all","prog","graph")) {
            otherPkgsOut <- paste("Packages Rgraphviz and rhdf5 require",
                                  " special libraries to be installed.\n",
                                  "Please see the URL ",
                                  "http://www.bioconductor.org/faq.html#Other Notes",
                                  " for\n",
                                  "more details on installing these packages",
                                  " if they fail\nto install properly\n\n",
                                  sep=""
                                  )
            cat(otherPkgsOut)
        }
    }
    ## If they are using 'default', alert the user that they have not
    ## gotten all packages
    if (libName == "default") {
        out <- paste("You have downloaded a default set of packages.\n",
                     "If you wish to see other download options, please",
                     " go to the URL:\n",
                     "http://www.bioconductor.org/faq.html#getBioC\n",
                     sep="")
        cat(out)
    }
}

getReposTools <- function(relLevel, platform, destdir=NULL,
                          method="auto", bioCoption) {
    ## This function will check to see if reposTools needs to be
    ## updated, and if so will download/install it
    PACKAGES <- getPACKAGES(relLevel, bioCoption)
    ### check reposTools ala checkLibs
    if (checkReposTools(PACKAGES)) {
        sourceUrl <- getDLURL("reposTools", PACKAGES, platform)
        ## Get the package file name for reposTools
        fileName <- getFileName(sourceUrl, destdir)
        ## Try the connection first before downloading
        options(show.error.messages = FALSE)
        tryMe <- try(url(sourceUrl, "r"))
        options(show.error.messages = TRUE)
        if(inherits(tryMe, "try-error"))
            stop("Could not get the required package reposTools")
        else {
            ## Close the connection for checking
            close(tryMe)
            ## Download and install
            print("Installing reposTools ...")
            download.file(sourceUrl, fileName,
                          mode = getMode(platform), quiet = TRUE, method=method)
            installPack(platform, fileName, destdir)
            if (!("reposTools" %in% installed.packages(lib.loc=destdir)[,"Package"]))
                stop("Failed to install package reposTools")
            unlink(fileName)
        }
        return(invisible(NULL))
    }
}

packNameOutput <- function() {
    out <- paste("\ndefault:\ttargets affy, cdna and exprs.\n",
                 "exprs:\t\tpackages Biobase, annotate, genefilter, ",
                 "geneploter, edd, \n\t\tROC, multtest, pamr and limma.\n",
                 "affy:\t\tpackages affy, affydata, ",
                 "annaffy, affyPLM, makecdfenv,\n\t\t",
                 "matchprobes and vsn plus 'exprs'.\n",
                 "cdna:\t\tpackages marrayInput, marrayClasses, ",
                 "marrayNorm, marrayPlots,\n\t\tmarrayTools, vsn,",
                 " plus 'exprs'.\nprog:\t\tpackages graph, hexbin, ",
                 "externalVector.\n",
                 "graph:\t\tpackages graph, Rgraphviz, RBGL",
                 "\nwidgets:\tpackages tkWidgets, widgetTools,",
                 " DynDoc.\ndesign:\t\tpackages daMA and factDesign\n",
                 "externalData:\tpackages externalVector and rhdf5.\n",
                 "database:\tAnnBuilder, SAGElyzer, Rdbi and ",
                 "RdbiPgSQL.\n",
                 "analyses:\tpackages Biobase, ctc, daMA, edd, ",
                 "factDesign,\n\t\tgenefilter, geneplotter, globaltest, ",
                 "gpls, limma,\n\t\tMAGEML, multtest, pamr, ROC, ",
                 "siggenes and splicegear.\n",
                 "annotation:\tpackages annotate, AnnBuilder, ",
                 "humanLLMappings\n\t\tKEGG, GO, SNPtools, ",
                 "makecdfenv and ontoTools.",
                 "\nall:\t\tAll of the Bioconductor packages.\n",
                 sep="")
    out
}

## This function puts together a vector containing Bioconductor's
## packages based on a defined libName
getPackNames <- function(libName) {
    error <- paste("The library ", libName, " is not valid.\n",
                   "Usage:\n", packNameOutput())
    AFFY <- c("affy", "vsn", "affydata", "annaffy",
              "affyPLM", "matchprobes", "gcrma", "makecdfenv")
    CDNA <- c("marrayInput", "marrayClasses", "marrayNorm",
              "marrayPlots", "marrayTools", "vsn")
    EXPRS <- c("Biobase", "annotate", "genefilter",
               "geneplotter", "edd", "ROC",
               "multtest", "pamr", "limma", "MAGEML",
               "siggenes", "globaltest")
    PROG <- c("graph", "hexbin", "externalVector", "DynDoc", "Ruuid")
    GRAPH <- c("graph", "Rgraphviz", "RBGL")
    WIDGETS <- c("tkWidgets", "widgetTools", "DynDoc")
    DATABASE <- c("AnnBuilder", "SAGElyzer", "Rdbi",
                  "RdbiPgSQL")
    DESIGN <- c("daMA", "factDesign")
    ANNOTATION <- c("annotate", "AnnBuilder", "humanLLMappings",
                    "KEGG", "GO", "SNPtools", "makecdfenv",
                    "ontoTools")
    ANALYSES <- c("Biobase", "ctc", "daMA", "edd", "factDesign",
                  "genefilter", "geneplotter", "globaltest",
                  "gpls", "limma", "MAGEML", "multtest", "pamr",
                  "ROC", "siggenes", "splicegear")
    EXTERNALDATA <- c("externalVector", "rhdf5")
    packs <- switch(tolower(libName),
                    "all"=NULL,
                    "default" = c(EXPRS, AFFY, CDNA),
                    "exprs" = EXPRS,
                    "affy" = c(EXPRS, AFFY),
                    "cdna" = c(EXPRS, CDNA),
                    "prog" = PROG,
                    "graph" = GRAPH,
                    "widgets" = WIDGETS,
                    "design" = DESIGN,
                    "annotation" = ANNOTATION,
                    "database" = DATABASE,
                    "analyses" = ANALYSES,
                    "externaldata" = EXTERNALDATA,
                    stop(error))
    packs <- unique(packs)
    packs
}

## Returns the mode that is going to be used to call download.file
## depending on the platform
getMode <- function(platform){
    switch(platform,
           "unix" = return("w"),
           "windows" = return("wb"),
           stop(paste(platform,"is not currently supported")))
}

## Installs a given package
installPack <- function(platform, fileName, destdir=NULL){
    if(platform == "unix"){
        cmd <- paste(file.path(R.home(), "bin", "R"),
                     "CMD INSTALL")
        if (!is.null(destdir))
            cmd <- paste(cmd, "-l", destdir)
        cmd <- paste(cmd, fileName)
        system(cmd)
    }else{
        if(platform == "windows"){
            zip.unpack(fileName, .libPaths()[1])
        }else{
            stop(paste(platform,"is not currently supported"))
        }
    }
}

## Returns the source url for a given package
getDLURL <- function(pakName, rep, platform){
    temp <- rep[rep[, "Package"] == pakName]
    names(temp) <- colnames(rep)
    switch(platform,
           "unix" = return(temp[names(temp) == "SourceURL"]),
           "windows" = return(temp[names(temp) == "WIN32URL"]),
           stop(paste(platform,"is not currently supported")))
}

## Returns the description file (PACKAGE) that contains the name,
## version number, url, ... of Bioconductor packages.
getPACKAGES <- function (relLevel, bioCoption){
    URL <- getReposURL(relLevel,"/PACKAGES", bioCoption)
    con <- url(URL)
    options(show.error.messages = FALSE)
    tryMe <- try(read.dcf(con))
    options(show.error.messages = TRUE)
    if(inherits(tryMe, "try-error"))
        stop(paste("The url:",URL,
                   "does not seem to have a valid PACKAGES file."))
    close(con)
    return(tryMe)
}

## Returns the url for some files that are needed to perform the
## functions. name is added to the end of the URL
getReposURL <- function(relLevel, name="", bioCoption){
    URL <- switch(relLevel,
                  "devel"= paste(bioCoption,
                                 "repository/devel/package",
                                 name, sep ="/"),
                  "release"=paste(bioCoption,
                                  "repository/release1.3",
                                  "/package", name,sep="/"),
                  character())
    URL
}

## Returns the file name with the destination path (if any) attached
getFileName <- function(url, destdir){
    temp <- unlist(strsplit(url, "/"))
    if(is.null(destdir))
        return(temp[length(temp)])
    else
        return(file.path(destdir, temp[length(temp)]))
}

## getBioC has to check to see if "reposTools" has
## already been loaded and generates a message if any has.
checkReposTools <- function(PACKAGES){
    pkgVers <- PACKAGES[,"Version"]
    ## First get package version
    ## !!! Not yet using VersionNumber classes here
    ## !!! bootstrapping issue as this comes from reposTools
    ## !!! use compareVersion for now
    if ("reposTools" %in% installed.packages()[,"Package"]) {
        curVers <- package.description("reposTools",fields="Version")
        if (compareVersion(curVers,pkgVers) < 0) {
            if ("package:reposTools" %in% search()) {
                error <- paste("reposTools is out of date but",
                               " currently loaded in your R session.",
                               "\nIf you would like to continue,",
                               " please either detach this package",
                               " or restart\nyour R session before",
                               " running getBioC.", sep="")
                stop(error)
            }
        }
        else
            return(FALSE)
    }
    return(TRUE)
}

## From reposTools
GBCuserQuery <- function(msg, allowed=c("yes","y","no","n")) {
    ## Prompts the user with a string and for an answer
    ## repeats until it gets allowable input
    repeat {
        allowMsg <- paste("[",paste(allowed,collapse="/"),
                          "] ", sep="")
        outMsg <- paste(msg,allowMsg)
        cat(outMsg)
        ans <- readLines(n=1)
        if (ans %in% allowed)
            break
        else
            cat(paste(ans,"is not a valid response, try again.\n"))
    }
    ans
}


2) Now activate the installation by typing

getBioC()

3) In the following we will use these Bioconductor libraries:

library(Biobase)

library(genefilter)

# Let’s read in the data

# change the working directory to where the data are, then type

dat1=read.csv("MicroarrayExample.csv",header=TRUE,row.names=1)

# Now we will use the filter functions that are described in vignette 1,

# Vignettes are pdf files that can be accessed by typing

openVignette("genefilter")

TASK 1: Let’s select genes that are expressed above 500 in at least 10 samples.

# create a filter function

f1=kOverA(10,500)

# assemble the filter functions into a filtering function

ffun=filterfun(f1)

# apply the filtering function to the expression matrix

which=genefilter(dat1,ffun)

> table(which)
which
FALSE  TRUE
  880   120

#To arrive at the gene names of the corresponding genes type

which[which]
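To illustrate what the kOverA(10,500) filter checks, here is a base R sketch on a small made-up expression matrix. It assumes that the filter keeps a gene when at least k of its values are strictly larger than A; the matrix, the seed and the gene names are hypothetical and only serve the illustration.

```r
set.seed(5)  # arbitrary seed
# Hypothetical expression matrix: 6 genes (rows) by 12 samples (columns).
expr <- matrix(round(runif(6 * 12, 0, 1000)), nrow = 6,
               dimnames = list(paste0("gene", 1:6), paste0("S", 1:12)))
expr["gene1", ] <- 900   # always above the threshold
expr["gene2", ] <- 100   # never above the threshold

k <- 10    # minimum number of samples
A <- 500   # expression threshold
# For each gene, count the samples above A and compare the count with k.
keep <- rowSums(expr > A) >= k
keep
names(keep)[keep]   # names of the genes that pass the filter
```

The last line shows a compact way to get the gene names, analogous to inspecting which[which] above.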

TASK 2: Let us now filter genes by a multigroup comparison test (ANOVA)

#Recall that the following tissues are in the data set

names(dat1)

 [1] "E1"  "E2"  "E3"  "E4"  "E5"  "E6"  "E7"  "E8"  "E9"  "E10" "E11" "E12"
[13] "E13" "E14" "E15" "E16" "E17" "E18" "E19" "B1"  "B2"  "B3"  "B4"  "B5"
[25] "N1"  "N2"  "N3"  "N4"  "N5"  "N6"  "N7"  "Q1"  "Q2"  "Q3"

# Therefore we define the following 4 tissue types

tissue1=factor(rep(c("E","B","N","Q"),c(19,5,7,3)))


# Now we define the Anova filter, which selects the genes whose expression

# differs significantly across the 4 tissues (p<0.01)

Afilter=Anova(tissue1,0.01)

aff=filterfun(Afilter)

which2=genefilter(dat1,aff)

table(which2)
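The idea behind the Anova filter can be sketched in base R as a one-way ANOVA per gene, keeping the genes whose p-value falls below the cutoff. The data below are simulated for illustration (only gene1 is given a tissue effect), and the exact internals of the genefilter function may differ from this sketch.

```r
set.seed(6)  # arbitrary seed
tissue <- factor(rep(c("E", "B", "N", "Q"), c(19, 5, 7, 3)))
n.genes <- 5
# Hypothetical expression matrix: pure noise for every gene ...
expr <- matrix(rnorm(n.genes * length(tissue)), nrow = n.genes,
               dimnames = list(paste0("gene", 1:n.genes), NULL))
# ... except gene1, which gets a strong tissue effect.
expr["gene1", ] <- expr["gene1", ] + 5 * as.numeric(tissue)

# One-way ANOVA p-value for each gene (row).
anova.p <- apply(expr, 1, function(g) anova(lm(g ~ tissue))[["Pr(>F)"]][1])
keep <- anova.p < 0.01
keep
```

With many genes, a cutoff of 0.01 still lets through roughly 1% of pure-noise genes, which is worth keeping in mind when reading the counts from table(which2).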

# TASK 3: Let us now filter out genes that are significantly different across

# the tissues AND are expressed above 100 in at least 10 samples

Afilter=Anova(tissue1,0.01)

f1=kOverA(10,100)

aff=filterfun(Afilter,f1)

which2=genefilter(dat1,aff)

table(which2)

which2
FALSE  TRUE
  483   517

library(class)

# Here we use a new definition of a cross validation function for k-nearest neighbor

# compare it to knn.cv in the class library

rm(knnCV)

knnCV = function(EXPR, selectfun, cov, Agg, pselect = 0.01,Scale = FALSE) {

nc <- ncol(EXPR)

outvals <- rep(NA, nc)

for (i in 1:nc) {

v1 <- EXPR[, i]

expr <- EXPR[, -i]

glist <- selectfun(expr, cov[-i], p = pselect)

expr <- expr[glist, ]

if (Scale) {

expr <- scale(expr)

v1 <- as.vector(scale(v1[glist]))

}

else v1 <- v1[glist]

out <- paste("iter ", i, " num genes= ", sum(glist),sep ="")

print(out)

Aggregate(row.names(expr), Agg)

if (length(v1) == 1)

# the argument k = 5 below selects the 5 nearest neighbors

outvals[i] <- knn(expr, v1, cov[-i], k = 5)

else outvals[i] <- knn(t(expr), v1, cov[-i], k = 5)

}

return(outvals)

}


rm(gfun)

gfun <- function(expr, cov, p = 0.05,k1=5,level1=100) {

f2 <- Anova(cov, p = p)

f3= kOverA(k1,level1)

ffun <- filterfun(f2,f3)

which <- genefilter(expr, ffun)

}

Agg <- new("aggregator")

# Now we do leave one out cross-validation where

# genes are selected on each training set!

testcase <- knnCV(dat1[1:200,], gfun, tissue1, Agg, pselect = 0.05)

testcase

[1] 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 1 3 1 1 4 3 3 3 3 3 3 3 3 1 4

> tab1=table(testcase,tissue1)
> tab1
        tissue1
testcase  B  E  N  Q
       1  3  0  0  1
       2  0 19  0  0
       3  1  0  7  1
       4  1  0  0  1

misclassification.rate(tab1)

[1] 0.118

genes.used=multiget(ls(env=aggenv(Agg)),env=aggenv(Agg))

genes.counts=as.numeric(genes.used)

names(genes.counts)=names(genes.used)

sort(genes.counts)

.....

(tail of the sorted output; the genes listed here each have count 51)

AFFX.HSAC07/X00351.5.at, AFFX.HUMGAPDH/M33197.5.at, AFFX.HUMGAPDH/M33197.M.at, AFFX.HSAC07/X00351.M.at

The variable genes.counts contains for each gene the number of times it was

selected in the cross validation.
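The bookkeeping behind such selection counts can be sketched without the aggregator class. The example below runs a leave-one-out loop on simulated two-group data, reselects genes on each training set, and tallies how often each gene is chosen. It swaps in a two-sample t-test for the Anova filter, and the data, seed and cutoff are assumptions made for this illustration.

```r
set.seed(7)  # arbitrary seed
grp <- factor(rep(c("a", "b"), each = 10))
# Hypothetical expression matrix: 8 genes (rows) by 20 samples (columns).
expr <- matrix(rnorm(8 * 20), nrow = 8,
               dimnames = list(paste0("gene", 1:8), NULL))
expr["gene1", ] <- expr["gene1", ] + 4 * (grp == "b")  # one informative gene

counts <- setNames(integer(nrow(expr)), rownames(expr))
for (i in seq_len(ncol(expr))) {
  train <- expr[, -i, drop = FALSE]  # leave sample i out
  labels <- grp[-i]
  # Gene selection uses the training samples only.
  p <- apply(train, 1, function(g) t.test(g ~ labels)$p.value)
  selected <- p < 0.05
  counts[selected] <- counts[selected] + 1L
}
sort(counts)  # number of leave-one-out rounds in which each gene was selected
```

Genes that carry a real signal tend to be selected in every round, whereas noise genes are picked only occasionally.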


Homework problems 2

Microarray Data and Supervised Learning

Biostats 278, Steve Horvath

To understand this homework, read the corresponding discussion notes carefully.

Use the data set MicroarrayExample.csv for the following analyses.

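0) Fit an rpart tree using genes as covariates and tissue type as outcome. The hint below uses the two-class outcome tissueE defined in 3) and the data set dat2 defined in 2).

Hint: rp1=rpart(factor(tissueE)~., data=data.frame(t(dat2)))

plot(rp1);text(rp1)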

1) Select the genes that have an expression value above 200 in at least 5 samples.

How many genes satisfy the condition?

Hint: Use the following functions in the library genefilter (adjust the arguments of kOverA to the condition above)

f1=kOverA(3,300)

ffun=filterfun(f1)

which1=genefilter(dat1,ffun)

table(which1)

2) Create a new data set that contains the genes found in 1). Note that this data set

contains a subset of genes that was found without looking at tissue type. Hint:

dat2=dat1[which1,]

3) Let’s assume that we want to form a predictor for comparing E tissues versus all other tissues (B,N,Q), i.e. this is a 2 class outcome. Hint: tissueE=as.numeric(tissue1=="E")

A) Use data set dat2 to estimate the 10 fold cross-validation and .632+ bootstrap error rates for predicting E with

i) LDA

ii) QDA

iii) rpart

iv) support vector machines

B) Compute the out of bag estimates when using random forest predictors.

Hint:

RF1=randomForest(factor(tissueE)~., data=data.frame(t(dat2)),ntree=1000)

RF1

C) Compute the out of bag estimate when using bagged rpart trees

Hint:

bag1=bagging(factor(tissueE) ~ ., data=data.frame(t(dat2)), nbagg=B,

control=rpart.control(minsplit=2, cp=0, xval=0), comb=NULL, coob=TRUE,

ns=dim(t(dat2))[1], keepX=TRUE)

tab1=table(predict(bag1),tissueE); misclassification.rate(tab1)

4) Again use tissueE as outcome in the following.

Use the function knnCV to record the leave one out cross-validation error of k-nearest

neighbor predictors that use k=1,3,5,11 neighbors. As done in the discussion notes, use

an Anova filter and the kOverA function to select genes in the training data. To speed up

the analysis you may want to restrict it to the first 200 genes.
