Probit models with dummy endogenous regressors

Jacob Nielsen Arendt^a and Anders Holm^b

^a Institute of Public Health – Health Economics, University of Southern Denmark, Odense.

^b Department of Sociology, University of Copenhagen.

Abstract

This study considers heckit-type approximations useful for a number of different trivariate

probit models. They are simple to use and have no convergence problems like full maximum

likelihood. Simulations show that a heckit and a least squares approximation perform as well as

the trivariate probit estimator in small samples when the degree of endogeneity is not too

severe. A simple double-heckit and a heteroskedasticity corrected heckit approximation seem

particularly robust and promising for testing exogeneity. The methods are used to estimate the

impact of physician advice on physical activity, where the heckit approximations work as well

as full maximum likelihood.

JEL codes: C25, C35, I12, I18

Keywords: Multivariate Probit, Two-step estimation, Endogeneity, Selection, Medical advice.

Corresponding author: Jacob Nielsen Arendt, Institute of Public Health – Health Economics,

University of Southern Denmark, J. B. Winsløwsvej 9B, 5000 Odense C, Denmark. Phone:

+45 6550 3843. Fax: +45 6550 3880. Email: jna@sam.sdu.dk

1. Introduction

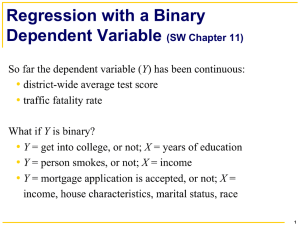

Estimation of binary regression models with dummy endogenous variables is a difficult issue

in econometrics. In contrast to models with continuous endogenous variables, no simple

consistent two-stage methods exist and it is only recently that more advanced semi-parametric

alternatives have appeared, see e.g. Vytlacil and Yildiz (2006). Therefore, problems of

endogeneity or sample selection are often ignored. When not ignored, maximum likelihood

estimation (MLE) remains a preferred method, the most popular among economists probably

being the multivariate probit and its variants (Zellner and Lee, 1965; Ashford and Sowden,

1970; Heckman, 1978; Poirier, 1980; Wynand and van Praag, 1981; Abowd and Farber, 1982;

O’Higgins, 1995; Evans and Schwab, 1995; Carrasco, 2001). In the case of several endogenous

dummy variables MLE may however become impractical or even impossible to obtain, see e.g.

Nawata (1994) in the case of sample selection models or Lechner et al. (2005) in the case of

panel data models, who discuss alternative simulation estimators. In this paper we focus on

commonly applied multivariate probit models and propose an alternative way to estimate the

effect of several endogenous dummy variables in a binary response model by simple two stage

methods. These are computationally less demanding than MLE and they always

converge.

In general the problem of dummy endogenous variables in binary response models is of great

importance in most social science disciplines, since these frequently rely on non-experimental

data. It is well-known from especially linear models that failing to take endogeneity into

account may result in substantially biased results. Yatchew and Griliches (1985) derive the

approximate bias in a probit model with continuous endogenous regressors and it is natural to

assume that such bias extends to non-linear models. There are therefore several reasons to

focus on models with binary variables: 1) Less attention has been paid to these models than to

models where either dependent or independent endogenous variables are continuous, 2) one

frequently encounters binary variables in social science applications, and 3) when both

dependent and independent variables are binary, correct modelling requires more complex

models than if either is continuous. Specifically, simple two-stage methods exist which account

for endogeneity in models where either the dependent or the independent endogenous variable

is continuous (see e.g. Alvarez and Glasgow, 2000; Bollen et al., 1995; Mroz, 1999, for a

comparison of methods and Bhattacharya et al. 2006 for a brief review), whereas such

procedures are not consistent with dummy endogenous variables.

Although consistent estimates can be obtained by multivariate modelling, this gives rise to

several difficulties both with respect to estimation and with respect to making such models

readily understandable (and applicable) to a wider audience. Consequently, we think that it is

of great value to consider simpler models that approximate the true effects. We consider two

types of approximations: a heckit type and a least-squares type. These are defined below.

Although the former may seem complicated to some we provide GAUSS and Stata code for the

implementation and given this, the estimators are simple to obtain.

Nicoletti and Peracchi (2001) consider how the heckit approximation performs in a very

similar case: a binary model with sample selection. The main contribution in this paper is to

extend the heckit approximation to the case with two dummy endogenous explanatory

variables or other models where full information MLE involves a variant of the trivariate

probit. Bhattacharya et al. (2006) compare other approximations to bi- and trivariate probit.

Our study differs from this in a number of ways: first because we consider other approximate

estimators, second because we look at smaller samples (where MLE may have more bias) and

third by varying simulation scenarios along different dimensions. Needless to say, the

higher the dimension of the model, the more fruitful a simple approximation may be, not only

because multivariate models become more cumbersome but also because the approximations

can be applied to a range of different models, e.g. combining sample selection and endogeneity

or with switching regressions. We provide simulation results that illustrate the bias of this

approximation as well as of a simpler least-squares-based approximation in different settings.

We find that what we refer to as the tri-heckit approximation and the least-squares

approximation work well and that overall they perform as well as full maximum likelihood

estimation in small samples when there is not too severe endogeneity. These two estimators are

however often severely biased in terms of estimation of correlation coefficients, which are the

parameters of interest when assessing whether endogeneity is a problem in the first place. We

find that taking the heteroskedasticity inherent in the heckit approximation into account yields

an overall more robust estimator which often outperforms full MLE with respect to estimation

of correlation coefficients.

To illustrate empirically how the approximation works with real data we apply the

approximations for estimation of the influence of physician advice about exercise on physical

activity for people having hypertension, accounting for endogeneity of receiving advice and

selectivity due to the restricted sample of individuals with hypertension. We show that taking

endogeneity and selection into account severely affects the results, implying that simple

estimates underestimate the impact of physician advice. Moreover, the heckit approximations

perform as well (or as badly) as maximum likelihood estimators. These results should be

interpreted with caution, as the standard errors are very high, leaving most coefficients

insignificant. This illustrates that it is a data demanding task to account for both endogeneity

and selectivity, something which however appears to affect both approximations and full

maximum likelihood estimation.

The paper is organized as follows: the next section introduces the binary model with one

endogenous regressor. Section three extends the model to two dummy endogenous variables

and shows how the approximations can be used in related models with sample selection or

switching regressions, where the full MLE also relies on trivariate normal probabilities. In

section four, simulation evidence for the estimators is presented. Section five presents the

empirical application on the impact of physician advice on physical activity and section six

provides some concluding remarks.

2. A model with one endogenous regression variable

In this section we present the case with two dummy variables, y1 and y2 , where y2 may have a

causal effect on y1 , but where the variables are spuriously related due to observed as well as

unobserved independent variables. This situation is illustrated in the following fully parametric

model:

$$
\begin{aligned}
y_1 &= 1(\gamma y_2 + x_1\beta_1 + \varepsilon_1 > 0),\\
y_2 &= 1(x_2\beta_2 + \varepsilon_2 > 0),\\
(\varepsilon_1, \varepsilon_2 \mid x_1, x_2) &\sim N(0, 0, 1, 1, \rho),
\end{aligned}
\tag{1}
$$

where 1(.) is the indicator function taking the value one if the statement in the brackets is true and zero otherwise. $\gamma$, $\beta_1$, $\beta_2$ are regression coefficients, and $N(.,.,.,.,\rho)$ indicates the standard bivariate normal distribution with correlation coefficient $\rho$, where variances have been normalized to one without loss of generality. When $\rho$ is zero, the model for y1 reduces to the standard probit model.

Basically, the model states three reasons why we might observe y1 and y2 to be correlated: 1) a causal relation due to the influence of y2 on y1 through the parameter $\gamma$, 2) y2 and y1 may depend on correlated observed variables (the x’s) and 3) y2 and y1 may depend on correlated unobserved variables (the $\varepsilon$’s).

Consistent and asymptotically efficient parameter estimates are obtained by maximum

likelihood estimation of the bivariate probit model. This is based on a likelihood function

consisting of a product of individual contributions of the type:

$$
L_i(\gamma, \beta_1, \beta_2 \mid y_{1i}, y_{2i}, x_{1i}, x_{2i}) = P(y_{1i}, y_{2i} \mid x_{1i}, x_{2i}) = P(y_{1i} \mid y_{2i}, x_{1i})\, P(y_{2i} \mid x_{2i}).
\tag{2}
$$

The second part of the latter term of the likelihood is simply a probit for y2. The first part of the

individual likelihood contributions is given as (see e.g. Wooldridge, 2002, p. 478):

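$$
\begin{aligned}
P(y_{1i} = 1 \mid y_{2i} = 1, x_{1i}) &= P(\gamma + x_{1i}\beta_1 + \varepsilon_{1i} > 0 \mid \varepsilon_{2i} > -x_{2i}\beta_2)\\
&= \frac{1}{\Phi(x_{2i}\beta_2)} \int_{-x_{2i}\beta_2}^{\infty} \Phi\!\left(\frac{\gamma + x_{1i}\beta_1 + \rho\,\varepsilon_{2i}}{\sqrt{1-\rho^2}}\right) \phi(\varepsilon_{2i})\, d\varepsilon_{2i},
\end{aligned}
\tag{3}
$$

where $\phi$ and $\Phi$ denote the standard normal density and distribution function.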

Even though rather precise procedures for evaluation of (2) exist, they are often time-consuming in an iterative optimization context. Furthermore, when $\rho$ approaches one it can be seen from (3) that the integral numerically blows up and estimation becomes imprecise.

2.1 A heckit approximation

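If y1 were continuous we could rely on the ingenious work by Heckman (1974; 1976) to obtain consistent parameter estimates:

$$
\begin{aligned}
E(y_{1i}^* \mid y_{2i}^* > 0) &= \gamma y_{2i} + x_{1i}\beta_1 + \rho\, E(\varepsilon_{2i} \mid \varepsilon_{2i} > -x_{2i}\beta_2)\\
&= \gamma y_{2i} + x_{1i}\beta_1 + \rho\, \frac{\phi(x_{2i}\beta_2)}{\Phi(x_{2i}\beta_2)},\\
y_{1i}^* &= \gamma y_{2i} + x_{1i}\beta_1 + \varepsilon_{1i}, \qquad y_{2i}^* = x_{2i}\beta_2 + \varepsilon_{2i},
\end{aligned}
\tag{4}
$$

where the correction factor, the ratio $\phi/\Phi$, is the inverse Mills ratio. Note that this applies to continuous variables, not to a binary variable, hence applying a similar correction procedure to a binary model gives only an approximation:

$$
P(\gamma y_{2i} + x_{1i}\beta_1 + \varepsilon_{1i} > 0 \mid \varepsilon_{2i} > -x_{2i}\beta_2) \approx \Phi\!\left(\gamma y_{2i} + x_{1i}\beta_1 + \rho\, \frac{\phi(x_{2i}\beta_2)}{\Phi(x_{2i}\beta_2)}\right).
\tag{5}
$$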

It is thus not a consistent method. Nevertheless, replacing the probabilities given in (3) in the likelihood function by the approximated probabilities, estimates of the parameters of interest can be obtained by the following two-stage procedure: first estimate $\beta_2$ in a probit model for y2. Then calculate the correction factor and estimate the equation for y1 in (1) by a probit model with the correction factor as an additional explanatory variable. This procedure circumvents both drawbacks of the full MLE mentioned above: it is fast and the likelihood does not blow up when $\rho$ approaches one.
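The two-stage procedure can be sketched in a few lines of code. The Python sketch below is purely illustrative and is not the GAUSS or Stata code mentioned in the introduction: the helper names (`solve`, `fit_probit`, `simulate`, `heckit_two_step`), the simulated design and the use of Fisher scoring are all assumptions made for this example. For observations with y2 = 0 it uses the standard truncated-normal counterpart of the correction, -φ/(1-Φ), which the text above does not spell out.

```python
import math
import random
from statistics import NormalDist

STD = NormalDist()  # standard normal pdf/cdf

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_probit(X, y, iters=50, tol=1e-8):
    """Probit coefficients by Fisher scoring; X is a list of rows, y holds 0/1."""
    k = len(X[0])
    b = [0.0] * k
    for _ in range(iters):
        grad = [0.0] * k
        info = [[0.0] * k for _ in range(k)]
        for xi, yi in zip(X, y):
            z = sum(bj * xj for bj, xj in zip(b, xi))
            p = min(max(STD.cdf(z), 1e-10), 1 - 1e-10)
            d = STD.pdf(z)
            g = d * (yi - p) / (p * (1 - p))   # score weight
            w = d * d / (p * (1 - p))          # expected-information weight
            for r in range(k):
                grad[r] += g * xi[r]
                for c in range(k):
                    info[r][c] += w * xi[r] * xi[c]
        step = solve(info, grad)
        b = [bj + sj for bj, sj in zip(b, step)]
        if max(abs(s) for s in step) < tol:
            break
    return b

def simulate(n, gamma, rho, seed=1):
    """Draw (y1, y2, x1, x2) from model (1) with illustrative coefficients of 0.5."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x1, x2 = rng.gauss(0, 1), rng.gauss(0, 1)
        e2 = rng.gauss(0, 1)
        e1 = rho * e2 + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1)
        y2 = 1 if 0.5 * x2 + e2 > 0 else 0
        y1 = 1 if gamma * y2 + 0.5 * x1 + e1 > 0 else 0
        rows.append((y1, y2, x1, x2))
    return rows

def heckit_two_step(rows):
    """Stage 1: probit for y2. Stage 2: probit for y1 with the correction term."""
    y1 = [r[0] for r in rows]
    y2 = [r[1] for r in rows]
    b2 = fit_probit([[1.0, r[3]] for r in rows], y2)
    X1 = []
    for r in rows:
        idx = b2[0] + b2[1] * r[3]
        if r[1] == 1:
            lam = STD.pdf(idx) / STD.cdf(idx)     # inverse Mills ratio, y2 = 1
        else:
            lam = -STD.pdf(idx) / STD.cdf(-idx)   # assumed counterpart, y2 = 0
        X1.append([1.0, r[1], r[2], lam])
    b1 = fit_probit(X1, y1)                       # (const, gamma, beta1, rho)
    return b2, b1

rows = simulate(n=2000, gamma=0.5, rho=0.5)
b2_hat, b1_hat = heckit_two_step(rows)
print("first stage (const, slope):", b2_hat)
print("second stage (const, gamma, beta1, rho):", b1_hat)
```

The coefficient on the correction term in the second stage plays the role of the correlation estimate, so its t-test is the natural exogeneity check in this sketch.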

As shown by Nicoletti and Peracchi (2001), the approximation and the probability on which it is based (that is, (4)) have equal first-order Taylor expansions and the approximation is exact for $\rho = 0$ (where both are equal to the standard univariate normal cdf without correction term). Moreover, it is rather precise for values of $\rho$ even as high as 0.8. The heckit correction works particularly well if the heteroskedasticity inherent in the correction is taken into account.
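To get a feel for the quality of the approximation, the exact conditional probability in (3) can be compared numerically with the heckit probability in (5). The following Python sketch is an illustration only: the function names, the Simpson-rule integration, the truncation of the integral at 8 and the chosen index values are assumptions made here, not part of the original study.

```python
import math
from statistics import NormalDist

N01 = NormalDist()  # standard normal pdf/cdf

def exact_prob(a, c, rho, upper=8.0, steps=2000):
    """P(eps1 > -a | eps2 > -c) as in equation (3), by Simpson's rule.

    a = gamma + x1*beta1 and c = x2*beta2; requires |rho| < 1 and an even
    number of steps. The infinite upper limit is truncated at `upper`.
    """
    lo = -c
    h = (upper - lo) / steps
    s = math.sqrt(1.0 - rho * rho)

    def f(e):
        return N01.cdf((a + rho * e) / s) * N01.pdf(e)

    total = f(lo) + f(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(lo + i * h)
    return total * h / 3.0 / N01.cdf(c)

def heckit_prob(a, c, rho):
    """Heckit approximation of the same probability, as in equation (5)."""
    return N01.cdf(a + rho * N01.pdf(c) / N01.cdf(c))

# Compare the two probabilities over a grid of correlations.
for rho in (0.0, 0.3, 0.6, 0.8):
    print(rho, exact_prob(0.2, 0.0, rho), heckit_prob(0.2, 0.0, rho))
```

At a correlation of zero the two functions coincide with the plain probit probability, which is the exactness property stated above.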

2.2 A least squares approximation

An even simpler approximation than the heckit correction would be to use simple least squares residuals as corrections rather than inverse Mills ratios. This would be valid if y2 were continuous, and may again be a good approximation when y2 is discrete:

$$
y_{2i} = x_{2i}\beta_2 + \varepsilon_{2i}, \qquad P(y_{1i} = 1 \mid y_{2i}) \approx \Phi(\gamma y_{2i} + x_{1i}\beta_1 + \rho\, \hat{\varepsilon}_{2i}),
\tag{6}
$$

where $\hat{\varepsilon}_{2i}$ denotes the least squares residual. Again, however, this is only an approximation when y2 is binary and it therefore does not provide consistent estimates (given that the joint normal model is true). We note that under the null that $\rho$ equals zero, both the least squares approximation and the heckit approximations are identical to the probit model; thus tests that $\rho$ equals zero are valid tests of exogeneity of y2.
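The first stage of the least squares approximation is just a linear probability regression whose residual is carried into the probit for y1. A minimal Python sketch of that first stage follows; the function name `ols_residuals` and the toy data are hypothetical, and only a single regressor plus a constant is handled.

```python
from statistics import mean

def ols_residuals(x, y):
    """Residuals and coefficients from a least squares fit of y on a constant and x."""
    xb, yb = mean(x), mean(y)
    sxx = sum((xi - xb) ** 2 for xi in x)
    sxy = sum((xi - xb) * (yi - yb) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = yb - slope * xb
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    return resid, (intercept, slope)

# Toy data (made up for illustration): x2 is the regressor, y2 the binary outcome.
x2 = [-1.2, -0.5, 0.1, 0.4, 0.9, 1.5]
y2 = [0, 0, 1, 0, 1, 1]
x1 = [0.3, -0.7, 1.1, 0.2, -0.4, 0.8]

resid, coefs = ols_residuals(x2, y2)

# Rows of the second-stage probit design: constant, y2, x1 and the residual.
design = [[1.0, y, x, e] for y, x, e in zip(y2, x1, resid)]
print(coefs)
print(design[0])
```

The design rows would then be passed to any probit routine; the coefficient on the residual column is the quantity tested against zero.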

2.3 Other approximations

Bhattacharya et al. (2006) and Angrist (2001) provide related comparisons. Angrist (2001)

argues that the common use of parametric models overly complicates inference when the

statistic of interest is causal effects and suggests that 2SLS performs as well as parametric

estimators in a labour-supply model. But as noted by, among others, Moffitt (2001), the “good

properties” of 2SLS depend crucially on where in the sample we look for causal effects. Due

to the constant effect assumption of 2SLS, 2SLS and probit are bound to give different answers

if we do not consider average effects or if we consider individuals with “extreme

characteristics”, i.e. where $x_{1i}\hat{\beta}$ is not near zero.

Bhattacharya et al. (2006) conduct an analysis which shares some features with the current study.

They compare the simple and bivariate probit to 2SLS and a two-step probit estimator using

Monte Carlo analysis. The two-stage probit estimator augments the second stage for y1 with

the predicted probability of y2 from an initial probit regression of y2. They also consider the case

with two endogenous dummy variables. Their analysis differs from ours in that they consider

other approximations, larger samples (5000 observations) and for given values of correlations

they change the treatment effects. We focus on smaller samples and change correlations for

given treatment effects. Bhattacharya et al. (2006) confirm that with one dummy endogenous

regressor 2SLS approximates average treatment effects well, whereas the two-stage probit may

have larger bias for large treatment effects. Both 2SLS and two-stage probit estimators become

more biased when the mean of the outcome is near 0 or 1. In the case with two dummy

endogenous regressors they find that 2SLS has a larger bias than the two-stage probit

estimator, especially for large treatment effects and surprisingly also for misspecified models.

For this reason we do not consider 2SLS any further.

3. A model with two dummy endogenous regression variables

In this section we explore situations where an approximation may be even more fruitful,

namely when the dimension of the endogeneity problem increases. We focus on the case of a

binary response model with two dummy endogenous variables. A simple extension of the

model in the previous section that includes one more dummy endogenous regressor would be:

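$$
\begin{aligned}
y_1 &= 1(\gamma_2 y_2 + \gamma_3 y_3 + x_1\beta_1 + \varepsilon_1 > 0),\\
y_2 &= 1(x_2\beta_2 + \varepsilon_2 > 0),\\
y_3 &= 1(x_3\beta_3 + \varepsilon_3 > 0),\\
(\varepsilon_1, \varepsilon_2, \varepsilon_3 \mid x_1, x_2, x_3) &\sim N(0, 0, 0, 1, 1, 1, \rho_{12}, \rho_{13}, \rho_{23}).
\end{aligned}
\tag{7}
$$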

Full maximum likelihood estimation requires estimation of a trivariate probit model, which is

consistent and asymptotically efficient. The likelihood function in this case is based on the

trivariate joint normal probabilities:

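$$
\begin{aligned}
&P(y_{1i} = 1, y_{2i} = 1, y_{3i} = 1 \mid x_{1i}, x_{2i}, x_{3i})\\
&\quad = P(\gamma_2 y_{2i} + \gamma_3 y_{3i} + x_{1i}\beta_1 + \varepsilon_{1i} > 0,\ \varepsilon_{2i} > -x_{2i}\beta_2,\ \varepsilon_{3i} > -x_{3i}\beta_3).
\end{aligned}
\tag{8}
$$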

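Writing $P_{hjk}$ for $P(y_{1i} = h, y_{2i} = j, y_{3i} = k \mid x_{1i}, x_{2i}, x_{3i})$ we can write the different likelihood contributions under normality as:

$$
\begin{aligned}
P_{000} &= \Phi_3(-c_{1i}, -c_{2i}, -c_{3i};\ \rho_{12}, \rho_{13}, \rho_{23})\\
P_{001} &= \Phi_3(-c_{1i}, -c_{2i}, c_{3i};\ \rho_{12}, -\rho_{13}, -\rho_{23})\\
P_{010} &= \Phi_3(-c_{1i}, c_{2i}, -c_{3i};\ -\rho_{12}, \rho_{13}, -\rho_{23})\\
P_{100} &= \Phi_3(c_{1i}, -c_{2i}, -c_{3i};\ -\rho_{12}, -\rho_{13}, \rho_{23})\\
P_{011} &= \Phi_3(-c_{1i}, c_{2i}, c_{3i};\ -\rho_{12}, -\rho_{13}, \rho_{23})\\
P_{101} &= \Phi_3(c_{1i}, -c_{2i}, c_{3i};\ -\rho_{12}, \rho_{13}, -\rho_{23})\\
P_{110} &= \Phi_3(c_{1i}, c_{2i}, -c_{3i};\ \rho_{12}, -\rho_{13}, -\rho_{23})\\
P_{111} &= \Phi_3(c_{1i}, c_{2i}, c_{3i};\ \rho_{12}, \rho_{13}, \rho_{23})\\
c_{1i} &= \gamma_2 y_{2i} + \gamma_3 y_{3i} + x_{1i}\beta_1, \quad c_{2i} = x_{2i}\beta_2, \quad c_{3i} = x_{3i}\beta_3,
\end{aligned}
\tag{9}
$$

where $\Phi_3$ denotes the trivariate standard normal distribution function.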

The trivariate probit estimates can be obtained using numerical integration or simulation

techniques. The most common simulation estimator is probably the GHK simulated maximum

likelihood estimator of Geweke (1991), Hajivassiliou (1990), and Keane (1994), which is

available e.g. in the statistical program packages STATA (triprobit or mvprobit, see Cappellari

and Jenkins, 2003) and LIMDEP. The GHK simulator of multivariate normal probabilities has

been shown to generally outperform other simulation methods (e.g. Hajivassiliou, McFadden

and Ruud, 1996).
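The idea behind the GHK simulator can be sketched compactly: the trivariate probability is written as a product of univariate conditional probabilities, which are averaged over draws from truncated normals. The Python code below is an illustrative sketch only and is not the mvprobit or LIMDEP implementation; the function names `cholesky3` and `ghk_trivariate`, the seed handling and the default number of draws are assumptions made here.

```python
import math
import random
from statistics import NormalDist

N01 = NormalDist()  # standard normal cdf and inverse cdf

def cholesky3(r12, r13, r23):
    """Lower Cholesky factor of a 3x3 correlation matrix (must be positive definite)."""
    l22 = math.sqrt(1 - r12 ** 2)
    l32 = (r23 - r12 * r13) / l22
    l33 = math.sqrt(1 - r13 ** 2 - l32 ** 2)
    return ((1.0, 0.0, 0.0), (r12, l22, 0.0), (r13, l32, l33))

def ghk_trivariate(c, r12, r13, r23, draws=200, seed=0):
    """Simulate P(e1 < c[0], e2 < c[1], e3 < c[2]) for standard trivariate normal errors."""
    rng = random.Random(seed)
    L = cholesky3(r12, r13, r23)
    total = 0.0
    for _ in range(draws):
        eta, weight = [], 1.0
        for j in range(3):
            shift = sum(L[j][k] * eta[k] for k in range(j))
            # Probability that component j satisfies its bound given earlier draws.
            p = N01.cdf((c[j] - shift) / L[j][j])
            weight *= p
            if j < 2:
                # Draw eta_j from a standard normal truncated above at the bound,
                # using the inverse-cdf method; clamp to stay inside (0, 1).
                u = 1.0 - rng.random()
                q = min(max(u * p, 1e-300), 1 - 1e-12)
                eta.append(N01.inv_cdf(q))
        total += weight
    return total / draws

# Orthant probability with all correlations equal to 0.5.
print(ghk_trivariate((0.0, 0.0, 0.0), 0.5, 0.5, 0.5, draws=2000))
```

Probabilities for other outcome combinations follow from the same routine after flipping the signs of the relevant bounds and correlations, by the symmetry of the normal distribution.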

3.1 A trivariate heckit approximation

Just as in the bivariate case, we may encounter several practical problems with the multivariate

probit model. An empirically tractable alternative may therefore be to use a multivariate heckit

type of approximation:

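$$
\begin{aligned}
&P(\gamma_2 y_{2i} + \gamma_3 y_{3i} + x_{1i}\beta_1 + \varepsilon_{1i} > 0 \mid \varepsilon_{2i} > -x_{2i}\beta_2,\ \varepsilon_{3i} > -x_{3i}\beta_3)\\
&\quad \approx \Phi\big(\gamma_2 y_{2i} + \gamma_3 y_{3i} + x_{1i}\beta_1 + E(\varepsilon_{1i} \mid \varepsilon_{2i} > -x_{2i}\beta_2,\ \varepsilon_{3i} > -x_{3i}\beta_3)\big).
\end{aligned}
\tag{10}
$$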

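To obtain this approximation we need the first moment in a trivariate truncated normal distribution. It simplifies greatly if we assume $\rho_{23} = 0$. Then we just get a double Heckman correction:

$$
E(\varepsilon_1 \mid \varepsilon_2 > -x_{2i}\beta_2,\ \varepsilon_3 > -x_{3i}\beta_3) = \rho_{12}\, \frac{\phi(x_{2i}\beta_2)}{\Phi(x_{2i}\beta_2)} + \rho_{13}\, \frac{\phi(x_{3i}\beta_3)}{\Phi(x_{3i}\beta_3)}.
\tag{11}
$$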

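We refer to this as the bivariate heckit approximation. In the general case, where $\rho_{23} \neq 0$, the correction terms become more complicated. They were applied by Fishe et al. (1981) in a model where y1 is continuous and are found e.g. in Maddala (1983), p. 282:

$$
\begin{aligned}
E(\varepsilon_1 \mid \varepsilon_2 > h,\ \varepsilon_3 > k) &= \rho_{12} M_{23} + \rho_{13} M_{32}; \qquad M_{ij} = (1 - \rho_{23}^2)^{-1} (P_i - \rho_{23} P_j),\\
P_i &= E(\varepsilon_i \mid \varepsilon_2 > h,\ \varepsilon_3 > k), \quad i = 2, 3.
\end{aligned}
\tag{12}
$$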
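One way to see where the form of (12) comes from, offered here as a sketch under the joint normality assumed in (7) rather than as the derivation in the cited sources, is to project the first error on the other two and then average over the truncation region:

```latex
\begin{aligned}
E(\varepsilon_1 \mid \varepsilon_2, \varepsilon_3)
  &= \frac{\rho_{12} - \rho_{13}\rho_{23}}{1 - \rho_{23}^2}\,\varepsilon_2
   + \frac{\rho_{13} - \rho_{12}\rho_{23}}{1 - \rho_{23}^2}\,\varepsilon_3, \\
E(\varepsilon_1 \mid \varepsilon_2 > h,\ \varepsilon_3 > k)
  &= \frac{\rho_{12} - \rho_{13}\rho_{23}}{1 - \rho_{23}^2}\,P_2
   + \frac{\rho_{13} - \rho_{12}\rho_{23}}{1 - \rho_{23}^2}\,P_3 \\
  &= \rho_{12}\,\frac{P_2 - \rho_{23} P_3}{1 - \rho_{23}^2}
   + \rho_{13}\,\frac{P_3 - \rho_{23} P_2}{1 - \rho_{23}^2}
   = \rho_{12} M_{23} + \rho_{13} M_{32}.
\end{aligned}
```

The first line is the usual linear regression of one standard normal variable on two others; the second line follows by the law of iterated expectations, and collecting the terms in the two correlations with the first error gives the two correction terms. Setting the correlation between the second and third errors to zero reduces each term to a single truncated mean, which is the double Heckman correction.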

We refer to this as the trivariate heckit correction. Fishe et al. (1981) evaluated these using

numerical approximations. They can however be simplified using results found in Maddala

(1983), p. 368:

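$$
\begin{aligned}
P(x > h, y > k)\, E(x \mid x > h, y > k) &= \phi(h)\,[1 - \Phi(k^*)] + \rho\, \phi(k)\,[1 - \Phi(h^*)],\\
h^* = \frac{h - \rho k}{\sqrt{1 - \rho^2}}, \quad k^* &= \frac{k - \rho h}{\sqrt{1 - \rho^2}}, \quad \operatorname{cov}(x, y) = \rho.
\end{aligned}
\tag{13}
$$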

The formulas for the correction terms in the four cases of combinations of Y2 and Y3 being 0 or 1 are presented in the appendix. In order to calculate the two correction terms, $M_{23}$ and $M_{32}$, we need initial estimates of $\beta_2$, $\beta_3$ and $\rho_{23}$. These can be obtained from a bivariate probit for Y2 and Y3. Alternatively we may use two linear probability models (LP) for Y2 and Y3.¹

¹ Using the LP estimates of $\beta_2$ and $\beta_3$ as initial estimates, we need to rescale them, as they are not from the normal model. The scaling of linear probability coefficients by 2.5 (subtracting 1.25 from the constant) has been shown to work well (Maddala, 1983, p. 23). The initial estimate of $\rho_{23}$ is obtained from the LP models as the correlation between the residuals.

The model therefore involves several steps:

1. Perform estimations for Y2 and Y3 and calculate the correlation between errors (using bivariate probit or linear probability models).

2. Calculate the correction terms.

3. Perform a probit estimation for Y1 adding the correction terms as additional covariates.
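Step 2 above can be sketched in code for the case where both endogenous dummies equal one, using the truncated-mean formula (13) and the definitions in (12). This Python sketch is an illustration under assumptions: the function names are hypothetical, the bivariate probability is obtained by Simpson-rule integration truncated at 8 rather than by a dedicated routine, and the other three outcome combinations (given in the appendix) are not covered.

```python
import math
from statistics import NormalDist

N01 = NormalDist()  # standard normal pdf/cdf

def upper_orthant(h, k, rho, upper=8.0, steps=2000):
    """P(x > h, y > k) for a standard bivariate normal pair, by Simpson's rule."""
    s = math.sqrt(1 - rho * rho)

    def f(x):
        return N01.pdf(x) * (1 - N01.cdf((k - rho * x) / s))

    w = (upper - h) / steps
    total = f(h) + f(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * f(h + i * w)
    return total * w / 3

def truncated_mean(h, k, rho):
    """E(x | x > h, y > k), using the formula in equation (13)."""
    s = math.sqrt(1 - rho * rho)
    h_star = (h - rho * k) / s
    k_star = (k - rho * h) / s
    num = (N01.pdf(h) * (1 - N01.cdf(k_star))
           + rho * N01.pdf(k) * (1 - N01.cdf(h_star)))
    return num / upper_orthant(h, k, rho)

def correction_terms(xb2, xb3, rho23):
    """M23 and M32 from equation (12) for an observation with y2 = y3 = 1.

    xb2 and xb3 are the first-stage indices x2*beta2 and x3*beta3.
    """
    h, k = -xb2, -xb3
    p2 = truncated_mean(h, k, rho23)
    p3 = truncated_mean(k, h, rho23)
    m23 = (p2 - rho23 * p3) / (1 - rho23 ** 2)
    m32 = (p3 - rho23 * p2) / (1 - rho23 ** 2)
    return m23, m32

print(correction_terms(0.4, -0.3, 0.5))
```

With a zero correlation between the two first-stage errors, the terms collapse to the two inverse Mills ratios of the double Heckman correction in (11), which gives a simple check of the code.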

An issue is the heteroskedasticity inherent in the heckit correction. Recall that Nicoletti and

Peracchi (2001) found it useful to account for heteroskedasticity in the case with one

endogenous regressor. This is consistent with findings by Horowitz (1993) who showed that

heteroskedasticity may severely bias standard probit models. The variance formula needed for

the heteroskedasticity correction with two dummy endogenous variables is found in the

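appendix. Given an expression for this variance, V, the heteroskedasticity-corrected model is:

$$
P(Y_1 = 1 \mid Y_2 = 1, Y_3 = 1) \approx \Phi\!\left(\frac{\gamma_2 + \gamma_3 + x_{1i}\beta_1 + \rho_{12} M_{23} + \rho_{13} M_{32}}{\sqrt{V}}\right).
\tag{14}
$$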

The variance depends upon $\beta_2$, $\beta_3$, $\rho_{23}$ and $\rho_{12}$, $\rho_{13}$. While the three former can be obtained by least squares as above, the latter two can be obtained from initial trivariate heckit estimates without the heteroskedasticity correction.

As a first-hand impression of how the approximation performs, we evaluate how well the trivariate heckit probabilities approximate the trivariate normal probabilities using graphical illustrations and Taylor expansions around $(\rho_{12}, \rho_{13}) = (0, 0)$. Both the bivariate heckit correction and the trivariate normal probability have the same first-order Taylor approximations if $\rho_{23} = 0$:

$$
P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) \approx \Phi(x_1\beta_1) - \rho_{12}\, \frac{\phi(x_2\beta_2)}{\Phi(-x_2\beta_2)}\, \phi(x_1\beta_1) - \rho_{13}\, \frac{\phi(x_3\beta_3)}{\Phi(-x_3\beta_3)}\, \phi(x_1\beta_1).
\tag{15}
$$

The trivariate heckit (with $\rho_{23} \neq 0$) has a similar first-order Taylor expansion where one replaces the inverse Mills ratios by $M_{23}$ and $M_{32}$ found in (12) and (13). It is easily seen that the heteroskedasticity-corrected trivariate heckit has the same first-order expansion, since the truncated variance reduces to one when $(\rho_{12}, \rho_{13}) = (0, 0)$. We simulate and plot the heckit probabilities along with the true probabilities (using the cdfmvn GAUSS code due to Jon Breslaw), where $\rho_{12}$ is varied from -1 to 1, for different combinations of high, low, positive and negative values of the other correlations and x’s. Selected figures are shown in the appendix. It should be noted that correlation matrices are restricted to be positive definite, and whenever they are not, the true trivariate normal probability is set to 0, as seen in the figures. It is shown that especially the trivariate heckit approximates the true normal probability rather well even for high correlation coefficients, whereas the bivariate heckit is often badly behaved (when $\rho_{23} \neq 0$).

3.2 Variants of the multivariate probit model

It is not only discrete models with dummy endogenous regressors that give rise to multivariate

probit models. Variants of the multivariate probit arise in a number of empirically relevant

models with e.g. sample selection or switching regressions. In fact, any combination of models

with dummy endogenous regressors, sample selection or switching regressions yields new

higher order multivariate models. Since this highlights the potential usefulness of

approximations to the trivariate probit we summarize some of these models:

1. Two sample selection restrictions (censoring)

If we only observe y1 if y2=1 and y3=1, we have a bivariate sample selection problem. Therefore not all 8 combinations of outcomes are observed. The likelihood will be based on the observed probabilities, P011, P111 and P00, where the latter is the probability that either y2 or y3 is zero. This is also called a partial or censored probit and was examined in the bivariate case in different variants by, among others, Poirier (1980) and Wynand and van Praag (1981).

2. One trivariate endogenous regressor variable

If one regressor variable is endogenous with three outcomes, it can be modelled as two separate binary outcomes (e.g. z = yes, no, no response; then, for instance, y2 = 1(z=no) and y3 = 1(z=no response)). This also gives rise to partial observability since y2 and y3 can never be 1 at the same time. Therefore the likelihood is based on the following probabilities: P000, P001, P010, P100, P101 and P110.²

3. A switching regression with one dummy endogenous regressor.

With a switching regression, the relationship between y1 and regressors depends upon the

outcome of another binary variable which in general gives rise to a bivariate probit model. This

is the discrete version of the Roy model, which has become very popular in the treatment

evaluation literature. An application is given in Carrasco (2001), studying the impact of having

given birth to a child recently (y2) on female labour force participation (y1). If in addition one

of the regressors is a dummy endogenous variable we need a trivariate probit model. With one

endogenous regressor the model could be defined as:

$$
y_{1i} = 1(\gamma_j y_{2i} + x_{ij}\beta_j + \varepsilon_j > 0) \quad \text{if } y_{3i} = j, \quad j = 0, 1.
\tag{16}
$$

If instead of a dummy endogenous variable one encounters a sample selection model, it gives rise to the next model.

² If the endogenous variable is an ordered qualitative variable, we could make use of the ordering and hence increase efficiency by specifying $y_2 = \sum_{j=1}^{J} 1(y_2^* > c_j)$, where the $c_j$ are unobserved thresholds. This gives rise to a bivariate model with the following heckit corrections:

$$
E(\varepsilon_1 \mid y_2 = j) = \rho_{12}\, E(\varepsilon_2 \mid c_{j-1} < x_2\beta_2 + \varepsilon_2 < c_j) = \rho_{12}\, \frac{\phi(\mu_{j-1}) - \phi(\mu_j)}{\Phi(\mu_j) - \Phi(\mu_{j-1})}, \qquad \mu_j = c_j - x_2\beta_2.
$$

In relation to these types of models, Orme and Weeks (1999) show how the bivariate probit model, and Nagakura (2004) how the sequential and the ordered probit models, are all constrained versions of the multinomial probit.

4. A switching regression with sample selection.

This model could be written as:

$$
\begin{aligned}
y_{1i} &= 1(x_i\beta_j + \varepsilon_j > 0) \quad \text{if } y_{2i} = j, \quad j = 0, 1,\\
y_{1i} &\ \text{observed if } y_{3i} = 1.
\end{aligned}
\tag{17}
$$

As in the case with double selection, there is partial observability, so the likelihood depends upon P001, P011, P101, P111 and P0, where the latter is the marginal probability of y3 = 0. The trivariate probabilities here and in case 3 are slightly different from those described in (10), since they are derived from an underlying four-dimensional normal distribution of $(\varepsilon_0, \varepsilon_1, \varepsilon_2, \varepsilon_3)$ with e.g.:

$$
\begin{aligned}
P(y_{1i} = 1, y_{2i} = 1, y_{3i} = 1) &= \Phi_3(c_1, c_2, c_3;\ \rho_{12}, \rho_{13}, \rho_{23}),\\
P(y_{1i} = 1, y_{2i} = 0, y_{3i} = 1) &= \Phi_3(c_0, -c_2, c_3;\ -\rho_{02}, \rho_{03}, -\rho_{23}).
\end{aligned}
\tag{18}
$$

Note that the correlation $\rho_{01}$ is not identified.

5. One dummy endogenous variable and sample selection.

We are interested in the effect of y2 on y1 but only observe the latter if y3=1. This model could

be stated as:

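$$
\begin{aligned}
y_{1i} &= 1(\gamma y_{2i} + x_i\beta_1 + \varepsilon_1 > 0),\\
y_{1i} &\ \text{observed if } y_{3i} = 1.
\end{aligned}
\tag{19}
$$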

The likelihood consists of the same probabilities as in case 4. This is the model that we apply

in the application below.

4. Simulations

In this section we report simulation results demonstrating the performance of the three heckit

approximations and the least squares approximation described above (which in tables are

labelled short-hand as bi-heckit, tri-heckit homo (without heteroskedasticity) and tri-heckit

hetero (with heteroskedasticity) and OLS). The model consists of the following three

endogenous variables:

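$$
\begin{aligned}
y_1^* &= \beta_{10} + \beta_{11}x_{11} + \beta_{12}x_{12} + \gamma_1 y_2 + \gamma_2 y_3 + e_1,\\
y_2^* &= \beta_{20} + \beta_{21}x_{21} + \beta_{22}x_{22} + e_2,\\
y_3^* &= \beta_{30} + \beta_{31}x_{31} + \beta_{32}x_{32} + e_3,
\end{aligned}
\tag{20}
$$

with $(\beta_{j0}, \beta_{j1}, \beta_{j2}) = (0, 0.5, 0.5)$, $j = 1, 2, 3$, effects $\gamma_1$ and $\gamma_2$ of magnitude 0.5 with opposite signs, and

$$
\begin{pmatrix} e_1\\ e_2\\ e_3 \end{pmatrix} \sim N\!\left( \begin{pmatrix} 0\\ 0\\ 0 \end{pmatrix}, \begin{pmatrix} 1 & \rho_{12} & \rho_{13}\\ \rho_{12} & 1 & \rho_{23}\\ \rho_{13} & \rho_{23} & 1 \end{pmatrix} \right), \qquad
y_j = \begin{cases} 1 & \text{if } y_j^* > 0\\ 0 & \text{otherwise} \end{cases}, \quad j = 1, 2, 3,
\tag{21}
$$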

where y2 and y3 are endogenous to y1 if e2 or e3 is correlated with e1. Furthermore, if e2 and e3 are correlated a full trivariate model is required. This is where the trivariate heckit approximation is expected to be most relevant. The regressors are drawn as independent standard normal variables with 500 independent draws in each simulation. Therefore y1 has mean 0.5. The trivariate heckit is based on initial scaled (see footnote 1) OLS estimates of parameters in the equations for y2 and y3, and so is the estimate of $\rho_{23}$. For comparison we have also simulated the naïve and the trivariate probit. For the latter we use the GHK simulated MLE.³ We apply the rule-of-thumb (see Cappellari and Jenkins, 2003) that the number of draws made by the GHK estimator for each simulation is the square root of the number of observations, here 23. Experimenting with the number of simulations shows that results do not change when altering the number of Monte Carlo simulations from 100 to 1000. To obtain a higher precision we use 200 in most simulations and 100 in some additional simulations.

³ This is done using Stata version 9.0 and the mvprobit procedure written by Cappellari and Jenkins. The other simulations are conducted in GAUSS. The trivariate heckit correction is available upon request from the authors in both GAUSS and Stata code.
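For readers who want to reproduce the flavour of this design, the data-generating process in (20) and (21) can be sketched as follows. This is an illustrative Python sketch, not the GAUSS code used for the reported simulations: the function name `simulate_design`, the seed handling, and in particular the assignment of the positive sign to the effect of y2 and the negative sign to the effect of y3 are assumptions made here (the text only states that the two effects have opposite signs).

```python
import math
import random

def simulate_design(n, r12, r13, r23, gamma=(0.5, -0.5), beta=(0.0, 0.5, 0.5), seed=0):
    """Draw n observations (y1, y2, y3, x) from the three-equation design.

    gamma holds the effects of y2 and y3 on y1 (the signs are an assumption);
    beta holds the constant and two slopes shared by all three equations.
    The correlation matrix must be positive definite, otherwise math.sqrt fails.
    """
    rng = random.Random(seed)
    # Cholesky factor of the error correlation matrix.
    l22 = math.sqrt(1 - r12 ** 2)
    l32 = (r23 - r12 * r13) / l22
    l33 = math.sqrt(1 - r13 ** 2 - l32 ** 2)

    def index(xj):
        return beta[0] + beta[1] * xj[0] + beta[2] * xj[1]

    data = []
    for _ in range(n):
        z = [rng.gauss(0, 1) for _ in range(3)]
        e1 = z[0]
        e2 = r12 * z[0] + l22 * z[1]
        e3 = r13 * z[0] + l32 * z[1] + l33 * z[2]
        # Two independent standard normal regressors per equation.
        x = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(3)]
        y2 = int(index(x[1]) + e2 > 0)
        y3 = int(index(x[2]) + e3 > 0)
        y1 = int(index(x[0]) + gamma[0] * y2 + gamma[1] * y3 + e1 > 0)
        data.append((y1, y2, y3, x))
    return data

sample = simulate_design(500, 0.3, 0.3, 0.0)
print(sum(row[0] for row in sample) / len(sample))  # share of y1 = 1
```

Because the constants are zero and the two effects cancel in expectation, each of the three outcomes has a mean of one half in this sketch, in line with the statement above about y1.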

4.1 The basic scenarios

Table 1 shows average estimation results with various combinations of values of correlations

between the three error terms. To save space, we only show results for effects of y2 and y3 in

the y1 equation. We show additional results as well as results for correlation coefficients in the

appendix.

– Table 1 about here –

From the table we find that all estimators are unbiased when all rho’s are zero. The

multivariate methods however have a larger mean squared error (MSE) than the naïve probit

estimator. The MSEs of the multivariate approaches are reasonably close, OLS and trivariate

probit performing slightly better than the three heckit approaches which show similar

performance. The higher MSE is the cost of using a multivariate procedure when it is not

needed. The gain of this is that one has an assessment of the degree of endogeneity in the form

of estimates of $\rho_{12}$ and $\rho_{13}$, and the test that these parameters are zero is a test of exogeneity of

y2 and y3. We will consider the estimates of the correlations below.

Reading from left to right in table 1 we gradually increase the correlations. First of all, we see

that whenever $\rho_{12}$ and $\rho_{13}$ are different from zero, the single equation probit estimator that

completely ignores endogeneity has a large bias. From the table it is seen that with low but

positive correlations (0.15) all approximate estimators are almost as good as full MLE in terms

of bias. In fact, in all the first four cases, when correlations are at most 0.30, MLE never has

the lowest bias. This reflects a small-sample bias of MLE. The least squares estimator has the

lowest bias in most cases, although the homoskedastic heckit approximations are close to it. In

terms of MSE, full MLE is slightly better in most cases.

Note that biheckit is as good as triheckit when $\rho_{12}$ and $\rho_{13}$ are 0.30 but $\rho_{23}$ is very high, which is unexpected given that biheckit ignores the correlation $\rho_{23}$. In the latter case, full MLE does not converge in 5 percent of the simulations, whereas the approximations converge in all cases. When either of the correlation coefficients is of size 0.60, we see some non-negligible biases in the heckit estimators (up to size 0.3) but also for full MLE (up to size 0.15), whereas the least squares estimator performs better in most cases.

The heteroskedasticity corrected heckit overall seems to perform worse than the other approximate estimators when correlations are 0.60 or lower, and the least squares estimator performs best, with the homoskedastic triheckit lagging just behind.

With really high correlations (0.8) it seems that MLE outperforms most of the approximations. The biases of the approximations are of magnitude 0.10-0.20 in the presented cases (i.e. up to 40 percent), with the heteroskedasticity corrected heckit and least squares performing best.

Finally, in general it seems as if the naïve probit and full MLE have a positive (small-sample)

bias and that the approximate estimators have a negative bias when correlations are positive

and vice versa when correlations are negative. Furthermore, even when correlations are all

equal we sometimes encounter large differences in the bias of $\gamma_1$ and $\gamma_2$. This seems peculiar as

the model should be symmetric in these parameters. For the approximations it could reflect

biases due to multicollinearity between the correction terms and endogenous regressors

(Nawata, 1994), but it also occurs to some extent for full MLE. It may also reflect an impact of

the chosen design where the effects of y2 and y3 have opposite signs.

We therefore conducted similar simulations where the effects of y2 and y3 had equal signs (not reported).

We still found the mentioned asymmetries. Overall the same results are found, but it seems that

the heteroskedastic triheckit performs even better in this scenario with very high correlation

coefficients.

With respect to the correlation coefficients (cf. table A1 in the appendix, which presents results for $\rho_{12}$ and $\rho_{13}$, which are those of key interest) it seems that the approximations are rather biased, while the full MLE is far closer to the true values. Particularly the least squares approximation is very bad at recovering the correlations. Two exceptions are the biheckit and the heteroskedasticity corrected heckit. These estimators often have smaller bias than full MLE for the correlation coefficients. Similar results are obtained when the effects of y2 and y3 have equal signs. Therefore, the approximations give a good indication of the extent to which endogeneity or sample selection matters, by looking at whether predicted effects change, but are not good for testing exogeneity or no sample selection through tests on correlations being zero. For doing this one could use the biheckit or heteroskedastic heckit approximations as start values for a full MLE.

It is also worth noting that in all simulations the estimated coefficients for the exogenous

explanatory variables (except the constant term) seem to be well-estimated in the single

equation probit model, irrespective of the severity of the endogeneity of y2 and y3. This is

surprising (as bias in one parameter should run through other parameters in nonlinear models)

but may be due to the fact that all regressors are uncorrelated.

4.2 Alternative scenarios

In order to see how our simulation results are influenced by our set-up we have carried out

further simulations where we have used larger samples and skewed distributions of the

endogenous variable of interest, y1.

We have used larger sample sizes to infer whether the bias of the MLE eventually would

become negligible compared to the inconsistency of the approximations. Some results are

outlined in tables A2 and A3. They do seem to suggest that in large samples the MLE tends to

outperform the approximate estimators, although the differences from the least squares approximation in all

cases, and from the heckit estimators with homoskedasticity in the cases where $\rho_{12}$ and $\rho_{13}$ are of

opposite sign, do not seem large in terms of bias of the parameters of interest. The MLE of the

correlation coefficients are much better than for those of the tri-heckit with homoskedasticity

and the least squares approximations. It is interesting to note, however, that in the case of large

samples, the tri-variate heckit with heteroskedasticity (as with small samples) and the bi-variate

heckit again seem to get quite close to the MLE for the estimates of the correlations. All

multivariate estimators are far better than the naive probit.

We also carried out simulations with skewed distribution of y1 to see how the assumption of

normality affects the estimates. More skewed samples are samples with a less symmetric

distribution of y1 and they are obtained by setting the constant term in the generation equation

for y1 to one rather than zero as in the above simulations. In this case the mean of y1 rises to

0.78. The results of these estimates are illustrated in table A4 and A5. Table A4 shows that all

estimators are unbiased in skewed samples when all correlations are zero. In table A5 all

correlations are 0.5. The results in this case are not clear-cut. For one of the parameters of

interest, both the heckit estimators with homoskedasticity and the least squares estimator have a

lower bias than MLE whereas for the other parameters the heckit estimators are far more

biased than the least squares and MLE.

Finally we have plotted bias of the various estimators against the condition number of the

correlation matrix of the error terms in the simulations. This is done to illustrate the behaviour

of the approximations as the correlations increase. We have left out results for the naïve probit

estimator, as it always has a much larger bias than any of the other estimators. The relationship

between the bias of the estimators and the condition number is illustrated in figure 1 and 2

below:

- Figure 1 about here -

- Figure 2 about here -

First note that the condition number may be high although the bias or inconsistency of all

estimators is quite low. This happens when the condition number is high due to a high

correlation between e2 and e3 while e1 and e2, and e1 and e3, are uncorrelated.
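
The point about a high condition number with no endogeneity can be illustrated with a small sketch. It assumes that the condition number is the ratio of the largest to the smallest eigenvalue of the 3x3 error correlation matrix (the text does not state which definition was used), and the function name is hypothetical. The eigenvalues come from the standard closed-form solution for a symmetric 3x3 matrix with unit diagonal.

```python
import math

def correlation_condition_number(rho12, rho13, rho23):
    """Largest/smallest eigenvalue of the 3x3 error correlation matrix.

    Assumption: this eigenvalue ratio is the condition number meant in the text.
    """
    # Sum of squared off-diagonal elements; zero means the identity matrix.
    p1 = rho12**2 + rho13**2 + rho23**2
    if p1 == 0.0:
        return 1.0
    # Trigonometric solution of the characteristic cubic for a symmetric
    # 3x3 matrix whose diagonal elements are all one.
    p = math.sqrt(p1 / 3.0)
    r = rho12 * rho13 * rho23 / p**3
    r = max(-1.0, min(1.0, r))  # guard against rounding outside [-1, 1]
    angle = math.acos(r) / 3.0
    largest = 1.0 + 2.0 * p * math.cos(angle)
    smallest = 1.0 + 2.0 * p * math.cos(angle + 2.0 * math.pi / 3.0)
    if smallest <= 0.0:
        return math.inf  # singular or invalid correlation matrix
    return largest / smallest

# High correlation between e2 and e3 only: no endogeneity of y2 or y3,
# yet the condition number is large.
print(correlation_condition_number(0.0, 0.0, 0.9))
# All correlations moderate: endogeneity is present, condition number is smaller.
print(correlation_condition_number(0.5, 0.5, 0.5))
```

Under this definition the condition number measures how close the correlation matrix is to singularity, not how strongly e1 is correlated with e2 and e3, which is why it can be high when the endogeneity bias is small.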

For relatively small condition numbers the approximate estimators perform as well as the MLE

or better. For large condition numbers the MLE outperforms most of the approximate

estimators, which become quite unreliable. This suggests that our approximations are quite

reasonable to use when the correlations are low or modest, while they are more unreliable for

high correlations. This confirms the overall previous result. Note however, that the

heteroskedasticity corrected tri-variate heckit and the bivariate heckit estimator do not become

more unreliable as the condition number increases. This might suggest that these estimators

could be useful for providing starting values for the MLE. Their usefulness is further supported

by the fact that they also replicate the correlation coefficients quite well, even when correlations are

high.

Finally it is worth observing that in some simulations the MLE often failed to converge, and

hence estimates were unobtainable for the MLE. In contrast, our approximate estimators are

always able to produce an estimate, although sometimes of questionable reliability.

In sum our simulations indicate that in small samples the approximate estimators might prove

useful when the correlations are small or modestly high, compared to the difficulties with

obtaining the MLE. When correlations are very high the performance of the MLE becomes

clearly better than most of the approximate estimators, but some still seem to be able to

provide useful starting values for the MLE and information on whether endogeneity is present.

Further, in some cases it is not possible to obtain the MLE while this is always possible for the

approximate estimators.

5. An application

In order to gain insights as to how the approximations work with real data we consider whether

physician’s advice of doing more exercise has any impact on the amount of physical activity

that people conduct. We seek to control for potential endogeneity of receiving advice as well as

for the fact that we have a selected sample. Our sample only includes information on physician

advice for people having hypertension. The application therefore illustrates how to use the

approximations in another type of model than that considered in the simulations (namely case

five considered in section three: a probit model with a dummy endogenous variable and

sample selection). The example is inspired by Kenkel and Terza (2001), who consider the

importance of taking endogeneity into account of physician advice with respect to alcohol

consumption. They mention that sample selection might be a problem, but argue that it is likely

a minor one as health problems are not the main reason people do not drink. With respect to

exercise, we think it might be more likely that individuals start to exercise because of health

problems – i.e. that a selection problem occurs - that is at least what we seek to control for.

The data used for the application is an update of the data used by Kenkel and Terza (2001).

They used the NHIS 1990 data, and we use NHIS 2003. This is the latest of the NHIS surveys

which were available at the time of initiation of this project.

Lack of exercise is a serious public health problem that affects longevity, physical and

psychological well-being as well as being a tremendous cost to society in form of potentially

lost productivity and increased treatment expenses due to e.g. obesity and related diseases

(Erlichman, Kerbey & James, 2002a; 2002b; Eden et al., 2002). As opposed to many other

health risk factors like drinking and smoking, physician advice is one of the relatively few

ways that have been used to affect exercise (public information campaigns are another

possibility). Although economic support for doing exercise is becoming more widespread,

countries do not tax lack of exercise or punish individuals in other ways as we can by taxing

alcohol or banning drunk driving. Physician advice is relatively costless at the individual level

and as opposed to public information campaigns it is a very direct way to improve individual

medical information. General research about the impact of medical information suggests that it

plays an important role for good health behaviour, with a probable larger effect for higher

educated (e.g. Kenkel, 1991; Glied and Lleras-Muney 2003). However, existing evidence

about whether physician advice really matters is sparse. The U.S. Preventive Services Task

Force report concludes that the existing evidence is not sufficient to support unambiguously

that doctors should spend more time on advising patients with respect to exercise (Eden et al.,

2002). One problem is that randomized controlled trials focus on the impact of additional

training in giving advice, and that it is hard to control for advice-giving in the control-group or

previous training. In addition, one might question whether behaviour induced through clinical

trials replicate actual behaviour of individuals not being monitored. Therefore, observational

data is useful as a complementary source of evidence. However, to our knowledge no such

studies exist.

Now why may receipt of (or seeking) advice be endogenous to exercise? The reason is of

course, that individuals receive advice for a reason, i.e. they may in general be in poorer health

than those who do not receive advice. However, another possibility is that those who receive

advice exercise more than those who do not receive advice, because they generally are more

precautious. Finally, there may be other factors that influence both whether seeking a

physician and whether doing exercise (being a health fanatic), creating a spurious correlation.

We have two measures of whether individuals exercise. We use the one based upon the

question:

“How often do you do VIGOROUS activities for AT

LEAST 10 MINUTES that cause HEAVY sweating or

LARGE increases in breathing or heart rate?”

The answer to the question is in the format of number of times per week. The other measure

relates to light or moderate activities. This includes some daily activities and it may be more

difficult to distinguish changes in behaviour from this than from the former question

(Erlichman, Kerbey & James, 2002b).

5.1 Descriptive statistics

We use a sample of white men between the ages of 30 and 60. They are in a risk group for

getting hypertension and still not too old to view vigorous exercise as a means to improve

health. Initially we examined the impact of advice for both men and women, but as the

instruments used for women are insignificant, we choose to focus on men. The sample consists

of 7371 observations. However, only 1623 report they received physician advice about

exercise because they have hypertension.

The dependent variable is a dummy for exercising vigorously at least once per week. We do

not include all the explanatory variables as in Kenkel and Terza (2001), since variables like

income, work status and marital status are likely to be endogenous as well. Therefore we only

include age dummies, region of living and dummies for being black and one for being neither

black nor white. Kenkel and Terza (2001) used dummies for having bought health insurance or

being on medicare as instruments for seeking advice. However, these are invalid if health

insurance status affects exercise, e.g. through economic means or socio-economic position,

something we find likely. Therefore we use other instruments, namely whether the individual

within the last 12 months refrained from seeing a doctor because of a long phone queue or because

of a long waiting time at the clinic. We need an additional instrument, namely for the

selection equation of having hypertension. For this we use a dummy taking value one if anyone

in the family has hypertension, stroke, diabetes or heart diseases.

- Table 2 about here -

Descriptive statistics for our selected sample are presented in table 2. It is seen that relatively

many have received the advice that they ought to be doing more exercise, namely 66 percent.

On the other hand, in the light of the selected sample of people already being told they have

hypertension, and that exercise is known to prevent hypertension, this number seems rather

low, perhaps reflecting the reluctance of doctors to interfere in the patient's personal life or a

disbelief in whether the advice will be followed. We also see that 42 percent are exercising

vigorously at least once a week. The first three sets of columns show means and standard

deviations for those who have not received advice, those who have received advice and

those with missing information on advice. We see that those who received advice are

exercising more (39 versus 31 percent), but that those with missing information are exercising

even more frequently (44 percent). This on the one hand points towards a positive relationship

between exercise and advice reflecting either that advice works or an endogeneity effect, but

also that this association may be subject to a sample selection problem.

- Table 3 about here -

5.2 Results

Table 3 presents the estimation results for the impact of advice on exercise from various probit

models controlling for age, region and ethnicity. The naïve probit results in column two reveal

that there is a positive association between advice and exercise when adjustment for these

covariates has been made. The next four pairs of columns show results for four corrections

that each account for potential endogeneity of advice and for sample selection. As described

above, the bivariate heckit and the least-squares approximations do not allow for correlation

between unobservables, which the triheckit estimators do. Finally, only the hetero-heckit

estimator takes heteroskedasticity into account. Before looking at the results, table 4 presents

the auxiliary regressions used to calculate correction terms. To keep things simple these are

simple linear probability models for receiving advice and for having hypertension. The

important point to note is whether the instruments have significant explanatory power. As seen

the instrument in the selection equation, whether family members have high blood pressure, is significant

and its F-value is about 6. The instruments for advice are also jointly significant.

- Table 4 about here -

All the approximate estimators show that when correcting for sample selection and

endogeneity, the coefficient on advice is greatly magnified. Note that the standard errors blow

up by the same magnitude as the coefficients, leaving nearly all coefficients insignificant at

conventional significance levels. We do note though that the selection correction term is

significant. The many insignificant coefficients may reflect that the models have low power.

This is often observed in instrumental variable studies where the explanatory power of the

instruments is weak. In our case, however, the explanatory power is not alarmingly low, with

F-values for the instrumental variables being above five (Staiger & Stock, 1997). On the basis of

these results we cannot really judge whether there is truly an endogeneity problem or whether

it reveals a fundamental problem with the estimator. A comparison with maximum likelihood

estimators will help judge on this. Table 5 therefore presents three maximum likelihood

estimates, assuming joint normality. The first only accounts for endogeneity of advice, the

second only accounts for sample selection while the third is the full maximum likelihood

estimator accounting for endogeneity and sample selection. The model and the full likelihood

function are presented in the appendix.

- Table 5 about here -

The bivariate probit estimates are very similar to the triheckit estimates with very high

coefficients on advice. Importantly, even though the maximum likelihood estimator is an

asymptotically efficient estimator, it is seen that the standard errors of the bivariate probit

estimates are very high, as was the case for the heckit estimates. The full maximum likelihood

estimate also has a much higher coefficient on advice than the naïve probit. Only the selection

model has a smaller coefficient. If estimating the selection model using only a simple heckit

correction, similar results are found (low coefficient and low standard errors). We note that the

models accounting for endogeneity and selection independently can be estimated with Stata

using the “biprobit” and “heckprob” commands. As far as we are aware, no statistical package

contains the full MLE for this model and it is therefore estimated using maxlik in GAUSS 6.0.

We experienced a couple of problems with the full MLE estimator. First of all, it converged

but the Hessian could not be inverted. Therefore standard errors are not presented. Second,

when changing the start parameters it converged to another local optimum. The likelihood

function deviated from the first only on the fifth decimal, and one may question whether the

accuracy of GAUSS is sufficient to distinguish between these cases. The estimate of the

coefficient on advice is a bit smaller in the second optimum.

The probit coefficients do not yield evidence about the magnitude of the impact of receiving

advice. We therefore calculate the average treatment effects (ATE) as:

$$\mathrm{ATE} = \frac{1}{n}\sum_{i=1}^{n}\left[\Phi(\delta + X_i\beta) - \Phi(X_i\beta)\right] \qquad (22)$$

where $n$ is the size of the selected sample, $\delta$ is the coefficient on advice and $X_i$ contains the other

exogenous regressors. The ATE is presented for each estimator in the last row. The naïve

probit estimator predicts that those who receive advice have an 8.5 percentage point higher

probability of exercising. In comparison, the ATEs of the biheckit, triheckit, hetero-triheckit and

least-squares approximations are 38.8, 49.4, 30.3 and 22.8, while the ATEs of the maximum

likelihood estimators biprobit, selection and full MLE are 37.1, 2.2 and 32.9, respectively (the ATE

for the heckit estimator accounting only for selection is 0.086). The MLE estimates that

account for endogeneity therefore agree with the approximate estimates that the naïve probit

underestimates the effect of advice.
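
The average treatment effect in equation (22) can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the advice coefficient of 0.25 and the five index values are hypothetical and are not taken from the estimation results. The linear index values stand for the contribution of the other exogenous regressors for each individual.

```python
import math

def normal_cdf(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def average_treatment_effect(advice_coef, index_without_advice):
    """Sample mean of Phi(advice_coef + index_i) - Phi(index_i)."""
    effects = [
        normal_cdf(advice_coef + xb) - normal_cdf(xb)
        for xb in index_without_advice
    ]
    return sum(effects) / len(effects)

# Hypothetical linear index values for five individuals (not from the paper).
example_index = [-0.8, -0.4, -0.2, 0.0, 0.3]
ate = average_treatment_effect(0.25, example_index)
print(f"ATE = {100 * ate:.1f} percentage points")
```

Because the normal distribution function is nonlinear, the same coefficient on advice implies different probability changes for different individuals, which is why the effect is averaged over the sample rather than evaluated at a single point.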

One lesson from this application is that it is a data demanding task to account for both selection

and endogeneity in discrete choice problems. This cannot be resolved by using the full

maximum likelihood estimates. On the contrary both heckit estimates and maximum likelihood

estimates were uncertain with high standard errors. They agree, however, that the naïve

probit underestimated the effect of advice, and if anything, the heckit estimator circumvents the

problem of a demanding likelihood function which may be more difficult to optimize.

Therefore, the application did not reveal any substantial problems with the heckit

approximations.

6. Conclusion

We have introduced several approximations of a binary normal model with two dummy

endogenous regressors as an alternative to the more complex trivariate probit model. We

considered the small sample properties of the approximations and showed that a standard

probit model that does not account for endogeneity is severely biased in the presence of even

moderate endogeneity. The approximations are less biased. This is particularly so for the heckit

approximation and the least squares approximation when the degree of endogeneity is not too

severe. When endogeneity is severe, the bias of both the least squares and the heckit

approximations is not negligible with the exception that a heteroskedasticity corrected heckit

performs almost as well as full MLE. The heteroskedasticity corrected heckit also has a very

small bias for the estimated correlation coefficients, where other heckit estimators and in

particular the least squares estimator are severely biased. The heteroskedasticity corrected

heckit approximation therefore provides a good starting point for tests of exogeneity or absence

of sample selection. The heteroskedasticity corrected heckit also seems more robust to cases

with a high degree of multicollinearity defined as a high condition number for the correlation

matrix. Through our application we show the importance of taking endogeneity of dummy

variables into account and demonstrate that in this case the trivariate heckit estimator is a more

useful tool for doing so than MLE.

All taken together we view the heckit approximations as a good initial working tool e.g. for

initial estimates before doing full MLE. In future research it would be beneficial to extend the

current methods to even higher dimensions, perhaps relate it more closely to the multinomial

probit or to examine the power of the test for exogeneity using the heteroskedasticity corrected

heckit estimator.

Acknowledgements

We would like to thank Mads Meier Jæger and participants at the 28th Symposium for Applied

Statistics in Copenhagen, 2006, and at the Health Economics Seminar, University of Southern

Denmark, for useful comments.

References

Alvarez, R. M. and G. Glasgow, (2000). Two-Stage Estimation of Nonrecursive Choice

Models. Political Analysis 8 (2), 11-24.

Angrist, J. D. (2001). Estimation Of Limited Dependent Variable Models With Dummy

Endogenous Regressors: Simple Strategies For Empirical Practice. Journal of Business and

Economic Statistics 19 (1), 2-15.

Ashford, J. R. and R. R. Sowden (1970). Multivariate probit analysis. Biometrics 26, 535-546.

Bhattacharya, J., Goldman, D. and D. McCaffrey (2006). Estimating probit models with self-selected treatments. Statistics in Medicine 25, 389-413.

Bollen, K. A., Guilkey, D. K. and T. A. Mroz (1995). Binary Outcomes and Endogenous

Explanatory Variables: Tests and Solutions with an Application to the Demand for

Contraceptive Use in Tunisia. Demography 32 (1), 111-131.

Cappellari, L. and S. P. Jenkins (2003). Multivariate probit regression using simulated

maximum likelihood. Stata Journal 3 (3), 278-294.

Carrasco, R. (2001). Binary choice with binary endogenous regressors in panel data:

Estimating the effect of fertility on Female Labor Participation. Journal of Business &

Economic Statistics 19 (4): 385-394.

Eden, K., T. Orleans, Mulrow, C. D., Pender, N. J., Teutsch, S. M. (2002). Does Counseling by

Clinicians Improve Physical Activity? A Summary of the Evidence for the U.S. Preventive

Services Task Force. Annals of Internal Medicine 137 (3), 208-215.

Erlichman, J., A. L. Kerbey & W. P. T. James (2002a). Physical activity and its impact on

health outcomes. Paper 1: the impact of physical activity on cardiovascular disease and all-cause mortality: an historical perspective. Obesity Reviews 3 (4), 257-271.

Erlichman, J., A. L. Kerbey & W. P. T. James (2002b). Physical activity and its impact on

health outcomes. Paper 2: prevention of unhealthy weight gain and obesity by physical

activity: an analysis of the evidence. Obesity Reviews 3 (4), 273-287.

Evans, W. N. and R. Schwab (1995). Finishing High School and Starting College: Do Catholic

Schools Make a Difference? Quarterly Journal of Economics 110 (4), 941-974.

Fishe, R. P. H., R. P. Trost and P. Lurie (1981). Labor force earnings and college choice of

young women: An examination of selectivity bias and comparative advantage. Economics of

Education Review 1 (2), 169-191.

Fleming, M.F., M. P. Mundt, M. T. French, B. K. Lawton, M. L. Baier and E. A. Stauffacher.

(2001). Benefit-cost analysis of brief physician advice with problem drinkers in primary care

practices. Medical Care 38(1), 7-18.

Geweke, J. (1991). Efficient Simulation from the Multivariate Normal and Student-t

Distributions Subject to Linear Constraints, Computer Science and Statistics: Proceedings of

the Twenty-Third Symposium on the Interface, 571-578.

Glied, S., & Lleras-Muney, A. (2003). Health inequality, education and medical innovation.

NBER Working Paper 9738.

Hajivassiliou, V. (1990). Smooth Simulation Estimation of Panel Data LDV Models.

Unpublished Manuscript.

Hajivassiliou, V., D. McFadden and P. Ruud. (1996). Simulation of multivariate normal

rectangle probabilities and their derivatives: Theoretical and computational results. Journal of

Econometrics 72, 85-134.

Heckman, J. J. (1974). Shadow Prices, Market Wages, and Labor Supply. Econometrica 42 (4),

679-694

Heckman, J. J. (1976). The common structure of statistical models of truncation, sample

selection and limited dependent variables and a simple estimator for such models. Annals of

Economic and Social Measurement 15, 475-492.

Heckman, J. J. (1978). Dummy endogenous variables in a simultaneous equation system.

Econometrica 46 (6), 931-959.

Horowitz, J. L. (1993). Semiparametric and Nonparametric Estimation of Quantal Response

Models in G. S. Maddala, C. R. Rao and H. D. Vinod (Eds.): Handbook in Statistics vol. 11,

45-72

Keane, M. P. (1994). A Computationally Practical Simulation Estimator for Panel Data.

Econometrica 62(1), 95-116.

Lechner, M., Lollivier, S. and T. Magnac (2005). Parametric binary choice models. Chapter

prepared for Matyas and Sevestre (Eds.): The Econometrics of Panel Data.

Maddala, G. S. (1983). Limited-Dependent and Qualitative Variables in Econometrics.

Econometric Society Monographs. Cambridge University Press.

Moffitt, R. A. (2001). Comment on Angrist: Estimation Of Limited Dependent Variable

Models With Dummy Endogenous Regressors: Simple Strategies For Empirical Practice.

Journal of Business and Economic Statistics 19 (1), 20-23.

Mroz, T. (1999). Discrete factor approximations in simultaneous equation models: Estimating

the impact of a dummy endogenous variable on a continuous outcome. Journal of

Econometrics 92, 233-274.

Nagakura, D. (2004). A note on the relationship of the ordered and sequential probit models to

the multinomial probit model. Economic Bulletin 3 (40), 1-7.

Nawata, K. (1994). Estimation of sample selection bias models by the maximum likelihood

estimator and Heckman's two-step estimator. Economics Letters 45, 33-40.

Nicoletti, C. and F. Peracchi (2001). Two-step estimation of binary response models with

sample selection, unpublished working paper.

O’Higgins, N. (1994). YTS, employment, and sample selection bias. Oxford Economic Papers

46, 605-628.

Poirier, J. (1980). Partial observability in bivariate probit models. Journal of Econometrics 12

(2), 209-217.

Staiger, D., & Stock, J. H. (1997). Instrumental variables regression with weak instruments.

Econometrica, 65(3), 557–586.

U.S. Department of Health and Human Services (1996). Physical Activity and Health: A Report of the

Surgeon General. Atlanta, GA: U.S. Department of Health and Human Services, Centers for Disease

Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion.

Vytlacil, E. and N. Yildiz (2006). Dummy endogenous variables in weakly separable models.

Mimeo. Columbia University.

Weeks, M. and C. Orme (1996). The statistical relationship between bivariate and multinomial

choice models. Mimeo. Cambridge University.

Wooldridge, J. M. (2002). Econometric Analysis of Cross Section and Panel Data. Cambridge

MA: MIT Press.

Wynand, P. and B. van Praag (1981). The demand for deductibles in private health insurance:

A probit model with sample selection. Journal of Econometrics 17, 229-252.

Yatchew, A. and Z. Griliches (1985). Specification Error in Probit Models. The Review of

Economics and Statistics 67 (1), 134-139.

Zellner, A. and T. H. Lee (1965). Joint estimation of relationships involving discrete random

variables. Econometrica 33 (2), 382-394.

Appendix 1. The correction terms in the trivariate heckit

The formulas needed for the trivariate Heckman correction are derived. Two formulas from

Maddala (1983) are used repeatedly. They are:

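$$E(\varepsilon_1 \mid \varepsilon_2 < h,\ \varepsilon_3 < k) = \rho_{12} M_{23} + \rho_{13} M_{32} \qquad (*)$$

$$M_{ij} = (1-\rho_{23}^2)^{-1}(P_i - \rho_{23} P_j), \qquad P_i = E(\varepsilon_i \mid \varepsilon_2 < h,\ \varepsilon_3 < k), \quad i = 2,3$$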

and:

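$$P(x > h,\ y > k)\, E(x \mid x > h,\ y > k) = \phi(h)\left[1-\Phi(k^*)\right] + \rho\,\phi(k)\left[1-\Phi(h^*)\right] \qquad (**)$$

$$h^* = \frac{h-\rho k}{\sqrt{1-\rho^2}}, \qquad k^* = \frac{k-\rho h}{\sqrt{1-\rho^2}}, \qquad \operatorname{cov}(x,y) = \rho.$$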
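
As a numerical sanity check of the truncated-moment formula (**), the sketch below compares its right-hand side, read as phi(h)[1 - Phi(k*)] + rho phi(k)[1 - Phi(h*)] for truncation from below, with a direct numerical integration of x phi(x) P(y > k | x) over x > h. The reading of (**), the function names, the integration settings and the test values of h, k and rho are assumptions made for illustration only.

```python
import math

def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def closed_form(h, k, rho):
    """Right-hand side of (**) for a standard bivariate normal (x, y)."""
    s = math.sqrt(1.0 - rho * rho)
    h_star = (h - rho * k) / s
    k_star = (k - rho * h) / s
    return phi(h) * (1.0 - Phi(k_star)) + rho * phi(k) * (1.0 - Phi(h_star))

def numerical(h, k, rho, upper=12.0, steps=4000):
    """Simpson's rule for the integral of x*phi(x)*P(y > k | x) over x in (h, upper)."""
    s = math.sqrt(1.0 - rho * rho)

    def integrand(x):
        # y given x is normal with mean rho*x and variance 1 - rho^2.
        return x * phi(x) * (1.0 - Phi((k - rho * x) / s))

    width = (upper - h) / steps  # steps must be even for Simpson's rule
    total = integrand(h) + integrand(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(h + i * width)
    return total * width / 3.0

for h, k, rho in [(0.3, -0.5, 0.6), (-1.0, 0.2, -0.4)]:
    print(h, k, rho, closed_form(h, k, rho), numerical(h, k, rho))
```

With rho = 0 the closed form collapses to phi(h)[1 - Phi(k)], so dividing by P(x > h, y > k) gives the usual inverse Mills ratio phi(h) / [1 - Phi(h)].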

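There are four terms in the correction, corresponding to the four combinations of $(Y_2, Y_3)$. To derive

these, the following change of variables is used: $z = -\varepsilon_2$, $v = -\varepsilon_3$, i.e.:

$$E(\varepsilon_1 \mid Y_2 = 1, Y_3 = 1) = E(\varepsilon_1 \mid \varepsilon_2 > -x_2\beta_2,\ \varepsilon_3 > -x_3\beta_3) = E(\varepsilon_1 \mid z < x_2\beta_2,\ v < x_3\beta_3)$$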

We can now use (*) to get:

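$$E(\varepsilon_1 \mid z < x_2\beta_2,\ v < x_3\beta_3) = -\rho_{12} M_{23} - \rho_{13} M_{32}$$

$$M_{ij} = (1-\rho_{23}^2)^{-1}(P_i - \rho_{23} P_j)$$

$$P_2 = E(z \mid z < x_2\beta_2,\ v < x_3\beta_3), \qquad P_3 = E(v \mid z < x_2\beta_2,\ v < x_3\beta_3),$$

where the signs reflect that $\operatorname{corr}(\varepsilon_1, z) = -\rho_{12}$ and $\operatorname{corr}(\varepsilon_1, v) = -\rho_{13}$.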

In order to obtain the latter parts, we need to rearrange (**):

$$P(x > h,\ y > k)\, E(x \mid x > h,\ y > k) = -P(z < -h,\ v < -k)\, E(z \mid z < -h,\ v < -k), \qquad z = -x,\ v = -y.$$

We therefore get an adjusted version of (**) that can be used directly to obtain the Pis in (*):

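$$E(x \mid x < h,\ y < k) = -\frac{\phi(h)\left[1-\Phi\left(-\frac{k-\rho h}{\sqrt{1-\rho^2}}\right)\right] + \rho\,\phi(k)\left[1-\Phi\left(-\frac{h-\rho k}{\sqrt{1-\rho^2}}\right)\right]}{\Phi(x < h,\ y < k;\ \rho)}, \qquad \rho = \operatorname{corr}(x,y)$$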

Therefore:

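$$P_2 = E(z \mid z < x_2\beta_2,\ v < x_3\beta_3) = -\frac{\phi(x_2\beta_2)\left[1-\Phi\left(-\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right] + \rho_{23}\,\phi(x_3\beta_3)\left[1-\Phi\left(-\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(x_2\beta_2,\ x_3\beta_3;\ \rho_{23})}$$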

$P_3$ can be obtained from this expression by interchanging $x_2\beta_2$ and $x_3\beta_3$.

Proceeding in the same fashion, we get:

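$$E(\varepsilon_1 \mid Y_2 = 0, Y_3 = 1) = E(\varepsilon_1 \mid \varepsilon_2 < -x_2\beta_2,\ v < x_3\beta_3) = \rho_{12} M_{23} - \rho_{13} M_{32}$$

$$M_{ij} = (1-\rho_{23}^2)^{-1}(P_i + \rho_{23} P_j)$$

$$P_2 = E(\varepsilon_2 \mid \varepsilon_2 < -x_2\beta_2,\ v < x_3\beta_3), \qquad P_3 = E(v \mid \varepsilon_2 < -x_2\beta_2,\ v < x_3\beta_3),$$

where the signs reflect that $\operatorname{corr}(\varepsilon_1, v) = -\rho_{13}$ and $\operatorname{corr}(\varepsilon_2, v) = -\rho_{23}$.

Again the adjusted (**)-formula gives us:

$$P_2 = -\frac{\phi(x_2\beta_2)\left[1-\Phi\left(-\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right] - \rho_{23}\,\phi(x_3\beta_3)\left[1-\Phi\left(\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(-x_2\beta_2,\ x_3\beta_3;\ -\rho_{23})}$$

$$P_3 = -\frac{\phi(x_3\beta_3)\left[1-\Phi\left(\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right] - \rho_{23}\,\phi(x_2\beta_2)\left[1-\Phi\left(-\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(-x_2\beta_2,\ x_3\beta_3;\ -\rho_{23})}$$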

The third correction is:

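$$E(\varepsilon_1 \mid Y_2 = 1, Y_3 = 0) = E(\varepsilon_1 \mid z < x_2\beta_2,\ \varepsilon_3 < -x_3\beta_3) = -\rho_{12} M_{23} + \rho_{13} M_{32}$$

$$M_{ij} = (1-\rho_{23}^2)^{-1}(P_i + \rho_{23} P_j)$$

$$P_2 = E(z \mid z < x_2\beta_2,\ \varepsilon_3 < -x_3\beta_3), \qquad P_3 = E(\varepsilon_3 \mid z < x_2\beta_2,\ \varepsilon_3 < -x_3\beta_3),$$

where the signs reflect that $\operatorname{corr}(\varepsilon_1, z) = -\rho_{12}$ and $\operatorname{corr}(z, \varepsilon_3) = -\rho_{23}$.

The adjusted (**)-formula now gives us:

$$P_2 = -\frac{\phi(x_2\beta_2)\left[1-\Phi\left(\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right] - \rho_{23}\,\phi(x_3\beta_3)\left[1-\Phi\left(-\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(x_2\beta_2,\ -x_3\beta_3;\ -\rho_{23})}$$

$$P_3 = -\frac{\phi(x_3\beta_3)\left[1-\Phi\left(-\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right] - \rho_{23}\,\phi(x_2\beta_2)\left[1-\Phi\left(\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(x_2\beta_2,\ -x_3\beta_3;\ -\rho_{23})}$$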

Finally, the last correction terms are:

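$$E(\varepsilon_1 \mid Y_2 = 0, Y_3 = 0) = E(\varepsilon_1 \mid \varepsilon_2 < -x_2\beta_2,\ \varepsilon_3 < -x_3\beta_3) = \rho_{12} M_{23} + \rho_{13} M_{32}$$

$$M_{ij} = (1-\rho_{23}^2)^{-1}(P_i - \rho_{23} P_j)$$

$$P_i = E(\varepsilon_i \mid \varepsilon_2 < -x_2\beta_2,\ \varepsilon_3 < -x_3\beta_3), \quad i = 2,3$$

where:

$$P_2 = -\frac{\phi(x_2\beta_2)\left[1-\Phi\left(\frac{x_3\beta_3-\rho_{23}x_2\beta_2}{\sqrt{1-\rho_{23}^2}}\right)\right] + \rho_{23}\,\phi(x_3\beta_3)\left[1-\Phi\left(\frac{x_2\beta_2-\rho_{23}x_3\beta_3}{\sqrt{1-\rho_{23}^2}}\right)\right]}{\Phi(-x_2\beta_2,\ -x_3\beta_3;\ \rho_{23})}$$

and $P_3$ follows by interchanging $x_2\beta_2$ and $x_3\beta_3$.
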
Appendix 2. Heteroskedasticity correction

To account for the heteroskedasticity inherent in Heckman corrections we derive the truncated

variance in a trivariate normal distribution. Assuming joint normality of the errors:

$$(\varepsilon_1, \varepsilon_2, \varepsilon_3 \mid x_1, x_2, x_3) \sim N(0, 0, 0, 1, 1, 1, \rho_{12}, \rho_{13}, \rho_{23}).$$

Then we know $E(\varepsilon_1 \mid \varepsilon_2 > x_2,\ \varepsilon_3 > x_3)$ from the formulas in Maddala (1983), p. 282.

To find the truncated variance we use properties of the multivariate normal distribution and

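write $\varepsilon_1$ as a regression function of $\varepsilon_2$ and $\varepsilon_3$:

$$\varepsilon_1 = b_2\varepsilon_2 + b_3\varepsilon_3 + v, \qquad v \sim N\left(0,\ 1 - \frac{\rho_{12}^2\sigma_3^2 + \rho_{13}^2\sigma_2^2 - 2\rho_{12}\rho_{13}\rho_{23}}{\sigma_2^2\sigma_3^2 - \rho_{23}^2}\right), \qquad v \perp \varepsilon_2, \varepsilon_3$$

$$\begin{pmatrix} b_2 \\ b_3 \end{pmatrix} = \begin{pmatrix} \sigma_2^2 & \rho_{23} \\ \rho_{23} & \sigma_3^2 \end{pmatrix}^{-1} \begin{pmatrix} \rho_{12} \\ \rho_{13} \end{pmatrix} = \frac{1}{\sigma_2^2\sigma_3^2 - \rho_{23}^2} \begin{pmatrix} \sigma_3^2\rho_{12} - \rho_{23}\rho_{13} \\ \sigma_2^2\rho_{13} - \rho_{23}\rho_{12} \end{pmatrix}$$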

Because we assumed unit variances, this simplifies to:

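$$\varepsilon_1 = \frac{\rho_{12} - \rho_{23}\rho_{13}}{1-\rho_{23}^2}\,\varepsilon_2 + \frac{\rho_{13} - \rho_{23}\rho_{12}}{1-\rho_{23}^2}\,\varepsilon_3 + v, \qquad v \sim N\left(0,\ 1 - \frac{\rho_{12}^2 + \rho_{13}^2 - 2\rho_{12}\rho_{13}\rho_{23}}{1-\rho_{23}^2}\right)$$

$$\operatorname{cov}(\varepsilon_2, v) = \operatorname{cov}(\varepsilon_3, v) = 0$$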
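
The unit-variance regression representation can be checked numerically. The sketch below assumes the standard linear projection of the first error on the other two, with coefficients b2 = (rho12 - rho23 rho13) / (1 - rho23^2), b3 = (rho13 - rho23 rho12) / (1 - rho23^2) and residual variance 1 - (rho12^2 + rho13^2 - 2 rho12 rho13 rho23) / (1 - rho23^2). The function name and the example correlations are hypothetical.

```python
def projection_coefficients(rho12, rho13, rho23):
    """Coefficients and residual variance of the projection of e1 on (e2, e3).

    Assumes unit variances and |rho23| < 1.
    """
    denom = 1.0 - rho23**2
    b2 = (rho12 - rho23 * rho13) / denom
    b3 = (rho13 - rho23 * rho12) / denom
    residual_variance = 1.0 - (
        rho12**2 + rho13**2 - 2.0 * rho12 * rho13 * rho23
    ) / denom
    return b2, b3, residual_variance

b2, b3, var_v = projection_coefficients(0.5, 0.3, 0.4)

# The residual v must be uncorrelated with e2 and e3:
# cov(e2, v) = rho12 - b2 - b3*rho23 and cov(e3, v) = rho13 - b2*rho23 - b3.
cov_e2_v = 0.5 - b2 - b3 * 0.4
cov_e3_v = 0.3 - b2 * 0.4 - b3
print(b2, b3, var_v, cov_e2_v, cov_e3_v)
```

When rho23 = 0 the coefficients reduce to rho12 and rho13, which is the case covered by the bivariate heckit correction.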

It is easy to see that we obtain the truncated mean given in Maddala (1983), when inserting this

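in $E(\varepsilon_1 \mid \varepsilon_2 > x_2,\ \varepsilon_3 > x_3)$:

$$\begin{aligned}
E(\varepsilon_1 \mid \varepsilon_2 > x_2,\ \varepsilon_3 > x_3)
&= E\left(\frac{\rho_{12} - \rho_{23}\rho_{13}}{1-\rho_{23}^2}\,\varepsilon_2 + \frac{\rho_{13} - \rho_{23}\rho_{12}}{1-\rho_{23}^2}\,\varepsilon_3 + v \;\Big|\; \varepsilon_2 > x_2,\ \varepsilon_3 > x_3\right) \\
&= \frac{\rho_{12} - \rho_{23}\rho_{13}}{1-\rho_{23}^2}\,P_2 + \frac{\rho_{13} - \rho_{23}\rho_{12}}{1-\rho_{23}^2}\,P_3 \\
&= \rho_{12}\,\frac{1}{1-\rho_{23}^2}\,(P_2 - \rho_{23}P_3) + \rho_{13}\,\frac{1}{1-\rho_{23}^2}\,(P_3 - \rho_{23}P_2) \\
&= \rho_{12} M_{23} + \rho_{13} M_{32}
\end{aligned}$$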

Where P2 and P3 are defined in appendix 1. We can obtain the variance in the same way:

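$$\begin{aligned}
V(\varepsilon_1 \mid A) &= E(\varepsilon_1^2 \mid A) - E(\varepsilon_1 \mid A)^2 \\
&= E\left((b_2\varepsilon_2 + b_3\varepsilon_3 + v)^2 \mid A\right) - E\left(b_2\varepsilon_2 + b_3\varepsilon_3 + v \mid A\right)^2 \\
&= E\left(b_2^2\varepsilon_2^2 + b_3^2\varepsilon_3^2 + v^2 + 2b_2b_3\varepsilon_2\varepsilon_3 + 2b_2\varepsilon_2 v + 2b_3\varepsilon_3 v \mid A\right) - E\left(b_2\varepsilon_2 + b_3\varepsilon_3 + v \mid A\right)^2 \\
&= b_2^2 E(\varepsilon_2^2 \mid A) + b_3^2 E(\varepsilon_3^2 \mid A) + 2b_2b_3 E(\varepsilon_2\varepsilon_3 \mid A) + E(v^2) \\
&\quad - b_2^2 E(\varepsilon_2 \mid A)^2 - b_3^2 E(\varepsilon_3 \mid A)^2 - 2b_2b_3 E(\varepsilon_2 \mid A)E(\varepsilon_3 \mid A) \\
&= b_2^2 V(\varepsilon_2 \mid A) + b_3^2 V(\varepsilon_3 \mid A) + 2b_2b_3 \operatorname{cov}(\varepsilon_2, \varepsilon_3 \mid A) + E(v^2)
\end{aligned}$$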

Where b2 and b3 are defined above. With this in hand, we can use Rosenbaum’s formulas

(Maddala, 1983, p. XXX) to obtain the final result. For instance with $A = \{\varepsilon_2 > x_2,\ \varepsilon_3 > x_3\}$,

we get:

E ( 2 | 2 x2 , 3 x3 ) ( x2 )(1 ( x3* )) 23 ( x3 )(1 ( x2* )) / F

1 232

*

E ( 22 | 2 x2 , 3 x3 ) 1 x2 ( x2 )(1 ( x3* )) 232 x3 ( x3 )(1 ( x2* )) 23

( x23

) / F

2

1 232

*

*

*

E ( 2 3 | 2 x2 , 3 x3 ) 23 23 x2 ( x2 )(1 ( x3 )) 23 x3 ( x3 )(1 ( x2 ))

( x23

) / F

2

x

*

2

x2 23 x3

2

1 23

,x

*

3

x3 23 x2

1 232

,x

*

23

x22 x32 2 23 x2 x3

1 232

, F ( x2 , x3 , 23 )
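The three truncated moments above can be checked numerically. The sketch below is a minimal illustration in plain Python, under the assumption that the conditioning event is $\{\varepsilon_2 > h, \varepsilon_3 > k\}$ and that $F$ is the probability of that event; both the quadrature routine and all function names are choices made here, not part of the source. It compares the closed-form expressions with one-dimensional numerical integration over $\varepsilon_2$.

```python
import math


def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal cdf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def rosenbaum_moments(h, k, rho, F):
    """Closed-form E(e2|A), E(e2^2|A), E(e2*e3|A) for A = {e2 > h, e3 > k}."""
    s = math.sqrt(1.0 - rho * rho)
    h_star = (h - rho * k) / s
    k_star = (k - rho * h) / s
    q = math.sqrt((h * h + k * k - 2.0 * rho * h * k) / (1.0 - rho * rho))
    t1 = phi(h) * (1.0 - Phi(k_star))
    t2 = phi(k) * (1.0 - Phi(h_star))
    t3 = s / math.sqrt(2.0 * math.pi) * phi(q)
    m1 = (t1 + rho * t2) / F
    m2 = 1.0 + (h * t1 + rho * rho * k * t2 + rho * t3) / F
    m12 = rho + (rho * h * t1 + rho * k * t2 + t3) / F
    return m1, m2, m12


def numeric_moments(h, k, rho):
    """F and the same moments by quadrature over e2 (e3 is integrated analytically)."""
    s = math.sqrt(1.0 - rho * rho)
    upper = max(h, 0.0) + 10.0  # the normal tail beyond this point is negligible

    def tail(u):  # P(e3 > k | e2 = u)
        return 1.0 - Phi((k - rho * u) / s)

    def e3_part(u):  # E(e3 * 1{e3 > k} | e2 = u)
        z = (k - rho * u) / s
        return rho * u * (1.0 - Phi(z)) + s * phi(z)

    F = simpson(lambda u: phi(u) * tail(u), h, upper)
    m1 = simpson(lambda u: u * phi(u) * tail(u), h, upper) / F
    m2 = simpson(lambda u: u * u * phi(u) * tail(u), h, upper) / F
    m12 = simpson(lambda u: u * phi(u) * e3_part(u), h, upper) / F
    return F, m1, m2, m12


if __name__ == "__main__":
    h, k, rho = 0.3, -0.4, 0.5  # illustrative truncation points and correlation
    F, n1, n2, n12 = numeric_moments(h, k, rho)
    print("closed form:", rosenbaum_moments(h, k, rho, F))
    print("quadrature: ", (n1, n2, n12))
```

The two printed triples should agree to many decimal places for any truncation points and any correlation strictly between -1 and 1.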

The conditional first and second moments of $\varepsilon_3$ are obtained from the first two formulas by interchanging $x_2$ and $x_3$. Gathering results:

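$$\begin{aligned}
V(\varepsilon_1 \mid \varepsilon_2 > x_2, \varepsilon_3 > x_3)
&= b_2^2E(\varepsilon_2^2 \mid A) + b_3^2E(\varepsilon_3^2 \mid A) + 2b_2b_3E(\varepsilon_2\varepsilon_3 \mid A) + E(v^2) \\
&\quad - b_2^2E(\varepsilon_2 \mid A)^2 - b_3^2E(\varepsilon_3 \mid A)^2 - 2b_2b_3E(\varepsilon_2 \mid A)E(\varepsilon_3 \mid A) \\
&= b_2^2\left\{1 + \frac{1}{F}\left[x_2\phi(x_2)\big(1 - \Phi(x_3^*)\big) + \rho_{23}^2\,x_3\phi(x_3)\big(1 - \Phi(x_2^*)\big) + \rho_{23}\,\frac{\sqrt{1 - \rho_{23}^2}}{\sqrt{2\pi}}\,\phi(x_{23}^*)\right]\right\} \\
&\quad + b_3^2\left\{1 + \frac{1}{F}\left[x_3\phi(x_3)\big(1 - \Phi(x_2^*)\big) + \rho_{23}^2\,x_2\phi(x_2)\big(1 - \Phi(x_3^*)\big) + \rho_{23}\,\frac{\sqrt{1 - \rho_{23}^2}}{\sqrt{2\pi}}\,\phi(x_{23}^*)\right]\right\} \\
&\quad + 2b_2b_3\left\{\rho_{23} + \frac{1}{F}\left[\rho_{23}\,x_2\phi(x_2)\big(1 - \Phi(x_3^*)\big) + \rho_{23}\,x_3\phi(x_3)\big(1 - \Phi(x_2^*)\big) + \frac{\sqrt{1 - \rho_{23}^2}}{\sqrt{2\pi}}\,\phi(x_{23}^*)\right]\right\} \\
&\quad - b_2^2\left\{\frac{1}{F}\Big[\phi(x_2)\big(1 - \Phi(x_3^*)\big) + \rho_{23}\,\phi(x_3)\big(1 - \Phi(x_2^*)\big)\Big]\right\}^2 \\
&\quad - b_3^2\left\{\frac{1}{F}\Big[\phi(x_3)\big(1 - \Phi(x_2^*)\big) + \rho_{23}\,\phi(x_2)\big(1 - \Phi(x_3^*)\big)\Big]\right\}^2 \\
&\quad - 2b_2b_3\,\frac{1}{F}\Big[\phi(x_2)\big(1 - \Phi(x_3^*)\big) + \rho_{23}\,\phi(x_3)\big(1 - \Phi(x_2^*)\big)\Big] \cdot \frac{1}{F}\Big[\phi(x_3)\big(1 - \Phi(x_2^*)\big) + \rho_{23}\,\phi(x_2)\big(1 - \Phi(x_3^*)\big)\Big] \\
&\quad + 1 - \frac{\rho_{12}^2 + \rho_{13}^2 - 2\rho_{12}\rho_{13}\rho_{23}}{1 - \rho_{23}^2}.
\end{aligned}$$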

Other conditional sets are derived in a manner similar to that outlined in appendix 1.
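To illustrate how the pieces of this appendix fit together, the following Python sketch assembles the truncated variance of $\varepsilon_1$ from the regression coefficients and the truncated moments of $(\varepsilon_2, \varepsilon_3)$, and compares it with a simulated value. It is a sketch under stated assumptions rather than the implementation used for the results in this paper: the conditioning event is taken to be $\{\varepsilon_2 > h, \varepsilon_3 > k\}$, $F$ is taken to be the probability of that event, unit variances are assumed, and all function names, parameter values and the random seed are illustrative.

```python
import math
import random


def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal cdf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def upper_orthant(h, k, rho, n=4000):
    """F = P(e2 > h, e3 > k), by Simpson quadrature over e2 (assumed definition of F)."""
    s = math.sqrt(1.0 - rho * rho)
    a, b = h, max(h, 0.0) + 10.0
    step = (b - a) / n

    def f(u):
        return phi(u) * (1.0 - Phi((k - rho * u) / s))

    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * step)
    return total * step / 3.0


def truncated_moments(h, k, rho):
    """E(e2), E(e3), E(e2^2), E(e3^2), E(e2*e3), all given e2 > h and e3 > k."""
    s = math.sqrt(1.0 - rho * rho)
    F = upper_orthant(h, k, rho)
    t2 = phi(h) * (1.0 - Phi((k - rho * h) / s))
    t3 = phi(k) * (1.0 - Phi((h - rho * k) / s))
    q = math.sqrt((h * h + k * k - 2.0 * rho * h * k) / (1.0 - rho * rho))
    tq = s / math.sqrt(2.0 * math.pi) * phi(q)
    m2 = (t2 + rho * t3) / F
    m3 = (t3 + rho * t2) / F
    m22 = 1.0 + (h * t2 + rho * rho * k * t3 + rho * tq) / F
    m33 = 1.0 + (k * t3 + rho * rho * h * t2 + rho * tq) / F
    m23 = rho + (rho * h * t2 + rho * k * t3 + tq) / F
    return m2, m3, m22, m33, m23


def coefficients(r12, r13, r23):
    """b2, b3 and Var(v) in eps1 = b2*eps2 + b3*eps3 + v (unit variances)."""
    det = 1.0 - r23 * r23
    b2 = (r12 - r23 * r13) / det
    b3 = (r13 - r23 * r12) / det
    var_v = 1.0 - (r12 * r12 + r13 * r13 - 2.0 * r12 * r13 * r23) / det
    return b2, b3, var_v


def truncated_variance(r12, r13, r23, h, k):
    """V(eps1 | eps2 > h, eps3 > k) from the closed-form truncated moments."""
    b2, b3, var_v = coefficients(r12, r13, r23)
    m2, m3, m22, m33, m23 = truncated_moments(h, k, r23)
    v2 = m22 - m2 * m2
    v3 = m33 - m3 * m3
    c23 = m23 - m2 * m3
    return b2 * b2 * v2 + b3 * b3 * v3 + 2.0 * b2 * b3 * c23 + var_v


def simulated_variance(r12, r13, r23, h, k, n=200_000, seed=12345):
    """Sample variance of eps1 among draws with eps2 > h and eps3 > k."""
    b2, b3, var_v = coefficients(r12, r13, r23)
    s23 = math.sqrt(1.0 - r23 * r23)
    sd_v = math.sqrt(var_v)
    rng = random.Random(seed)
    kept = []
    for _ in range(n):
        e2 = rng.gauss(0.0, 1.0)
        e3 = r23 * e2 + s23 * rng.gauss(0.0, 1.0)
        if e2 > h and e3 > k:
            kept.append(b2 * e2 + b3 * e3 + sd_v * rng.gauss(0.0, 1.0))
    mean = sum(kept) / len(kept)
    return sum((x - mean) ** 2 for x in kept) / (len(kept) - 1)


if __name__ == "__main__":
    args = (0.5, 0.4, 0.3, 0.0, 0.0)  # r12, r13, r23, h, k (illustrative)
    print(truncated_variance(*args), simulated_variance(*args))
```

The simulation draws $\varepsilon_1$ from the regression representation itself, so it checks the truncated moments and their assembly rather than the representation. With $\rho_{12} = \rho_{13} = 0$ the formula returns exactly 1, since truncating $\varepsilon_2$ and $\varepsilon_3$ then carries no information about $\varepsilon_1$.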


Appendix 3. Taylor expansions

We show that the bivariate heckit correction has the same first-order Taylor expansion around $(\rho_{12}, \rho_{13}) = (0, 0)$ as the trivariate conditional probability $P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0)$ of the multivariate probit under the assumption that $\rho_{23} = 0$. We also derive the first-order Taylor expansion of the trivariate heckit.

Starting with the latter:

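$$\begin{aligned}
P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) &\approx \Phi(x_1\beta_1 + \rho_{12}M_{23} + \rho_{13}M_{32}) \\
&\approx \Phi(x_1\beta_1) + \rho_{12}\left.\frac{\partial\Phi(x_1\beta_1 + \rho_{12}M_{23} + \rho_{13}M_{32})}{\partial\rho_{12}}\right|_{(\rho_{12}, \rho_{13}) = (0,0)} + \rho_{13}\left.\frac{\partial\Phi(x_1\beta_1 + \rho_{12}M_{23} + \rho_{13}M_{32})}{\partial\rho_{13}}\right|_{(\rho_{12}, \rho_{13}) = (0,0)} \\
&= \Phi(x_1\beta_1) + \rho_{12}M_{23}\,\phi(x_1\beta_1) + \rho_{13}M_{32}\,\phi(x_1\beta_1).
\end{aligned}$$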

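It is clear that if $\rho_{23} = 0$ (i.e. for the bivariate heckit correction), the same equation is obtained with the M-functions replaced by the standard inverse Mills ratios:

$$\begin{aligned}
P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) &\approx \Phi(x_1\beta_1 + \rho_{12}\lambda_2 + \rho_{13}\lambda_3) \\
&\approx \Phi(x_1\beta_1) + \rho_{12}\,\lambda(x_2\beta_2)\,\phi(x_1\beta_1) + \rho_{13}\,\lambda(x_3\beta_3)\,\phi(x_1\beta_1).
\end{aligned}$$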
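The quality of this first-order expansion can be illustrated numerically. The sketch below is a minimal example in plain Python, assuming the sign convention that for $Y = 0$ the inverse Mills ratio is $-\phi(z)/(1 - \Phi(z))$; the function names and the index values are hypothetical and chosen only for illustration. It compares the heckit probability with its first-order Taylor expansion as the correlations shrink.

```python
import math


def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal cdf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def inv_mills_y0(z):
    """Inverse Mills ratio for the event Y = 0 (assumed sign convention)."""
    return -phi(z) / (1.0 - Phi(z))


def heckit_prob(index, r12, r13, lam2, lam3):
    """Heckit-corrected probability Phi(index + r12*lam2 + r13*lam3)."""
    return Phi(index + r12 * lam2 + r13 * lam3)


def heckit_prob_taylor(index, r12, r13, lam2, lam3):
    """First-order expansion of heckit_prob around (r12, r13) = (0, 0)."""
    return Phi(index) + (r12 * lam2 + r13 * lam3) * phi(index)


if __name__ == "__main__":
    index = 1.0                  # stands in for x1*beta1
    lam2 = inv_mills_y0(0.0)     # stands in for lambda(x2*beta2)
    lam3 = inv_mills_y0(0.5)     # stands in for lambda(x3*beta3)
    for r in (0.4, 0.2, 0.1, 0.05):
        exact = heckit_prob(index, r, r, lam2, lam3)
        approx = heckit_prob_taylor(index, r, r, lam2, lam3)
        print(r, exact, approx, abs(exact - approx))
```

Because the remainder of a first-order expansion is quadratic in the correction term, the printed error should fall by roughly a factor of four each time the correlations are halved.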

Next we look at the trivariate probit probabilities. For simplicity we have only found the first-order Taylor expansion under the assumption that $\rho_{23} = 0$. Using Bayes' formula:

$$P(Y_1, Y_2, Y_3) = P(Y_2, Y_3 \mid Y_1)P(Y_1) = P(Y_2 \mid Y_1)P(Y_3 \mid Y_1)P(Y_1) = P(Y_1, Y_2)P(Y_1, Y_3)/P(Y_1),$$

i.e.

$$P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) = P(Y_1 = 1, Y_2 = 0)P(Y_1 = 1, Y_3 = 0)/\big(P(Y_1 = 1)P(Y_2 = 0)P(Y_3 = 0)\big).$$

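With the latent variable structure we can write these probabilities in the usual way with the normal cdf evaluated at appropriate indices, which for simplicity we denote by $a$'s here. The Taylor expansion of this is:

$$\begin{aligned}
P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) &= \Phi(a_1, a_2)\Phi(a_1, a_3)/\big(\Phi(a_1)\Phi(a_2)\Phi(a_3)\big) \\
&\approx \Phi(a_1)\Phi(a_2)\Phi(a_1)\Phi(a_3)/\big(\Phi(a_1)\Phi(a_2)\Phi(a_3)\big) \\
&\quad + \rho_{12}\left.\frac{\partial\Phi(a_1, a_2)}{\partial\rho_{12}}\,\Phi(a_1, a_3)/\big(\Phi(a_1)\Phi(a_2)\Phi(a_3)\big)\right|_{(\rho_{12}, \rho_{13}) = (0,0)} \\
&\quad + \rho_{13}\left.\frac{\partial\Phi(a_1, a_3)}{\partial\rho_{13}}\,\Phi(a_1, a_2)/\big(\Phi(a_1)\Phi(a_2)\Phi(a_3)\big)\right|_{(\rho_{12}, \rho_{13}) = (0,0)}.
\end{aligned}$$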

Noting that the derivative of the bivariate distribution function with respect to the correlation is

just the bivariate density, we get:

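$$\begin{aligned}
P(Y_1 = 1 \mid Y_2 = 0, Y_3 = 0) &\approx \Phi(a_1) + \rho_{12}\,\frac{\phi(a_1)\phi(a_2)}{\Phi(a_2)} + \rho_{13}\,\frac{\phi(a_1)\phi(a_3)}{\Phi(a_3)} \\
&= \Phi(a_1) + \rho_{12}\,\phi(a_1)\lambda(a_2) + \rho_{13}\,\lambda(a_3)\phi(a_1).
\end{aligned}$$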

i.e. the same as for the bivariate heckit. We have plotted the Taylor approximation along with the true trivariate probabilities and the bivariate and trivariate heckit approximations. Three cases are shown in appendix 4, figures 3-5. In most scenarios the trivariate heckit works well, but this is not the case for the bivariate heckit. Figure 3 shows a case where all approximations are alike. Figure 4 shows a case where the bivariate heckit does not work well whereas the trivariate does (since $\rho_{23}$ is not zero), and figure 5 shows a case where the trivariate heckit does not work well. Of course, this is only selective evidence.
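The step in this appendix that replaces the derivative of the bivariate normal cdf with respect to the correlation by the bivariate density can be verified numerically. The following Python sketch is an illustration only: it evaluates the bivariate cdf by one-dimensional quadrature, differentiates it with a central finite difference, and compares the result with the bivariate density. The function names, step size and evaluation points are assumptions made for this example.

```python
import math


def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def Phi(z):
    """Standard normal cdf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bvn_cdf(a, b, rho, n=4000):
    """P(Z1 <= a, Z2 <= b) for a standard bivariate normal, by Simpson quadrature."""
    s = math.sqrt(1.0 - rho * rho)
    lo = min(a, 0.0) - 10.0  # the normal tail below this point is negligible
    step = (a - lo) / n

    def f(u):
        return phi(u) * Phi((b - rho * u) / s)

    total = f(lo) + f(a)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(lo + i * step)
    return total * step / 3.0


def bvn_pdf(a, b, rho):
    """Standard bivariate normal density with correlation rho."""
    s2 = 1.0 - rho * rho
    quad = (a * a - 2.0 * rho * a * b + b * b) / (2.0 * s2)
    return math.exp(-quad) / (2.0 * math.pi * math.sqrt(s2))


def d_cdf_d_rho(a, b, rho, eps=1e-4):
    """Central finite difference of the bivariate cdf in the correlation."""
    return (bvn_cdf(a, b, rho + eps) - bvn_cdf(a, b, rho - eps)) / (2.0 * eps)


if __name__ == "__main__":
    for a, b, rho in [(0.3, -0.2, 0.0), (0.5, 0.1, 0.4)]:
        print(a, b, rho, d_cdf_d_rho(a, b, rho), bvn_pdf(a, b, rho))
```

At zero correlation the density reduces to the product $\phi(a)\phi(b)$, which is the form used in the expansion.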


Appendix 4. Figures of simulated probabilities.

Figure 3. Example where approximations work well.

Figure 4. Example where triheckit works well, but biheckit does not work well.


Figure 5. Example where triheckit does not work well


Appendix 5. Additional Simulations.

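Table A1. Simulation results. Correlation coefficients.

Low correlations. Columns 2-5: $\rho_{12} = 0$; $\rho_{13} = 0$; $\rho_{23} = 0$. Columns 6-9: $\rho_{12} = .15$; $\rho_{13} = .15$; $\rho_{23} = .15$.

| Estimator | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ |
|---|---|---|---|---|---|---|---|---|
| Tri-Heckit homo | 0.0002 | 0.0015 | 0.0500 | 0.0467 | 0.0255 | 0.0391 | 0.0509 | 0.0514 |
| Tri-Heckit hetero | 0.0034 | -0.0006 | 0.0440 | 0.0411 | 0.0095 | 0.0151 | 0.0396 | 0.0374 |
| OLS | 0.0055 | 0.0016 | 0.1012 | 0.0911 | 0.0814 | 0.0865 | 0.1056 | 0.0965 |
| Bivar heckit | 0.0024 | 0.0002 | 0.0476 | 0.0446 | 0.0137 | 0.0209 | 0.0472 | 0.0432 |
| MLE | 0.0050 | 0.0264 | 0.0350 | 0.0291 | -0.0470 | -0.0420 | 0.0288 | 0.0199 |

Small correlations. Columns 2-5: $\rho_{12} = .3$; $\rho_{13} = .3$; $\rho_{23} = .3$. Columns 6-9: $\rho_{12} = .3$; $\rho_{13} = .3$; $\rho_{23} = .3$.

| Estimator | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ |
|---|---|---|---|---|---|---|---|---|
| Tri-Heckit homo | 0.0562 | 0.0741 | 0.0588 | 0.0560 | 0.0591 | -0.0533 | 0.0608 | 0.0587 |
| Tri-Heckit hetero | -0.0009 | 0.0035 | 0.0340 | 0.0299 | 0.0046 | 0.0006 | 0.0379 | 0.0355 |
| OLS | 0.1375 | 0.1425 | 0.1153 | 0.0992 | 0.1470 | -0.1341 | 0.1201 | 0.1179 |
| Bivar heckit | 0.0126 | 0.0165 | 0.0470 | 0.0377 | 0.0228 | -0.0160 | 0.0500 | 0.0515 |
| MLE | -0.0526 | -0.0604 | 0.0389 | 0.0286 | -0.0466 | 0.0303 | 0.0238 | 0.0217 |

Medium and mixed correlations. Columns 2-5: $\rho_{12} = .3$; $\rho_{13} = .3$; $\rho_{23} = .95$. Columns 6-9: $\rho_{12} = .6$; $\rho_{13} = 0$; $\rho_{23} = .3$.

| Estimator | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ |
|---|---|---|---|---|---|---|---|---|
| Tri-Heckit homo | 0.0432 | 0.0540 | 0.0688 | 0.0629 | 0.2276 | -0.0614 | 0.0830 | 0.0574 |
| Tri-Heckit hetero | -0.0129 | -0.0097 | 0.0418 | 0.0346 | 0.1445 | -0.0597 | 0.0650 | 0.0404 |
| OLS | 0.0304 | 0.0294 | 0.1035 | 0.0905 | 0.3444 | -0.1950 | 0.1313 | 0.1333 |
| Bivar heckit | -0.0675 | -0.0605 | 0.0538 | 0.0463 | 0.1927 | -0.1554 | 0.0626 | 0.0707 |
| MLE | -0.0412* | -0.0242* | 0.0609* | 0.0648* | 0.0073 | -0.0061 | 0.0501 | 0.0289 |

Medium High correlations. Columns 2-5: $\rho_{12} = .6$; $\rho_{13} = .6$; $\rho_{23} = .6$. Columns 6-9: $\rho_{12} = .6$; $\rho_{13} = .6$; $\rho_{23} = .6$.

| Estimator | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ |
|---|---|---|---|---|---|---|---|---|
| Tri-Heckit homo | 0.1706 | 0.1742 | 0.0677 | 0.0721 | 0.1859 | -0.1782 | 0.0748 | 0.0714 |
| Tri-Heckit hetero | 0.0392 | 0.0034 | 0.0572 | 0.0573 | 0.0371 | -0.0369 | 0.0611 | 0.0657 |
| OLS | 0.2170 | 0.1697 | 0.0922 | 0.0837 | 0.2406 | -0.2209 | 0.1024 | 0.0971 |
| Bivar heckit | 0.0215 | -0.0412 | 0.0383 | 0.0362 | 0.0609 | -0.0567 | 0.0479 | 0.0527 |
| MLE | 0.0287 | 0.0127 | 0.0388 | 0.0361 | -0.0037 | -0.0369 | 0.0336 | 0.0180 |

High correlations. Columns 2-5: $\rho_{12} = .8$; $\rho_{13} = .8$; $\rho_{23} = .8$. Columns 6-9: $\rho_{12} = .8$; $\rho_{13} = .8$; $\rho_{23} = .8$.

| Estimator | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ | Bias $\rho_{12}$ | Bias $\rho_{13}$ | MSE $\rho_{12}$ | MSE $\rho_{13}$ |
|---|---|---|---|---|---|---|---|---|
| Tri-Heckit homo | 0.1763 | 0.1659 | 0.0354 | 0.0346 | 0.1675 | -0.1708 | 0.0354 | 0.0379 |
| Tri-Heckit hetero | 0.1490 | 0.0931 | 0.0347 | 0.0321 | 0.1242 | -0.1193 | 0.0361 | 0.0334 |
| OLS | 0.1809 | 0.1514 | 0.0378 | 0.0386 | 0.1768 | -0.1731 | 0.0381 | 0.0423 |
| Bivar heckit | 0.0744 | -0.0141 | 0.0264 | 0.0334 | 0.1102 | -0.0995 | 0.0341 | 0.0348 |
| MLE | 0.1437 | 0.1550 | 0.1586 | 0.1425 | 0.1713 | -0.1837 | 0.1387 | 0.1262 |

Notes: Repetitions = 200; n = 500; the two coefficients indexed 1 and 2 equal 0.5 and -0.5.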

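Table A2. Simulation results for the two coefficients indexed 1 and 2. Endogenous variables. Large samples.

Correlations. Columns 2-5: $\rho_{12} = 0.5$; $\rho_{13} = 0.5$; $\rho_{23} = 0.5$. Columns 6-9: $\rho_{12} = 0.5$; $\rho_{13} = 0.5$; $\rho_{23} = 0.5$.

| Estimator | Bias (1) | Bias (2) | MSE (1) | MSE (2) | Bias (1) | Bias (2) | MSE (1) | MSE (2) |
|---|---|---|---|---|---|---|---|---|
| Probit | 0.6327 | 0.5406 | 0.4031 | 0.2956 | 0.6299 | -0.5335 | 0.3999 | 0.2885 |
| Tri-Heckit homo | -0.1080 | -0.1112 | 0.0368 | 0.0406 | -0.1211 | 0.2102 | 0.0369 | 0.0699 |
| Tri-Heckit hetero | -0.2790 | -0.2882 | 0.0927 | 0.0972 | -0.3009 | -0.1118 | 0.1059 | 0.0186 |
| OLS | -0.1429 | 0.1385 | 0.0401 | 0.0328 | 0.1508 | -0.1623 | 0.0386 | 0.0315 |
| Bivar heckit | -0.2287 | -0.2309 | 0.0790 | 0.0764 | -0.2262 | 0.1082 | 0.0664 | 0.0376 |
| MLE | 0.0392 | 0.0132 | 0.0120 | 0.0062 | 0.0548 | -0.0834 | 0.0149 | 0.0184 |

Notes: Repetitions = 100; n = 2500; the two coefficients indexed 1 and 2 equal 0.5 and -0.5.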

Table A3. Simulation results. Correlation coefficients. Large samples

Correlations

12

13

12

13

Bias

MSE

12 0.5; 13 0.5; 23 0.5