SW 11 - Academics


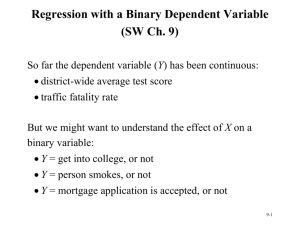

Regression with a Binary Dependent Variable (SW Chapter 11)

Example: Mortgage denial and race
The Boston Fed HMDA data set

The Linear Probability Model

$Y_i = \beta_0 + \beta_1 X_i + u_i$

But:
· What does $\beta_1$ mean when Y is binary? Is $\beta_1 = \Delta Y / \Delta X$?
· What does the line $\beta_0 + \beta_1 X$ mean when Y is binary?
· What does the predicted value $\hat{Y}$ mean when Y is binary? For example, what does $\hat{Y} = 0.26$ mean?

The Linear Probability Model

$Y_i = \beta_0 + \beta_1 X_i + u_i$

$E(Y_i|X_i) = E(\beta_0 + \beta_1 X_i + u_i|X_i) = \beta_0 + \beta_1 X_i$

and $E(Y|X) = \Pr(Y=1|X)$, so

$\hat{Y}$ = the predicted probability that $Y_i = 1$, given X

$\beta_1$ = change in the probability that Y = 1 for a given $\Delta x$:

$\beta_1 = \frac{\Pr(Y = 1|X = x + \Delta x) - \Pr(Y = 1|X = x)}{\Delta x}$

Example: Linear Prob Model

Linear probability model: HMDA data

$\widehat{\text{deny}} = -.080 + .604 \times \text{P/I ratio}$ (n = 2380)
Standard errors: (.032) on the intercept, (.098) on P/I ratio.

· What is the predicted value for P/I ratio = .3?
$\widehat{\Pr}(\text{deny} = 1|\text{P/I ratio} = .3) = -.080 + .604 \times .3 = .101$
· Calculating “effects”: increase P/I ratio from .3 to .4:
$\widehat{\Pr}(\text{deny} = 1|\text{P/I ratio} = .4) = -.080 + .604 \times .4 = .162$

The effect of an increase in P/I ratio from .3 to .4 is to increase the probability of denial by .0604, that is, by 6.04 percentage points.

Linear probability model: HMDA data

$\widehat{\text{deny}} = -.091 + .559 \times \text{P/I ratio} + .177 \times \text{black}$
Standard errors: (.032) on the intercept, (.098) on P/I ratio, (.025) on black.

Predicted probability of denial:
· for a black applicant with P/I ratio = .3:
$\widehat{\Pr}(\text{deny} = 1) = -.091 + .559 \times .3 + .177 \times 1 = .254$
· for a white applicant with P/I ratio = .3:
$\widehat{\Pr}(\text{deny} = 1) = -.091 + .559 \times .3 + .177 \times 0 = .077$
· difference = .177 = 17.7 percentage points
· Still plenty of room for omitted variable bias…

Linear probability model: Application

Cattaneo, Galiani, Gertler, Martinez, and Titiunik (2009). “Housing, Health, and Happiness.” American Economic Journal: Economic Policy 1(1): 75-105.

• What was the impact of Piso Firme, a large-scale Mexican program to help families replace dirt floors with cement floors?
• A pledge by Governor Enrique Martinez y Martinez led the State of Coahuila to offer 50 m² of free cement flooring (\$150 value), starting in 2000, to homeowners with dirt floors.

Cattaneo et al. (AEJ: Economic Policy 2009) “Housing, Health, & Happiness”

X1 = “Program dummy” = 1 if offered Piso Firme.

Interpretations?

Probit and Logit Regression

The probit and logit models satisfy this condition:
· $0 \le \Pr(Y = 1|X) \le 1$ for all levels of X and for all changes in X, such as X = number of kids increasing from 0 to 4

Probit Regression

$\Pr(Y = 1|X) = \Phi(z) = \Phi(\beta_0 + \beta_1 X)$

· $\Phi$ is the standard normal cumulative distribution function
· $z = \beta_0 + \beta_1 X$ is the “z-value” or “z-index” of the probit model

Example: Suppose $\beta_0 = -2$, $\beta_1 = 3$, X = .4, so

$\Pr(Y = 1|X = .4) = \Phi(-2 + 3 \times .4) = \Phi(-0.8) = .2118554$

which Stata returns from:

    . display normal(-0.8)

STATA Example: HMDA data

STATA Example: HMDA data, ctd.

Probit regression with multiple regressors

$\Pr(Y = 1|X_1, X_2) = \Phi(\beta_0 + \beta_1 X_1 + \beta_2 X_2)$

· $\Phi$ is the standard normal cumulative distribution function.
· $z = \beta_0 + \beta_1 X_1 + \beta_2 X_2$ is the “z-value” or “z-index” of the probit model.
· $\beta_1$ is the effect on the z-score of a unit change in $X_1$, holding $X_2$ constant

STATA Example: HMDA data

    . probit deny p_irat black, r;

    Probit estimates                                  Number of obs   =       2380
                                                      Wald chi2(2)    =     118.18
                                                      Prob > chi2     =     0.0000
    Log likelihood = -797.13604                       Pseudo R2       =     0.0859

    ------------------------------------------------------------------------------
                 |               Robust
            deny |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
          p_irat |   2.741637   .4441633     6.17   0.000     1.871092    3.612181
           black |   .7081579   .0831877     8.51   0.000      .545113    .8712028
           _cons |  -2.258738   .1588168   -14.22   0.000    -2.570013   -1.947463
    ------------------------------------------------------------------------------
    . scalar z1 = _b[_cons]+_b[p_irat]*.3+_b[black]*0;
    . display "Pred prob, p_irat=.3, white: " normal(z1);
    Pred prob, p_irat=.3, white: .07546603

NOTES:
_b[_cons] is the estimated intercept (-2.258738)
_b[p_irat] is the coefficient on p_irat (2.741637)
scalar creates a new scalar which is the result of a calculation
display prints the indicated information to the screen

STATA Example: HMDA data

$\widehat{\Pr}(\text{deny} = 1|\text{P/I}, \text{black}) = \Phi(-2.26 + 2.74 \times \text{P/I ratio} + .71 \times \text{black})$
Standard errors: (.16) on the intercept, (.44) on P/I ratio, (.08) on black.

· Is the coefficient on black statistically significant?
· Estimated effect of race for P/I ratio = .3:
$\widehat{\Pr}(\text{deny} = 1|.3, 1) = \Phi(-2.26 + 2.74 \times .3 + .71 \times 1) = .233$
$\widehat{\Pr}(\text{deny} = 1|.3, 0) = \Phi(-2.26 + 2.74 \times .3 + .71 \times 0) = .075$
· Difference in rejection probabilities = .158 (15.8 percentage points)
· Still plenty of room for omitted variable bias…

Probit Regression Marginal Effects

$\Pr(Y = 1|X) = \Phi(z) = \Phi(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3)$

$\frac{d\,\widehat{\Pr}(Y = 1|X_1, X_2, X_3)}{dX_1} = \phi(\hat\beta_0 + \hat\beta_1 X_1 + \hat\beta_2 X_2 + \hat\beta_3 X_3) \times \hat\beta_1$

· $\Phi$ is the standard normal cumulative distribution function
· $\phi$ is the standard normal probability density function
· The marginal effect depends on all the variables, even without interaction effects

Probit Regression Marginal Effects

    . sum pratio;

        Variable |       Obs        Mean    Std. Dev.       Min        Max
    -------------+--------------------------------------------------------
          pratio |      1140    1.027249     .286608    .497207   2.324675

    . scalar meanpratio = r(mean);
    . sum disp_pepsi;

        Variable |       Obs        Mean    Std. Dev.       Min        Max
    -------------+--------------------------------------------------------
      disp_pepsi |      1140    .3640351    .4813697          0          1

    . scalar meandisp_pepsi = r(mean);
    . sum disp_coke;

        Variable |       Obs        Mean    Std. Dev.       Min        Max
    -------------+--------------------------------------------------------
       disp_coke |      1140    .3789474    .4853379          0          1

    . scalar meandisp_coke = r(mean);
    . probit coke pratio disp_coke disp_pepsi;

    Iteration 0:   log likelihood = -783.86028
    Iteration 1:   log likelihood = -711.02196
    Iteration 2:   log likelihood = -710.94858
    Iteration 3:   log likelihood = -710.94858

    Probit regression                                 Number of obs   =       1140
                                                      LR chi2(3)      =     145.82
                                                      Prob > chi2     =     0.0000
    Log likelihood = -710.94858                       Pseudo R2       =     0.0930

    ------------------------------------------------------------------------------
            coke |      Coef.   Std. Err.      z    P>|z|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
          pratio |  -1.145963   .1808833    -6.34   0.000    -1.500487    -.791438
       disp_coke |    .217187   .0966084     2.25   0.025      .027838    .4065359
      disp_pepsi |   -.447297   .1014033    -4.41   0.000    -.6460439   -.2485502
           _cons |    1.10806   .1899592     5.83   0.000     .7357465    1.480373
    ------------------------------------------------------------------------------

Probit Regression Marginal Effects

$\Pr(Y = 1|X) = \Phi(z) = \Phi(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3)$

$\frac{d\,\widehat{\Pr}(Y = 1|X)}{d(\text{pratio})} = \phi(\hat\beta_0 + \hat\beta_1\,\text{pratio} + \hat\beta_2\,\text{dispcoke} + \hat\beta_3\,\text{disppepsi}) \times \hat\beta_1$

· The marginal effect at the means is

$\frac{d\,\widehat{\Pr}(Y = 1|X)}{d(\text{pratio})} = \phi(\hat\beta_0 + \hat\beta_1\,\overline{\text{pratio}} + \hat\beta_2\,\overline{\text{dispcoke}} + \hat\beta_3\,\overline{\text{disppepsi}}) \times \hat\beta_1$

· The average marginal effect is

$\frac{1}{n}\sum_{i=1}^{n} \frac{d\,\widehat{\Pr}(Y = 1|X_i)}{d(\text{pratio})} = \frac{1}{n}\sum_{i=1}^{n} \phi(\hat\beta_0 + \hat\beta_1\,\text{pratio}_i + \hat\beta_2\,\text{dispcoke}_i + \hat\beta_3\,\text{disppepsi}_i) \times \hat\beta_1$

Logit Regression

$\Pr(Y = 1|X) = F(\beta_0 + \beta_1 X)$

F is the cumulative standard logistic distribution function:

$F(\beta_0 + \beta_1 X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 X)}}$

Example: $\beta_0 = -3$, $\beta_1 = 2$, X = .4, so $\beta_0 + \beta_1 X = -3 + 2 \times .4 = -2.2$ and

$\Pr(Y = 1|X = .4) = 1/(1 + e^{-(-2.2)}) = .0998$

Why bother with logit if we have probit?
· Historically, logit was more convenient computationally
· In practice, logit and probit are very similar

STATA Example: HMDA data

Predicted probabilities from the estimated probit and logit models are (as usual) very close in this application.
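The worked probit and logit numbers above can be cross-checked outside Stata. The following is a minimal Python sketch, not part of the original slides: the helper names (`normal_cdf`, `normal_pdf`, `logistic_cdf`) are illustrative assumptions, and the coefficients and sample means are copied from the Stata output shown in these notes.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, Phi(z), computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def normal_pdf(z):
    """Standard normal density, phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def logistic_cdf(z):
    """Cumulative standard logistic distribution function."""
    return 1.0 / (1.0 + math.exp(-z))

# Probit example from the notes: b0 = -2, b1 = 3, X = .4
probit_example = normal_cdf(-2 + 3 * 0.4)

# Logit example from the notes: b0 = -3, b1 = 2, X = .4
logit_example = logistic_cdf(-3 + 2 * 0.4)

# Probit coefficients and sample means copied from the coke/pepsi output
b_cons, b_pratio, b_disp_coke, b_disp_pepsi = 1.10806, -1.145963, 0.217187, -0.447297
mean_pratio, mean_disp_coke, mean_disp_pepsi = 1.027249, 0.3789474, 0.3640351

# Marginal effect of pratio at the means: phi(z-index at the means) * b_pratio
z_at_means = (b_cons + b_pratio * mean_pratio
              + b_disp_coke * mean_disp_coke
              + b_disp_pepsi * mean_disp_pepsi)
me_at_means = normal_pdf(z_at_means) * b_pratio

print(f"Probit Pr(Y=1|X=.4): {probit_example:.7f}")
print(f"Logit  Pr(Y=1|X=.4): {logit_example:.4f}")
print(f"Probit marginal effect of pratio at means: {me_at_means:.4f}")
```

The marginal effect at the means should land close to the probit value of -.4520 reported in the comparison table of these notes. The average marginal effect would need the individual observations, so it is not reproduced here.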
Logit Regression Marginal Effects

$\Pr(Y = 1|X) = F(z) = F(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3)$

$\frac{d\,\widehat{\Pr}(Y = 1|X)}{d(\text{pratio})} = f(\hat\beta_0 + \hat\beta_1\,\text{pratio} + \hat\beta_2\,\text{dispcoke} + \hat\beta_3\,\text{disppepsi}) \times \hat\beta_1$

· F is the logistic cumulative distribution function
· f is the logistic probability density function:
$f(x) = \frac{e^{-x}}{(1 + e^{-x})^2}, \quad -\infty < x < \infty$
· The marginal effect depends on all the variables, even without interaction effects

Comparison of Marginal Effects

                                                   LPM         Probit              Logit
    Marginal Effect at Means for Price Ratio       -.4008      -.4520              -.4905
                                                   (.0613)     (.0712)             (.0773)
                                                               via nlcom           via nlcom
    Average Marginal Effect of Price Ratio         -.4008      -.4096              -.4332
                                                   (.0613)     (beyond eco205)     (beyond eco205)
    Marginal Effect at Means for Coke              .0771       .0856               .0864
      display dummy                                (.0343)     (.0381)             (.0390)
                                                               via nlcom           via nlcom
    Average Marginal Effect for Coke               .0771       .0776               .0763
      display dummy                                (.0343)     (beyond eco205)     (beyond eco205)

Probit model: Application

Arcidiacono and Vigdor (2010). “Does the River Spill Over? Estimating the Economic Returns to Attending a Racially Diverse College.” Economic Inquiry 48(3): 537–557.

• Does “diversity capital” matter, and does minority representation increase it?
• Does diversity improve postgraduate outcomes of non-minority students?
• College & Beyond survey, students starting college in 1976

Arcidiacono & Vigdor (EI, 2010)

Logit model: Application

Bodvarsson & Walker (2004). “Do Parental Cash Transfers Weaken Performance in College?” Economics of Education Review 23: 483–495.

• When parents pay for tuition and books, does this undermine the incentive to do well?
• University of Nebraska at Lincoln and Washburn University in Topeka, KS, 2001-02 academic year

Bodvarsson & Walker (EconEduR, 2004)

Estimation and Inference in Probit (and Logit) Models

Probit model: $\Pr(Y = 1|X) = \Phi(\beta_0 + \beta_1 X)$

· Estimation and inference
· How can we estimate $\beta_0$ and $\beta_1$?
· What is the sampling distribution of the estimators?
· Why can we use the usual methods of inference?

Probit estimation by maximum likelihood

Special case: probit MLE with no X

The likelihood is the joint density, treated as a function of the unknown parameters, which here is p:

$f(p; Y_1, \ldots, Y_n) = p^{\sum_{i=1}^{n} Y_i} (1 - p)^{\,n - \sum_{i=1}^{n} Y_i}$

The MLE maximizes the log likelihood:

$\ln[f(p; Y_1, \ldots, Y_n)] = \left(\sum_{i=1}^{n} Y_i\right) \ln(p) + \left(n - \sum_{i=1}^{n} Y_i\right) \ln(1 - p)$

Set the derivative equal to 0:

$\frac{d \ln f(p; Y_1, \ldots, Y_n)}{dp} = \left(\sum_{i=1}^{n} Y_i\right) \frac{1}{p} + \left(n - \sum_{i=1}^{n} Y_i\right) \left(\frac{-1}{1 - p}\right) = 0$

Solving for p yields the MLE: $\hat{p}^{MLE} = \frac{1}{n}\sum_{i=1}^{n} Y_i = \bar{Y}$, the fraction of 1's in the sample.

The MLE in the “no-X” case (Bernoulli distribution), ctd.:

· The theory of maximum likelihood estimation says that $\hat{p}^{MLE}$ is the most efficient estimator of p, of all possible estimators, at least for large n.
  o much stronger than the Gauss-Markov theorem
· STATA note: to emphasize the large-n requirement, the printout calls the t-statistic the z-statistic, and instead of the F-statistic it reports a chi-squared statistic (= q*F).
· Now we extend this to probit, in which the probability is conditional on X: the MLE of the probit coefficients.

The probit likelihood with one X

The probit likelihood function:

The Probit MLE, ctd.

The logit likelihood with one X

· The only difference between probit and logit is the functional form used for the probability: $\Phi$ is replaced by the cumulative logistic function (see SW Appendix 11.2)
· As with probit,
  · $\hat\beta_0^{MLE}$, $\hat\beta_1^{MLE}$ are consistent
  · $\hat\beta_0^{MLE}$, $\hat\beta_1^{MLE}$ are normally distributed in large samples
  · standard errors are computed by STATA
  · testing and confidence intervals proceed as usual

Measures of fit for logit and probit

Application to the Boston HMDA Data (SW Section 11.4)

· Mortgages (home loans) are an essential part of buying a home.
· Is there differential access to home loans by race?
· If two otherwise identical individuals, one white and one black, applied for a home loan, is there a difference in the probability of denial?

The HMDA Data Set

· Data on individual characteristics, property characteristics, and loan denial/acceptance
· The mortgage application process circa 1990-1991:
  · Go to a bank or mortgage company
  · Fill out an application (personal and financial info)
  · Meet with the loan officer
· Then the loan officer decides, by law in a race-blind way. Presumably, the bank wants to make profitable loans, and the loan officer doesn’t want to originate defaults.

The loan officer’s decision

· Loan officer uses key financial variables:
  · P/I ratio
  · housing expense-to-income ratio
  · loan-to-value ratio
  · personal credit history
· The decision rule is nonlinear:
  · loan-to-value ratio > 80%
  · loan-to-value ratio > 95% (what happens in default?)
  · credit score

Regression specifications

Table 11.2, ctd.

Summary of Empirical Results
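
As a numerical companion to the “no-X” maximum likelihood case earlier in these notes, the following is a minimal Python sketch, not taken from the slides. It evaluates the Bernoulli log likelihood on a grid and compares the maximizer with the sample fraction of 1's. The data in `ys` are hypothetical, and the function names are illustrative assumptions.

```python
import math

def bernoulli_log_likelihood(p, ys):
    """ln f(p; Y_1..Y_n) = (sum Y_i) ln(p) + (n - sum Y_i) ln(1 - p)."""
    s = sum(ys)
    n = len(ys)
    return s * math.log(p) + (n - s) * math.log(1.0 - p)

def bernoulli_mle(ys):
    """Closed-form maximizer of the log likelihood: the sample fraction of 1's."""
    return sum(ys) / len(ys)

# Hypothetical binary outcomes (for example, 1 = denied, 0 = approved)
ys = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]

p_hat = bernoulli_mle(ys)

# Brute-force check: search a fine grid of p values strictly inside (0, 1)
grid = [k / 1000 for k in range(1, 1000)]
p_grid = max(grid, key=lambda p: bernoulli_log_likelihood(p, ys))

print(f"closed-form MLE: {p_hat:.3f}")
print(f"grid-search maximizer: {p_grid:.3f}")
```

The grid search is only a sanity check on the closed-form result. Probit and logit have no closed-form maximizer, which is why software maximizes their likelihoods iteratively, as the iteration log in the Stata output shows.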