The Least Squares Assumptions in the Multiple Regression Model


Cross-Sectional, Time Series, and Panel Data

Cross-sectional data consists of multiple entities observed at a single time

period.

Time series data consists of a single entity observed at multiple time

periods.

Panel data (also known as longitudinal data) consists of multiple entities,

where each entity is observed at two or more periods.

Expected Value and the Mean

Suppose the random variable $Y$ takes on $k$ possible values $y_1, \ldots, y_k$, where $y_1$ denotes the first value, $y_2$ denotes the second value, and so on, and the probability that $Y$ takes on $y_1$ is $p_1$, the probability that $Y$ takes on $y_2$ is $p_2$, and so forth. The expected value of $Y$, denoted $E(Y)$, is

$$E(Y) = y_1 p_1 + y_2 p_2 + \cdots + y_k p_k = \sum_{i=1}^{k} y_i p_i .$$

The expected value of $Y$ is also called the mean of $Y$ or the expectation of $Y$ and is denoted $\mu_Y$.

Variance and Standard Deviation

The variance of the discrete random variable $Y$, denoted $\sigma_Y^2$, is

$$\sigma_Y^2 = \operatorname{var}(Y) = E[(Y - \mu_Y)^2] = \sum_{i=1}^{k} (y_i - \mu_Y)^2 p_i .$$

The standard deviation of $Y$ is $\sigma_Y$, the square root of the variance. The units of the standard deviation are the same as the units of $Y$.
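The two definitions above translate directly into code. The following is a minimal sketch; the function name `discrete_moments` and the fair-die example are illustrative assumptions, not part of the original notes.

```python
import math

def discrete_moments(values, probs):
    """Return (mean, variance, standard deviation) of a discrete random variable."""
    if abs(sum(probs) - 1.0) > 1e-12:
        raise ValueError("probabilities must sum to 1")
    # E(Y) = sum of y_i * p_i
    mean = sum(y * p for y, p in zip(values, probs))
    # var(Y) = sum of (y_i - mean)^2 * p_i
    var = sum((y - mean) ** 2 * p for y, p in zip(values, probs))
    return mean, var, math.sqrt(var)

# Hypothetical example: a fair six-sided die.
die_values = [1, 2, 3, 4, 5, 6]
die_probs = [1 / 6] * 6
mu_Y, var_Y, sd_Y = discrete_moments(die_values, die_probs)
print(mu_Y, var_Y, sd_Y)
```

For the die, the mean is 3.5 and the variance is 35/12, so the standard deviation is expressed in the same units (pips) as the outcome itself.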

Means, Variances, and Covariances of Sums of Random Variables

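Let $X$, $Y$, and $V$ be random variables, let $\mu_X$ and $\sigma_X^2$ be the mean and variance of $X$, let $\sigma_{XY}$ be the covariance between $X$ and $Y$ (and so forth for the other variables), and let $a$, $b$, and $c$ be constants. The following facts follow from the definitions of the mean, variance, and covariance:

$$E(a + bX + cY) = a + b\mu_X + c\mu_Y,$$

$$\operatorname{var}(a + bY) = b^2 \sigma_Y^2,$$

$$\operatorname{var}(aX + bY) = a^2 \sigma_X^2 + 2ab\,\sigma_{XY} + b^2 \sigma_Y^2,$$

$$E(Y^2) = \sigma_Y^2 + \mu_Y^2,$$

$$\operatorname{cov}(a + bX + cV,\, Y) = b\,\sigma_{XY} + c\,\sigma_{VY},$$

$$E(XY) = \sigma_{XY} + \mu_X \mu_Y, \text{ and}$$

$$|\operatorname{corr}(X, Y)| \le 1 \quad \text{and} \quad |\sigma_{XY}| \le \sqrt{\sigma_X^2 \sigma_Y^2}.$$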
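Several of these facts can be checked numerically by treating a small data set as an equally weighted population. This is only a sketch: the data, the constants `a` and `b`, and the helper names `mean` and `cov` are hypothetical.

```python
def mean(xs):
    return sum(xs) / len(xs)

def cov(xs, ys):
    """Population-style covariance (divides by n) of two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data, treated as an equally weighted population.
X = [1.0, 2.0, 4.0, 7.0]
Y = [3.0, 1.0, 5.0, 6.0]
a, b = 2.0, -3.0

# var(aX + bY) versus a^2 var(X) + 2ab cov(X, Y) + b^2 var(Y)
Z = [a * x + b * y for x, y in zip(X, Y)]
var_sum_direct = cov(Z, Z)
var_sum_formula = a**2 * cov(X, X) + 2 * a * b * cov(X, Y) + b**2 * cov(Y, Y)

# E(XY) versus cov(X, Y) + mean(X) * mean(Y)
e_xy_direct = mean([x * y for x, y in zip(X, Y)])
e_xy_formula = cov(X, Y) + mean(X) * mean(Y)

# |cov(X, Y)| <= sqrt(var(X) * var(Y))
cauchy_schwarz_holds = abs(cov(X, Y)) <= (cov(X, X) * cov(Y, Y)) ** 0.5

print(var_sum_direct, var_sum_formula)
print(e_xy_direct, e_xy_formula)
print(cauchy_schwarz_holds)
```

Each pair of printed numbers should agree up to floating-point rounding, because the identities hold for any distribution, including the empirical one used here.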

Estimators and Estimates

An estimator is a function of a sample of data to be drawn randomly from a

population. An estimate is the numerical value of the estimator when it is actually

computed using data from a specific sample. An estimator is a random variable

because of randomness in selecting the sample, while an estimate is a

nonrandom number.

Bias, Consistency, and Efficiency

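Let $\hat{\mu}_Y$ be an estimator of $\mu_Y$. Then:

The bias of $\hat{\mu}_Y$ is $E(\hat{\mu}_Y) - \mu_Y$.

$\hat{\mu}_Y$ is an unbiased estimator of $\mu_Y$ if $E(\hat{\mu}_Y) = \mu_Y$.

$\hat{\mu}_Y$ is a consistent estimator of $\mu_Y$ if $\hat{\mu}_Y \xrightarrow{p} \mu_Y$.

Let $\tilde{\mu}_Y$ be another estimator of $\mu_Y$, and suppose that both $\hat{\mu}_Y$ and $\tilde{\mu}_Y$ are unbiased. Then $\hat{\mu}_Y$ is said to be more efficient than $\tilde{\mu}_Y$ if $\operatorname{var}(\hat{\mu}_Y) < \operatorname{var}(\tilde{\mu}_Y)$.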
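A small simulation can make bias and efficiency concrete. The sketch below compares two unbiased estimators of the mean: the sample average and the first observation alone. The population (normal with mean 5 and standard deviation 2), the sample size, and the number of replications are assumptions chosen for illustration.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible
MU, SIGMA, N, REPS = 5.0, 2.0, 25, 5000

mean_estimates = []        # estimator 1: the sample average
first_obs_estimates = []   # estimator 2: the first observation only
for _ in range(REPS):
    sample = [random.gauss(MU, SIGMA) for _ in range(N)]
    mean_estimates.append(statistics.fmean(sample))
    first_obs_estimates.append(sample[0])

# Simulated bias: average of the estimates minus the true mean.
bias_mean = statistics.fmean(mean_estimates) - MU
bias_first = statistics.fmean(first_obs_estimates) - MU

# Simulated sampling variance of each estimator.
var_mean = statistics.pvariance(mean_estimates)
var_first = statistics.pvariance(first_obs_estimates)

print(bias_mean, bias_first)
print(var_mean, var_first)
```

Both simulated biases should be close to zero, while the variance of the sample average should be far smaller (in theory $\sigma^2/n$ against $\sigma^2$), which is what "more efficient" means. Because the variance of the sample average shrinks as $n$ grows, it is also consistent, whereas the first observation alone is not.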

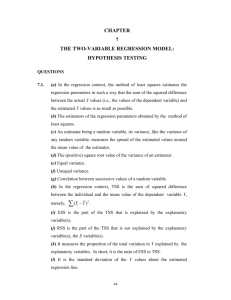

The Terminology of Hypothesis Testing

The prespecified rejection probability of a statistical hypothesis test under the null

hypothesis is the significance level of the test. The critical value of the test

statistic is the value of the statistic for which the test just rejects the null

hypothesis at the given significance level. The set of values of the test statistic for

which the test rejects the null is the rejection region, and the set of values of the

test statistic for which it does not reject the null hypothesis is the acceptance

region. The probability that the test actually incorrectly rejects the null hypothesis

when the null is true is the size of the test, and the probability that the test

correctly rejects the null hypothesis when the alternative is true is the power of

the test.

The p-value is the probability of obtaining a test statistic, by random sampling

variation, at least as adverse to the null hypothesis value as is the statistic

actually observed, assuming the null hypothesis is correct. Equivalently, the p-value is the smallest significance level at which you can reject the null hypothesis.

Example: Testing the Hypothesis $E(Y) = \mu_{Y,0}$ Against the Alternative $E(Y) \ne \mu_{Y,0}$

Compute the standard error of $\bar{Y}$, $SE(\bar{Y})$.

Compute the t-statistic:

$$t = \frac{\bar{Y} - \mu_{Y,0}}{SE(\bar{Y})}.$$

Reject the null hypothesis at the 5% significance level if $|t| > 1.96$.
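The three steps can be sketched as follows. The notes do not spell out the standard error, so this sketch assumes the usual formula $SE(\bar{Y}) = s_Y / \sqrt{n}$ and uses the large-sample normal approximation for the p-value; the function name and the data are hypothetical.

```python
import math
import statistics

def t_test_mean(sample, mu_0):
    """Large-sample t-statistic and two-sided p-value for H0: E(Y) = mu_0."""
    n = len(sample)
    y_bar = statistics.fmean(sample)
    s_y = statistics.stdev(sample)       # sample standard deviation (divides by n - 1)
    se = s_y / math.sqrt(n)              # assumed formula for SE(Y-bar)
    t = (y_bar - mu_0) / se
    # Two-sided p-value 2 * (1 - Phi(|t|)) under the standard normal approximation.
    p_value = math.erfc(abs(t) / math.sqrt(2))
    return t, p_value

# Hypothetical sample; eight observations are far too few for the large-sample
# approximation to be reliable and are used only to keep the arithmetic visible.
sample = [4.1, 5.3, 6.0, 4.8, 5.5, 5.1, 4.7, 5.9]
t_stat, p_value = t_test_mean(sample, mu_0=5.0)
reject_at_5_percent = abs(t_stat) > 1.96
print(t_stat, p_value, reject_at_5_percent)
```

Here the t-statistic is well inside the acceptance region, so the null hypothesis that the mean equals 5 is not rejected at the 5% level.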

Confidence Interval for the Population Mean

A 95% two-sided confidence interval for $\mu_Y$ is an interval constructed so that it contains the true value of $\mu_Y$ in 95% of its applications. When the sample size $n$ is large, 90%, 95%, and 99% confidence intervals for $\mu_Y$ are:

90% confidence interval for $\mu_Y = \{\bar{Y} \pm 1.64\,SE(\bar{Y})\}$.

95% confidence interval for $\mu_Y = \{\bar{Y} \pm 1.96\,SE(\bar{Y})\}$.

99% confidence interval for $\mu_Y = \{\bar{Y} \pm 2.58\,SE(\bar{Y})\}$.
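As a brief illustration, the intervals can be computed as below. The sketch assumes $SE(\bar{Y}) = s_Y / \sqrt{n}$ and uses 2.58 for the 99% critical value (the normal quantile 2.576 rounded to two decimals); the function name and data are hypothetical.

```python
import math
import statistics

# Large-sample normal critical values for two-sided intervals.
Z_CRITICAL = {90: 1.64, 95: 1.96, 99: 2.58}

def confidence_interval(sample, level=95):
    """Return (lower, upper) bounds of a large-sample interval for the mean."""
    y_bar = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(len(sample))  # assumed SE formula
    half_width = Z_CRITICAL[level] * se
    return y_bar - half_width, y_bar + half_width

# Hypothetical sample (small, for illustration only).
sample = [4.1, 5.3, 6.0, 4.8, 5.5, 5.1, 4.7, 5.9]
for level in (90, 95, 99):
    print(level, confidence_interval(sample, level))
```

The interval widens as the confidence level rises, since a larger critical value multiplies the same standard error.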

Terminology for the Linear Regression Model with a Single Regressor

The linear regression model is:

$$Y_i = \beta_0 + \beta_1 X_i + u_i,$$

where:

the subscript $i$ runs over observations, $i = 1, \ldots, n$;

$Y_i$ is the dependent variable, the regressand, or simply the left-hand variable;

$X_i$ is the independent variable, the regressor, or simply the right-hand variable;

$\beta_0 + \beta_1 X_i$ is the population regression line or population regression function;

$\beta_0$ is the intercept of the population regression line;

$\beta_1$ is the slope of the population regression line; and

$u_i$ is the error term.

The OLS Estimator, Predicted Values, and Residuals

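The OLS estimators of the slope $\beta_1$ and the intercept $\beta_0$ are:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2} = \frac{s_{XY}}{s_X^2},$$

$$\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}.$$

The OLS predicted values $\hat{Y}_i$ and residuals $\hat{u}_i$ are:

$$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_i, \quad i = 1, \ldots, n,$$

$$\hat{u}_i = Y_i - \hat{Y}_i, \quad i = 1, \ldots, n.$$

The estimated intercept ($\hat{\beta}_0$), slope ($\hat{\beta}_1$), and residuals ($\hat{u}_i$) are computed from a sample of $n$ observations of $X_i$ and $Y_i$, $i = 1, \ldots, n$. These are estimates of the unknown true population intercept ($\beta_0$), slope ($\beta_1$), and error term ($u_i$).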
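The OLS formulas for a single regressor can be sketched in a few lines. The function name `ols_simple` and the five data points are hypothetical and serve only to show the computation.

```python
def ols_simple(x, y):
    """Return (beta0_hat, beta1_hat, predicted values, residuals) for Y on X."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sums of cross-products and squares; the (n - 1) divisors of the sample
    # covariance and sample variance cancel in the ratio.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1_hat = s_xy / s_xx
    beta0_hat = y_bar - beta1_hat * x_bar
    y_hat = [beta0_hat + beta1_hat * xi for xi in x]
    u_hat = [yi - yh for yi, yh in zip(y, y_hat)]
    return beta0_hat, beta1_hat, y_hat, u_hat

# Hypothetical data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
beta0_hat, beta1_hat, y_hat, u_hat = ols_simple(x, y)
print(beta0_hat, beta1_hat)
print(u_hat)
```

For these data the slope is 1.99 and the intercept is 0.05 (up to rounding). The residuals sum to zero and are uncorrelated with the regressor, which is a mechanical property of OLS with an intercept.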

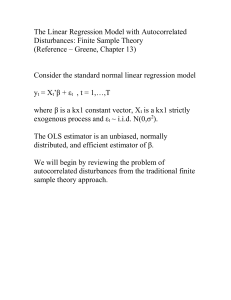

The Least Squares Assumptions

$$Y_i = \beta_0 + \beta_1 X_i + u_i, \quad i = 1, \ldots, n,$$

where:

1. The error term $u_i$ has conditional mean zero given $X_i$, that is, $E(u_i \mid X_i) = 0$;

2. $(X_i, Y_i)$, $i = 1, \ldots, n$, are independent and identically distributed (i.i.d.) draws from their joint distribution; and

3. $(X_i, u_i)$ have nonzero finite fourth moments.

General Form of the t-Statistic

In general, the t-statistic has the form:

$$t = \frac{\text{estimator} - \text{hypothesized value}}{\text{standard error of the estimator}}.$$

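Testing the Hypothesis $\beta_1 = \beta_{1,0}$ Against the Alternative $\beta_1 \ne \beta_{1,0}$

1. Compute the standard error of $\hat{\beta}_1$, $SE(\hat{\beta}_1) = \sqrt{\hat{\sigma}^2_{\hat{\beta}_1}}$, where

$$\hat{\sigma}^2_{\hat{\beta}_1} = \frac{1}{n} \times \frac{\dfrac{1}{n-2} \sum_{i=1}^{n} (X_i - \bar{X})^2 \hat{u}_i^2}{\left[ \dfrac{1}{n} \sum_{i=1}^{n} (X_i - \bar{X})^2 \right]^2}.$$

2. Compute the t-statistic:

$$t = \frac{\hat{\beta}_1 - \beta_{1,0}}{SE(\hat{\beta}_1)}.$$

3. Compute the p-value. Reject the hypothesis at the 5% significance level if the p-value is less than 0.05 or, equivalently, if the absolute value of the t-statistic is greater than 1.96.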
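The three steps can be sketched in code as follows. The function name `slope_t_test` and the data are hypothetical, and the p-value relies on the large-sample normal approximation, which a sample of five observations does not justify; the data are kept small only so the computation is easy to follow.

```python
import math

def slope_t_test(x, y, beta1_null=0.0):
    """Return (beta1_hat, SE, t, p-value) for H0: beta1 = beta1_null."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    dx = [xi - x_bar for xi in x]
    s_xx = sum(d * d for d in dx)
    beta1_hat = sum(d * (yi - y_bar) for d, yi in zip(dx, y)) / s_xx
    beta0_hat = y_bar - beta1_hat * x_bar
    u_hat = [yi - beta0_hat - beta1_hat * xi for xi, yi in zip(x, y)]

    # Step 1: variance estimator of beta1_hat, following the formula term by term.
    numerator = sum(d**2 * u**2 for d, u in zip(dx, u_hat)) / (n - 2)
    denominator = (s_xx / n) ** 2
    var_beta1 = (1 / n) * numerator / denominator
    se = math.sqrt(var_beta1)

    # Step 2: t-statistic.
    t = (beta1_hat - beta1_null) / se

    # Step 3: two-sided p-value from the standard normal approximation.
    p_value = math.erfc(abs(t) / math.sqrt(2))
    return beta1_hat, se, t, p_value

# Hypothetical data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
b1, se_b1, t_b1, p_b1 = slope_t_test(x, y, beta1_null=0.0)
print(b1, se_b1, t_b1, p_b1)
print("reject at 5%:", abs(t_b1) > 1.96)
```

With these data the slope is estimated very precisely, so the hypothesis that the slope is zero is rejected.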

Confidence Interval for $\beta_1$

A 95% two-sided confidence interval for $\beta_1$ is an interval that contains the true value of $\beta_1$ with a 95% probability, that is, it contains the true value of $\beta_1$ in 95% of all possible randomly drawn samples. Equivalently, it is also the set of values of $\beta_1$ that cannot be rejected by a 5% two-sided hypothesis test.

95% confidence interval for $\beta_1 = (\hat{\beta}_1 - 1.96\,SE(\hat{\beta}_1),\ \hat{\beta}_1 + 1.96\,SE(\hat{\beta}_1))$.

The $R^2$

The regression $R^2$ is the fraction of the sample variance of $Y_i$ explained by $X_i$. The definitions of the predicted value and the residual (Key concept 10) allow us to write the dependent variable $Y_i$ as the sum of the predicted value $\hat{Y}_i$ plus the residual $\hat{u}_i$:

$$Y_i = \hat{Y}_i + \hat{u}_i.$$

Define the explained sum of squares as

$$ESS = \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2$$

and the total sum of squares as

$$TSS = \sum_{i=1}^{n} (Y_i - \bar{Y})^2,$$

so that the sum of squared residuals is

$$SSR = \sum_{i=1}^{n} \hat{u}_i^2 = TSS - ESS.$$

The $R^2$ is the ratio of the explained sum of squares to the total sum of squares:

$$R^2 = \frac{ESS}{TSS} = 1 - \frac{SSR}{TSS}.$$

Note that the $R^2$ ranges between 0 and 1.

The Standard Error of the Regression

The standard error of the regression (SER) is an estimator of the standard deviation of the regression error $u_i$. Because the regression errors $u_1, \ldots, u_n$ are not observed, the SER is computed using the OLS residuals $\hat{u}_1, \ldots, \hat{u}_n$. The formula for the SER is

$$SER = s_{\hat{u}}, \quad \text{where} \quad s_{\hat{u}}^2 = \frac{1}{n-2} \sum_{i=1}^{n} \hat{u}_i^2 = \frac{SSR}{n-2}.$$
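The sums of squares, the $R^2$, and the SER for a single-regressor model can be computed together. The following is a sketch with a hypothetical function name and hypothetical data; the divisor $n - 2$ applies to the single-regressor case described above.

```python
import math

def fit_summary(x, y):
    """Return ESS, TSS, SSR, R^2 and SER for an OLS regression of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta1_hat = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    beta0_hat = y_bar - beta1_hat * x_bar
    y_hat = [beta0_hat + beta1_hat * xi for xi in x]

    ess = sum((yh - y_bar) ** 2 for yh in y_hat)           # explained sum of squares
    tss = sum((yi - y_bar) ** 2 for yi in y)               # total sum of squares
    ssr = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))  # sum of squared residuals
    return {
        "ESS": ess,
        "TSS": tss,
        "SSR": ssr,
        "R2": ess / tss,
        "SER": math.sqrt(ssr / (n - 2)),
    }

# Hypothetical data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
summary = fit_summary(x, y)
print(summary)
```

The output illustrates the decomposition $TSS = ESS + SSR$, so both expressions for the $R^2$ give the same number. The SER is in the units of $Y$, while the $R^2$ is unit-free.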

Omitted Variable Bias in Regression with a Single Regressor

Omitted variable bias is the bias in the OLS estimator that arises when the regressor, $X$, is correlated with an omitted variable. For omitted variable bias to occur, two conditions must be true:

1. $X$ is correlated with the omitted variable; and

2. the omitted variable is a determinant of the dependent variable $Y$.

(The Mozart Effect: Omitted Variable Bias?)
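Both conditions can be illustrated with a constructed example. In this sketch, $Y$ depends on $X$ and on a second variable $W$ that is then left out of the regression. The data-generating process, the coefficient values, and the variable names are assumptions made for illustration. The comparison uses the standard result, not stated in these notes, that the slope from the short regression equals the true slope plus the coefficient on the omitted variable times the slope from regressing the omitted variable on $X$.

```python
def slope(x, y):
    """OLS slope from regressing y on x with an intercept."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    return s_xy / s_xx

# Hypothetical model: Y = 1 + BETA1 * X + BETA2 * W (no other error, for clarity).
BETA1, BETA2 = 2.0, 3.0
X = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
W_corr = [0.5, 1.5, 1.0, 2.5, 2.0, 3.5]       # correlated with X
W_uncorr = [1.0, -1.0, 0.0, 0.0, -1.0, 1.0]   # zero sample covariance with X

Y_corr = [1.0 + BETA1 * x + BETA2 * w for x, w in zip(X, W_corr)]
Y_uncorr = [1.0 + BETA1 * x + BETA2 * w for x, w in zip(X, W_uncorr)]

# Regress Y on X alone, omitting W.
short_corr = slope(X, Y_corr)
short_uncorr = slope(X, Y_uncorr)

# Slope implied by the omitted-variable formula.
implied = BETA1 + BETA2 * slope(X, W_corr)

print(short_corr, implied)   # biased away from BETA1, and equal to each other
print(short_uncorr)          # equals BETA1: condition 1 fails, so no bias
```

When $W$ is correlated with $X$, the estimated slope absorbs part of the effect of $W$. When $W$ is uncorrelated with $X$, omitting it leaves the slope estimate unchanged even though $W$ still determines $Y$.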

The Multiple Regression Model

The multiple regression model is

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_k X_{ki} + u_i, \quad i = 1, \ldots, n,$$

where

a. $Y_i$ is the $i$th observation on the dependent variable; $X_{1i}, X_{2i}, \ldots, X_{ki}$ are the $i$th observations on each of the $k$ regressors; and $u_i$ is the error term.

b. The population regression line is the relationship that holds between $Y$ and the $X$'s on average in the population:

$$E(Y \mid X_{1i} = x_1, X_{2i} = x_2, \ldots, X_{ki} = x_k) = \beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k.$$

c. $\beta_1$ is the slope coefficient on $X_1$, $\beta_2$ is the slope coefficient on $X_2$, etc. The coefficient $\beta_1$ is the expected change in $Y_i$ resulting from changing $X_{1i}$ by one unit, holding constant $X_{2i}, \ldots, X_{ki}$. The coefficients on the other $X$'s are interpreted similarly.

d. The intercept $\beta_0$ is the expected value of $Y$ when all the $X$'s equal zero. The intercept can be thought of as the coefficient on a regressor, $X_{0i}$, that equals one for all $i$.

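The OLS Estimators, Predicted Values, and Residuals in the Multiple Regression Model

The OLS estimators $\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_k$ are the values of $b_0, b_1, \ldots, b_k$ that minimize the sum of squared prediction mistakes $\sum_{i=1}^{n} (Y_i - b_0 - b_1 X_{1i} - b_2 X_{2i} - \cdots - b_k X_{ki})^2$. The OLS predicted values $\hat{Y}_i$ and residuals $\hat{u}_i$ are:

$$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_{1i} + \hat{\beta}_2 X_{2i} + \cdots + \hat{\beta}_k X_{ki}, \quad i = 1, \ldots, n, \text{ and}$$

$$\hat{u}_i = Y_i - \hat{Y}_i, \quad i = 1, \ldots, n.$$

The OLS estimators $\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_k$ and residuals $\hat{u}_i$ are computed from a sample of $n$ observations of $(X_{1i}, \ldots, X_{ki}, Y_i)$, $i = 1, \ldots, n$. These are estimators of the unknown true coefficients $\beta_0, \beta_1, \ldots, \beta_k$ and error term, $u_i$.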

The Least Squares Assumptions in the Multiple Regression Model

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_k X_{ki} + u_i, \quad i = 1, \ldots, n,$$

where

a. $u_i$ has conditional mean zero given $X_{1i}, X_{2i}, \ldots, X_{ki}$, that is, $E(u_i \mid X_{1i}, X_{2i}, \ldots, X_{ki}) = 0$;

b. $(X_{1i}, X_{2i}, \ldots, X_{ki}, Y_i)$, $i = 1, \ldots, n$, are independently and identically distributed (i.i.d.) draws from their joint distribution;

c. $(X_{1i}, X_{2i}, \ldots, X_{ki}, u_i)$ have nonzero finite fourth moments; and

d. there is no perfect multicollinearity.
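To see why the last assumption matters, it helps to compute the multiple-regression OLS estimator directly. The sketch below minimizes the sum of squared prediction mistakes by solving the normal equations $(X'X)b = X'Y$ with Gauss-Jordan elimination. This is one of several ways to compute OLS and is chosen here only because it needs no external library. The function name, the pivot tolerance, and the data are assumptions.

```python
def ols_multiple(x_rows, y):
    """Return [b0, b1, ..., bk] solving the normal equations (X'X) b = X'y.

    x_rows holds one list of k regressor values per observation; a constant
    regressor equal to one is prepended for the intercept.
    """
    Z = [[1.0] + list(row) for row in x_rows]
    m = len(Z[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(z[r] * z[c] for z in Z) for c in range(m)]
        + [sum(z[r] * yi for z, yi in zip(Z, y))]
        for r in range(m)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-10:  # illustrative tolerance
            raise ValueError("perfect multicollinearity: X'X is singular")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(m):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
    return [A[r][m] / A[r][r] for r in range(m)]

# Hypothetical data generated exactly from Y = 1 + 2*X1 - 0.5*X2.
x_rows = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 6.0]]
y = [1.0 + 2.0 * x1 - 0.5 * x2 for x1, x2 in x_rows]
coefficients = ols_multiple(x_rows, y)
print(coefficients)

# Perfect multicollinearity: the second regressor is exactly twice the first,
# so the coefficients cannot be separately determined.
collinear_rows = [[x1, 2.0 * x1] for x1, _ in x_rows]
try:
    ols_multiple(collinear_rows, y)
    collinear_failed = False
except ValueError:
    collinear_failed = True
print(collinear_failed)
```

In the first case the coefficients are recovered (up to rounding) because the data contain no error term. In the second case no unique minimizer exists, which is why the absence of perfect multicollinearity is listed as an assumption.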

Testing the Hypothesis $\beta_j = \beta_{j,0}$ Against the Alternative $\beta_j \ne \beta_{j,0}$

1. Compute the standard error of $\hat{\beta}_j$, $SE(\hat{\beta}_j)$.

2. Compute the t-statistic:

$$t = \frac{\hat{\beta}_j - \beta_{j,0}}{SE(\hat{\beta}_j)}.$$

3. Compute the p-value. Reject the hypothesis at the 5% significance level if the p-value is less than 0.05 or, equivalently, if the absolute value of the t-statistic is greater than 1.96.

The “Adjusted $R^2$”

In multiple regression, the $R^2$ increases whenever a regressor is added, unless the new regressor is perfectly multicollinear with the original regressors. To see this, think about starting with one regressor and then adding a second. When you use OLS to estimate the model with both regressors, OLS finds the values of the coefficients that minimize the sum of squared residuals. If OLS happens to choose the coefficient on the new regressor to be exactly zero, then the SSR will be the same whether or not the second variable is included in the regression. But if OLS chooses any value other than zero, then it must be that this value reduces the SSR relative to the regression that excludes this regressor. In practice, it is extremely unusual for an estimated coefficient to be exactly zero, so in general the SSR will decrease when a new regressor is added. But this means that the $R^2$ generally increases when a new regressor is added.

An increase in the $R^2$ does not mean that adding a variable actually improves the fit of the model. One way to correct for this is to deflate the $R^2$ by some factor, and this is what the adjusted $R^2$, or $\bar{R}^2$, does.

The $\bar{R}^2$ is a modified version of the $R^2$ that does not necessarily increase when a new regressor is added. The $\bar{R}^2$ is

$$\bar{R}^2 = 1 - \frac{n-1}{n-k-1} \frac{SSR}{TSS} = 1 - \frac{s_{\hat{u}}^2}{s_Y^2}.$$

There are three useful things to know about $\bar{R}^2$.

First, $\frac{n-1}{n-k-1}$ is always greater than 1, so $\bar{R}^2$ is always less than $R^2$.

Second, adding a regressor has two opposite effects on the $\bar{R}^2$. On the one hand, the SSR falls, which increases $\bar{R}^2$. On the other hand, the factor $\frac{n-1}{n-k-1}$ increases. Whether the $\bar{R}^2$ increases or decreases depends on which of these two effects is stronger.

Third, the $\bar{R}^2$ can be negative. This happens when the regressors, taken together, reduce the sum of squared residuals by such a small amount that this reduction fails to offset the factor $\frac{n-1}{n-k-1}$.
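The three properties can be seen with a few numbers. In this sketch the function names and the sums of squares are hypothetical, chosen only to exercise the formula.

```python
def r_squared(ssr, tss):
    """R^2 = 1 - SSR / TSS."""
    return 1.0 - ssr / tss

def adjusted_r_squared(ssr, tss, n, k):
    """Adjusted R^2 for a regression with n observations and k regressors."""
    if n - k - 1 <= 0:
        raise ValueError("need n > k + 1")
    return 1.0 - (n - 1) / (n - k - 1) * ssr / tss

# Hypothetical sums of squares with n = 22 observations.
n, tss = 22, 100.0

# One regressor: R^2 is 0.80 and the adjusted R^2 is about 0.79.
r2_one = r_squared(20.0, tss)
adj_one = adjusted_r_squared(20.0, tss, n, k=1)

# A second regressor that barely lowers the SSR: R^2 rises, adjusted R^2 falls.
r2_two = r_squared(19.9, tss)
adj_two = adjusted_r_squared(19.9, tss, n, k=2)

# Regressors with almost no explanatory power: adjusted R^2 turns negative.
adj_weak = adjusted_r_squared(99.0, tss, n, k=3)

print(r2_one, adj_one)
print(r2_two, adj_two)
print(adj_weak)
```

The second case shows the trade-off described above: the drop in the SSR from 20.0 to 19.9 is too small to offset the rise in the factor from 21/20 to 21/19.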

$R^2$ and $\bar{R}^2$: What They Tell You – and What They Don’t

$R^2$ and $\bar{R}^2$ tell you whether the regressors are good at predicting, or explaining, the values of the dependent variable in the sample of data on hand. If the $R^2$ (or $\bar{R}^2$) is nearly one, then the regressors produce good predictions of the dependent variable in that sample, in the sense that the variance of the OLS residual is small compared to the variance of the dependent variable. If the $R^2$ (or $\bar{R}^2$) is nearly zero, the opposite is true.

$R^2$ and $\bar{R}^2$ do NOT tell you whether:

1. an included variable is statistically significant;

2. the regressors are a true cause of the movements in the dependent variable;

3. there is omitted variable bias; or

4. you have chosen the most appropriate set of regressors.

Logarithms in Regression: Three Cases

Logarithms can be used to transform the dependent variable $Y$, an independent variable $X$, or both (but they must be positive). The following table summarizes these three cases and the interpretation of the regression coefficient $\beta_1$. In each case, $\beta_1$ can be estimated by applying OLS after taking the logarithm of the dependent and/or independent variable.

| Case | Regression Specification | Interpretation of $\beta_1$ |
| --- | --- | --- |
| I | $Y_i = \beta_0 + \beta_1 \ln(X_i) + u_i$ | A 1% increase in $X$ is associated with a change in $Y$ of $0.01\beta_1$. |
| II | $\ln(Y_i) = \beta_0 + \beta_1 X_i + u_i$ | A change in $X$ by 1 unit ($\Delta X = 1$) is associated with a $100\beta_1\%$ change in $Y$. |
| III | $\ln(Y_i) = \beta_0 + \beta_1 \ln(X_i) + u_i$ | A 1% increase in $X$ is associated with a $\beta_1\%$ change in $Y$, so $\beta_1$ is the elasticity of $Y$ with respect to $X$. |
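The interpretations in the table are approximations that rest on $\ln(1 + x) \approx x$ for small $x$. The sketch below compares each approximation with the exact change implied by the specification; the function name and the coefficient values are hypothetical.

```python
import math

def log_interpretations(beta1):
    """Exact versus approximate effects for the three logarithmic specifications."""
    return {
        # Case I: Y = b0 + b1*ln(X). A 1% rise in X changes Y by b1*ln(1.01).
        "I_exact_change": beta1 * math.log(1.01),
        "I_approx_change": 0.01 * beta1,
        # Case II: ln(Y) = b0 + b1*X. A one-unit rise in X multiplies Y by exp(b1).
        "II_exact_pct": 100 * (math.exp(beta1) - 1),
        "II_approx_pct": 100 * beta1,
        # Case III: ln(Y) = b0 + b1*ln(X). A 1% rise in X multiplies Y by 1.01**b1.
        "III_exact_pct": 100 * (1.01 ** beta1 - 1),
        "III_approx_pct": beta1,
    }

small = log_interpretations(0.05)   # hypothetical small coefficient
large = log_interpretations(0.5)    # hypothetical large coefficient
print(small)
print(large)
```

For the small coefficient the approximate and exact figures nearly coincide. For the larger coefficient in Case II, the exact change is roughly 65% against an approximate 50%, so the $100\beta_1\%$ reading is best reserved for small values of $\beta_1$.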