ECSE 6650 Computer Vision Project 2


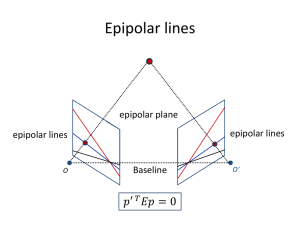

3-D Reconstruction
Zhiwei Zhu, Ming Jiang, Chih-ting Wu

1. Introduction

The objective of this project is to investigate and implement methods that recover the 3-D properties of an object from a pair of stereo images. Computing a 3-D reconstruction from a stereo pair generally consists of three steps: (1) rectification, (2) correspondence search, and (3) reconstruction.

Given a pair of stereo images, rectification determines a transformation of each image such that pairs of conjugate epipolar lines become collinear and parallel to the horizontal image axis. Rectification matters because it reduces the correspondence problem from a 2-D search to a 1-D search.

In the correspondence problem, we need to determine, for each pixel in the left image, which pixel in the right image corresponds to it. The search is correlation-based. Because the images have been rectified, finding the correspondence of a left-image pixel does not require a search over the whole right image; we only need to search the same row of the right image. Because the two images contain different occlusions, some pixels have no correspondence.

In the reconstruction step, we triangulate each pixel and its correspondence to compute the 3-D coordinates of the scene point at that pixel.

2. Camera Calibration

2.1 Calibration Theory

Calibration estimates the extrinsic and intrinsic parameters of the stereo system from 2-D images and provides the prior information that makes building the 3-D structure straightforward.
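In the full perspective projection camera model, each calibration point $(x_i, y_i, z_i)$ projects onto an image point with coordinates $(c_i, r_i)$ determined by

$$\lambda \begin{pmatrix} c_i \\ r_i \\ 1 \end{pmatrix} = P \begin{pmatrix} x_i \\ y_i \\ z_i \\ 1 \end{pmatrix} = \begin{pmatrix} \mathbf{p}_1^T & p_{14} \\ \mathbf{p}_2^T & p_{24} \\ \mathbf{p}_3^T & p_{34} \end{pmatrix} \begin{pmatrix} x_i \\ y_i \\ z_i \\ 1 \end{pmatrix} \tag{2-1}$$

and

$$P = WM \tag{2-2}$$

where $\lambda$ is a scale factor, $P$ is the homogeneous projection matrix,

$$W = \begin{pmatrix} f s_x & 0 & c_0 \\ 0 & f s_y & r_0 \\ 0 & 0 & 1 \end{pmatrix}$$

is the intrinsic matrix, and

$$M = (R \;\; T) = \begin{pmatrix} \mathbf{r}_1^T & t_x \\ \mathbf{r}_2^T & t_y \\ \mathbf{r}_3^T & t_z \end{pmatrix}$$

is the extrinsic matrix. Hence, equation (2-1) can be rewritten as

$$\lambda \begin{pmatrix} c_i \\ r_i \\ 1 \end{pmatrix} = \begin{pmatrix} s_x f \mathbf{r}_1^T + c_0 \mathbf{r}_3^T & s_x f t_x + c_0 t_z \\ s_y f \mathbf{r}_2^T + r_0 \mathbf{r}_3^T & s_y f t_y + r_0 t_z \\ \mathbf{r}_3^T & t_z \end{pmatrix} \begin{pmatrix} x_i \\ y_i \\ z_i \\ 1 \end{pmatrix} \tag{2-3}$$

For each pair of 2-D and 3-D points $i = 1, \dots, N$, we have

$$\mathbf{p}_1^T M_i + p_{14} - c_i \mathbf{p}_3^T M_i - c_i p_{34} = 0 \tag{2-4}$$

$$\mathbf{p}_2^T M_i + p_{24} - r_i \mathbf{p}_3^T M_i - r_i p_{34} = 0 \tag{2-5}$$

where $M_i = (x_i, y_i, z_i)^T$. We can set up a system of linear equations

$$AV = \begin{pmatrix} M_1^T & 1 & \mathbf{0} & 0 & -c_1 M_1^T & -c_1 \\ \mathbf{0} & 0 & M_1^T & 1 & -r_1 M_1^T & -r_1 \\ \vdots & & & & & \vdots \\ M_N^T & 1 & \mathbf{0} & 0 & -c_N M_N^T & -c_N \\ \mathbf{0} & 0 & M_N^T & 1 & -r_N M_N^T & -r_N \end{pmatrix} \begin{pmatrix} \mathbf{p}_1 \\ p_{14} \\ \mathbf{p}_2 \\ p_{24} \\ \mathbf{p}_3 \\ p_{34} \end{pmatrix} = 0 \tag{2-6}$$

where $\mathbf{0} = (0\;0\;0)$. In general, equation (2-6) has no exact solution because of errors in the sampling process, so it is solved approximately in the least-squares sense, minimizing $\|AV\|^2$ subject to the normalization constraint $\|\mathbf{p}_3\|^2 = 1$:

$$\varepsilon^2 = \|AV\|^2 - \lambda\left(\|\mathbf{p}_3\|^2 - 1\right) \tag{2-7}$$

Reordering the columns of A (and the entries of V accordingly), we decompose A into two matrices B and C, and V into Y and Z:

$$A = (B \;\; C), \qquad V = \begin{pmatrix} Y \\ Z \end{pmatrix} \tag{2-8}$$

where

$$B = \begin{pmatrix} M_1^T & 1 & \mathbf{0} & 0 & -c_1 \\ \mathbf{0} & 0 & M_1^T & 1 & -r_1 \\ \vdots & & & & \vdots \\ M_N^T & 1 & \mathbf{0} & 0 & -c_N \\ \mathbf{0} & 0 & M_N^T & 1 & -r_N \end{pmatrix}, \qquad C = \begin{pmatrix} -c_1 M_1^T \\ -r_1 M_1^T \\ \vdots \\ -c_N M_N^T \\ -r_N M_N^T \end{pmatrix} \tag{2-9}$$

$$Y = \left(\mathbf{p}_1^T \;\; p_{14} \;\; \mathbf{p}_2^T \;\; p_{24} \;\; p_{34}\right)^T, \qquad Z = \mathbf{p}_3$$

Equation (2-7) can then be written as

$$\varepsilon^2 = \|BY + CZ\|^2 - \lambda\left(\|Z\|^2 - 1\right) \tag{2-10}$$

Taking the partial derivatives of $\varepsilon^2$ with respect to Y and Z and setting them to zero yields

$$Y = -(B^T B)^{-1} B^T C Z \tag{2-11}$$

$$C^T\left(I - B(B^T B)^{-1} B^T\right) C Z = \lambda Z \tag{2-12}$$

So Z is an eigenvector of the matrix $C^T(I - B(B^T B)^{-1} B^T)C$. Given Z, we can solve for Y from (2-11). Substituting Y back gives $\|BY + CZ\|^2 = \lambda$ (using $\|Z\| = 1$), which shows that the optimal Z is the unit eigenvector associated with the smallest eigenvalue of this positive semidefinite matrix.

2.2 Camera Calibration Results

The left and right images taken with the same camera are shown in Figure 1.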
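As an illustration of Section 2.1, the constrained linear calibration can be sketched in Python with NumPy. This is a minimal sketch, not the code used to produce Table 1: `calibrate_linear` is a hypothetical name, the sketch assumes N ≥ 6 non-coplanar calibration points with their measured (c, r) coordinates, and it orders the unknowns as Y = (p1, p14, p2, p24, p34) and Z = p3, as in (2-8) and (2-9). Extracting W, R and T from the estimated P is not shown.

```python
import numpy as np


def calibrate_linear(world_pts, image_pts):
    """Hypothetical helper: estimate the 3x4 matrix P of eq. (2-1).

    Minimizes ||BY + CZ||^2 subject to ||Z|| = 1 (eqs. (2-10) to (2-12)).
    world_pts: (N, 3) array of (x, y, z); image_pts: (N, 2) array of (c, r).
    """
    M = np.asarray(world_pts, dtype=float)
    cr = np.asarray(image_pts, dtype=float)
    n = M.shape[0]
    B = np.zeros((2 * n, 9))  # coefficients of Y = (p1, p14, p2, p24, p34)
    C = np.zeros((2 * n, 3))  # coefficients of Z = p3
    for i in range(n):
        c, r = cr[i]
        # Row from eq. (2-4)
        B[2 * i, 0:3] = M[i]
        B[2 * i, 3] = 1.0
        B[2 * i, 8] = -c
        C[2 * i] = -c * M[i]
        # Row from eq. (2-5)
        B[2 * i + 1, 4:7] = M[i]
        B[2 * i + 1, 7] = 1.0
        B[2 * i + 1, 8] = -r
        C[2 * i + 1] = -r * M[i]
    # G = (B^T B)^{-1} B^T, computed with a linear solve instead of an inverse
    G = np.linalg.solve(B.T @ B, B.T)
    D = C.T @ (np.eye(2 * n) - B @ G) @ C
    D = (D + D.T) / 2.0              # symmetrize against round-off
    _, eigvecs = np.linalg.eigh(D)   # eigenvalues in ascending order
    Z = eigvecs[:, 0]                # eq. (2-12): smallest eigenvalue, ||Z|| = 1
    Y = -G @ (C @ Z)                 # eq. (2-11)
    return np.array([
        [Y[0], Y[1], Y[2], Y[3]],
        [Y[4], Y[5], Y[6], Y[7]],
        [Z[0], Z[1], Z[2], Y[8]],
    ])
```

Since Z and -Z are both unit eigenvectors, P is recovered only up to sign; dividing by P[2, 3], or requiring positive depths, removes the ambiguity.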
Figure 1. The left and right images taken with the same camera

We manually measured the pixel coordinates of the grid corners on the calibration panels; these pixel coordinates form the 2-D point set used for camera calibration. The 3-D coordinates of the corresponding points are given. We then calibrated the camera from this set of 2-D/3-D point correspondences. The estimated intrinsic and extrinsic parameters are shown in Table 1.

Table 1: The estimated intrinsic and extrinsic parameters

| | Intrinsic parameter matrix | Rotation matrix | Translation |
|---|---|---|---|
| Left camera | [858.3853 0 317.3359; 0 -851.2035 274.9800; 0 0 1.0000] | [-0.6096 0.7923 0.0235; 0.0579 0.0174 0.9982; -0.7905 -0.6099 0.0565] | (-1.2242, -6.5227, 65.8155) |
| Right camera | [861.6958 0 314.2041; 0 -850.1881 282.0719; 0 0 1.0000] | [-0.8353 0.5491 0.0281; 0.0466 0.0304 0.9984; -0.5474 -0.8353 0.0510] | (4.6503, -6.2438, 68.8479) |

(Matrices are written row by row, with rows separated by semicolons.)

Table 1 shows that the intrinsic parameters estimated for the left and right views differ slightly, even though both images were taken with the same camera. The manually measured corner coordinates are not exact; they typically contain an error of about 2 to 3 pixels, and this measurement error causes the deviation between the estimated left and right intrinsic parameters.

3. Fundamental Matrix and Essential Matrix

Both the fundamental and the essential matrix completely describe the geometric relationship between corresponding points of a stereo pair of cameras. The only difference between the two is that the fundamental matrix deals with uncalibrated cameras (pixel coordinates), while the essential matrix deals with calibrated cameras. In this report, we estimate the fundamental matrix with the eight-point algorithm and derive the essential matrix from it.

3.1 Fundamental Matrix

Since the fundamental matrix F is a 3×3 matrix determined only up to an arbitrary scale factor, 8 equations are required to obtain a unique solution.
We manually established correspondences between points on the calibration pattern in the two images and applied the eight-point algorithm to the matched points: given 8 or more corresponding points, we obtain a homogeneous linear system with a non-trivial null space. For any pair of matching points $\mathbf{u} = (u, v, 1)^T$ and $\mathbf{u}' = (u', v', 1)^T$, the epipolar geometry gives

$$\mathbf{u}'^T F \mathbf{u} = 0 \tag{3-1}$$

or

$$\begin{pmatrix} u' & v' & 1 \end{pmatrix} \begin{pmatrix} f_{11} & f_{12} & f_{13} \\ f_{21} & f_{22} & f_{23} \\ f_{31} & f_{32} & f_{33} \end{pmatrix} \begin{pmatrix} u \\ v \\ 1 \end{pmatrix} = 0 \tag{3-2}$$

Expanding, each pair of points $\mathbf{u}$ and $\mathbf{u}'$ gives the equation

$$uu' f_{11} + uv' f_{21} + u f_{31} + vu' f_{12} + vv' f_{22} + v f_{32} + u' f_{13} + v' f_{23} + f_{33} = 0 \tag{3-3}$$

Stacking the equations from all point matches gives a linear system of the form

$$A\mathbf{f} = 0 \tag{3-4}$$

where f is a nine-vector containing the entries of F and A is the equation matrix. The fundamental matrix, and hence the solution vector f, is determined only up to scale. The system can be solved by singular value decomposition (SVD). Applying SVD to A yields the decomposition $A = USV^T$, where U has orthonormal columns, V is orthogonal, and S is a diagonal matrix containing the singular values $\sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_9 \ge 0$ in decreasing order. With exactly 8 independent equations for the 9 unknowns, $\sigma_9 = 0$ and the last column of V spans the null space, so it is the solution. With more than 8 noisy matches, $\sigma_9$ is only close to zero, and the last column of V is the unit vector f that minimizes $\|A\mathbf{f}\|$.

3.2 Essential Matrix

The essential matrix has five degrees of freedom (three for rotation and two for the direction of translation). It is obtained from the fundamental matrix by a transformation involving the intrinsic parameters of the two cameras. Thus, constraints on the essential matrix can be translated into constraints on the intrinsic parameters of the two cameras.
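The eight-point estimate of Section 3.1, followed by the conversion (3-6) to an essential matrix, can be sketched in Python with NumPy. The function names are hypothetical, and two details are assumptions rather than descriptions of the report's code: the left image point plays the role of u' (so that u_l^T F u_r = 0, matching E = W_l^T F W_r), and the coordinates are first normalized as proposed by Hartley (shift to the centroid, scale to a mean distance of √2), a standard conditioning step that the report does not mention. Without it, the SVD of the raw pixel-coordinate matrix A can be poorly conditioned.

```python
import numpy as np


def hartley_normalize(pts):
    """Shift (N, 2) points to their centroid and scale to mean distance sqrt(2)."""
    pts = np.asarray(pts, dtype=float)
    mean = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[s, 0.0, -s * mean[0]],
                  [0.0, s, -s * mean[1]],
                  [0.0, 0.0, 1.0]])
    # Return homogeneous normalized points (rows) and the transform T
    return np.column_stack([pts, np.ones(len(pts))]) @ T.T, T


def eight_point(left_pts, right_pts):
    """F with u'^T F u = 0, where u' = left point and u = right point (N >= 8)."""
    xp, T_left = hartley_normalize(left_pts)    # u' = (u', v', 1)
    x, T_right = hartley_normalize(right_pts)   # u  = (u, v, 1)
    u, v, up, vp = x[:, 0], x[:, 1], xp[:, 0], xp[:, 1]
    # One row of eq. (3-3) per match, unknowns ordered f11, f12, ..., f33
    A = np.column_stack([up * u, up * v, up, vp * u, vp * v, vp,
                         u, v, np.ones_like(u)])
    _, _, Vt = np.linalg.svd(A)
    F_norm = Vt[-1].reshape(3, 3)     # last column of V = last row of V^T
    F = T_left.T @ F_norm @ T_right   # undo the normalization
    return F / np.linalg.norm(F)


def essential_from_fundamental(F, W_l, W_r):
    """Eq. (3-6): E = W_l^T F W_r."""
    return W_l.T @ F @ W_r
```

With noisy matches, F is usually also forced to rank 2 by zeroing its smallest singular value; the report does not describe this step, so it is omitted here.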
Since the fundamental matrix is

$$F = W_l^{-T} E W_r^{-1} \tag{3-5}$$

the essential matrix is

$$E = W_l^T F W_r \tag{3-6}$$

3.3 Experiment Results

The computed fundamental matrix F and essential matrix E are:

$$F = \begin{pmatrix} 0.0000 & -0.0000 & 0.0013 \\ 0.0000 & 0.0000 & -0.0023 \\ -0.0026 & -0.0039 & 1.0000 \end{pmatrix}, \qquad E = \begin{pmatrix} 0.4173 & 1.8403 & 0.6147 \\ -4.9621 & 6.9256 & -2.1074 \\ -0.5248 & 1.7849 & -0.0329 \end{pmatrix}$$

4. Computing R and T

Figure 2. Triangulation

If both the extrinsic and intrinsic parameters are known, we can compute the 3-D location of a point unambiguously from its projections $p_l$ and $p_r$ with the triangulation algorithm [1]. The algorithm estimates the intersection of the two rays through $p_l$ and $p_r$; because of image noise, however, the two rays may not actually intersect in space. The algorithm therefore identifies the line segment that joins the two rays and is orthogonal to both, and takes the midpoint of that segment as the estimate.

Using the same geometry, we can also derive the relationship between the extrinsic parameters of the two cameras. For a point P in the world reference frame we have

$$P_r = R_r P + T_r \tag{4-1}$$

and

$$P_l = R_l P + T_l \tag{4-2}$$

From equations (4-1) and (4-2), we have

$$P_l = R_l P + T_l = R_l\left[R_r^{-1}(P_r - T_r)\right] + T_l = R_l R_r^{-1} P_r - R_l R_r^{-1} T_r + T_l \tag{4-3}$$

Since the relationship between $P_l$ and $P_r$ is given by $P_l = RP_r + T$, equating terms gives

$$R = R_l R_r^{-1} = R_l R_r^T \tag{4-4}$$

and

$$T = T_l - R_l R_r^T T_r = T_l - R T_r \tag{4-5}$$

The relative orientation R and translation T of the two cameras are:

$$R = \begin{pmatrix} 0.9449 & 0.0136 & -0.3270 \\ -0.0165 & 0.9999 & 0.0047 \\ 0.3270 & -0.0009 & 0.9450 \end{pmatrix}, \qquad T = \begin{pmatrix} 16.9770 \\ -0.5262 \\ -0.7741 \end{pmatrix}$$

5. Rectification

5.1 Rectification by Construction of New Perspective Projection Matrices

Given a pair of stereo images, epipolar rectification determines a transformation of each image plane such that pairs of conjugate epipolar lines become collinear and parallel to one of the image axes (usually the horizontal one). The rectified images can be thought of as acquired by a new stereo rig, obtained by rotating the original cameras.
The important advantage of rectification is that computing stereo correspondences becomes simpler, because the search is done along the horizontal lines of the rectified images.

In implementing rectification, we tried several methods. Although the algorithm given in the lecture notes can be proved analytically, with it we found that the right rectified image had an obvious vertical shift, so corresponding image points could not be found on the same row as the given point in the other image. We then switched to the algorithm given in the textbook. This algorithm works well with the given images and camera data; however, the book does not give a detailed proof of it. Unsure of the correctness of both algorithms, we then implemented the algorithm presented by A. Fusiello in Rectification with Constrained Stereo Geometry, where it is proved analytically. Our implementation of this algorithm was successful, as supported by the perfectly rectified images and by the properties of the epipolar lines and epipoles.

The only input of the algorithm is the pair of perspective projection matrices (PPMs) of the two cameras. The output is a pair of rectifying PPMs, which can be used to compute the rectified images.

The idea behind rectification is to define two new PPMs, obtained by rotating the old ones around their optical centers until the focal planes become coplanar, thereby containing the baseline. This ensures that the epipoles are at infinity, hence the epipolar lines are parallel. To have horizontal epipolar lines, the baseline must be parallel to the new X axis of both cameras. In addition, to have a proper rectification, conjugate points must have the same vertical coordinate. This is obtained by requiring that the new cameras have the same intrinsic parameters. Note that, since the focal lengths are the same, the retinal planes are coplanar too.
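A compact version of the rectification described in this section can be sketched in Python with NumPy, together with the bilinear resampling of Section 5.2. This is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical; each camera is given by its calibrated W, R, T with P_cam = R P_world + T; the new x axis points from the left toward the right optical center; and the shared intrinsic matrix is taken as the average of the two calibrated ones with zero skew, a choice the report does not specify. Each rectified image is produced by inverse mapping: every output pixel is pulled back through H^{-1} and sampled with equation (5-8).

```python
import numpy as np


def optical_center(R, T):
    # P_cam = R P_world + T vanishes at the optical center, so c = -R^T T
    return -R.T @ T


def rectify(W1, R1, T1, W2, R2, T2):
    """Return the new PPMs Pn1, Pn2 and the 3x3 rectifying transforms H1, H2."""
    c1, c2 = optical_center(R1, T1), optical_center(R2, T2)
    x = (c2 - c1) / np.linalg.norm(c2 - c1)  # new x axis: along the baseline
    y = np.cross(R1[2], x)                    # orthogonal to new x and old (left) z
    y /= np.linalg.norm(y)
    z = np.cross(x, y)                        # orthogonal to the baseline and y
    Rn = np.vstack([x, y, z])                 # same orientation for both cameras
    A = (W1 + W2) / 2.0                       # shared intrinsics (assumption)
    A[0, 1] = 0.0                             # zero skew
    Pn1 = A @ np.hstack([Rn, (-Rn @ c1)[:, None]])
    Pn2 = A @ np.hstack([Rn, (-Rn @ c2)[:, None]])
    # Old and new PPMs share each optical center, so pixels map by Qn Qo^{-1}
    H1 = Pn1[:, :3] @ np.linalg.inv(W1 @ R1)
    H2 = Pn2[:, :3] @ np.linalg.inv(W2 @ R2)
    return Pn1, Pn2, H1, H2


def bilinear(img, x, y):
    """Eq. (5-8): sample img at real-valued column x and row y."""
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    if x0 < 0 or y0 < 0 or x0 + 1 >= img.shape[1] or y0 + 1 >= img.shape[0]:
        return 0.0  # the 2x2 neighborhood leaves the image
    a, b = x - x0, y - y0  # fractional offsets, the x and y of eq. (5-8)
    return ((1 - a) * (1 - b) * img[y0, x0] + a * (1 - b) * img[y0, x0 + 1]
            + (1 - a) * b * img[y0 + 1, x0] + a * b * img[y0 + 1, x0 + 1])


def warp(img, H, out_shape):
    """Inverse mapping: output pixel (c, r) is read from H^{-1} (c, r, 1)."""
    H_inv = np.linalg.inv(H)
    out = np.zeros(out_shape)
    for r in range(out_shape[0]):
        for c in range(out_shape[1]):
            u, v, w = H_inv @ np.array([c, r, 1.0])
            out[r, c] = bilinear(img, u / w, v / w)
    return out
```

In practice a translation is usually folded into the shared intrinsic matrix so that the rectified images stay inside the output frame; that adjustment is left out of this sketch.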
The optical centers of the new PPMs are the same as those of the old cameras, whereas the new orientation (the same for both cameras) differs from the old ones by suitable rotations, and the intrinsic parameters are the same for both cameras. Therefore, the two resulting PPMs differ only in their optical centers, and they can be thought of as a single camera translated along the X axis of its reference system. The algorithm consists of the following steps:

1. Calculate the intrinsic and extrinsic parameters by camera calibration.
2. Determine the position of each optical center c. It is constrained by the fact that its projection through the PPM $\tilde{P}$ is zero: $\tilde{P}\,(c^T\;1)^T = 0$.
3. Calculate the new coordinate system. Let c1 and c2 be the two optical centers. The new x axis is along the baseline c1c2, the new y axis is orthogonal to the new x axis and the old z axis, and the new z axis is orthogonal to the baseline and the new y axis. The rotation part R of the new projection matrices is built from these three axes.
4. Construct the new PPMs and the rectification matrices.
5. Apply the rectification matrices to the images.

5.2 Resampling by Bilinear Interpolation

Once the shift vector (u, v) has been updated in the previous iteration, the image J must be resampled into a new image J_new according to (-u, -v), to align the matching pixels in I and J_new and to allow the alignment error to be computed correctly. The shift (u, v) is estimated with subpixel precision, so applying it can lead to locations between the actual pixel positions (see Figure 3).

Figure 3. Bilinear Interpolation

Let $B_{x,y}$ denote the grayscale value of a new warped pixel in J_new at location (x, y), where (x, y) are the fractional offsets inside the unit square whose corners hold the known values $B_{0,0}$, $B_{1,0}$, $B_{0,1}$ and $B_{1,1}$. $B_{x,y}$ can be computed in two stages. First we define

$$B_{x,0} = (1 - x)B_{0,0} + xB_{1,0} \tag{5-6}$$

and

$$B_{x,1} = (1 - x)B_{0,1} + xB_{1,1} \tag{5-7}$$
From these two equations, we get

$$B_{x,y} = (1 - y)B_{x,0} + yB_{x,1} = (1 - x)(1 - y)B_{0,0} + x(1 - y)B_{1,0} + (1 - x)yB_{0,1} + xyB_{1,1} \tag{5-8}$$

5.3 Rectification Results

Figure 4. Rectified left and right images

Figure 4 shows the rectified left and right images. For each image point in the left image, the corresponding point in the right image always lies on the same row, as indicated by the white lines in Figure 4.

6. Drawing the Epipolar Lines

Epipoles before rectification:
Left: 1.0e+004 × (-1.8508, -0.0304)
Right: 1.0e+003 × (-1.8514, 0.2502)

Epipoles after rectification:
Left: 1.0e+018 × (-1.0271, 0.0000)
Right: 1.0e+018 × (-1.0068, 0.0000)

After rectification, both epipoles are effectively at infinity along the x axis of the image coordinates, parallel to the baseline. This follows from the special form of the fundamental matrix after rectification: our experiments show that it has the null vector $[1\;0\;0]^T$, the point at infinity in the x direction.

Epipolar lines corresponding to the corners of A5, written as aU + bV + c = 0:

Before rectification:

| a | b | c |
|---|---|---|
| -0.0006 | -0.0198 | 3.7996 |
| -0.0005 | -0.0199 | 3.9630 |
| 0.0000 | -0.0198 | 4.9736 |
| 0.0001 | -0.0199 | 5.0736 |

After rectification:

| a | b | c |
|---|---|---|
| -0.0000 | -0.0200 | 3.5044 |
| -0.0000 | -0.0200 | 3.7241 |
| -0.0000 | -0.0200 | 4.9826 |

After rectification a ≈ 0, so each epipolar line is horizontal (V = -c/b).

The lines are shown in the figures below.

Epipolar lines before rectification

Epipolar lines after rectification

7. Correlation-based Point Matching

After rectification, for each image point in the left image, we can always find the corresponding point in the right image by searching the same row. Rectification therefore reduces point matching from a 2-D search to a 1-D search, which improves both the matching speed and the matching accuracy. In the following, we discuss the correlation-based method used for this 1-D search.
7.1 Correlation Method

For each left-image pixel within the selected rectangular region, its correlation with a right-image pixel is determined using a small correlation window of fixed size, over which we compute the sum of squared differences (SSD) of the pixel intensities:

$$c(\mathbf{d}) = \sum_{k=-W}^{W} \sum_{l=-W}^{W} \psi\big(I_l(i + k, j + l),\; I_r(i + k - d_1, j + l - d_2)\big) \tag{6-1}$$

where (2W+1) is the width of the correlation window, $I_l$ and $I_r$ are the intensities of the left and right image pixels, respectively, [i, j] are the coordinates of the left image pixel, $\mathbf{d} = [d_1, d_2]^T$ is the relative displacement between the left and right image pixels, and $\psi(u, v) = (u - v)^2$ is the SSD correlation function. The best match is the displacement that minimizes c(d).

7.2 Disparity Map

Figure 5. Triangulation map

From Figure 5, we obtain

$$Z = \frac{fb}{u_1 - u_2} \tag{6-2}$$

where $u_1 - u_2$ is the disparity, b is the baseline distance, and f is the focal length. After locating the corresponding points on the same row of the left and right images, we compute the disparity of each matched pair. The depth Z is inversely proportional to the disparity $d = u_1 - u_2$.

To display the disparity of each matched pair, we first normalize the disparity values to the pixel intensity range (0-255), so that the disparity can be shown as pixel intensity. The brighter a pixel, the larger its disparity and the smaller its depth. Since we only consider well-correlated points on the maps, we set the disparity to 255 for poorly correlated points.

Figure 6 shows the region selected in the left image for correlation-based point matching, together with the corresponding search region cropped from the right image.

Figure 6. (a) Left rock image (b) Right rock image

Figure 6 shows that the rock is in the lower-left part of the image. The disparity maps computed with different correlation thresholds are shown in Figure 7.
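The 1-D search of Section 7.1 and the depth relation (6-2) can be sketched in Python with NumPy as follows. The sketch assumes a rectified grayscale pair stored as 2-D arrays indexed [row, column], uses ψ(u, v) = (u − v)², searches only non-negative disparities d = u1 − u2 on the same row, and leaves out the correlation threshold used in the report; `match_row`, `depth_from_disparity` and `disparity_to_gray` are hypothetical names. Following the report, pixels flagged as unreliable are displayed at 255.

```python
import numpy as np


def ssd(left, right, row, col_l, col_r, half):
    """Eq. (6-1) with d2 = 0: SSD between two (2*half+1) x (2*half+1) windows."""
    wl = left[row - half:row + half + 1, col_l - half:col_l + half + 1].astype(float)
    wr = right[row - half:row + half + 1, col_r - half:col_r + half + 1].astype(float)
    return float(np.sum((wl - wr) ** 2))


def match_row(left, right, row, col, half, max_disp):
    """Search the same row of the right image; return (best disparity, its SSD).

    The caller keeps the left window inside the image.
    """
    best_d, best_cost = None, np.inf
    for d in range(max_disp + 1):
        col_r = col - d              # d = u1 - u2, so u2 = u1 - d
        if col_r - half < 0:         # right window would leave the image
            break
        cost = ssd(left, right, row, col, col_r, half)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d, best_cost


def depth_from_disparity(d, f, b):
    """Eq. (6-2): Z = f b / d, for d > 0."""
    return f * b / np.asarray(d, dtype=float)


def disparity_to_gray(disp, valid=None):
    """Stretch valid disparities linearly to 0..255; invalid pixels become 255."""
    disp = np.asarray(disp, dtype=float)
    if valid is None:
        valid = np.ones(disp.shape, dtype=bool)
    valid = np.asarray(valid, dtype=bool)
    gray = np.full(disp.shape, 255.0)
    if valid.any():
        lo, hi = disp[valid].min(), disp[valid].max()
        scale = 255.0 / (hi - lo) if hi > lo else 0.0
        gray[valid] = (disp[valid] - lo) * scale
    return np.round(gray).astype(np.uint8)
```

Minimizing the SSD picks a single integer disparity per pixel; a correlation threshold, as in Figure 7, would additionally mark weak matches as invalid before calling `disparity_to_gray`.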
In the disparity maps, the rock is clearly distinguishable from the background by pixel intensity: it is brighter than the background, which shows that the rock is closer to the camera. Because of some mismatched points between the left and right images, some background points are as bright as the rock, but this effect is minor; most background points are darker than the rock.

Figure 7. The disparity maps with different threshold values: (a) threshold = 0.3, (b) threshold = 0.4, (c) threshold = 0.5, (d) threshold = 0.6

8. 3-D Reconstruction

8.1 Known Extrinsic and Intrinsic Parameters

If the relative orientation R and translation T and the intrinsic parameters $W_l$ and $W_r$ are known, the 3-D geometry can be reconstructed in two ways: (1) by making the object frame coincide with the left camera frame, or (2) by a geometric solution.

8.1.1 Reconstruction by Triangulation

Assume that the object frame coincides with the left camera frame, and let $(c_l, r_l)$ and $(c_r, r_r)$ be the left and right image points, respectively. The 3-D coordinates (x, y, z) can be solved from the perspective projection equations of the left and right images,

$$\lambda_l \begin{pmatrix} c_l \\ r_l \\ 1 \end{pmatrix} = W_l M_l \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} \tag{8-1}$$

and

$$\lambda_r \begin{pmatrix} c_r \\ r_r \\ 1 \end{pmatrix} = W_r M_r \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} \tag{8-2}$$

With this choice of object frame, $M_l = (I \;\; \mathbf{0})$ and, since $P_l = RP_r + T$, $M_r = (R^T \;\; -R^T T)$. The system contains 5 unknowns $(x, y, z, \lambda_l, \lambda_r)$ and 6 linear equations, so the solution can be obtained by the least-squares method.
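The reconstruction of Section 8.1.1, together with the relative pose (4-4) and (4-5), can be sketched in Python with NumPy. The helper names are hypothetical; the PPMs are built with the object frame attached to the left camera, and the linear system (8-5) is solved through the normal equations (8-6) to mirror the text, although `np.linalg.lstsq` would be the numerically safer choice.

```python
import numpy as np


def relative_pose(Rl, Tl, Rr, Tr):
    """Eqs. (4-4) and (4-5): R and T such that P_l = R P_r + T."""
    R = Rl @ Rr.T
    return R, Tl - R @ Tr


def stereo_ppms(Wl, Wr, R, T):
    """PPMs with the object frame attached to the left camera."""
    Pl = Wl @ np.hstack([np.eye(3), np.zeros((3, 1))])     # M_l = (I 0)
    Pr = Wr @ np.hstack([R.T, (-R.T @ T)[:, None]])        # M_r = (R^T -R^T T)
    return Pl, Pr


def triangulate(Pl, Pr, left_pt, right_pt):
    """Solve eq. (8-5) for (x, y, z, lambda_l, lambda_r) via eq. (8-6)."""
    cl, rl = left_pt
    cr, rr = right_pt
    A = np.zeros((6, 5))
    A[:3, :3], A[3:, :3] = Pl[:, :3], Pr[:, :3]
    A[:3, 3] = [-cl, -rl, -1.0]   # -lambda_l (c_l, r_l, 1)
    A[3:, 4] = [-cr, -rr, -1.0]   # -lambda_r (c_r, r_r, 1)
    b = -np.concatenate([Pl[:, 3], Pr[:, 3]])
    X = np.linalg.solve(A.T @ A, A.T @ b)  # normal equations, eq. (8-6)
    return X[:3]                           # (x, y, z) in the left camera frame
```

For a rectified pair with a purely horizontal baseline, this least-squares solution reduces to the disparity relation (6-2).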
Let $P_l = W_l M_l$ and $P_r = W_r M_r$ be the projection matrices of the left and right images, respectively. Then

$$P_l \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} - \lambda_l \begin{pmatrix} c_l \\ r_l \\ 1 \end{pmatrix} = \begin{pmatrix} P_{l11} & P_{l12} & P_{l13} & P_{l14} \\ P_{l21} & P_{l22} & P_{l23} & P_{l24} \\ P_{l31} & P_{l32} & P_{l33} & P_{l34} \end{pmatrix} \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} - \lambda_l \begin{pmatrix} c_l \\ r_l \\ 1 \end{pmatrix} = \mathbf{0} \tag{8-3}$$

$$P_r \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} - \lambda_r \begin{pmatrix} c_r \\ r_r \\ 1 \end{pmatrix} = \begin{pmatrix} P_{r11} & P_{r12} & P_{r13} & P_{r14} \\ P_{r21} & P_{r22} & P_{r23} & P_{r24} \\ P_{r31} & P_{r32} & P_{r33} & P_{r34} \end{pmatrix} \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} - \lambda_r \begin{pmatrix} c_r \\ r_r \\ 1 \end{pmatrix} = \mathbf{0} \tag{8-4}$$

Combining equations (8-3) and (8-4), we have

$$\begin{pmatrix} P_{l11} & P_{l12} & P_{l13} & -c_l & 0 \\ P_{l21} & P_{l22} & P_{l23} & -r_l & 0 \\ P_{l31} & P_{l32} & P_{l33} & -1 & 0 \\ P_{r11} & P_{r12} & P_{r13} & 0 & -c_r \\ P_{r21} & P_{r22} & P_{r23} & 0 & -r_r \\ P_{r31} & P_{r32} & P_{r33} & 0 & -1 \end{pmatrix} \begin{pmatrix} x \\ y \\ z \\ \lambda_l \\ \lambda_r \end{pmatrix} = -\begin{pmatrix} P_{l14} \\ P_{l24} \\ P_{l34} \\ P_{r14} \\ P_{r24} \\ P_{r34} \end{pmatrix} \tag{8-5}$$

which has the form AX = B with $X = (x, y, z, \lambda_l, \lambda_r)^T$. The least-squares solution of the linear system AX = B is given by

$$X = (A^T A)^{-1} A^T B \tag{8-6}$$

The 3-D coordinates can thus be obtained from the two corresponding image points. The reconstructed 3-D map is shown in Figure 8.

Figure 8. 3-D reconstruction of the rock pile

The output 3-D coordinates can be found in the uploaded file named "3D.txt".

9. Summary and Conclusion

In this project, we performed 3-D reconstruction of a scene of a pile of rocks from two images taken from different viewpoints. The 3-D reconstruction consists of three main steps: rectification, correspondence search, and reconstruction. The experimental results show that we successfully reconstructed the 3-D scene of the rock, which is visible in both images. The most difficult part was rectification; we finally used a new method to rectify the left and right images and achieved good results.

Code:
Version 1: Calibration, Rectification, Reconstruction
Version 2: Calibration, Rectification, Reconstruction