To be competitive, companies have to mitigate their risks


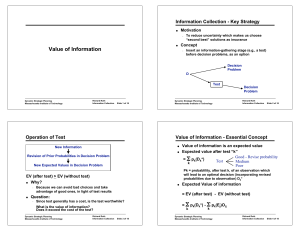

MGS 9920: Practice Decision Tree

Problem 1. Decision Tree and Incorporating New Information (Posterior Probabilities)

GM has entered into a VEBA agreement to settle its Other Postemployment Benefits (OPEB) obligations to its employees. However, the agreement carries a risk: the court may approve or deny the settlement in the future. GM estimates the probability of court approval at 0.7. After considering the input of the labor union, General Motors is considering an alternative to the VEBA: pursuing a Mandatory Agreement. The payoffs (in millions of $) for each decision alternative are shown in the table below.

| Decision alternative | Approve (A), probability 0.7 | Deny (D), probability 0.3 |
|---|---|---|
| Stay with New VEBA | 29 | -12 |
| Pursue Mandatory Agreement | 3 | 11 |

For this problem, if we want to draw a decision tree:

a. How many decision nodes are required?
b. How many branches come out of each decision node?
c. How many event nodes are required?
d. How many branches come out of each event node?
e. Draw the decision tree. Label each branch completely, including probabilities and payoffs.
f. Solve the decision tree and find the best decision alternative or strategy.

1.1. GM wants to hire a campaigning firm to improve the odds of court approval for this particular New VEBA agreement. If hired, the firm will provide a report on whether this year's campaign is going to be Successful or Unsuccessful. For the above GM problem and decision tree, consider the reliabilities of the campaigning firm given below, compute the posterior probabilities, draw the revised decision tree (a blank tree is provided below), and compute EVPI, EVSI, and the efficiency of sample information.
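Part f of Problem 1 (the prior tree, before any campaign report) can be checked numerically. The sketch below rolls back each event node by taking the probability-weighted payoff, then picks the alternative with the highest expected value. The function names `expected_values` and `best_alternative` are hypothetical helpers introduced for illustration; the probabilities and payoffs are those of the Problem 1 table.

```python
# Prior probabilities and payoffs (millions of $) from the Problem 1 table.
PRIORS = {"Approve": 0.7, "Deny": 0.3}
PAYOFFS = {
    "Stay with New VEBA": {"Approve": 29, "Deny": -12},
    "Pursue Mandatory Agreement": {"Approve": 3, "Deny": 11},
}


def expected_values(payoffs, probs):
    """Roll back each event node: probability-weighted payoff per alternative."""
    return {
        alternative: sum(probs[state] * value for state, value in row.items())
        for alternative, row in payoffs.items()
    }


def best_alternative(payoffs, probs):
    """Roll back the decision node: return (alternative, expected value)."""
    evs = expected_values(payoffs, probs)
    best = max(evs, key=evs.get)
    return best, evs[best]
```

With these inputs, `best_alternative(PAYOFFS, PRIORS)` selects Stay with New VEBA at an expected payoff of 16.7, against 5.4 for Pursue Mandatory Agreement. That 16.7 is also the "without sample information" baseline needed later for EVSI.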
Incorporating New Information

Successful = ____
Unsuccessful = ____
Approve = ____
Deny = ____

RELIABILITIES (report columns: Successful, Unsuccessful; remaining cells blank): Approve 0.80, Deny 0.70

PRIOR PROBABILITIES

| Approve | Deny |
|---|---|
| 0.70 | 0.30 |

JOINT & MARGINAL PROBABILITIES

| | Approve | Deny | |
|---|---|---|---|
| Successful | | | |
| Unsuccessful | | | |

POSTERIOR PROBABILITIES

| | Approve | Deny |
|---|---|---|
| Successful | | |
| Unsuccessful | | |

[Blank revised decision tree: "Incorp. New info." branches into Successful (probability = ____) and Unsuccessful (probability = ____). Under each report, the decision is Stick with VEBA (Approve 29, Deny -12) or Pursue Mandatory (Approve 3, Deny 11), with the posterior probabilities left blank.]

EVSI = (maximum possible expected return with sample information) - (maximum possible expected return without sample information) = ____

EVPI = ____

Efficiency of sample information = EVSI / EVPI = ____

Problem 2. Decision Tree and Incorporating New Information (Posterior Probabilities)

(Refer to the previous FEMA problem.) After considering the guidance provided by its internal advisory board, FEMA (Federal Emergency Management Agency) dropped the alternative of 'low preparation' and changed the probabilities of the states of nature. The modified savings (payoffs) table is provided below.

| Decision alternative | Weak (W), probability 0.4 | Strong (S), probability 0.6 |
|---|---|---|
| High Preparation | -9 | 26 |
| Medium Preparation | 10 | 16 |

For this problem, if we want to draw a decision tree:

a. How many decision nodes are required?
b. How many event nodes are required?
c. How many branches come out of each event node?
d. Draw the decision tree. Label each branch completely, including probabilities and payoffs.
e. Solve the decision tree and find the best preparation strategy.

2.1. For the above FEMA problem and decision tree, consider the reliabilities given below, compute the posterior probabilities, draw the revised decision tree (a blank tree is provided below), and compute EVPI, EVSI, and the efficiency of sample information.
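EVPI for the FEMA problem needs only the payoff table and the prior probabilities. The sketch below uses the standard textbook definition: the expected value with perfect information (choose the best alternative for each state, then weight by the priors) minus the best expected value without any information. The function names are hypothetical helpers introduced for illustration.

```python
# Prior probabilities and savings (payoffs) from the Problem 2 table.
PRIORS = {"Weak": 0.4, "Strong": 0.6}
PAYOFFS = {
    "High Preparation": {"Weak": -9, "Strong": 26},
    "Medium Preparation": {"Weak": 10, "Strong": 16},
}


def ev_without_information(payoffs, probs):
    """Best expected payoff when the decision is made on the priors alone."""
    return max(
        sum(probs[state] * value for state, value in row.items())
        for row in payoffs.values()
    )


def ev_with_perfect_information(payoffs, probs):
    """Expected payoff if the state were known before deciding."""
    return sum(
        prob * max(row[state] for row in payoffs.values())
        for state, prob in probs.items()
    )


def evpi(payoffs, probs):
    """Expected value of perfect information."""
    return ev_with_perfect_information(payoffs, probs) - ev_without_information(
        payoffs, probs
    )
```

For these inputs the best prior expected value is 13.6 (Medium Preparation), the expected value with perfect information is 19.6, and so EVPI is 6.0. EVPI is an upper bound on what any report could be worth, which is why it serves as the denominator in the efficiency of sample information.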
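Bayes' Theorem

[Solved prior decision tree: High Preparation, with Weak (0.4) paying -9 and Strong (0.6) paying 26, has expected value 12. Medium Preparation, with Weak (0.4) paying 10 and Strong (0.6) paying 16, has expected value 13.6. Selected branch: 2 (Medium Preparation), expected value 13.6.]

Encouraging = ____
Discouraging = ____
Weak = ____
Strong = ____

RELIABILITIES (report labels: Encouraging, Discouraging; remaining cells blank): Weak 0.60, Strong 0.70

PRIOR PROBABILITIES

| Weak | Strong |
|---|---|
| 0.40 | 0.60 |

JOINT & MARGINAL PROBABILITIES

| | Weak | Strong | |
|---|---|---|---|
| Encouraging | | | |
| Discouraging | | | |

POSTERIOR PROBABILITIES

| | Weak | Strong |
|---|---|---|
| Encouraging | | |
| Discouraging | | |

[Blank revised decision tree: the report branches into Encouraging (probability = ____) and Discouraging (probability = ____). Under each report, the decision is High Preparation (Weak -9, Strong 26) or Medium Preparation (Weak 10, Strong 16), with the posterior probabilities left blank.]

EVSI = (maximum possible expected return with sample information) - (maximum possible expected return without sample information) = ____

EVPI = ____

Efficiency of sample information = EVSI / EVPI = ____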
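The worksheet steps above (joint and marginal probabilities, posteriors, revised tree, EVSI, efficiency) can be sketched in a few lines. One caution: the flattened reliabilities table does not show which report column each value belongs to. The code assumes P(Encouraging | Weak) = 0.60 and P(Discouraging | Strong) = 0.70; if the original table places them differently, swap the entries in `LIKELIHOODS`. All function names are hypothetical helpers introduced for illustration.

```python
PRIORS = {"Weak": 0.4, "Strong": 0.6}
PAYOFFS = {
    "High Preparation": {"Weak": -9, "Strong": 26},
    "Medium Preparation": {"Weak": 10, "Strong": 16},
}
# ASSUMPTION: 0.60 = P(Encouraging | Weak), 0.70 = P(Discouraging | Strong).
# The complements fill the remaining cells of each row.
LIKELIHOODS = {
    "Weak": {"Encouraging": 0.60, "Discouraging": 0.40},
    "Strong": {"Encouraging": 0.30, "Discouraging": 0.70},
}


def revise(priors, likelihoods):
    """Bayes' theorem: return (marginal P(report), posterior P(state | report))."""
    reports = list(next(iter(likelihoods.values())))
    marginals, posteriors = {}, {}
    for report in reports:
        joint = {s: priors[s] * likelihoods[s][report] for s in priors}
        marginals[report] = sum(joint.values())
        posteriors[report] = {s: j / marginals[report] for s, j in joint.items()}
    return marginals, posteriors


def best_ev(payoffs, probs):
    """Highest expected payoff over the alternatives for the given probabilities."""
    return max(
        sum(probs[s] * v for s, v in row.items()) for row in payoffs.values()
    )


def evsi(payoffs, priors, likelihoods):
    """Expected return with sample information minus expected return without it."""
    marginals, posteriors = revise(priors, likelihoods)
    with_sample = sum(
        marginals[r] * best_ev(payoffs, posteriors[r]) for r in marginals
    )
    return with_sample - best_ev(payoffs, priors)


def evpi(payoffs, priors):
    """Expected return with perfect information minus expected return without it."""
    perfect = sum(
        p * max(row[s] for row in payoffs.values()) for s, p in priors.items()
    )
    return perfect - best_ev(payoffs, priors)
```

Under that assumed reading of the reliabilities, P(Encouraging) = 0.42 and P(Discouraging) = 0.58, EVSI comes to 1.16, and the efficiency `evsi(...) / evpi(...)` is about 0.19. A different placement of 0.60 and 0.70 would change all of these figures, so treat them as a check on the method, not as the answer key.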