252y0333

advertisement

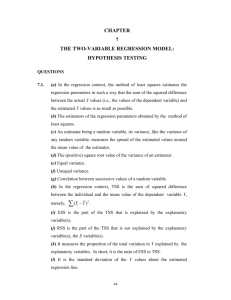

252y0333 11/25/03 (Page layout view!) ECO252 QBA2 THIRD HOUR EXAM Nov 25, 2003 Name KEY Hour of Class Registered (Circle) I. (30+ points) Do all the following (2points each unless noted otherwise). TABLE 11-0 Shiffler and Adams present the partially complete ANOVA table below that resulted from the analysis of a problem with 3 rows and 3 columns. ANOVA Source of Variation SS Columns 18 Rows 40 df MS F F Interaction Within (Error) 208 Total 296 62 1. Complete the table. Assume a 5% significance level. You may not be able to get exactly the degrees of freedom you are looking for, but you should be able to come close. (4) Solution: The SS column must add up, which accounts for the 30. For c columns the DF must be c 1 2 . For r rows, DF = r 1 2 . For interaction, DF = r 1c 1 22 4. DF for within is 62 – 2 – 2 – 4 =54. MS column is SS column divided by df column. F column is MS column divided by MSW 3.852 . F is from F table. ANOVA F Source of Variation SS df MS F Columns Rows Interaction Within (Error) 18 40 30 208 2 2 4 54 9 20 7.5 3.852 2.336ns 5.192 s 1.947ns 3.17 3.17 2.54 Total 296 62 2. Is there significant interaction? Explain your answer. Solution: Since the computed F is less than the table F, we do not reject the null hypothesis of no interaction – this is shown by ns for ‘not significant,’ though I need to know why it is not significant. Remember SS and df columns must add up. 252y0333 11/25/03 TABLE 13-6 The following Minitab table (with many parts deleted) was obtained when "Score received on an exam (measured in percentage points)" (Y) is regressed on "percentage attendance" (X) for 22 students in a Statistics for Business and Economics course. Regression Analysis: Orders versus Weight The regression equation is Score = …… + ….. Attendance Predictor Constant Attendance Coef 39.3927 0.34058 S = 20.2598 SE Coef 37.2435 0.52852 R-Sq = 2.034% T 1.0576 0.6444 P 0.3028 0.5266 R-Sq(adj) = -2.864% Analysis of Variance Source Regression Residual Error Total DF 1 20 21 SS MS F P 0.523 3. Referring to Table 13-6, which of the following statements is true? a) -2.86% of the total variability in score received can be explained by percentage attendance. b) -2.86% of the total variability in percentage attendance can be explained by score received. c) *2% of the total variability in score received can be explained by percentage attendance. d) 2% of the total variability in percentage attendance can be explained by score received. Explanation: R 2 is the total variability in Y that is explained by the regression line. 4. Referring to Table 13-6, which of the following statements is true? a) If attendance increases by 0.341%, the estimated average score received will increase by 1 percentage point. b) If attendance increases by 1%, the estimated average score received will increase by 39.39 percentage points. c) *If attendance increases by 1%, the estimated average score received will increase by 0.341 percentage points. d) If the score received increases by 39.39%, the estimated average attendance will go up by 1%. Explanation: The equation is Score 39.3927 .34058 Attendance . If attendance goes up by 1, Score goes up by .34058. 2 252y0333 11/25/03 5. (Text CD problem 12.51)The manager of a commercial mortgage department has collected data over 104 weeks concerning the number of mortgages approved. The data is the x and O columns below ( x is the number of mortgages approved and O is the number of weeks that happened, for example there were 32 weeks in which 2 mortgages were approved) and the problem asks if it follows a Poisson distribution. x Row O E 1 2 3 4 5 6 7 8 9 10 11 12 13 0 1 2 3 4 5 6 7 8 9 10 11 12 13 25 32 17 9 6 1 1 0 0 0 0 0 12.7355 26.7445 28.0817 19.6572 10.3200 4.3344 1.5170 0.4551 0.1195 0.0279 0.0059 0.0011 0.0002 Since we have no guide as to what the parameter of the distribution is, the x and O columns were multiplied together to tell us that there were 219 mortgages approved over 104 weeks to give us an average of 2.1 mortgages per week. The E above is the computer – generated Poisson distribution multiplied by 104 . In a Kolmogorov – Smirnov procedure we make the O and E into cumulative distributions and compare them as is done below. Row Fo Fe D 1 2 3 4 5 6 7 8 9 10 11 12 13 0.12500 0.36538 0.67308 0.83654 0.92308 0.98077 0.99038 1.00000 1.00000 1.00000 1.00000 1.00000 1.00000 0.12246 0.37962 0.64963 0.83864 0.93787 0.97955 0.99414 0.99851 0.99966 0.99993 0.99999 1.00000 1.00000 0.0025435 0.0142304 0.0234453 0.0021047 0.0147973 0.0012180 0.0037536 0.0014857 0.0003369 0.0000689 0.0000126 0.0000019 0.0000000 Assume this is correct and explain how you would finish this analysis and why you would or would not reject the null hypothesis. (4) Solution: The null hypotheses is H 0 : Poisson . n O 104 . We use the K-S table and find that the critical value for the maximum 1.36 1.36 0.1334 . since the maximum of the D column is less than the n 104 critical value, we do not reject the null hypothesis. discrepancy is 6. Referring to the previous problem, a more direct method of comparing the observed and expected data is below. Answer the following questions. a) What method is being used? (1) Answer: Chi-squared. b) How many degrees of freedom do we have? (1) Answer: Normally, DF would be the number of rows minus 1, but here we have estimated a parameter from the data and lost a degree of freedom. 7 – 1 – 1 = 5. 252y0333 11/25/03 3 c) Why are the columns shorter here than in Problem 5? (1) Answer: The text says that E cannot be below 1. The E s are that are below 1 and the corresponding O s are added together to form a new 7th line. d) Do we reject our null hypothesis? Why? (3) Solution: To get our computed chi – 2 squared, take the O column and subtract n 104 . The result, 1.8415, must be E compared with the 5% value of chi-squared from the table. The value from the table is 11.00705, so we do not reject the null hypothesis. O2 O E E Row 1 2 3 4 5 6 7 13 25 32 17 9 6 2 104 12.7355 26.7445 28.0817 19.6572 10.3200 4.3344 2.1267 104.0000 13.2700 23.3693 36.4650 14.7020 7.8488 8.3056 1.8808 105.8415 7. In problems 5 and 6, one of the methods was used improperly. Why? Answer: The K-S method was wrong because we did not completely know the distribution we were testing for and had to estimate the mean from the data. 8. Random samples of salaries (in thousands) for lawyers in 3 cities are presented by Dummeldinger. They are repeated in the three left columns. 1 2 3 4 5 6 7 Atlanta 45.5 47.9 43.1 42.0 49.0 52.0 39.0 DC 41.5 40.1 39.0 56.5 37.0 49.0 43.0 LA 52.0 72.0 41.0 54.0 33.0 42.0 50.0 rank-At 12.0 13.0 11.0 8.5 14.5 17.5 3.5 80.0 rank-DC 7.0 5.0 3.5 20.0 2.0 14.5 10.0 62.0 rank-LA 17.5 21.0 6.0 19.0 1.0 8.5 16.0 89.0 You are asked to analyze them, which you do using a Kruskal – Wallis procedure. You are aware that the tables you have are only appropriate for columns with 5 or fewer items in them, so you drop the last two items in each column and after ranking the items from 1 to 15 get a Kruskal – Wallis H of 1.82. If you use the tables, What did you test and what is the conclusion? (3) Answer: The table gives a number of p-values, but none for 1.82. However, it should be plain from the values given, that 1.82 has a p-value above .102 We cannot reject the null hypothesis, which is equal medians, because the p-value is above any significance level we are likely to use. Another way to do this is to note from the Friedman table that the p-value for H 5.66 is .057 and the p-value for H 5.78 is .049, so that H .05 5.8. Since 1.83 is cousiderably below 5.8, do not reject the null hypothesis. 9. You remember how to work with column sizes that are too large for the table. You rank the data as appears in the three right columns above. Compute the Kruskal – Wallis H and use it to test your null hypothesis at the 5% significance level.(3) 4 252y0333 11/25/03 12 SRi 2 3n 1 Solution: Compute the Kruskal-Wallis statistic H nn 1 i ni 12 80 2 62 2 89 2 322 .02597 18165 66 1.4025 . Since the 5% chi-square 7 7 7 2122 7 for 2 degrees of freedom is 5.9915, we do not reject our null hypothesis. Note: In spite of my warnings, most of you changed the 12 to something else, most likely n . If this worked the formula 1 would be H ? n 1 SRi 2 3n 1 . Unless I was lying, this is not the case. n i i 10. The Kruskal – Wallis test above was done on the assumption that the underlying data did not follow the Normal distribution. Let’s assume that you found out that the underlying distributions were Normal and had a common variance. The method to use would be. a) Friedman Test b) Chi – squared test. c) *One way ANOVA d) Two – way ANOVA TABLE 13-8 The regression equation is GPA = 0.5681 + .1021 ACT Predictor Constant ACT S = 0.2691 Coef .5681 .1021 SE Coef 0.9284 0.0356 R-Sq = 0.5774% T 0.6119 2.8633 P 0.5630 0.0286 R-Sq(adj) = 0.5069% Analysis of Variance Source Regression Residual Error Total DF 1 6 7 SS 0.5940 0.4347 1.0287 MS 0.5940 0.0724 F 8.1986 P .0287 It is believed that GPA (grade point average, based on a four point scale) should have a positive linear relationship with ACT scores. Given below is the Excel output from regressing GPA on ACT scores using a data set of 8 randomly chosen students from a Big Ten university. 11. Referring to Table 13-8, the interpretation of the coefficient of determination in this regression is that a) *57.74% of the total variation of ACT scores can be explained by GPA. b) ACT scores account for 57.74% of the total fluctuation in GPA. c) GPA accounts for 57.74% of the variability of ACT scores. d) *none of the above (error) 12. Referring to Table 13-8, the value of the measured test statistic to test whether there is any linear relationship between GPA and ACT is a) 0.0356. b) 0.1021. c) 0.7598. d) *2.8633. The t statistic. 5 252y0333 11/25/03 13. Referring to Table 13-8, what is the predicted average value of GPA when ACT = 20? a) *2.61 .5681 + .1021(20) = 2.6101 b) 2.66 c) 2.80 d) 3.12 14. Referring to Table 13-8, what are the decision and conclusion on testing whether there is any linear relationship at the 1% level of significance between GPA and ACT scores? a) *Do not reject the null hypothesis; hence, there is not sufficient evidence to show that ACT scores and GPA are linearly related. b) Reject the null hypothesis; hence, there is not sufficient evidence to show that ACT scores and GPA are linearly related. c) Do not reject the null hypothesis; hence, there is sufficient evidence to show that ACT scores and GPA are linearly related. d) Reject the null hypothesis; hence, there is sufficient evidence to show that ACT scores and GPA are linearly related. Answer: The p-value is above the significance level, so do not reject the null hypothesis. The null hypothesis is usually that a relationship is not significant. 6 252y0333 11/25/03 ECO252 QBA2 Third EXAM Nov 25 2003 TAKE HOME SECTION Name: _________________________ Social Security Number: _________________________ Please Note: computer problems 2 and 3 should be turned in with the exam. In problem 2, the 2 way ANOVA table should be completed. The three F tests should be done with a 5% significance level and you should note whether there was (i) a significant difference between drivers, (ii) a significant difference between cars and (iii) significant interaction. In problem 3, you should show on your third graph where the regression line is. II. Do the following: (23+ points). Assume a 5% significance level. Show your work! 1. Assume that each column below represents a random sample of sales of the popular cereal brand, ‘Whee!’ As it was moved from shelf 1 (lowest) to shelf 4 (highest) of a group of supermarkets. Assume that the underlying distribution is Normal and test the hypothesis 1 2 3 4 . a) Before you start add the second to last digit of your social security number to the 451 in column 4 and find the sample variance of sales from shelf 4. For example, Seymour Butz’s SS number is 123456789 and he will change 451 to 459. This should not change the results by much. (2) b) Test the hypothesis (6) Show your work – it is legitimate to check your results by running these problems on the computer, but I expect to see hand computations for every part of them. c) Compare means two by two, using any one appropriate statistical method, to find out which shelves are significantly better than others. (3) d) (Extra Credit) What if you found out that each row represented one store? If this changes your analysis, redo the analysis. (5) e) (Extra Credit) What if you found out that each row represented one store and that the underlying distribution was not Normal? If this changes your analysis, redo the analysis. (5) f) I did some subsequent analysis on theist problem. The output, in part said Levene's Test (any continuous distribution) Test Statistic: 0.609 P-Value : 0.613 What was I testing for and what should my conclusion be? (2) Row 1 1 2 3 4 5 6 7 8 9 10 336 417 208 420 366 227 357 353 518 388 Sales of ‘Whee’ Cereal Shelf 2 3 4 440 277 374 421 481 349 328 449 462 373 464 479 492 456 338 413 383 554 497 510 354 423 321 424 518 451 311 462 339 202 Sum of Sum of 1362860 Sum of Sum of 1602366 Sum of Sum of 2140264 shelf 1 = 3590.0 squares of shelf 1 = shelf 2 = 3954.0 squares of shelf 2 = shelf 3 = 4586.0 squares of shelf 3 = 7 252y0333 11/25/03 The original data is presented in my format for 1-way ANOVA sum x1 x2 x3 x4 1 2 3 4 5 6 7 8 9 10 Sum nj 336 417 208 420 366 227 357 353 518 388 3590 10 440 277 374 421 481 349 328 449 462 373 +3954 +10 464 479 492 456 338 413 383 554 497 510 +4586 +10 354 423 321 424 518 451 311 462 339 202 + 3805 +10 x j 359.0 395.4 458.6 380.5 SS 13628460 + 1602366 +2140264 +1524677 x 2j 398.375 x =6630167 Most of this line was done for you. 128881 +156341.16+210313.96+144780.25 =640316.37 x 15935 , From the above x =15935 40 398.375 6630167 1602754.8125 = 40 n 40 , x 15935 398 .375 . n SST 2 ij 6630167 , x 2 i. 6630167 , and 40 x x x 2 ij n x 6630167 40 398 .375 2 6630167 6348105 .625 282061 .375 . 2 1524677 10 380 .52 8541 .6111 n 1 9 b) We are testing H o : 1 2 3 4 a) s 2 SSB 2 nx 2 n 2 j x j n x 10 640316 .37 40 398 .375 2 6403163 .7 6348105 .625 55058 .075 2 ANOVA Source of Variation SS df Between Within (Error) 55058 227003 3 36 Total 282061 39 MS 18353 6306 F F 3,36 2.91s F.05 2.87 Since Our computed F exceeds the table F, we reject the null hypothesis. 8 252y0333 11/25/03 c) Recall the following from the outline. Scheffe’ Confidence Interval: If we desire intervals that will simultaneously be valid for a given confidence level for all possible intervals between column means, use 1 1 . 1 2 x1 x2 m 1Fm 1, n m s n n2 1 Tukey Confidence Interval: This also applies to all possible differences. 1 2 x1 x2 q m,n m s 2 1 1 . This gives rise to Tukey’s HSD (Honestly Significant n1 n 2 Difference) procedure. Two sample means x .1 and x .2 are significantly different if x.1 x.2 is greater than 1 2 qm, n m s 2 1 1 n1 n2 From the solution to grass 3, If you used a Scheffe’ interval, there are m 4 columns. The error side is 1 1 1 1 32.87 6306 104 .206 n n 10 10 1 2 For the Tukey comparison, we have an error side with q m, n m q 4,36 .05 3.81 . m 1Fm1,nm s 1 1 6306 1 1 3.81 95 .676 2 10 10 2 n1 n 2 We have the following differences x.1 x.2 359 .0 395 .4 36.4 x.2 x.3 395 .4 458 .6 63 .2 q m, n m s x.1 x.3 359 .0 458 .6 99.6 x.2 x.4 395 .4 380 .5 14.90 x.1 x.4 359 .0 380 .5 21.5 x.1 x.4 359 .0 380 .5 21.5 For the Tukey comparison only, x.1 x.3 359 .0 458 .6 99.6 is larger than 95.676, so we say that there is a significant difference between those shelves. The more conservative Scheffe interval gives us nothing. d) 2-way ANOVA You have already done SSC. x3 x4 x 440 277 374 421 481 349 328 449 462 373 +3954 +10 464 479 492 456 338 413 383 554 497 510 +4586 +10 354 423 321 424 518 451 311 462 339 202 + 3805 +10 1594 1596 1395 1721 1703 1440 1379 1818 1816 1473 =15935 = 40 395.4 458.6 380.5 x1 x2 nj 336 417 208 420 366 227 357 353 518 388 3590 10 x j 359.0 1 2 3 4 5 6 7 8 9 10 Sum SS 13628460 + 1602366 +2140264 +1524677 x 2j i.. ni x i. 4 398.50 4 399.00 4 348.75 4 430.25 4 425.75 4 360.00 4 344.75 4 454.50 4 454.00 4 398.25 40 398.375 SS 647108 658988 528245 741353 747885 547300 478443 846570 843898 590577 6630167 x i2. 158802.2500 159201.0000 121626.5625 185115.0625 181263.0625 129600.0000 118852.5625 206570.2500 206116.0000 135608.0625 1602754.8125 398.375 x =6630167 128881 +156341.16+210313.96+144780.25 =640316.37 9 252y0333 11/25/03 x 15935 , n 40 , x 6630167 , x x 15935 398 .375 . 640316 .37 and x 2 ij From the above x 2 .j n 2 i. 6630167 40 n x 6630167 40398 .375 6630167 6348105 .625 282061 .375 SSC n x n x 10 640316 .37 40 398 .375 6403163 .7 6348105 .625 55058 .075 SSR n x n x 41602754 .8125 40 398 .375 6411019 6348105 62914 SST 2 x ij2 2 j j 2 i i. 2 2 2 2 2 ANOVA Source of Variation SS Columns (shelves) df MS F 55058 3 18353 Rows (stores) Within (Error) 62914 164090 9 27 6990 6077 Total 282061 39 F 3, 27 3.02s F.05 2.96 9, 27 1.15ns F.05 2.25 The null hypotheses are no significant difference between store means and no significant difference between shelf means. We reject the hypothesis that store means are equal. e) In general if the parent distribution is Normal use ANOVA, if it's not Normal, use Friedman or Kruskal-Wallis. If the samples are independent random samples use 1-way ANOVA or Kruskal Wallis. If they are cross-classified, use Friedman or 2-way ANOVA. So the other method that allows for cross-classification is Friedman and we use it if the underlying distribution is not Normal. The null hypothesis is H 0 : Columns from same distribution or H 0 : 1 2 3 4 . We use a Friedman test because the data is cross-classified by store. This time we rank our data only within rows. There are c 4 columns and r 10 rows. x1 x2 x3 x4 r1 r2 r3 r4 1 2 3 4 5 6 7 8 9 10 Sum 336 417 208 420 366 227 357 353 518 388 3590 440 277 374 421 481 349 328 449 462 373 +3954 464 479 492 456 338 413 383 554 497 510 +4586 354 423 321 424 518 451 311 462 339 202 + 3805 1 2 1 1 2 1 3 1 4 3 19 3 1 3 2 3 2 2 2 2 2 22 4 4 4 4 1 3 4 4 3 4 35 2 3 2 3 4 4 1 3 1 1 24 To check the ranking, note that the sum of the three rank sums is 19 + 22 + 35 +24 = 100, and that rcc 1 10 45 SRi 100 . the sum of the rank sums should be 2 2 10 252y0333 11/25/03 12 Now compute the Friedman statistic F2 rc c 1 SR 3r c 1 2 i i 12 19 2 22 2 35 2 24 2 310 5 0.06 361 484 1225 576 250 158 .76 150 8.76 10 45 . If we check the Friedman Table for c 4 and r 10 , we find that the problem is too large for the table. So we look up the 5% value of 2 with c 1 3 degrees of freedom.. It is 7.8147. Since the computed statistic exceeds that value we reject the null hypothesis. f) The Levene Test is a test for equality of variances. A high p-value indicates that the null hypothesis of the columns’ coming from populations with equal variance cannot be rejected. Your Solution: Go to the document 252y0333TH pp 2-32 and find Results for: 2x0333-0j, where j is the second to last digit of your SS number. The first things that appear are the sums and sums of squares for all the columns, followed by a printout of column 4. The one-way ANOVA had to be run twice, the first time in the form that you would use and the second time in the form that you used in Computer Assignment 2. The second version prints out Tukey confidence intervals. Minitab then prints the results of the 2-way ANOVA and the Friedman test. 11 252y0333 11/25/03 2. A company, operating in 12 regions, gives us its advertising expenses as a percent of those of its leading competitor, and its sales as a percent of those of its leading competitor. Row 1 2 3 4 5 6 7 8 9 10 11 12 Ad 77 110 110 93 90 95 100 85 96 83 100 95 Sales 85 103 102 109 85 103 110 86 92 87 98 108 Sum Sum Sum Sum of of of of Ad = 1134.0 squares of Ad = 108258 Sales = 1168.0 squares of Sales = 114750 Note that the sum and sum of squares of sales can’t be used directly, but they should help you to get the corrected numbers. Change the 103 in the ‘sales’ column by adding the second-to-last digit of your Social Security number to it. For example, Seymour Butz’s SS number is 123456789 and he will change 103 to 112. This should not change the results by much. The question is whether our relative advertising expenses affect our relative sales, so ‘Sales’ should be your dependent variable and ‘Ad’ should be your independent variable. Show your work – it is legitimate to check your results by running the problem on the computer, but I expect to see hand computations that show clearly where you got your numbers for every part of this problem. a. Compute the regression equation Y b0 b1 x to predict the ‘Sales’ on the basis of ‘Ad’. (2) b. Compute R 2 . (2) c. Compute s e . (2) d. Compute s b0 and do a significance test on b0 (2) e. Do an ANOVA table for the regression. What conclusion can you draw from this table about the relationship between advertising expenditures and sales? Why? (2) e. It is proposed to raise our expenditures to 110% of our competitors’ in every region. Use this to find a predicted value for sales and to create a confidence interval for sales. Explain the difference between this and a prediction interval and when the prediction interval would be more useful. (3) 12 252y0333 11/25/03 Solution: Working with the original data, we get the following table. The x and x 2 columns and their sums were not actually needed since they were done for you. Row Ad i x Sales crprice y x2 xy y2 1 2 3 4 5 6 7 8 9 10 11 12 77 110 110 93 90 95 100 85 96 83 100 95 1134 85 103 102 109 85 103 110 86 92 87 98 108 1168 7225 10609 10404 11881 7225 10609 12100 7396 8464 7569 9604 11664 108258 6545 11330 11220 10137 7650 9785 11000 7310 8832 7221 9800 10260 111090 5929 12100 12100 8649 8100 9025 10000 7225 9216 6889 10000 9025 124750 x 1134 , y 1168 , x n 12 , 2 108258 , Spare Parts Computation: x y 111090 and y 114750 . SSx x 2 nx 2 108258 1294.52 2 1095 .00 x x 1134 94.5 n Sxy 714 .00 12 y 1168 97.3333 y n a) b1 SSy y 2 ny 114750 12 97 .3333 2 2 1064 .6667 SST 12 Sxy SSx xy nx y 111090 1294.597.3333 xy nxy 714 0.652055 x nx 1095 2 2 b0 y b1 x 97.3333 0.652055 94.5 35.7141 So Y b b x becomes. Yˆ 35.7141 0.6521 x 0 1 SSR 465 xy nxy 0.6521 714 .00 465 .60 So R SST .4368 1064 .6667 xy nxy Sxy 714 .4372 SSxSSy x nx y ny 1095 1064 .6667 b) SSR b1 Sxy b1 2 2 2 R 2 2 2 2 2 2 c) d) SSE SST SSR 1064 .6667 465 599 .6667 1 R SST 1 R y 2 2 or s e2 n2 ny 2 n2 So s e 59 .9194 7.7408 1 d) s b20 s e2 n 2 ( s e2 s e2 SSE 599 .6667 59 .96667 n2 10 1 .4372 1064 .6667 59.9194 10 is always positive!) 2 59 .9194 1 94 .5 12 1095 X 2 nX 2 X2 or 59 .9194 .01077 8.15548 489 .69 sb0 489.69 22.12 . Formulas for s b0 and s b1 appear in the outline. 13 252y0333 11/25/03 H 0 : 0 00 b 00 The outline says that to test use t 0 . Remember 00 is most often zero – and if H : sb0 00 1 0 the null hypothesis is false, we say that 1 is significant. So t b0 00 35 .7141 1.615 . sb0 22 .12 Make a diagram of an almost Normal curve with zero in the middle and, if .05 , the rejection zones are above t n2 t 10 2.228 and below t n2 t 10 2.228 . Since our computed t-ratio is, at 2 .025 .025 2 1.615, nor in a rejection zone, we do not reject the null hypothesis that the coefficient is not significantly different from zero and we say that b1 is not significant. e) Note that the F test is the equivalent of a test on b1 Source SS DF Regression Error (Within) Total MS 465.0000 1 465.00 599.6667 1064.6667 10 11 59.97 F 7.75 F.05 F 1,10 4.96 s f) Our equation says that Yˆ 35.7141 0.6521 x , so, if x 110 , Yˆ 35.7141 0.6521110 107.45 . the Confidence Interval is Yˆ t s , where Y0 1 . sY2ˆ s e2 n X 0 X 2 X 2 nX 2 the confidence interval is Y0 Yˆ 0 2 59 .9194 1 110 94 .5 18 .140 s 18.140 4.259 , so that Yˆ 12 1095 Yˆ t s ˆ 107 .45 2.228 4.259 107 .45 9.49 or 97.96 to 116.94. 0 Y The confidence interval for Y gives an average value for many areas in which the ad budget price was 110, so it is not appropriate for prediction sales in one region. However, since the firm is making the ad budget uniform over all areas, it may be quite appropriate for projecting total sales. -------------------------------------------------------------See next page. 14 252y0333 11/25/03 Minitab output follows. Results for: 2x0333-10.mtw MTB > Name c15 = 'CLIM5' c16 = 'CLIM6' c17 = 'PLIM5' c18 = 'PLIM6' MTB > Regress c2 1 c1; SUBC> Constant; SUBC> Predict c6; SUBC> CLimits 'CLIM5'-'CLIM6'; SUBC> PLimits 'PLIM5'-'PLIM6'; SUBC> Brief 2. Regression Analysis: Sales versus Ad The regression equation is Sales = 35.7 + 0.652 Ad Predictor Constant Ad Coef 35.71 0.6521 S = 7.740 SE Coef 22.22 0.2339 R-Sq = 43.7% T 1.61 2.79 P 0.139 0.019 R-Sq(adj) = 38.1% Analysis of Variance Source Regression Residual Error Total DF 1 10 11 SS 465.57 599.10 1064.67 MS 465.57 59.91 F 7.77 P 0.019 Predicted Values for New Observations New Obs 1 Fit 107.44 SE Fit 4.26 95.0% CI 97.95, 116.93) ( ( 95.0% PI 87.76, 127.12) Values of Predictors for New Observations New Obs 1 Ad 110 Your Solution: Go to the document 252y0333TH pp 32-44 and find Results for: 2x0333-1j, where j is the second to last digit of your SS number. The first things that appear are the results of the regression with the correct prediction and confidence intervals. The next regression printout is wrong but useful. It computes the regression with sales as the independent variable (X) and Ad as the dependent variable (Y). This routine is set up to compute all the columns and column sums that you needed. However, because of the way the data was arranged, it has things reversed. x , sumx y , smysq x 2 , smxy x y , smxsq y2 , So n = n , sumy ybar x , xbar y , SSy y 2 SSy SSx x 2 nx , Sxy Sxy 2 xy nx y and SSx 2 ny SST . Sorry about that, but the alternative was a load of new programming. 15