Entropy is:


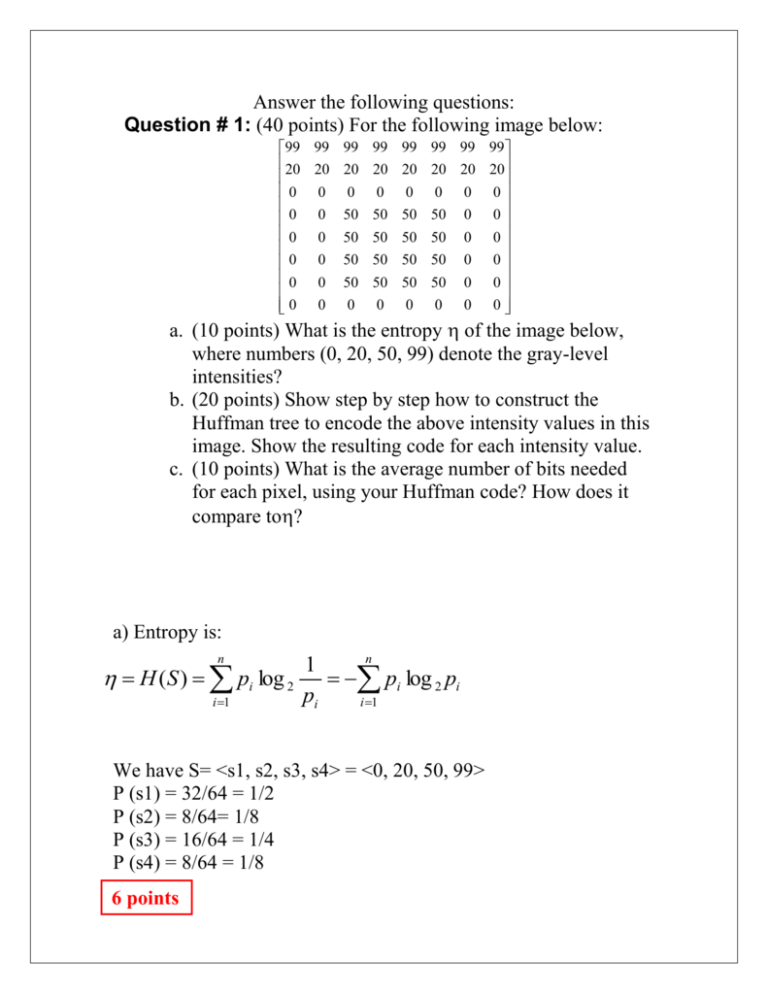

Answer the following questions:

Question # 1: (40 points) For the image below:

99 99 99 99 99 99 99 99

20 20 20 20 20 20 20 20

0 0 0 0 0 0 0 0

0 0 50 50 50 50 0 0

0 0 50 50 50 50 0 0

0 0 50 50 50 50 0 0

0 0 50 50 50 50 0 0

0 0 0 0 0 0 0 0

a. (10 points) What is the entropy of the image above, where the numbers (0, 20, 50, 99) denote the gray-level intensities?

b. (20 points) Show step by step how to construct the

Huffman tree to encode the above intensity values in this

image. Show the resulting code for each intensity value.

c. (10 points) What is the average number of bits needed for each pixel, using your Huffman code? How does it compare to the entropy from part (a)?

a) Entropy is:

$$H(S) = \sum_{i=1}^{n} p_i \log_2 \frac{1}{p_i} = -\sum_{i=1}^{n} p_i \log_2 p_i$$

We have S= <s1, s2, s3, s4> = <0, 20, 50, 99>

P (s1) = 32/64 = 1/2

P (s2) = 8/64= 1/8

P (s3) = 16/64 = 1/4

P (s4) = 8/64 = 1/8

6 points

So Entropy = − [½ log₂(½) + 1/8 log₂(1/8) + ¼ log₂(¼) + 1/8 log₂(1/8)]

= − [−1/2 − 3/8 − 1/2 − 3/8] = 14/8 = 1.75 bits per pixel

4 points
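The calculation can be checked numerically. The following is a minimal sketch, not part of the original solution: the names `image` and `image_entropy` are illustrative, and the grid is simply the 8×8 image from the question typed in by hand.

```python
from collections import Counter
from math import log2

# The 8x8 image from Question 1, one list per row.
image = [
    [99] * 8,
    [20] * 8,
    [0] * 8,
    [0, 0, 50, 50, 50, 50, 0, 0],
    [0, 0, 50, 50, 50, 50, 0, 0],
    [0, 0, 50, 50, 50, 50, 0, 0],
    [0, 0, 50, 50, 50, 50, 0, 0],
    [0] * 8,
]

def image_entropy(rows):
    """Return (probabilities, entropy in bits) for a grid of gray levels."""
    counts = Counter(pixel for row in rows for pixel in row)
    total = sum(counts.values())
    probs = {level: n / total for level, n in counts.items()}
    # H(S) = sum over levels of p * log2(1 / p)
    entropy = sum(p * log2(1 / p) for p in probs.values())
    return probs, entropy

probs, entropy = image_entropy(image)
print(probs)    # probability of each gray level
print(entropy)  # entropy in bits per pixel
```

Counting pixels directly, rather than typing in the probabilities, also confirms the counts 32, 8, 16 and 8 used above.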

b) We will consider the probabilities as the frequencies of the

values

| Value | Freq |
|---|---|
| 20 | 1/8 |
| 99 | 1/8 |
| 50 | 1/4 |
| 0 | 1/2 |

Then we create separate nodes for each of the values above with

their frequencies.

20 [1/8], 99 [1/8], 50 [1/4], 0 [1/2]

2 points

Then we combine the least frequent nodes, 20 and 99 and name it

as result (1) and this should be inserted back in the forest of values

shown above.

result (1) [1/4], with children 20 [1/8] and 99 [1/8]

2 points

Then we combine value 50 and result (1) and name it as result (2)

and we insert it back to the forest in which we have only value 0

remained.

result (2) [1/2], with children 50 [1/4] and result (1) [1/4]

result (1) [1/4], with children 20 [1/8] and 99 [1/8]

4 points

Then we combine value 0 and result (2) and name it as result (3)

and by this we have the tree with all values combined

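result (3) [1], with children 0 [1/2] and result (2) [1/2]

result (2) [1/2], with children 50 [1/4] and result (1) [1/4]

result (1) [1/4], with children 20 [1/8] and 99 [1/8]

4 points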

Then we add the codes 0 and 1 on the edges so that any edge

linking a parent with its left child will take 0 and any edge that is

linking the parent with its right child will take 1.

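Root [1]: edge 1 leads to value 0 [1/2]; edge 0 leads to result (2) [1/2].

Result (2) [1/2]: edge 0 leads to value 50 [1/4]; edge 1 leads to result (1) [1/4].

Result (1) [1/4]: edge 0 leads to value 20 [1/8]; edge 1 leads to value 99 [1/8].

4 points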

So the codes for the intensity values are as follows:

intensity 0 → 1, intensity 50 → 00, intensity 20 → 010, intensity 99 → 011

4 points
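The merging procedure described above (repeatedly combine the two least frequent nodes and put the result back into the forest) can be sketched with a priority queue. This is an illustrative sketch, not the solution's own method: the function name `huffman_code_lengths` and the use of pixel counts instead of probabilities are assumptions. It reports only code lengths, because the exact 0/1 labels depend on how ties and left/right placement are resolved.

```python
import heapq
from itertools import count

def huffman_code_lengths(freqs):
    """Return {symbol: code length} for a Huffman code built from freqs."""
    tiebreak = count()  # unique counter so tied weights never compare dicts
    # Each heap entry is (weight, tiebreak, {symbol: depth so far}).
    heap = [(w, next(tiebreak), {sym: 0}) for sym, w in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)    # least frequent node
        w2, _, right = heapq.heappop(heap)   # second least frequent node
        # Merging pushes every symbol in both subtrees one level deeper.
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (w1 + w2, next(tiebreak), merged))
    return heap[0][2]

# Pixel counts of the image in Question 1 (proportional to the probabilities).
pixel_counts = {0: 32, 20: 8, 50: 16, 99: 8}
lengths = huffman_code_lengths(pixel_counts)
print(lengths)
```

The lengths agree with the codes above: one bit for intensity 0, two bits for 50, and three bits each for 20 and 99.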

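c) The average number of bits needed per pixel can be calculated as follows:

∑ (Number of bits needed for a value) * (Probability of the value)

= 1(1/2) + 2(1/4) + 3(1/8) + 3(1/8) = ½ + ½ + 3/8 + 3/8 = 14/8 = 1.75 bits per pixel

This is the same as the entropy, because every probability is a power of ½, so each code length equals log₂(1/pᵢ) exactly.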

8+2 points
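As a quick check of the comparison, the sketch below computes the average code length with exact fractions and the entropy with floating-point logarithms. The dictionaries `probs` and `codes` restate the values from the solution; the variable names are illustrative.

```python
from fractions import Fraction
from math import log2

# Probabilities and Huffman codes taken from the solution above.
probs = {0: Fraction(1, 2), 50: Fraction(1, 4), 20: Fraction(1, 8), 99: Fraction(1, 8)}
codes = {0: "1", 50: "00", 20: "010", 99: "011"}

# Average bits per pixel: sum of (code length) * (probability).
avg_bits = sum(probs[v] * len(codes[v]) for v in probs)

# Entropy in bits per pixel for the same distribution.
entropy = sum(float(p) * log2(1 / float(p)) for p in probs.values())

print(avg_bits, float(avg_bits), entropy)
```

The two quantities coincide here only because the distribution is dyadic; for general probabilities the Huffman average is at least the entropy and less than the entropy plus one bit.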

Question # 2: (20 points) Consider an alphabet with two

symbols A, B, with probability Pr(A) = x and Pr(B) = 1 – x.

a. (10 points) Plot the entropy as a function of x. You might want to use $\log_2 3 \approx 1.6$, $\log_2 7 \approx 2.8$.

b. (10 points) Discuss why it must be the case that if the probabilities of the two symbols are 0.5 + ε and 0.5 − ε, with small ε, the entropy is less than the maximum.

a. Entropy is:

$$H(S) = \sum_{i=1}^{n} p_i \log_2 \frac{1}{p_i} = -\sum_{i=1}^{n} p_i \log_2 p_i$$

S = <s1, s2> = <A, B>

P (A) = x

P (B) = 1-x

So the entropy is:

Entropy = − [x log₂ x + (1 − x) log₂(1 − x)]

The table below shows some plot values:

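| x | Entropy |
|---|---|
| 0.0500 | 0.2864 |
| 0.1000 | 0.4690 |
| 0.1500 | 0.6098 |
| 0.2000 | 0.7219 |
| 0.2500 | 0.8113 |
| 0.3000 | 0.8813 |
| 0.3500 | 0.9341 |
| 0.4000 | 0.9710 |
| 0.4500 | 0.9928 |
| 0.5000 | 1.0000 |
| 0.5500 | 0.9928 |
| 0.6000 | 0.9710 |
| 0.6500 | 0.9341 |
| 0.7000 | 0.8813 |
| 0.7500 | 0.8113 |
| 0.8000 | 0.7219 |
| 0.8500 | 0.6098 |
| 0.9000 | 0.4690 |
| 0.9500 | 0.2864 |

And the plot is shown below:

[Plot of entropy versus x: symmetric about x = 0.5, where it peaks at 1 bit.]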

10 points
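The plot values can be regenerated with a few lines of code. This is a minimal sketch: the function name `binary_entropy` and the grid `xs` are illustrative choices, and the result could be passed to any plotting tool.

```python
from math import log2

def binary_entropy(x):
    """Entropy in bits of a two-symbol source with Pr(A) = x, Pr(B) = 1 - x."""
    if x in (0.0, 1.0):
        return 0.0  # limit of -x*log2(x) as x -> 0
    return -(x * log2(x) + (1 - x) * log2(1 - x))

# x = 0.05, 0.10, ..., 0.95, the same grid as the tabulated plot values.
xs = [k / 20 for k in range(1, 20)]
table = [(x, binary_entropy(x)) for x in xs]

for x, h in table:
    print(f"{x:.4f}  {h:.4f}")
```

Note the term (1 − x) log₂(1 − x): both arguments of the logarithm must be probabilities, so they lie between 0 and 1.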

b) Entropy is:

$$H(S) = \sum_{i=1}^{n} p_i \log_2 \frac{1}{p_i} = -\sum_{i=1}^{n} p_i \log_2 p_i$$

S = <s1, s2> = <A, B>

With only two symbols, the entropy is maximal when both have probability exactly 0.5, in which case it equals 1 bit (the source is completely random). Shifting probability from one symbol to the other, so that the probabilities become 0.5 + ε and 0.5 − ε, makes one symbol more predictable than the other, so the entropy drops below 1 for any ε ≠ 0. The larger the shift, the lower the entropy.

10 points
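The argument can be illustrated numerically. The sketch below evaluates the entropy at 0.5 + ε for a few small ε and compares it with the second-order Taylor approximation 1 − (2 / ln 2) ε², which follows from the second derivative of the binary entropy at 0.5 being −4 / ln 2. The approximation is added here for illustration and is not part of the original solution; the names are hypothetical.

```python
from math import log, log2

def binary_entropy(p):
    """Entropy in bits of a two-symbol source with probabilities p and 1 - p."""
    return -(p * log2(p) + (1 - p) * log2(1 - p))

results = []
for eps in (0.1, 0.01, 0.001):
    exact = binary_entropy(0.5 + eps)
    # Second-order Taylor expansion of the entropy around p = 0.5.
    approx = 1 - (2 / log(2)) * eps ** 2
    results.append((eps, exact, approx))
    print(f"eps={eps}: H={exact:.6f}, approximation={approx:.6f}")
```

Because the first derivative vanishes at 0.5 and the second derivative is negative, any small deviation in either direction lowers the entropy, and the loss grows roughly with ε².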

Question # 3: (40 points) Suppose the alphabet is {A, B, C},

and the known probability distribution is Pr(A) = 0.5, Pr(B) =

0.4, and Pr(C) = 0.1. For simplicity, let us also assume that both

encoder and decoder know that the length of the messages is

always 3, so there is no need for a terminator.

a. (15 points) How many bits are needed to encode the

message BBB by Huffman coding?

b. (25 points) How many bits are needed to encode the

message BBB by arithmetic coding?

a) Using Huffman Coding:

We first list the frequencies (frequencies here are the same as

probabilities)

| Value | Frequency |
|---|---|
| C | 0.1 |
| B | 0.4 |
| A | 0.5 |

Then we make them as separate nodes

C [0.1], B [0.4], A [0.5]

Then, we combine the two least frequent nodes, C and B, name the result result (1), and insert result (1) back into the forest of values after deleting nodes C and B.

result (1) [0.5], with children C [0.1] and B [0.4]

Then, we combine node A and result (1) to form the entire tree and

insert it back to the forest which should now contain only this tree.

Root [1], with children A [0.5] and result (1) [0.5]

result (1) [0.5], with children B [0.4] and C [0.1]

Then we add the codes 0 and 1 on the edges so that any edge

linking a parent with its left child will take 0 and any edge that is

linking the parent with its right child will take 1.

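Root [1]: edge 0 leads to A [0.5]; edge 1 leads to result (1) [0.5].

Result (1) [0.5]: edge 0 leads to C [0.1]; edge 1 leads to B [0.4].

10 points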

So, the following is the code assignment:

A = 0, C = 10, B = 11

This means that B needs two bits to represent it.

So, the message BBB should take 2+2+2 = 6 bits

5 points
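To make the bit count concrete, the sketch below encodes and decodes a message with the code assignment A = 0, C = 10, B = 11. The helper names `encode` and `decode` are illustrative and not part of the original solution.

```python
# Code assignment from the solution: A = 0, C = 10, B = 11.
codes = {"A": "0", "C": "10", "B": "11"}

def encode(message, table):
    """Concatenate the codeword of each symbol in the message."""
    return "".join(table[symbol] for symbol in message)

def decode(bits, table):
    """Decode a bit string produced by encode() with the same table."""
    inverse = {code: symbol for symbol, code in table.items()}
    out, buffer = [], ""
    for bit in bits:
        buffer += bit
        if buffer in inverse:  # prefix-free code: the first match is a symbol
            out.append(inverse[buffer])
            buffer = ""
    return "".join(out)

encoded = encode("BBB", codes)
print(encoded, len(encoded))
```

Since no codeword is a prefix of another, the decoder never needs to look ahead, and the known message length of 3 is not needed to find symbol boundaries.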

b) Using arithmetic coding:

First we list all the values with their probabilities:

A= 0.5

C= 0.1

B=0.4

We show the ranges table first

| Value | Probability | Range |
|---|---|---|
| A | 0.5 | [0, 0.5) |
| B | 0.4 | [0.5, 0.9) |
| C | 0.1 | [0.9, 1) |

Then applying the algorithm we get for BBB:

| Value | high | low | Range |
|---|---|---|---|
| (initial) | 1 | 0 | 1 |
| B | 0.9 | 0.5 | 0.4 |
| B | 0.86 | 0.7 | 0.16 |
| B | 0.844 | 0.78 | 0.064 |

6 points

12 points
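The interval narrowing can be reproduced with exact fractions, which avoids floating-point rounding in values such as 0.844. This is a minimal sketch of the standard interval update, assuming the symbol ranges from the range table; the function name `narrow` is illustrative.

```python
from fractions import Fraction

# Sub-intervals of [0, 1) assigned to each symbol, as in the range table.
ranges = {
    "A": (Fraction(0), Fraction(1, 2)),
    "B": (Fraction(1, 2), Fraction(9, 10)),
    "C": (Fraction(9, 10), Fraction(1)),
}

def narrow(message, ranges):
    """Return the final (low, high) interval and the per-symbol steps."""
    low, high = Fraction(0), Fraction(1)
    steps = []
    for symbol in message:
        width = high - low
        sym_low, sym_high = ranges[symbol]
        # Both bounds are computed from the old `low`, so update them together.
        low, high = low + width * sym_low, low + width * sym_high
        steps.append((symbol, float(low), float(high), float(high - low)))
    return low, high, steps

low, high, steps = narrow("BBB", ranges)
for step in steps:
    print(step)  # (symbol, low, high, range)
```

Each step keeps only the part of the current interval that belongs to the next symbol, so the final width 0.064 equals 0.4 × 0.4 × 0.4, the probability of the message BBB.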

Then we get the codeword using low = 0.78 and high = 0.844. We apply the algorithm in order to find a binary fraction between low and high as follows:

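| k | Initial bit | code | Value(code) | Final code |
|---|---|---|---|---|
| (initial) | | 0. | 0 | |
| 1 | 1 | 0.1 | 0.5 | 0.1 |
| 2 | 1 | 0.11 | 0.75 | 0.11 |
| 3 | 1 | 0.111 | 0.875 | 0.110 |
| 4 | 1 | 0.1101 | 0.8125 | 0.1101 |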

7 points

After four iterations we arrive at the following code: 1101

(0.1101)₂ = 0.8125, which lies between low = 0.78 and high = 0.844.

This means that we need 4 bits to encode this message using

arithmetic coding.
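The bit-by-bit search in the table can be sketched as follows. The solution does not spell the procedure out, so this is an assumed reading of it: try setting the next binary fraction bit to 1, reset it to 0 if the value would reach or pass `high`, and stop once the value is at least `low`. The function name `generate_codeword` is hypothetical.

```python
from fractions import Fraction

def generate_codeword(low, high):
    """Return (bits, value) for a binary fraction with low <= value < high."""
    bits, value, k = "", Fraction(0), 1
    while value < low:
        candidate = value + Fraction(1, 2 ** k)  # tentatively set bit k to 1
        if candidate >= high:
            bits += "0"        # overshoots the interval: replace the bit by 0
        else:
            bits += "1"        # still inside the upper bound: keep the 1
            value = candidate
        k += 1
    return bits, value

# Final interval for BBB: [0.78, 0.844).
bits, value = generate_codeword(Fraction(39, 50), Fraction(211, 250))
print(bits, float(value), len(bits))
```

For this interval the sketch reproduces the table: the third bit is reset to 0 because 0.875 exceeds 0.844, and the fourth bit brings the value to 0.8125.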