Introduction to loglinear models


Loglinear models are models based on three sets of assumptions:

Recursive structure
Conditional independence
Homogeneous association

Loglinear models are graphical models with an extra set of assumptions.

Graphical models

Model 0

Conditional independencies:

A ⊥ C | B, D, E
E ⊥ B | A, C, D
A ⊥ D | B, C, E
E ⊥ C | A, B, D

Markov graphs are visual representations of assumptions that tell us something about the distribution P(A,B,C,D,E), for instance that

$$P(A,B,C,D,E) = \frac{P(A,B,C,D)\,P(C,D,E)}{P(C,D)}$$

such that the model has a loglinear structure:

$$\ln P(A,B,C,D,E) = \ln P(A,B,C,D) + \ln P(C,D,E) - \ln P(C,D)$$

Loglinear models

Conditional independence
Homogeneous associations = associations between variables are constant across strata defined by other variables

Markov graphs capture the independence assumptions, but tell us nothing about whether associations are homogeneous or heterogeneous.

Loglinear model formulas are simple and useful representations of homogeneity assumptions. They are defined in the following way …

A loglinear model formula = a set of model generators

A model generator = a subset of variables, constructed in the following way:

Conditional independence
Two variables are assumed to be conditionally independent if and only if there is no model generator containing both variables.

Homogeneity
The strength of the association between two variables is assumed to be constant across strata defined by a third variable if and only if there are no model generators containing all three variables.

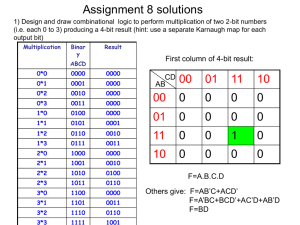

Variables A, B, C, D, E: 10 pairwise relationships

Model 1 = AB, AE, BCD, DE

Summary of assumptions concerning the relationships between variables in Model 1 (pair: assumption. Why):

AB: homogeneous. AB appears only in the AB generator.
AC: cond. ind. AC does not appear in any model generator.
AD: cond. ind. AD does not appear in any model generator.
AE: homogeneous. AE appears only in the AE generator.
BC: heterogeneous. The strength of association between B and C is modified by D, since B, C and D appear in the same generator.
BD: heterogeneous. The strength of association between B and D is modified by C, since B, C and D appear in the same generator.
BE: cond. ind. BE does not appear in any model generator.
CD: heterogeneous. The strength of association between C and D is modified by B, since B, C and D appear in the same generator.
CE: cond. ind. CE does not appear in any model generator.
DE: homogeneous. DE appears only in the DE generator.

The assumptions of conditional independence define the graphical model we saw before (Model 0).

Variables A, B, C, D, E: 10 pairwise relationships

Model 2 = AB, AE, BC, BD, CD, DE

Summary of assumptions concerning the relationships between variables in Model 2 (pair: assumption. Why):

AB: homogeneous. AB appears only in the AB generator.
AC: cond. ind. AC does not appear in any model generator.
AD: cond. ind. AD does not appear in any model generator.
AE: homogeneous. AE appears only in the AE generator.
BC: homogeneous. BC appears only in the BC generator.
BD: homogeneous. BD appears only in the BD generator.
BE: cond. ind. BE does not appear in any model generator.
CD: homogeneous. CD appears only in the CD generator.
CE: cond. ind. CE does not appear in any model generator.
DE: homogeneous. DE appears only in the DE generator.

The assumptions of conditional independence define the same graphical model.
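The two summaries above follow mechanically from the two rules for model generators. A minimal Python sketch of that bookkeeping, assuming generators are written as strings of single-letter variable names (the function name `classify_pairs` is hypothetical, not part of DIGRAM or LEM):

```python
from itertools import combinations


def classify_pairs(variables, generators):
    """Apply the generator rules to every pair of variables.

    Returns a dict mapping a pair such as "BC" to (assumption, modifiers),
    where modifiers is the set of variables sharing a generator with both
    members of the pair.
    """
    result = {}
    for u, v in combinations(variables, 2):
        containing = [set(g) for g in generators if u in g and v in g]
        if not containing:
            # Rule 1: no generator contains both variables
            result[u + v] = ("cond. ind.", set())
            continue
        # Rule 2: a third variable in a generator with u and v may modify u-v
        modifiers = set().union(*containing) - {u, v}
        label = "heterogeneous" if modifiers else "homogeneous"
        result[u + v] = (label, modifiers)
    return result


model_1 = ["AB", "AE", "BCD", "DE"]
model_2 = ["AB", "AE", "BC", "BD", "CD", "DE"]

for pair, (label, mods) in classify_pairs("ABCDE", model_1).items():
    print(pair, label, "".join(sorted(mods)))
```

Applied to `model_2`, the same function reports BC, BD and CD as homogeneous, while the four conditional independencies are identical in both models.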

Some problems

Models 0, 1 and 2 are based on different assumptions. To what extent are they really different?

To answer this question we need to derive mathematical formulas for the joint probabilities of the variables, since these probabilities will tell us exactly what the assumptions of the models mean.

So what is
P(A,B,C,D,E) under Model 0?
P(A,B,C,D,E) under Model 1?
P(A,B,C,D,E) under Model 2?

To answer this question we also need answers to:
What exactly do we mean when we say that the strength of the association is the same in two strata?
According to which measure of association?

Measuring association between categorical variables

Assume that we want to study the effect of X on Y, where X and Y are binary variables.

A prospective study would select a random sample of persons, observe X first and later observe Y. If the distribution of X is uniform in the population, the result may look like this:

             Y=0   Y=1      n
    X = 0    400   100    500
    X = 1    200   300    500
    n        600   400   1000

A case-control study would select, say, 100 cases with Y = 1 and 300 controls with Y = 0 and get something like

             X=0   X=1      n
    Y = 0    200   100    300
    Y = 1     25    75    100
    n        225   175    400

Or with 300 cases and 300 controls:

             X=0   X=1      n
    Y = 0    200   100    300
    Y = 1     75   225    300
    n        275   325    600

Three tables describing the same association, based on three different sampling schemes in the same population


A measure of association should describe the association in the population, irrespective of how persons were sampled for the study.

The measure of association for a 2×2 table should therefore give us the same number for the three tables.

We need a measure of association that does not depend on the way data is sampled.

The cross-product / odds ratio

It can be shown that the only measures of association in 2×2 tables that do not depend on the way data is collected (as long as persons are independent) are functions of the cross-product/odds ratio coefficient.

    A   B
    C   D

$$OR = \text{the cross-product ratio} = \frac{AD}{BC}$$

Known functions of the odds ratio:

$$\ln(OR) = \ln(AD) - \ln(BC)$$

The gamma coefficient:

$$\gamma = \frac{OR - 1}{OR + 1}$$

The three tables


In all of these tables, the odds ratio is equal to 6.

Please note that the relative risk calculated in the prospective study is equal to 3.
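A small Python check of these numbers, assuming the tables are stored with rows X = 0, 1 and columns Y = 0, 1 (so the two case-control tables are transposed relative to the slides, which does not change AD/BC). The helper names are illustrative only:

```python
def odds_ratio(t):
    """Cross-product ratio AD/BC of a 2x2 table ((A, B), (C, D))."""
    (a, b), (c, d) = t
    return (a * d) / (b * c)


def gamma_2x2(t):
    """Gamma coefficient of a 2x2 table: (OR - 1) / (OR + 1)."""
    or_ = odds_ratio(t)
    return (or_ - 1) / (or_ + 1)


def naive_relative_risk(t):
    """P(Y=1 | X=1) / P(Y=1 | X=0), computed as if t were a random sample.

    Rows are X = 0, 1 and columns are Y = 0, 1.
    """
    (a, b), (c, d) = t
    return (d / (c + d)) / (b / (a + b))


# All tables oriented with rows X = 0, 1 and columns Y = 0, 1
tables = {
    "prospective": ((400, 100), (200, 300)),
    "100 cases, 300 controls": ((200, 25), (100, 75)),
    "300 cases, 300 controls": ((200, 75), (100, 225)),
}

for name, t in tables.items():
    print(f"{name:25s} OR={odds_ratio(t):.2f} gamma={gamma_2x2(t):.3f} "
          f"RR={naive_relative_risk(t):.2f}")
```

The odds ratio is 6 and gamma is 5/7 in all three tables, whereas a relative risk computed naively from the case-control tables moves away from the value 3 found in the prospective study.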

r×c tables

It can also be shown that the only measures of association in r×c tables that do not depend on the way data is collected are functions of the set of cross-product/odds ratio coefficients that can be defined in 2×2 tables including the first and another row and the first and another column from the original table.

A table:

    100    50    10
    200   150   200
    300   200   100

The set of odds ratio coefficients:

    1     1       1
    1     1.5    10
    1     1.33    3.33

The gamma coefficient for r×c tables is not a function of the set of odds ratio coefficients.
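A Python sketch of how the set of odds ratio coefficients is obtained from the table above, using the first row and first column as reference. The last lines illustrate, under the assumption that a sampling scheme only rescales whole rows, that the set does not change:

```python
def odds_ratio_set(table):
    """Odds ratios n11 * nxy / (n1y * nx1) relative to the first row and column."""
    n11 = table[0][0]
    return [
        [n11 * table[x][y] / (table[0][y] * table[x][0]) for y in range(len(table[0]))]
        for x in range(len(table))
    ]


table = [
    [100, 50, 10],
    [200, 150, 200],
    [300, 200, 100],
]
ors = odds_ratio_set(table)
for row in ors:
    print(" ".join(f"{v:5.2f}" for v in row))

# Sampling the rows at different (hypothetical) rates multiplies each row by
# a constant; every odds ratio is unchanged.
resampled = [[v * f for v in row] for row, f in zip(table, (0.5, 2.0, 3.0))]
print(odds_ratio_set(resampled))
```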

Loglinear models and odds-ratio coefficients

Homogeneity
Loglinear models are models defined by assumptions that the set of odds ratio coefficients describing the association between two variables is constant across strata defined by other variables.

Probabilities
The probabilities of the loglinear models can be described as functions of the set of odds-ratio coefficients describing the association among variables.

In other words: the set of odds ratio coefficients describing the associations among variables are the parameters of the loglinear models.

The 2×2 table

Probabilities:

    P11   P12
    P21   P22

$$OR = \frac{P_{11}P_{22}}{P_{12}P_{21}}$$

If the two variables are independent then

$$P_{xy} = P(X=x)\,P(Y=y)$$

If not, we need some adjustment:

$$P_{xy} = P(X=x)\,P(Y=y)\,Q(x,y)$$

In other words …

$$P_{xy} = f(x)\,g(y)\,h(x,y)$$

such that

$$\ln(P_{xy}) = \lambda_0 + \lambda_x + \lambda_y + \lambda_{xy}$$

where $\lambda_0 = 0$, $\lambda_x = \ln(f(x))$, $\lambda_y = \ln(g(y))$ and $\lambda_{xy} = \ln(h(x,y))$.

This is the loglinear structure.

We can reparameterize in many ways without destroying the loglinear structure:
(add a constant to $\lambda_0$ and subtract the constant from one of the other parameters)
(add a constant depending on x to $\lambda_x$ and subtract the same constant from $\lambda_{xy}$)

We can for instance do it in such a way that $\lambda_{xy} = \ln(OR_{xy})$.

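or rather…

we can prove that the probabilities can be rewritten such that

$$P_{xy} = k\,A_x\,B_y\,C_{xy}$$

where $A_1 = 1$, $B_1 = 1$, $C_{1y} = C_{x1} = 1$, $A_2 = a$, $B_2 = b$ and $C_{22} = c$, with c equal to $OR_{xy}$:

$$P_{11} = k, \qquad P_{12} = kb, \qquad P_{21} = ka, \qquad P_{22} = kabc$$

$$k = P_{11}, \qquad b = P_{12}/P_{11}, \qquad a = P_{21}/P_{11}$$

$$c = \frac{P_{22}}{kab} = \frac{P_{11}P_{22}}{P_{12}P_{21}} = OR_{xy}$$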

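This extends to r×c tables:

$$P_{xy} = k\,A_x\,B_y\,C_{xy}$$

where $A_1 = 1$, $B_1 = 1$, $C_{1y} = C_{x1} = 1$ and

$$C_{xy} = OR_{xy} = \frac{P_{11}P_{xy}}{P_{1y}P_{x1}}$$

such that

$$\ln(P_{xy}) = \lambda_0 + \lambda_x + \lambda_y + \lambda_{xy}$$

with $\lambda_0 = \ln(k)$, $\lambda_x = \ln(A_x)$, $\lambda_y = \ln(B_y)$ and $\lambda_{xy} = \ln(OR_{xy})$.

The loglinear structure with natural parameters.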
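The construction can be checked numerically. This Python sketch (the helper `natural_parameters` is hypothetical) turns the example 3×3 table from the r×c slide into probabilities, computes ln k, ln A_x, ln B_y and ln C_xy with the first row and column as reference, and rebuilds every cell from the four terms:

```python
import math


def natural_parameters(p):
    """Natural loglinear parameters of an r x c probability table p.

    k = P11, A_x = P_x1 / P11, B_y = P_1y / P11, C_xy = OR_xy,
    all returned on the log scale.
    """
    r, c = len(p), len(p[0])
    lam0 = math.log(p[0][0])
    lam_x = [math.log(p[x][0] / p[0][0]) for x in range(r)]
    lam_y = [math.log(p[0][y] / p[0][0]) for y in range(c)]
    lam_xy = [
        [math.log(p[0][0] * p[x][y] / (p[0][y] * p[x][0])) for y in range(c)]
        for x in range(r)
    ]
    return lam0, lam_x, lam_y, lam_xy


counts = [[100, 50, 10], [200, 150, 200], [300, 200, 100]]
n = sum(map(sum, counts))
p = [[v / n for v in row] for row in counts]

lam0, lam_x, lam_y, lam_xy = natural_parameters(p)
# Rebuild every cell from ln(P_xy) = lambda_0 + lambda_x + lambda_y + lambda_xy
rebuilt = [
    [math.exp(lam0 + lam_x[x] + lam_y[y] + lam_xy[x][y]) for y in range(3)]
    for x in range(3)
]
print(rebuilt)
```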

The three-way table

Variables X, Y, Z:

$$OR_{xy|z} = \frac{P_{11z}P_{xyz}}{P_{1yz}P_{x1z}}$$

The XY-association is homogeneous if $OR_{xy|z} = OR_{xy}$ for all z.

The conditional distribution of X and Y given Z = z is then loglinear:

$$\ln P(X,Y \mid Z=z) = \lambda_0^{(z)} + \lambda_x^{(z)} + \lambda_y^{(z)} + \lambda_{xy}$$

The joint distribution is also loglinear:

$$\ln P(X,Y,Z) = \ln P(Z=z) + \lambda_0^{(z)} + \lambda_x^{(z)} + \lambda_y^{(z)} + \lambda_{xy}$$

which may be rewritten as ….

The loglinear model for three-way tables

$$\ln P(X,Y,Z) = \lambda_0 + \lambda_x + \lambda_y + \lambda_z + \lambda_{xy} + \lambda_{xz} + \lambda_{yz}$$

where $\lambda_{xy}$, $\lambda_{xz}$ and $\lambda_{yz}$ are logarithms of the constant odds ratios describing the conditional association between two of the variables given the third.
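One standard way to fit this model is iterative proportional fitting (IPF). The slides do not say which algorithm DIGRAM or LEM uses, so treat this as an illustrative technique rather than the programs' method. A minimal Python sketch for a 2×2×2 table with hypothetical counts (categories coded 0 and 1 instead of 1 and 2), in which the observed XY odds ratio is 5 when Z = 0 and 7 when Z = 1:

```python
from itertools import product


def ipf_no_three_factor(counts, n_iter=200):
    """Fit the model {XY, XZ, YZ} to a 2x2x2 table by iterative proportional fitting.

    counts maps (x, y, z) with categories 0/1 to observed frequencies.
    Starting from a constant table, each step rescales the fit so that one
    two-way margin matches the observed one. Every update multiplies by a
    function of two variables only, so no three-factor term is ever created.
    """
    cells = list(product((0, 1), repeat=3))
    fit = {cell: 1.0 for cell in cells}
    for _ in range(n_iter):
        for dims in ((0, 1), (0, 2), (1, 2)):
            observed, fitted = {}, {}
            for cell in cells:
                key = tuple(cell[d] for d in dims)
                observed[key] = observed.get(key, 0.0) + counts[cell]
                fitted[key] = fitted.get(key, 0.0) + fit[cell]
            for cell in cells:
                key = tuple(cell[d] for d in dims)
                fit[cell] *= observed[key] / fitted[key]
    return fit


def or_xy_given_z(table, z):
    """Conditional odds ratio between X and Y in the stratum Z = z."""
    return table[(0, 0, z)] * table[(1, 1, z)] / (table[(0, 1, z)] * table[(1, 0, z)])


# Hypothetical counts: OR_XY|Z=0 = 5, OR_XY|Z=1 = 7
counts = {
    (0, 0, 0): 30, (0, 1, 0): 10, (1, 0, 0): 15, (1, 1, 0): 25,
    (0, 0, 1): 20, (0, 1, 1): 20, (1, 0, 1): 5, (1, 1, 1): 35,
}
fit = ipf_no_three_factor(counts)
print("observed:", or_xy_given_z(counts, 0), or_xy_given_z(counts, 1))
print("fitted:  ", or_xy_given_z(fit, 0), or_xy_given_z(fit, 1))
```

The fitted conditional odds ratios are identical in the two strata, while the two-way margins of the fit converge to the observed ones.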

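Assume that the association between X and Y had not been homogeneous:

$$OR_{XY|Z=1} \neq OR_{XY|Z=2}$$

Two different loglinear models:

$$\ln P(X,Y \mid Z=1) = \lambda_{0,Z=1} + \lambda_{x,Z=1} + \lambda_{y,Z=1} + \lambda_{xy,Z=1}$$

$$\ln P(X,Y \mid Z=2) = \lambda_{0,Z=2} + \lambda_{x,Z=2} + \lambda_{y,Z=2} + \lambda_{xy,Z=2}$$

Set

$$\lambda_{xy} = \lambda_{xy,Z=1} \quad\text{and}\quad \lambda_{xyz} = \lambda_{xy,Z=2} - \lambda_{xy,Z=1}$$

such that $\lambda_{xy,Z=2} = \lambda_{xy} + \lambda_{xyz}$ (with $\lambda_{xyz} = 0$ when z = 1):

$$\ln P(X,Y \mid Z=z) = \lambda_0^{(z)} + \lambda_x^{(z)} + \lambda_y^{(z)} + \lambda_{xy} + \lambda_{xyz}$$

which may be rewritten as

$$\ln P(X,Y,Z) = \lambda_0 + \lambda_x + \lambda_y + \lambda_z + \lambda_{xy} + \lambda_{xz} + \lambda_{yz} + \lambda_{xyz}$$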

Interpretation of parameters

$$\lambda_0 = \ln P(X=1,Y=1,Z=1)$$

$$\lambda_x = \ln\frac{P(X=x \mid Y=1, Z=1)}{P(X=1 \mid Y=1, Z=1)}$$

$$\lambda_{xy} = \ln(OR_{XY|Z=1})$$

$$\ln(OR_{xy|Z=z}) = \lambda_{xy} + \lambda_{xyz}$$

$$\ln(OR_{xz|Y=y}) = \lambda_{xz} + \lambda_{xyz}$$

Z modifies the association between X and Y
if and only if
Y modifies the association between X and Z
if and only if
X modifies the association between Y and Z.
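The symmetry of the three-factor term can be verified numerically. In this Python sketch (hypothetical counts; categories coded 0 and 1 rather than 1 and 2), λ_xyz is computed as the change in a log odds ratio between the two strata of the third variable, for each of the three ways of choosing the pair:

```python
import math


def log_or(p, a, b, c, level):
    """ln odds ratio between axes a and b within the stratum where axis c == level."""
    def cell(va, vb):
        idx = [0, 0, 0]
        idx[a], idx[b], idx[c] = va, vb, level
        return p[tuple(idx)]
    return math.log(cell(1, 1) * cell(0, 0) / (cell(1, 0) * cell(0, 1)))


def three_factor(p, a, b, c):
    """Change in the a-b log odds ratio when axis c moves from 0 to 1."""
    return log_or(p, a, b, c, 1) - log_or(p, a, b, c, 0)


# Hypothetical 2x2x2 counts; axes 0, 1, 2 are X, Y, Z
counts = {
    (0, 0, 0): 30, (0, 1, 0): 10, (1, 0, 0): 15, (1, 1, 0): 25,
    (0, 0, 1): 20, (0, 1, 1): 20, (1, 0, 1): 5, (1, 1, 1): 35,
}
X, Y, Z = 0, 1, 2
print(three_factor(counts, X, Y, Z))  # Z modifies X-Y
print(three_factor(counts, X, Z, Y))  # Y modifies X-Z
print(three_factor(counts, Y, Z, X))  # X modifies Y-Z
```

All three numbers coincide, which is the "if and only if" statement above in numerical form.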

Loglinear models for multidimensional contingency tables

The set of model generators defines the loglinear structure. If MG is the set of model generators, then

$$\ln P(X_1,\dots,X_k) = \sum_{F \subseteq G,\; G \in MG} \lambda_F$$

where we sum over all subsets of variables included in at least one model generator.
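The summation rule can be written as a short Python sketch: collect every non-empty subset of every generator, each subset standing for one λ-term (λ0 is left implicit). The function name is hypothetical:

```python
from itertools import combinations


def loglinear_terms(generators):
    """All non-empty subsets of variables contained in at least one generator.

    Each subset F corresponds to one parameter lambda_F; the constant
    lambda_0 (the empty subset) is left out of the list.
    """
    terms = set()
    for g in generators:
        for size in range(1, len(g) + 1):
            for subset in combinations(sorted(g), size):
                terms.add("".join(subset))
    return sorted(terms, key=lambda t: (len(t), t))


print(loglinear_terms(["ab", "ae", "bcd", "de"]))              # Model 1
print(loglinear_terms(["ab", "ae", "bc", "bd", "cd", "de"]))   # Model 2
```

For Model 1 this produces the terms from λa to λbcd; replacing the generator BCD by BC, BD and CD removes only λbcd.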

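Model 1 = AB, AE, BCD, DE

$$\ln P(A,B,C,D,E) = \lambda_0 + \lambda_a + \lambda_b + \lambda_c + \lambda_d + \lambda_e + \lambda_{ab} + \lambda_{ae} + \lambda_{bc} + \lambda_{bd} + \lambda_{cd} + \lambda_{de} + \lambda_{bcd}$$

Model 2 = AB, AE, BC, BD, CD, DE

$$\ln P(A,B,C,D,E) = \lambda_0 + \lambda_a + \lambda_b + \lambda_c + \lambda_d + \lambda_e + \lambda_{ab} + \lambda_{ae} + \lambda_{bc} + \lambda_{bd} + \lambda_{cd} + \lambda_{de}$$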

Graphical models for discrete data are loglinear

The loglinear generators correspond to the cliques of the independence graph.

Model 0

A clique is a maximal, completely connected subset of variables.

Four cliques: AB, AE, DE and BCD

Model 0 = Model 1
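Finding the generators of a graphical model therefore amounts to listing the maximal cliques of its independence graph. A Python sketch using the basic Bron–Kerbosch algorithm (a standard clique-enumeration method, not something the slides prescribe), with edges written as two-letter strings:

```python
def maximal_cliques(edges):
    """Maximal cliques of an undirected graph (basic Bron-Kerbosch, no pivoting)."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    cliques = []

    def expand(r, p, x):
        # r: current clique, p: candidates, x: already-processed vertices
        if not p and not x:
            cliques.append("".join(sorted(r)))
            return
        for v in list(p):
            expand(r | {v}, p & adj[v], x & adj[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(adj), set())
    return sorted(cliques, key=lambda c: (len(c), c))


model_0_edges = ["AB", "AE", "DE", "BC", "BD", "CD"]
print(maximal_cliques(model_0_edges))
```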

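Another example

Cliques/Generators: ABE, ADE, CDE, BCEF.

$$\begin{aligned}
\ln P(a,b,c,d,e,f) = {} & \lambda_0 + \lambda^{A}_{a} + \lambda^{B}_{b} + \lambda^{C}_{c} + \lambda^{D}_{d} + \lambda^{E}_{e} + \lambda^{F}_{f} \\
& + \lambda^{AB}_{ab} + \lambda^{AD}_{ad} + \lambda^{AE}_{ae} + \lambda^{BC}_{bc} + \lambda^{BE}_{be} + \lambda^{BF}_{bf} + \lambda^{CD}_{cd} + \lambda^{CE}_{ce} + \lambda^{CF}_{cf} + \lambda^{DE}_{de} + \lambda^{EF}_{ef} \\
& + \lambda^{ABE}_{abe} + \lambda^{ADE}_{ade} + \lambda^{CDE}_{cde} + \lambda^{BCE}_{bce} + \lambda^{BCF}_{bcf} + \lambda^{BEF}_{bef} + \lambda^{CEF}_{cef} \\
& + \lambda^{BCEF}_{bcef}
\end{aligned}$$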

Analyses by graphical models always assume that associations between variables in cliques with more than two variables are heterogeneous.

An analysis by loglinear models is required if hypotheses of homogeneity are of interest. DIGRAM provides some support for such analyses, but for a complete loglinear analysis you have to go to another program.

WinLEM is an excellent program for this (and other) purposes.

Two useful results from the theory of graphical models

Marginalizing over variables of a graphical model always leads to a new graphical model. Some unconnected variables will be connected in the independence graph of the marginal model.

Example: the marginal model P(B,C,D,E,F)

The conditional distribution of a subset of the variables given the remaining variables will be a graphical model.

If V is the complete set of variables and V1 ⊂ V, the independence graph of P(V1 | V\V1) is equal to the subgraph of V1.

Example: P(A,B,C,D,E|F)

The loglinear structure of marginal graphical models is sometimes simpler than assumed by the graphical marginal model.

The loglinear model AB, BC, CD, DE, AE

No effect modification: all associations are homogeneous.

Now, collapse over C and D ….

The marginal ABE model

An edge has been added between B and E, because there is an indirect association between B and E mediated by C and D.

The graphical marginal model is ABE, suggesting that the AB association is modified by E.

The loglinear structure is, however, collapsible.

Separation implies parametric collapsibility in loglinear models:

If all indirect paths between two variables, X and Y, move through at least one variable in a separating subset S, then all parameters pertaining to X and Y are the same in the complete model and in the marginal model P(X,Y,S).

The indirect path from A to B = A–E–D–C–B

E is a separator of this path.

The loglinear parameters (the odds ratios) between A and B are the same in the ABCDE model and in the marginal ABE model.

Since the OR_AB|E are constant in P(A,B,C,D,E), OR_AB|E will also be constant in P(A,B,E).

The marginal ABE model is therefore AB, BE, AE.
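The collapsibility argument can be checked numerically. This Python sketch builds P(A,B,C,D,E) for the binary model AB, BC, CD, DE, AE with hypothetical parameter values (categories coded 0/1 with 0 as reference), collapses over C and D, and compares conditional odds ratios in the ABE table:

```python
import math
from itertools import product

# Hypothetical two-factor parameters for the model AB, BC, CD, DE, AE
LAMBDA = {"ab": 0.8, "bc": 1.5, "cd": 1.5, "de": 1.5, "ae": -0.9}


def joint_distribution(lam):
    """P(A,B,C,D,E) proportional to exp(sum of the two-factor terms)."""
    weights = {}
    for cell in product((0, 1), repeat=5):
        value = dict(zip("abcde", cell))
        s = sum(coef * value[t[0]] * value[t[1]] for t, coef in lam.items())
        weights[cell] = math.exp(s)
    total = sum(weights.values())
    return {cell: w / total for cell, w in weights.items()}


def marginal_abe(p):
    """Collapse the five-way table over C and D."""
    m = {}
    for (a, b, _c, _d, e), w in p.items():
        m[(a, b, e)] = m.get((a, b, e), 0.0) + w
    return m


def or_ab_given_e(m, e):
    return m[(1, 1, e)] * m[(0, 0, e)] / (m[(1, 0, e)] * m[(0, 1, e)])


def or_be_given_a(m, a):
    return m[(a, 1, 1)] * m[(a, 0, 0)] / (m[(a, 1, 0)] * m[(a, 0, 1)])


m = marginal_abe(joint_distribution(LAMBDA))
print("OR_AB|E:", or_ab_given_e(m, 0), or_ab_given_e(m, 1), "exp(lambda_ab):", math.exp(LAMBDA["ab"]))
print("OR_BE|A:", or_be_given_a(m, 0), or_be_given_a(m, 1))
```

OR_AB|E equals exp(λab) in both strata of E, as collapsibility predicts, whereas OR_BE|A differs from 1: the marginal table needs a BE term, but no ABE term.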

Loglinear analyses of association in graphical models

Association of interest: A – B

Two questions:
Is the association between A and B homogeneous or modified by other variables?
What are the estimates of the odds ratios describing the A-B association?

What to do:
Find a set of separators cutting all indirect paths from A to B.
Identify the marginal loglinear model for A, B and the separators.
Test whether some of the higher-order interaction terms relating to AB are insignificant.
Finally, estimate the parameters without the insignificant interaction terms.
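The first step, checking that a candidate set of separators cuts all indirect paths, is a reachability question. A breadth-first-search sketch in Python, assuming the independence graph is given as a list of two-letter edges; `separates` is a hypothetical helper, not a DIGRAM command:

```python
from collections import deque


def separates(edges, x, y, s):
    """True if every indirect path from x to y passes through a variable in s.

    The direct edge x-y (if any) is ignored, since only indirect paths matter.
    """
    adj = {}
    for u, v in edges:
        if {u, v} == {x, y}:
            continue
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    seen, queue = {x}, deque([x])
    while queue:
        node = queue.popleft()
        for nxt in adj.get(node, ()):
            if nxt == y:
                return False  # reached y without passing through s
            if nxt in s or nxt in seen:
                continue
            seen.add(nxt)
            queue.append(nxt)
    return True


model_0 = ["AB", "AE", "DE", "BC", "BD", "CD"]
print(separates(model_0, "A", "B", {"E"}))  # E cuts A-E-D-B and A-E-D-C-B
print(separates(model_0, "A", "B", {"C"}))  # A-E-D-B avoids C
```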

Description of associations in DIGRAM

The Whittaker model

Association of interest: unemployed husband (C) – wife working (A)

All indirect paths between C and A are separated by the education of the husband (F) and the race of the wife (G).

The partial γ = -0.55 is calculated across strata defined by F and G.

Loglinear analysis

F and G cut all indirect paths between A and C, so the loglinear structure may be studied in the ACFG table.

DESCRIBE AC provides the following information on the loglinear structure of this marginal table:

    +----------------------+
    | Marginal model: acfg |
    +----------------------+
    The marginal model is not graphical
    Cliques of the marginal graph: acfg
    Log linear generators : acf,acg,afg,cfg
    Fixed interactions    : afg,cfg
    Collapsibility        : Parametric.
    Estimable parameters  : acf,ac,acg
    The ac interaction may be modified by fg

Comments

The marginal model is not graphical
Cliques of the marginal graph: acfg
Edges between all four variables in the marginal model.

Log linear generators: acf,acg,afg,cfg
The loglinear structure is simpler, with no four-factor interaction. The A-C association may be modified by F, but G will not modify the way F modifies the A-C association.

Fixed interactions: afg,cfg
The associations between A, F and G and between C, F and G are partly due to associations with other variables. Analyses of the AFG and CFG interactions will tell us nothing about the interactions in the complete model. They are therefore fixed in the marginal ACFG model.

Collapsibility: Parametric.
The AC interaction in the ACFG model is exactly the same as in the ABCDEFGH model, but the estimates of the parameters may be different.

Estimable parameters: acf,ac,acg
These are the loglinear parameters in the complete model that may be estimated in the marginal model.

The ac interaction may be modified by fg
A list of all the variables that may modify the strength of association between A and C. The following output from DIGRAM's DESCRIBE analysis will only be reported for variables on this list:

    +----------------------------------------+
    | Partial Gamma coefficients in f-strata |
    +----------------------------------------+

    Least square estimate: Gamma = -0.5568   s.e. = 0.1034

    f: educhusb     Gamma   variance     s.e.   weight   residual
    --------------------------------------------------------------
    1: < O-leve     -0.58     0.0120   0.1094    0.893     -0.577
    2: O-level      -0.38     0.1002   0.3166    0.107      0.577
    --------------------------------------------------------------
    Test for partial association: X² = 0.3   df = 1   p = 0.564

No evidence of three-factor interaction between AC and F, according to DIGRAM.
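The weight column in the output is consistent with inverse-variance weighting (1/0.0120 and 1/0.1002, normalized). Assuming that DIGRAM's least-squares estimate is this weighted mean, and that its test compares the stratum gammas with the pooled value on 1 df, the numbers can be approximately reproduced in Python from the rounded stratum values (`pooled_gamma` is a hypothetical helper):

```python
import math


def pooled_gamma(gammas, variances):
    """Inverse-variance weighted mean of stratum-specific gamma coefficients.

    Also returns the standard error, the relative weights and a homogeneity
    statistic sum(w_i * (gamma_i - pooled)**2) with len(gammas) - 1 df.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    pooled = sum(w * g for w, g in zip(weights, gammas)) / total
    se = math.sqrt(1.0 / total)
    chi2 = sum(w * (g - pooled) ** 2 for w, g in zip(weights, gammas))
    return pooled, se, [w / total for w in weights], chi2


# Rounded stratum values printed by DIGRAM for f = educhusb
pooled, se, rel_w, chi2 = pooled_gamma([-0.58, -0.38], [0.0120, 0.1002])
# With two strata the statistic has 1 df, and P(chi2_1 > x) = erfc(sqrt(x / 2))
p_value = math.erfc(math.sqrt(chi2 / 2))
print(f"gamma={pooled:.4f} se={se:.4f} weights={[round(w, 3) for w in rel_w]} "
      f"X2={chi2:.2f} p={p_value:.3f}")
```

Because the printed stratum gammas are rounded to two decimals, the results (about -0.559, s.e. about 0.1035, X² about 0.36) differ slightly from DIGRAM's -0.5568 and X² = 0.3.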

The analysis in DIGRAM is not a comprehensive loglinear analysis. For such analyses you have to use programs like WinLEM.

Export of data to LEM

Select “Export to LEM” from the DATA menu, after which the form on the next slide turns up.

Select a LEM project name. Since we are going to look at the ACFG table I have called the project ACFG, but it could be any name.

Enter ACFG in the field with manifest variables.
Click on “Create model for manifest variables”.
Click on “OK”.

Two files will then be created:
ACFG.inp with commands for WinLEM
ACFG.dat with data

Files for LEM: ACFG.inp and ACFG.dat

ACFG.inp

    * Data transferred from Whittaker
    * a wifework: 1=not work 2=working
    * c unempl. : 1=no 2=yes
    * f educhusb: 1=< O-leve 2=O-level
    * g asian   : 1=no 2=yes
    lat 0
    man 4
    dim 2 2 2 2
    lab a c f g
    * Model:
    mod
    acfg {acf,acg,afg,cfg}
    * Fixed in model {afg,cfg}
    * input
    rec 91
    rco
    dat ACFG.dat
    * Settings
    dum 1 1 1 1
    * dummy coded loglinear parameters - the first category is always reference
    seed 9
    * output
    *nco
    * suppress conditional probabilities
    *nec
    * suppress echo of input
    nfr
    * suppress observed and expected frequencies
    *nla
    * suppress latent class output
    *nec
    * suppress parameters of loglinear models
    nR2
    * suppress R-squared measures
    *nse
    * suppress standard errors and information on identification
    nze
    * suppress observed and expected information on zero observed cell entries
    *wco filename
    * write conditional probabilities to file
    *wda filename
    * write observed table to file
    *wfi filename
    * write expected table to file
    *wla filename
    * write latent class information for each person to file
    *wpo filename
    * write posterior probabilities to file

LEM input

Text after a ‘*’ is always treated as a comment.

    lat 0
    man 4
    dim 2 2 2 2
    lab a c f g

Definitions of variables: no latent and four manifest variables; all variables have two categories; the variable labels are transferred from DIGRAM.

    mod
    acfg {acf,acg,afg,cfg}
    * Fixed in model {afg,cfg}

The model suggested by DIGRAM:
acfg: the joint distribution of acfg
{acf,acg,afg,cfg}: the loglinear model suggested by DIGRAM

You may change the model during the analysis. In fact, changing the model is the only thing you need to care about. The rest has been taken care of by DIGRAM.

    * input
    rec 91
    rco
    dat ACFG.dat
    * Settings
    dum 1 1 1 1
    seed 9

Information on input. Do not touch this. (dum 1 1 1 1 gives dummy coded loglinear parameters.)

Output options that can be turned on and off: the lines in the * output section of ACFG.inp. Since text after a ‘*’ is treated as a comment, options preceded by a ‘*’ (such as *nco) are inactive, while nfr, nR2 and nze are active.

Output from LEM

    *** STATISTICS ***

      Number of iterations = 7
      Converge criterion   = 0.0000004575

      X-squared            = 0.0000 (0.9998)
      L-squared            = 0.0000 (0.9998)
      Cressie-Read         = 0.0000 (0.9998)
      Dissimilarity index  = 0.0000
      Degrees of freedom   = 1
      Log-likelihood       = -1203.91152
      Number of parameters = 14 (+1)
      Sample size          = 665.0
      BIC(L-squared)       = -6.4998
      AIC(L-squared)       = -2.0000
      BIC(log-likelihood)  = 2498.8200
      AIC(log-likelihood)  = 2435.8230

X-squared, L-squared and Cressie-Read: test of no 4-factor interaction against the saturated model.

    WARNING: 1 (nearly) boundary or non-identified (log-linear) parameters
    WARNING: 2 zero estimated frequencies

Estimates and Wald tests of 3-factor associations with AC

No evidence of effect modification

    effect       beta   std err  z-value   exp(beta)    Wald  df   prob
     acf
      1 1 1    0.0000                         1.0000
      1 1 2    0.0000                         1.0000
      1 2 1    0.0000                         1.0000
      1 2 2   -0.0000                         1.0000
      2 1 1    0.0000                         1.0000
      2 1 2    0.0000                         1.0000
      2 2 1   -0.0000                         1.0000
      2 2 2    0.5002    0.8143    0.614      1.6490    0.38   1  0.539
     acg
      1 1 1    0.0000                         1.0000
      1 1 2    0.0000                         1.0000
      1 2 1    0.0000                         1.0000
      1 2 2    0.0000                         1.0000
      2 1 1    0.0000                         1.0000
      2 1 2   -0.0000                         1.0000
      2 2 1    0.0000                         1.0000
      2 2 2    ******    ******    *****  0.00E+0000    0.00   1  1.000

The original model: {acf,acg,afg,cfg}

Remove the acf and acg interactions such that the association between A and C is homogeneous:

{ac,afg,cfg}

      X-squared            = 2.0996 (0.5520)
      L-squared            = 3.2523 (0.3543)
      Cressie-Read         = 2.3268 (0.5074)
      Dissimilarity index  = 0.0070
      Degrees of freedom   = 3
      Log-likelihood       = -1205.53767
      Number of parameters = 12 (+1)
      Sample size          = 665.0
      BIC(L-squared)       = -16.2470
      AIC(L-squared)       = -2.7477
      BIC(log-likelihood)  = 2489.0728
      AIC(log-likelihood)  = 2435.0753

Nice fit
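The information criteria in the two outputs can be reproduced from L-squared, the degrees of freedom, the log-likelihood, the number of parameters and the sample size. The formulas below are an assumption that matches the printed values, not something taken from LEM's documentation. The sketch also computes chi-square p-values and a likelihood-ratio comparison of the two models:

```python
import math


def chi2_sf(x, df):
    """Upper tail probability P(chi2_df > x) for a positive integer df (closed forms)."""
    if df % 2 == 0:
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return total


def information_criteria(l2, df, loglik, npar, n):
    """AIC/BIC on the L-squared and log-likelihood scales (assumed formulas)."""
    ln_n = math.log(n)
    return {
        "BIC(L-squared)": l2 - df * ln_n,
        "AIC(L-squared)": l2 - 2 * df,
        "BIC(log-likelihood)": -2 * loglik + npar * ln_n,
        "AIC(log-likelihood)": -2 * loglik + 2 * npar,
    }


full = information_criteria(0.0, 1, -1203.91152, 14, 665)        # {acf,acg,afg,cfg}
reduced = information_criteria(3.2523, 3, -1205.53767, 12, 665)  # {ac,afg,cfg}

# Likelihood-ratio test of removing acf and acg: 3 - 1 = 2 extra df
lr = 2 * (-1203.91152 - (-1205.53767))
print(full)
print(reduced)
print(f"LR = {lr:.4f}, df = 2, p = {chi2_sf(lr, 2):.3f}")
```

Removing acf and acg raises L-squared from 0.0000 to 3.2523 on 2 extra degrees of freedom (p ≈ 0.20), in line with the Wald tests: no evidence of effect modification.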

Estimate of the AC parameter

    effect      beta   std err  z-value  exp(beta)    Wald  df   prob
     ac
      1 1     0.0000                        1.0000
      1 2     0.0000                        1.0000
      2 1     0.0000                        1.0000
      2 2    -1.3220    0.2891   -4.574     0.2666   20.92   1  0.000

Homogeneous odds ratio = 0.2666

$$\gamma = \frac{OR - 1}{OR + 1} = \frac{-0.7334}{1.2666} = -0.579$$

DIGRAM's estimate: γ = -0.55
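The Wald statistics and the odds ratio conversion can be reproduced in Python from the printed beta and std err values, using z = beta / std err, Wald = z² on 1 df, and γ = (OR - 1)/(OR + 1). Small discrepancies in the last digit come from the rounding of the printed estimates; the helper names are illustrative only:

```python
import math


def wald_test(beta, se):
    """Wald z, Wald chi-square (z**2, 1 df) and its p-value for one parameter."""
    z = beta / se
    wald = z * z
    p = math.erfc(abs(z) / math.sqrt(2))  # equals P(chi2_1 > wald)
    return z, wald, p


def gamma_from_or(odds_ratio):
    return (odds_ratio - 1) / (odds_ratio + 1)


# acf parameter (2 2 2) from the model {acf,acg,afg,cfg}
z_acf, w_acf, p_acf = wald_test(0.5002, 0.8143)

# Homogeneous AC parameter (2 2) from the model {ac,afg,cfg}
z_ac, w_ac, p_ac = wald_test(-1.3220, 0.2891)
or_ac = math.exp(-1.3220)

print(f"acf: z={z_acf:.3f} Wald={w_acf:.2f} p={p_acf:.3f}")
print(f"ac:  z={z_ac:.3f} Wald={w_ac:.2f} p={p_ac:.6f} "
      f"OR={or_ac:.4f} gamma={gamma_from_or(or_ac):.3f}")
```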