Simple Regression


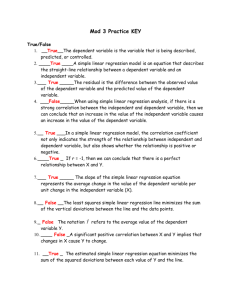

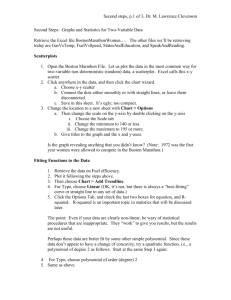

ECON 309 Lecture 8: Simple Regression

I. Studying Relationships

So far we have only been interested in the parameters (or characteristics) of a single variable: What is the mean number of customers in this store per day? What is the mean number of defective products per production run? And so on.

But often what we really want to know is the relationship between two variables. For example: How does the number of workers affect a firm’s output? How does the level of advertising affect a firm’s sales? How does the level of the minimum wage affect unemployment?

And often we want to know the relationship between more than two variables. For example: How do screen size, HD capability, and number of tuners affect the price of a TV? How do the price of a good, price of other goods, and income affect the quantity demanded of a product?

For now, we will be focusing on finding the relationship between just two variables; we call this doing a simple regression. When we get to three or more variables (that is, how two or more variables affect a third), we will call it multiple regression.

II. The Functional Form of the Relationship

When looking at a single variable, we usually supposed that it had certain fixed characteristics called parameters, and we came up with estimates of those parameters. E.g., a population has mean $\mu$ and standard deviation $\sigma$, which we estimate with $\bar{x}$ and $s$, respectively.

Similarly, we assume that the relationship between two variables x and y is defined by fixed parameters. The usual hypothesized relationship is this:

$$y = \alpha + \beta x + \varepsilon$$

The parameters here are α and β; these are the items we want to estimate. Notice that the functional form is linear; we are supposing there is a linear relationship between the two variables.

[Diagram the actual relationship; show that alpha is the y-intercept, beta the slope on x.]

In this function, the dependent variable is y and the independent variable is x.
That is, we think that the value of y results from the value of x, which is determined independently. If you think causation runs the other way (y affects x), then reverse the variables. If you think causation runs both ways (x affects y and y affects x), then the problem becomes a lot more complex. We will discuss problems of simultaneous determination later, but for now notice that many economic relationships are like that: price depends on quantity available, but quantity available depends on the expected price; the number of policemen can affect the crime rate, but the crime rate can affect the city’s decision about how many police to hire; etc.

We’ve also added ε at the end of the equation; this is the error term. It represents the fact that the relationship is not perfect; sometimes the actual y will differ from the y that would result from the linear relationship. We generally assume that ε is normally distributed with a mean of zero. (If its mean were not zero, we could just add that mean to the α term, leaving an error that does have a mean of zero. So the big assumption here is not that the mean is zero, but that the error is normally distributed.)

We will usually call our estimates of the parameters α and β a and b, respectively. In other words, we’ll estimate the line above with the following equation, which we call a best-fit line:

$$\hat{y} = a + bx$$

The y-with-a-hat, $\hat{y}$, is called the predicted value of y. It will differ from the observed y that corresponds to a given x in our sample for two reasons: first, because there’s an error term; and second, because our estimates a and b won’t be exactly right.

III. Ordinary Least Squares

So the question is, how do we find a best-fit line? That is, how do we find our estimates a and b?

The best-fit line gives us predicted values of y. As noted earlier, these will differ from the observed y’s most of the time. So we want to pick a and b in a way that minimizes the differences between observed and predicted y’s.
Or, more accurately, we want to minimize the squared differences between observed and predicted y’s.

[Draw a scatter-plot. Then show the best-fit line going through it. Then show the distance between the predicted and the observed value of y for each value of x.]

So the method we’ll use is called Least Squares or Ordinary Least Squares. It means minimizing the sum of squared differences between observed and predicted y’s. That is, we minimize the following:

$$\sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

It can be proven (using calculus) that this is minimized by the following formulas for a and b:

$$b = \frac{n \sum xy - \sum x \sum y}{n \sum x^2 - \left(\sum x\right)^2}$$

$$a = \bar{y} - b\bar{x}$$

Fortunately, Excel can do all that for you.

[Use adspendingkey.xls to demonstrate the hard way; then show the easy way. Emphasize the estimates of intercept and slope.]

It’s important to be able to interpret the results of a regression. Put the coefficient estimates into the linear functional form. Then say what it means, by stating that this-much increase in x corresponds to that-much increase in y.

[In this case: A $1 million increase in ad spending corresponds to about 0.363 million (or 363,000) more retained impressions by consumers per week.]

Another aspect of the regression you should look at is the R-squared value, also known as the coefficient of determination. Put simply, the R-squared is the percent of the variation in the dependent variable that is explained by variation in the independent variable.

IV. Hypothesis Testing With Regressions

Just as we tested hypotheses about the parameters of a single variable before, now we’ll test hypotheses about parameters of relationships.

Most often, we’ll be interested in the slope coefficient. And typically the most important question is whether it is different from zero. If it is, then we have support for the existence of a relationship. So a common set of hypotheses is:

$H_0: \beta = 0$

$H_1: \beta \neq 0$

Note that this is a two-tail test. Because this is such a common test, Excel’s regression analysis does most of the work for us.
For each estimated coefficient, it tells us the t-value and the p-value. The p-value is the smallest (most stringent) significance level at which we could still reject the null hypothesis.

[Use adspending. We can support the hypothesis that spending affects the number of impressions on consumers at any commonly used level of significance, including 1%.]

However, if you want to do any other hypothesis test (e.g., a one-tail test, or a test to see if the coefficient is significantly different from some number besides zero), you’ll have to set it up yourself. You can do this using the standard error. Use the following formula:

$$t = \frac{b - \beta_{H_0}}{se}$$

where $\beta_{H_0}$ is the value of the coefficient under the null hypothesis and se is the standard error given in the regression output. For the appropriate comparison t, use your t-table, with df = n – 2. Why df = n – 2? Because degrees of freedom are equal to n minus the number of things you’re estimating. In this case, you’re estimating the y-intercept and the slope on x.

[Replicate the Excel output. The coefficient estimate divided by the standard error gives you the t-value.]

You can also form confidence intervals around your estimates. The regression output already includes a 95% confidence interval. But you can form other confidence intervals. The general formula is:

$$b \pm t_c \cdot se$$

[Replicate the Excel output. For df = 21 – 2 = 19, and significance level of 5%, the t-critical is 2.093. Multiply this by the standard error from the regression; add and subtract this from the coefficient estimate to get the given confidence interval.]

V. Finding and Correcting Non-Linearities

It’s important to realize when your relationship might be non-linear. Often you can see this by looking at a scatterplot. If the relationship doesn’t look like a straight line, it’s likely you have a non-linear relationship. In the ad spending example, the scatterplot seems to show a curved shape, getting flatter as the amount of spending gets larger.
This makes sense: there are probably diminishing marginal returns to advertising.

We can also see this by looking at the plots of residuals and line fit in the regression output. The line fit plot shows that the predicted value of y is often greater than the observed value for low values of x, and less than the observed value for moderate-to-high values of x. The residuals plot shows this more directly. Residuals are the differences between observed and predicted values (observed minus predicted). Notice that in this picture, we have negative residuals for low values of x and positive residuals for higher values of x. These are all good signs that we have a non-linear relationship.

How can we correct for non-linearities? Here is where economic theory can be helpful. We already have an economic explanation: diminishing marginal returns. Do we know any functional forms that generate that kind of relationship? It turns out that a power function (x raised to a constant exponent) will do it. Suppose that x and y are related like so:

$$y = \alpha x^{\beta}$$

with 0 < β < 1 (for example, ½). If you graph this, you’ll get a curve that starts steep but gets flatter and flatter [show graph].

But how can we estimate the parameters of this non-linear relationship? We can make use of the properties of logarithms. Take the log of both sides to get:

$$\ln(y) = \ln(\alpha x^{\beta})$$

$$\ln(y) = \ln \alpha + \beta \ln x$$

Now we can transform our variables. Take the y series and create a new series called ln(y); take the x series and create a new series called ln(x). The relationship between these transformed variables is linear; the vertical intercept is ln α and the slope is β.

[Do this in Excel with adspending. Notice that we get an even more significant coefficient on the slope, and a higher R-squared value. Also look at the line fit and residuals plots; the differences don’t seem systematically related to the size of x.]

How should we interpret the results of a non-linear regression like this?
The slope coefficient tells us that when the log-of-x goes up by one, the log-of-y goes up by the amount of the coefficient. Not very useful information. But it turns out, for reasons I won’t explain, that when a function has the form given earlier (a constant times x raised to an exponent), the exponent on x can be interpreted as the elasticity of y with respect to x. Thus, the slope coefficient tells us that when x goes up by 1%, y will increase by approximately a percentage equal to the coefficient.

[For adspending, this means a 1% increase in your advertising budget will increase your number of consumer impressions by 0.6%.]
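
For anyone who wants to check the log transformation outside of Excel, here is a minimal Python sketch of the same steps. It is only an illustration under stated assumptions: the data are made up (generated without noise from y = 2x^0.5, not taken from the adspending file), and the helper names `ols` and `r_squared` are hypothetical, not part of any library. It fits a straight line to the raw data, fits another to the logged data using the least-squares formulas for a and b, and reads the log-log slope as an elasticity.

```python
import math


def ols(x, y):
    """Return (a, b) for the best-fit line y_hat = a + b*x."""
    n = len(x)
    sum_x = sum(x)
    sum_y = sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_x2 = sum(xi * xi for xi in x)
    # Slope and intercept from the closed-form least-squares formulas.
    b = (n * sum_xy - sum_x * sum_y) / (n * sum_x2 - sum_x ** 2)
    a = sum_y / n - b * (sum_x / n)
    return a, b


def r_squared(x, y, a, b):
    """Share of the variation in y explained by the fitted line."""
    y_bar = sum(y) / len(y)
    ss_resid = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_total = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_resid / ss_total


# Hypothetical, noise-free data built from y = 2 * x**0.5
# (an assumption for illustration; NOT the adspending data).
x = [1.0, 4.0, 9.0, 16.0, 25.0]
y = [2.0 * xi ** 0.5 for xi in x]

# Straight-line fit on the raw data.
a_lin, b_lin = ols(x, y)
r2_lin = r_squared(x, y, a_lin, b_lin)

# Same fit on the log-transformed series.
ln_x = [math.log(xi) for xi in x]
ln_y = [math.log(yi) for yi in y]
a_log, b_log = ols(ln_x, ln_y)
r2_log = r_squared(ln_x, ln_y, a_log, b_log)

alpha_hat = math.exp(a_log)  # the intercept estimates ln(alpha), so undo the log
elasticity = b_log           # the slope estimates the exponent beta

# Percent change in predicted y when x rises by 1%.
pct_change_y = (1.01 ** elasticity - 1) * 100

print(f"linear fit:  a = {a_lin:.3f}, b = {b_lin:.3f}, R^2 = {r2_lin:.3f}")
print(f"log-log fit: alpha = {alpha_hat:.3f}, beta = {elasticity:.3f}, R^2 = {r2_log:.3f}")
print(f"1% more x -> about {pct_change_y:.2f}% more y")
```

Because the made-up data follow the curve exactly, the log-log fit recovers the constant 2 and the exponent 0.5 (up to rounding) and has a higher R-squared than the straight-line fit. A 1% rise in x then raises predicted y by roughly half a percent, which is the elasticity reading of the slope. With real, noisy data the estimates would only be approximate.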