Topic #1: Probability, Mean, Variance, Covariance, and Correlation

I. Random Variable and Probability

A random variable is a variable that has yet to be determined. It may take on various

values and each value has a probability associated with it.

Example: You flip a coin. Heads X = 1 and Tails X = -1. If the coin is fair, then the

probability of a Heads is equal to ½. The same is true for Tails, i.e., P[Tails] = ½.

Therefore, f(x) = ½ if x = 1 and ½ if x = -1.

Example: Suppose that the probability your next customer will arrive before x minutes is

equal to F(x) = 1 - e^(-λx) for x ≥ 0 and λ > 0.

Example: Suppose that the probability that your business will have x customers today is

equal to f(x) = (1-λ)λ^x for x = 0,1,2,3,... and for 0 < λ < 1.
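
The two examples above can be checked numerically. The following is only a sketch: the function names `arrival_cdf` and `customer_pmf` and the value λ = 0.5 are assumptions made for illustration and do not come from the notes.

```python
import math

def arrival_cdf(x, lam):
    """P[next customer arrives within x minutes] = 1 - exp(-lam * x) for x >= 0."""
    if x < 0:
        return 0.0
    return 1.0 - math.exp(-lam * x)

def customer_pmf(x, lam):
    """P[exactly x customers today] = (1 - lam) * lam**x for x = 0, 1, 2, ..."""
    return (1.0 - lam) * lam ** x

lam = 0.5  # assumed value, chosen only for illustration

# The distribution function starts at 0 and rises toward 1 as x grows.
print(arrival_cdf(0.0, lam), arrival_cdf(10.0, lam))

# The probabilities of a discrete random variable sum to 1;
# 200 terms are plenty here because lam**x shrinks geometrically.
total = sum(customer_pmf(x, lam) for x in range(200))
print(total)
```

The same λ is used in both functions only for convenience; in the notes the two examples are unrelated.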

There are many types of probability functions. Each economic phenomenon will have

its own special probability function. These functions can be divided into discrete

and continuous functions. A discrete function is one where x can take on only countably

many values, like x = 0, x = 1, x = -1, etc. A continuous function is one where x can take on

any value in an interval of the real line.

A very commonly used probability function is the normal probability density function.

We write this function as

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{1}{2} \frac{(x-\mu)^2}{\sigma^2} \right\}$$

for -∞ < x < ∞.

We find that this function has the familiar bell-shape we have all seen before. If μ = 0 and

σ^2 = 1 we have the following graph of a standard normal density.

The area underneath this curve is equal to 1. Therefore, any part of it is bounded between

1 and 0 and can be thought of as a probability. For example, the probability that x is less

than 1.5 is equal to the yellow area in the graph below.

The graph makes it clear how we can calculate things such as P[ x ≤ 1.5], or even

P[-1 ≤ x ≤ 1.5] which is the area under the curve from -1 to 1.5.

Usually, we reserve the capital letter X for the random variable and the small letter x for

an observation (or data) on this random variable. From now on, we will follow this

convention and let X be random and x be non-random. Therefore, we should write the

above probabilities as P [X ≤ 1.5] and P[ -1 ≤ X ≤ 1.5] using the capital (random) X.

The yellow area in the graph above is equal to a definite integral from -∞ to 1.5 and we

write this as

$$P[X \le 1.5] = \int_{-\infty}^{1.5} \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}s^2}\, ds.$$

This integral has no closed-form solution, so we use a numerical table to approximate

the integral. This table is called the standard normal probability table and can be found

on the Internet at http://www.sjsu.edu/faculty/gerstman/EpiInfo/z-table.htm
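
The entries of such a table are themselves numerical approximations of the integral. As a rough sketch of the idea, the code below adds up thin rectangles under the standard normal density. The helper names `std_normal_pdf` and `midpoint_integral`, the number of rectangles, and the cutoff of -10 standing in for -∞ are all assumptions of this sketch.

```python
import math

def std_normal_pdf(s):
    """Standard normal density (mu = 0, sigma^2 = 1)."""
    return math.exp(-0.5 * s * s) / math.sqrt(2.0 * math.pi)

def midpoint_integral(f, a, b, n=100_000):
    """Approximate the integral of f over [a, b] with n midpoint rectangles."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

# The lower limit -infinity is replaced by -10 (an assumption of this sketch);
# the area to the left of -10 is negligible.
p = midpoint_integral(std_normal_pdf, -10.0, 1.5)
print(round(p, 4))
```

The result should agree with the table value for 1.5 to four decimal places.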

Example: Using the table above, find P[X ≤ 1.5]. The answer is 0.9332.

Example: Using the table above, find P[X ≤ -1.25]. The answer is 0.1056.

Example: Using the table above, find P[ 1.5 ≤ X ≤ 2.7]. The answer is 0.0633.

Example: Using the table above, find Xα such that P[ |X| ≥ Xα] = 0.05.

The answer is Xα = 1.96, since P[ |X| ≤ 1.96] = 0.95. Draw a graph

showing the standard normal and mark off -Xα and Xα.
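
The table lookups in these examples can be cross-checked in software. The sketch below uses `NormalDist` from Python's standard-library `statistics` module; the variable names are our own. The value 1.96 is the point that leaves probability 0.025 in each tail, so that 0.05 lies outside the interval from -1.96 to 1.96.

```python
from statistics import NormalDist

Z = NormalDist(mu=0.0, sigma=1.0)  # standard normal

p1 = Z.cdf(1.5)                 # P[X <= 1.5]
p2 = Z.cdf(-1.25)               # P[X <= -1.25]
p3 = Z.cdf(2.7) - Z.cdf(1.5)    # P[1.5 <= X <= 2.7]
x_alpha = Z.inv_cdf(0.975)      # leaves 0.025 in each tail, 0.05 in total

print(round(p1, 4), round(p2, 4), round(p3, 4), round(x_alpha, 2))
```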

II. Mean and Variance

The mean of a random variable is its expected value. For symmetric distributions this is

in the middle. For example, the standard normal density above is symmetric, since the

part to the left of zero is the mirror image of the part to the right.

However, not all densities are symmetric. The negative exponential density is not

symmetric since if f(x) = λe^(-λx) for x ≥ 0, then f(-x) ≠ f(x).

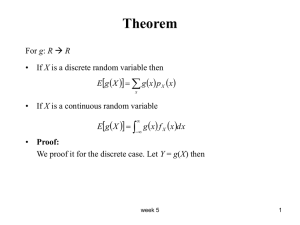

To compute the theoretical mean of a random variable we write

$$E[X] = \int_{-\infty}^{\infty} x f(x)\, dx$$

which means simply that you multiply x by f(x) and then add it up (or integrate it).

Example: Suppose that f(x) = 1/(2√x) for 0 ≤ x ≤ 1. Show that E[X] = 1/3.

Example: Suppose that f(x) = 1/4 for 0 ≤ x ≤ 4. Show that E[X] = 2.

Example: Suppose that

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{1}{2} \frac{(x-\mu)^2}{\sigma^2} \right\}$$

for -∞ < x < ∞. Show that E[X] = μ. Consider where the midpoint of the distribution is located.
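
The first two means can be checked numerically by adding up x·f(x) over the support. This is only a sketch: it takes the densities to be f(x) = 1/(2√x) on [0, 1] and f(x) = 1/4 on [0, 4], and the helper names `midpoint_integral` and `mean` are assumptions of ours.

```python
import math

def midpoint_integral(g, a, b, n=200_000):
    """Approximate the integral of g over [a, b] with n midpoint rectangles."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))

def mean(f, a, b):
    """E[X] = integral of x * f(x) over the support [a, b]."""
    return midpoint_integral(lambda x: x * f(x), a, b)

def f1(x):
    return 1.0 / (2.0 * math.sqrt(x))   # density on [0, 1]

def f2(x):
    return 0.25                          # density on [0, 4]

m1 = mean(f1, 0.0, 1.0)   # close to 1/3
m2 = mean(f2, 0.0, 4.0)   # close to 2
print(m1, m2)
```

The midpoint rule never evaluates f1 at x = 0, where the density is unbounded, which is why it is used here.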

The variance of random variable X is defined as var[X] = E[ (X-E[X])^2 ]. This can be

rewritten in the following way:

var[X] = E[ (X-E[X])^2 ]

= E[ X^2 - 2XE[X] + (E[X])^2 ]

= E[X^2] - E[2XE[X]] + E[(E[X])^2]

= E[X^2] - 2E[X]E[X] + (E[X])^2

= E[X^2] - (E[X])^2.
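
To see that the definition and the shortcut formula give the same number, we can apply both to the fair coin from Section I (Heads X = 1, Tails X = -1). The sketch below uses exact fractions; the variable names are our own.

```python
from fractions import Fraction

# Fair coin: Heads -> X = 1, Tails -> X = -1, each with probability 1/2.
pmf = {1: Fraction(1, 2), -1: Fraction(1, 2)}

mean = sum(x * p for x, p in pmf.items())               # E[X]
second_moment = sum(x**2 * p for x, p in pmf.items())   # E[X^2]

# Definition: E[(X - E[X])^2]
var_definition = sum((x - mean) ** 2 * p for x, p in pmf.items())
# Shortcut: E[X^2] - (E[X])^2
var_shortcut = second_moment - mean**2

print(mean, var_definition, var_shortcut)
```

For a discrete random variable the integral is replaced by a sum over the possible values, but the algebra is identical.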

To compute a theoretical variance we need to compute the mean E[X] and then compute

the 2nd raw moment about the origin, E[X^2]. The latter of these is computed as follows:

$$E[X^2] = \int_{-\infty}^{\infty} x^2 f(x)\, dx,$$

which means that we multiply f(x) by x^2 and then add things up (or integrate). The

variance measures the dispersion of probability about the mean. One might say

(intuitively) that an increase in the variance of X, without a change in the mean of X,

increases the randomness of X.

Example: Suppose that f(x) = 1/(2√x) for 0 ≤ x ≤ 1. Show that var(X) = 4/45.

Example: Suppose that f(x) = 1/4 for 0 ≤ x ≤ 4. Show that var(X) = 4/3.

Example: Suppose that

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{1}{2} \frac{(x-\mu)^2}{\sigma^2} \right\}$$

for -∞ < x < ∞. Show var(X) = σ^2.
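
As a worked sketch of the first variance example, take f(x) = 1/(2√x) on 0 ≤ x ≤ 1 and use E[X] = 1/3 from the earlier mean example. Only the second raw moment remains to be computed:

$$
\begin{aligned}
E[X^2] &= \int_0^1 x^2 \cdot \frac{1}{2\sqrt{x}}\, dx = \frac{1}{2}\int_0^1 x^{3/2}\, dx = \frac{1}{2}\cdot\frac{2}{5} = \frac{1}{5}, \\
\operatorname{var}(X) &= E[X^2] - (E[X])^2 = \frac{1}{5} - \frac{1}{9} = \frac{9}{45} - \frac{5}{45} = \frac{4}{45}.
\end{aligned}
$$

The second example works the same way: E[X^2] = 16/3 and (E[X])^2 = 4, so var(X) = 4/3.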

III. Covariance and Correlation

Finally, we turn to a consideration of two random variables, say X and Y. If we can

understand the operations on two random variables, then we can operate on any number

of them.

Just as X had a probability density function f(x), the two random variables X and Y have

a joint probability density function f(x, y). Everything is just about the same as before,

except that we now have to add up in two directions – one direction for x and one for y.

You already know this. For example, you must count in two directions to count the

number of people in the classroom. A double integral is just adding along two directions,

x and y. The joint probability density for random X and Y adds to 1. We write this as

$$1 = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x, y)\, dx\, dy.$$

Of course, this means that something MUST happen: adding the probabilities for all

x and y together gives unity.

Expected values and variances are computed as before, except we use a double integral.

For example,

$$E[X] = \int\!\!\int x f(x, y)\, dx\, dy \quad \text{and} \quad \operatorname{var}(X) = \int\!\!\int (x - E[X])^2 f(x, y)\, dx\, dy.$$

The mean and variance of Y are computed in the same fashion.

The covariance of X and Y is defined as follows

$$\operatorname{Cov}(X, Y) = \int\!\!\int (x - E[X])(y - E[Y]) f(x, y)\, dx\, dy,$$

assuming of course that E[X] and E[Y] exist and the integral converges. The covariance

of X and Y tells how X changes when Y changes. If it is positive, then the two random

variables tend to move together in the same direction (on average). If the covariance is

negative, then X and Y tend to move inversely (again, on average). For example, the

covariance of X = economic growth and Y = unemployment rate is generally thought to

be negative. Higher growth is associated with lower unemployment—theoretically. The

data we have appears to confirm this theory, as well. But, we have not reached the stage

of data. We are speaking of the theoretical covariance now.

Example: Suppose f(x, y) = (2/3)(x + y/√x) for 0 < x < 1 and 0 < y < 1. Show f(x, y)

integrates to 1. Show E[X] = 4/9, E[Y] = 11/18, and E[XY] = 7/27. From this

show that cov(X,Y) = E[XY] - E[X]E[Y] = -1/81. The integration here is

very simple, but involves multiple integration along the x and y axes.
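
The moments in this example can be verified exactly. The sketch below assumes the density is f(x, y) = (2/3)(x + y/√x) on the unit square, which reproduces the stated values of E[X], E[Y], and E[XY]. It stores the density as a sum of terms coef·x^a·y^b and uses the fact that the integral of x^a over (0, 1) equals 1/(a+1) when a > -1. The names `TERMS` and `moment` are assumptions of ours.

```python
from fractions import Fraction as F

# Density assumed to be f(x, y) = (2/3) * (x + y / sqrt(x)) on the unit square,
# stored as terms (coef, a, b) meaning coef * x**a * y**b.
TERMS = [
    (F(2, 3), F(1), F(0)),       # (2/3) * x
    (F(2, 3), F(-1, 2), F(1)),   # (2/3) * x**(-1/2) * y
]

def moment(p, q):
    """E[X**p * Y**q]; each term integrates to coef / ((a+p+1) * (b+q+1)).

    Valid only when every exponent a+p and b+q is greater than -1.
    """
    return sum(c / ((a + p + 1) * (b + q + 1)) for c, a, b in TERMS)

total = moment(0, 0)                                   # the density integrates to 1
ex, ey, exy = moment(1, 0), moment(0, 1), moment(1, 1)
cov = exy - ex * ey                                    # E[XY] - E[X]E[Y]
print(total, ex, ey, exy, cov)
```

Exact fractions are used so that the result can be compared directly with the values in the example rather than with rounded decimals.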

The definition of the theoretical correlation coefficient is quite simple and is given by

$$\operatorname{corr}(X, Y) = \frac{\operatorname{cov}(X, Y)}{\sqrt{\operatorname{var}(X)\,\operatorname{var}(Y)}}.$$

The correlation coefficient normalizes the covariance, so that correlation remains

between -1 and 1. A correlation of 1 means a perfect positive correlation, or that X and Y

move in the same positive linear direction. A correlation coefficient of -1 means a

perfect negative correlation, or that X and Y move in exactly opposite linear directions.

A zero correlation means that X and Y are not linearly related. The correlation

coefficient measures the degree and direction of the linear relation between two random

variables.

Example: Suppose f(x, y) = (2/3)(x + y/√x) for 0 < x < 1 and 0 < y < 1.

Show E[X^2] = 3/10 and E[Y^2] = 4/9. From these, together with E[X] = 4/9 and E[Y] = 11/18,

show var(X) = 83/810 and var(Y) = 23/324. Therefore, corr(X,Y) = -0.1448

roughly.
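
The arithmetic behind the correlation can be laid out step by step. This sketch assumes the density f(x, y) = (2/3)(x + y/√x) on the unit square, for which E[X] = 4/9, E[Y] = 11/18, and cov(X,Y) = -1/81:

$$
\begin{aligned}
E[X^2] &= \frac{2}{3}\left(\frac{1}{4} + \frac{1}{2}\cdot\frac{2}{5}\right) = \frac{3}{10}, &
E[Y^2] &= \frac{2}{3}\left(\frac{1}{2}\cdot\frac{1}{3} + \frac{1}{4}\cdot 2\right) = \frac{4}{9}, \\
\operatorname{var}(X) &= \frac{3}{10} - \left(\frac{4}{9}\right)^2 = \frac{83}{810}, &
\operatorname{var}(Y) &= \frac{4}{9} - \left(\frac{11}{18}\right)^2 = \frac{23}{324}, \\
\operatorname{corr}(X, Y) &= \frac{-1/81}{\sqrt{\frac{83}{810}\cdot\frac{23}{324}}} \approx -0.1448.
\end{aligned}
$$

The second moments must be centered before they enter the formula. Using the raw moments E[Y^2] = 4/9 and E[X^2] = 3/10 in place of the variances would give about -0.0338 instead.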