Data


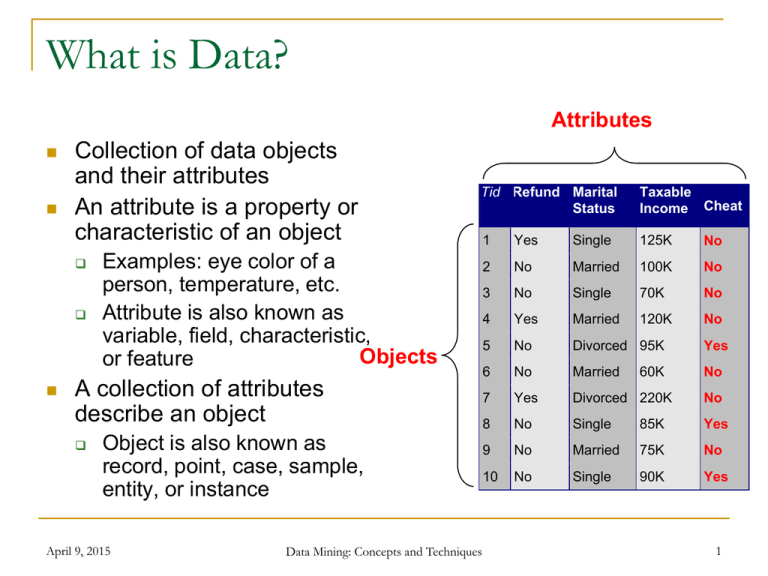

What is Data?

Collection of data objects and their attributes

An attribute is a property or characteristic of an object

- Examples: eye color of a person, temperature, etc.

- An attribute is also known as a variable, field, characteristic, or feature

A collection of attributes describes an object

- An object is also known as a record, point, case, sample, entity, or instance

In the table below, the columns are the attributes and the rows are the objects:

| Tid | Refund | Marital Status | Taxable Income | Cheat |
|-----|--------|----------------|----------------|-------|
| 1   | Yes    | Single         | 125K           | No    |
| 2   | No     | Married        | 100K           | No    |
| 3   | No     | Single         | 70K            | No    |
| 4   | Yes    | Married        | 120K           | No    |
| 5   | No     | Divorced       | 95K            | Yes   |
| 6   | No     | Married        | 60K            | No    |
| 7   | Yes    | Divorced       | 220K           | No    |
| 8   | No     | Single         | 85K            | Yes   |
| 9   | No     | Married        | 75K            | No    |
| 10  | No     | Single         | 90K            | Yes   |

April 9, 2015

Data Mining: Concepts and Techniques


Transaction Data

Market-Basket transactions

| TID | Items                     |
|-----|---------------------------|
| 1   | Bread, Milk               |
| 2   | Bread, Diaper, Beer, Eggs |
| 3   | Milk, Diaper, Beer, Coke  |
| 4   | Bread, Milk, Diaper, Beer |
| 5   | Bread, Milk, Diaper, Coke |

Data Matrix

[ 1456

2334

2211]


% 1. Title: Iris Plants Database

%

% 2. Sources:

% (a) Creator: R.A. Fisher

% (b) Donor: Michael Marshall (MARSHALL%PLU@io.arc.nasa.gov)

% (c) Date: July, 1988

%

@RELATION iris

@ATTRIBUTE sepallength NUMERIC

@ATTRIBUTE sepalwidth NUMERIC

@ATTRIBUTE petallength NUMERIC

@ATTRIBUTE petalwidth NUMERIC

@ATTRIBUTE class {Iris-setosa,Iris-versicolor,Iris-virginica}

The Data of the ARFF file looks like the following:

@DATA

5.1,3.5,1.4,0.2,Iris-setosa

4.9,3.0,1.4,0.2,Iris-setosa

4.7,3.2,1.3,0.2,Iris-setosa
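The header and data sections above can be read with a few lines of code. The following is a minimal sketch, not a full ARFF reader: the function name `parse_arff` is hypothetical, and it assumes only `%` comments, `@RELATION`, `@ATTRIBUTE`, and `@DATA` lines with comma-separated values (no quoting, no `?` missing values, no sparse rows).

```python
def parse_arff(text):
    """Parse a small subset of ARFF: % comments, @RELATION, @ATTRIBUTE, @DATA."""
    relation = None
    attributes = []   # (name, type) pairs in declaration order
    rows = []
    in_data = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):
            continue  # skip blank lines and comments
        keyword = line.split(None, 1)[0].upper()
        if in_data:
            values = [v.strip() for v in line.split(",")]
            row = {}
            for (name, kind), value in zip(attributes, values):
                # Numeric attributes become floats; nominal values stay strings.
                row[name] = float(value) if kind == "NUMERIC" else value
            rows.append(row)
        elif keyword == "@RELATION":
            relation = line.split(None, 1)[1]
        elif keyword == "@ATTRIBUTE":
            _, name, kind = line.split(None, 2)
            kind = kind.strip()
            attributes.append((name, "NUMERIC" if kind.upper() == "NUMERIC" else kind))
        elif keyword == "@DATA":
            in_data = True
    return relation, attributes, rows


SAMPLE = """\
% 1. Title: Iris Plants Database
@RELATION iris
@ATTRIBUTE sepallength NUMERIC
@ATTRIBUTE sepalwidth NUMERIC
@ATTRIBUTE petallength NUMERIC
@ATTRIBUTE petalwidth NUMERIC
@ATTRIBUTE class {Iris-setosa,Iris-versicolor,Iris-virginica}
@DATA
5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
4.7,3.2,1.3,0.2,Iris-setosa
"""

relation, attributes, rows = parse_arff(SAMPLE)
print(relation, len(attributes), rows[0])
```

Each parsed row is one data object, and each key in the row is one attribute.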


UCI Machine Learning Repository

http://mlearn.ics.uci.edu/databases/

http://archive.ics.uci.edu/ml/datasets.html/

Attribute Values

Attribute values are numbers or symbols assigned to an attribute

Distinction between attributes and attribute values

- Same attribute can be mapped to different attribute values. Example: height can be measured in feet or meters

- Different attributes can be mapped to the same set of values. Example: attribute values for ID and age are integers

- But properties of attribute values can be different: ID has no limit but age has a maximum and minimum value

Discrete and Continuous Attributes

Discrete Attribute (Categorical Attribute)

Has only a finite or countably infinite set of values

Examples: zip codes, counts, or the set of words in a collection of

documents

Often represented as integer variables.

Note: binary attributes are a special case of discrete attributes

Continuous Attribute (Numerical Attribute)

Has real numbers as attribute values

Examples: temperature, height, or weight.

Practically, real values can only be measured and represented

using a finite number of digits.

Continuous attributes are typically represented as floating-point

variables.


Chapter 3: Data Preprocessing

Why preprocess the data?

Data cleaning

Data integration and transformation

Data reduction

Discretization and concept hierarchy

generation

Summary


Why Data Preprocessing?

Data in the real world is dirty

- incomplete: lacking attribute values, lacking certain attributes of interest, or containing only aggregate data

- noisy: containing errors or outliers

- inconsistent: containing discrepancies in codes or names

No quality data, no quality mining results!

- Quality decisions must be based on quality data

- Data warehouse needs consistent integration of quality data

Multi-Dimensional Measure of Data Quality

A well-accepted multidimensional view:

- Accuracy

- Completeness

- Consistency

- Timeliness

- Believability

- Value added

- Interpretability

- Accessibility

Broad categories: intrinsic, contextual, representational, and accessibility.

Major Tasks in Data Preprocessing

Data cleaning

- Fill in missing values, smooth noisy data, identify or remove outliers, and resolve inconsistencies

Data integration

- Integration of multiple databases, data cubes, or files

Data transformation

- Normalization and aggregation

Data reduction

- Obtains reduced representation in volume but produces the same or similar analytical results

Data discretization

- Part of data reduction but with particular importance, especially for numerical data


Data Cleaning

Data cleaning tasks

Fill in missing values

Identify outliers and smooth out noisy data

Correct inconsistent data


Missing Data

Data is not always available

- E.g., many tuples have no recorded value for several attributes, such as customer income in sales data

Missing data may be due to

- equipment malfunction

- data that was inconsistent with other recorded data and was therefore deleted

- data not entered due to misunderstanding

- certain data not being considered important at the time of entry

- failure to register the history or changes of the data

Missing data may need to be inferred.

Imputing Values to Missing Data

In federated data, between 30% and 70% of the data points will have at least one missing attribute, so ignoring all records with a missing value wastes data

- Remaining data is seriously biased

- Lack of confidence in results

Understanding the pattern of missing data unearths data integrity issues

How to Handle Missing Data?

Ignore the tuple: usually done when the class label is missing (assuming the task is classification); not effective when the percentage of missing values per attribute varies considerably.

Fill in the missing value manually: tedious and possibly infeasible

Use a global constant to fill in the missing value: e.g., “unknown” (effectively a new class?)

Use the attribute mean to fill in the missing value

Use the attribute mean for all samples belonging to the same class to fill in the missing value: smarter

Use the most probable value to fill in the missing value: inference-based, such as a Bayesian formula or decision tree
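Two of the strategies above, filling with the attribute mean and filling with the mean of the same class, can be sketched as follows. This is an illustration under assumptions: the function names, the use of `None` to mark a missing value, and the toy records (income in thousands) are all hypothetical.

```python
from statistics import mean


def impute_mean(rows, attr):
    """Fill missing values of `attr` with the mean over all known values."""
    fill = mean(r[attr] for r in rows if r[attr] is not None)
    return [dict(r, **{attr: fill}) if r[attr] is None else dict(r) for r in rows]


def impute_class_mean(rows, attr, label):
    """Fill missing values of `attr` with the mean over rows of the same class."""
    known = {}
    for r in rows:
        if r[attr] is not None:
            known.setdefault(r[label], []).append(r[attr])
    # Assumption: every class has at least one known value; otherwise KeyError.
    class_means = {c: mean(vals) for c, vals in known.items()}
    return [
        dict(r, **{attr: class_means[r[label]]}) if r[attr] is None else dict(r)
        for r in rows
    ]


# Toy records; None marks a missing income.
records = [
    {"income": 125, "cheat": "No"},
    {"income": 100, "cheat": "No"},
    {"income": None, "cheat": "No"},
    {"income": 95, "cheat": "Yes"},
    {"income": 85, "cheat": "Yes"},
]

print(impute_mean(records, "income")[2])                 # filled with 101.25
print(impute_class_mean(records, "income", "cheat")[2])  # filled with 112.5
```

The class-conditional fill differs from the global one because the "No" records in this toy set have higher incomes than the "Yes" records.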

Noise

Noise refers to modification of original values

- Examples: distortion of a person’s voice when talking on a poor phone and “snow” on television screen

[Figure: two panels, “Two Sine Waves” and “Two Sine Waves + Noise”]

Outliers

Outliers are data objects with characteristics

that are considerably different than most of

the other data objects in the data set


Noisy Data

Noise: random error or variance in a measured

variable

Incorrect attribute values may be due to

faulty data collection instruments

data entry problems

data transmission problems

technology limitation

inconsistency in naming convention


How to Handle Noisy Data?

Binning method:

- first sort data and partition into (equi-depth) bins

- then one can smooth by bin means, smooth by bin median, smooth by bin boundaries, etc.

Clustering

- detect and remove outliers

Combined computer and human inspection

- detect suspicious values and check by human

Regression

- smooth by fitting the data into regression functions

Binning Methods for Data Smoothing

* Sorted data for price (in dollars): 4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34

* Partition into (equi-depth) bins:

- Bin 1: 4, 8, 9, 15

- Bin 2: 21, 21, 24, 25

- Bin 3: 26, 28, 29, 34

* Smoothing by bin means (rounded to the nearest integer):

- Bin 1: 9, 9, 9, 9

- Bin 2: 23, 23, 23, 23

- Bin 3: 29, 29, 29, 29

* Smoothing by bin boundaries:

- Bin 1: 4, 4, 4, 15

- Bin 2: 21, 21, 25, 25

- Bin 3: 26, 26, 26, 34
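The two smoothing rules in this example can be reproduced with a short sketch. The function names are hypothetical, and two details are assumptions not stated on the slide: means are rounded to the nearest integer, and a value equally close to both boundaries goes to the lower one.

```python
def smooth_by_bin_means(bins):
    """Replace every value in a bin by the bin mean (rounded to an integer)."""
    return [[round(sum(b) / len(b))] * len(b) for b in bins]


def smooth_by_bin_boundaries(bins):
    """Replace every value by the closer of the bin minimum and maximum."""
    smoothed = []
    for b in bins:
        lo, hi = min(b), max(b)
        # Ties go to the lower boundary (an assumption).
        smoothed.append([lo if v - lo <= hi - v else hi for v in b])
    return smoothed


# The equi-depth bins from the price example.
price_bins = [[4, 8, 9, 15], [21, 21, 24, 25], [26, 28, 29, 34]]

print(smooth_by_bin_means(price_bins))
print(smooth_by_bin_boundaries(price_bins))
```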

Simple Discretization Methods: Binning

Equal-width (distance) partitioning:

- It divides the range into N intervals of equal size: uniform grid

- If A and B are the lowest and highest values of the attribute, the width of the intervals will be W = (B - A)/N

- The most straightforward approach

- But outliers may dominate the presentation

- Skewed data is not handled well

Equal-depth (frequency) partitioning:

- It divides the range into N intervals, each containing approximately the same number of samples

- Good data scaling

- Managing categorical attributes can be tricky
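To compare the two partitioning schemes, here is a minimal sketch applied to the twelve sorted prices 4 to 34. The function names are hypothetical; the equal-width version assumes the attribute is not constant (otherwise W = 0) and places the maximum value in the last interval.

```python
def equal_width_bins(values, n):
    """Split the value range into n intervals of width W = (B - A) / N."""
    a, b = min(values), max(values)
    width = (b - a) / n
    bins = [[] for _ in range(n)]
    for v in sorted(values):
        index = min(int((v - a) / width), n - 1)  # maximum goes in the last bin
        bins[index].append(v)
    return bins


def equal_depth_bins(values, n):
    """Split the sorted values into n bins of (almost) equal size."""
    ordered = sorted(values)
    size = len(ordered)
    return [ordered[i * size // n:(i + 1) * size // n] for i in range(n)]


prices = [4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34]

print(equal_width_bins(prices, 3))  # intervals [4, 14), [14, 24), [24, 34]
print(equal_depth_bins(prices, 3))  # four values per bin
```

With equal width the bins hold 3, 3, and 6 values, which hints at why skewed data is handled poorly; equal depth keeps 4 values in every bin.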

Cluster Analysis


Regression

[Figure: regression line y = x + 1 on axes x and y; the observed value Y1 at X1 is replaced by the value Y1’ on the line]
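Smoothing by regression can be sketched with an ordinary least-squares line. This is one possible reading of the figure, not the slide's own procedure: the function names are hypothetical, and the toy data were chosen so that the fitted line is y = x + 1.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)  # assumes xs are not all equal
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def smooth_by_regression(xs, ys):
    """Replace each observed y by the value on the fitted line."""
    slope, intercept = fit_line(xs, ys)
    return [slope * x + intercept for x in xs]


xs = [1, 2, 3, 4]
ys = [2.5, 2.5, 3.5, 5.5]   # noisy observations (toy data)

print(fit_line(xs, ys))              # slope 1.0, intercept 1.0
print(smooth_by_regression(xs, ys))  # values on the line y = x + 1
```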


Data Integration

Data integration:

- combines data from multiple sources into a coherent store

Schema integration

- integrate metadata from different sources

- Entity identification problem: identify real world entities from multiple data sources, e.g., A.cust-id ≡ B.cust-#

Detecting and resolving data value conflicts

- for the same real world entity, attribute values from different sources are different

- possible reasons: different representations, different scales, e.g., metric vs. British units

Handling Redundant Data in Data Integration

Redundant data occur often when integrating multiple databases

- The same attribute may have different names in different databases

- One attribute may be a “derived” attribute in another table, e.g., annual revenue

Redundant data can often be detected by correlation analysis

Careful integration of the data from multiple sources may help reduce/avoid redundancies and inconsistencies and improve mining speed and quality
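Correlation analysis for redundancy can be sketched with the Pearson coefficient. The following is only an illustration: the function names, the 0.95 threshold, and the toy columns (where annual revenue is derived as 12 times monthly revenue) are assumptions, and constant columns are not handled.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric columns."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def redundant_pairs(columns, threshold=0.95):
    """Return attribute pairs whose absolute correlation reaches the threshold."""
    return [
        (a, b)
        for a, b in combinations(columns, 2)
        if abs(pearson(columns[a], columns[b])) >= threshold
    ]


# Toy table: annual_revenue is a derived attribute (12 * monthly_revenue).
table = {
    "monthly_revenue": [10, 20, 30, 40],
    "annual_revenue": [120, 240, 360, 480],
    "employees": [5, 3, 9, 4],
}

print(redundant_pairs(table))
```

A high correlation only flags a candidate; whether one attribute can really be dropped still needs a check against the schema.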

Data Transformation

Smoothing: remove noise from data

Aggregation: summarization, data cube construction

Generalization: concept hierarchy climbing

Normalization: scaled to fall within a small, specified range

- min-max normalization

- z-score normalization

- normalization by decimal scaling

Attribute/feature construction

- New attributes constructed from the given ones

Data Transformation: Normalization

min-max normalization

$$v' = \frac{v - \min_A}{\max_A - \min_A}\,(\mathit{new\_max}_A - \mathit{new\_min}_A) + \mathit{new\_min}_A$$

z-score normalization

$$v' = \frac{v - \mathit{mean}_A}{\mathit{stand\_dev}_A}$$

normalization by decimal scaling

$$v' = \frac{v}{10^{j}}$$

where $j$ is the smallest integer such that $\max(|v'|) < 1$
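The three normalization formulas can be written out as a short sketch. The function names and sample values are hypothetical. Two choices are assumptions the slide does not fix: the z-score uses the population standard deviation, and the decimal-scaling search starts at j = 0, so it returns the smallest non-negative j.

```python
from statistics import mean, pstdev


def min_max(values, new_min=0.0, new_max=1.0):
    """Map [min_A, max_A] linearly onto [new_min, new_max]."""
    lo, hi = min(values), max(values)  # assumes hi > lo
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]


def z_score(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    m, s = mean(values), pstdev(values)  # assumes s > 0
    return [(v - m) / s for v in values]


def decimal_scaling(values):
    """Divide by 10^j for the smallest j >= 0 with max(|v'|) < 1."""
    largest = max(abs(v) for v in values)
    j = 0
    while largest / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values], j


incomes = [12000, 73600, 98000]
print(min_max(incomes))                      # 73600 maps to about 0.716
print(z_score([2, 4, 4, 4, 5, 5, 7, 9]))     # mean 5, standard deviation 2
print(decimal_scaling([-986, 217, 917]))     # j = 3
```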


Aggregation

Combining two or more attributes (or objects) into a single attribute (or object)

Purpose

- Data reduction: reduce the number of attributes or objects

- Change of scale: cities aggregated into regions, states, countries, etc.

- More “stable” data: aggregated data tends to have less variability

Variation of Precipitation in Australia

[Figure: two panels, “Standard Deviation of Average Monthly Precipitation” and “Standard Deviation of Average Yearly Precipitation”]

Sampling

Sampling is the main technique employed for data

selection.

It is often used for both the preliminary investigation of the

data and the final data analysis.

Statisticians sample because obtaining the entire set of

data of interest is too expensive or time consuming.

Sampling is used in data mining because processing the

entire set of data of interest is too expensive or time

consuming.


Sampling …

The key principle for effective sampling is the following:

- using a sample will work almost as well as using the entire data set, if the sample is representative

- a sample is representative if it has approximately the same property (of interest) as the original set of data

Types of Sampling

Simple Random Sampling

- There is an equal probability of selecting any particular item

Sampling without replacement

- As each item is selected, it is removed from the population

Sampling with replacement

- Objects are not removed from the population as they are selected for the sample.

- In sampling with replacement, the same object can be picked up more than once

Stratified sampling

- Split the data into several partitions; then draw random samples from each partition
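The sampling schemes above map onto Python's standard `random` module. This is a minimal sketch: the wrapper names, the toy population, and the choice to stratify by remainder modulo 4 are illustrative assumptions. `random.Random.sample` draws without replacement and `random.Random.choices` draws with replacement.

```python
import random


def sample_without_replacement(items, k, rng):
    """Each selected item is removed from the population (no repeats)."""
    return rng.sample(items, k)


def sample_with_replacement(items, k, rng):
    """Items stay in the population, so the same item can be picked again."""
    return rng.choices(items, k=k)


def stratified_sample(items, key, k_per_stratum, rng):
    """Partition items by key(item), then draw randomly from each partition."""
    strata = {}
    for item in items:
        strata.setdefault(key(item), []).append(item)
    chosen = []
    for members in strata.values():
        chosen.extend(rng.sample(members, min(k_per_stratum, len(members))))
    return chosen


rng = random.Random(0)            # fixed seed so runs are repeatable
population = list(range(100))

print(sample_without_replacement(population, 10, rng))
print(sample_with_replacement(population, 10, rng))
print(stratified_sample(population, lambda x: x % 4, 2, rng))
```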

Sample Size

[Figure: the same data set drawn with 8000 points, 2000 points, and 500 points]

Sampling

[Figure: two panels, “Raw Data” and “Cluster/Stratified Sample”]

Curse of Dimensionality

When dimensionality increases, data becomes increasingly sparse in the space that it occupies

Definitions of density and distance between points, which are critical for clustering and outlier detection, become less meaningful

[Figure: experiment]

• Randomly generate 500 points

• Compute difference between max and min distance between any pair of points
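The experiment described on this slide can be approximated with a short sketch. Several details are assumptions: points are drawn uniformly from the unit hypercube, the difference between the maximum and minimum pairwise distance is divided by the minimum distance to make dimensions comparable, and the demonstration uses 100 points instead of 500 to keep the pure-Python run short. The function name `distance_contrast` is hypothetical.

```python
import math
import random
from itertools import combinations


def distance_contrast(n_points, n_dims, rng):
    """Return (max - min) / min over all pairwise Euclidean distances."""
    points = [[rng.random() for _ in range(n_dims)] for _ in range(n_points)]
    distances = [math.dist(p, q) for p, q in combinations(points, 2)]
    d_min, d_max = min(distances), max(distances)
    return (d_max - d_min) / d_min if d_min > 0 else math.inf


rng = random.Random(0)
for dims in (2, 10, 50):
    # The contrast shrinks as the number of dimensions grows.
    print(dims, round(distance_contrast(100, dims, rng), 2))
```

As the contrast shrinks, the nearest and farthest neighbors of a point become hard to tell apart, which is what weakens distance-based clustering and outlier detection.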

Dimensionality Reduction

Purpose:

- Avoid curse of dimensionality

- Reduce amount of time and memory required by data mining algorithms

- Allow data to be more easily visualized

- May help to eliminate irrelevant features or reduce noise

Techniques

- Principal Component Analysis

- Singular Value Decomposition

- Others: supervised and non-linear techniques

Feature Subset Selection

Another way to reduce dimensionality of data

Redundant features

- duplicate much or all of the information contained in one or more other attributes

- Example: purchase price of a product and the amount of sales tax paid

Irrelevant features

- contain no information that is useful for the data mining task at hand

- Example: students' ID is often irrelevant to the task of predicting students' GPA

Feature Creation

Create new attributes that can capture the important information in a data set much more efficiently than the original attributes

Three general methodologies:

- Feature Extraction: domain-specific

- Mapping Data to New Space

- Feature Construction: combining features

Clustering

Partition data set into clusters, and one can store

cluster representation only

Can be very effective if data is clustered but not if

data is “smeared”

Can have hierarchical clustering and be stored in

multi-dimensional index tree structures

There are many choices of clustering definitions

and clustering algorithms, further detailed in


Discretization

Three types of attributes:

Nominal — values from an unordered set

Ordinal — values from an ordered set

Continuous — real numbers

Discretization:

divide the range of a continuous attribute into intervals

Some classification algorithms only accept categorical

attributes.

Reduce data size by discretization

Prepare for further analysis


Discretization and Concept Hierarchy

Discretization

- reduce the number of values for a given continuous attribute by dividing the range of the attribute into intervals. Interval labels can then be used to replace actual data values.

Concept hierarchies

- reduce the data by collecting and replacing low level concepts (such as numeric values for the attribute age) by higher level concepts (such as young, middle-aged, or senior).
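Replacing numeric ages by interval labels can be sketched as below. The cut points 35 and 60 are hypothetical (the slide names the labels but gives no boundaries), as is the function name `age_concept`.

```python
from bisect import bisect_right

AGE_CUTS = [35, 60]                               # hypothetical interval boundaries
AGE_LABELS = ["young", "middle-aged", "senior"]   # one label per interval


def age_concept(age):
    """Map a numeric age to its higher-level concept.

    Intervals: age < 35 is young, 35 <= age < 60 is middle-aged,
    age >= 60 is senior.
    """
    return AGE_LABELS[bisect_right(AGE_CUTS, age)]


ages = [23, 35, 47, 59, 60, 81]
print([age_concept(a) for a in ages])
```

After the mapping, the attribute has three distinct values instead of one per age, which is the data reduction the slide refers to.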

Concept hierarchy generation for categorical data

Specification of a partial ordering of attributes explicitly at the schema level by users or experts

Specification of a portion of a hierarchy by explicit data grouping

Specification of a set of attributes, but not of their partial ordering

Specification of only a partial set of attributes

Specification of a set of attributes

Concept hierarchy can be automatically generated

based on the number of distinct values per

attribute in the given attribute set. The attribute

with the most distinct values is placed at the

lowest level of the hierarchy.

country: 15 distinct values

province_or_state: 65 distinct values

city: 3567 distinct values

street: 674,339 distinct values
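The distinct-value heuristic can be sketched in a few lines. The function name and the toy address table are hypothetical. The ordering is only a heuristic: it can mislead when a higher-level attribute has more distinct values than a lower-level one, so the result should be reviewed.

```python
def hierarchy_by_distinct_values(columns):
    """Order attributes from top level (fewest distinct values) to lowest level."""
    counts = {name: len(set(values)) for name, values in columns.items()}
    return sorted(counts, key=counts.get)


# Toy address table with six rows.
addresses = {
    "street": ["1 Main St", "2 Main St", "5 Oak Ave", "9 Elm Rd", "3 King St", "7 Bay St"],
    "city": ["Vancouver", "Vancouver", "Victoria", "Toronto", "Toronto", "Ottawa"],
    "province_or_state": ["BC", "BC", "BC", "ON", "ON", "ON"],
    "country": ["Canada"] * 6,
}

print(hierarchy_by_distinct_values(addresses))
```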