Sampling

advertisement

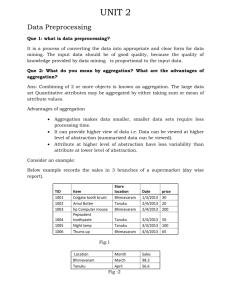

Data Transformation and Feature Selection/Extraction Qiang Yang Thanks: J. Han, Isabelle Guyon, Martin Bachler 2015年4月13日星期 一 Data Mining: Concepts and Techniques 1 Continuous Attribute Temperature Outlook Tempreature Humidity Windy Class Sunny 40 high false N sunny 37 high true N overcast 34 high false P rain 26 high false P rain 15 normal false P rain 13 normal true N overcast 17 normal true P sunny 28 high false N sunny 25 normal false P rain 23 normal false P sunny 27 normal true P overcast 22 high true P overcast 40 normal false P rain 31 high true N Discretization Three types of attributes: Nominal — values from an unordered set Example: attribute “outlook” from weather data Values: “sunny”,”overcast”, and “rainy” Ordinal — values from an ordered set Example: attribute “temperature” in weather data Values: “hot” > “mild” > “cool” Continuous — real numbers Discretization: divide the range of a continuous attribute into intervals Some classification algorithms only accept categorical attributes. Reduce data size by discretization Supervised (entropy) vs. Unsupervised (binning) Data Mining: Concepts and Techniques 3 Simple Discretization Methods: Binning Equal-width (distance) partitioning: It divides the range into N intervals of equal size: uniform grid if A and B are the lowest and highest values of the attribute, the width of intervals will be: W = (B –A)/N. The most straightforward But outliers may dominate presentation: Skewed data is not handled well. Equal-depth (frequency) partitioning: It divides the range into N intervals, each containing approximately same number of samples Data Mining: Concepts and Techniques 4 Histograms 30 25 20 15 10 Data Mining: Concepts and Techniques 100000 90000 80000 70000 60000 0 50000 5 40000 35 30000 40 20000 A popular data reduction technique Divide data into buckets and store average (sum) for each bucket Can be constructed optimally in one dimension using dynamic programming Related to quantization problems. 10000 5 Supervised Method: Entropy-Based Discretization Given a set of samples S, if S is partitioned into two intervals S1 and S2 using boundary T, the entropy after partitioning is | | | | E (S ,T ) S1 Ent ( | S| S 2 Ent ( ) ) S1 | S | S2 The boundary T that minimizes the entropy function over all possible boundaries is selected as a binary discretization. Greedy Method: the process is recursively applied when T goes from smallest to largest value of attribute A, until some stopping criterion is met, e.g., for some user-given Ent(S ) E (T , S ) Data Mining: Concepts and Techniques 6 How to Calculate ent(S)? Given two classes Yes and No, in a set S, Let p1 be the proportion of Yes Let p2 be the proportion of No, p1 + p2 = 100% Entropy is: ent(S) = -p1*log(p1) –p2*log(p2) When p1=1, p2=0, ent(S)=0, When p1=50%, p2=50%, ent(S)=maximum! Data Mining: Concepts and Techniques 7 Transformation: Normalization min-max normalization v min A v' (new _ max A new _ min A) new _ min A max A min A z-score normalization v' v normalization by decimal scaling v v' j 10 Where j is the smallest integer such that Max(| v ' |)<1 Data Mining: Concepts and Techniques 8 Transforming Ordinal to Boolean Simple transformation allows to code ordinal attribute with n values using n-1 boolean attributes Example: attribute “temperature” Temperature Temperature > cold Temperature > medium Cold False False Medium True False Hot True True Original data Transformed data How many binary attributes shall we introduce for nominal values such as “Red” vs. “Blue” vs. “Green”? Data Mining: Concepts and Techniques 9 Data Sampling 2015年4月13日星期 一 Data Mining: Concepts and Techniques 10 Sampling Allow a mining algorithm to run in complexity that is potentially sub-linear to the size of the data Choose a representative subset of the data Simple random sampling may have very poor performance in the presence of skew (uneven) classes Develop adaptive sampling methods Stratified sampling: Approximate the percentage of each class (or subpopulation of interest) in the overall database Used in conjunction with skewed data Data Mining: Concepts and Techniques 11 Sampling Raw Data Data Mining: Concepts and Techniques 12 Sampling Example Cluster/Stratified Sample Raw Data Data Mining: Concepts and Techniques 13 Summary Data preparation is a big issue for data mining Data preparation includes transformation, which are: Data sampling and feature selection Discretization Missing value handling Incorrect value handling Feature Selection and Feature Extraction Data Mining: Concepts and Techniques 14