
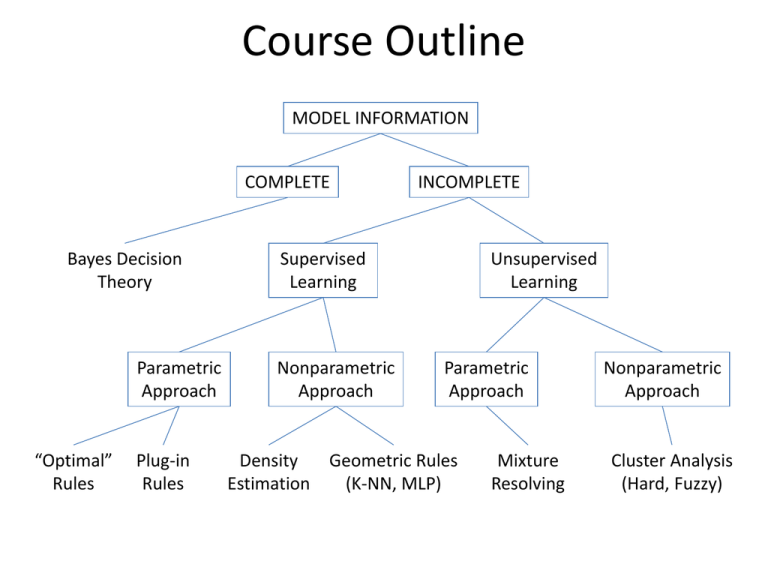


Course Outline

MODEL INFORMATION
• COMPLETE: Bayes Decision Theory (“Optimal” Rules)
• INCOMPLETE, Supervised Learning
  – Parametric Approach: Plug-in Rules
  – Nonparametric Approach: Density Estimation, Geometric Rules (K-NN, MLP)
• INCOMPLETE, Unsupervised Learning
  – Parametric Approach: Mixture Resolving
  – Nonparametric Approach: Cluster Analysis (Hard, Fuzzy)

Two-dimensional Feature Space: Supervised Learning

Chapter 3: Maximum-Likelihood & Bayesian Parameter Estimation
• Introduction
• Maximum-Likelihood Estimation
• Bayesian Estimation
• Curse of Dimensionality
• Component Analysis & Discriminants
• EM Algorithm

Introduction

• Bayesian framework: we could design an optimal classifier if we knew
  – $P(\omega_i)$: priors
  – $P(x \mid \omega_i)$: class-conditional densities
  Unfortunately, we rarely have this complete information!
• Design a classifier based on a set of labeled training samples (supervised learning)
  – Assume priors are known
  – Need a sufficient number of training samples for estimating class-conditional densities, especially when the dimensionality of the feature space is large

Pattern Classification, Chapter 3

• Assumption about the problem: a parametric model of $P(x \mid \omega_i)$ is available
  – Assume $P(x \mid \omega_i)$ is multivariate Gaussian: $P(x \mid \omega_i) \sim N(\mu_i, \Sigma_i)$
  – Characterized by two parameters, $\mu_i$ and $\Sigma_i$
• Parameter estimation techniques
  – Maximum-Likelihood (ML) and Bayesian estimation
  – Results of the two procedures are nearly identical, but there is a subtle difference
• In ML estimation, parameters are assumed to be fixed but unknown!
• The Bayesian parameter estimation procedure, by its nature, utilizes whatever prior information is available about the unknown parameter
• MLE: the best parameters are obtained by maximizing the probability of obtaining the samples observed
• Bayesian methods view the parameters as random variables having some known prior distribution; how do we know the priors?
• In either approach, we use $P(\omega_i \mid x)$ for our classification rule!
Maximum-Likelihood Estimation

• Has good convergence properties as the sample size increases; the estimated parameter value approaches the true value as n increases
• Often simpler than alternative techniques

General principle

• Assume we have c classes and $P(x \mid \omega_j) \sim N(\mu_j, \Sigma_j)$
• $P(x \mid \omega_j) \equiv P(x \mid \omega_j, \theta_j)$, where

$$\theta_j = (\mu_j, \Sigma_j) = (\mu_j^1, \mu_j^2, \ldots, \sigma_j^{11}, \sigma_j^{22}, \ldots, \operatorname{cov}(x_j^m, x_j^n), \ldots)$$

• Use class $\omega_j$ samples to estimate class $\omega_j$ parameters
• Use the information in training samples to estimate $\theta = (\theta_1, \theta_2, \ldots, \theta_c)$; $\theta_i$ $(i = 1, 2, \ldots, c)$ is associated with the ith category
• Suppose sample set D contains n iid samples, $x_1, x_2, \ldots, x_n$:

$$P(D \mid \theta) = \prod_{k=1}^{n} P(x_k \mid \theta) = F(\theta)$$

  $P(D \mid \theta)$ is called the likelihood of $\theta$ w.r.t. the set of samples
• The ML estimate of $\theta$ is, by definition, the value $\hat{\theta}$ that maximizes $P(D \mid \theta)$
  “It is the value of $\theta$ that best agrees with the actually observed training samples”

Optimal estimation

• Let $\theta = (\theta_1, \theta_2, \ldots, \theta_p)^t$ and let $\nabla_\theta$ be the gradient operator

$$\nabla_\theta = \left[ \frac{\partial}{\partial \theta_1}, \frac{\partial}{\partial \theta_2}, \ldots, \frac{\partial}{\partial \theta_p} \right]^t$$

• We define $l(\theta)$ as the log-likelihood function: $l(\theta) = \ln P(D \mid \theta)$
• New problem statement: determine the $\theta$ that maximizes the log-likelihood

$$\hat{\theta} = \arg\max_{\theta} \, l(\theta)$$

• The set of necessary conditions for an optimum is:

$$\nabla_\theta l = \sum_{k=1}^{n} \nabla_\theta \ln P(x_k \mid \theta), \qquad \nabla_\theta l = 0$$

Example of a specific case: unknown $\mu$

• $P(x \mid \mu) \sim N(\mu, \Sigma)$ (samples are drawn from a multivariate normal population)

$$\ln P(x_k \mid \mu) = -\frac{1}{2} \ln\left[ (2\pi)^d |\Sigma| \right] - \frac{1}{2} (x_k - \mu)^t \Sigma^{-1} (x_k - \mu)$$

$$\nabla_\mu \ln P(x_k \mid \mu) = \Sigma^{-1} (x_k - \mu)$$

• Here $\theta = \mu$, therefore the ML estimate for $\mu$ must satisfy:

$$\sum_{k=1}^{n} \Sigma^{-1} (x_k - \hat{\mu}) = 0$$

• Multiplying by $\Sigma$ and rearranging, we obtain:

$$\hat{\mu} = \frac{1}{n} \sum_{k=1}^{n} x_k$$

  which is the arithmetic average (the sample mean) of the training samples!
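The result for the unknown mean can be checked numerically. The following is a minimal sketch in plain Python, not code from the slides: the function names `ml_mean` and `residual_sum` and the three sample points are made up for illustration. It computes the component-wise average and confirms that the sum of the residuals $(x_k - \hat{\mu})$ vanishes, which is what the necessary condition requires once it is multiplied by $\Sigma$.

```python
from typing import List, Sequence


def ml_mean(samples: Sequence[Sequence[float]]) -> List[float]:
    """ML estimate of a Gaussian mean: the component-wise sample average."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    d = len(samples[0])
    return [sum(x[j] for x in samples) / n for j in range(d)]


def residual_sum(samples: Sequence[Sequence[float]], mu: Sequence[float]) -> List[float]:
    """Sum over k of (x_k - mu); this is the zero vector at the ML estimate."""
    return [sum(x[j] - mu[j] for x in samples) for j in range(len(mu))]


# Hypothetical 2-D training samples, chosen only for illustration.
samples = [[1.0, 2.0], [3.0, 0.0], [2.0, 4.0]]
mu_hat = ml_mean(samples)
print(mu_hat)                         # [2.0, 2.0]
print(residual_sum(samples, mu_hat))  # [0.0, 0.0]
```

Note that the covariance matrix never enters the computation: because $\Sigma^{-1}$ multiplies every term of the sum, it cancels, so the estimate of the mean is the same for any nonsingular $\Sigma$.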
Conclusion: Given $P(x_k \mid \omega_j)$, $j = 1, 2, \ldots, c$, to be Gaussian in a d-dimensional feature space, estimate the vector $\theta = (\theta_1, \theta_2, \ldots, \theta_c)^t$ and perform a classification using the Bayes decision rule of Chapter 2!

ML Estimation: Univariate Gaussian Case, unknown $\mu$ and $\sigma^2$

• $\theta = (\theta_1, \theta_2) = (\mu, \sigma^2)$

$$l = \ln P(x_k \mid \theta) = -\frac{1}{2} \ln 2\pi\theta_2 - \frac{1}{2\theta_2} (x_k - \theta_1)^2$$

$$\nabla_\theta l = \begin{pmatrix} \dfrac{\partial}{\partial \theta_1} \ln P(x_k \mid \theta) \\[2ex] \dfrac{\partial}{\partial \theta_2} \ln P(x_k \mid \theta) \end{pmatrix} = 0$$

$$\frac{1}{\theta_2} (x_k - \theta_1) = 0, \qquad -\frac{1}{2\theta_2} + \frac{(x_k - \theta_1)^2}{2\theta_2^2} = 0$$

• Summation over the n samples:

$$\sum_{k=1}^{n} \frac{1}{\hat{\theta}_2} (x_k - \hat{\theta}_1) = 0 \qquad (1)$$

$$-\sum_{k=1}^{n} \frac{1}{\hat{\theta}_2} + \sum_{k=1}^{n} \frac{(x_k - \hat{\theta}_1)^2}{\hat{\theta}_2^2} = 0 \qquad (2)$$

• Combining (1) and (2), one obtains:

$$\hat{\mu} = \frac{1}{n} \sum_{k=1}^{n} x_k, \qquad \hat{\sigma}^2 = \frac{1}{n} \sum_{k=1}^{n} (x_k - \hat{\mu})^2$$

Bias

• The ML estimate for $\sigma^2$ is biased:

$$E\left[ \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2 \right] = \frac{n-1}{n} \sigma^2 \neq \sigma^2$$

• An unbiased estimator for $\Sigma$ is the sample covariance matrix:

$$C = \frac{1}{n-1} \sum_{k=1}^{n} (x_k - \hat{\mu})(x_k - \hat{\mu})^t$$

Bayesian Estimation (Bayesian learning approach for pattern classification problems)

• In MLE, $\theta$ was supposed to have a fixed value
• In BE, $\theta$ is a random variable
• The computation of posterior probabilities $P(\omega_i \mid x)$ lies at the heart of Bayesian classification
• Goal: compute $P(\omega_i \mid x, D)$
• Given the training sample set D, Bayes formula can be written

$$P(\omega_i \mid x, D) = \frac{P(x \mid \omega_i, D) \, P(\omega_i \mid D)}{\sum_{j=1}^{c} P(x \mid \omega_j, D) \, P(\omega_j \mid D)}$$

• To demonstrate the preceding equation, use:

$$P(x, D \mid \omega_i) = P(x \mid D, \omega_i) \, P(D \mid \omega_i)$$

$$P(x \mid D) = \sum_{j} P(x, \omega_j \mid D)$$

$$P(\omega_i) = P(\omega_i \mid D) \quad \text{(the training sample provides this!)}$$

• Thus:

$$P(\omega_i \mid x, D) = \frac{P(x \mid \omega_i, D_i) \, P(\omega_i)}{\sum_{j=1}^{c} P(x \mid \omega_j, D_j) \, P(\omega_j)}$$

Bayesian Parameter Estimation: Gaussian Case

• Goal: estimate $\theta$ using the a-posteriori density $P(\theta \mid D)$
• The univariate Gaussian case, $P(\mu \mid D)$: $\mu$ is the only unknown parameter

$$P(x \mid \mu) \sim N(\mu, \sigma^2), \qquad P(\mu) \sim N(\mu_0, \sigma_0^2)$$

  $\mu_0$ and $\sigma_0$ are known!

$$P(\mu \mid D) = \frac{P(D \mid \mu) \, P(\mu)}{\int P(D \mid \mu) \, P(\mu) \, d\mu} = \alpha \prod_{k=1}^{n} P(x_k \mid \mu) \, P(\mu) \qquad (1)$$

  where $\alpha$ is a normalizing constant that does not depend on $\mu$
• Reproducing density:

$$P(\mu \mid D) \sim N(\mu_n, \sigma_n^2) \qquad (2)$$

• The updated parameters of the prior, with $\hat{\mu}_n$ the sample mean of the n samples:

$$\mu_n = \left( \frac{n\sigma_0^2}{n\sigma_0^2 + \sigma^2} \right) \hat{\mu}_n + \frac{\sigma^2}{n\sigma_0^2 + \sigma^2} \, \mu_0 \qquad \text{and} \qquad \sigma_n^2 = \frac{\sigma_0^2 \sigma^2}{n\sigma_0^2 + \sigma^2}$$

The univariate case: $P(x \mid D)$

• $P(\mu \mid D)$ has been computed; $P(x \mid D)$ remains to be computed!

$$P(x \mid D) = \int P(x \mid \mu) \, P(\mu \mid D) \, d\mu \quad \text{is Gaussian}$$

• It provides:

$$P(x \mid D) \sim N(\mu_n, \sigma^2 + \sigma_n^2)$$

  which is the desired class-conditional density $P(x \mid D_j, \omega_j)$
• Using $P(x \mid D_j, \omega_j)$ together with $P(\omega_j)$ in Bayes formula, we obtain the Bayesian classification rule:

$$\max_{\omega_j} \left[ P(\omega_j \mid x, D) \right] \equiv \max_{\omega_j} \left[ P(x \mid \omega_j, D_j) \, P(\omega_j) \right]$$

Bayesian Parameter Estimation: General Theory

• The $P(x \mid D)$ computation can be applied to any situation in which the unknown density can be parametrized. The basic assumptions are:
  – The form of $P(x \mid \theta)$ is assumed known, but the value of $\theta$ is not known exactly
  – Our knowledge about $\theta$ is assumed to be contained in a known prior density $P(\theta)$
  – The rest of our knowledge about $\theta$ is contained in a set D of n random variables $x_1, x_2, \ldots, x_n$ that follow $P(x)$
• The basic problem is: “Compute the posterior density $P(\theta \mid D)$”, then “Derive $P(x \mid D)$”
• Using Bayes formula, we have:

$$P(\theta \mid D) = \frac{P(D \mid \theta) \, P(\theta)}{\int P(D \mid \theta) \, P(\theta) \, d\theta}$$

• And by the independence assumption:

$$P(D \mid \theta) = \prod_{k=1}^{n} P(x_k \mid \theta)$$

Overfitting

Problem of Insufficient Data

• How to train a classifier (e.g., estimate the covariance matrix) when the training set size is small (compared to the number of features)
• Reduce the dimensionality
  – Select a subset of features
  – Combine available features to get a smaller number of more “salient” features
• Bayesian techniques
  – Assume a reasonable prior on the parameters to compensate for the small amount of training data
• Model simplification
  – Assume statistical independence
• Heuristics
  – Threshold the estimated covariance matrix such that only correlations above a threshold are retained
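As an illustration of the “Bayesian techniques” item, the univariate Gaussian update for an unknown mean can be sketched in a few lines of plain Python. This is only a sketch under stated assumptions: the function names, the argument names (`sigma2` for the known variance $\sigma^2$, `mu0` and `sigma0_2` for the prior mean $\mu_0$ and prior variance $\sigma_0^2$) and the sample values are hypothetical. It uses the closed-form expressions $\mu_n = \frac{n\sigma_0^2}{n\sigma_0^2 + \sigma^2}\hat{\mu}_n + \frac{\sigma^2}{n\sigma_0^2 + \sigma^2}\mu_0$ and $\sigma_n^2 = \frac{\sigma_0^2\sigma^2}{n\sigma_0^2 + \sigma^2}$, and the predictive density $N(\mu_n, \sigma^2 + \sigma_n^2)$.

```python
from typing import Sequence, Tuple


def gaussian_mean_posterior(
    samples: Sequence[float], sigma2: float, mu0: float, sigma0_2: float
) -> Tuple[float, float]:
    """Return (mu_n, sigma_n^2) of the posterior P(mu | D) ~ N(mu_n, sigma_n^2)."""
    n = len(samples)
    if n == 0:
        # With no data, the posterior is just the prior.
        return mu0, sigma0_2
    sample_mean = sum(samples) / n
    denom = n * sigma0_2 + sigma2
    mu_n = (n * sigma0_2 / denom) * sample_mean + (sigma2 / denom) * mu0
    sigma_n2 = sigma0_2 * sigma2 / denom
    return mu_n, sigma_n2


def predictive_density_params(
    samples: Sequence[float], sigma2: float, mu0: float, sigma0_2: float
) -> Tuple[float, float]:
    """Return (mean, variance) of P(x | D) ~ N(mu_n, sigma^2 + sigma_n^2)."""
    mu_n, sigma_n2 = gaussian_mean_posterior(samples, sigma2, mu0, sigma0_2)
    return mu_n, sigma2 + sigma_n2


# Hypothetical data: three samples, known variance 1, prior N(0, 1).
data = [1.0, 2.0, 3.0]
print(gaussian_mean_posterior(data, sigma2=1.0, mu0=0.0, sigma0_2=1.0))    # (1.5, 0.25)
print(predictive_density_params(data, sigma2=1.0, mu0=0.0, sigma0_2=1.0))  # (1.5, 1.25)
```

With only three samples the posterior mean (1.5) sits between the prior mean (0) and the sample mean (2). As n grows, the weight on the sample mean approaches 1 and $\sigma_n^2$ shrinks toward 0, so the prior matters most exactly when data are scarce.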
Practical Observations

• Most heuristics and model simplifications are almost surely incorrect
• In practice, however, the performance of classifiers based on model simplification is often better than with full parameter estimation
• Paradox: how can a suboptimal/simplified model perform better than the MLE of the full parameter set on a test dataset?
  – The answer involves the problem of insufficient data

Insufficient Data in Curve Fitting

Curve Fitting Example (contd)

• The example shows that a 10th-degree polynomial fits the training data with zero error
  – However, the test or generalization error is much higher for this fitted curve
• When the data size is small, one cannot be sure how complex the model should be
• A small change in the data will change the parameters of the 10th-degree polynomial significantly, which is undesirable: a good model should be stable

Handling insufficient data

• Heuristics and model simplifications
• Shrinkage is an intermediate approach, which combines a “common covariance” with individual covariance matrices
  – Individual covariance matrices shrink towards a common covariance matrix
  – Also called regularized discriminant analysis
• Shrinkage estimator for a covariance matrix, given shrinkage factor $0 < \alpha < 1$:

$$\Sigma_i(\alpha) = \frac{(1 - \alpha) \, n_i \Sigma_i + \alpha \, n \Sigma}{(1 - \alpha) \, n_i + \alpha \, n}$$

• Further, the common covariance can be shrunk towards the identity matrix, for $0 < \beta < 1$:

$$\Sigma(\beta) = (1 - \beta) \, \Sigma + \beta I$$

Problems of Dimensionality

Introduction

• Real-world applications usually come with a large number of features
  – Text in documents is represented using frequencies of tens of thousands of words
  – Images are often represented by extracting local features from a large number of regions within an image
• Naive intuition: the more features, the better the classification performance?
  – Not always!
• There are two issues that must be confronted with high-dimensional feature spaces
  – How does the classification accuracy depend on the dimensionality and the number of training samples?
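  – What is the computational complexity of the classifier?

Statistically Independent Features

• If features are statistically independent, it is possible to get excellent performance as dimensionality increases
• For a two-class problem with multivariate normal classes $P(x \mid \omega_j) \sim N(\mu_j, \Sigma)$ and equal prior probabilities, the probability of error is

$$P(e) = \frac{1}{\sqrt{2\pi}} \int_{r/2}^{\infty} e^{-u^2/2} \, du$$

  where the Mahalanobis distance r is defined by

$$r^2 = (\mu_1 - \mu_2)^T \Sigma^{-1} (\mu_1 - \mu_2)$$

• When features are independent, the covariance matrix is diagonal, and we have

$$r^2 = \sum_{i=1}^{d} \left( \frac{\mu_{i1} - \mu_{i2}}{\sigma_i} \right)^2$$

• Since $r^2$ increases monotonically with the number of features, P(e) decreases
• As long as the means of the features in the two classes differ, the error decreases

Increasing Dimensionality

• If a given set of features does not result in good classification performance, it is natural to add more features
• High dimensionality results in increased cost and complexity for both feature extraction and classification
• If the probabilistic structure of the problem is completely known, adding new features cannot increase the Bayes risk

Curse of Dimensionality

• In practice, increasing dimensionality beyond a certain point in the presence of a finite number of training samples often leads to lower performance, rather than better performance
• The main reasons for this paradox are as follows:
  – The Gaussian assumption that is typically made is almost surely incorrect
  – The training sample size is always finite, so the estimation of the class-conditional density is not very accurate
• Analysis of this “curse of dimensionality” problem is difficult

A Simple Example

• Trunk (PAMI, 1979) provided a simple example illustrating this phenomenon:

$$p(\omega_1) = p(\omega_2) = \frac{1}{2}, \qquad \mu_1 = \mu, \quad \mu_2 = -\mu$$

$$p(x \mid \omega_1) = \prod_{i=1}^{N} \frac{1}{\sqrt{2\pi}} \, e^{-\frac{1}{2}(x_i - \mu_i)^2}, \qquad p(X \mid \omega_1) \sim G(\mu_1, I)$$

$$p(x \mid \omega_2) = \prod_{i=1}^{N} \frac{1}{\sqrt{2\pi}} \, e^{-\frac{1}{2}(x_i + \mu_i)^2}, \qquad p(X \mid \omega_2) \sim G(\mu_2, I)$$

  – N: number of features
  – $\mu_i = \left( \frac{1}{i} \right)^{1/2}$ is the ith component of the mean vector

$$\mu_1 = \left( 1, \frac{1}{\sqrt{2}}, \frac{1}{\sqrt{3}}, \frac{1}{\sqrt{4}}, \ldots \right), \qquad \mu_2 = \left( -1, -\frac{1}{\sqrt{2}}, -\frac{1}{\sqrt{3}}, -\frac{1}{\sqrt{4}}, \ldots \right)$$

Case 1: Mean Values Known

• Bayes decision rule: decide $\omega_1$ if

$$x^t \mu = x_1 \mu_1 + x_2 \mu_2 + \ldots + x_N \mu_N > 0, \qquad \text{or} \qquad \sum_{i=1}^{N} \frac{x_i}{\sqrt{i}} > 0$$

• The probability of error is

$$P_e(N) = \int_{\|\mu_1 - \mu_2\|/2}^{\infty} \frac{1}{\sqrt{2\pi}} \, e^{-\frac{1}{2} z^2} \, dz, \qquad \|\mu_1 - \mu_2\|^2 = 4 \sum_{i=1}^{N} \frac{1}{i}$$

$$P_e(N) = \int_{\sqrt{\sum_{i=1}^{N} 1/i}}^{\infty} \frac{1}{\sqrt{2\pi}} \, e^{-\frac{1}{2} z^2} \, dz$$

• $\sum_{i=1}^{N} \frac{1}{i}$ is a divergent series, so $P_e(N) \to 0$ as $N \to \infty$

Case 2: Mean Values Unknown

• m labeled training samples are available; the pooled estimate of the mean is

$$\hat{\mu} = \frac{1}{m} \sum_{i=1}^{m} x^{(i)}, \qquad \text{where } x^{(i)} \text{ is replaced by } -x^{(i)} \text{ if } x^{(i)} \in \omega_2$$

• Plug-in decision rule: decide $\omega_1$ if

$$x^t \hat{\mu} = x_1 \hat{\mu}_1 + x_2 \hat{\mu}_2 + \ldots + x_N \hat{\mu}_N > 0$$

• The probability of error is

$$P_e(N, m) = P(\omega_2) \, P(x^t \hat{\mu} \geq 0 \mid x \in \omega_2) + P(\omega_1) \, P(x^t \hat{\mu} < 0 \mid x \in \omega_1)$$

$$= P(x^t \hat{\mu} \geq 0 \mid x \in \omega_2) = P(x^t \hat{\mu} < 0 \mid x \in \omega_1) \quad \text{(due to symmetry)}$$

• Let $z = x^t \hat{\mu} = \sum_{i=1}^{N} x_i \hat{\mu}_i$. It is difficult to compute the distribution of z exactly, but its moments are known. For $x \in \omega_1$ (the mean changes sign for $x \in \omega_2$):

$$E(z) = \sum_{i=1}^{N} \frac{1}{i}, \qquad \mathrm{VAR}(z) = \left( 1 + \frac{1}{m} \right) \sum_{i=1}^{N} \frac{1}{i} + \frac{N}{m}$$

• For large N, z is approximately normal:

$$\lim_{N \to \infty} \frac{z - E(z)}{\sqrt{\mathrm{VAR}(z)}} \sim G(0, 1) \quad \text{(standard normal)}$$

$$P_e(N, m) = P(z < 0 \mid x \in \omega_1) = P\left( \frac{z - E(z)}{\sqrt{\mathrm{VAR}(z)}} < -\frac{E(z)}{\sqrt{\mathrm{VAR}(z)}} \right)$$

$$P_e(N, m) = \int_{\gamma(N)}^{\infty} \frac{1}{\sqrt{2\pi}} \, e^{-\frac{1}{2} z^2} \, dz, \qquad \gamma(N) = \frac{E(z)}{\sqrt{\mathrm{VAR}(z)}} = \frac{\sum_{i=1}^{N} \frac{1}{i}}{\sqrt{\left( 1 + \frac{1}{m} \right) \sum_{i=1}^{N} \frac{1}{i} + \frac{N}{m}}}$$

• Since $\lim_{N \to \infty} \gamma(N) = 0$, it follows that $\lim_{N \to \infty} P_e(N, m) = \frac{1}{2}$: with a fixed number of training samples, adding features eventually drives the plug-in classifier to chance performance

Component Analysis and Discriminants

• Combine features in order to reduce the dimension of the feature space
• Linear combinations are simple to compute and tractable
• Project high-dimensional data onto a lower-dimensional space
• Two classical approaches for finding an “optimal” linear transformation:
  – PCA (Principal Component Analysis): “Projection that best represents the data in a least-squares sense”
  – MDA (Multiple Discriminant Analysis): “Projection that best separates the data in a least-squares sense”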
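The PCA idea can be made concrete with a small sketch. The slides do not say how the projection is computed; the code below assumes the usual formulation, in which the best one-dimensional least-squares representation is the eigenvector of the sample covariance matrix with the largest eigenvalue, and finds it by power iteration (a technique swapped in here to keep the example in plain Python). The function names, the starting vector and the toy data are all hypothetical, and the method assumes the starting vector is not orthogonal to the leading eigenvector.

```python
import math
from typing import List, Sequence, Tuple


def covariance_matrix(data: Sequence[Sequence[float]]) -> Tuple[List[float], List[List[float]]]:
    """Return the sample mean and the (n-1)-normalized sample covariance matrix."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two samples")
    d = len(data[0])
    mean = [sum(x[j] for x in data) / n for j in range(d)]
    centered = [[x[j] - mean[j] for j in range(d)] for x in data]
    cov = [[sum(row[a] * row[b] for row in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    return mean, cov


def first_principal_component(data: Sequence[Sequence[float]], iters: int = 200):
    """Leading eigenvector of the covariance matrix, found by power iteration."""
    mean, cov = covariance_matrix(data)
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d  # assumed starting direction
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(t * t for t in w))
        if norm == 0.0:  # all-zero covariance: no preferred direction
            break
        v = [t / norm for t in w]
    return mean, v


def project(data, mean, v) -> List[float]:
    """One-dimensional coordinates of the centered data along direction v."""
    return [sum((x[j] - mean[j]) * v[j] for j in range(len(v))) for x in data]


# Toy data lying exactly on the line y = 2x.
points = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
mean, pc1 = first_principal_component(points)
print(pc1)                         # direction proportional to (1, 2)
print(project(points, mean, pc1))  # 1-D representation of the four points
```

For data of realistic dimension, a library eigen-solver would normally replace the hand-written power iteration; the sketch is only meant to show what “projection that best represents the data” computes.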