Mathematical Statistics - Chapter


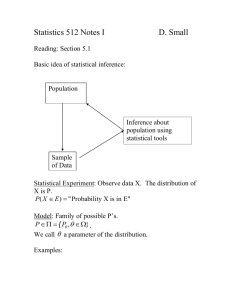

Mathematical Statistics Lecture Notes
Chapter 8 – Sections 8.1-8.4

General Info

I'm going to try to use the slides to help save my voice.

First homework is now posted. It covers 8.1-8.5 and is due next Wednesday, Feb. 2. We should be finished with that material by Friday.

Structure of these notes: a series of definitions, etc., then examples (by hand on the board, with more comments).

Chapter 8 – Estimation

What is an estimator? A rule, often expressed as a formula, that tells how to calculate the value of an estimate based on the measurements in a sample.

What estimators are you already familiar with? Two should come to mind.

If you want to estimate the proportion of Amherst College students who participated in community service over Winter Break, what estimator would you use?

If you want to estimate the average amount of money spent on traveling expenses by Amherst College students over Winter Break, what estimator would you use?

Questions about Estimators to Think About

1. What estimator should I use?
2. If I have multiple estimators, how do I pick one? How do we know which is best?
3. How do we place bounds on the estimate or the error of the estimate?
4. How well does the estimator perform on average?

We'll look at all these questions in Chapters 8 and 9. 8.2 helps with question 2. 8.4 helps with question 3.

8.2 – Bias and Mean Square Error (MSE) of Point Estimators

What is a point estimator? It is an estimator that is a single value (or vector). It is NOT an interval of possible values (like a confidence interval). The estimators you are familiar with are most likely point estimators.

For notation, let $\theta$ be the parameter to estimate and let $\hat{\theta}$ be the estimator for a general estimation setting.

Definition of Bias

$\hat{\theta}$ is an unbiased estimator for $\theta$ if $E(\hat{\theta}) = \theta$.

The bias of a point estimator $\hat{\theta}$ is given by:

$B(\hat{\theta}) = E(\hat{\theta}) - \theta$

How are we going to compute bias? We need some basic information about the distribution of $\hat{\theta}$. This may mean using the method of transformations (from Probability) to obtain a pdf, etc.
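To make the definition of bias concrete, here is a minimal simulation sketch. It is not one of the board examples; the setup is an assumption chosen for illustration: $Y_1, \ldots, Y_n$ are Uniform$(0, \theta)$ and the estimator is the sample maximum. The method of transformations gives $E(\hat{\theta}) = n\theta/(n+1)$ for that estimator, so its exact bias is $-\theta/(n+1)$. The function name `simulate_bias` and the values of `theta`, `n`, `reps`, and `seed` are hypothetical.

```python
import random


def simulate_bias(theta=10.0, n=5, reps=100_000, seed=0):
    """Monte Carlo approximation of B(theta_hat) = E(theta_hat) - theta.

    Assumed setting (illustration only): Y_i ~ Uniform(0, theta),
    theta_hat = max(Y_1, ..., Y_n).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        # One sample of size n, reduced to the estimator's value.
        theta_hat = max(rng.uniform(0.0, theta) for _ in range(n))
        total += theta_hat
    mean_of_estimates = total / reps  # approximates E(theta_hat)
    return mean_of_estimates - theta  # approximates the bias


theta, n = 10.0, 5
simulated = simulate_bias(theta, n)
exact = -theta / (n + 1)  # from E(max) = n * theta / (n + 1)

print(f"simulated bias: {simulated:.3f}")
print(f"exact bias:     {exact:.3f}")
```

The simulated value should land close to the exact one. The sample maximum underestimates $\theta$ on average, and multiplying it by $(n+1)/n$ would give an unbiased estimator in this setting.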
(For reference, the method of transformations is covered in Chapter 6 of the current text.)

Why not look at the variance of the estimator? Well, you could. You would want the variance of the estimator to be small. It turns out there is another quantity, called the mean square error, that can be examined to gain information about both the bias and the variance of your estimator.

Definition of Mean Square Error (MSE)

The MSE of a point estimator $\hat{\theta}$ is given by:

$MSE(\hat{\theta}) = E[(\hat{\theta} - \theta)^2]$

We note (as a useful result) that:

$MSE(\hat{\theta}) = Var(\hat{\theta}) + [B(\hat{\theta})]^2$

This means that if our estimator is unbiased, the MSE is equal to the variance of the estimator.

Proof of Useful Result

See board. Please bear with me as I try to make notes about the computation so you can follow.

8.3 – Some Common Unbiased Estimators

See chart on board.

Standard deviation vs. standard error

Standard deviations involve the unknown parameters.

Standard errors mean you have plugged in some logical estimators for those parameters.

Your book will use them interchangeably (more than I would like). Basically, if you need an actual value for a calculation, go ahead and use the standard error. Fortunately for us, due to large sample asymptotics, the math still works out.

8.4 – Definition of Error of Estimation

The error of estimation is the distance between an estimator and its target parameter:

$\varepsilon = |\hat{\theta} - \theta|$

The error of estimation is a random variable because it depends on the estimator! The norm is the usual Euclidean distance.

More on Error of Estimation

We can make probabilistic statements about $\varepsilon$; well really, they are statements about the estimator and the parameter. This concept leads to confidence intervals (8.5).

For example:

$P(|\hat{\theta} - \theta| < b) = P(-b < \hat{\theta} - \theta < b) = P(\hat{\theta} - b < \theta < \hat{\theta} + b)$

Choose a target value for this probability, and then find $b$ so that (if you have the pdf $f$ of the estimator) the integral

$\int_{\theta - b}^{\theta + b} f(\hat{\theta}) \, d\hat{\theta}$

equals that target value. You could also use Tchebysheff's theorem to get a bound.

Examples

See board. Bear with me again as I try to make notes so the computation is easy to follow.
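As a rough numerical companion to the board work, the sketch below checks the MSE decomposition and finds a bound $b$ on the error of estimation by simulation. Everything specific here is an assumption for illustration, not taken from the lecture: the data are Normal with mean `mu` and standard deviation `sigma`, the estimator is the sample mean, the target probability is 0.95, and the helper name `simulate_sample_means` is hypothetical.

```python
import math
import random
import statistics


def simulate_sample_means(mu, sigma, n, reps=20_000, seed=1):
    """Draw `reps` samples of size n from Normal(mu, sigma); return their means."""
    rng = random.Random(seed)
    return [sum(rng.gauss(mu, sigma) for _ in range(n)) / n for _ in range(reps)]


# Assumed illustration values (not from the lecture).
mu, sigma, n = 50.0, 8.0, 25
target = 0.95

estimates = simulate_sample_means(mu, sigma, n)

# Empirical bias, variance, and MSE of the estimator.
bias = statistics.fmean(estimates) - mu
variance = statistics.pvariance(estimates)
mse = statistics.fmean([(est - mu) ** 2 for est in estimates])
print(f"MSE = {mse:.4f},  Var + bias^2 = {variance + bias ** 2:.4f}")

# Error of estimation: find b with P(|theta_hat - theta| < b) close to target,
# using the empirical distribution of the simulated errors.
errors = sorted(abs(est - mu) for est in estimates)
b_sim = errors[math.ceil(target * len(errors)) - 1]

# Tchebysheff's theorem: P(|theta_hat - theta| < k * sd) >= 1 - 1/k^2,
# where sd is the standard deviation of the sample mean, sigma / sqrt(n).
k = 1.0 / math.sqrt(1.0 - target)
b_cheb = k * sigma / math.sqrt(n)

print(f"simulated b:   {b_sim:.3f}")
print(f"Tchebysheff b: {b_cheb:.3f}")
```

The two sides of the decomposition agree up to rounding, because the identity also holds for the empirical distribution of the simulated estimates. The Tchebysheff bound comes out wider than the simulated one, which is expected: it uses only the variance and no information about the shape of the estimator's distribution.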