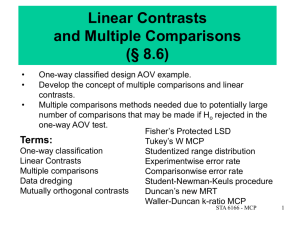

Linear Contrasts and Multiple Comparisons


(Chapter 9)

• One-way classified design AOV example.

• Develop the concept of multiple comparisons and linear contrasts.

• Multiple comparisons methods needed due to potentially large number of comparisons that may be made if Ho rejected in the one-way AOV test.

Terms:

Linear Contrasts

Multiple comparisons

Data dredging

Mutually orthogonal contrasts

Experimentwise error rate

Comparisonwise error rate

MCPs:

Fisher’s Protected LSD

Tukey’s W (HSD)

Studentized range distribution

Student-Newman-Keuls procedure

Scheffe’s Method

Dunnett’s procedure

STA 6166 - MCP


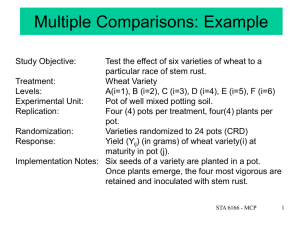

One-Way Layout Example

A study was performed to examine the effect of a new sleep inducing drug on a

population of insomniacs. Three (3) treatments were used:

Standard Drug

New Drug

Placebo (as a control)

What is the role of the placebo in this study?

What is a control in an experimental study?

18 individuals were drawn (at random) from a list of known insomniacs

maintained by local physicians. Each individual was randomly assigned to

one of three groups. Each group was assigned a treatment. Neither the

patient nor the physician knew, until the end of the study, which treatment they

were on (double-blinded).

Why double-blind?

A proper experiment should be:

randomized, controlled, and double-blinded.


Response: Average number of hours of sleep per night.

Placebo: 5.6, 5.7, 5.1, 3.8, 4.6, 5.1

Standard Drug: 8.4, 8.2, 8.8, 7.1, 7.2, 8.0

New Drug: 10.6, 6.6, 8.0, 8.0, 6.8, 6.6

yij = response for the j-th individual on the i-th treatment.

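| | Placebo | Standard Drug | New Drug |
|---|---|---|---|
| | 5.60 | 8.40 | 10.60 |
| | 5.70 | 8.20 | 6.60 |
| | 5.10 | 8.80 | 8.00 |
| | 3.80 | 7.10 | 8.00 |
| | 4.60 | 7.20 | 6.80 |
| | 5.10 | 8.00 | 6.60 |
| sum | 29.900 | 47.700 | 46.600 |
| mean | 4.983 | 7.950 | 7.767 |
| variance | 0.494 | 0.455 | 2.359 |

| Quantity | Value |
|---|---|
| pooled variance | 1.102 |
| SSW | 16.537 |
| variance of the means | 2.764 |
| Between mean square (MSB = n × variance of the means) | 16.582 |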

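| Source | Sums of Squares | Degrees of Freedom | Mean Square | F statistic | P-value |
|---|---|---|---|---|---|
| Between Groups | 33.16 | 2 | 16.582 | 15.04 | 0.00026 |
| Within Groups | 16.54 | 15 | 1.102 | | |
| Total | 49.70 | 17 | | | |

$$TSS = \sum_{i,j} (y_{ij} - \bar{y}_{\cdot\cdot})^2 \qquad SSW = \sum_{i,j} (y_{ij} - \bar{y}_{i\cdot})^2 \qquad SSB = \sum_{i} n_i (\bar{y}_{i\cdot} - \bar{y}_{\cdot\cdot})^2$$

$$MSB = s_b^2 = \frac{SSB}{t-1} \qquad MSW = s_w^2 = \frac{SSW}{n_T - t} \qquad F = \frac{MSB}{MSW} \sim F_{df_b,\,df_w}$$

Hartley’s test for equal variances (largest sample variance over smallest):

Fmax = 2.359 / 0.455 = 5.18 < Fmax_critical = 10.8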
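The sums of squares and the F statistic for the sleep data can be checked numerically. The following is a minimal sketch in plain Python; the function name `one_way_anova` and the dictionary layout are illustrative choices, not part of the original slides.

```python
# One-way ANOVA quantities for the sleep data, using only the standard library.
groups = {
    "Placebo": [5.6, 5.7, 5.1, 3.8, 4.6, 5.1],
    "Standard Drug": [8.4, 8.2, 8.8, 7.1, 7.2, 8.0],
    "New Drug": [10.6, 6.6, 8.0, 8.0, 6.8, 6.6],
}

def one_way_anova(groups):
    """Return SSB, SSW, MSB, MSW and F for a one-way layout."""
    all_obs = [y for ys in groups.values() for y in ys]
    n_total, t = len(all_obs), len(groups)
    grand_mean = sum(all_obs) / n_total
    # Between-group sum of squares: n_i * (group mean - grand mean)^2
    ssb = sum(len(ys) * (sum(ys) / len(ys) - grand_mean) ** 2
              for ys in groups.values())
    # Within-group sum of squares: (observation - its group mean)^2
    ssw = sum((y - sum(ys) / len(ys)) ** 2
              for ys in groups.values() for y in ys)
    msb = ssb / (t - 1)
    msw = ssw / (n_total - t)
    return {"SSB": ssb, "SSW": ssw, "MSB": msb, "MSW": msw, "F": msb / msw}

result = one_way_anova(groups)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

The printed values should agree with the ANOVA table for this example up to rounding.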

Excel Analysis Tool Output

Anova: Single Factor

SUMMARY

| Groups | Count | Sum | Average | Variance |
|---|---|---|---|---|
| Placebo | 6 | 29.9 | 4.983333 | 0.493667 |
| Standard Drug | 6 | 47.7 | 7.95 | 0.455 |
| New Drug | 6 | 46.6 | 7.766667 | 2.358667 |

ANOVA

| Source of Variation | SS | df | MS | F | P-value | F crit |
|---|---|---|---|---|---|---|
| Between Groups | 33.163333 | 2 | 16.58167 | 15.04082 | 0.00026 | 3.682317 |
| Within Groups | 16.536667 | 15 | 1.102444 | | | |
| Total | 49.7 | 17 | | | | |

What do we conclude here?


Linear Contrasts and Multiple Comparisons

If we reject H0 of no differences in treatment means in favor of

HA, we conclude that at least one of the t population means

differs from the other t-1.

Which means differ from each other?

Multiple comparison procedures have been developed to help

determine which means are significantly different from each other.

Many different approaches - not all produce the same result.

Data dredging and data snooping - analyzing only those

comparisons which look interesting after looking at the data

– affects the error rate!

Problems with the confidence assumed for the comparisons:

1-α for a particular pre-specified comparison?

1-α for all unplanned comparisons as a group?

Linear Comparisons

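Any linear comparison among t population means, μ1, μ2, ..., μt can be written as:

$$l = a_1\mu_1 + a_2\mu_2 + \cdots + a_t\mu_t$$

where the $a_i$ are constants satisfying the constraint:

$$\sum_{i=1}^{t} a_i = 0$$

Example: To compare μ1 to μ2 we use the equation:

$$l = \mu_1 - \mu_2$$

with coefficients $a_1 = 1$, $a_2 = -1$, $a_3 = a_4 = \cdots = a_t = 0$. Note the constraint is met!

Example: To compare μ1 to the average of μ2 and μ3:

$$l = \mu_1 - \frac{\mu_2 + \mu_3}{2} = (1)\mu_1 + \left(-\tfrac{1}{2}\right)\mu_2 + \left(-\tfrac{1}{2}\right)\mu_3$$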

Linear Contrast

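A linear comparison estimated by using group means is called a linear contrast.

$$\hat{l} = \hat{\mu}_1 - \hat{\mu}_2 = \bar{y}_1 - \bar{y}_2$$

$$\hat{l} = \hat{\mu}_1 - \frac{\hat{\mu}_2 + \hat{\mu}_3}{2} = (1)\bar{y}_1 + \left(-\tfrac{1}{2}\right)\bar{y}_2 + \left(-\tfrac{1}{2}\right)\bar{y}_3$$

Variance of a linear contrast:

$$\hat{V}(\hat{l}) = s_w^2\left[\frac{a_1^2}{n_1} + \frac{a_2^2}{n_2} + \cdots + \frac{a_t^2}{n_t}\right] = s_w^2 \sum_{i=1}^{t} \frac{a_i^2}{n_i}, \qquad s_w^2 = MSE \; (= MSW)$$

Test of significance: $H_0: l = 0$ vs. $H_a: l \neq 0$

$$MS_l = \frac{\left(\sum_{i=1}^{t} a_i \bar{y}_i\right)^2}{\sum_{i=1}^{t} a_i^2 / n_i}, \qquad F = \frac{MS_l}{MSE} \sim F_{1,\,n_T - t}$$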

Orthogonal Contrasts

$$\hat{l}_1 = a_1\bar{y}_1 + a_2\bar{y}_2 + \cdots + a_t\bar{y}_t$$

$$\hat{l}_2 = b_1\bar{y}_1 + b_2\bar{y}_2 + \cdots + b_t\bar{y}_t$$

These two contrasts are said to be orthogonal if:

$$l_1 \cdot l_2 = a_1 b_1 + a_2 b_2 + \cdots + a_t b_t = \sum_{i=1}^{t} a_i b_i = 0$$

in which case $l_1$ conveys no information about $l_2$ and vice versa.

A set of three or more contrasts is said to be mutually orthogonal if all pairs of linear contrasts are orthogonal.

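Orthogonal

$\hat{l}_1 = \bar{y}_1 - \dfrac{\bar{y}_2 + \bar{y}_3}{2}$: Compare average of drugs (2,3) to placebo (1). Coefficients: $a_1 = 1$, $a_2 = -\tfrac{1}{2}$, $a_3 = -\tfrac{1}{2}$.

$\hat{l}_2 = \bar{y}_2 - \bar{y}_3$: Contrast drugs (2,3). Coefficients: $b_1 = 0$, $b_2 = 1$, $b_3 = -1$.

Check: $\sum a_i b_i = (1)(0) + (-\tfrac{1}{2})(1) + (-\tfrac{1}{2})(-1) = 0$.

Non-orthogonal

$\hat{l}_3 = \bar{y}_1 - \bar{y}_2$: Contrast Standard drug (2) to placebo (1). Coefficients: $a_1 = 1$, $a_2 = -1$, $a_3 = 0$.

$\hat{l}_4 = \bar{y}_1 - \bar{y}_3$: Contrast New drug (3) to placebo (1). Coefficients: $b_1 = 1$, $b_2 = 0$, $b_3 = -1$.

Check: $\sum a_i b_i = (1)(1) + (-1)(0) + (0)(-1) = 1 \neq 0$.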
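The orthogonality condition is just a zero dot product of the coefficient vectors, so it is easy to check mechanically. The sketch below assumes equal group sizes, as in the drug example; the helper name `is_orthogonal` is hypothetical.

```python
from itertools import combinations

def is_orthogonal(a, b, tol=1e-12):
    """True if two contrast coefficient vectors have a zero dot product."""
    return abs(sum(x * y for x, y in zip(a, b))) < tol

# Coefficient vectors for the four drug contrasts (placebo, standard, new).
contrasts = {
    "l1": [1, -0.5, -0.5],   # placebo vs. average of the two drugs
    "l2": [0, 1, -1],        # standard drug vs. new drug
    "l3": [1, -1, 0],        # placebo vs. standard drug
    "l4": [1, 0, -1],        # placebo vs. new drug
}

for (name_a, a), (name_b, b) in combinations(contrasts.items(), 2):
    print(name_a, name_b, is_orthogonal(a, b))
```

Only the pair (l1, l2) should be reported as orthogonal among these four.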

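Drug Comparisons

| | Placebo | Standard Drug | New Drug |
|---|---|---|---|
| | 5.60 | 8.40 | 10.60 |
| | 5.70 | 8.20 | 6.60 |
| | 5.10 | 8.80 | 8.00 |
| | 3.80 | 7.10 | 8.00 |
| | 4.60 | 7.20 | 6.80 |
| | 5.10 | 8.00 | 6.60 |
| sum | 29.900 | 47.700 | 46.600 |
| mean | 4.983 | 7.950 | 7.767 |
| variance | 0.494 | 0.455 | 2.359 |

Pooled variance (MSE) = 1.102; SSW = 16.537; variance of the means = 2.764; between mean square (MSB) = 16.582.

| Source | Sums of Squares | Degrees of Freedom | Mean Square | F statistic | P-value |
|---|---|---|---|---|---|
| Between Groups | 33.16 | 2 | 16.582 | 15.04 | 0.00026 |
| Within Groups | 16.54 | 15 | 1.102 | | |
| Total | 49.70 | 17 | | | |

Estimated contrasts:

$$\hat{l}_1 = \bar{y}_1 - \frac{\bar{y}_2 + \bar{y}_3}{2} = 4.98 - \frac{7.95 + 7.77}{2} \approx -2.88$$

$$\hat{l}_2 = \bar{y}_2 - \bar{y}_3 = 7.95 - 7.77 = 0.18$$

Estimated variances:

$$\hat{V}(\hat{l}_1) = s_W^2\left[\frac{a_1^2}{n_1} + \frac{a_2^2}{n_2} + \frac{a_3^2}{n_3}\right] = 1.102\left[\frac{(1)^2}{6} + \frac{(-.5)^2}{6} + \frac{(-.5)^2}{6}\right] = 1.102(0.25)$$

$$\hat{V}(\hat{l}_2) = s_W^2\left[\frac{b_1^2}{n_1} + \frac{b_2^2}{n_2} + \frac{b_3^2}{n_3}\right] = 1.102\left[\frac{(0)^2}{6} + \frac{(1)^2}{6} + \frac{(-1)^2}{6}\right] = 1.102(0.33)$$

Sums of squares (each contrast has 1 degree of freedom, so SS = MS):

$$SS_{\hat{l}_1} = MS_{\hat{l}_1} = \frac{\hat{l}_1^2}{\sum_i a_i^2/n_i} = \frac{(-2.88)^2}{0.25} \approx 33.10$$

$$SS_{\hat{l}_2} = MS_{\hat{l}_2} = \frac{\hat{l}_2^2}{\sum_i b_i^2/n_i} = \frac{(0.18)^2}{0.33} \approx 0.10$$

Tests, using $F = MS_{\hat{l}} / MSE \sim F_{1,\,n_T - t}$ with $F_{1,15,.05} = 4.54$:

$$F_1 = \frac{MS_{\hat{l}_1}}{MSE} = \frac{33.10}{1.102} = 30.04 \qquad F_2 = \frac{MS_{\hat{l}_2}}{MSE} = \frac{0.10}{1.102} = 0.09$$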
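The contrast calculations for the drug data can be reproduced with a small helper. This is a sketch only: `contrast_test` is a hypothetical name, and it works from unrounded group means, so its output differs slightly from the slide figures, which were computed from rounded means.

```python
def contrast_test(means, sizes, coefs, mse):
    """Return (estimate, variance, mean square, F) for a linear contrast."""
    assert abs(sum(coefs)) < 1e-12, "contrast coefficients must sum to zero"
    estimate = sum(a * m for a, m in zip(coefs, means))
    scale = sum(a * a / n for a, n in zip(coefs, sizes))  # sum of a_i^2 / n_i
    variance = mse * scale
    mean_square = estimate ** 2 / scale  # 1 df, so SS equals MS
    return estimate, variance, mean_square, mean_square / mse

means = [29.9 / 6, 47.7 / 6, 46.6 / 6]   # placebo, standard drug, new drug
sizes = [6, 6, 6]
mse = 16.536667 / 15                      # within-group mean square

l1 = contrast_test(means, sizes, [1, -0.5, -0.5], mse)
l2 = contrast_test(means, sizes, [0, 1, -1], mse)

print("l1: estimate=%.3f  MS=%.3f  F=%.2f" % (l1[0], l1[2], l1[3]))
print("l2: estimate=%.3f  MS=%.3f  F=%.2f" % (l2[0], l2[2], l2[3]))
print("MS(l1) + MS(l2) = %.3f" % (l1[2] + l2[2]))
```

Because the two contrasts are orthogonal and t - 1 = 2, their sums of squares should add up to the between-groups sum of squares (33.16).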

Importance of Mutual Orthogonality

Assume t treatment groups, each group having n individuals (units).

• t-1 mutually orthogonal contrasts can be formed from the t means. (Remember t-1 degrees of freedom.)

• Treatment sums of squares (SSB) can be computed as the sum of the sums of squares associated with the t-1 orthogonal contrasts (i.e. the treatment sums of squares can be partitioned into t-1 parts associated with t-1 mutually orthogonal contrasts).

$$\sum_{\text{orthogonal contrasts}} SS_l = SS_{l_1} + \cdots + SS_{l_{t-1}} = SSB$$

t-1 independent pieces of information about the variability in the treatment means.

Example of Linear Contrasts

Objective: Test the wear quality of a new paint.

Treatments: Weather and wood combinations.

| Treatment | Code | Combination |
|---|---|---|
| A | μ1 | hardwood, dry climate |
| B | μ2 | hardwood, wet climate |
| C | μ3 | softwood, dry climate |
| D | μ4 | softwood, wet climate |

(Obvious) Questions:

Q1: Is the average life on hardwood the same as average life on softwood?

Q2: Is the average life in dry climate the same as average life in wet climate?

Q3: Does the difference in paint life between wet and dry climates depend upon whether the wood is hard or soft?

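| Treatment | A | B | C | D |
|---|---|---|---|---|
| Mean (in years) | 13 | 14 | 20 | 21 |
| n_i | 3 | 3 | 3 | 3 |
| Population parameter | μ1 | μ2 | μ3 | μ4 |

MSE = 5, t = 4, n_T - t = 8

Q1: Is the average life on hardwood the same as average life on softwood?

$$H_0^1: \frac{\mu_1 + \mu_2}{2} = \frac{\mu_3 + \mu_4}{2} \quad \text{OR} \quad \frac{\mu_1 + \mu_2}{2} - \frac{\mu_3 + \mu_4}{2} = 0$$

Comparison:

$$l_1 = \left(\tfrac{1}{2}\right)\mu_1 + \left(\tfrac{1}{2}\right)\mu_2 + \left(-\tfrac{1}{2}\right)\mu_3 + \left(-\tfrac{1}{2}\right)\mu_4$$

Estimated Contrast:

$$\hat{l}_1 = \left(\tfrac{1}{2}\right)\bar{y}_1 + \left(\tfrac{1}{2}\right)\bar{y}_2 + \left(-\tfrac{1}{2}\right)\bar{y}_3 + \left(-\tfrac{1}{2}\right)\bar{y}_4$$

Test $H_0: l_1 = 0$ versus $H_A: l_1 \neq 0$

Test Statistic: $F = MS_{l_1} / MSE$

Rejection Region: Reject $H_0$ if $F > F_{1,\,n_T - t,\,\alpha}$

What is $MS_{l_1}$?

$$MS_{l_1} = \frac{\left(\sum_{i=1}^{t} a_i \bar{y}_i\right)^2}{\sum_{i=1}^{t} a_i^2 / n_i} = \frac{\left(\tfrac{1}{2}\bar{y}_1 + \tfrac{1}{2}\bar{y}_2 - \tfrac{1}{2}\bar{y}_3 - \tfrac{1}{2}\bar{y}_4\right)^2}{\dfrac{(1/2)^2}{n_1} + \dfrac{(1/2)^2}{n_2} + \dfrac{(-1/2)^2}{n_3} + \dfrac{(-1/2)^2}{n_4}}$$

$$MS_{l_1} = \frac{\left(\tfrac{1}{2}(13) + \tfrac{1}{2}(14) - \tfrac{1}{2}(20) - \tfrac{1}{2}(21)\right)^2}{4 \cdot \dfrac{(1/2)^2}{3}} = \frac{49}{1/3} = 147$$

$$F = \frac{MS_{l_1}}{MSE} = \frac{147}{5} = 29.4, \qquad F_{1,8,0.05} = 5.32$$

Conclusion: Since F = 29.4 > 5.32 we reject H0 and conclude that there is a significant difference in average life on hard versus soft woods.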

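| Treatment | A | B | C | D |
|---|---|---|---|---|
| Mean (in years) | 13 | 14 | 20 | 21 |
| n_i | 3 | 3 | 3 | 3 |
| Population parameter | μ1 | μ2 | μ3 | μ4 |

MSE = 5, t = 4, n_T - t = 8

Q2: Is the average life in dry climate the same as average life in wet climate?

$$H_0^2: \frac{\mu_1 + \mu_3}{2} = \frac{\mu_2 + \mu_4}{2} \quad \text{OR} \quad \frac{\mu_1 + \mu_3}{2} - \frac{\mu_2 + \mu_4}{2} = 0$$

Comparison:

$$l_2 = \left(\tfrac{1}{2}\right)\mu_1 + \left(-\tfrac{1}{2}\right)\mu_2 + \left(\tfrac{1}{2}\right)\mu_3 + \left(-\tfrac{1}{2}\right)\mu_4$$

Estimated Contrast:

$$\hat{l}_2 = \left(\tfrac{1}{2}\right)\bar{y}_1 + \left(-\tfrac{1}{2}\right)\bar{y}_2 + \left(\tfrac{1}{2}\right)\bar{y}_3 + \left(-\tfrac{1}{2}\right)\bar{y}_4$$

Test $H_0: l_2 = 0$ versus $H_A: l_2 \neq 0$

Test Statistic: $F = MS_{l_2} / MSE$

Rejection Region: Reject $H_0$ if $F > F_{1,\,n_T - t,\,\alpha}$

$$MS_{l_2} = \frac{\left(\sum_{i=1}^{t} a_i \bar{y}_i\right)^2}{\sum_{i=1}^{t} a_i^2 / n_i} = \frac{\left(\tfrac{1}{2}(13) - \tfrac{1}{2}(14) + \tfrac{1}{2}(20) - \tfrac{1}{2}(21)\right)^2}{4 \cdot \dfrac{(1/2)^2}{3}} = \frac{(-1)^2}{1/3} = 3$$

$$F = \frac{MS_{l_2}}{MSE} = \frac{3}{5} = 0.6, \qquad F_{1,8,0.05} = 5.32$$

Conclusion: Since F = 0.6 < 5.32 we do not reject H0 and conclude that there is not a significant difference in average life in wet versus dry climates.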

| Treatment | A | B | C | D |
|---|---|---|---|---|
| Mean (in years) | 13 | 14 | 20 | 21 |
| n_i | 3 | 3 | 3 | 3 |
| Population parameter | μ1 | μ2 | μ3 | μ4 |

MSE = 5, t = 4, n_T - t = 8

Q3: Does the difference in paint life between wet and dry climates depend upon whether the wood is hard or soft?

$$H_0^3: \mu_1 - \mu_2 = \mu_3 - \mu_4 \quad \text{OR} \quad (\mu_1 - \mu_2) - (\mu_3 - \mu_4) = 0$$

Comparison:

$$l_3 = (1)\mu_1 + (-1)\mu_2 + (-1)\mu_3 + (1)\mu_4$$

Estimated Contrast:

$$\hat{l}_3 = (1)\bar{y}_1 + (-1)\bar{y}_2 + (-1)\bar{y}_3 + (1)\bar{y}_4$$

Test $H_0: l_3 = 0$ versus $H_A: l_3 \neq 0$

Test Statistic: $F = MS_{l_3} / MSE$

Rejection Region: Reject $H_0$ if $F > F_{1,\,n_T - t,\,\alpha}$

$$MS_{l_3} = \frac{\left(\sum_{i=1}^{t} a_i \bar{y}_i\right)^2}{\sum_{i=1}^{t} a_i^2 / n_i} = \frac{\left((1)(13) - (1)(14) - (1)(20) + (1)(21)\right)^2}{\dfrac{1^2}{3} + \dfrac{(-1)^2}{3} + \dfrac{(-1)^2}{3} + \dfrac{1^2}{3}} = \frac{0^2}{4/3} = 0$$

$$F = \frac{MS_{l_3}}{MSE} = \frac{0}{5} = 0, \qquad F_{1,8,0.05} = 5.32$$

Conclusion: Since F = 0 < 5.32 we do not reject H0 and conclude that the difference between average paint life between wet and dry climates does not depend on wood type. Likewise, the difference between average paint life for the wood types does not depend on climate type (i.e. there is no interaction).

Mutual Orthogonality

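| Contrast | a1 | a2 | a3 | a4 |
|---|---|---|---|---|
| l1 | 1/2 | 1/2 | -1/2 | -1/2 |
| l2 | 1/2 | -1/2 | 1/2 | -1/2 |
| l3 | 1 | -1 | -1 | 1 |

$$l_1 \cdot l_2 = \tfrac{1}{4} - \tfrac{1}{4} - \tfrac{1}{4} + \tfrac{1}{4} = 0$$

$$l_1 \cdot l_3 = \tfrac{1}{2} - \tfrac{1}{2} + \tfrac{1}{2} - \tfrac{1}{2} = 0$$

$$l_2 \cdot l_3 = \tfrac{1}{2} + \tfrac{1}{2} - \tfrac{1}{2} - \tfrac{1}{2} = 0$$

The three are mutually orthogonal.

| Quantity | Value |
|---|---|
| SSl1 = MSl1 | 147 |
| SSl2 = MSl2 | 3 |
| SSl3 = MSl3 | 0 |
| Treatment SS | 150 |

The three mutually orthogonal contrasts add up to the Treatment Sums of Squares.

Total Error SS = df_error × MSE = 8 × 5 = 40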
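The partition of the treatment sum of squares in the paint example can be verified directly from the four treatment means. The following is an illustrative sketch; `contrast_ss` is a hypothetical helper, and equal group sizes (n = 3) are taken from the example.

```python
means = [13, 14, 20, 21]   # treatments A, B, C, D (years)
n = 3                      # observations per treatment

contrasts = {
    "l1 (hard vs soft)": [0.5, 0.5, -0.5, -0.5],
    "l2 (dry vs wet)":   [0.5, -0.5, 0.5, -0.5],
    "l3 (interaction)":  [1, -1, -1, 1],
}

def contrast_ss(coefs, means, n):
    """Sum of squares (1 df) for a contrast with equal group sizes n."""
    estimate = sum(a * m for a, m in zip(coefs, means))
    return estimate ** 2 / sum(a * a / n for a in coefs)

ss = {name: contrast_ss(c, means, n) for name, c in contrasts.items()}

# Treatment SS computed directly: n * sum of (mean - grand mean)^2
grand_mean = sum(means) / len(means)
treatment_ss = n * sum((m - grand_mean) ** 2 for m in means)

for name, value in ss.items():
    print(f"{name}: {value:.1f}")
print(f"sum of contrast SS = {sum(ss.values()):.1f}, treatment SS = {treatment_ss:.1f}")
```

The two totals should agree, which is the point of using a full set of t - 1 mutually orthogonal contrasts.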

(Type I) Error Rate

If H0 is true, and α = 0.05, we can expect to make a Type I error 5% of the time: 1 out of every 20 tests will yield a p-value < 0.05, even though there is no effect!

Types of Error Rates

Comparisonwise Error Rate - the probability of making a Type I error in a single test that involves the comparison of two means. (Our usual definition of Type I error thus far…)

Question: How should we define Type I error in an experiment (test)

that involves doing several tests? What is the “overall” Type I

error?

The following definition seems sensible:

Experimentwise Error Rate - the probability of observing an

experiment in which one or more of the pairwise comparisons are

incorrectly declared significantly different. This is the probability of

making at least one Type I error.


Error Rates: Problems

Suppose we make c mutually orthogonal (independent) comparisons, each with Type I comparisonwise error rate of α. The experimentwise error rate, e, is then:

$$e = 1 - (1 - \alpha)^c$$

If the comparisons are not orthogonal, then the experimentwise error rate is smaller. Thus in most situations we actually have:

$$e \le 1 - (1 - \alpha)^c$$

| Number of comparisons | Type I Error Rate | Experimentwise Error Rate |
|---|---|---|
| 1 | 0.05 | 0.050 |
| 2 | 0.05 | 0.098 |
| 3 | 0.05 | 0.143 |
| 4 | 0.05 | 0.185 |
| 5 | 0.05 | 0.226 |
| 6 | 0.05 | 0.265 |
| 7 | 0.05 | 0.302 |
| 8 | 0.05 | 0.337 |
| 9 | 0.05 | 0.370 |
| 10 | 0.05 | 0.401 |
| 11 | 0.05 | 0.431 |
| 12 | 0.05 | 0.460 |
| 13 | 0.05 | 0.487 |
| 14 | 0.05 | 0.512 |
| 15 | 0.05 | 0.537 |
| 16 | 0.05 | 0.560 |
| 17 | 0.05 | 0.582 |
| 18 | 0.05 | 0.603 |
| 19 | 0.05 | 0.623 |
| 20 | 0.05 | 0.642 |
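The experimentwise error rates for independent comparisons follow from a one-line formula, sketched here in Python. The function name `experimentwise_rate` is an illustrative choice.

```python
def experimentwise_rate(alpha, c):
    """P(at least one Type I error) in c independent tests at level alpha."""
    return 1 - (1 - alpha) ** c

# Tabulate the rate for 1 to 20 comparisons at a comparisonwise rate of 0.05.
for c in range(1, 21):
    print(f"{c:2d}  0.05  {experimentwise_rate(0.05, c):.3f}")
```

With 14 or more independent comparisons at α = 0.05, the chance of at least one false rejection already exceeds one half.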

The Bonferroni Solution

Solution: set e = 0.05 and solve for α:

$$\alpha = 1 - (1 - e)^{1/c}$$

E.g. if c = 8, we get α = 0.0064!

But there’s a problem… Very conservative, thus Type II error is large.

Bonferroni’s inequality provides an approximate solution to this that guarantees:

$$e \le 1 - (1 - \alpha)^c$$

We set:

$$\alpha = e / c$$

E.g. if c = 8, we get α = 0.05/8 = 0.0063.

Still conservative!
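The two ways of choosing the per-comparison α can be compared side by side. In this sketch the exact solution for independent comparisons is labeled `sidak_alpha`, after the name commonly given to that formula (the slides do not name it); both function names are assumptions made for illustration.

```python
def sidak_alpha(e, c):
    """Per-comparison alpha giving experimentwise rate e for c independent tests."""
    return 1 - (1 - e) ** (1 / c)

def bonferroni_alpha(e, c):
    """Per-comparison alpha from the Bonferroni approximation."""
    return e / c

for c in (2, 4, 8, 16):
    print(f"c={c:2d}  exact={sidak_alpha(0.05, c):.5f}  "
          f"bonferroni={bonferroni_alpha(0.05, c):.5f}")
```

The Bonferroni value is always slightly smaller than the exact one, so it is marginally more conservative but needs no independence assumption.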

Multiple Comparison Procedures (MCPs): Overview

Terms:

• If the MCP requires a significant overall F test, then the procedure is called a protected method.

• Not all procedures produce the same results. (An optimal procedure could be devised if the degree of dependence, and other factors, among the comparisons were known…)

• The major difference among the MCPs is in the calculation of the yardstick used to determine if two means are significantly different. The yardstick can generically be referred to as the least significant difference. Any two means that differ by more than this yardstick are declared significantly different.

$$|\bar{y}_i - \bar{y}_j| > \text{yardstick} = (\text{Tabled Value}) \times (\text{SE of difference})$$

Multiple Comparison Procedures: Overview

$$|\bar{y}_i - \bar{y}_j| > \text{yardstick} = (\text{Tabled Value}) \times (\text{SE of difference})$$

• Yardsticks are composed of a standard error term and a critical value from some tabulated statistic.

• Some procedures have “fixed” yardsticks, some have “variable” yardsticks. The variable yardsticks will depend on how far apart two observed means are in a rank ordered list of the mean values.

• Some procedures control Comparisonwise Error, others Experimentwise Error, and some attempt to control both. Some are even more specialized, e.g. Dunnett’s applies only to comparisons of treatments to a control.

Fisher’s Least Significant Difference - Protected

Mean of group i (μi) is significantly different from the mean of group j (μj) if

$$|\bar{y}_i - \bar{y}_j| > LSD_{ij}$$

$$LSD_{ij} = t_{\alpha/2,\,df_{error}} \sqrt{MSE\left(\frac{1}{n_i} + \frac{1}{n_j}\right)} = t_{\alpha/2,\,df_{error}} \sqrt{\frac{2\,MSE}{n}} \quad \text{if all groups have same size } n.$$

Type I (comparisonwise) error rate = α

Controls Comparisonwise Error. Experimentwise error control comes from requiring a significant overall F test prior to performing any comparisons, and from applying the method only to pre-planned comparisons.
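Applied to the sleep-study example (MSE = 1.102, 15 error degrees of freedom, n = 6 per group), the LSD yardstick can be computed as below. This is a sketch: the critical value 2.131 for t with 15 degrees of freedom at α/2 = 0.025 is taken from a standard t table rather than from the slides, and `fisher_lsd` is a hypothetical helper name.

```python
import math
from itertools import combinations

def fisher_lsd(t_crit, mse, n_i, n_j):
    """Least significant difference for comparing groups i and j."""
    return t_crit * math.sqrt(mse * (1 / n_i + 1 / n_j))

T_CRIT = 2.131   # assumed tabled value of t_{0.025, 15}
MSE = 1.102      # pooled variance from the ANOVA table
means = {"Placebo": 4.983, "Standard Drug": 7.950, "New Drug": 7.767}

lsd = fisher_lsd(T_CRIT, MSE, 6, 6)
significant = {
    (a, b): abs(means[a] - means[b]) > lsd
    for a, b in combinations(means, 2)
}

print(f"LSD = {lsd:.3f}")
for pair, flag in significant.items():
    print(pair, "different" if flag else "not different")
```

Since the overall F test was significant for these data, the protected procedure allows these comparisons to proceed.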

Tukey’s W (Honestly Significant Difference) Procedure

Primarily suited for all pairwise comparisons among t means.

Means are different if:

$$|\bar{y}_i - \bar{y}_j| > W, \qquad W = q_\alpha(t, df_{error}) \sqrt{\frac{MSE}{n}}$$

{Table 10 - critical values of the studentized range.}

Experimentwise error rate = α

This MCP controls experimentwise error rate! Comparisonwise error rate is thus very low.
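For the sleep-study example, Tukey's yardstick can be sketched as follows. The studentized range critical value 3.67 for t = 3 means and 15 error degrees of freedom at α = 0.05 is an assumed table lookup, not a number given in the slides, and `tukey_w` is a hypothetical name.

```python
import math

def tukey_w(q_crit, mse, n):
    """Tukey's honestly significant difference for equal group sizes n."""
    return q_crit * math.sqrt(mse / n)

Q_CRIT = 3.67    # assumed tabled value of q_{0.05}(3, 15)
w = tukey_w(Q_CRIT, mse=1.102, n=6)

means = {"Placebo": 4.983, "Standard Drug": 7.950, "New Drug": 7.767}
pairs = [("Placebo", "Standard Drug"), ("Placebo", "New Drug"),
         ("Standard Drug", "New Drug")]
different = {p: abs(means[p[0]] - means[p[1]]) > w for p in pairs}

print(f"W = {w:.3f}")
for pair, flag in different.items():
    print(pair, "different" if flag else "not different")
```

The yardstick is wider than the comparisonwise LSD for the same data, which reflects the stricter experimentwise control.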

Student-Newman-Keuls Procedure

A modified Tukey’s MCP. Rank the t sample means from smallest to largest. For two means that are r “steps” apart in the ranked list, we declare the population means different if:

$$|\bar{y}_i - \bar{y}_j| > W_r, \qquad W_r = q_\alpha(r, df_{error}) \sqrt{\frac{MSE}{n}}$$

{Table 10 - critical values of the studentized range. Depends on which mean pair is being considered!}

[Figure: six ranked means from ȳ[1] (min) to ȳ[6] (max); pairs of means are r = 2, 3, 4, 5, or 6 steps apart, giving a varying yardstick.]

Duncan’s New Multiple Range Test (Passé)

Controls neither the experimentwise nor the comparisonwise error rate alone. Based on a ranking of the observed means.

Introduces the concept of a “protection level” $(1-\alpha)^{r-1}$.

| Number of steps apart, r | Protection level $(0.95)^{r-1}$ | Probability of falsely rejecting H0 |
|---|---|---|
| 2 | .950 | .050 |
| 3 | .903 | .097 |
| 4 | .857 | .143 |
| 5 | .815 | .185 |
| 6 | .774 | .226 |
| 7 | .735 | .265 |

Means are declared different if:

$$|\bar{y}_i - \bar{y}_j| > W_r, \qquad W_r = q_\alpha(r, df_{error}) \sqrt{\frac{MSE}{n}}$$

{Table A-11 (later) in these notes}

Dunnett’s Procedure

An MCP that is used for comparing treatments to a control. It aims to control the experimentwise error rate.

Compares each treatment mean (i) to the mean for the control group (c).

$$|\bar{y}_i - \bar{y}_c| > D, \qquad D = d_\alpha(k, v) \sqrt{\frac{2\,MSE}{n}}$$

dα(k,v) is obtained from Table A-11 (in the book) and is based on:

• α = the desired experimentwise error rate

• k = t-1, number of noncontrol treatments

• v = error degrees of freedom.

Scheffé’s S Method

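For any linear contrast:

$$l = a_1\mu_1 + a_2\mu_2 + \cdots + a_t\mu_t$$

Estimated by:

$$\hat{l} = a_1\bar{y}_1 + a_2\bar{y}_2 + \cdots + a_t\bar{y}_t$$

With estimated variance:

$$\hat{V}(\hat{l}) = MSE \sum_{i=1}^{t} \frac{a_i^2}{n_i}$$

To test $H_0: l = 0$ versus $H_a: l \neq 0$:

For a specified value of α, reject H0 if:

$$|\hat{l}| > S$$

where:

$$S = \sqrt{\hat{V}(\hat{l})}\,\sqrt{(t-1)\,F_{t-1,\,df_{error},\,\alpha}}$$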
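Scheffé's yardstick for the two drug contrasts can be computed as in the sketch below. The critical value 3.682317 is the "F crit" entry for 2 and 15 degrees of freedom in the Excel output of the sleep example; the helper name `scheffe_s` is hypothetical.

```python
import math

def scheffe_s(coefs, sizes, mse, t, f_crit):
    """Scheffe's S for a contrast: sqrt(V(l)) * sqrt((t - 1) * F crit)."""
    variance = mse * sum(a * a / n for a, n in zip(coefs, sizes))
    return math.sqrt(variance) * math.sqrt((t - 1) * f_crit)

F_CRIT = 3.682317            # F_{2, 15, 0.05} from the Excel ANOVA output
MSE = 1.102444
means = [4.983333, 7.95, 7.766667]   # placebo, standard drug, new drug
sizes = [6, 6, 6]

results = {}
for name, coefs in {"l1": [1, -0.5, -0.5], "l2": [0, 1, -1]}.items():
    estimate = sum(a * m for a, m in zip(coefs, means))
    s = scheffe_s(coefs, sizes, MSE, t=3, f_crit=F_CRIT)
    results[name] = (estimate, s, abs(estimate) > s)
    print(f"{name}: estimate={estimate:.3f}  S={s:.3f}  reject={abs(estimate) > s}")
```

Because S is valid for every possible contrast at once, it tends to be the widest yardstick among the procedures discussed here.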

Adjustment for unequal sample sizes: The Harmonic Mean

If the sample sizes are not equal in all t groups, the value of n in the equations for Tukey and SNK can be replaced with the harmonic mean of the sample sizes:

$$\tilde{n} = \frac{t}{\sum_{i=1}^{t} (1 / n_i)}$$

E.g. Tukey’s W becomes:

$$W = q_\alpha(t, df_{err}) \sqrt{MSE / \tilde{n}}$$

Or can also use the Tukey-Kramer method:

$$W^* = q_\alpha(t, df_{err}) \sqrt{\frac{MSE}{2}\left(\frac{1}{n_i} + \frac{1}{n_j}\right)}$$
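Both adjustments for unequal sample sizes are short formulas, sketched here. The sample sizes in the usage lines are made up purely for illustration, the critical value is an assumed table lookup, and the function names are hypothetical.

```python
import math

def harmonic_mean_size(sizes):
    """Harmonic mean of the group sample sizes: t / sum(1 / n_i)."""
    return len(sizes) / sum(1 / n for n in sizes)

def tukey_kramer_w(q_crit, mse, n_i, n_j):
    """Tukey-Kramer yardstick for a pair of groups with sizes n_i and n_j."""
    return q_crit * math.sqrt(mse / 2 * (1 / n_i + 1 / n_j))

# Hypothetical unbalanced design with three groups.
sizes = [3, 6, 6]
n_tilde = harmonic_mean_size(sizes)
print(f"harmonic mean sample size = {n_tilde:.2f}")

# With equal sizes the Tukey-Kramer yardstick reduces to Tukey's W.
q_crit, mse = 3.67, 1.102    # assumed table value and MSE from the sleep example
w_equal = q_crit * math.sqrt(mse / 6)
print(f"Tukey W (n=6) = {w_equal:.3f}, Tukey-Kramer (6, 6) = "
      f"{tukey_kramer_w(q_crit, mse, 6, 6):.3f}")
```

The harmonic mean gives a single yardstick for all pairs, while Tukey-Kramer gives a separate yardstick for each pair of group sizes.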

MCP Confidence Intervals

In some MCPs we can also form simultaneous confidence intervals (CI’s) for any pair of means, μi - μj.

• Fisher’s LSD: $(\bar{y}_i - \bar{y}_j) \pm LSD_{ij}$

• Tukey’s W: $(\bar{y}_i - \bar{y}_j) \pm W$

• Scheffé’s for a contrast l: $\hat{l} \pm S$

A Nonparametric MCP (§9.9)

• The (parametric) MCPs just discussed all assume the

data are random samples from normal distributions with

equal variances.

• In many situations this assumption is not plausible,

e.g. incomes, proportions, survival times.

• Let τi be the shift parameter (e.g. median) for

population i, i=1,…,t. Want to determine if populations

differ with respect to their shift parameters.

• Combine all samples into one, rank obs from smallest to largest. Denote mean of ranks for group i by $\bar{R}_i$.

Nonparametric Kruskal-Wallis MCP

This MCP controls experimentwise error rate.

• Perform the Kruskal-Wallis test of equality of shift parameters (null hypothesis).

• If this test yields a nonsignificant p-value, declare no differences in the shift parameters and stop.

• If not, declare populations i and j to be different if

$$|\bar{R}_i - \bar{R}_j| > KW_{ij}, \qquad KW_{ij} = \frac{q_\alpha(t, \infty)}{\sqrt{2}} \sqrt{\frac{n_T (n_T + 1)}{12}\left(\frac{1}{n_i} + \frac{1}{n_j}\right)}$$
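The rank-based comparison can be sketched for the sleep data as follows. This illustration covers only the pairwise step and assumes the overall Kruskal-Wallis test has already been found significant; the critical value 3.31 for the studentized range with t = 3 and infinite degrees of freedom at α = 0.05 is an assumed table lookup, and the function names are hypothetical.

```python
import math
from itertools import combinations

groups = {
    "Placebo": [5.6, 5.7, 5.1, 3.8, 4.6, 5.1],
    "Standard Drug": [8.4, 8.2, 8.8, 7.1, 7.2, 8.0],
    "New Drug": [10.6, 6.6, 8.0, 8.0, 6.8, 6.6],
}

def mean_ranks(groups):
    """Mean rank per group after pooling; tied values share the average rank."""
    pooled = sorted(y for ys in groups.values() for y in ys)
    rank_of, i = {}, 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2   # average of ranks i+1 .. j
        i = j
    return {g: sum(rank_of[y] for y in ys) / len(ys) for g, ys in groups.items()}

def kw_yardstick(q_crit, n_total, n_i, n_j):
    """KW_ij = q / sqrt(2) * sqrt(nT (nT + 1) / 12 * (1/n_i + 1/n_j))."""
    return q_crit / math.sqrt(2) * math.sqrt(
        n_total * (n_total + 1) / 12 * (1 / n_i + 1 / n_j))

Q_CRIT = 3.31    # assumed tabled value of q_{0.05}(3, infinity)
ranks = mean_ranks(groups)
kw = kw_yardstick(Q_CRIT, n_total=18, n_i=6, n_j=6)
different = {(a, b): abs(ranks[a] - ranks[b]) > kw
             for a, b in combinations(groups, 2)}

print(ranks, f"KW = {kw:.3f}")
print(different)
```

For these data the rank-based comparisons lead to the same grouping as the parametric procedures: placebo differs from both drugs, and the two drugs do not differ from each other.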

[Figure: Comparisonwise error rates for different MCP]

[Figure: Experimentwise error rates for different MCP]