lecture note 7

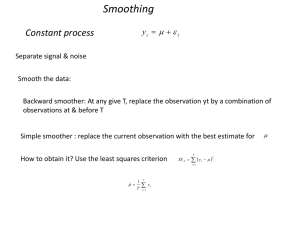

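STAT 497 LECTURE NOTES 7

FORECASTING

• One of the most important objectives in time series analysis is to forecast future values of the series. It is the primary objective of modeling.
• ESTIMATION (Turkish: tahmin): the value of an estimator for a parameter.
• PREDICTION (Turkish: kestirim): the value of a r.v. when we use the estimates of the parameters.
• FORECASTING (Turkish: öngörü): the value of a future r.v. that is not observed in the sample.

Illustration with an AR(1) model:

$$Y_t = \phi Y_{t-1} + a_t$$

$$\hat{\phi} \quad \text{(estimate of } \phi\text{)}$$

$$\hat{Y}_t = \hat{\phi}\, Y_{t-1} \quad \text{(prediction)}$$

$$\hat{Y}_{t+1} \quad \text{(forecast)}$$

FORECASTING FROM AN ARMA MODEL

THE MINIMUM MEAN SQUARED ERROR FORECASTS

Observed time series: $Y_1, Y_2, \ldots, Y_n$. The observed sample ends at $Y_n$; the values $Y_{n+1}, Y_{n+2}, \ldots$ are unknown.

• $n$: the forecast origin
• $\hat{Y}_n(1)$: the forecast value of $Y_{n+1}$
• $\hat{Y}_n(2)$: the forecast value of $Y_{n+2}$
• $\hat{Y}_n(\ell)$: the forecast value of $Y_{n+\ell}$, that is, the $\ell$-step-ahead forecast and the minimum MSE forecast of $Y_{n+\ell}$

The minimum MSE forecast is the conditional expectation of $Y_{n+\ell}$ given the observed sample:

$$\hat{Y}_n(\ell) = E\left(Y_{n+\ell} \mid Y_n, Y_{n-1}, \ldots, Y_1\right)$$

• The stationary ARMA model for $Y_t$ is

$$\phi_p(B)\, Y_t = \theta_0 + \theta_q(B)\, a_t$$

or

$$Y_t = \theta_0 + \phi_1 Y_{t-1} + \cdots + \phi_p Y_{t-p} + a_t - \theta_1 a_{t-1} - \cdots - \theta_q a_{t-q}$$

• Assume that we have data $Y_1, Y_2, \ldots, Y_n$ and we want to forecast $Y_{n+\ell}$ (i.e., $\ell$ steps ahead from forecast origin $n$). Then the actual value is

$$Y_{n+\ell} = \theta_0 + \phi_1 Y_{n+\ell-1} + \cdots + \phi_p Y_{n+\ell-p} + a_{n+\ell} - \theta_1 a_{n+\ell-1} - \cdots - \theta_q a_{n+\ell-q}$$

• Considering the random shock form of the series, with $\psi(B) = \theta_q(B)/\phi_p(B)$ and process mean $\mu = \theta_0/(1 - \phi_1 - \cdots - \phi_p)$:

$$Y_{n+\ell} = \mu + \psi(B)\, a_{n+\ell} = \mu + a_{n+\ell} + \psi_1 a_{n+\ell-1} + \psi_2 a_{n+\ell-2} + \cdots + \psi_\ell a_n + \cdots$$

• Taking the conditional expectation of $Y_{n+\ell}$, we have

$$\hat{Y}_n(\ell) = E\left(Y_{n+\ell} \mid Y_n, Y_{n-1}, \ldots, Y_1\right) = \mu + \psi_\ell a_n + \psi_{\ell+1} a_{n-1} + \cdots$$

where

$$E\left(a_{n+j} \mid Y_n, \ldots, Y_1\right) = \begin{cases} 0, & j > 0 \\ a_{n+j}, & j \le 0 \end{cases}$$

• The forecast error:

$$e_n(\ell) = Y_{n+\ell} - \hat{Y}_n(\ell) = a_{n+\ell} + \psi_1 a_{n+\ell-1} + \cdots + \psi_{\ell-1} a_{n+1} = \sum_{i=0}^{\ell-1} \psi_i\, a_{n+\ell-i}, \qquad \psi_0 = 1$$

• The expectation of the forecast error: $E\left[e_n(\ell)\right] = 0$
• So, the forecast is unbiased.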
• The variance of the forecast error:

$$\mathrm{Var}\left[e_n(\ell)\right] = \mathrm{Var}\left(\sum_{i=0}^{\ell-1} \psi_i\, a_{n+\ell-i}\right) = \sigma_a^2 \sum_{i=0}^{\ell-1} \psi_i^2$$

• One step ahead ($\ell = 1$):

$$Y_{n+1} = \mu + a_{n+1} + \psi_1 a_n + \psi_2 a_{n-1} + \cdots$$

$$\hat{Y}_n(1) = \mu + \psi_1 a_n + \psi_2 a_{n-1} + \cdots$$

$$e_n(1) = Y_{n+1} - \hat{Y}_n(1) = a_{n+1}$$

$$\mathrm{Var}\left[e_n(1)\right] = \sigma_a^2$$

• Two steps ahead ($\ell = 2$):

$$Y_{n+2} = \mu + a_{n+2} + \psi_1 a_{n+1} + \psi_2 a_n + \cdots$$

$$\hat{Y}_n(2) = \mu + \psi_2 a_n + \cdots$$

$$e_n(2) = Y_{n+2} - \hat{Y}_n(2) = a_{n+2} + \psi_1 a_{n+1}$$

$$\mathrm{Var}\left[e_n(2)\right] = \sigma_a^2\left(1 + \psi_1^2\right)$$

• Note that

$$\lim_{\ell \to \infty} \hat{Y}_n(\ell) = \mu, \qquad \lim_{\ell \to \infty} \mathrm{Var}\left[e_n(\ell)\right] = \gamma_0$$

so for long horizons the forecast reverts to the process mean $\mu$ and the forecast error variance approaches the process variance $\gamma_0$.
• That’s why ARMA (or ARIMA) forecasting is useful only for short-term forecasting.

PREDICTION INTERVAL FOR $Y_{n+\ell}$

• A 95% prediction interval for $Y_{n+\ell}$ ($\ell$ steps ahead) is

$$\hat{Y}_n(\ell) \pm 1.96 \sqrt{\mathrm{Var}\left[e_n(\ell)\right]} = \hat{Y}_n(\ell) \pm 1.96\, \sigma_a \sqrt{\sum_{i=0}^{\ell-1} \psi_i^2}$$

For one step ahead this simplifies to

$$\hat{Y}_n(1) \pm 1.96\, \sigma_a$$

For two steps ahead this simplifies to

$$\hat{Y}_n(2) \pm 1.96\, \sigma_a \sqrt{1 + \psi_1^2}$$

• When computing prediction intervals from data, we substitute estimates for parameters, giving approximate prediction intervals.

REASONS NEEDING A LONG REALIZATION

• Estimate the correlation structure (i.e., the ACF and PACF) and get accurate standard errors.
• Estimate the seasonal pattern (need at least 4 or 5 seasonal periods).
• Approximate prediction intervals assume that parameters are known (a good approximation if the realization is large).
• Fewer estimation problems (the likelihood function is better behaved).
• Possible to check forecasts by withholding recent data.
• Can check model stability by dividing the data and analyzing both parts.

REASONS FOR USING A PARSIMONIOUS MODEL

• Fewer numerical problems in estimation.
• Easier to understand the model.
• With fewer parameters, forecasts are less sensitive to deviations between parameters and estimates.
• The model may be applied more generally to similar processes.
• Rapid real-time computations for control or other action.
• Having a parsimonious model is less important if the realization is large.

EXAMPLES

• AR(1)
• MA(1)
• ARMA(1,1)

UPDATING THE FORECASTS

• Let’s say we have n observations at time t=n, find a good model for this series, and obtain the forecasts for $Y_{n+1}$, $Y_{n+2}$ and so on.
At t=n+1, we observe the value of $Y_{n+1}$. Now, we want to update our forecasts using the observed value of $Y_{n+1}$ and its earlier forecast.

The forecast error is

$$e_n(\ell) = Y_{n+\ell} - \hat{Y}_n(\ell) = \sum_{i=0}^{\ell-1} \psi_i\, a_{n+\ell-i}$$

We can also write

$$e_{n-1}(\ell+1) = Y_{n-1+\ell+1} - \hat{Y}_{n-1}(\ell+1) = \sum_{i=0}^{\ell} \psi_i\, a_{n-1+\ell+1-i} = \sum_{i=0}^{\ell} \psi_i\, a_{n+\ell-i} = \sum_{i=0}^{\ell-1} \psi_i\, a_{n+\ell-i} + \psi_\ell a_n = e_n(\ell) + \psi_\ell a_n$$

Hence

$$Y_{n+\ell} - \hat{Y}_{n-1}(\ell+1) = Y_{n+\ell} - \hat{Y}_n(\ell) + \psi_\ell a_n$$

$$\hat{Y}_n(\ell) = \hat{Y}_{n-1}(\ell+1) + \psi_\ell a_n$$

$$\hat{Y}_n(\ell) = \hat{Y}_{n-1}(\ell+1) + \psi_\ell \left[Y_n - \hat{Y}_{n-1}(1)\right]$$

$$\hat{Y}_{n+1}(\ell) = \hat{Y}_n(\ell+1) + \psi_\ell \left[Y_{n+1} - \hat{Y}_n(1)\right]$$

For example, with n=100:

$$\hat{Y}_{101}(1) = \hat{Y}_{100}(2) + \psi_1 \left[Y_{101} - \hat{Y}_{100}(1)\right]$$

FORECASTS OF THE TRANSFORMED SERIES

• If you use a variance-stabilizing transformation, then after forecasting you have to convert the forecasts back to the original series.
• If you use the log transformation, you have to consider the fact that

$$E\left(Y_{n+\ell} \mid Y_1, \ldots, Y_n\right) \ne \exp\left[E\left(\ln Y_{n+\ell} \mid \ln Y_1, \ldots, \ln Y_n\right)\right]$$

• If X has a normal distribution with mean $\mu$ and variance $\sigma^2$, then

$$E\left[\exp(X)\right] = \exp\left(\mu + \frac{\sigma^2}{2}\right)$$

• Hence, the minimum mean square error forecast for the original series is given by

$$\exp\left(\hat{Z}_n(\ell) + \frac{1}{2} \mathrm{Var}\left[e_n(\ell)\right]\right)$$

where $Z_{n+\ell} = \ln Y_{n+\ell}$, $\mu = E\left(Z_{n+\ell} \mid Z_1, \ldots, Z_n\right) = \hat{Z}_n(\ell)$ and $\sigma^2 = \mathrm{Var}\left(Z_{n+\ell} \mid Z_1, \ldots, Z_n\right) = \mathrm{Var}\left[e_n(\ell)\right]$.

MEASURING THE FORECAST ACCURACY

MOVING AVERAGE AND EXPONENTIAL SMOOTHING

• This is a forecasting procedure based on simple updating equations that calculate forecasts using the underlying pattern of the series. It is not based on the ARIMA approach.
• Recent observations are expected to have more power in forecasting future values, so a model can be constructed that places more weight on recent observations than on older observations.
• Smoothed curve (eliminates up-and-down movement)
• Trend
• Seasonality

SIMPLE MOVING AVERAGES

• 3-period moving average: $\hat{Y}_t = (Y_{t-1} + Y_{t-2} + Y_{t-3})/3$
• A 5-period MA can also be considered.

| Period | Actual | 3-Quarter MA Forecast | 5-Quarter MA Forecast |
|--------|--------|-----------------------|-----------------------|
| Mar-83 | 239.3  | Missing | Missing |
| Jun-83 | 239.8  | Missing | Missing |
| Sep-83 | 236.1  | Missing | Missing |
| Dec-83 | 232    | 238.40  | Missing |
| Mar-84 | 224.75 | 235.97  | Missing |
| Jun-84 | 237.45 | 230.95  | 234.39  |
| Sep-84 | 245.4  | 231.40  | 234.02  |
| Dec-84 | 251.58 | 235.87  | 235.14  |

… and so on.
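The moving-average forecasts in the table above can be reproduced with a few lines of base R. This is a minimal sketch: the helper name `sma_forecast` is hypothetical (not from the notes), and it assumes the forecast for period t is the plain mean of the k observations immediately before t.

```r
# Quarterly values from the table above (Mar-83 ... Dec-84).
y <- c(239.3, 239.8, 236.1, 232, 224.75, 237.45, 245.4, 251.58)

# k-period simple moving-average forecast: the forecast for period t is the
# mean of the k observations immediately before t (NA where unavailable).
# Assumes length(y) > k.
sma_forecast <- function(y, k) {
  n <- length(y)
  f <- rep(NA_real_, n)
  for (t in (k + 1):n) {
    f[t] <- mean(y[(t - k):(t - 1)])
  }
  f
}

ma3 <- sma_forecast(y, 3)
ma5 <- sma_forecast(y, 5)
print(round(cbind(actual = y, ma3 = ma3, ma5 = ma5), 2))
```

The first k entries are `NA`, matching the "Missing" cells in the table; a longer window gives a smoother but slower-reacting forecast.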
• One can impose weights and use weighted moving averages (WMA). E.g., $\hat{Y}_t = 0.6Y_{t-1} + 0.3Y_{t-2} + 0.1Y_{t-3}$
• How many periods to use is a question; longer lags give a more pronounced smoothing-out effect.
• Peaks and troughs (bottoms) are not predicted.
• Events are being averaged out.
• Since any moving average is serially correlated, any sequence of random numbers could appear to exhibit cyclical fluctuation.
• Exchange rates: forecasts using the SMA(3) model

| Date   | Rate   | Three-Quarter Moving Average | Three-Quarter Forecast |
|--------|--------|------------------------------|------------------------|
| Mar-85 | 257.53 | missing | missing |
| Jun-85 | 250.81 | missing | missing |
| Sep-85 | 238.38 | 248.90  | missing |
| Dec-85 | 207.18 | 232.12  | 248.90  |
| Mar-86 | 187.81 | 211.12  | 232.12  |

SIMPLE EXPONENTIAL SMOOTHING (SES)

• Suppresses short-run fluctuation by smoothing the series
• Weighted average of all previous values, with more weight on recent values
• No trend, no seasonality
• Observed time series $Y_1, Y_2, \ldots, Y_n$
• The equation for the model is

$$S_t = \alpha Y_{t-1} + (1 - \alpha) S_{t-1}$$

where
$\alpha$: the smoothing parameter, $0 \le \alpha \le 1$
$Y_t$: the value of the observation at time t
$S_t$: the value of the smoothed observation at time t.

• The equation can also be written as

$$S_t = S_{t-1} + \alpha \left(Y_{t-1} - S_{t-1}\right)$$

where $(Y_{t-1} - S_{t-1})$ is the forecast error.
• Then, the forecast is

$$S_{t+1} = \alpha Y_t + (1 - \alpha) S_t = S_t + \alpha \left(Y_t - S_t\right)$$

• Why exponential? For the observed time series $Y_1, Y_2, \ldots, Y_n$, $Y_{n+1}$ can be expressed as a weighted sum of previous observations:

$$\hat{Y}_t(1) = c_0 Y_t + c_1 Y_{t-1} + c_2 Y_{t-2} + \cdots$$

where the $c_i$ are the weights.
• To give more weight to recent observations, we can use geometric weights (decreasing by a constant ratio for every unit increase in lag):

$$c_i = \alpha (1 - \alpha)^i; \quad i = 0, 1, \ldots; \quad 0 < \alpha < 1.$$

• Then,

$$\hat{Y}_t(1) = \alpha (1-\alpha)^0 Y_t + \alpha (1-\alpha)^1 Y_{t-1} + \alpha (1-\alpha)^2 Y_{t-2} + \cdots$$

$$\hat{Y}_t(1) = \alpha Y_t + (1 - \alpha)\, \hat{Y}_{t-1}(1)$$

with $S_{t+1} = \hat{Y}_t(1)$ and $S_t = \hat{Y}_{t-1}(1)$.

• Remarks on $\alpha$ (smoothing parameter).
– Choose $\alpha$ between 0 and 1.
– If $\alpha$ = 1, it becomes a naive model; if $\alpha$ is close to 1, more weight is put on recent values. The model fully utilizes forecast errors.
– If $\alpha$ is close to 0, distant values are given weights comparable to recent values. Choose $\alpha$ close to 0 when there are big random variations in the data.
– $\alpha$ is often selected so as to minimize the MSE.
– In empirical work, $0.05 \le \alpha \le 0.3$ is commonly used. Values close to 1 are rarely used.
– Numerical minimization process:
  • Take different $\alpha$ values ranging between 0 and 1.
  • Calculate the 1-step-ahead forecast errors for each $\alpha$.
  • Calculate the MSE for each case.
  • Choose the $\alpha$ which has the minimum MSE.

$$e_t = Y_t - S_t, \qquad \min_{\alpha} \sum_{t=1}^{n} e_t^2$$

• EXAMPLE:

| Time | $Y_t$ | $S_t$ ($\alpha$ = 0.10) | $(Y_t - S_t)^2$ |
|------|-------|-------------------------|-----------------|
| 1 | 5 | - | - |
| 2 | 7 | (0.1)5 + (0.9)5 = 5 | 4 |
| 3 | 6 | (0.1)7 + (0.9)5 = 5.2 | 0.64 |
| 4 | 3 | (0.1)6 + (0.9)5.2 = 5.28 | 5.1984 |
| 5 | 4 | (0.1)3 + (0.9)5.28 = 5.052 | 1.107 |
| TOTAL | | | 10.945 |

$$MSE = \frac{SSE}{n-1} = \frac{10.945}{4} = 2.74$$

• Calculate this for $\alpha$ = 0.2, 0.3, …, 0.9, 1 and compare the MSEs. Choose the $\alpha$ with the minimum MSE.
• Some software automatically chooses the optimal $\alpha$ using a search method or nonlinear optimization techniques.

INITIAL VALUE PROBLEM

1. Setting $S_1$ to $Y_1$ is one method of initialization.
2. Take the average of, say, the first 4 or 5 observations and use this as the initial value.

DOUBLE EXPONENTIAL SMOOTHING OR HOLT’S EXPONENTIAL SMOOTHING

• Introduce a trend factor to the simple exponential smoothing method.
• Trend, but still no seasonality. SES + Trend = DES
• Two equations are now needed to handle the trend:

$$S_t = \alpha Y_{t-1} + (1 - \alpha)\left(S_{t-1} + T_{t-1}\right), \quad 0 \le \alpha \le 1$$

$$T_t = \beta \left(S_t - S_{t-1}\right) + (1 - \beta)\, T_{t-1}, \quad 0 \le \beta \le 1$$

The trend term is the expected increase or decrease per unit time period in the current level (mean level).

• Two parameters: $\alpha$ = smoothing parameter, $\beta$ = trend coefficient
• The h-step-ahead forecast at time t is

$$\hat{Y}_t(h) = S_t + h T_t$$

where $S_t$ is the current level and $T_t$ is the current slope.
• The trend prediction is added in the h-step-ahead forecast.
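The SES grid search and Holt's two updating equations described above can be sketched in base R. This is only an illustration under stated assumptions: the function names (`ses_smooth`, `ses_sse`, `holt_smooth`) are hypothetical, the SES part uses the five observations of the example with $S_1 = Y_1$, and the Holt part uses a short illustrative series with assumed starting values $S_1 = 4$, $T_1 = 1$ and weights $\alpha = 0.6$, $\beta = 0.3$.

```r
# --- Simple exponential smoothing (SES) ---------------------------------
y <- c(5, 7, 6, 3, 4)  # series from the SES example

# Convention of these notes: S_t = alpha * Y_{t-1} + (1 - alpha) * S_{t-1},
# initialised with S_1 = Y_1, so S_t is the one-step forecast of Y_t.
ses_smooth <- function(y, alpha) {
  s <- numeric(length(y))
  s[1] <- y[1]
  for (t in 2:length(y)) {
    s[t] <- alpha * y[t - 1] + (1 - alpha) * s[t - 1]
  }
  s
}

# Sum of squared one-step-ahead errors e_t = Y_t - S_t for t = 2, ..., n.
ses_sse <- function(y, alpha) {
  s <- ses_smooth(y, alpha)
  sum((y[-1] - s[-1])^2)
}

sse_01 <- ses_sse(y, 0.1)            # alpha = 0.10 column of the example
mse_01 <- sse_01 / (length(y) - 1)   # MSE = SSE / (n - 1)

# Grid search: MSE on alpha = 0.1, 0.2, ..., 1; keep the minimiser.
alphas <- seq(0.1, 1, by = 0.1)
mse <- sapply(alphas, function(a) ses_sse(y, a) / (length(y) - 1))
best_alpha <- alphas[which.min(mse)]

# --- Holt's method --------------------------------------------------------
# S_t = alpha * Y_{t-1} + (1 - alpha) * (S_{t-1} + T_{t-1})
# T_t = beta * (S_t - S_{t-1}) + (1 - beta) * T_{t-1}
holt_smooth <- function(y, alpha, beta, s1, t1) {
  n <- length(y)
  s <- numeric(n)
  tr <- numeric(n)
  s[1] <- s1
  tr[1] <- t1
  for (t in 2:n) {
    s[t] <- alpha * y[t - 1] + (1 - alpha) * (s[t - 1] + tr[t - 1])
    tr[t] <- beta * (s[t] - s[t - 1]) + (1 - beta) * tr[t - 1]
  }
  list(level = s, trend = tr)
}

# Illustrative inputs (assumed values, see the lead-in).
h <- holt_smooth(c(3, 5, 4), alpha = 0.6, beta = 0.3, s1 = 4, t1 = 1)

# h-step-ahead forecasts S_t + h * T_t from the last time point, h = 1, 2.
holt_fc <- h$level[3] + (1:2) * h$trend[3]
```

In practice the grid would usually be finer, or replaced by a numerical optimiser such as `optimize()`; the coarse grid here mirrors the manual procedure in the remarks.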
• Now, we have two updating equations. The first smoothing equation adjusts $S_t$ directly for the trend of the previous period, $T_{t-1}$, by adding it to the last smoothed value $S_{t-1}$. This helps to bring $S_t$ to the appropriate base of the current value. The second smoothing equation updates the trend, which is expressed as the difference between the last two smoothed values.
• Initial value problem:
– $S_1$ is set to $Y_1$
– $T_1 = Y_2 - Y_1$ or $(Y_n - Y_1)/(n - 1)$
• $\alpha$ and $\beta$ can be chosen as values between 0.02 < $\alpha$, $\beta$ < 0.2 or by minimizing the MSE as in SES.
• Example (use $\alpha$ = 0.6, $\beta$ = 0.3; $S_1$ = 4, $T_1$ = 1):

| time | $Y_t$ | Holt $S_t$ | Holt $T_t$ |
|------|-------|------------|------------|
| 1 | 3 | 4 | 1 |
| 2 | 5 | 3.8 | 0.64 |
| 3 | 4 | 4.78 | 0.74 |
| 4 | - | 4.78 + 0.74 (forecast) | |
| 5 | - | 4.78 + 2*0.74 (forecast) | |

HOLT-WINTER’S EXPONENTIAL SMOOTHING

• Introduce both trend and seasonality factors.
• Seasonality can be added additively or multiplicatively.
• Model (multiplicative):

$$S_t = \alpha \frac{Y_t}{I_{t-s}} + (1 - \alpha)\left(S_{t-1} + T_{t-1}\right)$$

$$T_t = \beta \left(S_t - S_{t-1}\right) + (1 - \beta)\, T_{t-1}$$

$$I_t = \gamma \frac{Y_t}{S_t} + (1 - \gamma)\, I_{t-s}$$

Here, $(Y_t / S_t)$ captures seasonal effects.
s = number of periods in the seasonal cycle (s = 4 for quarterly data)
Three parameters: $\alpha$ = smoothing parameter, $\beta$ = trend coefficient, $\gamma$ = seasonality coefficient

• h-step-ahead forecast:

$$\hat{Y}_t(h) = \left(S_t + h T_t\right) I_{t+h-s}$$

• The seasonal factor is multiplied in the h-step-ahead forecast.
• $\alpha$, $\beta$ and $\gamma$ can be chosen as values between 0.02 < $\alpha$, $\beta$, $\gamma$ < 0.2 or by minimizing the MSE as in SES.
• To initialize Holt-Winter, we need at least one complete season’s data to determine the initial estimates of $I_{t-s}$.
• Initial values:

$$1.\quad S_0 = \frac{1}{s} \sum_{t=1}^{s} Y_t$$

$$2.\quad T_0 = \frac{1}{s}\left[\frac{Y_{s+1} - Y_1}{s} + \frac{Y_{s+2} - Y_2}{s} + \cdots + \frac{Y_{s+s} - Y_s}{s}\right]$$

or, equivalently,

$$T_0 = \left[\sum_{t=s+1}^{2s} \frac{Y_t}{s} - \sum_{t=1}^{s} \frac{Y_t}{s}\right] \Big/ s$$

• For the seasonal index, say we have 6 years and 4 quarters (s=4).

STEPS TO FOLLOW

STEP 1: Compute the averages of each of 6 years.
The yearly averages are

$$A_n = \frac{1}{4} \sum_{i=1}^{4} Y_{4(n-1)+i}, \quad n = 1, 2, \ldots, 6$$

• STEP 2: Divide the observations by the appropriate yearly mean.

| Year | 1 | 2 | 3 | 4 | 5 | 6 |
|------|---|---|---|---|---|---|
| Q1 | $Y_1/A_1$ | $Y_5/A_2$ | $Y_9/A_3$ | $Y_{13}/A_4$ | $Y_{17}/A_5$ | $Y_{21}/A_6$ |
| Q2 | $Y_2/A_1$ | $Y_6/A_2$ | $Y_{10}/A_3$ | $Y_{14}/A_4$ | $Y_{18}/A_5$ | $Y_{22}/A_6$ |
| Q3 | $Y_3/A_1$ | $Y_7/A_2$ | $Y_{11}/A_3$ | $Y_{15}/A_4$ | $Y_{19}/A_5$ | $Y_{23}/A_6$ |
| Q4 | $Y_4/A_1$ | $Y_8/A_2$ | $Y_{12}/A_3$ | $Y_{16}/A_4$ | $Y_{20}/A_5$ | $Y_{24}/A_6$ |

• STEP 3: The seasonal indices are formed by computing the average of each row, such that

$$I_1 = \left(\frac{Y_1}{A_1} + \frac{Y_5}{A_2} + \frac{Y_9}{A_3} + \frac{Y_{13}}{A_4} + \frac{Y_{17}}{A_5} + \frac{Y_{21}}{A_6}\right) \Big/ 6$$

$$I_2 = \left(\frac{Y_2}{A_1} + \frac{Y_6}{A_2} + \frac{Y_{10}}{A_3} + \frac{Y_{14}}{A_4} + \frac{Y_{18}}{A_5} + \frac{Y_{22}}{A_6}\right) \Big/ 6$$

$$I_3 = \left(\frac{Y_3}{A_1} + \frac{Y_7}{A_2} + \frac{Y_{11}}{A_3} + \frac{Y_{15}}{A_4} + \frac{Y_{19}}{A_5} + \frac{Y_{23}}{A_6}\right) \Big/ 6$$

$$I_4 = \left(\frac{Y_4}{A_1} + \frac{Y_8}{A_2} + \frac{Y_{12}}{A_3} + \frac{Y_{16}}{A_4} + \frac{Y_{20}}{A_5} + \frac{Y_{24}}{A_6}\right) \Big/ 6$$

• Note that if a computer program selects 0 for $\beta$ and $\gamma$, this does not mean that there is no trend or seasonality.
• For Simple Exponential Smoothing, a level weight near zero implies that simple differencing of the time series may be appropriate.
• For Holt Exponential Smoothing, a level weight near zero implies that the smoothed trend is constant and that an ARIMA model with deterministic trend may be a more appropriate model.
• For Winters Method and Seasonal Exponential Smoothing, a seasonal weight near one implies that a nonseasonal model may be more appropriate, and a seasonal weight near zero implies that deterministic seasonal factors may be present.

EXAMPLE

    > HoltWinters(beer)
    Holt-Winters exponential smoothing with trend and additive seasonal component.

    Call:
    HoltWinters(x = beer)

    Smoothing parameters:
     alpha: 0.1884622
     beta : 0.3068298
     gamma: 0.4820179

    Coefficients:
              [,1]
    a   50.4105781
    b    0.1134935
    s1  -2.2048105
    s2   4.3814869
    s3   2.1977679
    s4  -6.5090499
    s5  -1.2416780
    s6   4.5036243
    s7   2.3271515
    s8  -5.6818213
    s9  -2.8012536
    s10  5.2038114
    s11  3.3874876
    s12 -5.6261644

EXAMPLE (Contd.)
    > beer.hw <- HoltWinters(beer)
    > predict(beer.hw, n.ahead=12)
              Jan      Feb      Mar      Apr      May      Jun      Jul      Aug
    1963
    1964 49.73637 55.59516 53.53218 45.63670 48.63077 56.74932 55.04649 46.14634
              Sep      Oct      Nov      Dec
    1963 48.31926 55.01905 52.94883 44.35550

ADDITIVE VS MULTIPLICATIVE SEASONALITY

• Seasonal components can be additive or multiplicative in nature. For example, during the month of December the sales for a particular toy may increase by 1 million dollars every year. Thus, we could add 1 million dollars (over the respective annual average) to our forecasts for every December to account for this seasonal fluctuation. In this case, the seasonality is additive.
• Alternatively, during the month of December the sales for a particular toy may increase by 40%, that is, by a factor of 1.4. Thus, when the sales for the toy are generally weak, the absolute (dollar) increase in sales during December will be relatively small (but the percentage will be constant); if the sales of the toy are strong, the absolute (dollar) increase in sales will be proportionately greater. In this case the sales increase by a certain factor, and the seasonal component is thus multiplicative in nature (i.e., the multiplicative seasonal component in this case would be 1.4).
• In plots of the series, the distinguishing characteristic between these two types of seasonal components is that in the additive case the series shows steady seasonal fluctuations regardless of the overall level of the series; in the multiplicative case, the size of the seasonal fluctuations varies with the overall level of the series.
• Additive model: $\text{Forecast}_t = S_t + I_{t-s}$
• Multiplicative model: $\text{Forecast}_t = S_t \times I_{t-s}$

Exponential Smoothing Models

1. No trend and additive seasonal variability (1,0)
2. Additive seasonal variability with an additive trend (1,1)
3. Multiplicative seasonal variability with an additive trend (2,1)
4. Multiplicative seasonal variability with a multiplicative trend (2,2)
5. Dampened trend with additive seasonal variability (1,1)
6. Multiplicative seasonal variability and dampened trend (2,2)

• Select the type of model to fit based on the presence of
– Trend: additive or multiplicative, dampened or not
– Seasonal variability: additive or multiplicative

OTHER METHODS

(i) Adaptive-response smoothing: choose $\alpha$ from the data using the smoothed and absolute forecast errors
(ii) Additive Winter’s models: the seasonality equation is modified
(iii) Gompertz curve: progression of new products
(iv) Logistic curve: progression of new products (also with a limit, L)
(v) Bass model

EXPONENTIAL SMOOTHING IN R

General notation: ETS(Error,Trend,Seasonal), also read as ExponenTial Smoothing

ETS(A,N,N): Simple exponential smoothing with additive errors
ETS(A,A,N): Holt's linear method with additive errors
ETS(A,A,A): Additive Holt-Winters' method with additive errors

From Hyndman et al. (2008):
• Apply each of 30 methods that are appropriate to the data. Optimize parameters and initial values using MLE (or some other method).
• Select the best method using the AIC: AIC = -2 log(Likelihood) + 2p, where p = number of parameters.
• Produce forecasts using the best method.
• Obtain prediction intervals using the underlying state space model (this part is done by R automatically).

***http://robjhyndman.com/research/Rtimeseries_handout.pdf

ets() function
• Automatically chooses a model by default using the AIC
• Can handle any combination of trend, seasonality and damping
• Produces prediction intervals for every model
• Ensures the parameters are admissible (equivalent to invertible)
• Produces an object of class ets.
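The additive and multiplicative seasonal forms discussed earlier can also be compared using only base R's `HoltWinters()`, the function used in the earlier example. This is a rough sketch, not part of the original notes: it assumes the built-in `AirPassengers` series as a stand-in, since the `beer` series may not be available without add-on packages.

```r
# AirPassengers ships with base R (datasets package); it stands in here for
# the beer series used in the notes.
hw_add  <- HoltWinters(AirPassengers, seasonal = "additive")
hw_mult <- HoltWinters(AirPassengers, seasonal = "multiplicative")

# In-sample sum of squared one-step-ahead errors for each seasonal form.
sse <- c(additive = hw_add$SSE, multiplicative = hw_mult$SSE)
print(sse)

# Twelve-month-ahead forecasts from each fit.
f_add  <- predict(hw_add,  n.ahead = 12)
f_mult <- predict(hw_mult, n.ahead = 12)
```

Comparing the two SSE values gives a quick, informal indication of which seasonal form tracks the series better; it is not a substitute for the AIC-based selection that `ets()` performs.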
    > library(tseries)
    > library(forecast)
    > library(expsmooth)
    > fit=ets(beer)    # R automatically finds the best model.
    > fit2 <- ets(beer,model="MNM",damped=FALSE)    # We are defining the model as MNM.
    > fcast1 <- forecast(fit, h=24)
    > fcast2 <- forecast(fit2, h=24)

    > fit
    ETS(A,Ad,A)

    Call:
     ets(y = beer)

      Smoothing parameters:
        alpha = 0.0739
        beta  = 0.0739
        gamma = 0.213
        phi   = 0.9053

      Initial states:
        l = 38.2918
        b = 0.6085
        s=-5.9572 3.6056 5.1923 -2.8407

      sigma:  1.2714

         AIC     AICc      BIC
    478.1877 480.3828 500.8838

    > fit2
    ETS(M,N,M)

    Call:
     ets(y = beer, model = "MNM", damped = FALSE)

      Smoothing parameters:
        alpha = 0.3689
        gamma = 0.3087

      Initial states:
        l = 39.7259
        s=0.8789 1.0928 1.108 0.9203

      sigma:  0.0296

         AIC     AICc      BIC
    490.9042 491.8924 506.0349

• GOODNESS-OF-FIT

    > accuracy(fit)
           ME      RMSE       MAE       MPE      MAPE      MASE
    0.1007482 1.2714088 1.0495752 0.1916268 2.2306151 0.1845166
    > accuracy(fit2)
           ME      RMSE       MAE       MPE      MAPE      MASE
    0.2596092 1.3810629 1.1146970 0.5444713 2.3416001 0.1959651

For each measure, values closer to zero are better; here the automatically selected model (fit) is better on every measure.

    > plot(forecast(fit,level=c(50,80,95)))
    > plot(forecast(fit2,level=c(50,80,95)))
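The first five measures in the goodness-of-fit output above follow standard definitions and can be computed by hand. The sketch below is illustrative only: the function name `accuracy_measures` and the data are hypothetical, errors are assumed to be defined as actual minus fitted, and MASE is omitted because it needs an additional scaling step.

```r
# Hypothetical actual values and one-step fitted values (illustration only).
actual   <- c(50, 52, 47, 55, 53)
fit_vals <- c(49, 53, 48, 54, 51)

accuracy_measures <- function(actual, fit_vals) {
  e <- actual - fit_vals        # errors
  p <- 100 * e / actual         # percentage errors
  c(ME   = mean(e),             # mean error
    RMSE = sqrt(mean(e^2)),     # root mean squared error
    MAE  = mean(abs(e)),        # mean absolute error
    MPE  = mean(p),             # mean percentage error
    MAPE = mean(abs(p)))        # mean absolute percentage error
}

m <- accuracy_measures(actual, fit_vals)
print(round(m, 4))
```

ME and MPE can be near zero even when individual errors are large, because positive and negative errors cancel; RMSE, MAE and MAPE do not have this problem.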