Context Quantization - McMaster University


Context-Based Adaptive Entropy Coding

Xiaolin Wu

McMaster University

Hamilton, Ontario, Canada

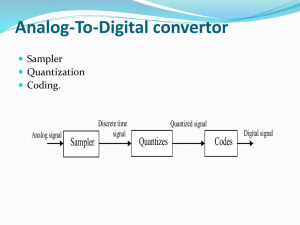

Data Compression System

a block of n samples → Transform → transform coefficients → Quantization → quantized coefficients → Entropy Coding → bit patterns for the Q-indices

Entropy coding is lossless compression of the quantized data: it removes redundancy and thereby achieves compression.

Entropy Coding Techniques

Huffman code

Golomb-Rice code

Arithmetic code

Optimal in the sense that it can approach the source entropy

Easily adapts to non-stationary source statistics via context modeling (context selection and conditional probability estimation)

Context modeling governs the performance of arithmetic coding.

Entropy (Shannon 1948)

Consider a random variable $X$ with

source alphabet: $\{x_0, x_1, \ldots, x_{N-1}\}$

probability mass function: $p_X(x_i) = \Pr(X = x_i)$

The self-information of $x_i$ is $i(x_i) = -\log P(x_i)$, measured in bits if the log is base 2.

An event of lower probability carries more information.

The self-entropy is the weighted average of the self-information:

$$H(X) = -\sum_{i} P(x_i) \log P(x_i)$$
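A minimal Python sketch of the entropy formula, assuming base-2 logarithms and, for `empirical_entropy`, the plug-in estimate that takes relative frequencies as probabilities; both function names are illustrative.

```python
import math
from collections import Counter

def entropy(probs):
    """Entropy in bits of a pmf given as a sequence of probabilities."""
    # Terms with P(x_i) = 0 contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)

def empirical_entropy(symbols):
    """Plug-in entropy estimate from the symbol frequencies of a sequence."""
    counts = Counter(symbols)
    n = len(symbols)
    return entropy([c / n for c in counts.values()])

print(entropy([0.5, 0.5]))        # 1.0: a fair coin carries one bit
print(entropy([0.25] * 4))        # 2.0: four equiprobable symbols
print(empirical_entropy("aab"))   # frequencies 2/3 and 1/3
```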

Conditional Entropy

Consider two random variables $X$ and $C$

Alphabet of $X$: $\{x_0, x_1, \ldots, x_{N-1}\}$

Alphabet of $C$: $\{c_0, c_1, \ldots, c_{M-1}\}$

The conditional self-information of $x_i$ is $i(x_i \mid c_m) = -\log P(x_i \mid c_m)$

The conditional entropy is the average value of the conditional self-information:

$$H(X \mid C) = -\sum_{i=0}^{N-1} \sum_{m=0}^{M-1} P(x_i \mid c_m)\, P(c_m) \log_2 P(x_i \mid c_m)$$

Entropy and Conditional Entropy

The conditional entropy $H(X \mid C)$ can be interpreted as the amount of uncertainty remaining about $X$, given that we know the random variable $C$.

The additional knowledge of $C$ cannot increase the uncertainty about $X$:

$$H(X \mid C) \le H(X)$$

with equality if and only if $X$ and $C$ are independent.
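To make $H(X \mid C) \le H(X)$ concrete, the sketch below estimates both quantities from a list of hypothetical $(x, c)$ observations using plug-in (relative-frequency) probabilities. The toy data and the helper names are made up for illustration.

```python
import math
from collections import Counter, defaultdict

def conditional_entropy(pairs):
    """Plug-in estimate of H(X|C) in bits from observed (x, c) pairs."""
    n = len(pairs)
    by_context = defaultdict(Counter)      # c -> counts of x observed with c
    for x, c in pairs:
        by_context[c][x] += 1
    h = 0.0
    for counts in by_context.values():
        n_c = sum(counts.values())
        p_c = n_c / n                      # P(c_m)
        for k in counts.values():
            p_x_given_c = k / n_c          # P(x_i | c_m)
            h -= p_x_given_c * p_c * math.log2(p_x_given_c)
    return h

def entropy_of(xs):
    """Plug-in estimate of H(X) in bits."""
    n = len(xs)
    return -sum(k / n * math.log2(k / n) for k in Counter(xs).values())

# X usually equals C, so knowing C removes part of the uncertainty about X.
pairs = [(0, 0), (0, 0), (1, 1), (1, 1), (1, 0), (0, 1), (0, 0), (1, 1)]
xs = [x for x, _ in pairs]
print(entropy_of(xs), conditional_entropy(pairs))
```

For this toy data the estimate of $H(X)$ is 1 bit, while conditioning on $C$ lowers it to about 0.81 bits.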

Context Based Entropy Coders

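Consider a sequence of symbols $S$. For each symbol to code, the preceding symbols form the context; with three binary symbols the context space is $C = \{C_0, \ldots, C_7\}$:

0 0 1 0 0 1 1 0 1 1 1 0 1 0 0 1 0 0 0 0 1 0 0 1 1 1 0 1 0 1 1 0 1 1 0 1 1 0 ...

$C_0 = 000$, $C_1 = 001$, ..., $C_7 = 111$

Each context $C_i$ drives its own entropy coder (EC), which codes at rate $H(C_i)$, the entropy of the symbols occurring in context $C_i$. The overall rate is

$$R = \sum_{i} P(C_i)\, H(C_i)$$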
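A sketch of the rate $R = \sum_i P(C_i) H(C_i)$ for the binary sequence on the slide, assuming that the context of each symbol is its three predecessors (so $C_0 = 000$ through $C_7 = 111$) and using plug-in entropy estimates. `context_rate` and `entropy_bits` are hypothetical helpers.

```python
import math
from collections import Counter, defaultdict

def entropy_bits(counts):
    """Plug-in entropy (bits) of a Counter of symbol occurrences."""
    n = sum(counts.values())
    return -sum(k / n * math.log2(k / n) for k in counts.values() if k)

def context_rate(bits, order=3):
    """R = sum_i P(C_i) H(C_i), where the context of each symbol is the tuple
    of its `order` predecessors; the first `order` symbols are skipped."""
    per_context = defaultdict(Counter)
    for t in range(order, len(bits)):
        per_context[tuple(bits[t - order:t])][bits[t]] += 1
    n = len(bits) - order                  # number of coded symbols
    return sum(sum(c.values()) / n * entropy_bits(c)
               for c in per_context.values())

seq = [int(b) for b in ("0 0 1 0 0 1 1 0 1 1 1 0 1 0 0 1 0 0 0 0 "
                        "1 0 0 1 1 1 0 1 0 1 1 0 1 1 0 1 1 0").split()]
print(context_rate(seq), entropy_bits(Counter(seq[3:])))
```

Because both estimates come from the same samples, the context rate cannot exceed the order-0 entropy of the coded symbols. With only 35 coded symbols spread over 8 contexts, though, the per-context estimates are themselves unreliable.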

Context model – estimated conditional probability

Variable-length coding schemes need an estimate of the probability of each symbol: a model

The model can be

Static: a fixed global model for all inputs (e.g., English text)

Semi-adaptive: computed for the specific data being coded and transmitted as side information (e.g., C programs)

Adaptive: constructed on the fly (any source!)
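A minimal sketch of an adaptive model, assuming a simple frequency-count estimator with every count initialized to one (the Laplace estimator). The slides do not prescribe a particular estimator, and the class and function names are hypothetical.

```python
import math

class AdaptiveModel:
    """Frequency-count model built on the fly.  Encoder and decoder apply
    identical updates, so the model never has to be transmitted."""
    def __init__(self, alphabet_size):
        self.counts = [1] * alphabet_size   # every symbol starts with count 1
        self.total = alphabet_size

    def prob(self, symbol):
        return self.counts[symbol] / self.total

    def update(self, symbol):
        self.counts[symbol] += 1
        self.total += 1

def adaptive_code_length(symbols, alphabet_size):
    """Ideal code length (bits) an arithmetic coder would spend with this model."""
    model = AdaptiveModel(alphabet_size)
    bits = 0.0
    for s in symbols:
        bits -= math.log2(model.prob(s))   # cost of s under the current estimate
        model.update(s)                    # then learn from it
    return bits
```

For example, `adaptive_code_length([0, 0], 2)` is $\log_2 3 \approx 1.585$ bits: the first 0 costs one bit and the second $\log_2(3/2)$ bits.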

Adaptive vs. Semi-adaptive

Advantages of semi-adaptive

Simple decoder

Disadvantages of semi-adaptive

Overhead of specifying the model can be high

Two passes over the data are required

Advantages of adaptive

One-pass, universal, as good if not better

Disadvantages of adaptive

Decoder as complex as encoder

Errors propagate

Adaptation with Arithmetic and Huffman Coding

Huffman coding: manipulate the Huffman tree on the fly. Efficient algorithms are known, but they nevertheless remain complex.

Arithmetic coding: update the cumulative probability distribution table. Efficient data structures and algorithms are known; the rest stays essentially the same.

The main advantage of arithmetic coding over Huffman coding is the ease with which it can be combined with adaptive modeling techniques.
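The slide notes that efficient structures for maintaining the cumulative distribution are known. One such structure is a Fenwick (binary indexed) tree, sketched below under the assumption of integer symbol counts that all start at one; this is an illustrative choice, not necessarily the one intended on the slide.

```python
class CumulativeFrequencies:
    """Fenwick tree over symbol counts: the increment after coding a symbol
    and the cumulative-count query an arithmetic coder needs both take
    O(log N) time for an alphabet of N symbols."""
    def __init__(self, alphabet_size):
        self.n = alphabet_size
        self.tree = [0] * (alphabet_size + 1)
        for s in range(alphabet_size):
            self.add(s, 1)                 # start every count at 1

    def add(self, symbol, delta):
        i = symbol + 1                     # the tree is 1-indexed
        while i <= self.n:
            self.tree[i] += delta
            i += i & -i

    def cum(self, symbol):
        """Sum of the counts of symbols 0 .. symbol-1."""
        i, total = symbol, 0
        while i > 0:
            total += self.tree[i]
            i -= i & -i
        return total

    def interval(self, symbol):
        """(low, high, total): the coder uses [low/total, high/total)."""
        return self.cum(symbol), self.cum(symbol + 1), self.cum(self.n)

freq = CumulativeFrequencies(4)
freq.add(2, 3)              # symbol 2 has been seen three more times
print(freq.interval(2))     # (2, 6, 7)
```

After the update the counts are (1, 1, 4, 1), so symbol 2 owns the interval [2/7, 6/7).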

Context models

If the source is not i.i.d., there is dependence, often complex, between the symbols in the sequence

In most practical situations, the pdf of a symbol depends on the values of neighboring symbols, i.e., its context

Hence we condition the encoding of the current symbol on its context

How should contexts be selected? A rigorous answer is beyond our scope

Practical schemes use a fixed neighborhood

Context dilution problem

The minimum code length of the sequence $x_1 x_2 \cdots x_n$ achievable by arithmetic coding, if $P(x_i \mid x_{i-1} x_{i-2} \cdots x_1)$ is known, is

$$-\sum_{i=1}^{n} \log_2 P(x_i \mid x_{i-1} x_{i-2} \cdots x_1)$$

The difficulty of estimating $P(x_i \mid x_{i-1} x_{i-2} \cdots x_1)$ from insufficient sample statistics prevents the use of high-order Markov models.
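A small experiment illustrates context dilution: an adaptive coder that keeps separate Laplace counts for every order-$k$ context is run on a synthetic first-order Markov bit sequence with repeat probability 0.9. The source, the seed, and the helper name are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict

def order_k_code_length(bits, k):
    """Adaptive code length (bits) with separate Laplace counts for every
    context made of the k previous bits (shorter contexts at the start)."""
    counts = defaultdict(lambda: [1, 1])     # context -> [zeros + 1, ones + 1]
    total = 0.0
    for t, b in enumerate(bits):
        c = counts[tuple(bits[max(0, t - k):t])]
        total -= math.log2(c[b] / (c[0] + c[1]))
        c[b] += 1
    return total

# Synthetic first-order Markov source: each bit repeats the previous one
# with probability 0.9.
rng = random.Random(1)
bits = [0]
for _ in range(999):
    bits.append(bits[-1] if rng.random() < 0.9 else 1 - bits[-1])

for k in (0, 1, 4, 8, 12):
    print(k, round(order_k_code_length(bits, k)))
```

Order 1 matches the source and should beat order 0 by a wide margin. As $k$ grows, the same 1000 samples are spread over up to $2^k$ contexts, and one would expect the learning cost to push the code length back up.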

Estimating probabilities in different contexts

Two approaches

Maintain symbol occurrence counts within each context

The number of contexts needs to be modest to avoid context dilution

Assume the pdf shape within each context is the same (e.g., Laplacian) and only the parameters (e.g., mean and variance) differ

Estimation may not be as accurate, but a much larger number of contexts can be used
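A sketch of the second approach, assuming a Laplacian shape discretized onto integer symbols by integrating over unit-width bins: each context then needs only a mean and a variance rather than a full histogram. The variance floor and the bin convention are illustrative assumptions.

```python
import math

def laplacian_pmf(mean, variance, levels):
    """Discretize a Laplacian with the given mean and variance onto the
    integer symbols in `levels`, renormalized to sum to one."""
    b = math.sqrt(variance / 2.0)            # Laplacian scale: variance = 2 b^2
    def cdf(x):
        if x < mean:
            return 0.5 * math.exp((x - mean) / b)
        return 1.0 - 0.5 * math.exp(-(x - mean) / b)
    # Probability of symbol v = mass of the interval [v - 0.5, v + 0.5).
    masses = [cdf(v + 0.5) - cdf(v - 0.5) for v in levels]
    total = sum(masses)
    return [m / total for m in masses]

def fit_context(samples):
    """Only two numbers per context: the sample mean and variance."""
    n = len(samples)
    mean = sum(samples) / n
    # Floor the variance so a context with identical samples keeps b > 0.
    var = max(sum((s - mean) ** 2 for s in samples) / n, 1e-3)
    return mean, var

mean, var = fit_context([0, 1, -1, 0, 2, 0, -1])
pmf = laplacian_pmf(mean, var, range(-4, 5))
```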

Context Explosion

Consider an image quantized to 16 levels

A “causal” context of four neighboring pixels gives $16^4 = 65{,}536$ contexts!

Not enough data to “learn” the histograms: the context dilution problem

Solution: “quantize” the context space
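To see where 65,536 comes from, the sketch below packs four causal neighbors of a 16-level pixel into one mixed-radix context index. The particular neighbors (W, NW, N, NE) and the zero padding at the image border are assumptions; the slide does not specify the template.

```python
def causal_context(img, r, c, levels=16):
    """Context index from the four causal neighbours W, NW, N, NE, which are
    already coded in raster order.  Out-of-image neighbours are read as 0."""
    def px(i, j):
        if 0 <= i < len(img) and 0 <= j < len(img[0]):
            return img[i][j]
        return 0
    neighbours = (px(r, c - 1), px(r - 1, c - 1), px(r - 1, c), px(r - 1, c + 1))
    index = 0
    for v in neighbours:                     # mixed-radix number in base `levels`
        index = index * levels + v
    return index

img = [[3, 15, 0],
       [7, 2, 9]]
print(causal_context(img, 1, 1))             # W=7, NW=3, N=15, NE=0 -> 29680
```

With all four neighbors at level 15 the index reaches $16^4 - 1 = 65{,}535$, the largest of the 65,536 contexts.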

Current Solutions

Non-Binary Source

JPEG: simple entropy coding without context

J2K (JPEG 2000): ad hoc context quantization strategy

Binary Source

JBIG: uses suboptimal context templates of modest size

Overview

Introduction

Context Quantization for Entropy Coding of Non-Binary Source

Context Quantization for Entropy Coding of Binary Source

Context Quantization

Context Quantizer Description

Minimum Description Length Context Quantizer Design

Image Dependent Context Quantizer Design with Efficient Side Info.

Context Quantization for Minimum Adaptive Code Length

Context-Based Classification and Quantization

Conclusions and Future Work


Context Quantization

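Pipeline: $S$ → Define context (e.g., $C_1$, $C_{256}$) → Quantize context ($C_i \mapsto G_m$) → Estimate prob. $p(S \mid G_m)$ → Entropy coders → Bits

Entropy coders: group $G_1$ → adaptive arithmetic coder 1, ..., group $G_N$ → adaptive arithmetic coder N

Group the contexts with similar histograms (in the figure, $C_{25}$ and $C_{1000}$ fall into one group, and $C_{3021}$ and $C_{4021}$ into another)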

Distortion Measure

Kullback-Leibler distance between pmf histograms

Always non-negative, and zero only when the two pmfs are identical

$$d(T, R) = H(T \,\|\, R) = \sum_k T_k \log \frac{T_k}{R_k}$$

[Figure: bar charts of the two pmfs, probability vs. symbol value k = 1, ..., 4]

$T = (0.3, 0.2, 0.1, 0.4)$, $R = (0.5, 0.1, 0.2, 0.2)$

$d(T, R) = 0.28$ bits/sym

$d(T, T) = d(R, R) = 0$ bits/sym
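The numbers on the slide can be checked with a few lines of Python. `kl_bits` is an illustrative name, and it assumes $R_k > 0$ wherever $T_k > 0$ (otherwise the distance is infinite).

```python
import math

def kl_bits(t, r):
    """d(T, R) = sum_k T_k log2(T_k / R_k); terms with T_k = 0 vanish."""
    return sum(tk * math.log2(tk / rk) for tk, rk in zip(t, r) if tk > 0)

T = (0.3, 0.2, 0.1, 0.4)
R = (0.5, 0.1, 0.2, 0.2)
print(round(kl_bits(T, R), 2))        # 0.28
print(kl_bits(T, T), kl_bits(R, R))   # 0.0 0.0
```

The KL distance is not symmetric: here $d(R, T) \approx 0.27$ bits/sym, slightly different from $d(T, R)$.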

Context Quantization

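GLA (k-means) algorithm

Start from the context histograms

Nearest neighbor: assign each context histogram to the closest centroid histogram, forming the Voronoi regions

Centroid computation: recompute the centroid histogram of each Voronoi region

Repeat until convergence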
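A compact sketch of the GLA loop under the KL distance. It assumes that each context is given by its raw symbol counts and that the caller supplies strictly positive initial centroid pmfs; the tiny floor added to pooled counts is an assumption that keeps every centroid probability positive. Pooling counts is the natural centroid rule here, because the count-weighted sum of $d(T_c, R)$ over a group is minimized by the pooled pmf.

```python
import math

def kl_bits(t, r):
    return sum(tk * math.log2(tk / rk) for tk, rk in zip(t, r) if tk > 0)

def normalize(counts):
    s = sum(counts)
    return [c / s for c in counts]

def gla(histograms, initial_centroids, max_iters=50):
    """Group context histograms (raw symbol counts per context) with the
    generalized Lloyd algorithm under the KL distance.  Returns the group
    index of every context and the centroid pmfs."""
    pmfs = [normalize(h) for h in histograms]
    centroids = [list(c) for c in initial_centroids]
    assign = None
    for _ in range(max_iters):
        # Nearest-neighbour step: each context joins the closest centroid.
        new_assign = [min(range(len(centroids)),
                          key=lambda m: kl_bits(p, centroids[m]))
                      for p in pmfs]
        if new_assign == assign:
            break                                  # converged
        assign = new_assign
        # Centroid step: pool the counts of all contexts in each group.
        for m in range(len(centroids)):
            members = [h for h, a in zip(histograms, assign) if a == m]
            if members:                            # empty group keeps its centroid
                pooled = [sum(col) + 1e-9 for col in zip(*members)]
                centroids[m] = normalize(pooled)
    return assign, centroids

hists = [[9, 1], [8, 2], [1, 9], [2, 8]]
assign, cents = gla(hists, [[0.6, 0.4], [0.4, 0.6]])
```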

Experimental Results

1st experiment: source

Use a controlled source with memory: a first-order Gauss-Markov process

$$x[n] = r\,x[n-1] + w[n]$$

Generate $10^7$ samples with $r = 0.9$

Quantize into 32 levels

Flip the sign of every sample with probability 0.5
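A sketch of the controlled source. Several details are assumed because the slide leaves them open: $w[n]$ is unit-variance Gaussian, the quantizer is a uniform mid-tread quantizer with a hypothetical step of 1.0 clipped to 32 levels, and the sign flip is applied to the output samples rather than to the recursion state.

```python
import random

def gauss_markov_source(n, r=0.9, levels=32, step=1.0, seed=0):
    """Quantized, randomly sign-flipped first-order Gauss-Markov samples,
    returned as indices 0..levels-1 (index levels // 2 means zero)."""
    rng = random.Random(seed)
    half = levels // 2
    x, out = 0.0, []
    for _ in range(n):
        x = r * x + rng.gauss(0.0, 1.0)                  # x[n] = r x[n-1] + w[n]
        y = -x if rng.random() < 0.5 else x              # flip the output sign only
        q = max(-half, min(half - 1, round(y / step)))   # uniform quantizer, clipped
        out.append(q + half)
    return out

symbols = gauss_markov_source(10_000)   # the slides use 10**7 samples
```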

1st experiment setup

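Context space: the samples $L_3, L_2, L_1$ preceding the current symbol $S$

Context size = 1024

All the dependence is in $L_1$, the immediately preceding sample

How many groups do we expect?

At most 16 are needed, since the random sign flip leaves only the magnitude of $L_1$ informative

All group histograms will be bimodal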

1st experiment: results

Vary M, the number of groups

| M | Rate [bits/sym] |
|---|---|
| 1 | 4.062 |
| 2 | 3.709 |
| 4 | 3.563 |
| 8 | 3.510 |
| 16 | 3.504 |
| 774 (number of non-empty contexts) | 3.493 |

Only 8 groups are needed!

[Figure: histograms for M = 16; probability vs. symbol value 1 to 31 (16 corresponds to zero)]

2nd experiment: source

A 512×512 “natural image”: barb

Wavelet transform, 9-7 filters, 3 levels

Each subband scalar quantized to 24 levels

Test a sub-image: $S_0$

2nd experiment: setup

Context space: neighbors $L_1, L_2, L_3, L_4$ of the current symbol $S$

Context size = 313,776

Group histogram structure? Unknown

2nd experiment: results

[Figure: subband $S_0$ (low pass); estimated entropy and true rate in bits/pixel vs. number of groups (1, 2, 4, 8, 16, 32, 64, 128, 256, 3020)]

We need our method to quantize the context space!


Quantizer description

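Pipeline: $S$ → Define context ($C_i$, e.g., $C_1$, $C_{25}$, $C_{1000}$, $C_{1021}$, $C_{1197}$) → Quantize context ($C_i \mapsto G_m$) → Estimate prob. $p(S \mid G_m)$ → Entropy coders (group $G_1$ → adaptive arithmetic coder 1, ..., $G_N$ → adaptive arithmetic coder N) → DataBits

Describe context book: the mapping from contexts to groups (e.g., $G_1 = \{C_{25}, C_{1000}\}$, $G_N = \{C_{1021}, C_{1197}\}$) is sent as SideBits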

Coarse Context Quantization

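[Figure: input context components $X_0$, $X_1$, $X_2$; full-resolution quantizer vs. low-resolution context quantizers]

Number of contexts: $9 \times 9 \times 9 = 729$ at full resolution vs. $6 \times 5 \times 3 = 90$ with the low-resolution context quantizers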

State Sequence

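State sequence: …8 6 5 2 4 3 5 8 6 5 4 1 2 4 3 1 8 6 5 2 2 7…

Group labels along the sequence: … $G_3$ … $G_3$ …

Context book: $G_1 = \{C_{25}, C_{1000}\}$, …, $G_N = \{C_{1021}, C_{1197}\}$

Context indices $C_{1021}, C_{25}, C_{1000}, C_{1197}$ map to group indices $G_N, G_1, G_1, G_N$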

Experimental Results

Experiment: source

A 512×512 “natural image”: barb

Wavelet transform, 9-7 filters, 3 levels

Each subband scalar quantized to 24 levels

Experimental Results

[Figure: side rate (bpp) of the Direct vs. CM context book description for subbands HL1, LH1, HH1, HL2, LH2, HH2, HL3, LH3, HH3]

Experimental Results

[Figure: side information rate and data rate (bit rate, bpp) for No Context Grouping, Direct, CM, and CCQ + CM; panels: subband LH2 and subband LH3]


MDL-Based Context Quantizer

Pipeline: $S$ → Define context ($C_i$) → Quantize context ($C_i \mapsto G_m$) → Estimate prob. $p(S \mid G_m)$ → Entropy coders (adaptive arithmetic coders 1, ..., N, one per group) → DataBits

Describe context book (the mapping from contexts $C_i$ to groups $G_m$) → SideBits

Distortion Measure

[Figure: binary pmfs A, B, C plotted as Prob. vs. symbol value (0, 1), and their positions on the axis $Z = P(0)$ from 0 to 1.0]

$A = (0.2, 0.8)$, $B = (0.4, 0.6)$, $C = (0.9, 0.1)$

$d(A, B) < d(A, C)$

A binary pmf is determined by the single number $Z = P(0)$, so all context histograms lie on the interval [0, 1], and the distance from A grows as one moves away from A along this axis

Context Quantization

Vector quantization (local optima): context histograms are grouped into Voronoi regions around centroid histograms

Scalar quantization (global optima): thresholds $q_1, q_2, \ldots, q_M$ partition the axis $Z \in [0, 1]$ into intervals

$$\min_{q_1, q_2, \ldots, q_M} (\text{code length})$$

Proposed Method

Context mapping description

Use the training set to obtain the pmf of “common” contexts

Apply the classification map method to “rare” contexts

Minimum Description Length Context Quantizer

Minimize the objective function

$$\min_{q, M} \sum_{m=1}^{M} N\{Z \in (q_{m-1}, q_m]\}\, H\big(X \mid Z \in (q_{m-1}, q_m]\big) \;-\; \sum_{m=1}^{M} n\{Z \in (q_{m-1}, q_m]\} \log \frac{n\{Z \in (q_{m-1}, q_m]\}}{n\{Z \in [0, 1]\}}$$

The first sum is the data code length; the second is the side-information code length

A dynamic programming method is applied to reach the global minimum
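A sketch of the dynamic program for a binary source. Assumptions not fixed by the slide: each context is summarized by its (zeros, ones) training counts, $Z = P(0 \mid c)$ is estimated from those counts, $N\{\cdot\}$ counts the coded symbols in a cell, and $n\{\cdot\}$ counts the contexts mapped to it. The function name and interface are hypothetical.

```python
import math

def h2(p):
    """Binary entropy in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def mdl_context_quantizer(contexts, max_groups, side_info=True):
    """contexts: list of (zeros, ones) counts, one entry per context.
    Returns (cost_bits, cuts): cuts[m] is the end index (exclusive) of cell m
    in the contexts sorted by Z = P(0 | c)."""
    ctx = sorted((c for c in contexts if sum(c) > 0),
                 key=lambda c: c[0] / (c[0] + c[1]))
    K = len(ctx)                                 # also n{Z in [0, 1]}
    z_pre, o_pre = [0], [0]                      # prefix sums for O(1) pooling
    for zeros, ones in ctx:
        z_pre.append(z_pre[-1] + zeros)
        o_pre.append(o_pre[-1] + ones)

    def cell_cost(i, j):
        zeros, ones = z_pre[j] - z_pre[i], o_pre[j] - o_pre[i]
        big_n = zeros + ones                     # N{cell}: symbols coded
        cost = big_n * h2(ones / big_n)          # data code length
        if side_info:
            n = j - i                            # n{cell}: contexts in the cell
            cost -= n * math.log2(n / K)         # side-information code length
        return cost

    INF = float("inf")
    # best[m][j]: minimal cost of splitting ctx[:j] into m non-empty cells.
    best = [[INF] * (K + 1) for _ in range(max_groups + 1)]
    back = [[0] * (K + 1) for _ in range(max_groups + 1)]
    best[0][0] = 0.0
    for m in range(1, max_groups + 1):
        for j in range(m, K + 1):
            for i in range(m - 1, j):
                c = best[m - 1][i] + cell_cost(i, j)
                if c < best[m][j]:
                    best[m][j], back[m][j] = c, i
    # Also minimize over M, the number of cells.
    m_best = min(range(1, min(max_groups, K) + 1), key=lambda m: best[m][K])
    cuts, j = [], K
    for m in range(m_best, 0, -1):
        cuts.append(j)
        j = back[m][j]
    return best[m_best][K], sorted(cuts)

ctxs = [(90, 10), (85, 15), (10, 90), (12, 88), (50, 50)]
cost, cuts = mdl_context_quantizer(ctxs, 3)
```

The search costs $O(MK^2)$ cell evaluations for $K$ contexts. With `side_info=False` it minimizes the data code length alone, the objective used for input-dependent design.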

Contributions

A new context quantizer design approach based on the principle of minimum description length

An input-dependent context quantizer design algorithm with efficient handling of rare contexts

Context quantization for minimum adaptive code length

A novel technique to handle the mismatch between training statistics and source statistics

Experimental Results

Bit rates (bpp) of dithering halftone images

Bit rates (bpp) of error diffusion halftone images



Motivation

The MDL-based context quantizer is designed mainly from training-set statistics.

If the statistics of the input and the training set mismatch, the optimality of the predesigned context quantizer can be compromised.

Input-dependent context quantization

Context Quantizer Design

Raster scan the input image to obtain the conditional probabilities $P_{x\mid c}(1 \mid c)$ and the number of occurrences $N(c)$ of each context instance $c$

Minimize the objective function by dynamic programming

$$\min_{q, M} \sum_{m=1}^{M} N\{Z \in (q_{m-1}, q_m]\}\, H\big(X \mid Z \in (q_{m-1}, q_m]\big)$$

The reproduction pmfs $P_{x\mid Q(c)}(1 \mid m)$, $m = 0, \ldots, M-1$, are sent as side information
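A sketch of the raster scan for a binary image, assuming a hypothetical four-pixel causal template (W, NW, N, NE) with out-of-image pixels read as 0. It returns, for every context that occurs, the pair $(N(c), P_{x\mid c}(1 \mid c))$ that the design step consumes.

```python
from collections import defaultdict

# Hypothetical causal template as (row, column) offsets: W, NW, N, NE.
TEMPLATE = [(0, -1), (-1, -1), (-1, 0), (-1, 1)]

def context_statistics(img):
    """Raster-scan a binary image; return {c: (N(c), P(1 | c))}."""
    rows, cols = len(img), len(img[0])
    n = defaultdict(int)
    ones = defaultdict(int)
    for r in range(rows):
        for col in range(cols):
            c = 0
            for dr, dc in TEMPLATE:
                rr, cc = r + dr, col + dc
                bit = img[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0
                c = (c << 1) | bit           # pack the template bits into an int
            n[c] += 1
            ones[c] += img[r][col]
    return {c: (n[c], ones[c] / n[c]) for c in n}

stats = context_statistics([[0, 1],
                            [1, 1]])
```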

Handling of Rare Context Instances

Context template definition

Estimation of conditional probabilities from the training set

Rare contexts: estimated using a context of decreased size

These estimates serve only as initial values and are updated while the input image is coded
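A sketch of one way to realize this, with hypothetical parameters: a context seen fewer than `min_count` times in training falls back to a shorter context obtained by dropping template pixels, and the resulting estimate only seeds counts that keep adapting while the input is coded. Which pixels to drop, the threshold, the KT-style smoothing, and the prior weight are all assumptions; the training counts are assumed to include the reduced contexts as well.

```python
def initial_estimate(full_ctx, train_counts, min_count=8, drop=2):
    """Initial P(1 | c) for a context given as a tuple of template bits.

    train_counts maps context tuples (full or reduced) to (zeros, ones)
    counted on the training set.  A context seen fewer than min_count times
    falls back to the context with its last `drop` pixels removed."""
    ctx = tuple(full_ctx)
    while True:
        zeros, ones = train_counts.get(ctx, (0, 0))
        if zeros + ones >= min_count or not ctx:
            return (ones + 0.5) / (zeros + ones + 1.0)   # KT-style smoothing
        ctx = ctx[:-drop] if len(ctx) > drop else ()


class ContextEstimate:
    """Per-context estimate that starts from the training value and then
    adapts to the input image; `weight` pseudo-observations back the prior."""
    def __init__(self, init_p, weight=2.0):
        self.ones = init_p * weight
        self.total = weight

    def p1(self):
        return self.ones / self.total

    def update(self, bit):
        self.ones += bit
        self.total += 1


train = {(1, 0, 1, 1): (1, 0), (1, 0): (6, 2)}
p = initial_estimate((1, 0, 1, 1), train)   # full context is rare -> uses (1, 0)
est = ContextEstimate(p)
est.update(1)
```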

Coding Process

$S$ → Context calculation → $c$ → Prob. estimation → $\hat{P}_{x\mid c}(1 \mid c)$ → Context quantization $Q(c) = m$ → Entropy coders → DataBits, followed by an update of $\hat{P}_{x\mid c}(1 \mid c)$

The context quantizer output may change along the way as the estimate of the conditional probability becomes more accurate
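A sketch of the coding loop, assuming fixed thresholds on $\hat{P}_{x\mid c}(1 \mid c)$, one adaptive binary model per group, and a per-context estimate that starts from a prior value (0.5 when none is given) and is updated after every symbol. It returns an ideal code length rather than producing a bitstream, and all names are hypothetical.

```python
import bisect
import math

def code_length(bits_and_contexts, thresholds, init_p):
    """Ideal adaptive code length (bits) of one coding pass in which
    Q(c) = m is recomputed from the *current* estimate P^(1 | c)."""
    ctx_counts = {}                                   # c -> [ones, total]
    group_counts = [[0.5, 0.5] for _ in range(len(thresholds) + 1)]
    total_bits = 0.0
    for bit, c in bits_and_contexts:
        ones, tot = ctx_counts.setdefault(c, [init_p.get(c, 0.5), 1.0])
        p_hat = ones / tot                            # current P^(1 | c)
        m = bisect.bisect_left(thresholds, p_hat)     # cell (q_{m-1}, q_m]
        g = group_counts[m]                           # [zeros, ones] of group m
        total_bits -= math.log2(g[bit] / (g[0] + g[1]))
        g[bit] += 1                                   # update the coder of group m
        ctx_counts[c][0] += bit                       # update P^(1 | c)
        ctx_counts[c][1] += 1
    return total_bits

seq = [(1, "a"), (1, "a"), (0, "b"), (1, "a")]
print(code_length(seq, [0.5], {}))
```

With a single threshold at 0.5, context `a` starts in group 0 and moves to group 1 after its first symbol, the kind of change the slide describes.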

Experimental Results

Bit rates (bpp) of error diffusion halftone images



Motivation

To minimize the actual adaptive code length instead of the static code length

To minimize the effect of mismatch between the input and training set statistics

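Context Quantization for Minimum Adaptive Code Length

The adaptive code length can be calculated as

$$
l(n_0, n_1) =
\begin{cases}
-\log \dfrac{\prod_{j=0}^{n_0-1} (\delta + j) \prod_{j=0}^{n_1-1} (\delta + j)}{\prod_{j=0}^{n_0+n_1-1} (2\delta + j)}, & n_0 \neq 0 \text{ and } n_1 \neq 0,\\[2ex]
0, & n_0 = 0 \text{ and } n_1 = 0,\\[2ex]
-\log \dfrac{\prod_{j=0}^{n_0+n_1-1} (\delta + j)}{\prod_{j=0}^{n_0+n_1-1} (2\delta + j)}, & n_0 = 0 \text{ xor } n_1 = 0,
\end{cases}
$$

where $n_0$ and $n_1$ are the numbers of 0s and 1s coded in the context and $\delta > 0$ is the count offset of the sequential estimator (e.g., $\delta = 1/2$ for the Krichevsky-Trofimov estimator)

The order in which the 0s and 1s appear does not change the adaptive code length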
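The formula can be checked numerically. The sketch below assumes $\delta = 1/2$ and base-2 logarithms; it compares the closed form with bit-by-bit coding using $P(b) = (n_b + \delta)/(n + 2\delta)$ and confirms that every ordering of the same bits costs the same. Since an empty product equals one, the three cases collapse into a single expression.

```python
import math
from itertools import permutations

def adaptive_code_length(n0, n1, delta=0.5):
    """Closed form: -log2 of prod(delta + j) over each symbol's count,
    divided by prod(2 delta + j) over the total count (0 if n0 = n1 = 0)."""
    num = sum(math.log2(delta + j) for j in range(n0))
    num += sum(math.log2(delta + j) for j in range(n1))
    den = sum(math.log2(2 * delta + j) for j in range(n0 + n1))
    return den - num

def sequential_code_length(bits, delta=0.5):
    """Code the bits one at a time with P(b) = (n_b + delta) / (n + 2 delta)."""
    counts, total = [0, 0], 0.0
    for b in bits:
        total -= math.log2((counts[b] + delta) / (counts[0] + counts[1] + 2 * delta))
        counts[b] += 1
    return total

bits = [0, 1, 1, 0, 1]
lengths = [sequential_code_length(p) for p in permutations(bits)]
print(adaptive_code_length(2, 3), max(lengths) - min(lengths))
```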

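Context Quantization for Minimum Adaptive Code Length

The objective function that minimizes the effect of mismatch between the input and the training set:

$$\min_{M,\, Q(c)} \sum_{m=1}^{M} \Big[\, l_m(n_0' + n_0,\; n_1' + n_1) - l_m(n_0', n_1') \,\Big]$$

where, for group $m$, $n_0', n_1'$ denote the training-set counts and $n_0, n_1$ the input counts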
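A sketch of one group's term in the objective, assuming a sequential estimator with count offset $\delta = 1/2$ and that the primed counts come from the training set. It checks that $l(n_0' + n_0, n_1' + n_1) - l(n_0', n_1')$ equals the cost of coding the input bits with a coder whose counts start at the training values. Helper names are hypothetical.

```python
import math

def adaptive_len(n0, n1, delta=0.5):
    """Adaptive code length in bits for n0 zeros and n1 ones."""
    num = (sum(math.log2(delta + j) for j in range(n0))
           + sum(math.log2(delta + j) for j in range(n1)))
    den = sum(math.log2(2 * delta + j) for j in range(n0 + n1))
    return den - num

def primed_cost(train, inp, delta=0.5):
    """Bits to code the input counts inp = (n0, n1) of one group when its
    coder is first primed with the training counts train = (n0', n1')."""
    return (adaptive_len(train[0] + inp[0], train[1] + inp[1], delta)
            - adaptive_len(train[0], train[1], delta))

def primed_sequential(train, bits, delta=0.5):
    """Same quantity, coding bit by bit from the training counts."""
    c, total = list(train), 0.0
    for b in bits:
        total -= math.log2((c[b] + delta) / (c[0] + c[1] + 2 * delta))
        c[b] += 1
    return total

print(primed_cost((20, 5), (3, 4)))
```

Summing `primed_cost` over groups gives the objective; a grouping whose training counts disagree with the input pays for the mismatch directly in this sum.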

Experimental Results

Bit rates (bpp) of error diffusion halftone images



Motivation

[Figure: pdf with a single quantizer vs. pdfs with quantizers after classification]

Single Quantizer

T → Q → EC (transform, quantization, entropy coding)

[Figure: probability distribution of the transform coefficients]

Classification and Quantization

[Figure: T → IQ (initial quantizer) → C (classification) → Q → EC; classes C0, C1, ..., CM; groups G0, ..., GN]

Transform coefficients: …20.5 40.5 31 9 45 38 28 12 25 39.5 47 19 23.5…

Initial Q indices: …2 4 3 0 4 3 2 1 2 3 4 1 2…

Group indices: …0 1 1 0 1 1 0 0 0 1 1 0 1…

Experimental Results



Conclusions

Non-binary source:

New context quantization method is proposed

Efficient context description strategies

Binary source:

Globally optimal partition of the context space

MDL-based context quantizer

Context-based classification and quantization

Future Work

Context-based entropy coder

Classification and quantization

Context shape optimization

Mismatch between the training set and test data

Classification tree among the wavelet subbands

Apply these techniques to video codecs
