

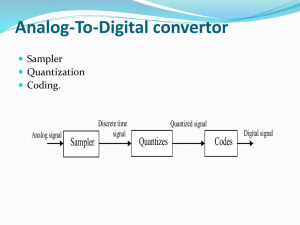

Digital Image Processing (DIP)
Lecture # 5
Dr. Abdul Basit Siddiqui, Assistant Professor-FURC
FURC-BCSE7

Classification of DIP and Computer Vision Processes
• Low-Level Process (DIP)
  – Primitive operations where inputs and outputs are images
  – Major functions: image pre-processing such as noise reduction, contrast enhancement, image sharpening, etc.
• Mid-Level Process (DIP and Computer Vision)
  – Inputs are images, outputs are attributes (e.g., edges)
  – Major functions: segmentation, description, classification / recognition of objects
• High-Level Process (Computer Vision)
  – Make sense of an ensemble of recognized objects
  – Perform the cognitive functions normally associated with vision

[Figure: Image Processing Steps]

DIP Course
• Digital Image Fundamentals and Image Acquisition (briefly)
• Image Enhancement in Spatial Domain
  – Pixel operations
  – Histogram processing
  – Filtering
• Image Enhancement in Frequency Domain
  – Transformation and reverse transformation
  – Frequency domain filters
  – Homomorphic filtering
• Image Restoration
  – Noise reduction techniques
  – Geometric transformations
• Wavelets and Multi-Resolution Processing
  – Multi-resolution expansion
  – Wavelet transforms, etc.
• Image Segmentation
  – Edge, point and boundary detection
  – Thresholding
  – Region-based segmentation, etc.

Image Representation
• Image
  – Two-dimensional function $f(x,y)$
  – $x, y$: spatial coordinates
• Value of $f$: intensity or gray level

Digital Image
• A set of pixels (picture elements, pels)
• Pixel means
  – pixel coordinate
  – pixel value
  – or both
• Both coordinates and value are discrete

Example
• 640 x 480 8-bit image

Digital Image Processing (DIP)
Digital Image Fundamentals and Image Acquisition

[Figure: Image Acquisition]

Image Description
• $f(x,y)$: intensity/brightness of the image at spatial coordinates $(x,y)$
• $0 < f(x,y) < \infty$, determined by two factors:
  – illumination component $i(x,y)$: amount of source light incident on the scene
  – reflectance component $r(x,y)$: amount of light reflected by objects
• $f(x,y) = i(x,y)\, r(x,y)$, where
  – $0 < i(x,y) < \infty$: determined by the light source
  – $0 < r(x,y) < 1$: determined by the characteristics of objects

Sampling and Quantization
[Figure: Sampling and Quantization]
• Sampling: digitization of the spatial coordinates $(x,y)$
• Quantization: digitization in amplitude (also called gray-level quantization)
  – 8-bit quantization: $2^8 = 256$ gray levels (0: black, 255: white)
  – Binary (1-bit quantization): 2 gray levels (0: black, 1: white)
• Commonly used numbers of samples (resolution)
  – Digital still cameras: 640x480, 1024x1024, up to 4064 x 2704
  – Digital video cameras: 640x480 at 30 frames/second; 1920x1080 at 60 frames/second (HDTV)
• Digital image is expressed as: [equation missing in source]

[Figure: Sampling]

[Figure: Effect of Sampling and Quantization]

[Figure: RGB (color) Images]

[Figure: Image Acquisition]

[Figures: Basic Relationships between Pixels]

Distance Measures
In the formulas below, pixel p has coordinates $(x,y)$ and pixel q has coordinates $(s,t)$.
• Chessboard distance between p and q: [equation missing in source]
• $D_4$ distance (city-block distance):
  – $D_4(p,q) = |x-s| + |y-t|$
  – Forms a diamond centered at $(x,y)$
  – e.g. pixels with $D_4 \le 2$ from p:

            2
          2 1 2
        2 1 0 1 2
          2 1 2
            2

  – The pixels with $D_4 = 1$ are the 4-neighbors of p
• $D_8$ distance (chessboard distance):
  – $D_8(p,q) = \max(|x-s|, |y-t|)$
  – Forms a square centered at p
  – e.g. pixels with $D_8 \le 2$ from p:

        2 2 2 2 2
        2 1 1 1 2
        2 1 0 1 2
        2 1 1 1 2
        2 2 2 2 2

  – The pixels with $D_8 = 1$ are the 8-neighbors of p
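
The two distance measures above can be checked with a few lines of Python. This is a minimal sketch, not part of the lecture: the function names `d4`, `d8`, `distance_map` and `render` are hypothetical, and pixels are assumed to be `(x, y)` integer tuples with p placed at the origin.

```python
def d4(p, q):
    """City-block distance |x - s| + |y - t| for p = (x, y), q = (s, t)."""
    (x, y), (s, t) = p, q
    return abs(x - s) + abs(y - t)


def d8(p, q):
    """Chessboard distance max(|x - s|, |y - t|) for p = (x, y), q = (s, t)."""
    (x, y), (s, t) = p, q
    return max(abs(x - s), abs(y - t))


def distance_map(dist, radius=2):
    """Distances from the centre pixel over a (2*radius + 1)-wide window.

    Cells farther than `radius` from the centre are stored as None.
    """
    centre = (0, 0)  # assumption: p sits at the origin
    rows = []
    for y in range(-radius, radius + 1):
        row = []
        for x in range(-radius, radius + 1):
            d = dist(centre, (x, y))
            row.append(d if d <= radius else None)
        rows.append(row)
    return rows


def render(rows):
    """Format a distance map as text, leaving out-of-range cells blank."""
    return "\n".join(
        " ".join(" " if v is None else str(v) for v in row).rstrip()
        for row in rows
    )


if __name__ == "__main__":
    print(render(distance_map(d4)))  # diamond-shaped region
    print()
    print(render(distance_map(d8)))  # square region
```

Counting the cells equal to 1 in each map gives the 4-neighbors for `d4` and the 8-neighbors for `d8`, matching the slides.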
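
The image model $f(x,y) = i(x,y)\, r(x,y)$ from the Image Description slide can be illustrated as a pixel-wise product. The sketch below is only an illustration under stated assumptions: `form_image` is a hypothetical helper, images are plain nested lists, and the illumination and reflectance values are made up for the example.

```python
def form_image(illumination, reflectance):
    """Return f(x,y) = i(x,y) * r(x,y) for two equally sized 2-D lists.

    Enforces the bounds from the slide: 0 < i < infinity and 0 < r < 1.
    """
    if len(illumination) != len(reflectance):
        raise ValueError("illumination and reflectance need the same height")
    image = []
    for i_row, r_row in zip(illumination, reflectance):
        if len(i_row) != len(r_row):
            raise ValueError("illumination and reflectance need the same width")
        out_row = []
        for i, r in zip(i_row, r_row):
            if not (0 < i < float("inf")):
                raise ValueError("illumination must satisfy 0 < i < infinity")
            if not (0 < r < 1):
                raise ValueError("reflectance must satisfy 0 < r < 1")
            out_row.append(i * r)
        image.append(out_row)
    return image


if __name__ == "__main__":
    # Hypothetical values: uniform lighting over a 2 x 2 scene.
    i = [[100.0, 100.0], [100.0, 100.0]]
    r = [[0.5, 0.25], [0.75, 0.5]]
    print(form_image(i, r))
```

With uniform illumination the resulting intensities are proportional to the reflectance, so the scene content is carried entirely by $r(x,y)$.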
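
Sampling and quantization, as described on the Sampling and Quantization slide, can be sketched as follows. The helpers `quantize`, `sample` and `storage_bytes` are hypothetical names, and the code assumes intensities normalised to the range 0.0 to 1.0 with uniform quantization steps; the slides do not prescribe either choice.

```python
def quantize(value, bits):
    """Map a normalised intensity in [0.0, 1.0] to a gray level in 0 .. 2**bits - 1."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0.0, 1.0]")
    levels = 2 ** bits  # e.g. 2**8 = 256 gray levels
    # Clamp so that value == 1.0 maps to the top level instead of overflowing.
    return min(int(value * levels), levels - 1)


def sample(image, step):
    """Keep every `step`-th pixel along both axes of a 2-D list (spatial sampling)."""
    return [row[::step] for row in image[::step]]


def storage_bytes(width, height, bits):
    """Bytes needed to store an uncompressed width x height image at `bits` per pixel."""
    return (width * height * bits + 7) // 8


if __name__ == "__main__":
    print(quantize(0.0, 8), quantize(1.0, 8))  # black and white at 8 bits
    print(quantize(0.3, 1), quantize(0.7, 1))  # binary image levels
    print(storage_bytes(640, 480, 8))          # the 640 x 480 8-bit example
```

For the 640 x 480 8-bit example from the slides, `storage_bytes` gives 307200 bytes, one byte per pixel.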