MS PowerPoint

advertisement

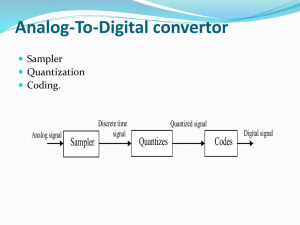

Sampling

and

Pulse Code Modulation

ECE460

Spring, 2012

How to Measure Information Flow

• Signals of interest are band-limited which lend themselves to

sampling (e.g., discrete values)

• Study the simplest model: Discrete Memoryless Source (DMS)

• Alphabet: A {a1 , a2 , , aN }

• Probability Mass Function:

pi P( X ai )

The DMS is fully defined given its Alphabet and PMF.

• How to measure information flow where a1 is the most likely

and aN is the least likely:

1. Information content of output aj depends only on the

probability of aj and not on the value.

Denote this as I(pj) – called self-information

2. Self-information is a continuous function of pj

3. Self-information is increases as pj decreases.

j1

j2

4. If pj = p(j1) p(j2), then I p j I p I p

Only function that satisfies all these properties:

I x log x

Unit of measure:

•

•

Log2 Loge -

bits (b)

nats

2

Entropy

The mean value of I(xi) over the alphabet of source X with N

different symbols is given by

H X E I xi

N

pi log pi

i 1

1

pi log

i 1

pi

N

Note: 0 log 0 = 0

H(X) is called entropy and is a measure of the average

information content per source symbol and is measured in

b/symbol

Source entropy H(X) is bounded:

0 H X log 2 N

3

Entropy Example

A DMS X has an alphabet of four symbols, {x1, x2, x3, x4} with

probabilities P(x1) = 0.4, P(x1) = 0.3, P(x1) = 0.2, and P(x1) = 0.1.

a) Calculate the average bits/symbol for this system.

b) Find the amount of information contained in the messages

x1, x2, x1, x3 and x4, x3, x3, x2 and compare it to the average.

c) If the source has a bandwidth of 4000 Hz and it is sampled

at the Nyquist rate, determine the average rate of the

source in bits/sec.

4

Joint & Conditional Entropy

Definitions

• Joint entropy of two discrete random variables (X, Y)

H X , Y p xi , y j log p xi , y j

n

m

i 1 j 1

• Conditional entropy of the random variable X given Y

H X | Y p xi , y j log p xi | y j

n

m

i 1 j 1

• Relationships

H X , Y H X | Y H Y

H X , Y H Y | X H X

• Entropy rate of a stationary discrete-time random process is

defined by

Memoryless:

Memory:

H X lim H X n | X 1 , X 2 ,

n

1

H X lim H X n | X 1 , X 2 ,

n n

, X n 1

, X n 1

5

Example

Two binary random variables X and Y are distributed according

to the joint distribution

p X Y 0 p X 0, Y 1 p X Y 1

1

3

Compute:

• H(X)

• H(Y)

• H(X,Y)

• H(X|Y)

• H(Y|X)

6

7

Source Coding

Which of these codes are viable?

Symbol Code 1 Code 2 Code 3

a

00

00

0

b

00

01

1

c

11

10

11

Code 4

1

10

100

Code 5

1

00

01

Code 6

1

01

11

Which have uniquely decodable properties?

Which are prefix-free?

A sufficient (but not necessary) condition to assure that a

code is uniquely decodable is that no code word be the prefix of any

other code words.

Which are instantaneously decodable?

Those for which the boundary of the present code word can be

identified by the end of the present code word rather than by the

beginning of the next code word.

Theorem: A source with entropy H can be encoded with arbitrarily

small error probability at any rate R (bits/source

output)as long as R > H. Conversely if

R < H, the error probability will be bounded away from

zero, independent of the complexity of the encoder and

the decoder employed.

R: the average code word length per source symbol

R

p x l x

where l x is the length of the code word

x X

8

Huffman Coding

Steps to Huffman Codes:

1. List the source symbols in order of decreasing probability

2. Combine the probabilities of the two symbols having the

lowest probabilities, and reorder the resultant probabilities;

this step is called reduction 1. The same procedure is

repeated until there are two ordered probabilities

remaining.

3. Start encoding with the last reduction, which consist of

exactly two ordered probabilities. Assign 0 as the first digit

in the code words for all the source symbols associated with

the first probability; assign 1 to the second probability.

4. Now go back and assign 0 and 1 to the second digit for the

two probabilities that were combined in the previous

reduction step, retaining all assignments made in step 3.

5. Keep regressing this way until the first column is reached.

Huffman code: H X R H X 1

9

Example

Sym Prob

x1 0.30

x2

0.25

x3

0.20

x4

0.12

x5

0.08

x6

0.05

Code

H X :

R:

10

Quantization

Scalar Quantization

x ¡ is partitioned into N disjoint subsets k ,1 k N .

If at time i, xi belongs to k , then xi is represented by xˆk .

For example:

x a1

a1 x a2

a2 x a3

a3 x a4

a4 x a5

a5 x a6

a6 x a7

a7 x

xˆ1

xˆ2

xˆ3

xˆ4

xˆ5

xˆ6

xˆ7

xˆ8

N is usually chosen to be a power of 2

R log 2 N

and, for this example, R log 2 8 3

11

Quantization

Define a Quantization Function Q as

Q x xˆi

for all x i

Is the quantization function invertible?

Define the squared-error distortion for a single measurement:

d x, xˆ x Q x x%2

2

Since X is a random variable, so are Xˆ and X%, and the distortion

D for the source is the expected value of this random variable

2

D E d X , Xˆ E X Q X

12

Example

The source X(t) is a stationary Gaussian source with mean zero

and power-spectral density

2,

SX f

0,

f 100 Hz

otherwise

The source is sampled at the Nyquest rate, and each sample is

quantized using the 8-level quantizer shown below.

What is the rate R and the distortion D?

x 60

60 x 40

40 x 20

20 x 0

0 x 20

20 x 40

40 x 60

60 x

xˆ1 70

xˆ2 50

xˆ3 30

xˆ4 10

xˆ5 10

xˆ6 30

xˆ7 50

xˆ8 70

13

Signal-to-Quantized Noise Ratio

In the example, we used the mean-squared distortion, or

quantization noise, as the measure of performance. SQNR

provides a better measure:

Definition: If the random variable X is quantized Q(x), the

signal-to-quantized noise ration (SQNR) is defined by

SQNR

E X 2

E X Q X

2

For signals, the quantization-noise power is

T /2

2

1

PX lim

E

X

t

Q

X

t

dt

T T

T /2

And the signal power is

T /2

1

PX lim

E X 2 t dt

T T

T /2

Therefore:

SQNR

PX

PX

If X(t) is a strictly stationary random process, how is the SQNR

related to the autocorrelation functions of X(t) and X% t ?

14

Uniform Quantization

The example before was for a uniform quantization where the

first and last ranges were (-∞,-70] and (70, ∞). The remaining

ranges went in steps of 20 (e.g., (-70, -50], … , (50, 70] ).

We can generalize for a zero-mean, unit variance Gaussian by

breaking the ranges into N symmetric segments of width Δ

about the origin. The distortion would then be given by:

Even N:

N

1

2

D 2

N

1

2

x xˆ1

N

i 1

2

N

i

2

2

i 1

Odd N:

N

1

2

D 2

N 3

2

2

i 1

f X x dx

x xˆi 1

x xˆ1

N

i 1

2

N

i

2

2

2

2

f X x dx

f X x dx

x xˆi 1

2

f X x dx

2

2

x 2 f X x dx

How do you select the optimized value for Δ give N ?

15

Optimal Uniform Quantizer

16

Non-Uniform Quantizers

The Lloyd-Max conditions for optimal quantization:

1. The boundaries of the quantization regions are the

midpoints of the corresponding quantized values (nearest

neighbors)

2. The quantized values are the centroids of the quantization

regions.

Typically determined by trial and error! Note the optimal nonuniform quantizer table for a zero-mean, unit variance Gaussian

source.

Example (Continued):

How would the results of the previous example change if instead

of the uniform quantizer we used an optimal non-uniform

quantizer with the same number of levels?

17

Optimized Non-Uniform Quantizer

18

Waveform Coding

Our focus will be on Pulse-Code Modulation (PCM)

x t

xn

xˆn

Uniform PCM

• Input range for x(t): [ xmax , xmax ]

• Length of each quantization region:

2 xmax xmax

1

N

2

• xˆn is chosen as the midpoint of each quantization level

• Quantization error x% x Q x is a random variable in the

interval / 2, / 2

19

Uniform PCM

• Typically, N is very high and the variations of input low so

that the error can be approximated as uniformly distributed

on the interval / 2, / 2

• This give the quantization noise as

/2 1

E x%2

x%2 dx%

/2

2

12

2

xmax

3N 2

2

xmax

3 4

• Signal-to-quantization noise ration (SQNR)

(2

X 2 3 N 2 X 2 3 4 X 2

SQNR

3 4 X

2

2

2

%

x

x

X

max

max

(

X

where X

xmax

20

Uniform PCM

Example: What is the resulting SQNR for a signal uniformly

distributed on [-1,1] when uniform PCM with 256

levels is employed? What is the minimum bandwidth

required?

21

How to Improve Results

A more effective method would be to make use a non-uniform

PCM:

g x

i

xn

x

xmax

Speech coding in the U.S. uses the µ-law compander with a

typical value of µ = 255:

x

ln 1

x

max

sgn m

g x

ln 1

x

xmax

1

22