Class 20. Sample Selection Models and Models of Attrition


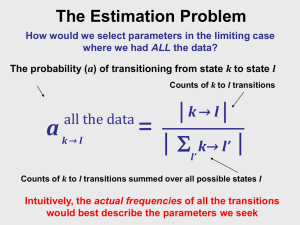

Part 20: Selection [1/66]

Econometric Analysis of Panel Data
William Greene
Department of Economics
Stern School of Business

Econometric Analysis of Panel Data: 20. Sample Selection and Attrition

Received Sunday, April 27, 2014

I have a paper regarding strategic alliances between firms and their impact on firm risk. While observing how a firm's strategic alliance formation impacts its risk, I need to correct for two types of selection bias. The reviewers at the Journal of Marketing asked us to correct for the propensity of firms to enter into alliances, and also for the propensity to select a specific partner, before we examine how the partnership itself impacts risk. Our approach was to estimate a probit model of alliance formation propensity, compute the inverse Mills ratio, and include it in the second selection equation, which is also a probit model of partner selection. Then we include the inverse Mills ratio from the second selection equation in the main model. The review team states that this is not correct and that we need an MLE estimator to model the set of three equations correctly. The Associate Editor's point is given below. Can you please provide any guidance on whether this is a valid criticism of our approach? Is there a procedure in LIMDEP that can handle this set of three equations with two selection probit models?

AE's comment: “Please note that the procedure of using an inverse mills ratio is only consistent when the main equation where the ratio is being used is linear. In non-linear cases (like the second probit used by the authors), this is not correct. Please see any standard econometric treatment like Greene or Wooldridge. A MLE estimator is needed which will be far from trivial to specify and estimate given error correlations between all three equations.”

Hello Dr. Greene, My name is xxxxxxxxxx and I go to the University of xxxxxxxx.
I see that you have an errata page for the 7th edition of your econometrics book on your website. It seems you want to correct all mistakes, so I think I have spotted a possible proofreading error. On page 477 (Theorem 13.2) you want to show that $\hat\theta$ is consistent, and you say: "But, at the true parameter values, $q_n(\theta_0) \to 0$. So, if (13-7) is true, then it must follow that $q_n(\hat\theta_{GMM}) \to \theta_0$ as well because of the identification assumption." I think in the second line it should be $q_n(\hat\theta_{GMM}) \to 0$, not $\theta_0$.

I also have a question about nonlinear GMM, which I suppose is more or less a nonlinear IV technique. I am running a panel nonlinear regression (nonlinear in the parameters), and I have L parameters and K exogenous variables with L > K. In particular, my model looks roughly like this: $Y = b_1 X^{b_2} + e$, so I am trying to estimate the extra $b_2$ parameters that do not usually appear in a regression. From what I am reading, to run nonlinear GMM I can use the K exogenous variables to construct the orthogonality conditions, but what should I use for the extra $b_2$ coefficients? Just some more possible IVs (like lags) of the exogenous variables? I agree that adding more IVs gives a more efficient estimator, but isn't that only the case when you believe the IVs are truly uncorrelated with the error term? So by adding more "instruments" you are more or less imposing increasingly restrictive assumptions on the model (which might not actually be true). I am asking because I have not found sources comparing nonlinear GMM/IV to nonlinear least squares. If there is no heteroscedasticity or serial correlation, which is more efficient, that is, which gives tighter estimates?

Dueling Selection Biases – From two emails, same day.
“I am trying to find methods which can deal with data that is non-randomised and suffers from selection bias.”

“I explain the probability of answering questions using, among other independent variables, a variable which measures knowledge breadth. Knowledge breadth can be constructed only for those individuals that fill in a skill description in the company intranet. This is where the selection bias comes from.”

The Crucial Element
- Selection on the unobservables
- Selection into the sample is based on both observables and unobservables
- All the observables are accounted for
- Unobservables in the selection rule also appear in the model of interest (or are correlated with unobservables in the model of interest)
- “Selection bias” = the bias due to not accounting for the unobservables that link the equations.

A Sample Selection Model
- Linear model
- 2-step ML: Murphy & Topel
- Binary choice application
- Other models

Canonical Sample Selection Model
- Regression equation: $y^* = x'\beta + \varepsilon$
- Sample selection mechanism: $d^* = z'\gamma + u$; $d = 1[d^* > 0]$ (probit)
- $y = y^*$ if $d = 1$; not observed otherwise
- Is the sample 'nonrandomly selected?'
$$E[y^* \mid x, d=1] = x'\beta + E[\varepsilon \mid x, d=1] = x'\beta + E[\varepsilon \mid x, u > -z'\gamma] = x'\beta + \text{something if } \mathrm{Cor}[\varepsilon, u \mid x] \neq 0$$
- A left out variable problem (again)
- Incidental truncation

Applications
- Labor supply model: $y^*$ = wage − reservation wage; $d$ = labor force participation
- Attrition model: clinical studies of medicines
- Survival bias in financial data
- Income studies: value of a college application
- Treatment effects
- Any survey data in which respondents self-select to report
- Etc.

Estimation of the Selection Model
- Two-step least squares: inefficient; simple, exists in current software; simple to understand and widely used
- Full information maximum likelihood: efficient; simple, exists in current software; not so simple to understand, widely misunderstood

Heckman's Model
$$y_i^* = x_i'\beta + \varepsilon_i$$
$$d_i^* = z_i'\gamma + u_i, \quad d_i = 1[d_i^* > 0] \text{ (probit)}$$
$$y_i = y_i^* \text{ if } d_i = 1; \text{ not observed otherwise}$$
$$[\varepsilon_i, u_i] \sim \text{Bivariate Normal}[0, 0, \sigma^2, \rho, 1]$$
$$\begin{aligned} E[y_i^* \mid x_i, d_i = 1] &= x_i'\beta + E[\varepsilon_i \mid x_i, d_i = 1] = x_i'\beta + E[\varepsilon_i \mid x_i, u_i > -z_i'\gamma] \\ &= x_i'\beta + \rho\sigma\frac{\phi(z_i'\gamma)}{\Phi(z_i'\gamma)} = x_i'\beta + \theta\lambda_i \end{aligned}$$
Least squares is biased and inconsistent again: a left out variable.

Two Step Estimation
Step 1: Estimate the probit model $d_i^* = z_i'\gamma + u_i$, $d_i = 1[d_i^* > 0]$, to obtain $\hat\gamma$. Now compute $\hat\lambda_i = \phi(z_i'\hat\gamma)/\Phi(z_i'\hat\gamma)$.

Step 2: Estimate the regression model with the estimated regressor. For the observations with $d_i = 1$,
$$E[y_i^* \mid x_i, d_i = 1] = x_i'\beta + E[\varepsilon_i \mid x_i, d_i = 1] = x_i'\beta + \theta\hat\lambda_i,$$
where $\hat\lambda_i$ is the "LAMBDA". Linearly regress $y_i$ on $x_i$ and $\hat\lambda_i$.

Step 2a: Fix the standard errors (Murphy and Topel). Estimate $\rho$ and $\sigma$ using $\hat\theta$ and $e'e/n$.

FIML Estimation
$$\log L = \sum_{d_i=0}\log\Phi(-z_i'\gamma) + \sum_{d_i=1}\log\left[\Phi\left(\frac{z_i'\gamma + \rho\varepsilon_i/\sigma}{\sqrt{1-\rho^2}}\right)\frac{\exp(-\varepsilon_i^2/(2\sigma^2))}{\sigma\sqrt{2\pi}}\right], \quad \varepsilon_i = y_i - x_i'\beta$$
Let $\theta = 1/\sigma$, $\delta = \beta/\sigma$, $\tau = \rho/\sqrt{1-\rho^2}$. Then
$$\log L = \sum_{d_i=0}\log\Phi(-z_i'\gamma) + \sum_{d_i=1}\log\left[\Phi\left(\tau(\theta y_i - x_i'\delta) + \sqrt{1+\tau^2}\,z_i'\gamma\right)\frac{\theta\exp(-(\theta y_i - x_i'\delta)^2/2)}{\sqrt{2\pi}}\right]$$
Note: no inverse Mills ratio appears anywhere in the model.

Classic Application
Mroz, T., Married women's labor supply, Econometrica, 1987.
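The following is a minimal sketch of the two-step procedure described above. It is written in Python with only the standard library and runs on simulated data, not the Mroz data. The data-generating values (ρ = 0.7, σ = 1 and the coefficients) and all function names are illustrative assumptions. Step 1 fits the probit by Newton's method. Step 2 regresses y on x and λ̂ over the selected observations. The Murphy–Topel correction of step 2a is not shown.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: .pdf() and .cdf()


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small systems only)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def probit_newton(Z, d, iters=12):
    """Step 1: probit MLE by Newton's method with the analytic Hessian."""
    K = len(Z[0])
    g = [0.0] * K
    for _ in range(iters):
        grad = [0.0] * K
        H = [[0.0] * K for _ in range(K)]  # minus the Hessian (positive definite)
        for z, di in zip(Z, d):
            q = 2 * di - 1
            a = sum(zk * gk for zk, gk in zip(z, g))
            lam = q * STD_NORMAL.pdf(q * a) / STD_NORMAL.cdf(q * a)
            w = lam * (lam + a)
            for j in range(K):
                grad[j] += lam * z[j]
                for k in range(K):
                    H[j][k] += w * z[j] * z[k]
        step = solve(H, grad)
        g = [gk + sk for gk, sk in zip(g, step)]
    return g


def heckman_two_step(y, X, Z, d):
    """Step 1 probit, then OLS of y on [x, lambda_hat] using only the d == 1 observations."""
    gamma = probit_newton(Z, d)
    rows, ys = [], []
    for yi, x, z, di in zip(y, X, Z, d):
        if di == 1:
            a = sum(zk * gk for zk, gk in zip(z, gamma))
            rows.append(list(x) + [STD_NORMAL.pdf(a) / STD_NORMAL.cdf(a)])  # inverse Mills ratio
            ys.append(yi)
    K = len(rows[0])
    XtX = [[sum(r[j] * r[k] for r in rows) for k in range(K)] for j in range(K)]
    Xty = [sum(r[j] * yi for r, yi in zip(rows, ys)) for j in range(K)]
    coef = solve(XtX, Xty)
    return gamma, coef[:-1], coef[-1]  # gamma_hat, beta_hat, theta_hat (estimates rho*sigma)


# Simulated data (assumed design): y* = 1 + 0.5 x + eps, d* = 0.3 + 0.5 x + 1.0 z + u
random.seed(2024)
RHO, SIGMA = 0.7, 1.0
y, X, Z, d = [], [], [], []
for _ in range(4000):
    x1, z1, u = random.gauss(0, 1), random.gauss(0, 1), random.gauss(0, 1)
    eps = SIGMA * (RHO * u + math.sqrt(1 - RHO**2) * random.gauss(0, 1))
    d.append(1 if 0.3 + 0.5 * x1 + 1.0 * z1 + u > 0 else 0)
    X.append([1.0, x1])
    Z.append([1.0, x1, z1])  # z1 is the exclusion restriction
    y.append(1.0 + 0.5 * x1 + eps if d[-1] == 1 else None)  # y unobserved when d = 0

gamma_hat, beta_hat, theta_hat = heckman_two_step(y, X, Z, d)
print("gamma:", gamma_hat, "beta:", beta_hat, "theta = rho*sigma:", theta_hat)
```

With this design, the coefficient on λ̂ should come out near ρσ = 0.7, and the intercept and slope near 1.0 and 0.5.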
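The FIML log likelihood has two kinds of contributions:
- For $d_i = 0$, the contribution is $\log\Phi(-z_i'\gamma)$.
- For $d_i = 1$, it is the log of $\Phi((z_i'\gamma + \rho\varepsilon_i/\sigma)/\sqrt{1-\rho^2})$ times the $N(0,\sigma^2)$ density of $\varepsilon_i = y_i - x_i'\beta$.

The reparameterized version with θ = 1/σ, δ = β/σ and τ = ρ/√(1−ρ²) should return the same number. This is a minimal sketch that checks that algebra. The function names and the four-observation data set are hypothetical; it is not an estimator.

```python
import math
from statistics import NormalDist

N01 = NormalDist()


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def loglik_heckman(beta, gamma, sigma, rho, data):
    """FIML log likelihood in the original (beta, gamma, sigma, rho) parameterization."""
    total = 0.0
    for y, x, z, d in data:
        a = dot(z, gamma)
        if d == 0:
            total += math.log(N01.cdf(-a))
        else:
            e = y - dot(x, beta)
            arg = (a + rho * e / sigma) / math.sqrt(1 - rho**2)
            total += math.log(N01.cdf(arg)) + math.log(N01.pdf(e / sigma) / sigma)
    return total


def loglik_reparam(delta, gamma, theta, tau, data):
    """Same likelihood with theta = 1/sigma, delta = beta/sigma, tau = rho/sqrt(1 - rho^2)."""
    total = 0.0
    for y, x, z, d in data:
        a = dot(z, gamma)
        if d == 0:
            total += math.log(N01.cdf(-a))
        else:
            s = theta * y - dot(x, delta)  # standardized residual eps/sigma
            arg = tau * s + math.sqrt(1 + tau**2) * a
            total += math.log(N01.cdf(arg)) + math.log(theta * N01.pdf(s))
    return total


# Tiny illustrative data set: (y, x, z, d); y is None when not observed
data = [(1.2, [1.0, 0.5], [1.0, 0.3], 1), (None, [1.0, -1.0], [1.0, -0.8], 0),
        (0.4, [1.0, 1.5], [1.0, 1.1], 1), (None, [1.0, 0.2], [1.0, -0.1], 0)]
beta, gamma, sigma, rho = [0.5, 0.4], [0.2, 0.9], 1.3, -0.4

ll1 = loglik_heckman(beta, gamma, sigma, rho, data)
ll2 = loglik_reparam([b / sigma for b in beta], gamma, 1 / sigma,
                     rho / math.sqrt(1 - rho**2), data)
print(ll1, ll2)  # the two parameterizations agree up to rounding
```

Setting ρ = 0 splits the likelihood into a probit part and a normal regression part. That split is a quick way to see why the two equations can be estimated separately only when ρ = 0.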
A (my) specification:
- N = 753, N1 = 428
- LFP = f(age, age², family income, education, kids)
- Wage = g(experience, exp², education, city)
- Two-step and FIML estimation

Selection Equation
Binomial probit model. Dependent variable: LFP. Number of observations: 753. Log likelihood function: −490.8478.

Index function for probability:

| Variable | Coefficient | Standard Error | b/St.Er. | P[\|Z\|>z] | Mean of X |
|---|---|---|---|---|---|
| Constant | -4.15680692 | 1.40208596 | -2.965 | .0030 | |
| AGE | .18539510 | .06596666 | 2.810 | .0049 | 42.5378486 |
| AGESQ | -.00242590 | .00077354 | -3.136 | .0017 | 1874.54847 |
| FAMINC | 4.58045E-06 | 4.20642E-06 | 1.089 | .2762 | 23080.5950 |
| WE | .09818228 | .02298412 | 4.272 | .0000 | 12.2868526 |
| KIDS | -.44898674 | .13091150 | -3.430 | .0006 | .69588313 |

Heckman Estimator and MLE

Extension – Treatment Effect
What is the value of an elite college education?
$$d_i^* = z_i'\gamma + u_i, \quad d_i = 1[d_i^* > 0] \text{ (probit)}$$
$$y_i^* = x_i'\beta + \delta d_i + \varepsilon_i, \text{ observed for everyone}$$
$$[\varepsilon_i, u_i] \sim \text{Bivariate Normal}[0, 0, \sigma^2, \rho, 1]$$
$$\begin{aligned} E[y_i^* \mid x_i, d_i = 1] &= x_i'\beta + \delta + E[\varepsilon_i \mid x_i, u_i > -z_i'\gamma] = x_i'\beta + \delta + \rho\sigma\frac{\phi(z_i'\gamma)}{\Phi(z_i'\gamma)} = x_i'\beta + \delta + \theta\lambda_i \\ E[y_i^* \mid x_i, d_i = 0] &= x_i'\beta + E[\varepsilon_i \mid x_i, d_i = 0] = x_i'\beta - \rho\sigma\frac{\phi(z_i'\gamma)}{1 - \Phi(z_i'\gamma)} \end{aligned}$$
Least squares is still biased and inconsistent: a left out variable.

Sample Selection
An approach modeled on Heckman's model:
- Regression equation: $\text{Prob}[y = j \mid x, u] = P(\lambda)$ (Poisson), $\lambda = \exp(x'\beta + \theta u)$
- Selection equation: $d = 1[z'\delta + \varepsilon > 0]$ (the usual probit)
- $[u, \varepsilon] \sim N[0, 0, 1, 1, \rho]$ ($\mathrm{Var}[u]$ is absorbed in $\theta$)
- Estimation: nonlinear least squares [Terza (1998, see cite in text)]:
$$E[y \mid x, d=1] = \exp(x'\beta + \theta^2/2)\frac{\Phi(z'\delta + \theta\rho)}{\Phi(z'\delta)}$$
- FIML using Hermite quadrature: [Greene (Stern working paper 97-02, 1997)]

Extensions – Binary Data
Application: insurance choices in the German health care data.
$$d_i^* = z_i'\gamma + u_i, \quad d_i = 1[d_i^* > 0] \text{ (probit)}$$
$$y_i^* = x_i'\beta + \varepsilon_i, \quad y_i = 1[y_i^* > 0] \text{ (probit)}$$
$y_i$ is observed if $d_i = 1$; not observed otherwise.
$$[\varepsilon_i, u_i] \sim \text{Bivariate Normal}[0, 0, 1, \rho, 1]$$
Estimation: (1) Two step? (Wooldridge text) (2) FIML (Stata, LIMDEP)

Panel Data and Selection
Selection equation with a time invariant individual effect:
$$d_{it} = 1[z_{it}'\gamma + \alpha_i + \eta_{it} > 0]$$
Observation mechanism: $(y_{it}, x_{it})$ observed when $d_{it} = 1$.
Primary equation of interest, a common effects linear regression model:
$$y_{it} \mid (d_{it} = 1) = x_{it}'\beta + \mu_i + \varepsilon_{it}$$
"Selectivity" as usual arises as a problem when the unobservables are correlated: $\mathrm{Corr}(\varepsilon_{it}, \eta_{it}) \neq 0$. The common effects, $\alpha_i$ and $\mu_i$, make matters worse.

Panel Data and Sample Selection Models: A Nonlinear Time Series
- I. 1990–1992: Fixed and random effects extensions
- II. 1995 and 2005: Model identification through conditional mean assumptions
- III. 1997–2005: Semiparametric approaches based on differences and kernel weights
- IV. 2007: Return to conventional estimators, with bias corrections

Panel Data Sample Selection Models
Verbeek, Economics Letters, 1990.
$$d_{it} = 1[z_{it}'\gamma + w_i + \eta_{it} > 0] \text{ (random effects probit)}$$
$$y_{it} \mid (d_{it} = 1) = x_{it}'\beta + \alpha_i + \varepsilon_{it} \text{ (fixed effects regression)}$$
Proposed "marginal likelihood" based on joint normality. The log likelihood is $\sum_i \log$ of a double integral, over the time invariant effects $u_{i,1}, u_{i,2}$ with density $f(u_{i,1}, u_{i,2})$, of $\prod_{t=1}^{T_i}\Phi[(2d_{it}-1)(\cdot)]$. The argument of each $\Phi$ combines $z_{it}'\gamma$ and the effects $u_{i,1}$ and $d_{it}u_{i,2}$. In observed periods it also includes $(\rho/\sigma)$ times the within residual $(y_{it} - \bar y_i) - (x_{it} - \bar x_i)'\beta$.
(Integrate out the random effects; difference out the fixed effects.)
$u_{i,1}, u_{i,2}$ are time invariant, uncorrelated standard normal variables.
How to do the integration? A natural candidate for simulation. (Not mentioned in the paper. Too early.)
[Verbeek and Nijman: Selectivity "test" based on this model, International Economic Review, 1992.]
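This note returns briefly to the count-data selection model (Terza, 1998). The nonlinear least squares conditional mean rests on the identity $E[\exp(\theta u) \mid \varepsilon > -z'\delta] = \exp(\theta^2/2)\Phi(z'\delta + \theta\rho)/\Phi(z'\delta)$. The sketch below checks that formula by Monte Carlo. The values of $x'\beta$, $z'\delta$, θ and ρ are arbitrary assumptions, and the function names are hypothetical. Averaging λ instead of drawing counts is enough here, because E[y | u] = λ.

```python
import math
import random
from statistics import NormalDist

N01 = NormalDist()


def terza_mean(xb, zd, theta, rho):
    """E[y | x, d=1] = exp(x'b + theta^2/2) * Phi(z'd + theta*rho) / Phi(z'd)."""
    return math.exp(xb + theta**2 / 2) * N01.cdf(zd + theta * rho) / N01.cdf(zd)


def simulated_mean(xb, zd, theta, rho, draws=200_000, seed=7):
    """Average the Poisson mean exp(x'b + theta*u) over draws with z'd + eps > 0."""
    rng = random.Random(seed)
    total, kept = 0.0, 0
    for _ in range(draws):
        eps = rng.gauss(0, 1)
        u = rho * eps + math.sqrt(1 - rho**2) * rng.gauss(0, 1)  # Corr(u, eps) = rho
        if zd + eps > 0:  # selected: d = 1
            total += math.exp(xb + theta * u)  # E[y | u] = lambda
            kept += 1
    return total / kept


xb, zd, theta, rho = 0.2, 0.3, 0.8, 0.6
exact, sim = terza_mean(xb, zd, theta, rho), simulated_mean(xb, zd, theta, rho)
print(exact, sim)
```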
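Zabel – Economics Letters
- Inappropriate to have a mix of FE and RE models
- Two part solution: treat both effects as "fixed"; project both effects onto the group means of the variables in the equations
- The resulting model is two random effects equations
- Use both random effects

Selection with Fixed Effects
$$y_{it}^* = \alpha_i + x_{it}'\beta + \varepsilon_{it}, \quad \alpha_i = \bar x_i'\pi + \sigma_w w_i, \quad w_i \sim N[0,1]$$
$$d_{it}^* = \delta_i + z_{it}'\gamma + u_{it}, \quad \delta_i = \bar z_i'\theta + \sigma_v v_i, \quad v_i \sim N[0,1]$$
$$(\varepsilon_{it}, u_{it}) \sim N_2[(0, 0), (\sigma^2, 1, \rho\sigma)]$$
$$\begin{aligned} L_i = &\int_{-\infty}^{\infty}\prod_{d_{it}=0}\Phi(-z_{it}'\gamma - \bar z_i'\theta - \sigma_v v_i)\,\phi(v_i)\,dv_i \\ &\times\int\int\prod_{d_{it}=1}\Phi\left(\frac{z_{it}'\gamma + \bar z_i'\theta + \sigma_v v_i + (\rho/\sigma)\varepsilon_{it}}{\sqrt{1-\rho^2}}\right)\frac{1}{\sigma}\phi\left(\frac{\varepsilon_{it}}{\sigma}\right)\phi_2(v_i, w_i)\,dv_i\,dw_i \end{aligned}$$
$$\varepsilon_{it} = y_{it} - x_{it}'\beta - \bar x_i'\pi - \sigma_w w_i$$

Practical Complications
The bivariate normal integration is actually the product of two univariate normals because, in the specification above, $v_i$ and $w_i$ are assumed to be uncorrelated. Vella notes, however, "… given the computational demands of estimating by maximum likelihood induced by the requirement to evaluate multiple integrals, we consider the applicability of available simple, or two step procedures."

Simulation
The first line in the log likelihood is of the form $E_v[\prod_{d=0}\Phi(\ldots)]$ and the second line is of the form $E_w[E_v[\prod_{d=1}\Phi(\ldots)\phi(\ldots)/\sigma]]$. Using simulation instead, the simulated likelihood is
$$\begin{aligned} L_i^S = &\frac{1}{R}\sum_{r=1}^R\prod_{d_{it}=0}\Phi(-z_{it}'\gamma - \bar z_i'\theta - \sigma_v v_{i,r}) \\ &\times\frac{1}{R}\sum_{r=1}^R\prod_{d_{it}=1}\Phi\left(\frac{z_{it}'\gamma + \bar z_i'\theta + \sigma_v v_{i,r} + (\rho/\sigma)\varepsilon_{it,r}}{\sqrt{1-\rho^2}}\right)\frac{1}{\sigma}\phi\left(\frac{\varepsilon_{it,r}}{\sigma}\right) \end{aligned}$$
with $\varepsilon_{it,r} = y_{it} - x_{it}'\beta - \bar x_i'\pi - \sigma_w w_{i,r}$.

Correlated Effects
Suppose that $w_i$ and $v_i$ are bivariate standard normal with correlation $\rho_{vw}$. We can project $w_i$ on $v_i$ and write $w_i = \rho_{vw}v_i + (1-\rho_{vw}^2)^{1/2}h_i$, where $h_i$ has a standard normal distribution. To allow the correlation, we now simply substitute this expression for $w_i$ in the simulated (or original) log likelihood and add $\rho_{vw}$ to the list of parameters to be estimated. The simulation is then still over independent normal variates, $v_i$ and $h_i$.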
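The sketch below computes the simulated likelihood for one individual, including the correlated-effects substitution $w_i = \rho_{vw}v_i + (1-\rho_{vw}^2)^{1/2}h_i$. It assumes the index pieces $z_{it}'\gamma + \bar z_i'\theta$ and the residuals $y_{it} - x_{it}'\beta - \bar x_i'\pi$ have already been computed. The function and argument names are hypothetical. Following the slide, the $d = 0$ and $d = 1$ products are averaged separately, here over the same draws.

```python
import math
import random
from statistics import NormalDist

N01 = NormalDist()


def simulated_li(d, index, resid, sigma, rho, s_v, s_w, rho_vw, R=1000, seed=123):
    """
    Simulated likelihood L_i^S for one individual.
    d[t]     : 1 if period t is observed, else 0
    index[t] : z_it'gamma + zbar_i'theta (selection index without the effect)
    resid[t] : y_it - x_it'beta - xbar_i'pi (ignored when d[t] == 0)
    s_v, s_w : scale parameters sigma_v, sigma_w of the two effects
    rho_vw   : correlation between v_i and w_i
    """
    rng = random.Random(seed)
    part0 = part1 = 0.0
    for _ in range(R):
        v, h = rng.gauss(0, 1), rng.gauss(0, 1)
        w = rho_vw * v + math.sqrt(1 - rho_vw**2) * h  # projection of w on v
        p0 = p1 = 1.0
        for dt, a, r in zip(d, index, resid):
            a_r = a + s_v * v
            if dt == 0:
                p0 *= N01.cdf(-a_r)
            else:
                e = r - s_w * w  # eps_{it,r}
                arg = (a_r + rho * e / sigma) / math.sqrt(1 - rho**2)
                p1 *= N01.cdf(arg) * N01.pdf(e / sigma) / sigma
        part0 += p0
        part1 += p1
    return (part0 / R) * (part1 / R)


def correlated_draws(rho_vw, R=50_000, seed=5):
    """Draw (v, w) via w = rho_vw*v + sqrt(1 - rho_vw^2)*h; return the sample correlation."""
    rng = random.Random(seed)
    vs, ws = [], []
    for _ in range(R):
        v, h = rng.gauss(0, 1), rng.gauss(0, 1)
        vs.append(v)
        ws.append(rho_vw * v + math.sqrt(1 - rho_vw**2) * h)
    mv, mw = sum(vs) / R, sum(ws) / R
    cov = sum((a - mv) * (b - mw) for a, b in zip(vs, ws)) / R
    sv = math.sqrt(sum((a - mv) ** 2 for a in vs) / R)
    sw = math.sqrt(sum((b - mw) ** 2 for b in ws) / R)
    return cov / (sv * sw)


d, index, resid = [1, 1, 0, 1], [0.4, 0.1, -0.3, 0.6], [0.5, -0.2, 0.0, 0.9]
print(simulated_li(d, index, resid, sigma=1.2, rho=0.5, s_v=0.8, s_w=0.6, rho_vw=0.4))
print(correlated_draws(0.4))
```

When $\sigma_v = \sigma_w = 0$, every draw gives the same value, and the function reduces to the closed-form product with no integration.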
Conditional Means

A Feasible Estimator

Estimation

Kyriazidou – Semiparametrics
- Assume 2 periods
- Estimate the selection equation by FE logit
- Use first differences and weighted least squares:
$$\hat\beta = \left[\sum_{i=1}^N d_{i1}d_{i2}\,\hat\psi_i\,\Delta x_i\,\Delta x_i'\right]^{-1}\sum_{i=1}^N d_{i1}d_{i2}\,\hat\psi_i\,\Delta x_i\,\Delta y_i, \quad \hat\psi_i = \frac{1}{h}K\left(\frac{\Delta w_i'\hat\gamma}{h}\right), \quad K = \text{kernel function}$$
- Use with longer panels: any pairwise differences
- Extensions based on pairwise differences by Rochina-Barrachina and Dustmann/Rochina-Barrachina (1999)

Bias Corrections
Fernández-Val and Vella, 2007 (working paper)
- Assume fixed effects
- Bias-corrected probit estimator at the first step
- Use the fixed effects probit model to set up a second-step Heckman-style regression treatment

Postscript
What selection process is at work?
- All of the work examined here (and in the literature) assumes the selection operates anew in each period.
- An alternative scenario: selection into the panel, once, at baseline.
- Why aren't the time invariant components correlated? (Greene, 2007, NLOGIT development)

Other models
- All of the work on panel data selection assumes the main equation is a linear model.
- Any others? Discrete choice? Counts?

Attrition
In a panel, $t = 1,\ldots,T$, individual $i$ leaves the sample at time $K_i$ and does not return. If the determinants of attrition (especially the unobservables) are correlated with the variables in the equation of interest, then the now familiar problem of sample selection arises.

Application of a Two Period Model
"Hemoglobin and Quality of Life in Cancer Patients with Anemia," Finkelstein (MIT), Berndt (MIT), Greene (NYU), Cremieux (Univ. of Quebec), 1998
- With Ortho Biotech, seeking to change the labeling of the already approved drug erythropoietin (r-HuEPO)

QOL Study
- Quality of life study: $y_{it}$ = self-administered quality of life survey, scale = 0,…,100; $x_{it}$ = hemoglobin level, other covariates
- Treatment effects model (hemoglobin level)
- Background: r-HuEPO treatment to affect the Hg level
- Important statistical issues:
  - $i = 1,\ldots,1200+$ clinically anemic cancer patients undergoing chemotherapy, treated with transfusions and/or r-HuEPO
  - $t$ = 0 at baseline, 1 at exit (an interperiod survey taken by some patients was not used)
  - Unobservable individual effects
  - The placebo effect
  - Attrition: sample selection
  - FDA mistrust of "community based" (not clinical trial based) statistical evidence
- Objective: when to administer treatment for maximum marginal benefit

Dealing with Attrition
- The attrition issue: appearance for the second interview was low for people with initially low QOL (death or depression) or with initially high QOL (don't need the treatment). Thus, missing data at exit were clearly related to values of the dependent variable.
- Solutions to the attrition problem:
  - Heckman selection model (used in the study): Prob[present at exit | covariates] = $\Phi(z'\theta)$ (probit model); additional variable added to the difference model: $\lambda_i = \phi(z_i'\theta)/\Phi(z_i'\theta)$
  - The FDA solution: fill with zeros. (!)

An Early Attrition Model
Hausman, J. and Wise, D., "Attrition Bias in Experimental and Panel Data: The Gary Income Maintenance Experiment," Econometrica, 1979.
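A two period model.
Structural response model (random effects regression):
$$y_{i1} = x_{i1}'\beta + \varepsilon_{i1} + u_i$$
$$y_{i2} = x_{i2}'\beta + \varepsilon_{i2} + u_i$$
Attrition model for observation in the second period (probit):
$$z_{i2}^* = \delta y_{i2} + x_{i2}'\theta + w_{i2}'\alpha + v_{i2}, \quad z_{i2} = 1(z_{i2}^* > 0)$$
Endogeneity "problem":
$$\rho_{12} = \mathrm{Corr}[\varepsilon_{i1} + u_i, \varepsilon_{i2} + u_i] = \sigma_u^2/(\sigma_\varepsilon^2 + \sigma_u^2)$$
$$\rho = \mathrm{Corr}[v_{i2}, \varepsilon_{i2} + u_i], \qquad \mathrm{Corr}[v_{i2} + \delta(\varepsilon_{i2} + u_i), \varepsilon_{i2} + u_i]$$

Methods of Estimating the Attrition Model
- Heckman-style "selection" model
- Two-step maximum likelihood
- Full information maximum likelihood
- Two-step method of moments estimators
- Weighting schemes that account for the "survivor bias"

Selection Model
Reduced form probit model for the second period observation equation:
$$z_{i2}^* = x_{i2}'(\delta\beta + \theta) + w_{i2}'\alpha + [\delta(\varepsilon_{i2} + u_i) + v_{i2}] = r_{i2} + h_{i2}, \quad z_{i2} = 1(z_{i2}^* > 0)$$
Conditional means for observations observed in the second period:
$$E[y_{i2} \mid x_{i2}, z_{i2} = 1] = x_{i2}'\beta + \tau_2\frac{\phi(r_{i2})}{\Phi(r_{i2})}$$
First period conditional means for observations observed in the second period:
$$E[y_{i1} \mid x_{i1}, z_{i2} = 1] = x_{i1}'\beta + \tau_1\frac{\phi(r_{i2})}{\Phi(r_{i2})}$$
Here $\tau_2$ and $\tau_1$ are the covariances of $\varepsilon_{i2} + u_i$ and $\varepsilon_{i1} + u_i$, respectively, with the standardized reduced form disturbance $h_{i2}$.
(1) Estimate the probit equation.
(2) Combine these two equations with a period dummy variable, and use OLS with a constructed regressor in the second period.
THE TWO DISTURBANCES ARE CORRELATED. TREAT THIS AS A SUR MODEL. (EQUIVALENT TO MDE)

Maximum Likelihood
$$\begin{aligned} \log L = \sum_i \Bigg\{ &-\frac{1}{2}\left[\log 2\pi + \log\sigma^2 + \frac{(y_{i1} - x_{i1}'\beta)^2}{\sigma^2}\right] \\ &+ z_{i2}\left[-\frac{1}{2}\left(\log 2\pi + \log\sigma^2 + \log(1-\rho_{12}^2) + \frac{[(y_{i2} - \rho_{12}y_{i1}) - (x_{i2} - \rho_{12}x_{i1})'\beta]^2}{\sigma^2(1-\rho_{12}^2)}\right) + \log\Phi\left(\frac{r_{i2} + (\rho/\sigma)(y_{i2} - x_{i2}'\beta)}{\sqrt{1-\rho^2}}\right)\right] \\ &+ (1 - z_{i2})\log\Phi\left(-\frac{r_{i2} + (\rho\rho_{12}/\sigma)(y_{i1} - x_{i1}'\beta)}{\sqrt{1-\rho^2\rho_{12}^2}}\right)\Bigg\} \end{aligned}$$
where $\sigma^2 = \sigma_\varepsilon^2 + \sigma_u^2$ and $\rho$ is the correlation of the standardized reduced form disturbance with $\varepsilon_{i2} + u_i$.
(1) See H&W for FIML estimation.
(2) Use the invariance principle to reparameterize.
(3) Estimate separately and use a two-step ML with the Murphy and Topel correction of the asymptotic covariance matrix.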
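Two pieces of the Hausman–Wise structure are easy to check by simulation. The first is the within-person correlation $\rho_{12} = \sigma_u^2/(\sigma_\varepsilon^2 + \sigma_u^2)$. The second is that selecting on the period-2 outcome also shifts the period-1 disturbances of the stayers, which is why a selection term appears in the first period conditional mean. The sketch assumes $\sigma_\varepsilon = \sigma_u = 1$. For simplicity, the attrition index depends only on the period-2 disturbance plus independent standard normal noise, with no covariates; that is a simplifying assumption, not the H&W specification.

```python
import math
import random
from statistics import NormalDist


def rho12(sigma_eps, sigma_u):
    """Corr[eps_i1 + u_i, eps_i2 + u_i] in the random effects model."""
    return sigma_u**2 / (sigma_eps**2 + sigma_u**2)


def simulate(n=100_000, sigma_eps=1.0, sigma_u=1.0, seed=11):
    """Return the sample correlation of the two disturbances and the stayers' mean period-1 disturbance."""
    rng = random.Random(seed)
    e1s, e2s, stay_e1 = [], [], []
    for _ in range(n):
        u = rng.gauss(0, sigma_u)
        e1 = rng.gauss(0, sigma_eps) + u  # period-1 composite disturbance
        e2 = rng.gauss(0, sigma_eps) + u  # period-2 composite disturbance
        e1s.append(e1)
        e2s.append(e2)
        if e2 + rng.gauss(0, 1) > 0:  # present in period 2 (z_i2 = 1)
            stay_e1.append(e1)
    m1, m2 = sum(e1s) / n, sum(e2s) / n
    cov = sum((a - m1) * (b - m2) for a, b in zip(e1s, e2s)) / n
    v1 = sum((a - m1) ** 2 for a in e1s) / n
    v2 = sum((b - m2) ** 2 for b in e2s) / n
    return cov / math.sqrt(v1 * v2), sum(stay_e1) / len(stay_e1)


corr_sim, mean_e1_stayers = simulate()
# Theory: E[e1 | eta > 0] = Cov(e1, eta)/Var(eta) * E[eta | eta > 0], with eta = e2 + noise
var_eta = 2.0 + 1.0  # Var(e2) + Var(noise) when sigma_eps = sigma_u = 1
theory = (1.0 / var_eta) * math.sqrt(var_eta) * NormalDist().pdf(0) / 0.5
print(rho12(1.0, 1.0), corr_sim)
print(theory, mean_e1_stayers)  # the stayers' period-1 disturbance is not mean zero
```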
A Model of Attrition
Nijman and Verbeek, Journal of Applied Econometrics, 1992
- Consumption survey (Holland, 1984–1986)
- Exogenous selection for participation (rotating panel)
- Voluntary participation (missing not at random: attrition)

Attrition Model
The main equation:
$$y_{i,t} = \beta_0 + x_{i,t}'\beta + \alpha_i + \varepsilon_{i,t} \quad \text{(random effects consumption function)}$$
$$\alpha_i = \bar x_i'\gamma + u_i \quad \text{(Mundlak device; } u_i \text{ uncorrelated with } X_i\text{)}$$
$$y_{i,t} = \beta_0 + x_{i,t}'\beta + \bar x_i'\gamma + u_i + \varepsilon_{i,t} \quad \text{(reduced form random effects model)}$$
The selection mechanism:
- $a_{it} = 1$[individual $i$ asked to participate in period $t$]. Purely exogenous: $a_{it}$ may depend on observables, but does not depend on unobservables.
- $r_{it} = 1$[individual $i$ chooses to participate if asked]. Endogenous: $r_{it}$ is the endogenous participation dummy variable.
- $a_{it} = 0 \Rightarrow r_{it} = 0$; when $a_{it} = 1$, the selection mechanism operates.

Selection Equation
The main equation: $y_{i,t} = \beta_0 + x_{i,t}'\beta + \bar x_i'\gamma + u_i + \varepsilon_{i,t}$ (reduced form random effects model).
The selection mechanism: $a_{it}$ and $r_{it}$ as above, with
$$r_{it} = 1[\psi_0 + x_{i,t}'\psi_1 + \bar x_i'\psi_2 + z_{i,t}'\psi_3 + v_i + w_{i,t} > 0], \text{ all observed if } a_{it} = 1$$
- State dependence: $z$ may include $r_{i,t-1}$.
- Latent persistent unobserved heterogeneity: $\sigma_v^2 > 0$.
- "Selection" arises if $\mathrm{Cov}[\varepsilon_{i,t}, w_{i,t}] \neq 0$ or $\mathrm{Cov}[u_i, v_i] \neq 0$.

Estimation Using One Wave
- Use any single wave as a cross section with observed lagged values.
- Advantage: familiar sample selection model
- Disadvantages: loss of efficiency; "One can no longer distinguish between state dependence and unobserved heterogeneity."

One Wave Model
A standard sample selection model:
$$y_{it} = \beta_0 + x_{it}'\beta + \bar x_i'\gamma + (u_i + \varepsilon_{it})$$
$$r_{it} = 1[\psi_0 + x_{it}'\psi_1 + \bar x_i'\psi_2 + \kappa_1 r_{i,t-1} + \kappa_2 a_{i,t-1} + (v_i + w_{it}) > 0]$$
With only one period of data and $r_{i,t-1}$ exogenous, this is the Heckman sample selection model. If $\sigma_v^2 > 0$, then $r_{i,t-1}$ is correlated with $v_i$ and the Heckman approach fails.
An assumption is required: (1) include $r_{i,t-1}$ and assume no unobserved heterogeneity, or (2) exclude $r_{i,t-1}$ and assume there is no state dependence. In either case, if $\mathrm{Cov}[(u_i + \varepsilon_{it}), (v_i + w_{it})] = 0$, we can use OLS. Otherwise, use the maximum likelihood estimator.

Maximum Likelihood Estimation
- See Zabel's model (Selection with Fixed Effects, above).
- Numerical integration in one or two dimensions is required for every individual in the sample at each iteration of a high dimensional numerical optimization. This is feasible, but not computationally attractive.
- The dimensionality of the optimization is irrelevant.
- This is much easier in 2008 than it was in 1992 (especially with simulation).
- The authors did the computations with Hermite quadrature.

Testing for Selection?
- Maximum likelihood results: covariances were highly insignificant; LR statistic = 0.46.
- Two-step results produced the same conclusion based on a Hausman test.
- The ML estimation results looked like the two-step results.

A Dynamic Ordered Probit Model

Random Effects Dynamic Ordered Probit Model
$$h_{it}^* = x_{it}'\beta + \sum_{m=1}^J \gamma_m h_{i,t-1}(m) + \alpha_i + \varepsilon_{i,t}$$
$$h_{i,t} = j \text{ if } \mu_{j-1} < h_{it}^* \le \mu_j; \qquad h_{i,t}(j) = 1 \text{ if } h_{i,t} = j \text{ (0 otherwise)}$$
$$P_{it,j} = P[h_{it} = j] = \Phi\left(\mu_j - x_{it}'\beta - \sum_{m=1}^J\gamma_m h_{i,t-1}(m) - \alpha_i\right) - \Phi\left(\mu_{j-1} - x_{it}'\beta - \sum_{m=1}^J\gamma_m h_{i,t-1}(m) - \alpha_i\right)$$
Parameterize the random effects:
$$\alpha_i = \alpha_0 + \sum_{m=1}^J \alpha_{1,m} h_{i,1}(m) + \bar x_i'\delta + u_i$$
Simulation or quadrature based estimation:
$$\ln L = \sum_{i=1}^N \ln\int\prod_{t=1}^{T_i} P_{it,j}\, f(u_i)\, du_i$$

A Study of Health Status in the Presence of Attrition
"The Dynamics of Health in the British Household Panel Survey," Contoyannis, P., Jones, A. and Rice, N., Journal of Applied Econometrics, 19, 2004, pp. 473–503.
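The likelihood of the random effects dynamic ordered probit above can be simulated. For each draw of $u_i$, take the product over periods of $P_{it,j}$, then average over the draws. This is a minimal sketch under several assumptions:
- The index passed in already contains $x_{it}'\beta$, the lagged-outcome terms and the non-random part of $\alpha_i$, so only $\sigma_u u_i$ is simulated.
- The cutpoints and the single-individual history are made-up values.
- The function names are hypothetical.

```python
import math
import random
from statistics import NormalDist

N01 = NormalDist()


def ordered_probs(index, cuts):
    """P[h = j] for j = 0..len(cuts), with increasing cutpoints and mu at -inf / +inf on the ends."""
    edges = [-math.inf] + list(cuts) + [math.inf]
    cdf = [0.0 if e == -math.inf else 1.0 if e == math.inf else N01.cdf(e - index)
           for e in edges]
    return [cdf[j + 1] - cdf[j] for j in range(len(cuts) + 1)]


def simulated_loglik_i(outcomes, indexes, cuts, sigma_u, R=500, seed=3):
    """ln[(1/R) sum_r prod_t P(h_it = observed j | index_it + sigma_u * u_r)]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(R):
        u = rng.gauss(0, 1)  # draw of the random effect u_i
        prod = 1.0
        for j, idx in zip(outcomes, indexes):
            prod *= ordered_probs(idx + sigma_u * u, cuts)[j]
        total += prod
    return math.log(total / R)


cuts = [0.0, 0.8, 1.7]  # four ordered categories: 0, 1, 2, 3
probs = ordered_probs(0.5, cuts)
print(probs, sum(probs))  # the probabilities sum to one
outcomes, indexes = [2, 2, 1, 3], [0.9, 1.1, 0.7, 1.4]
print(simulated_loglik_i(outcomes, indexes, cuts, sigma_u=0.7))
```

Quadrature, as the slide notes, would replace the random draws with fixed nodes and weights, with the same product-then-average structure.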
- Self-assessed health
- British Household Panel Survey (BHPS), 1991–1998 = 8 waves
- About 5,000 households

Attrition

Testing for Attrition Bias
Three dummy variables added to the full model with the unbalanced panel suggest the presence of attrition effects.

Attrition Model with IP Weights
Assumes:
(1) Prob(attrition | all data) = Prob(attrition | selected variables) (ignorability)
(2) Attrition is an "absorbing state": no reentry. Obviously not true for the GSOEP data above.
Point (2) can be dealt with by isolating a subsample of those present at wave 1 and the monotonically shrinking subsample as the waves progress.

Probability Weighting Estimators
A patch for attrition:
(1) Fit a participation probit equation for each wave.
(2) Compute p(i,t) = predictions of participation for each individual in each period.
Special assumptions are needed to make this work.
Ignore common effects and fit a weighted pooled log likelihood: $\sum_i\sum_t [d_{it}/p(i,t)]\log L_{P,it}$.

Inverse Probability Weighting
- The panel is based on those present at wave 1, $N_1$ individuals.
- Attrition is an absorbing state. No reentry, so $N_1 \ge N_2 \ge \ldots \ge N_8$.
- The sample is restricted at each wave to individuals who were present at the previous wave.
- $d_{it} = 1$[individual is present at wave $t$]; $d_{i1} = 1\ \forall i$; $d_{it} = 0 \Rightarrow d_{i,t+1} = 0$.
- $x_{i1}$ = covariates observed for all $i$ at entry that relate to the likelihood of being present at subsequent waves (health problems, disability, psychological well-being, self employment, unemployment, maternity leave, student, caring for a family member, ...).
- Probit model for $d_{it} = 1[x_{i1}'\theta_t + w_{it} > 0]$, $t = 2,\ldots,8$; $\hat\pi_{it}$ = fitted probability.
- Assuming attrition decisions are independent, $\hat P_{it} = \prod_{s=1}^t \hat\pi_{is}$.
- Inverse probability weight: $\hat W_{it} = d_{it}/\hat P_{it}$.
- Weighted log likelihood: $\log L_W = \sum_{i=1}^N\sum_{t=1}^8 \hat W_{it}\log L_{it}$ (no common effects).

Spatial Autocorrelation in a Sample Selection Model
Flores-Lagunes, A. and Schnier, K., "Sample Selection and Spatial Dependence," Journal of Applied Econometrics, 27, 2, 2012, pp. 173–204.
- Alaska Department of Fish and Game
- Pacific cod fishing, eastern Bering Sea: a grid of locations
- Observation = "catch per unit effort" in a grid square
- Data reported only if 4+ similar vessels fish in the region
- 1997 sample = 320 observations, with 207 reporting full data

Variables:
- LHS is catch per unit effort = CPUE
- Site characteristics: MaxDepth, MinDepth, Biomass
- Fleet characteristics: Catcher vessel (CV = 0/1), Hook and line (HAL = 0/1), Nonpelagic trawl gear (NPT = 0/1), Large (at least 125 feet) (Large = 0/1)

Model:
$$y_{i1}^* = \beta_0 + x_{i1}'\beta + u_{i1}, \quad u_{i1} = \rho_1\sum_{j\neq i} c_{ij}u_{j1} + \varepsilon_{i1}$$
$$y_{i2}^* = \gamma_0 + x_{i2}'\gamma + u_{i2}, \quad u_{i2} = \rho_2\sum_{j\neq i} c_{ij}u_{j2} + \varepsilon_{i2}$$
$$\begin{pmatrix}\varepsilon_{i1}\\ \varepsilon_{i2}\end{pmatrix} \sim N\left[\begin{pmatrix}0\\ 0\end{pmatrix}, \begin{pmatrix}1 & \sigma_{12}\\ \sigma_{12} & \sigma_2^2\end{pmatrix}\right]$$
Observation mechanism: $y_{i1} = 1[y_{i1}^* > 0]$ (probit model); $y_{i2} = y_{i2}^*$ if $y_{i1} = 1$, unobserved otherwise.

$$u_1 = \rho_1 C u_1 + \varepsilon_1, \quad C = \text{spatial weight matrix}, \ C_{ii} = 0$$
$$u_1 = [I - \rho_1 C]^{-1}\varepsilon_1 = \Omega^{(1)}\varepsilon_1, \text{ likewise } u_2 = [I - \rho_2 C]^{-1}\varepsilon_2 = \Omega^{(2)}\varepsilon_2$$
$$y_{i1}^* = \beta_0 + x_{i1}'\beta + \sum_{j=1}^N\omega_{ij}^{(1)}\varepsilon_{j1}, \quad \mathrm{Var}[u_{i1}] = \sum_{j=1}^N\left(\omega_{ij}^{(1)}\right)^2$$
$$y_{i2}^* = \gamma_0 + x_{i2}'\gamma + \sum_{j=1}^N\omega_{ij}^{(2)}\varepsilon_{j2}, \quad \mathrm{Var}[u_{i2}] = \sigma_2^2\sum_{j=1}^N\left(\omega_{ij}^{(2)}\right)^2$$
$$\mathrm{Cov}[u_{i1}, u_{i2}] = \sigma_{12}\sum_{j=1}^N\omega_{ij}^{(1)}\omega_{ij}^{(2)}$$

Spatial Weights
$$c_{ij} = \frac{1}{d_{ij}^2}, \quad d_{ij} = \text{Euclidean distance}$$
- A band of 7 neighbors is used
- Row standardized

Two Step Estimation
- Probit estimated by Pinkse/Slade GMM
- Spatial regression with the included IMR in the second step, where the IMR term is
$$\frac{\sigma_{12}\sum_{j=1}^N\omega_{ij}^{(1)}\omega_{ij}^{(2)}}{\sqrt{\sum_{j=1}^N\left(\omega_{ij}^{(1)}\right)^2}}\cdot\frac{\phi(a_i)}{\Phi(a_i)}, \quad a_i = \frac{\beta_0 + x_{i1}'\beta}{\sqrt{\sum_{j=1}^N\left(\omega_{ij}^{(1)}\right)^2}}$$
- (*) The GMM procedure combines the two steps in one large estimation.
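The spatial weight matrix described above can be built as follows: inverse squared Euclidean distance, a band of 7 neighbors, row standardized. This sketch assumes that "band of 7 neighbors" means each site's 7 nearest other sites. The coordinates are random placeholders, not the Bering Sea grid, and the function name is hypothetical.

```python
import math
import random


def spatial_weights(points, k=7):
    """Row-standardized C with c_ij proportional to 1/d_ij^2 for the k nearest neighbors of i; C_ii = 0."""
    n = len(points)
    C = [[0.0] * n for _ in range(n)]
    for i, (xi, yi) in enumerate(points):
        dists = sorted(
            (math.hypot(xi - xj, yi - yj), j)
            for j, (xj, yj) in enumerate(points) if j != i
        )
        for dist, j in dists[:k]:
            C[i][j] = 1.0 / dist**2  # inverse squared Euclidean distance
        row_sum = sum(C[i])
        C[i] = [c / row_sum for c in C[i]]  # row standardization
    return C


random.seed(42)
sites = [(random.uniform(0, 10), random.uniform(0, 10)) for _ in range(30)]
C = spatial_weights(sites)
print(sum(C[0]), sum(1 for c in C[0] if c > 0))
```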
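Given $C$, the heteroscedastic variances and the cross-equation covariance in the spatial selection model depend on $\Omega = (I - \rho C)^{-1}$. This minimal sketch assumes $|\rho| < 1$. With a row-standardized $C$, that assumption lets $\Omega$ be computed from the Neumann series $\sum_k (\rho C)^k$. The series is a substitute for direct inversion, chosen to stay within the standard library, and is only practical for small illustrative $N$. The function names are hypothetical. The last function evaluates the second-step IMR term.

```python
import math
from statistics import NormalDist

N01 = NormalDist()


def omega(C, rho, terms=200):
    """(I - rho*C)^{-1} via the Neumann series sum_k (rho*C)^k; needs |rho| < 1 and row-standardized C."""
    n = len(C)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    power = [row[:] for row in result]  # current (rho*C)^k, starting at k = 0
    for _ in range(terms):
        power = [[rho * sum(power[i][m] * C[m][j] for m in range(n)) for j in range(n)]
                 for i in range(n)]
        result = [[result[i][j] + power[i][j] for j in range(n)] for i in range(n)]
    return result


def spatial_moments(om1, om2, sigma2_sq, sigma12, i):
    """Var[u_i1], Var[u_i2], Cov[u_i1, u_i2] for observation i."""
    v1 = sum(w**2 for w in om1[i])
    v2 = sigma2_sq * sum(w**2 for w in om2[i])
    c12 = sigma12 * sum(a * b for a, b in zip(om1[i], om2[i]))
    return v1, v2, c12


def selection_term(index_i, om1, om2, sigma12, i):
    """Cov[u_i1, u_i2]/sd(u_i1) * phi(a)/Phi(a), with a = (beta0 + x_i1'beta)/sd(u_i1)."""
    v1, _, c12 = spatial_moments(om1, om2, 1.0, sigma12, i)
    s1 = math.sqrt(v1)
    a = index_i / s1
    return (c12 / s1) * N01.pdf(a) / N01.cdf(a)


C2 = [[0.0, 1.0], [1.0, 0.0]]  # two sites, each the other's only neighbor
om = omega(C2, 0.5)
print(om)  # approximately [[4/3, 2/3], [2/3, 4/3]]
print(spatial_moments(om, om, 2.0, 0.3, 0))
print(selection_term(0.4, om, om, 0.3, 0))
```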
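This note returns to the inverse probability weighting scheme for attrition. With fitted wave-by-wave participation probabilities $\hat\pi_{it}$, the cumulative probability is $\hat P_{it} = \prod_{s \le t}\hat\pi_{is}$ and the weight is $\hat W_{it} = d_{it}/\hat P_{it}$. The sketch uses made-up probabilities, with $\hat\pi_{i1} = 1$ because everyone is present at wave 1. The function names are hypothetical, and the wave-by-wave probit step that produces $\hat\pi_{it}$ is not shown.

```python
import math


def ipw_weights(pi_hat, present):
    """W_it = d_it / prod_{s<=t} pi_hat_is for one individual's waves (absorbing attrition)."""
    if any(a == 0 and b == 1 for a, b in zip(present, present[1:])):
        raise ValueError("attrition must be an absorbing state (no reentry)")
    weights, cum = [], 1.0
    for p, d in zip(pi_hat, present):
        cum *= p  # P_it = product of the conditional participation probabilities
        weights.append(d / cum if d else 0.0)
    return weights


def weighted_loglik(loglik_it, weights):
    """Weighted pooled contribution sum_t W_it * log L_it; absent waves (W = 0) are skipped."""
    return sum(w * ll for w, ll in zip(weights, loglik_it) if w > 0)


w = ipw_weights([1.0, 0.8, 0.5], [1, 1, 1])
print(w)
print(ipw_weights([1.0, 0.8, 0.5], [1, 1, 0]))
print(weighted_loglik([math.log(0.6), math.log(0.7), math.log(0.4)], w))
```

Survivors who were unlikely to remain get larger weights and stand in for similar individuals who dropped out. This only works under the ignorability assumption (1) above.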