Spike Train Statistics


Sabri

IPM

Review of spike trains

Extracting information from spike trains

Noisy environment:

in vitro

in vivo

measurement

unknown inputs and states

what kind of code:

rate: rate coding (bunch of spikes)

spike time : temporal coding (individual spikes)

[Dayan and Abbott, 2001]

Non-parametric Methods

[Figure labels: recording, stimulus, repeated trials]

Information is in the difference of firing rates over time

Firing rate estimation methods:

• PSTH

• Kernel density function

[Figure labels: stimulus onset]
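A minimal sketch of the two estimators named above, assuming spike times are given in seconds relative to stimulus onset. The PSTH counts spikes in fixed bins and averages over trials; the kernel estimate replaces each spike with a smooth bump. The function names, the Gaussian kernel, the bin width and the toy spike times are illustrative assumptions, not taken from the slides.

```python
import math

def psth(trials, t_start, t_stop, bin_width):
    """Trial-averaged firing rate (spikes/s) in fixed bins aligned to stimulus onset."""
    n_bins = int(round((t_stop - t_start) / bin_width))
    counts = [0] * n_bins
    for spikes in trials:
        for t in spikes:
            k = int((t - t_start) // bin_width)
            if 0 <= k < n_bins:
                counts[k] += 1
    # Normalise by number of trials and bin width to get a rate.
    return [c / (len(trials) * bin_width) for c in counts]

def kernel_rate(trials, times, sigma):
    """Gaussian-kernel rate estimate (spikes/s) evaluated at the given times."""
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi) * len(trials))
    return [
        norm * sum(math.exp(-0.5 * ((t - s) / sigma) ** 2)
                   for spikes in trials for s in spikes)
        for t in times
    ]

# Hypothetical data: spike times (s) after stimulus onset, three repeated trials.
trials = [[0.012, 0.050, 0.110], [0.020, 0.055], [0.048, 0.052, 0.130, 0.180]]
rate_psth = psth(trials, 0.0, 0.2, 0.05)
rate_kernel = kernel_rate(trials, [0.05, 0.15], sigma=0.02)
```

The bin width and the kernel width play the same role: both trade temporal resolution against noise in the estimate.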

Parametric Methods

[Figure labels: recording, stimulus, repeated trials, stimulus onset]

Fitting a distribution P with parameter set $\theta_1, \theta_2, \ldots, \theta_m$

Parameter estimation methods:

• ML – Maximum likelihood

• MAP – Maximum a posteriori

• EM – Expectation Maximization

Two different sets of parameter values, one for each of the two raster plots

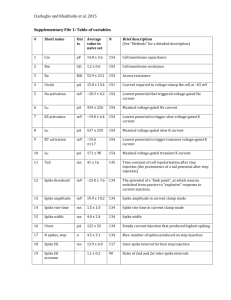
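To make the ML and MAP estimators concrete, here is a small sketch for the simplest case: a single parameter, the rate r of a homogeneous Poisson model, estimated from spike counts in windows of length T. The Gamma prior used for the MAP estimate, the function names and the counts are assumptions chosen for illustration.

```python
import math

def poisson_log_likelihood(r, counts, T):
    """Log-likelihood of rate r (spikes/s) given spike counts in windows of length T."""
    mu = r * T
    return sum(n * math.log(mu) - mu - math.lgamma(n + 1) for n in counts)

def rate_ml(counts, T):
    """Maximum-likelihood rate: total spikes divided by total observation time."""
    return sum(counts) / (len(counts) * T)

def rate_map(counts, T, shape, prior_rate):
    """MAP rate under an assumed Gamma(shape, prior_rate) prior on r.

    The posterior is Gamma(sum(counts) + shape, K*T + prior_rate); this is its mode.
    """
    return (sum(counts) + shape - 1.0) / (len(counts) * T + prior_rate)

# Hypothetical spike counts from five trials of 1 s each.
counts = [9, 11, 10, 12, 8]
T = 1.0
r_ml = rate_ml(counts, T)
r_map = rate_map(counts, T, shape=3.0, prior_rate=0.5)
```

With many trials the prior matters less and the MAP estimate approaches the ML estimate.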

Models based on distributions: definitions & symbols

Fitting distributions to spike trains:

P[ ]: probability of an event (a single spike)

p[ ]: probability density function

Probability corresponding to every sequence of spikes that can be evoked by the stimulus:

Joint probability of n events at specified times:

$P[t_1, t_2, \ldots, t_n] = p[t_1, t_2, \ldots, t_n]\,(\Delta t)^n$

where $\Delta t$ is the width of the small interval around each spike time

Spike time: $t_i$

Discrete random processes

Point Processes:

The probability of an event can depend on the entire history of preceding events

Renewal Processes

The dependence extends only to the immediately preceding event

Poisson Processes

If there is no dependence at all on preceding events

[Figure labels: time axis $t$ with spikes at $t_{i-1}$ and $t_i$]

Firing rate: $r(t)$

The probability of firing a single spike in a small interval $\Delta t$ around $t_i$ is $r(t_i)\,\Delta t$

The firing rate is generally not sufficient to predict the probability of a spike sequence

If the probability of generating a spike is independent of the presence or timing of other spikes, the firing rate is all we need to compute the probabilities of all possible spike sequences

[Figure label: repeated trials]

Homogeneous Distributions: firing rate is considered constant over time

Inhomogeneous Distributions: firing rate is considered to be time dependent
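The independent-spike assumption can be sketched directly: divide time into small bins of width dt and fire in each bin, independently, with probability r(t) dt. This is only an approximation that requires r(t) dt to be much smaller than 1. The rate functions, the bin width and the function names below are hypothetical.

```python
import math
import random

def simulate_spikes(rate_fn, T, dt, rng):
    """Independent bins: a spike occurs in [t, t + dt) with probability rate_fn(t) * dt."""
    spikes = []
    n_bins = int(round(T / dt))
    for k in range(n_bins):
        t = k * dt
        if rng.random() < rate_fn(t) * dt:
            spikes.append(t)
    return spikes

def constant_rate(t):
    """Homogeneous case: firing rate constant over time (spikes/s)."""
    return 20.0

def modulated_rate(t):
    """Inhomogeneous case: firing rate depends on time (spikes/s)."""
    return 20.0 * (1.0 + math.sin(2.0 * math.pi * t))

rng = random.Random(0)
hom = simulate_spikes(constant_rate, T=10.0, dt=0.001, rng=rng)
inh = simulate_spikes(modulated_rate, T=10.0, dt=0.001, rng=rng)
```

Both trains have the same mean rate over the 10 s window; only the second carries information in how the rate changes over time.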

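Homogeneous Poisson Process

Poisson: each event is independent of the others

Homogeneous: $r(t) = r$, the firing rate is constant during the period $T$

Each sequence probability:

[Figure: time axis from 0 to $T$ with spikes at $t_1, \ldots, t_i, \ldots, t_n$]

$P[t_1, t_2, \ldots, t_n] = P[t_1', t_2', \ldots, t_n'] = n!\,P_T[n]\left(\frac{\Delta t}{T}\right)^n$

(every sequence of n spike times in [0, T] is equally likely)

$P_T[n] = \frac{(rT)^n}{n!}\,e^{-rT}$: probability of n events in [0, T]

[Figure: $P_T[n]$ for $rT = 10$] [Dayan and Abbott, 2001]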

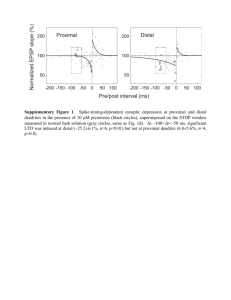
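As a quick numerical check of the count distribution, the sketch below simulates homogeneous Poisson trains with rT = 10 and compares the fraction of trials with exactly 10 spikes to the formula for the probability of n events in [0, T]. Generating trains from independent exponential intervals relies on the exponential interspike-interval property of this process. The names and the number of trials are illustrative assumptions.

```python
import math
import random

def poisson_pmf(n, r, T):
    """Probability of n events in [0, T] for a homogeneous Poisson process of rate r."""
    return (r * T) ** n / math.factorial(n) * math.exp(-r * T)

def homogeneous_poisson_train(r, T, rng):
    """Spike times on [0, T) built from independent exponential intervals."""
    spikes, t = [], rng.expovariate(r)
    while t < T:
        spikes.append(t)
        t += rng.expovariate(r)
    return spikes

rng = random.Random(1)
r, T, n_trials = 10.0, 1.0, 5000
counts = [len(homogeneous_poisson_train(r, T, rng)) for _ in range(n_trials)]

# Empirical fraction of trials with exactly 10 spikes vs. the formula.
empirical_p10 = counts.count(10) / n_trials
predicted_p10 = poisson_pmf(10, r, T)
```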

Fano Factor

Distribution Fitting validation

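The ratio of the variance to the mean of the spike count: $F = \sigma_n^2 / \langle n \rangle$

For the homogeneous Poisson model:

$\sigma_n^2 = \langle n \rangle = rT$, so $F = \sigma_n^2 / \langle n \rangle = 1$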

MT neurons in alert macaque monkeys responding to moving visual images

(spike counts for a 256 ms counting period, 94 cells recorded under a variety of stimulus conditions)

[Dayan and Abbott, 2001]
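A short sketch of how the Fano factor could be computed from repeated-trial spike counts. The counts here come from a simulated homogeneous Poisson process, so the result should be close to 1. The rate of 40 spikes/s is a hypothetical value, and the 256 ms window simply mirrors the counting period quoted above.

```python
import random
import statistics

def poisson_count(r, T, rng):
    """Number of spikes in [0, T) for a homogeneous Poisson process of rate r."""
    n, t = 0, rng.expovariate(r)
    while t < T:
        n += 1
        t += rng.expovariate(r)
    return n

def fano_factor(counts):
    """Variance of the spike count divided by its mean."""
    return statistics.pvariance(counts) / statistics.mean(counts)

rng = random.Random(2)
r, T = 40.0, 0.256  # hypothetical rate (spikes/s) and counting window (s)
counts = [poisson_count(r, T, rng) for _ in range(4000)]
F = fano_factor(counts)
```

For recorded data, `counts` would hold one spike count per trial for the same counting window.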

Interspike Interval (ISI) distribution

Distribution Fitting validation

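The probability density of time intervals between adjacent spikes

Interspike interval: $\tau = t_{i+1} - t_i$

For the homogeneous Poisson model:

$P[\tau \le t_{i+1} - t_i < \tau + \Delta t] = r\,\Delta t\,e^{-r\tau}$

$p[\tau] = r\,e^{-r\tau}$

[Figure: ISI distributions for an MT neuron and for a Poisson model with a stochastic refractory period] [Dayan and Abbott, 2001]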
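The following sketch estimates the ISI density from a simulated homogeneous Poisson train and compares it with the exponential density for that model, evaluated at the bin centres. The rate, duration, bin width and helper names are illustrative assumptions.

```python
import math
import random

def interspike_intervals(spikes):
    """Differences between adjacent spike times."""
    return [b - a for a, b in zip(spikes, spikes[1:])]

def isi_density(intervals, bin_width, n_bins):
    """Histogram of ISIs normalised to a probability density (1/s)."""
    counts = [0] * n_bins
    for tau in intervals:
        k = int(tau // bin_width)
        if k < n_bins:
            counts[k] += 1
    return [c / (len(intervals) * bin_width) for c in counts]

rng = random.Random(3)
r, T = 20.0, 500.0  # hypothetical rate (spikes/s) and duration (s)
spikes, t = [], rng.expovariate(r)
while t < T:
    spikes.append(t)
    t += rng.expovariate(r)

intervals = interspike_intervals(spikes)
bin_width = 0.01
density = isi_density(intervals, bin_width, n_bins=30)
# Exponential density r * exp(-r * tau) at each bin centre, for comparison.
predicted = [r * math.exp(-r * (k + 0.5) * bin_width) for k in range(30)]
```

For real neurons the shortest intervals are typically missing from the histogram because of refractoriness, which is where the pure Poisson model fits worst.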

Coefficient of variation

Distribution Fitting validation

In the ISI distribution: $C_v = \sigma_\tau / \langle \tau \rangle$

For the homogeneous Poisson process: $C_v = 1$, a necessary but not sufficient condition for identifying a Poisson spike train

For any renewal process, the Fano factor over long time intervals approaches $C_v^2$

Coefficient of variation for V1 and MT neurons compared to a Poisson model with a refractory period:

[Dayan and Abbott, 2001]
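A small sketch of the coefficient of variation for two simulated interval sets: pure exponential ISIs, where it should be near 1, and exponential ISIs with a fixed dead time added, where it falls below 1. The fixed dead time is a simplifying assumption; the model in the figure uses a stochastic refractory period. For a dead time d and rate r the expected value is 1 / (1 + r d), which is 0.8 for the hypothetical values used here.

```python
import random
import statistics

def coefficient_of_variation(intervals):
    """Standard deviation of the ISIs divided by their mean."""
    return statistics.pstdev(intervals) / statistics.mean(intervals)

rng = random.Random(4)
r, dead_time, n = 50.0, 0.005, 20000  # hypothetical rate (1/s), dead time (s), sample size

# Homogeneous Poisson process: exponential ISIs.
poisson_isis = [rng.expovariate(r) for _ in range(n)]
# Same process with a fixed refractory (dead) time after each spike.
refractory_isis = [dead_time + rng.expovariate(r) for _ in range(n)]

cv_poisson = coefficient_of_variation(poisson_isis)
cv_refractory = coefficient_of_variation(refractory_isis)
```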

Renewal Processes

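For Poisson processes: $P[t < t_i \le t + \Delta t] = r\,\Delta t$

For renewal processes: $P[t < t_i \le t + \Delta t] = H(t - t_0)\,\Delta t$

in which $t_0$ is the time of the last spike

and $H$ is the hazard function

By these definitions the ISI distribution is:

$p[\tau] = H(\tau)\exp\left(-\int_0^{\tau} H(\tau')\,d\tau'\right)$

Commonly used renewal processes (with mean rate $R$):

Gamma process (an often used non-Poisson process):

$p[\tau] = \frac{\gamma R\,(\gamma R \tau)^{\gamma - 1}\,e^{-\gamma R \tau}}{\Gamma(\gamma)}$, $C_v = \frac{1}{\sqrt{\gamma}}$

Log-normal process:

$p[\tau] = \frac{1}{\sqrt{2\pi}\,\sigma\tau}\exp\left(-\frac{(\log\tau - \mu)^2}{2\sigma^2}\right)$, $R = \exp\left(-\mu - \frac{\sigma^2}{2}\right)$, $C_v = \sqrt{\exp(\sigma^2) - 1}$

Inverse Gaussian process:

$p[\tau] = \frac{1}{\sqrt{2\pi C_v^2 R \tau^3}}\exp\left(-\frac{(R\tau - 1)^2}{2 C_v^2 R \tau}\right)$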

ISI distributions of renewal processes

[van Vreeswijk, 2010]
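As an illustration of a renewal process, the sketch below draws independent gamma-distributed ISIs with mean 1/R and checks two properties: the coefficient of variation is close to one over the square root of the shape parameter, and the Fano factor of counts in long windows is close to the squared coefficient of variation. The rate, shape, duration and window length are hypothetical values.

```python
import math
import random
import statistics

def gamma_renewal_train(rate, shape, duration, rng):
    """Spike times on [0, duration) with i.i.d. gamma ISIs of mean 1/rate."""
    scale = 1.0 / (shape * rate)  # gamma scale so that the mean ISI is 1/rate
    spikes, t = [], rng.gammavariate(shape, scale)
    while t < duration:
        spikes.append(t)
        t += rng.gammavariate(shape, scale)
    return spikes

rng = random.Random(5)
rate, shape, duration, window = 20.0, 4.0, 2000.0, 2.0
spikes = gamma_renewal_train(rate, shape, duration, rng)

# Coefficient of variation of the ISIs, compared with 1 / sqrt(shape).
isis = [b - a for a, b in zip(spikes, spikes[1:])]
cv = statistics.pstdev(isis) / statistics.mean(isis)
cv_predicted = 1.0 / math.sqrt(shape)

# Fano factor of spike counts in consecutive windows much longer than the mean ISI.
counts = [0] * int(duration / window)
for t in spikes:
    counts[int(t // window)] += 1
fano = statistics.pvariance(counts) / statistics.mean(counts)
```

With shape 1 the gamma process reduces to the Poisson process; larger shapes give more regular trains.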

Gamma distribution fitting

spiking activity from a single mushroom body alpha-lobe extrinsic neuron of the honeybee in response to N=66 repeated stimulations with the same odor

[Meier et al., Neural Networks, 2008]

Renewal processes fitting

spike train from rat CA1 hippocampal pyramidal neurons recorded while the animal executed a behavioral task

Inhomogeneous Gamma

Inhomogeneous Poisson

Inhomogeneous inverse Gaussian

[Barbieri et al., 2001, J. Neurosci. Methods]

Spike train models with memory

Biophysical features which might be important

Bursting: a short ISI is more probable after a short ISI

Adaptation: a long ISI is more probable after a short ISI

Some examples:

Hidden Markov Processes:

The neuron can be in one of N states

States have different ISI distributions and different transition probabilities to the next state

Processes with memory for the last N ISIs: $p[\tau_n \mid \tau_{n-1}, \tau_{n-2}, \ldots, \tau_{n-N}]$

Processes with adaptation

Doubly stochastic processes
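One way to see memory in a spike train is through the correlation between successive ISIs. The sketch below is a toy two-state hidden Markov model, not a model from the slides: after each spike the hidden state is kept with a fixed probability, and the next ISI is exponential with that state's rate. Because the state tends to persist, short ISIs tend to follow short ISIs, as in bursting. Shuffling the same intervals removes that dependence. All names and parameter values are hypothetical.

```python
import random

def hidden_state_isis(rates, stay_prob, n, rng):
    """ISIs from a two-state hidden Markov model.

    After each spike the state is kept with probability stay_prob; the next
    ISI is exponential with the rate of the current state.
    """
    state, isis = 0, []
    for _ in range(n):
        if rng.random() > stay_prob:
            state = 1 - state
        isis.append(rng.expovariate(rates[state]))
    return isis

def serial_correlation(isis):
    """Pearson correlation between each ISI and the one that follows it."""
    x, y = isis[:-1], isis[1:]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(6)
isis = hidden_state_isis(rates=(100.0, 5.0), stay_prob=0.9, n=20000, rng=rng)
rho_memory = serial_correlation(isis)  # positive: short ISIs follow short ISIs

# Control: shuffling keeps the ISI distribution but destroys the ordering.
shuffled = isis[:]
rng.shuffle(shuffled)
rho_shuffled = serial_correlation(shuffled)  # close to zero, as for a renewal process
```

A renewal process has the same ISI distribution as its shuffled version, so a serial correlation clearly different from zero argues against a renewal description.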

Take Home messages

Fitting point processes is one class of parametric approaches to interpreting neural data

Point processes are categorized by their dependence on history (memory):

Poisson processes: without memory

Renewal processes: dependence on last event (spike here)

Can show refractory period effect

General point processes: dependence on more of the history

Can show bursting & adaptation

Parameters to consider

Fano Factor

Coefficient of variation

Interspike interval distribution

Spike train autocorrelation

Distribution of times between any two spikes

Detecting patterns in spike trains (like oscillations)

Autocorrelation and cross-correlation in cat’s primary visual cortex:

Cross-correlation:

• a peak at zero lag: synchronous

• a peak at a nonzero lag: phase locked

[Dayan and Abbott, 2001]
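A minimal sketch of a correlogram, assuming sorted spike times in seconds: it histograms the time differences between every spike in one train and every spike in another within a maximum lag. Passing the same train twice gives the autocorrelation histogram, which then includes each spike paired with itself at zero lag. The second train below is a hypothetical neuron that fires about 5.5 ms after the first, so the cross-correlogram peaks at a nonzero lag.

```python
import random

def correlogram(train_a, train_b, max_lag, bin_width):
    """Counts of time differences t_b - t_a in [-max_lag, max_lag); trains must be sorted."""
    n_bins = int(round(2 * max_lag / bin_width))
    counts = [0] * n_bins
    start = 0
    for ta in train_a:
        # Skip spikes in train_b that are too early to pair with ta.
        while start < len(train_b) and train_b[start] < ta - max_lag:
            start += 1
        j = start
        while j < len(train_b) and train_b[j] < ta + max_lag:
            k = int((train_b[j] - ta + max_lag) // bin_width)
            if 0 <= k < n_bins:
                counts[k] += 1
            j += 1
    return counts

rng = random.Random(7)
train_a, t = [], rng.expovariate(20.0)
while t < 100.0:
    train_a.append(t)
    t += rng.expovariate(20.0)
# Hypothetical second neuron: fires 5.5 ms after the first, with 0.5 ms jitter.
train_b = sorted(ta + rng.gauss(0.0055, 0.0005) for ta in train_a)

max_lag, bin_width = 0.02, 0.001
cross = correlogram(train_a, train_b, max_lag, bin_width)
peak_bin = cross.index(max(cross))
peak_lag = -max_lag + (peak_bin + 0.5) * bin_width  # centre of the peak bin (s)
auto = correlogram(train_a, train_a, max_lag, bin_width)
```

Regularly spaced side peaks in the autocorrelation histogram would indicate oscillatory firing.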

Neural Code

In one neuron:

Independent spike code: rate is enough (e.g. Poisson process)

Correlation code: information is also carried by correlations between spike times

(this adds no more than about 10% to the information carried by the rate code; Dayan and Abbott, 2001)

In population:

Individual neuron

Correlation between individual neurons adds more information

Synchrony

Rhythmic oscillations (e.g. place cells)

[Dayan and Abbott, 2001]