Bayesian Inference - Translational Neuromodeling Unit


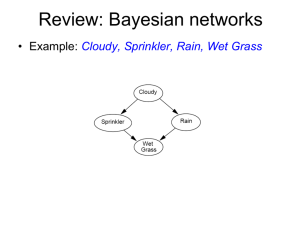

The Reverend Thomas Bayes (1702-1761)

Bayesian Inference
Sudhir Shankar Raman
Translational Neuromodeling Unit, UZH & ETH
With many thanks for materials to: Klaas Enno Stephan & Kay H. Brodersen

Why do I need to learn about Bayesian stats?
Because SPM is getting more and more Bayesian:
• Segmentation & spatial normalisation
• Posterior probability maps (PPMs)
• Dynamic Causal Modelling (DCM)
• Bayesian Model Selection (BMS)
• EEG: source reconstruction

Overview
• Bayesian Inference
• Model Selection
• Approximate Inference
• Variational Bayes
• MCMC Sampling

Classical and Bayesian statistics
p-value: probability of getting the observed data in the effect's absence. If small, reject the null hypothesis that there is no effect.
$$H_0: \theta = 0$$
$p(y \mid H_0)$: probability of observing the data $y$, given no effect ($\theta = 0$).
• One can never accept the null hypothesis
• Given enough data, one can always demonstrate a significant effect
• Correction for multiple comparisons necessary

Bayesian Inference
• Flexibility in modelling: $p(y, \theta)$
• Incorporating prior information: $p(\theta)$
• Posterior probability of effect: $p(\theta \mid y)$
• Options for model comparison
Statistical analysis and the illusion of objectivity. James O. Berger, Donald A. Berry

Bayes' Theorem
[Diagram: Prior Beliefs and Observed Data are combined into Posterior Beliefs]
Reverend Thomas Bayes, 1702-1761
"Bayes' Theorem describes, how an ideally rational person processes information." (Wikipedia)

Bayes' Theorem
Given data $y$ and parameters $\theta$, the conditional probabilities are:
$$p(\theta \mid y) = \frac{p(y, \theta)}{p(y)}, \qquad p(y \mid \theta) = \frac{p(y, \theta)}{p(\theta)}$$
Eliminating $p(y, \theta)$ gives Bayes' rule:
$$\underbrace{p(\theta \mid y)}_{\text{posterior}} = \frac{\overbrace{p(y \mid \theta)}^{\text{likelihood}} \; \overbrace{p(\theta)}^{\text{prior}}}{\underbrace{p(y)}_{\text{evidence}}}$$

Bayesian statistics
• new data: $p(y \mid \theta)$
• prior knowledge: $p(\theta)$
$$p(\theta \mid y) \propto p(y \mid \theta)\, p(\theta) \qquad \text{(posterior} \propto \text{likelihood} \cdot \text{prior)}$$
Bayes' theorem allows one to formally incorporate prior knowledge into computing statistical probabilities.
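As a minimal sketch of "posterior ∝ likelihood ∙ prior", Bayes' rule can be evaluated on a grid of parameter values. The coin-toss data, the flat prior and the helper name `grid_posterior` are assumptions made for illustration; they are not part of the original slides.

```python
import math


def grid_posterior(prior, likelihood):
    """Apply Bayes' rule on a grid: posterior = likelihood * prior / evidence."""
    unnormalised = [lik * pri for lik, pri in zip(likelihood, prior)]
    evidence = sum(unnormalised)  # discrete stand-in for p(y)
    posterior = [u / evidence for u in unnormalised]
    return posterior, evidence


# Hypothetical data: y = 7 heads in 10 tosses; theta is the coin's bias.
heads, tosses = 7, 10
theta_grid = [i / 100 for i in range(1, 100)]
flat_prior = [1.0 / len(theta_grid)] * len(theta_grid)
likelihood = [
    math.comb(tosses, heads) * t ** heads * (1 - t) ** (tosses - heads)
    for t in theta_grid
]

posterior, evidence = grid_posterior(flat_prior, likelihood)
map_theta = theta_grid[posterior.index(max(posterior))]
post_mean = sum(t * p for t, p in zip(theta_grid, posterior))
print(f"MAP = {map_theta:.2f}, posterior mean = {post_mean:.3f}")
```

With a flat prior the MAP estimate coincides with the maximum-likelihood estimate (7/10); swapping in an informative prior shifts the posterior towards the prior.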
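Priors can be of different sorts: empirical, principled or shrinkage priors, uninformative.
The "posterior" probability of the parameters given the data is an optimal combination of prior knowledge and new data, weighted by their relative precision.

Bayes in motion - an animation
[Animation]

Principles of Bayesian inference
• Formulation of a generative model: likelihood function $p(y \mid \theta)$ and prior distribution $p(\theta)$
• Observation of data $y$
• Model inversion: update of beliefs based upon observations, given a prior state of knowledge:
$$p(\theta \mid y) \propto p(y \mid \theta)\, p(\theta)$$
• Maximum a posteriori (MAP)
• Maximum likelihood (ML)

Conjugate Priors
• Prior and posterior have the same form:
$$p(\theta \mid y) = \frac{p(y \mid \theta)\, p(\theta)}{p(y)}$$
• Analytical expression.
• Conjugate priors for all exponential family members.
• Example: Gaussian likelihood, Gaussian prior over the mean.

Gaussian Model
Likelihood & prior ($y = \mu + \varepsilon$):
$$p(y \mid \mu) = \mathcal{N}(y \mid \mu, \lambda_e^{-1}), \qquad p(\mu) = \mathcal{N}(\mu \mid \mu_p, \lambda_p^{-1})$$
Posterior:
$$p(\mu \mid y) = \mathcal{N}(\mu \mid \mu_{\text{post}}, \lambda_{\text{post}}^{-1}), \qquad \lambda_{\text{post}} = \lambda_e + \lambda_p, \qquad \mu_{\text{post}} = \frac{\lambda_e}{\lambda_{\text{post}}}\, y + \frac{\lambda_p}{\lambda_{\text{post}}}\, \mu_p$$
Relative precision weighting.
[Figure: prior centred on $\mu_p$, likelihood centred on $y$, and the posterior between them]

Bayesian regression: univariate case
Univariate linear model: $y = x\theta + \varepsilon$
Normal densities:
$$p(\theta) = \mathcal{N}(\theta \mid \eta_p, \sigma_p^2), \qquad p(y \mid \theta) = \mathcal{N}(y \mid x\theta, \sigma_e^2)$$
$$p(\theta \mid y) = \mathcal{N}(\theta \mid \eta_{\theta \mid y}, \sigma_{\theta \mid y}^2)$$
$$\frac{1}{\sigma_{\theta \mid y}^2} = \frac{x^2}{\sigma_e^2} + \frac{1}{\sigma_p^2}, \qquad \eta_{\theta \mid y} = \sigma_{\theta \mid y}^2 \left( \frac{x}{\sigma_e^2}\, y + \frac{1}{\sigma_p^2}\, \eta_p \right)$$
Relative precision weighting.

Bayesian GLM: multivariate case
General Linear Model: $y = X\theta + e$
Normal densities:
$$p(\theta) = \mathcal{N}(\theta; \eta_p, C_p), \qquad p(y \mid \theta) = \mathcal{N}(y; X\theta, C_e)$$
$$p(\theta \mid y) = \mathcal{N}(\theta; \eta_{\theta \mid y}, C_{\theta \mid y})$$
$$C_{\theta \mid y}^{-1} = X^T C_e^{-1} X + C_p^{-1}, \qquad \eta_{\theta \mid y} = C_{\theta \mid y} \left( X^T C_e^{-1} y + C_p^{-1} \eta_p \right)$$
One step if $C_e$ is known. Otherwise define a conjugate prior or perform iterative estimation with EM.

An intuitive example
[Figure: prior, likelihood and posterior plotted over two parameters; both axes run from -10 to 10]

Bayesian model selection (BMS)
Given competing hypotheses on structure & functional mechanisms of a system, which model is the best?
Which model represents the best balance between model fit and model complexity?
For which model $m$ does $p(y \mid m)$ become maximal?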
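For the univariate linear model with Gaussian prior and known noise variance, this last question has a closed-form answer. The sketch below applies the precision-weighted update one observation at a time and accumulates $\ln p(y \mid m)$ from the one-step-ahead predictive densities. The data, the two candidate priors and the function names (`update`, `fit`) are assumptions chosen for illustration only.

```python
import math


def log_normal_pdf(y, mean, var):
    """ln N(y | mean, var)."""
    return -0.5 * math.log(2 * math.pi * var) - 0.5 * (y - mean) ** 2 / var


def update(eta, var, x, y, var_e):
    """Precision-weighted update of N(theta | eta, var) after observing y = x*theta + noise."""
    post_var = 1.0 / (x * x / var_e + 1.0 / var)
    post_eta = post_var * (x * y / var_e + eta / var)
    return post_eta, post_var


def fit(xs, ys, eta_p, var_p, var_e):
    """Return posterior mean, posterior variance and ln p(y | m) for one model m."""
    eta, var, log_evidence = eta_p, var_p, 0.0
    for x, y in zip(xs, ys):
        # Predictive density of the next observation given the data seen so far.
        log_evidence += log_normal_pdf(y, x * eta, x * x * var + var_e)
        eta, var = update(eta, var, x, y, var_e)
    return eta, var, log_evidence


# Hypothetical data with a slope close to 1 and known noise variance.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.1, 1.9, 3.2, 3.9]
var_e = 0.25

# m1: prior pinned near "no effect"; m2: broad prior on the effect.
_, _, log_ev_m1 = fit(xs, ys, eta_p=0.0, var_p=1e-4, var_e=var_e)
eta_m2, var_m2, log_ev_m2 = fit(xs, ys, eta_p=0.0, var_p=10.0, var_e=var_e)
log_bayes_factor = log_ev_m2 - log_ev_m1
print(f"posterior mean under m2: {eta_m2:.3f}, ln BF(m2 vs m1): {log_bayes_factor:.1f}")
```

Processing the data sequentially gives the same posterior as the batch formula, because each posterior serves as the prior for the next observation.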
Pitt & Myung (2002), TICS

Bayesian model selection (BMS)
Bayes' rule:
$$p(\theta \mid y, m) = \frac{p(y \mid \theta, m)\, p(\theta \mid m)}{p(y \mid m)}$$
Model evidence:
$$p(y \mid m) = \int p(y \mid \theta, m)\, p(\theta \mid m)\, d\theta$$
• accounts for both accuracy and complexity of the model
• allows for inference about structure (generalizability) of the model
Model comparison via Bayes factor:
$$\frac{p(m_1 \mid y)}{p(m_2 \mid y)} = \frac{p(y \mid m_1)}{p(y \mid m_2)} \, \frac{p(m_1)}{p(m_2)}, \qquad BF = \frac{p(y \mid m_1)}{p(y \mid m_2)}$$
Model averaging:
$$p(\theta \mid y) = \sum_m p(\theta \mid y, m)\, p(m \mid y)$$
Kass and Raftery (1995), Penny et al. (2004) NeuroImage

| BF10 | Evidence against H0 |
|---|---|
| 1 to 3 | Not worth more than a bare mention |
| 3 to 20 | Positive |
| 20 to 150 | Strong |
| > 150 | Decisive |

Bayesian model selection (BMS)
Various approximations (with $M$ the number of model parameters and $N$ the number of data points):
• Akaike Information Criterion (AIC), Akaike, 1974:
$$\ln p(D \mid \theta_{ML}) - M$$
• Bayesian Information Criterion (BIC), Schwarz, 1978:
$$\ln p(D) \cong \ln p(D \mid \theta_{MAP}) - \frac{1}{2} M \ln(N)$$
• Negative free energy ($F$): a by-product of Variational Bayes

Approximate Bayesian inference
Bayesian inference formalizes model inversion, the process of passing from a prior to a posterior in light of data.
$$\underbrace{p(\theta \mid y)}_{\text{posterior}} = \frac{\overbrace{p(y \mid \theta)}^{\text{likelihood}} \; \overbrace{p(\theta)}^{\text{prior}}}{\underbrace{\int p(y, \theta)\, d\theta}_{\text{marginal likelihood } p(y) \text{ (model evidence)}}}$$
In practice, evaluating the posterior is usually difficult because we cannot easily evaluate $p(y)$, especially when:
• the model is high-dimensional or has a complex form
• analytical solutions are not available
• numerical integration is too expensive
Source: Kay H. Brodersen, 2013, http://people.inf.ethz.ch/bkay/talks/Brodersen_2013_03_22.pdf

Approximate Bayesian inference
There are two approaches to approximate inference. They have complementary strengths and weaknesses.
Deterministic approximate inference, in particular variational Bayes:
• find an analytical proxy $q(\theta)$ that is maximally similar to $p(\theta \mid y)$
• inspect distribution statistics of $q(\theta)$ (e.g., mean, quantiles, intervals, …)
• often insightful and fast
• often hard work to derive
• converges to local minima

Stochastic approximate inference, in particular sampling:
• design an algorithm that draws samples $\theta^{(1)}, \ldots, \theta^{(m)}$ from $p(\theta \mid y)$
• inspect sample statistics (e.g., histogram, sample quantiles, …)
• asymptotically exact
• computationally expensive
• tricky engineering concerns

The Laplace approximation
The Laplace approximation provides a way of approximating a density whose normalization constant we cannot evaluate, by fitting a Normal distribution to its mode.
$$p(z) = \frac{1}{Z} \times f(z)$$
Here $Z$ is the normalization constant (unknown) and $f(z)$ is the main part of the density (easy to evaluate).
Pierre-Simon Laplace (1749-1827), French mathematician and astronomer
This is exactly the situation we face in Bayesian inference:
$$p(\theta \mid y) = \frac{1}{p(y)} \times p(y, \theta)$$
Here $p(y)$ is the model evidence (unknown) and $p(y, \theta)$ is the joint density (easy to evaluate).

Applying the Laplace approximation
Given a model with parameters $\theta = (\theta_1, \ldots, \theta_p)$, the Laplace approximation reduces to a simple three-step procedure:
1. Find the mode of the log-joint: $\theta^* = \arg\max_\theta \ln p(y, \theta)$
2. Evaluate the curvature of the log-joint at the mode: $\nabla\nabla \ln p(y, \theta^*)$
3. We obtain a Gaussian approximation: $\mathcal{N}(\theta \mid \mu, \Lambda^{-1})$ with $\mu = \theta^*$ and $\Lambda = -\nabla\nabla \ln p(y, \theta^*)$

Limitations of the Laplace approximation
The Laplace approximation is often too strong a simplification.
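A minimal one-dimensional sketch of the three-step procedure, applied to a skewed target so that the loss of information becomes visible. The target density (an unnormalised Gamma(3, 1)), the finite-difference derivatives and the function name `laplace_1d` are assumptions made for illustration, not part of the original material.

```python
import math


def laplace_1d(log_f, z0, h=1e-4, max_steps=50):
    """Fit N(z | mu, 1/precision) to a 1-D density f(z)/Z known only through ln f."""
    def grad(z):
        return (log_f(z + h) - log_f(z - h)) / (2 * h)

    def hess(z):
        return (log_f(z + h) - 2 * log_f(z) + log_f(z - h)) / (h * h)

    z = z0
    for _ in range(max_steps):       # step 1: Newton iterations to find the mode
        step = grad(z) / hess(z)
        z -= step
        if abs(step) < 1e-9:
            break
    precision = -hess(z)             # step 2: curvature at the mode
    return z, precision              # step 3: mu = mode, Lambda = -curvature


# Hypothetical skewed target: f(z) = z^2 exp(-z), an unnormalised Gamma(3, 1) density.
def log_f(z):
    return 2.0 * math.log(z) - z


mu, precision = laplace_1d(log_f, z0=1.0)
true_mean, true_var = 3.0, 3.0       # known moments of Gamma(a=3, b=1)
print(f"Laplace: mean {mu:.3f}, variance {1 / precision:.3f}")
print(f"Exact:   mean {true_mean:.3f}, variance {true_var:.3f}")
```

The fitted Gaussian is centred on the mode (2) with variance 2, whereas the target has mean 3 and variance 3: the approximation only sees the local shape around the mode.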
The Laplace approximation:
• ignores global properties of the posterior
• becomes brittle when the posterior is multimodal
• is only directly applicable to real-valued parameters
[Figure: two panels titled "The Laplace approximation", each plotting the log joint and its approximation]

Variational Bayesian inference
Variational Bayesian (VB) inference generalizes the idea behind the Laplace approximation. In VB, we wish to find an approximate density that is maximally similar to the true posterior.
[Diagram: within a hypothesis class of densities, the best proxy $q(\theta)$ is the member with the smallest divergence $\mathrm{KL}[q \| p]$ from the true posterior $p(\theta \mid y)$]

Variational calculus
Variational Bayesian inference is based on variational calculus.

Standard calculus (Newton, Leibniz, and others):
• functions $f: x \mapsto f(x)$
• derivatives $\frac{df}{dx}$
• Example: maximize the likelihood expression $p(y \mid \theta)$ w.r.t. $\theta$

Variational calculus (Euler, Lagrange, and others):
• functionals $F: f \mapsto F(f)$
• derivatives $\frac{dF}{df}$
• Example: maximize the entropy $H[p]$ w.r.t. a probability distribution $p(x)$

Leonhard Euler (1707-1783), Swiss mathematician, 'Elementa Calculi Variationum'

Variational calculus and the free energy
Variational calculus lends itself nicely to approximate Bayesian inference.
$$\begin{aligned}
\ln p(y) &= \ln \frac{p(y, \theta)}{p(\theta \mid y)} \\
&= \int q(\theta) \ln \frac{p(y, \theta)}{p(\theta \mid y)}\, d\theta \\
&= \int q(\theta) \ln \left( \frac{p(y, \theta)}{p(\theta \mid y)} \, \frac{q(\theta)}{q(\theta)} \right) d\theta \\
&= \int q(\theta) \left( \ln \frac{q(\theta)}{p(\theta \mid y)} + \ln \frac{p(y, \theta)}{q(\theta)} \right) d\theta \\
&= \underbrace{\int q(\theta) \ln \frac{q(\theta)}{p(\theta \mid y)}\, d\theta}_{\mathrm{KL}[q \| p]} + \underbrace{\int q(\theta) \ln \frac{p(y, \theta)}{q(\theta)}\, d\theta}_{F(q, y)}
\end{aligned}$$
$\mathrm{KL}[q \| p]$ is the divergence between $q(\theta)$ and $p(\theta \mid y)$; $F(q, y)$ is the free energy.

Variational calculus and the free energy
In summary, the log model evidence can be expressed as:
$$\ln p(y) = \mathrm{KL}[q \| p] + F(q, y)$$
• $\mathrm{KL}[q \| p]$: divergence, $\geq 0$ (unknown)
• $F(q, y)$: free energy (easy to evaluate for a given $q$)
Maximizing $F(q, y)$ is equivalent to:
• minimizing $\mathrm{KL}[q \| p]$
• tightening $F(q, y)$ as a lower bound to the log model evidence
[Figure: $\ln p(y)$ split into $\mathrm{KL}[q \| p]$ and $F(q, y)$, shown from initialization to convergence]

Computing the free energy
We can decompose the free energy $F(q, y)$ as follows:
$$\begin{aligned}
F(q, y) &= \int q(\theta) \ln \frac{p(y, \theta)}{q(\theta)}\, d\theta \\
&= \int q(\theta) \ln p(y, \theta)\, d\theta - \int q(\theta) \ln q(\theta)\, d\theta \\
&= \underbrace{\langle \ln p(y, \theta) \rangle_q}_{\text{expected log-joint}} + \underbrace{H[q]}_{\text{Shannon entropy}}
\end{aligned}$$

The mean-field assumption
When inverting models with several parameters, a common way of restricting the class of approximate posteriors $q(\theta)$ is to consider those posteriors that factorize into independent partitions,
$$q(\theta) = \prod_i q_i(\theta_i),$$
where $q_i(\theta_i)$ is the approximate posterior for the $i$-th subset of parameters.
[Figure: factorized marginals $q(\theta_1)$ and $q(\theta_2)$ over the $(\theta_1, \theta_2)$ plane. Jean Daunizeau, www.fil.ion.ucl.ac.uk/~jdaunize/presentations/Bayes2.pdf]

Variational algorithm under the mean-field assumption
In summary:
$$F(q, y) = -\mathrm{KL}\left[ q_j \,\|\, \exp\left( \langle \ln p(y, \theta) \rangle_{q_{\setminus j}} \right) \right] + c$$
Suppose the densities $q_{\setminus j} \equiv q(\theta_{\setminus j})$ are kept fixed. Then the approximate posterior $q(\theta_j)$ that maximizes $F(q, y)$ is given by:
$$q_j^* = \arg\max_{q_j} F(q, y) = \frac{1}{Z} \exp\left( \langle \ln p(y, \theta) \rangle_{q_{\setminus j}} \right)$$
Therefore:
$$\ln q_j^* = \underbrace{\langle \ln p(y, \theta) \rangle_{q_{\setminus j}}}_{=:\, I(\theta_j)} - \ln Z$$
This implies a straightforward algorithm for variational inference:
1. Initialize all approximate posteriors $q(\theta_i)$, e.g., by setting them to their priors.
2. Cycle over the parameters, revising each given the current estimates of the others.
3. Loop until convergence.

Typical strategies in variational inference

| | no parametric assumptions | parametric assumptions $q(\theta) = F(\theta \mid \delta)$ |
|---|---|---|
| no mean-field assumption | (variational inference = exact inference) | fixed-form optimization of moments |
| mean-field assumption $q(\theta) = \prod_i q(\theta_i)$ | iterative free-form variational optimization | iterative fixed-form variational optimization |

Example: variational density estimation
We are given a univariate dataset $y_1, \ldots, y_n$ which we model by a simple univariate Gaussian distribution. We wish to infer on its mean and precision: $p(\mu, \tau \mid y)$.
Model:
• mean $\mu$: $p(\mu \mid \tau) = \mathcal{N}(\mu \mid \mu_0, (\lambda_0 \tau)^{-1})$
• precision $\tau$: $p(\tau) = \mathrm{Ga}(\tau \mid a_0, b_0)$
• data $y_i$, $i = 1 \ldots n$: $p(y_i \mid \mu, \tau) = \mathcal{N}(y_i \mid \mu, \tau^{-1})$
Although in this case a closed-form solution exists, we shall pretend it does not. Instead, we consider approximations that satisfy the mean-field assumption:
$$q(\mu, \tau) = q_\mu(\mu)\, q_\tau(\tau)$$
Bishop (2006) PRML, Section 10.1.3

Recurring expressions in Bayesian inference
Univariate normal distribution:
$$\ln \mathcal{N}(x \mid \mu, \lambda^{-1}) = \frac{1}{2} \ln \lambda - \frac{1}{2} \ln 2\pi - \frac{\lambda}{2}(x - \mu)^2 = -\frac{1}{2} \lambda x^2 + \lambda \mu x + c$$
Multivariate normal distribution:
$$\ln \mathcal{N}_d(x \mid \mu, \Lambda^{-1}) = -\frac{1}{2} \ln \left| \Lambda^{-1} \right| - \frac{d}{2} \ln 2\pi - \frac{1}{2}(x - \mu)^T \Lambda (x - \mu) = -\frac{1}{2} x^T \Lambda x + x^T \Lambda \mu + c$$
Gamma distribution:
$$\ln \mathrm{Ga}(x \mid a, b) = a \ln b - \ln \Gamma(a) + (a - 1) \ln x - b x = (a - 1) \ln x - b x + c$$

Variational density estimation: mean $\mu$
$$\begin{aligned}
\ln q^*(\mu) &= \langle \ln p(y, \mu, \tau) \rangle_{q(\tau)} + c \\
&= \Big\langle \ln \prod_{i=1}^{n} p(y_i \mid \mu, \tau) \Big\rangle_{q(\tau)} + \langle \ln p(\mu \mid \tau) \rangle_{q(\tau)} + \langle \ln p(\tau) \rangle_{q(\tau)} + c \\
&= \Big\langle \ln \prod_{i=1}^{n} \mathcal{N}(y_i \mid \mu, \tau^{-1}) \Big\rangle_{q(\tau)} + \big\langle \ln \mathcal{N}(\mu \mid \mu_0, (\lambda_0 \tau)^{-1}) \big\rangle_{q(\tau)} + \langle \ln \mathrm{Ga}(\tau \mid a_0, b_0) \rangle_{q(\tau)} + c \\
&= \sum_{i=1}^{n} \Big\langle -\frac{\tau}{2}(y_i - \mu)^2 \Big\rangle_{q(\tau)} + \Big\langle -\frac{\lambda_0 \tau}{2}(\mu - \mu_0)^2 \Big\rangle_{q(\tau)} + c \\
&= -\frac{\langle \tau \rangle_{q(\tau)}}{2} \sum_{i} y_i^2 + \langle \tau \rangle_{q(\tau)}\, n \bar{y} \mu - n \frac{\langle \tau \rangle_{q(\tau)}}{2} \mu^2 - \frac{\lambda_0 \langle \tau \rangle_{q(\tau)}}{2} \mu^2 + \lambda_0 \mu \mu_0 \langle \tau \rangle_{q(\tau)} - \frac{\lambda_0 \langle \tau \rangle_{q(\tau)}}{2} \mu_0^2 + c \\
&= -\frac{1}{2} \big( n \langle \tau \rangle_{q(\tau)} + \lambda_0 \langle \tau \rangle_{q(\tau)} \big) \mu^2 + \big( n \bar{y} \langle \tau \rangle_{q(\tau)} + \lambda_0 \mu_0 \langle \tau \rangle_{q(\tau)} \big) \mu + c
\end{aligned}$$
Reinstatement by inspection (matching coefficients with the univariate normal form):
$$\Longrightarrow \; q^*(\mu) = \mathcal{N}(\mu \mid \mu_n, \lambda_n^{-1})$$
with
$$\lambda_n = (\lambda_0 + n) \langle \tau \rangle_{q(\tau)}, \qquad \mu_n = \frac{n \bar{y} \langle \tau \rangle_{q(\tau)} + \lambda_0 \mu_0 \langle \tau \rangle_{q(\tau)}}{\lambda_n} = \frac{\lambda_0 \mu_0 + n \bar{y}}{\lambda_0 + n}$$

Variational density estimation: precision $\tau$
$$\begin{aligned}
\ln q^*(\tau) &= \langle \ln p(y, \mu, \tau) \rangle_{q(\mu)} + c \\
&= \Big\langle \ln \prod_{i=1}^{n} \mathcal{N}(y_i \mid \mu, \tau^{-1}) \Big\rangle_{q(\mu)} + \big\langle \ln \mathcal{N}(\mu \mid \mu_0, (\lambda_0 \tau)^{-1}) \big\rangle_{q(\mu)} + \langle \ln \mathrm{Ga}(\tau \mid a_0, b_0) \rangle_{q(\mu)} + c \\
&= \sum_{i=1}^{n} \Big\langle \frac{1}{2} \ln \tau - \frac{\tau}{2}(y_i - \mu)^2 \Big\rangle_{q(\mu)} + \Big\langle \frac{1}{2} \ln(\lambda_0 \tau) - \frac{\lambda_0 \tau}{2}(\mu - \mu_0)^2 \Big\rangle_{q(\mu)} + \big\langle (a_0 - 1) \ln \tau - b_0 \tau \big\rangle_{q(\mu)} + c \\
&= \frac{n}{2} \ln \tau - \frac{\tau}{2} \Big\langle \sum_i (y_i - \mu)^2 \Big\rangle_{q(\mu)} + \frac{1}{2} \ln \lambda_0 + \frac{1}{2} \ln \tau - \frac{\lambda_0 \tau}{2} \big\langle (\mu - \mu_0)^2 \big\rangle_{q(\mu)} + (a_0 - 1) \ln \tau - b_0 \tau + c \\
&= \Big( \frac{n}{2} + \frac{1}{2} + (a_0 - 1) \Big) \ln \tau - \Big( \frac{1}{2} \Big\langle \sum_i (y_i - \mu)^2 \Big\rangle_{q(\mu)} + \frac{\lambda_0}{2} \big\langle (\mu - \mu_0)^2 \big\rangle_{q(\mu)} + b_0 \Big) \tau + c
\end{aligned}$$
$$\Longrightarrow \; q^*(\tau) = \mathrm{Ga}(\tau \mid a_n, b_n)$$
with
$$a_n = a_0 + \frac{n + 1}{2}, \qquad b_n = b_0 + \frac{\lambda_0}{2} \big\langle (\mu - \mu_0)^2 \big\rangle_{q(\mu)} + \frac{1}{2} \Big\langle \sum_i (y_i - \mu)^2 \Big\rangle_{q(\mu)}$$

Variational density estimation: illustration
[Figure: the true posterior $p(\theta \mid y)$, the current approximation $q(\theta)$ and the converged approximation $q^*(\theta)$. Bishop (2006) PRML, p. 472]

Markov Chain Monte Carlo (MCMC) sampling
[Figure: a chain of samples $\theta^0, \theta^1, \theta^2, \theta^3, \ldots$ generated with a proposal distribution $q(\theta)$ and targeting the posterior distribution $p(\theta \mid y)$]
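The chain of samples pictured above can be sketched with a random-walk Metropolis sampler, the symmetric-proposal special case of the Metropolis-Hastings algorithm described later in this section. The data, the Gaussian prior, the step size, the burn-in length and the thinning interval are all assumptions chosen for illustration. The conjugate Gaussian model is used only so that the sample mean can be checked against the exact posterior mean.

```python
import math
import random


def log_posterior(mu, ys, var_e, mu_p, var_p):
    """Unnormalised ln p(mu | y) = ln p(y | mu) + ln p(mu) + const; p(y) is never needed."""
    log_lik = sum(-0.5 * (y - mu) ** 2 / var_e for y in ys)
    log_prior = -0.5 * (mu - mu_p) ** 2 / var_p
    return log_lik + log_prior


def metropolis(log_target, theta0, n_steps, step_sd, rng):
    """Random-walk Metropolis with a symmetric Gaussian proposal."""
    chain = [theta0]
    current, current_lp = theta0, log_target(theta0)
    for _ in range(n_steps):
        proposal = current + rng.gauss(0.0, step_sd)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, p(proposal | y) / p(current | y)).
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            current, current_lp = proposal, proposal_lp
        chain.append(current)
    return chain


# Hypothetical data, known noise variance, broad Gaussian prior on the mean.
ys = [2.1, 1.7, 2.5, 1.9, 2.3]
var_e, mu_p, var_p = 1.0, 0.0, 100.0

rng = random.Random(0)  # fixed seed so the run is reproducible
chain = metropolis(lambda m: log_posterior(m, ys, var_e, mu_p, var_p),
                   theta0=0.0, n_steps=20000, step_sd=1.0, rng=rng)
samples = chain[2000::5]  # discard burn-in, then keep every 5th sample
sample_mean = sum(samples) / len(samples)

# Conjugate result for comparison: precision-weighted posterior mean.
post_prec = len(ys) / var_e + 1.0 / var_p
exact_mean = (sum(ys) / var_e + mu_p / var_p) / post_prec
print(f"MCMC mean {sample_mean:.3f} vs exact posterior mean {exact_mean:.3f}")
```

Only ratios of the posterior enter the acceptance step, which is why the unknown evidence $p(y)$ can be ignored.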
• A general framework for sampling from a large class of distributions
• Scales well with dimensionality of sample space
• Asymptotically convergent

Markov chain properties
• Transition probabilities (homogeneous/invariant):
$$p(\theta^{t+1} \mid \theta^1, \ldots, \theta^t) = p(\theta^{t+1} \mid \theta^t) = T_t(\theta^t, \theta^{t+1})$$
• Invariance:
$$p^*(\theta) = \sum_{\theta'} T(\theta', \theta)\, p^*(\theta')$$
• Ergodicity:
$$p^*(\theta) = \lim_{m \to \infty} p(\theta^m) \quad \forall\, p(\theta^0)$$
[Diagram relating the chain properties: homogeneous, invariant, reversible, ergodic]

MCMC Metropolis-Hastings Algorithm
• Initialize $\theta$ at step 1, for example by sampling from the prior
• At step $t$, sample from the proposal distribution: $\theta^* \sim q(\theta^* \mid \theta^t)$
• Accept with probability:
$$A(\theta^*, \theta^t) = \min\left(1, \frac{p(\theta^* \mid y)\, q(\theta^t \mid \theta^*)}{p(\theta^t \mid y)\, q(\theta^* \mid \theta^t)}\right)$$
• Metropolis: with a symmetric proposal distribution the proposal terms cancel:
$$A(\theta^*, \theta^t) = \min\left(1, \frac{p(\theta^* \mid y)}{p(\theta^t \mid y)}\right)$$
Bishop (2006) PRML, p. 539

Gibbs Sampling Algorithm
$$p(\boldsymbol{\theta}) = p(\theta_1, \theta_2, \ldots, \theta_n)$$
• Special case of Metropolis-Hastings
• At step $t$, sample from the conditional distributions:
$$\begin{aligned}
\theta_1^{t+1} &\sim p(\theta_1 \mid \theta_2^t, \ldots, \theta_n^t) \\
\theta_2^{t+1} &\sim p(\theta_2 \mid \theta_1^{t+1}, \theta_3^t, \ldots, \theta_n^t) \\
&\;\;\vdots \\
\theta_n^{t+1} &\sim p(\theta_n \mid \theta_1^{t+1}, \ldots, \theta_{n-1}^{t+1})
\end{aligned}$$
• Acceptance probability = 1
• Blocked sampling:
$$\theta_1^{t+1}, \theta_2^{t+1} \sim p(\theta_1, \theta_2 \mid \theta_3^t, \ldots, \theta_n^t)$$

Posterior analysis from MCMC
[Diagram: chain of samples $\theta^0, \theta^1, \theta^2, \theta^3, \ldots, \theta^N$]
Obtain independent samples:
• Generate samples based on MCMC sampling.
• Discard initial "burn-in" period samples to remove dependence on initialization.
• Thinning: select every $m$-th sample to reduce correlation.
• Inspect sample statistics (e.g., histogram, sample quantiles, …)

Summary
• Bayesian Inference
• Model Selection
• Approximate Inference
• Variational Bayes
• MCMC Sampling

References
• Pattern Recognition and Machine Learning (2006). Christopher M. Bishop.
• Bayesian Reasoning and Machine Learning. David Barber.
• Information Theory, Inference, and Learning Algorithms. David MacKay.
• Conjugate Bayesian analysis of the Gaussian distribution. Kevin P. Murphy.
• Videolectures.net: Bayesian inference, MCMC, Variational Bayes.
• Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control 19, 716-723.
• Schwarz, G. (1978). Estimating the dimension of a model. Annals of Statistics 6, 461-464.
• Kass, R.E. and Raftery, A.E. (1995). Bayes factors. Journal of the American Statistical Association 90, 773-795.
• Markov Chain Monte Carlo: Stochastic Simulation for Bayesian Inference, Second Edition (Chapman & Hall/CRC Texts in Statistical Science). Dani Gamerman, Hedibert F. Lopes.
• Statistical Parametric Mapping: The Analysis of Functional Brain Images.

Thank You
http://www.translationalneuromodeling.org/tapas/