Econometrics

Session 3 – Linear Regression

Amine Ouazad,

Asst. Prof. of Economics

Outline of the course

1. Introduction: Identification

2. Introduction: Inference

3. Linear Regression

4. Identification Issues in Linear Regressions

5. Inference Issues in Linear Regressions

This session

Introduction: Linear Regression

• What is the effect of X on Y?

• Hands-on problems:

– What is the effect of the death of the CEO (X) on firm performance (Y)? (Morten Bennedsen)

– What is the effect of child safety seats (X) on the probability of death (Y)? (Steve Levitt)

This session:

Linear Regression

1. Notations.

2. Assumptions.

3. The OLS estimator.

– Implementation in STATA.

4. The OLS estimator is CAN.

Consistent and Asymptotically Normal

5. The OLS estimator is BLUE.*

Best Linear Unbiased Estimator (BLUE)*

6. Essential statistics: t-stat, R squared, Adjusted R Squared, F stat, Confidence intervals.

7. Tricky questions.

*Conditions apply

1. NOTATIONS

Notations

• The effect of X on Y.

• What is X?

– K covariates (including the constant)

– N observations

– X is an NxK matrix.

• What is Y?

– N observations.

– Y is an N-vector.

Notations

• Relationship between y and the xs.

$y = f(x_1, x_2, x_3, x_4, \dots, x_K) + \varepsilon$

• f: a function of K variables.

• $\varepsilon$: the unobservables (a scalar).

2. ASSUMPTIONS

Assumptions

• A1: Linearity

• A2: Full Rank

• A3: Exogeneity of the covariates

• A4: Homoskedasticity and nonautocorrelation

• A5: Exogenously generated covariates.

• A6: Normality of the residuals

Assumption A1: Linearity

• $y = f(x_1, x_2, x_3, \dots, x_K) + \varepsilon$

• $y = x_1\beta_1 + x_2\beta_2 + \dots + x_K\beta_K + \varepsilon$

• In ‘plain English’:

– The effect of $x_k$ on y is constant: it does not depend on the level of $x_k$.

– The effect of $x_k$ does not depend on the value of any other covariate $x_{k'}$.

• Not satisfied if:

– Squares/higher powers of x matter.

– Interaction terms matter.

Notations

1. Data generating process

2. Scalar notation

3. Matrix version #1

4. Matrix version #2
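The equations for these four notations did not survive on this slide. A standard reconstruction, offered as a sketch that is consistent with the definitions above (X is N×K, Y is an N-vector), is given below. The row vector $x_i'$ for observation i and the index i itself are notation introduced here, not taken from the slide.

```latex
% 1. Data generating process
y = f(x_1, x_2, \dots, x_K) + \varepsilon

% 2. Scalar notation, for observations i = 1, \dots, N
y_i = x_{i1}\beta_1 + x_{i2}\beta_2 + \dots + x_{iK}\beta_K + \varepsilon_i

% 3. Matrix version #1: one observation, x_i' is the i-th row of X
y_i = x_i'\beta + \varepsilon_i

% 4. Matrix version #2: all N observations stacked
y = X\beta + \varepsilon
```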

Assumption A2: Full Rank

• We assume that X’X is invertible.

• Notes:

– A2 may be satisfied in the data generating process but fail in the observed sample.

• Examples:

– Month of the year dummies/Year dummies, Country dummies, Gender dummies: including a full set of dummies together with the constant violates A2.
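To illustrate how a full set of dummies breaks A2, here is a minimal Python sketch on a made-up six-person sample (the data and the helper names `gram` and `det3` are hypothetical). It checks whether X'X is invertible by computing its determinant, once with a constant plus both gender dummies and once with a constant, one dummy, and age.

```python
def gram(X):
    """Return X'X for a matrix stored as a list of rows."""
    k = len(X[0])
    return [[sum(row[a] * row[b] for row in X) for b in range(k)]
            for a in range(k)]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


# Hypothetical sample: a female dummy and age for six individuals.
female = [1, 0, 1, 1, 0, 0]
age = [23, 35, 41, 29, 52, 47]

# Constant + female dummy + male dummy: constant = female + male, so A2 fails.
X_trap = [[1, f, 1 - f] for f in female]
# Constant + female dummy + age: no exact linear dependence among columns.
X_ok = [[1, f, a] for f, a in zip(female, age)]

det_trap = det3(gram(X_trap))  # zero determinant: X'X is not invertible
det_ok = det3(gram(X_ok))      # nonzero determinant: X'X is invertible
print(det_trap, det_ok)
```

Dropping one dummy from each full set (or dropping the constant) restores invertibility, which is what regression software does when it omits a collinear variable.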

Assumption A3: Exogeneity

• i.e. mean independence of the residual and the covariates.

• $E(\varepsilon \mid x_1, \dots, x_K) = 0$.

• This is a property of the data generating process.

• Link with selection bias in Session 1?

Dealing with Endogeneity

• You are assuming that no omitted variable is both correlated with the Xs and has an effect on Y.

– If it is only correlated with X with no effect on Y, it’s OK.

– If it is not correlated with X and has an effect on Y, it’s OK.

• Example of a problem:

– Health and Hospital stays.

– What covariate should you add?

• Conclusion: Be creative !! Think about unobservables !!

Assumption A4: Homoskedasticity and Non Autocorrelation

• $\mathrm{Var}(\varepsilon \mid x_1, \dots, x_K) = \sigma^2$.

• $\mathrm{Corr}(\varepsilon_i, \varepsilon_j \mid X) = 0$ for $i \neq j$.

• Visible on a scatterplot?

• Link with t-tests of session 2?

• Examples: correlated/random effects.

Assumption A5

Exogenously generated covariates

1. Instead of requiring the mean independence of the residual and the covariates, we might require their independence.

– (Recall: X and $\varepsilon$ are independent if $f(X, \varepsilon) = f(X) f(\varepsilon)$.)

2. Sometimes we will think of X as fixed rather than exogenously generated.

Assumption A6:

Normality of the Residuals

• The asymptotic properties of OLS (to be discussed below) do not depend on the normality of the residuals: semi-parametric approach.

• But for results with a fixed number of observations, we need the normality of the residuals for the OLS to have nice properties (to be defined below).

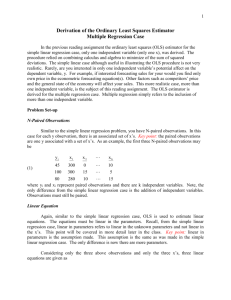

3. THE ORDINARY LEAST SQUARES ESTIMATOR

The OLS Estimator

• Formula: $\hat\beta = (X'X)^{-1} X'y$

• Two interpretations:

– Minimization of the sum of squared residuals (Gauss’s interpretation).

– The coefficient vector that makes the residuals uncorrelated with the observed X in the sample (the sample counterpart of A3).

OLS estimator

• Exercise: Find the OLS estimator in the case where both y and x are scalars (i.e. not vectors). Learn the formula by heart (if correct !).
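One way to check an answer to this exercise numerically is the Python sketch below. It assumes the scalar model includes a constant, so the slope is the sample covariance of x and y divided by the sample variance of x. The data and the function name `ols_scalar` are made up for illustration.

```python
from statistics import mean


def ols_scalar(x, y):
    """OLS for y = a + b*x + e with scalar x: returns (intercept, slope)."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx                  # sample cov(x, y) / sample var(x)
    intercept = y_bar - slope * x_bar  # the fitted line passes through the means
    return intercept, slope


# Hypothetical data, roughly y = 2x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = ols_scalar(x, y)
residuals = [yi - intercept - slope * xi for xi, yi in zip(x, y)]

# Second interpretation of OLS: residuals sum to zero and are orthogonal to x.
sum_resid = sum(residuals)
orthogonality = sum(xi * ei for xi, ei in zip(x, residuals))
print(intercept, slope, sum_resid, orthogonality)
```

The last two quantities are zero up to floating-point error, which is the sample version of the mean-independence condition in A3.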

Implementation in Stata

• STATA regress command.

– regress y x1 x2 x3 x4 x5 …

• What does Stata do?

– Drops variables that are perfectly collinear with the other covariates (to make sure A2 is satisfied). Always check the number of observations!

• Options will be seen in the following sessions.

• Dummies (e.g. for years) can be included using « xi: i.year ». Again A2 must be satisfied.

First things first: Desc. Stats

• Each variable used in the analysis:

Mean, standard deviation for the sample and the subsamples.

• Other possible outputs: min max, median (only if you care).

• Source of the dataset.

• Why??

• Show the reader the variables are “well behaved”: no outlier driving the regression, consistent with intuition.

• Number of observations should be constant across regressions (next slide).

Reading a table … from the Levitt paper (2006 wp)

Other important advice

1. As a best practice always start by regressing y on x with no controls except the most essential ones.

• No effect? Then maybe you should think twice about going further.

2. Then add controls one by one, or group by group.

• Explain why coefficient of interest changes from one column to the next. (See next session)

Stata tricks

• Output the estimation results using estout or outreg.

– Display stars for coefficients’ significance.

– Outputs the essential statistics (F, R2, t test).

– Stacks the columns of regression output for regressions with different sets of covariates.

• Formats: LaTeX and text (Microsoft Word).

4. LARGE SAMPLE PROPERTIES OF THE OLS ESTIMATOR

The OLS estimator is CAN

• CAN :

– Consistent

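– Asymptotically Normal: $\sqrt{N}(\hat\beta - \beta) \xrightarrow{d} N(0, V)$

• Proof:

1. Use the ‘true’ relationship between y and X to show that $\hat\beta = \beta + \left(\frac{1}{N} X'X\right)^{-1} \left(\frac{1}{N} X'\varepsilon\right)$.

2. Use the Slutsky theorem and A3 to show consistency.

3. Use the CLT and A3 to show asymptotic normality.

4. Under A4, $V = \sigma^2 \left(\mathrm{plim}\, \frac{1}{N} X'X\right)^{-1}$.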
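The steps of the proof can be spelled out as follows. This is a sketch under the additional assumption of i.i.d. sampling; the symbol Q for the probability limit of X'X/N is introduced here and is not in the slides.

```latex
% Step 1: substitute y = X\beta + \varepsilon into the OLS formula
\hat\beta = (X'X)^{-1} X'y
          = (X'X)^{-1} X'(X\beta + \varepsilon)
          = \beta + \left(\tfrac{1}{N} X'X\right)^{-1} \left(\tfrac{1}{N} X'\varepsilon\right)

% Step 2: law of large numbers, A2, A3 and Slutsky
\tfrac{1}{N} X'X \xrightarrow{p} Q \ \text{(invertible)}, \qquad
\tfrac{1}{N} X'\varepsilon \xrightarrow{p} E(x_i \varepsilon_i) = 0
\quad\Rightarrow\quad \hat\beta \xrightarrow{p} \beta

% Step 3: central limit theorem, with A4 for the variance
\tfrac{1}{\sqrt{N}} X'\varepsilon \xrightarrow{d} N(0, \sigma^2 Q)
\quad\Rightarrow\quad
\sqrt{N}(\hat\beta - \beta) \xrightarrow{d} N(0, \sigma^2 Q^{-1})
```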

OLS is CAN: numerical simulation

• Typical design of a study:

1. Recruit X% of a population (for instance a random sample of students at INSEAD).

2. Collect the data.

3. Perform the regression and get the OLS estimator.

• If you perform these steps independently a large number of times (thought experiment), then you will get a normal distribution of parameters.
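The thought experiment can be run on a computer. The Python sketch below is an illustration under assumed values (true slope 2, 100 observations per study, 1,000 studies, uniform errors); none of these numbers come from the slides. The errors are deliberately non-normal, so any normality in the distribution of the estimates comes from the large-sample argument, not from A6.

```python
import random
from statistics import mean, stdev


def ols_slope(x, y):
    """Slope of the OLS regression of y on a constant and x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def simulate_slopes(n_obs=100, n_studies=1000, beta0=1.0, beta1=2.0, seed=0):
    """Repeat 'draw a sample, run OLS' n_studies times; return the slopes."""
    rng = random.Random(seed)
    slopes = []
    for _ in range(n_studies):
        x = [rng.gauss(0, 1) for _ in range(n_obs)]
        # Mean-zero uniform errors, independent of x: A3 and A4 hold, A6 does not.
        y = [beta0 + beta1 * xi + rng.uniform(-1, 1) for xi in x]
        slopes.append(ols_slope(x, y))
    return slopes


slopes = simulate_slopes()
center = mean(slopes)   # should be close to the true slope (consistency)
spread = stdev(slopes)  # sampling standard deviation of the estimator
# Share of estimates within 1.96 standard deviations of their mean;
# close to 0.95 if the distribution is approximately normal.
share_within = sum(abs(s - center) <= 1.96 * spread for s in slopes) / len(slopes)
print(center, spread, share_within)
```

A histogram of `slopes` would show the bell shape directly; the 1.96 check is a cheap substitute that needs no plotting library.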

Important assumptions

• A1, A2, A3 are needed to solve the identification problem:

– With them, estimator is consistent.

• A4 is needed for the usual variance formula:

– A4 determines the form of the variance-covariance matrix.

• Violations of A3? Next session (identification issues).

• Violations of A4? Session on inference issues.

5. FINITE SAMPLE PROPERTIES OF THE OLS ESTIMATOR

The OLS Estimator is BLUE

• BLUE:

– Best … i.e. has minimum variance.

– Linear … i.e. is a linear function of the observed Y (given X).

– Unbiased … i.e. $E(\hat\beta \mid X) = \beta$.

– Estimator … i.e. it is just a function of the observations.

• Proof (a.k.a. the Gauss Markov Theorem):

OLS is BLUE

• Steps of the proof:

– OLS is LUE because of A1 and A3.

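– OLS is Best (this part uses A4):

1. Any other LUE can be written $b_0 = Cy$, and unbiasedness requires $CX = I$.

2. Then take the difference $Dy = Cy - b$, where $b$ is the OLS estimator, so that $D = C - (X'X)^{-1}X'$.

3. Show that $\mathrm{Var}(b_0 \mid X) = \mathrm{Var}(b \mid X) + \sigma^2 DD'$.

4. The result follows because $\sigma^2 DD'$ is positive semidefinite.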
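The algebra behind step 3 is short. The sketch below uses A4 in the form $\mathrm{Var}(\varepsilon \mid X) = \sigma^2 I$ and the notation $D = C - (X'X)^{-1}X'$ for the difference between the two linear estimators.

```latex
% Unbiasedness of b_0 = Cy requires CX = I, hence DX = CX - (X'X)^{-1}X'X = 0.
\mathrm{Var}(b_0 \mid X) = C \,\mathrm{Var}(\varepsilon \mid X)\, C' = \sigma^2 CC'

% Expand C = D + (X'X)^{-1}X'; the cross terms vanish because DX = 0.
CC' = \left(D + (X'X)^{-1}X'\right)\left(D + (X'X)^{-1}X'\right)'
    = DD' + (X'X)^{-1}

% Therefore
\mathrm{Var}(b_0 \mid X) = \sigma^2 (X'X)^{-1} + \sigma^2 DD'
                         = \mathrm{Var}(b \mid X) + \sigma^2 DD'
```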

Finite sample distribution

• The OLS estimator is normally distributed for a fixed N, as long as one assumes the normality of the residuals (A6).

• What is “large” N?

– Small: e.g. Acemoglu, Johnson and Robinson

– Large: e.g. Bennedsen and Perez Gonzalez.

– Statistical question: rate of convergence of the law of large numbers.

This is small N

Other examples

Large N

• Compustat (1,000s + observations)

• Execucomp

• Scanner data

Small N

• Cross-country regressions (< 100 points)

6. STATISTICS FOR READING THE OUTPUT OF OLS ESTIMATION

Statistics

• R squared

– What share of the variance of the outcome variable is explained by the covariates?

• t-test

– Is the coefficient on the variable of interest significant?

• Confidence intervals

– What interval includes the true coefficient with probability 95%?

• F statistic.

– Is the model better than random noise?

Reading Stata Output

R Squared

• Measures the share of the variance of Y (the dependent variable) explained by the model $X\hat\beta$, hence $R^2 = \mathrm{var}(X\hat\beta)/\mathrm{var}(Y)$.

• Note that if you regress Y on itself, the $R^2$ is 100%. The $R^2$ is not a good indicator of the quality of a model.

Tricky Question

• Should I choose the model with the highest R squared?

1. Adding a variable mechanically raises the R squared.

2. A model with endogenous variables (thus neither interpretable nor causal) can have a high R squared.

Adjusted R-Square

• Corrects for the number of variables in the regression.

$\mathrm{Adj}\ R^2 = 1 - \frac{N-1}{N-K}\,(1 - R^2)$

• Proposition: When adding a variable to a regression model, the adjusted R-square increases if and only if the square of the t-statistic on the added variable is greater than 1.

• Adj-R2: the threshold is arbitrary (why 1?), but the statistic is still interesting.
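A small numerical illustration of the adjusted R-square formula, in Python. The sample size and R-squared values are made up; the function name `adjusted_r2` is hypothetical. The example shows a case where adding a covariate raises the R squared slightly but lowers the adjusted R squared.

```python
def adjusted_r2(r2, n_obs, n_covariates):
    """Adjusted R squared; n_covariates is K, counting the constant."""
    return 1 - (n_obs - 1) / (n_obs - n_covariates) * (1 - r2)


# Hypothetical regressions on N = 50 observations.
adj_before = adjusted_r2(0.500, n_obs=50, n_covariates=5)
# One more covariate: the R squared rises a little (it always weakly does) ...
adj_after = adjusted_r2(0.505, n_obs=50, n_covariates=6)
# ... but the penalty for the extra parameter outweighs the gain in fit.
print(adj_before, adj_after)
```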

t-test and p value

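$t = \frac{\hat\beta_k}{\sqrt{\hat\sigma^2 S^{kk}}} \rightarrow S(N - K)$

where $S^{kk}$ is the k-th diagonal element of $(X'X)^{-1}$ and $S(N - K)$ is the Student distribution with $N - K$ degrees of freedom.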

• p-value: the smallest significance level at which the hypothesis that the coefficient is zero is rejected.

• Significance at 95%: p-value lower than 0.05.

– Typical critical value for |t| is 1.96 (when N is large, t is approximately normal).

• Significance at X%: p-value lower than 1 − X/100.

• Important significance levels: 10%, 5%, 1%.

– Depending on the size of the dataset.

• t-test is valid asymptotically under A1,A2,A3,A4.

• t-test is valid in finite samples if A6 also holds.

• Small sample t-tests… see Wooldridge NBER conference, “Recent advances in Econometrics.”
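For the one-regressor case with a constant (K = 2), the t-statistic on the slope can be computed by hand. The Python sketch below uses made-up data and a hypothetical function name `slope_t_stat`; in this special case the relevant diagonal element of $(X'X)^{-1}$ is one over the sum of squared deviations of x from its mean.

```python
from math import sqrt
from statistics import mean


def slope_t_stat(x, y):
    """t-statistic for H0: slope = 0 in the regression of y on a constant and x."""
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    # Sum of squared residuals and the unbiased variance estimate (K = 2).
    ssr = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2_hat = ssr / (n - 2)
    # Standard error of the slope: sqrt(sigma2_hat * S^{kk}) with S^{kk} = 1 / sxx.
    std_err = sqrt(sigma2_hat / sxx)
    return slope / std_err


# Hypothetical data, roughly y = 2x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
t = slope_t_stat(x, y)
print(t)
```

With only five observations the normal critical value of 1.96 is not appropriate; the comparison should be with the Student distribution with N − K = 3 degrees of freedom, and that comparison is only exact under A6.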

F Statistic

• Is the model as a whole significant?

• Hypothesis H0: all coefficients are equal to zero, except the constant.

• Alternative hypothesis: at least one coefficient is nonzero.

• Under the null hypothesis, in distribution:

$F = \frac{R^2 / (K - 1)}{(1 - R^2) / (N - K)} \rightarrow F(K - 1, N - K)$
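The F statistic can be recovered from the R squared alone, as this Python sketch shows. The inputs (R squared of 0.5, 50 observations, 5 covariates including the constant) are made-up values and `f_statistic` is a hypothetical name.

```python
def f_statistic(r2, n_obs, n_covariates):
    """F statistic for H0: all coefficients except the constant are zero.

    n_covariates is K, counting the constant.
    """
    numerator = r2 / (n_covariates - 1)
    denominator = (1 - r2) / (n_obs - n_covariates)
    return numerator / denominator


f_value = f_statistic(0.5, n_obs=50, n_covariates=5)
print(f_value)
```

The value is then compared with the critical value of the F(K − 1, N − K) distribution, here F(4, 45).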

7. TRICKY QUESTIONS

Tricky Questions

• Can I drop a non significant variable?

• What if two variables are very strongly correlated (but not perfectly correlated)?

• How do I deal (simply) with missing/miscoded data?

• How do I identify influential observations?

Tricky Questions

• Can I drop a non significant variable?

– A variable may be non significant but still have a significant correlation with other covariates…

– Dropping the non significant covariate may unduly increase the significance of the coefficient of interest. (recently seen in an OECD working paper).

• Conclusion: controls stay.

Tricky Questions

• What if two variables are very strongly correlated (but not perfectly)?

– One coefficient tends to be very significant and positive…

– While the coefficient of the other variable is very significant and negative!

• Beware of multicollinearity.

Tricky Questions

• How do I deal (simply) with missing data?

– Create dummies for missing covariates instead of dropping them from the regression.

– If it is the dependent variable, focus on the subset of non missing dependents.

– Argue in the paper that it is missing at random (if possible).

• For more advanced material, see session on Heckman selection model.

How do I identify influential points?

• Run the regression with the dataset except the point in question.

• Identify influential observations by making a scatterplot of the dependent variable and the prediction Xb.
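The first suggestion (re-running the regression without one point) can be automated. The Python sketch below is one possible implementation for the one-regressor case, on made-up data in which the last observation is far from the others; `leave_one_out_changes` is a hypothetical helper, not a Stata or library function.

```python
from statistics import mean


def ols_slope(x, y):
    """Slope of the OLS regression of y on a constant and x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def leave_one_out_changes(x, y):
    """Absolute change in the slope when each observation is dropped in turn."""
    full = ols_slope(x, y)
    changes = []
    for i in range(len(x)):
        x_rest = x[:i] + x[i + 1:]
        y_rest = y[:i] + y[i + 1:]
        changes.append(abs(ols_slope(x_rest, y_rest) - full))
    return changes


# Hypothetical data: five points close to y = x, plus one point far off the line.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 10.0]
y = [1.1, 1.9, 3.2, 3.9, 5.1, 2.0]

changes = leave_one_out_changes(x, y)
most_influential = max(range(len(x)), key=lambda i: changes[i])
print(most_influential, changes[most_influential])
```

An observation whose removal moves the coefficient of interest by much more than the others deserves a closer look (miscoding, different population) before any decision to keep or drop it.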

Tricky Questions

• Can I drop the constant in the model?

– No.

• Can I include an interaction term (or a square) without the simple terms?

– No.

NEXT SESSIONS …

LOOKING FORWARD

Next session

• What if some of my covariates are measured with error?

– Income, degrees, performance, network.

• What if some variable is not included (because you forgot or don’t have it) and still has an impact on y?

– « Omitted variable bias »

Important points from this session

• REMEMBER A1 to A6 by heart.

– Which assumptions are crucial for the asymptotics?

– Which assumptions are crucial for the finite sample validity of the OLS estimator?

• START REGRESSING IN STATA TODAY !

– regress and outreg2