(Better) Bootstrap Confidence Intervals


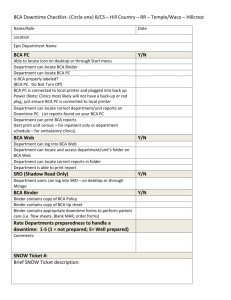

TAU Bootstrap Seminar 2011, Dr. Saharon Rosset

Shachar Kaufman

Based on Efron and Tibshirani’s “An Introduction to the Bootstrap”, Chapter 14

Agenda

- What’s wrong with the simpler intervals?
- The (nonparametric) BCa method
- The (nonparametric) ABC method
  - Not really: only a brief introduction

Example: simpler intervals are bad

$$\theta := \operatorname{var}(A), \qquad \hat\theta := \frac{1}{n}\sum_{i=1}^{n}\left(A_i - \bar A\right)^2$$

- Under the assumption that $(A_i, B_i) \sim \mathcal{N}(\mu, \Sigma)$ i.i.d.:
  - We have an exact analytical interval
  - We can do a parametric bootstrap
- Under the assumption that $(A_i, B_i) \sim F$ i.i.d.:
  - We can do a nonparametric bootstrap

Why are the simpler intervals bad?

- The standard (normal) confidence interval assumes symmetry around $\hat\theta$
- Bootstrap-t is often erratic in practice
  - “Cannot be recommended for general nonparametric problems”
- Percentile suffers from low coverage
  - It assumes the nonparametric distribution of $\hat\theta^*$ is representative of that of $\hat\theta$ (e.g. that it has mean $\hat\theta$, just as $\hat\theta$ has mean $\theta$)
- The standard and percentile methods assume homogeneous behavior of $\hat\theta$, whatever $\theta$ is
  - (e.g. the standard deviation of $\hat\theta$ does not change with $\theta$)

A more flexible inference model

[Figure: account for higher-order statistics of $\hat\theta^*$: mean, standard deviation, skewness]

- If $\hat\theta \sim \mathcal{N}(\theta, \sigma^2)$ doesn’t work for the data, maybe we could find a monotone transform $\phi := m(\theta)$, $\hat\phi := m(\hat\theta)$, and constants $z_0$ and $a$ for which we can accept that
  $$\hat\phi \sim \mathcal{N}\left(\phi - z_0\sigma_\phi,\ \sigma_\phi^2\right), \qquad \sigma_\phi := 1 + a\phi$$
- Additional unknowns:
  - $m(\cdot)$ allows a flexible parameter-description scale
  - $z_0$ allows bias: $\mathbb{P}(\hat\phi < \phi) = \Phi(z_0)$
  - $a$ allows “$\sigma^2$” to change with $\theta$
- As we know, “more flexible” is not necessarily “better”
- Under broad conditions, in this case it is (justified later)

Where does this new model lead?
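$$\hat\phi \sim \mathcal{N}\left(\phi - z_0\sigma_\phi,\ \sigma_\phi^2\right), \qquad \sigma_\phi := 1 + a\phi$$

- Assume known $a$ and $z_0 = 0$, an observed $\hat\phi = 0$, and initially that $\phi = \phi_{lo,0} := 0$, hence $\sigma_{\phi,0} := 1$
- Calculate a standard $\alpha$-confidence endpoint from this:
  $z^{(\alpha)} := \Phi^{-1}(\alpha)$, $\phi_{lo,1} := z^{(\alpha)}\sigma_{\phi,0} = z^{(\alpha)}$
- Now re-examine the actual standard deviation, this time assuming that $\phi = \phi_{lo,1}$. According to the model, it will be $\sigma_{\phi,1} := 1 + a\phi_{lo,1} = 1 + az^{(\alpha)}$
- OK, but this leads to an updated endpoint $\phi_{lo,2} := z^{(\alpha)}\sigma_{\phi,1} = z^{(\alpha)}\left(1 + az^{(\alpha)}\right)$
- Which leads to an updated $\sigma_{\phi,2} = 1 + az^{(\alpha)}\left(1 + az^{(\alpha)}\right) = 1 + az^{(\alpha)} + \left(az^{(\alpha)}\right)^2$
- If we continue iteratively to infinity this way (a geometric series, convergent when $|az^{(\alpha)}| < 1$), we end up with the confidence interval endpoint
  $$\phi_{lo,\infty}^{(\alpha)} = \frac{z^{(\alpha)}}{1 - az^{(\alpha)}}$$
- Do this exercise considering $z_0 \neq 0$ and get
  $$\phi_{lo,\infty}^{(\alpha)} = z_0 + \frac{z_0 + z^{(\alpha)}}{1 - a\left(z_0 + z^{(\alpha)}\right)}$$
- Similarly for $\phi_{up,\infty}^{(\alpha)}$, with $z^{(1-\alpha)}$ in place of $z^{(\alpha)}$

Enter BCa

- “Bias-corrected and accelerated”
- Like the percentile confidence interval:
  - Both ends are percentiles $\hat\theta^{*(\alpha_1)}, \hat\theta^{*(\alpha_2)}$ of the $B$ bootstrap instances of $\hat\theta^*$
  - Just not the simple $\alpha_1 := \alpha$, $\alpha_2 := 1 - \alpha$

BCa

- Instead:
  $$\alpha_1 := \Phi\left(\hat z_0 + \frac{\hat z_0 + z^{(\alpha)}}{1 - \hat a\left(\hat z_0 + z^{(\alpha)}\right)}\right), \qquad \alpha_2 := \Phi\left(\hat z_0 + \frac{\hat z_0 + z^{(1-\alpha)}}{1 - \hat a\left(\hat z_0 + z^{(1-\alpha)}\right)}\right)$$
- $z_0$ and $a$ are parameters we will estimate
  - When both are zero, we get the good old percentile CI
- Notice we never had to explicitly find $\phi := m(\theta)$
- $z_0$ tackles bias, $\mathbb{P}(\hat\phi < \phi) = \Phi(z_0)$, so (since $m$ is monotone) we estimate
  $$\hat z_0 := \Phi^{-1}\left(\frac{\#\{\hat\theta^*(b) < \hat\theta\}}{B}\right)$$
- $a$ accounts for a standard deviation of $\hat\theta$ which varies with $\theta$ (linearly, on the “normal scale” $\phi$)
- One suggested estimator for $a$ is via the jackknife:
  $$\hat a := \frac{\sum_{i=1}^{n}\left(\hat\theta_{(\cdot)} - \hat\theta_{(i)}\right)^3}{6\left[\sum_{i=1}^{n}\left(\hat\theta_{(\cdot)} - \hat\theta_{(i)}\right)^2\right]^{1.5}}$$
  where $\hat\theta_{(i)} := t(x \text{ without sample } i)$ and $\hat\theta_{(\cdot)} := \frac{1}{n}\sum_{i=1}^{n}\hat\theta_{(i)}$
- You won’t find the rationale behind this formula in the book (though it is clearly related to one of the standard ways to define skewness)

Theoretical advantages of BCa

- Transformation respecting
  - If the interval for $\theta$ is $(\hat\theta_{lo}, \hat\theta_{up})$, then the interval for a monotone increasing $u(\theta)$ is $(u(\hat\theta_{lo}), u(\hat\theta_{up}))$
  - So no need to worry about finding transforms of $\theta$ where confidence intervals perform well
    - Which is necessary in practice with the bootstrap-t CI
    - And with the standard CI (e.g. Fisher’s correlation-coefficient transform)
  - The percentile CI is also transformation respecting
- Accuracy
  - We want $\hat\theta_{lo}^{(\alpha)}$ s.t. $\mathbb{P}(\theta < \hat\theta_{lo}^{(\alpha)}) = \alpha$
  - But a practical $\hat\theta_{lo}^{(\alpha)}$ is an approximation, with $\mathbb{P}(\theta < \hat\theta_{lo}^{(\alpha)}) \cong \alpha$
  - BCa (and bootstrap-t) endpoints are “second-order accurate”: $\mathbb{P}(\theta < \hat\theta_{lo}^{(\alpha)}) = \alpha + O(1/n)$
  - This is in contrast to the standard and percentile methods, which only converge at rate $O(1/\sqrt{n})$ (“first-order accurate”), i.e. errors one order of magnitude greater

But BCa is expensive

- The use of direct bootstrapping to calculate delicate statistics such as $z_0$ and $a$ requires a large $B$ to work satisfactorily
- Fortunately, BCa can be approximated analytically (with a Taylor expansion, for differentiable $t(x)$) so that no Monte Carlo simulation is required
- This is the ABC (approximate bootstrap confidence) method, which retains the good theoretical properties of BCa

The ABC method

- Only an introduction (Chapter 22)
- Discusses the “how”, not the “why”
- For additional details see DiCiccio and Efron 1992 or 1996
- Given the estimator in resampling form $\hat\theta = T(P)$
  - Recall $P$, the “resampling vector”, is an $n$-dimensional random variable with components $P_j := \mathbb{P}(x_1^* = x_j)$
  - Recall $P^0 := \left(\frac{1}{n}, \frac{1}{n}, \ldots, \frac{1}{n}\right)$
- Second-order Taylor analysis of the estimate as a function of the bootstrap resampling vector $P$:
  $$\dot T_i := J_i = \left.\frac{\partial T(P)}{\partial P_i}\right|_{P = P^0}, \qquad \ddot T_i := H_{ii} = \left.\frac{\partial^2 T(P)}{\partial P_i^2}\right|_{P = P^0}$$
- Can approximate all the BCa parameter estimates (i.e. estimate the parameters in a different way):
  - $\hat\sigma = \left(\frac{1}{n^2}\sum_{i=1}^{n}\dot T_i^2\right)^{1/2}$
  - $\hat a = \frac{1}{6}\,\frac{\sum_{i=1}^{n}\dot T_i^3}{\left(\sum_{i=1}^{n}\dot T_i^2\right)^{3/2}}$
  - $\hat z_0 = \hat a - \hat\gamma$, where
    - $\hat\gamma := \frac{\hat b}{\hat\sigma} - \hat c_q$
    - $\hat b := \frac{1}{2n^2}\sum_{i=1}^{n}\ddot T_i$
    - $\hat c_q$ := something akin to a Hessian entry, but along a specific direction that is not aligned with any coordinate axis (the “least favorable family” direction)
- And the ABC interval endpoint:
  $$\hat\theta_{ABC}[1-\alpha] := T\left(P^0 + \frac{\lambda\hat\delta}{\hat\sigma}\right)$$
  where $\lambda := \frac{\omega}{(1 - \hat a\omega)^2}$, $\hat\delta := \dot T(P^0)$, i.e. the vector $(\dot T_1, \ldots, \dot T_n)$, and $\omega := \hat z_0 + z^{(1-\alpha)}$
- Simple and to the point, ain’t it?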
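The nonparametric BCa recipe from these slides can be sketched in a few lines of pure Python. This is a minimal illustration, assuming the slides' formulas for $\hat z_0$, the jackknife $\hat a$ and the adjusted levels $\alpha_1, \alpha_2$. The helper names (`plugin_var`, `iterate_endpoint`, `bca_level`, `jackknife_acceleration`, `bca_interval`), the nearest-rank percentile rule and the clipping of the bias proportion are hypothetical choices for this sketch, not taken from the book. `iterate_endpoint` simply replays the fixed-point derivation ($z_0 = 0$, observed $\hat\phi = 0$) numerically. The ABC approximation is not implemented, since the slides leave $\hat c_q$ unspecified.

```python
import math
import random
from statistics import NormalDist

PHI = NormalDist()  # standard normal: PHI.cdf is Phi, PHI.inv_cdf is Phi^{-1}


def plugin_var(xs):
    """Plug-in variance sum((x_i - mean)^2) / n, the example statistic."""
    m = sum(xs) / len(xs)
    return sum((v - m) ** 2 for v in xs) / len(xs)


def iterate_endpoint(a, alpha, n_iter=200):
    """Replay phi_{lo,k+1} = z * (1 + a * phi_{lo,k}) with z0 = 0 and phi-hat = 0.

    Converges to z / (1 - a z) when |a z| < 1.
    """
    z = PHI.inv_cdf(alpha)
    phi_lo = 0.0  # phi_{lo,0}
    for _ in range(n_iter):
        # z^(alpha) times the model stdev sigma = 1 + a * phi_{lo,k}
        phi_lo = z * (1.0 + a * phi_lo)
    return phi_lo


def bca_level(z0, a, alpha):
    """Adjusted level Phi(z0 + (z0 + z) / (1 - a (z0 + z))), z = Phi^{-1}(alpha)."""
    w = z0 + PHI.inv_cdf(alpha)
    return PHI.cdf(z0 + w / (1.0 - a * w))


def jackknife_acceleration(xs, stat):
    """a-hat = sum(d_i^3) / (6 * sum(d_i^2)^1.5), d_i = theta_(.) - theta_(i)."""
    n = len(xs)
    loo = [stat(xs[:i] + xs[i + 1:]) for i in range(n)]  # theta_(i): drop sample i
    mean_loo = sum(loo) / n  # theta_(.)
    d = [mean_loo - t for t in loo]
    den = 6.0 * sum(v * v for v in d) ** 1.5
    return sum(v ** 3 for v in d) / den if den > 0 else 0.0


def bca_interval(xs, stat, alpha=0.05, B=2000, seed=0):
    """BCa interval with nominal level alpha in each tail, from B resamples."""
    rng = random.Random(seed)
    n = len(xs)
    theta_hat = stat(xs)
    boot = sorted(
        stat([xs[rng.randrange(n)] for _ in range(n)]) for _ in range(B)
    )

    # z0-hat = Phi^{-1}(#{theta*_b < theta-hat} / B). Clipping the proportion
    # into (0, 1) is a safeguard added for this sketch, not part of the slides.
    p = sum(t < theta_hat for t in boot) / B
    p = min(max(p, 0.5 / B), 1.0 - 0.5 / B)
    z0 = PHI.inv_cdf(p)
    a = jackknife_acceleration(xs, stat)

    def percentile(level):
        # Nearest-rank percentile of the sorted bootstrap replicates.
        k = min(B - 1, max(0, math.ceil(level * B) - 1))
        return boot[k]

    return percentile(bca_level(z0, a, alpha)), percentile(bca_level(z0, a, 1.0 - alpha))
```

For example, with a skewed sample built as `rng = random.Random(1)` and `data = [rng.expovariate(1.0) for _ in range(30)]`, calling `bca_interval(data, plugin_var)` gives a 90% interval for the variance ($\alpha = 0.05$ in each tail). Passing $\hat z_0 = \hat a = 0$ to `bca_level` returns $\alpha$ itself, which is the plain percentile interval mentioned above.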