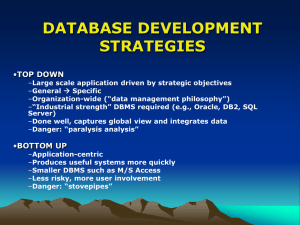



UQ: from end to end
Tony O’Hagan
12/8/2014, UQ Summerschool 2014

Outline
Session 1: Quantifying input uncertainty
Information
Modelling
Elicitation
Coffee break: Propagation!
Session 2: Model discrepancy and calibration
All models are wrong
Impact of model discrepancy
Modelling model discrepancy

UQ: from end to end
Session 1: Quantifying input uncertainty

Context
You have a model
To simulate or predict some real-world process
I’ll call it a simulator
For a given use of the simulator you are unsure of the true or correct values of inputs
This uncertainty is a major component of UQ
Propagating it through the simulator is a fundamental step in UQ
We need to express that uncertainty in the form of probability distributions
But how?
I feel that this is a neglected area in UQ
Distributions assumed, often with no discussion of where they came from

Focus of this session
Probability distributions for inputs
Representing the analyst’s knowledge/uncertainty
What they mean
Interpretation of probability
Where they come from
Analysis of data and/or judgement
Elicitation
Principles
Single input
Multiple inputs
Multiple experts

The analyst
The distributions should represent the best knowledge of the model user about the inputs
I will refer to the model user as the analyst
They are the analyst’s responsibility
The analyst is the one who is interested in the simulator output
For a particular application
And some or all of the inputs refer specifically to that application
The analyst must own the input distributions
They should represent best knowledge
Obviously! Anything else is unscientific
Less input uncertainty means (generally) less output uncertainty

What probability?
Before we go further, we need to understand how a probability distribution represents someone’s knowledge
The question goes right to the heart of what probability means
Example: We are interested in X = the proportion of people infected with HIV who will develop AIDS within 10 years when treated with a new drug
X will be an input to a clinical trial simulator
To assist the pharmaceutical company in designing the drug’s development plan
Analyst Mary expresses a probability distribution for X

Mary’s distribution
The stated distribution is shown on the right
It specifies how probable any particular values of X are
E.g. it says there is a probability of almost 0.7 that X is below 0.4
And the expected value of X is 0.35
It even gives a nontrivial probability to X being less than 0.2
Which would represent a major reduction in HIV progression

How can X have probabilities?
Almost everyone learning probability is taught the frequency interpretation
The probability of something is the long run relative frequency with which it occurs in a very long sequence of repetitions
How can we have repetitions of X?
It’s a one-off: it will only ever have one value
It’s that unique value we’re interested in
Simulator inputs are almost always like this – they’re one-off!
Mary’s distribution can’t be a probability distribution in that sense
So what do her probabilities actually mean?
And does she know?

Mary’s probabilities
Mary’s probability 0.7 that X < 0.4 is a judgement
She thinks it’s more likely to be below 0.4 than above
So in principle she would bet even money on it
In fact she would bet £2 to win £1 (because 0.7 > 2/3)
Her expectation of 0.35 is a kind of best estimate
Not a long run average over many repetitions
Her probabilities are an expression of her beliefs
They are personal judgements
You or I would have different probabilities
We want her judgements because she’s the expert!
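The betting argument for Mary's probability can be checked with a few lines of arithmetic. The sketch below is an illustration added here, not part of the slides; the function names `expected_gain` and `break_even_probability` are hypothetical. It shows that staking £2 to win £1 is attractive only if the bettor's probability exceeds 2/3, which is why a probability of 0.7 supports that bet.

```python
def expected_gain(p: float, stake: float, win: float) -> float:
    """Expected gain of a bet that pays `win` with probability p
    and loses `stake` with probability 1 - p."""
    return p * win - (1.0 - p) * stake


def break_even_probability(stake: float, win: float) -> float:
    """Probability at which the bet has zero expected gain."""
    return stake / (stake + win)


mary_p = 0.7  # Mary's probability that X < 0.4

# Even-money bet: favourable for any probability above 0.5
print("even money:", expected_gain(mary_p, stake=1, win=1))

# Bet 2 to win 1: favourable only above the break-even value 2/3
print("2 to win 1:", expected_gain(mary_p, stake=2, win=1))
print("break-even:", break_even_probability(stake=2, win=1))
```

With a probability of 0.6 the same 2-to-1 bet would have a negative expected gain, so the odds a person is willing to accept bound their probability from below.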
We need a new definition of probability

Subjective probability
The probability of a proposition E is a measure of a person’s degree of belief in the truth of E
If they are certain that E is true then P(E) = 1
If they are certain it is false then P(E) = 0
Otherwise P(E) lies between these two extremes

Exercise 1 – How many Muslims in Britain?
Refer to the two questions on your sheet
The first asks for a probability
Make your own personal judgement
If you don’t already have a good feel for the probability scale, you may find it useful to think about betting odds
The second asks for another probability

Subjective includes frequency
The frequency and subjective definitions of probability are compatible
If the results of a very long sequence of repetitions are available, they agree
Frequency probability equates to the long run frequency
All observers who accept the sequence as comprising repetitions will give that frequency as their (personal/subjective) probability for the next (or any future) result in the sequence
Subjective probability extends frequency probability
But also seamlessly covers propositions that are not repeatable
It’s also more controversial

It doesn’t include prejudice etc!
The word “subjective” has derogatory overtones
Subjectivity should not admit prejudice, bias, superstition, wishful thinking, sloppy thinking, manipulation ...
Subjective probabilities are judgements but they should be careful, honest, informed judgements
As “objective” as possible without ducking the issue
Using best practice
Formal elicitation methods
Bayesian analysis
Probability judgements go along with all the other judgements that a scientist necessarily makes
And should be argued for in the same careful, honest and informed way

What about data?
I’ve presented the analyst’s probability distributions as a matter of pure subjective judgement – what about data?
Many possible scenarios:
X is a parameter for which there is a published value
Analyst has one or more direct experimental evaluations for X
Analyst has data relating more or less directly to X
Analyst has some hard data but also personal expertise about X
Analyst relies on personal expertise about X
Analyst seeks input from an expert on X
…

The case of a published value
The published value may come with a completely characterised probability distribution for X representing uncertainty in the value
The analyst simply accepts this distribution as her own judgement
Or it may not
The analyst needs to consider the uncertainty in X around the published value P
X = P + E, where E is the error
Analyst formulates her own probability distribution for E
The published value P may simply come with a standard deviation
The analyst accepts this as one judgement about E

Using data – principles
The appropriate framework for using data is Bayesian statistics
Because it delivers a probability distribution for X
Classical frequentist statistics can’t do that
Even a confidence interval is not a probability statement about X
The data are related to X through a likelihood function
Derived from a statistical model
This is combined with whatever additional knowledge the analyst may have
In the form of a prior distribution
Combination is performed by Bayes’ theorem
The result is the analyst’s posterior distribution for X

Using data – practicalities
If data are highly informative about X, prior information may not matter
Use a conventional non-informative prior distribution
Otherwise the analyst formulates her own prior distribution for X
Bayesian analysis can be complex
Analyst is likely to need the services of a Bayesian statistician
The likelihood/model is also a matter of judgement!
Although I will not delve into this today

Summary
We have identified several situations where distributions need to be formulated by personal judgement
No good data – analyst formulates distribution for X
Published data does not have complete characterisation of uncertainty – analyst formulates distribution for E
Data supplemented by additional expertise – analyst formulates prior distribution for X
Analyst may seek judgements of one or more experts
Rather than relying on her own
Particularly when the stakes are high
We have identified just one situation where personal judgements are not needed
Published data with completely characterised uncertainty

Elicitation
The process of representing the knowledge of one or more persons (experts) concerning an uncertain quantity as a probability distribution for that quantity
Typically conducted as a dialogue between
the experts – who have substantive knowledge about the quantity (or quantities) of interest – and
a facilitator – who has expertise in the process of elicitation
Ideally face to face but may also be done by video-conference, teleconference or online

Some history
The idea of formally representing uncertainty using subjective probability judgements began to be taken seriously in the 1960s
For instance, for judgement of extreme risks
Psychologists became interested
How do people make probability judgements?
What mental processes are used, and what does this tell us about the brain’s processing generally?
They found many ways that we make bad judgements
The heuristics and biases movement
And continued to look mostly at how we get it wrong
Since this told them a lot about our mental processes

Meanwhile ...
Statisticians increasingly made use of subjective probabilities
Growth of Bayesian statistics
Some formal elicitation but mostly unstructured judgements
Little awareness of the work in psychology
Reinforced recently by UQ with uncertain simulator inputs
Our interests are more complex
Not really interested in single probabilities
Whole probability distributions
Multivariate distributions
We want to know how to get it right
Psychology provides almost no help with these challenges

Heuristics and biases
Our brains evolved to make quick decisions
Heuristics are short-cut reasoning techniques
Allow us to make good judgements quickly in familiar situations
Judgement of probability is not something that we evolved to do well
The old heuristics now produce biases
Anchoring and adjustment
Availability
Overconfidence
And many others

Anchoring and adjustment
Exercise 1 was designed to exhibit this heuristic
The probabilities should on average be different in the two groups
When asked to make two related judgements, the second is affected by the first
The second is judged relative to the first
By adjustment away from the first judgement
The first is called the anchor
Adjustment is typically inadequate
Second response too close to the first (anchor)
Anchoring can be strong even when obviously not really relevant to the second question
Just putting any numbers into the discussion creates anchors
Exercise 1

Availability
The probability of an event is judged more likely if we can quickly bring to mind instances of it
Things that are more memorable are deemed more probable
High profile train accidents in the UK lead people to imagine rail travel is more risky than it really is
My judgement of the risk of dying from a particular disease will be increased if I know (of) people who have the disease or have died from it
Important for analyst to review all the evidence

Overconfidence
It is generally said that experts are overconfident
When asked to give 95% intervals, say, far fewer than 95% contain the true value
Several possible explanations
Wish to demonstrate expertise
Anchoring to a central estimate
Difficulty of judging extreme events
Not thinking ‘outside the box’
Expertise often consists of specialist heuristics
Situations we elicit judgements on are not typical
Probably over-stated as a general phenomenon
Experts can be under-confident if afraid of consequences
A matter of personality and feeling of security
Evidence of over-confidence is not from real experts making judgements on serious questions

The keys to good elicitation
First, pay attention to the literature on psychology of elicitation
How you ask a question influences the answer
Second, ask about the right things
Things that experts are likely to assess most accurately
Third, prepare thoroughly
Provide help and training for experts
These are built into the SHELF system
Sheffield Elicitation Framework

The SHELF system
SHELF is a package of documents and simple software to aid elicitation
General advice on conducting the elicitation
Templates for recording the elicitation
Suitable for several different basic methods
Annotated versions of the templates with detailed guidance
Some R functions for fitting distributions and providing feedback
SHELF is freely available and comments and suggestions for additions are welcomed
Developed by Tony O’Hagan and Jeremy Oakley
R functions by Jeremy
http://tonyohagan.co.uk/shelf

A SHELF template
Word document
Facilitator follows a carefully constructed sequence of questions
Final step invites experts to give their own feedback
The tertile method
One of several supported in SHELF

Annotated template
For facilitator’s guidance
Advice on each field of the template
Ordinary text says what is required in each field
Text in brackets gives advice on how to work with experts
Text in italics says why we are doing it this way
Based on findings in psychology

Let’s see how it works
SHELF templates provide a carefully structured sequence of steps
Informed by psychology and practical experience
I’ll work through these, using the following illustrative example
An Expert is asked for her judgements about the distance D between the airports of Paris Charles de Gaulle and Chicago O’Hare in miles
She has experience of flying distances but has not flown this route before
She knows that from LHR to JFK is about 3500 miles

Credible range L to U
Expert is asked for lower and upper credible bounds
Expert would be very surprised if X was found to be below the lower credible bound or above the upper credible bound
It’s not impossible to be outside the credible range, just highly unlikely
Practical interpretation might be a probability of 1% that X is below L and 1% that it’s above U
Example
Expert sets lower bound L = 3500
CDG to ORD surely more than LHR to JFK
Upper bound U = 5000
Additional flying distance for CDG to ORD surely less than 1500

The median M
The value of x for which the expert judges X to be equally likely to be above or below x
Probability 0.5 (or 50%) below and 0.5 above
Like a toss of a coin
Or chopping the range into two equally probable parts
If the expert were asked to choose either to bet on X < x or on X > x, he/she should have no preference
It’s a specific kind of ‘estimate’ of X
Need to think, not just go for mid-point of the credible range
[Figure: illustration with L = 0, U = 1, M = 0.36]
Example
Expert chooses median M = 4000

The quartiles Q1 and Q3
The lower quartile Q1 is the p = 25% quantile
The expert judges X < x to have probability 0.25
Like tossing two successive Heads with a coin
Equivalently, x divides the range below the median into two equi-probable parts
‘Less than Q1’ & ‘between Q1 and M’
Q1 should generally be closer to M than to L
[Figure: illustration with L = 0, U = 1, M = 0.36, Q1 = 0.25, Q3 = 0.49]
Similarly, the upper quartile Q3 is the p = 75% quantile
Q1, M and Q3 divide the range into four equi-probable parts
Example
Expert chooses Q1 = 3850, Q3 = 4300

Then fit a distribution
Any convenient distribution
As long as it fits the elicited summaries adequately
SHELF has software for fitting a range of standard distributions
At this point, the choice should not matter
The idea is that we have elicited enough
Any reasonable distribution choice will be similar to any other
Elicitation can never be exact
The elicited summaries are only approximate anyway
If the choice does matter
i.e. different fitted distributions give different answers to the problem for which we are doing the elicitation
We can try to remove the sensitivity by eliciting more summaries
Or involving more experts

Exercise 2
So let’s do it!
We’re going to elicit your beliefs about one of the following (you can choose!)
Number of gold medals to be won by China in 2016 Olympics
Length of the Yangtze River
Population of Beijing in 2011
Proportion of the total world land area covered by China

Do we need a facilitator?
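Before turning to that question, the fitting step for the airport-distance example can be sketched in a few lines. This is an illustration only and not the method used by the SHELF software: it assumes, purely for convenience, that D - L follows a log-normal distribution, matches the elicited median exactly and matches the interquartile spread on the log scale. The helper names `cdf` and `quantile` are hypothetical.

```python
import math
from statistics import NormalDist

# Elicited summaries from the airport-distance example (miles)
L, Q1, M, Q3, U = 3500, 3850, 4000, 4300, 5000

# Assumption: log(D - L) ~ Normal(mu, sigma)
mu = math.log(M - L)                      # reproduces the median exactly
z75 = NormalDist().inv_cdf(0.75)          # standard normal upper quartile
sigma = (math.log(Q3 - L) - math.log(Q1 - L)) / (2 * z75)
fitted = NormalDist(mu, sigma)


def cdf(d: float) -> float:
    """Fitted probability that D <= d, for d > L."""
    return fitted.cdf(math.log(d - L))


def quantile(p: float) -> float:
    """Fitted p-quantile of D."""
    return L + math.exp(fitted.inv_cdf(p))


# Feedback: compare fitted quantiles with what the expert said
for p, elicited in [(0.25, Q1), (0.50, M), (0.75, Q3)]:
    print(f"p = {p:.2f}: elicited {elicited}, fitted {quantile(p):.0f}")
print(f"fitted P(D > U) = {1 - cdf(U):.3f}")
```

The fitted quartiles will not reproduce the elicited ones exactly, and the fitted probability above U can be compared with the intended value of about 1%. Feeding such discrepancies back to the expert is the kind of check the slides describe: if they matter for the problem at hand, elicit more summaries or try another distribution.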
Yes, if the simulator output is sufficiently important
A skilled facilitator is essential to get the most accurate and reliable representation of the expert’s knowledge
At least for the most influential inputs
Otherwise, no
The analyst can simply quantify her own judgements
But it’s still very useful to follow the SHELF process
In effect, the analyst interrogates herself
Playing the role of facilitator as well as that of expert

Multiple inputs
Hitherto we’ve basically considered just one input X
In practice, simulators almost always have multiple inputs
Then we need to think about dependence
Two or more uncertain quantities are independent if:
When you learn something about one of them it doesn’t change your beliefs about the others
It’s a personal judgement, like everything else in elicitation!
They may be independent for one expert but not for another
Independence is nice
Independent inputs can just be elicited separately

Exercise 3
Which of the following sets of quantities would you consider independent?
1. The average weight B of black US males aged 40 and the average weight W of white US males aged 40
2. My height H and my age A
3. The time T taken by the Japanese bullet train to travel from Tokyo to Kyoto and the distance D travelled
4. The atomic numbers of Calcium (Ca), Silver (Ag) and Arsenic (As)

Eliciting dependence
If quantities are not independent we must elicit the nature and magnitude of dependence between them
Remembering that probabilities are the best summaries to elicit
Joint probabilities
Probability that X takes some x-values and Y takes some y-values
Conditional probabilities
Probability that Y takes some y-values if X takes some x-values
Much harder to think about than probabilities for a single quantity
Perhaps the simplest is the quadrant probability
Probability both X and Y are above their individual medians

Bivariate quadrant probability
First elicit medians
Now elicit quadrant probability
Value indicates direction and strength of dependence
[Figure: quadrant diagram with the two medians marked, probability 0.5 on each side of each median, and the quadrant probability shown as “?”]
It can’t be negative
Or more than 0.5
0.25 if X and Y are independent
Greater if positively correlated
0.5 if when one is above its median the other must be
Less than 0.25 if negatively correlated
Zero if they can’t both be above their medians

Higher dimensions
This is already hard
Just for two uncertain quantities
In order to elicit dependence in any depth we will need to elicit several more joint or conditional probabilities
More than two variables – more complex still!
Even with just three quantities...
Three pairwise bivariate distributions
With constraints
The three-way joint distribution is not implied by those, either
We can’t even visualise or draw it!
There is no clear understanding among elicitation practitioners on how to elicit dependence

Avoiding the problem
It would be so much easier if the quantities we chose to elicit were independent
i.e. no dependence or correlation between them
Then eliciting a distribution for each quantity would be enough
We wouldn’t need to elicit multivariate summaries
The trick is to ask about the right quantities
Redefine inputs so they become independent
This is called elaboration
Or structuring
[Figure: plot with axes x and y, each running from -3 to 3]

Example – two treatment effects
A clinical trial will compare a new treatment with an existing treatment
Existing treatment effect A is relatively well known
Expert has low uncertainty
But added uncertainty due to the effects of the sample population
New treatment effect B is more uncertain
Evidence mainly from small-scale Phase III trial
A and B will not be independent
Mainly because of the trial population effect
If A is at the high end of the expert’s distribution, she would expect B also to be relatively high
Can we break this dependence with elaboration?

Relative effect
In the two treatments example, note that in clinical trials attention often focuses on the relative effect R = B/A
When effect is bad, like deaths, this is called relative risk
Expert may judge R to be independent of A
Particularly if random trial effect is assumed multiplicative
If additive we might instead consider A independent of D = B – A
But this is unusual
So elicit separate distributions for R and A
The joint distribution of (A, B) is now implicit
Can be derived if needed
But often the motivating task can be rephrased in terms of (A, R)

Trial effect
Instead of simple structuring with the relative risk R, we can explicitly recognise the cause of the correlation
Let T be the trial effect due to difference between the trial patients and the wider population
Let E and N be efficacies of existing and new treatments in the wider population
Then A = E × T and B = N × T
Expert may be comfortable with independence of T, E and N
With E well known, T fairly well known and N
more uncertain
We now have to elicit distributions for three quantities instead of two
But can possibly assume them independent

General principles
Independence or dependence are in the head of the expert
Two quantities are dependent if learning about one of them would change his/her beliefs about the other
Explore possible structures with the expert(s)
Find out how they think about these quantities
Expertise often involves learning how to break a problem down into independent components
SHELF does not yet handle multivariate elicitation
But it does include an explicit structuring step
Which we can now see is potentially very important!
Templates for some special cases expected in the next release

Multiple experts
The case of multiple experts is important
When elicitation is used to provide expert input to a decision problem with substantial consequences, we generally want to use the skill of as many experts as possible
But they will all have different opinions
Different distributions
How do we aggregate them?
In order to get a single elicited distribution

Aggregating expert judgements
Two approaches
1. Aggregate the distributions
Elicit a distribution from each expert separately
Combine them using a suitable formula
For instance, simply average them
Called ‘mathematical aggregation’ or ‘pooling’
2. Aggregate the experts
Get the experts together and elicit a single distribution
Called ‘behavioural aggregation’
Neither is without problems

Multiple experts in SHELF
SHELF uses behavioural aggregation
However, distributions are first elicited from experts separately
After sharing of key information
Allows facilitator to see the range of belief before aggregation
Then experts discuss their differences
With a view to assigning an aggregate distribution
To represent what an impartial, intelligent observer might reasonably believe after seeing the experts’ judgements and hearing their discussions
Facilitator can judge whether degree of compromise is appropriate to the intervening discussion

Challenges in behavioural aggregation
More psychological hazards
Group dynamic – dominant/reticent experts
Tendency to end up more confident
Block votes
Requires careful management
What to do if they can’t agree?
End up with two or more composite distributions
Need to apply mathematical pooling to these
But this is rare in practice

Conclusions – Session 1
The analyst needs to supply probability distributions for uncertain inputs
Probabilities are personal judgements
But as objective and scientific as possible
Distributions should represent her best knowledge
A range of scenarios for specifying distributions
From pure judgement
When no good data are available
To simply using a published value
With quantification of uncertainty around that value
Almost always, some part of the task will require distributions based on personal judgement
E.g. prior distributions for Bayesian analysis of data
Elicitation is the process of formulating knowledge about an uncertain quantity as a probability distribution
Many pitfalls, practical and psychological
SHELF is a set of protocols designed to avoid pitfalls
An example of best practice in elicitation
Templates to guide the facilitator or analyst through a structured sequence of steps
Particular challenges arise when eliciting judgements about multiple inputs or from multiple experts

And so to the coffee break
Once we have specified input distributions, the next task is propagation of uncertainty through the simulator
A well studied problem in UQ
Polynomial chaos favoured by engineers, mathematicians
Gaussian process emulators preferred by statisticians
In my opinion, a more powerful and comprehensive UQ approach

UQ: from end to end
Session 2: Model discrepancy and calibration

Case study – carbon flux
Vegetation can be a major factor in mitigating the increase of CO2 in the atmosphere
And hence reducing the greenhouse effect
Through photosynthesis, plants take atmospheric CO2
Carbon builds new plant material and O2 is released
But some CO2 is released again
Respiration, death and decay
The net reduction of CO2 is called Net Biosphere Production (NBP)
I will refer to it as the carbon flux
Complex processes modelled in SDGVM
Sheffield Dynamic Global Vegetation Model

SDGVM C flux outputs for 2000
Map of SDGVM estimates shows positive flux (C sink) in North, but negative (C source) in Midlands
Total estimated flux is 9.06 Mt C
Highly dependent on weather, so will vary greatly between years

Quantifying input uncertainties
Plant functional type parameters (growth characteristics)
Expert elicitation
Soil composition (nutrients and decomposition)
Simple analysis from extensive (published) data
Land cover
(which PFTs are where)
More complex Bayesian analysis of ‘confusion matrix’ data

Elicitation
Beliefs of expert (developer of SDGVM) regarding plausible values of PFT parameters
Four PFTs – Deciduous broadleaf (DBL), evergreen needleleaf (ENL), crops, grass
Many parameters for each PFT
Key ones identified by preliminary sensitivity analysis
Important to allow for uncertainty about mix of species in a site and role of parameter in the model
In the case of leaf life span for ENL, this was more complex

ENL leaf life span
[Figure]

Correlations
PFT parameter value at one site may differ from its value in another
Because of variation in species mix
Common uncertainty about average over all species induces correlation
Elicit beliefs about average over whole UK
ENL joint distributions are mixtures of 25 components, with correlation both between and within years

Soil composition
Percentages of sand, clay and silt, plus bulk density
Soil map available at high resolution
Multiple values in each SDGVM site
Used to form average (central estimate)
And to assess uncertainty (variance)
Augmented to allow for uncertainty in original data (expert judgement)
Assumed independent between sites

Land cover map
LCM2000 is another high resolution map
Obtained from satellite images
Vegetation in each pixel assigned to one of 26 classes
Aggregated to give proportions of each PFT at each site
But data are uncertain
Field data are available at a sample of pixels
Countryside Survey 2000
Table of CS2000 class versus LCM2000 class is called the confusion matrix

CS2000 versus LCM2000 matrix
(rows: CS2000 class; columns: LCM2000 class)

CS2000    DBL   ENL   Grass   Crop   Bare
DBL        66     3      19      4      5
ENL         8    20       1      0      0
Grass      31     5     356     22     15
Crop        7     1      41    289      9
Bare        2     0       3      8     81

Not symmetric
Rather small numbers
Bare is not a PFT and produces zero NBP

Modelling land cover
The matrix tells us about the probability distribution of LCM2000 class given the true (CS2000) class
Subject to sampling errors
But we need the probability distribution of true PFT given observed PFT
Posterior probabilities as opposed to likelihoods
We need a prior distribution for land cover
We used observations in a neighbourhood
Implicitly assuming an underlying smooth random field
And the confusion matrix says nothing about spatial correlation of LCM2000 errors
We again relied on expert judgement
Using a notional equivalent number of independent pixels per site

Overall proportions
Red lines show LCM2000 proportions
Clear overall biases
Analysis gives estimates for all PFTs in each SDGVM site
With variances and correlations

Case study – results
Following on the carbon flux case study, input uncertainties were propagated through the SDGVM simulator
Extensive use of Gaussian process emulators

Mean NBP corrections
[Figure]

NBP standard deviations
[Figure]

Aggregate across 4 PFTs
[Figure: two panels, Mean NBP and Standard deviation]

England & Wales aggregate

PFT           Plug-in estimate (Mt C)   Mean (Mt C)   Variance ((Mt C)²)
Grass                  5.28                 4.37            0.2453
Crop                   0.85                 0.43            0.0327
Deciduous              2.13                 1.80            0.0221
Evergreen              0.80                 0.86            0.0048
Covariances                                                -0.0081
Total                  9.06                 7.46            0.2968

Sources of uncertainty
The total variance of 0.2968 is made up as follows
Variance due to PFT and soil inputs = 0.2642
Variance due to land cover uncertainty = 0.0105
Variance due to interpolation/emulation = 0.0222
Land cover uncertainty much larger for individual PFT contributions
Dominates for ENL
But overall tends to cancel out
Changes estimates
Larger mean corrections and smaller overall uncertainty
But we haven’t addressed what is probably the biggest source of uncertainty in
this carbon flux problem … 12/8/2014 UQ Summerschool 2014 71 Notation A simulator takes a number of inputs and produces a number of outputs We can represent any output y as a function y = f (x) of a vector x of inputs 12/8/2014 UQ Summerschool 2014 72 Example: A simple machine (SM) A machine produces an amount of work y which depends on the amount of effort x put into it Ideally, y = f(x, β) = βx Parameter β is the rate at which effort can be converted to work True value of β is β* = 0.65 Data zi = yi + εi Graph shows observed data Points lie below y = 0.65x For large enough x Because of losses due to friction etc. Large relative to observation errors 12/8/2014 UQ Summerschool 2014 73 The SM as a simulator A simulator produces output from inputs When we consider calibration we divide its inputs into Calibration parameters – unknown but fixed Control variables – known features of application context Calibration concerns learning about the calibration parameters Using observations of the real process Extrapolation concerns predicting the real process At control variable values beyond where we have observations We can view the SM as a (very simple) simulator x is a control variable, β is a calibration parameter 12/8/2014 UQ Summerschool 2014 74 Tuning and physical parameters Calibration parameters may be physical or just for tuning We adjust tuning parameters so the model fits reality better We are not really interested in their ‘true’ values We calibrate tuning parameters for prediction Physical parameters are different We are often really interested in true physical values The SM’s efficiency parameter β is physical It’s the theoretically achievable efficiency in the absence of friction We like to think that calibration can help us learn about them 12/8/2014 UQ Summerschool 2014 75 Exercise 4 Look at the four datasets, and in each case estimate the best fitting slope β Draw a line by eye through the origin Using a straight-edge Read off the slope as the y value on 
the line when x = 1
Write that value beside the graph
The actual best-fitting calibrated values are:
Dataset 1 – 0.58
Dataset 2 – 0.58
Dataset 3 – 0.66
Dataset 4 – 0.57

Calibrating the SM
It’s basically a simple linear regression through the origin
z_i = βx_i + ε_i
Calibration
Posterior distribution misses the true value completely
More data makes things worse
More and more tightly concentrated on the wrong value
We could use a quadratic regression but the problem would remain

The problem is completely general
Calibrating (inverting, tuning, matching) a wrong model gives parameter estimates that are wrong
Not equal to their true physical values – biased
With more data we become more sure of these wrong values
The SM is a trivial model, but the same conclusions apply to all models
All models are wrong
In more complex models it is just harder to see what is going wrong
Even with the SM, it takes a lot of data to see any curvature in reality
What can we do about this?
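
The effect of calibrating the SM without discrepancy can be reproduced in a few lines. The following is a minimal sketch, not the code behind the slides. It assumes reality is r(x) = 0.65x / (1 + 0.05x), a hypothetical friction-like loss chosen only so that points fall below y = 0.65x for large x. It also assumes a known observation error standard deviation of 0.01, x values drawn uniformly on [0, 4], and a flat prior on β, so that the posterior for β is normal, centred on the least-squares slope through the origin. All function and constant names are illustrative.

```python
import numpy as np

BETA_TRUE = 0.65   # true efficiency quoted in the slides
NOISE_SD = 0.01    # assumed (known) observation error sd


def reality(x):
    """Hypothetical real process: efficiency falls as effort grows."""
    return BETA_TRUE * x / (1.0 + 0.05 * x)


def calibrate_sm(n, seed=0):
    """Return posterior mean and sd of beta for z_i = beta * x_i + eps_i.

    With a flat prior and known noise sd, the posterior is
    N(sum(x*z) / sum(x^2), NOISE_SD^2 / sum(x^2)).
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 4.0, size=n)                 # assumed design
    z = reality(x) + rng.normal(0.0, NOISE_SD, size=n)
    sxx = np.sum(x * x)
    beta_hat = np.sum(x * z) / sxx                    # slope through the origin
    post_sd = NOISE_SD / np.sqrt(sxx)
    return beta_hat, post_sd


for n in (10, 100, 1000, 10000):
    beta_hat, post_sd = calibrate_sm(n)
    print(f"n={n:6d}  posterior mean={beta_hat:.4f}  posterior sd={post_sd:.5f}")
```

Under these assumptions the posterior standard deviation shrinks roughly like 1/sqrt(n) while the posterior mean settles well below 0.65, so the true value ends up many posterior standard deviations away from the estimate.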

Model discrepancy
The SM example suggests that we need to allow that the model does not correctly represent reality
For any values of the calibration parameters
The simulator outputs deviate systematically from reality
Model discrepancy (or model bias or model error)
There is a difference between the model with best/true parameter values and reality
r(x) = f(x, θ) + δ(x)
where δ(x) represents this discrepancy and will typically itself have uncertain parameters
We observe z_i = r(x_i) + ε_i = f(x_i, θ) + δ(x_i) + ε_i

SM revisited
Kennedy and O’Hagan (2001) introduced this model discrepancy
Modelled it as a zero-mean Gaussian process
They claimed it acknowledges additional uncertainty
And guards against over-fitting of θ
So add this model discrepancy term to the linear model of the simple machine
r(x) = βx + δ(x)
With δ(x) modelled as a zero-mean GP
Posterior distribution of β now behaves quite differently

SM – calibration, with discrepancy
Posterior distribution much broader and doesn’t get worse with more data
But still misses the true value

Interpolation
Main benefit of simple GP model discrepancy is prediction
E.g.
at x = 1.5
Prediction within the range of the data is possible
And gets better with more data

But when it comes to extrapolation …
… at x = 6
More data doesn’t help because it’s all in the range [0, 4]
Prediction OK here but gets worse for larger x

Extrapolation
One reason for wishing to learn about physical parameters
Should be better for extrapolation than just tuning
Without model discrepancy
The parameter estimates will be biased
Extrapolation will also be biased
Because best fitting parameter values are different in different parts of the control variable space
With more data we become more sure of these wrong values
With GP model discrepancy
Extrapolating far from the data does not work
No information about model discrepancy
Prediction just uses the (calibrated) simulator

We haven’t solved the problem
With simple GP model discrepancy the posterior distribution for θ is typically very wide
Increases the chance that we cover the true value
But is not very helpful
And increasing data does not improve the precision
Similarly, extrapolation with model discrepancy gives wide prediction intervals
And may still not be wide enough
What’s going wrong here?
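
The contrast between interpolation and extrapolation with a zero-mean GP discrepancy can be illustrated with a small calculation. This is a simplified sketch under stated assumptions, not the analysis behind the slides: β is fixed at its least-squares value instead of being inferred jointly with δ(x), the GP uses a squared-exponential covariance with hand-picked variance and correlation length, and reality is the same hypothetical r(x) = 0.65x / (1 + 0.05x) with observation error sd 0.01. The reported sd covers δ(x) only and ignores uncertainty about β. All names and hyperparameter values are illustrative.

```python
import numpy as np

BETA_TRUE = 0.65   # true efficiency quoted in the slides
NOISE_SD = 0.01    # assumed observation error sd
GP_VAR = 0.05      # assumed prior variance of delta(x)
GP_LENGTH = 1.0    # assumed correlation length of delta(x)


def reality(x):
    """Hypothetical real process: efficiency falls as effort grows."""
    return BETA_TRUE * x / (1.0 + 0.05 * x)


def sq_exp_kernel(a, b):
    """Squared-exponential covariance between two sets of 1-D points."""
    d = a[:, None] - b[None, :]
    return GP_VAR * np.exp(-0.5 * (d / GP_LENGTH) ** 2)


def predict_reality(x_new, n=30, seed=0):
    """Predict r(x_new) = beta * x_new + delta(x_new) from data on [0.1, 4]."""
    rng = np.random.default_rng(seed)
    x_obs = np.linspace(0.1, 4.0, n)
    z = reality(x_obs) + rng.normal(0.0, NOISE_SD, size=n)

    # Plug-in calibration: least-squares slope through the origin.
    beta_hat = np.sum(x_obs * z) / np.sum(x_obs ** 2)
    resid = z - beta_hat * x_obs

    # Standard GP regression of the residuals on x (zero prior mean).
    K = sq_exp_kernel(x_obs, x_obs) + NOISE_SD ** 2 * np.eye(n)
    K_star = sq_exp_kernel(x_new, x_obs)
    delta_mean = K_star @ np.linalg.solve(K, resid)
    cov = sq_exp_kernel(x_new, x_new) - K_star @ np.linalg.solve(K, K_star.T)
    delta_sd = np.sqrt(np.clip(np.diag(cov), 0.0, None))

    return beta_hat, beta_hat * x_new + delta_mean, delta_sd, delta_mean


x_new = np.array([1.5, 6.0, 20.0])
beta_hat, pred, sd, delta_mean = predict_reality(x_new)
for xi, p, s in zip(x_new, pred, sd):
    print(f"x={xi:5.1f}  truth={reality(xi):.3f}  prediction={p:.3f}  sd={s:.3f}")
```

Far from the data the posterior mean of δ(x) returns to its prior mean of zero and its sd returns to the prior sd, so the prediction at x = 20 is just the calibrated line β̂x. Inside the data range the residual GP corrects the line and the sd is small.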

Nonidentifiability
Formulation with model discrepancy is not identifiable
For any θ, there is a δ(x) to match reality perfectly
Reality is r(x) = f(x, θ) + δ(x)
Given θ, model discrepancy is δ(x) = r(x) - f(x, θ)
Suppose we had an unlimited number of observations
We would learn reality’s true function r(x) exactly
Within the range of the data
Interpolation works
But we would still not learn θ
It could in principle be anything
And we would still not be able to extrapolate reliably

The joint posterior
Calibration leads to a joint posterior distribution for θ and δ(x)
But nonidentifiability means there are many equally good fits (θ, δ(x)) to the data
Induces strong correlation between θ and δ(x)
This may be compounded by the fact that simulators often have large numbers of parameters
(Near-)redundancy means that different θ values produce (almost) identical predictions
Sometimes called equifinality
Within this set, the prior distributions for θ and δ(x) count

The importance of prior information
The nonparametric GP term allows the model to fit and predict reality accurately given enough data
Within the range of the data
But it doesn’t mean physical parameters are correctly estimated
The separation between original model and discrepancy is unidentified
Estimates depend on prior information
Unless the real model discrepancy is just the kind expected a priori the physical parameter estimates will still be biased
To learn about θ in the presence of model discrepancy we need better prior information
And this is also crucial for extrapolation

Better prior information
For calibration
Prior information about θ and/or δ(x)
We wish to calibrate because prior information about θ is not strong enough
So prior knowledge of model discrepancy is crucial
In the range of the data
For extrapolation
All this plus good prior knowledge of δ(x) outside the
range of the calibration data
That’s seriously challenging!
In the SM, a model for δ(x) that says it is zero at x = 0, then increasingly negative, should do better

Inference about the physical parameter
We conditioned the GP on
δ(0) = 0
δ′(0) = 0
δ′(0.5) < 0
δ′(1.5) < 0

Prediction
[Figure panels: x = 1.5; x = 6]

Where is the uncertainty?
Return to the general case
How might the simulator output y = f(x) differ from the true real-world value z that the simulator is supposed to predict?
Error in inputs x
Initial values
Forcing inputs
Model parameters
Error in model structure or solution
Wrong, inaccurate or incomplete science
Bugs, solution errors

Quantifying uncertainty
The ideal is to provide a probability distribution p(z) for the true real-world value
The centre of the distribution is a best estimate
Its spread shows how much uncertainty about z is induced by uncertainties on the previous slide
How do we get this?
Input uncertainty: characterise p(x), propagate through to p(y)
Model discrepancy: characterise p(z - y)

The hard part
We know pretty well how to do uncertainty propagation
Uncertainties associated with the simulator output
The hard part is the link to reality
The difference between the real system z and the simulator output y = f(x) using best input values
Because all models are wrong (Box, 1979)
It was through thinking about this link …
Particularly in the context of calibration
i.e. learning about uncertain parameters in the model
And also extrapolation
… that I was led to think more deeply about parameters
And to realise just how important model discrepancy is

Modelling model discrepancy
Three rules:
1. Must account for model discrepancy
Ignoring it leads to biased calibration, over-optimistic predictions
2.
Discrepancy term must be modelled nonparametrically
Allows learning about reality and interpolative prediction
3. Model must incorporate realistic knowledge about discrepancy
To get unbiased learning about physical parameters and extrapolation
But following these rules is hard
Ongoing research

Managing uncertainty
To understand the implications of different uncertainty sources
Probabilistic, variance-based sensitivity analysis
Helps with targeting and prioritising research
To reduce uncertainty, get more information!
Informal – more/better science
Tighten p(x) through improved understanding
Tighten p(z - y) through improved modelling or programming
Formal – using real-world data
Calibration – learn about model parameters
Data assimilation – learn about the state variables
Learn about model discrepancy z - y
Validation (another talk!)

Conclusions – Session 2
Without model discrepancy
Inference about physical parameters will be wrong
And will get worse with more data
The same is true of prediction
Both interpolation and extrapolation
With crude GP model discrepancy
Interpolation inference is OK
And gets better with more data
But we still get physical parameters and extrapolation wrong
The better our prior knowledge about model discrepancy
The more chance we have of getting physical parameters right
Also extrapolation
But then we need even better prior knowledge

Any final questions?
It remains just to say thank you for sitting through this morning’s sessions!