Bayesian Reinforcement Learning


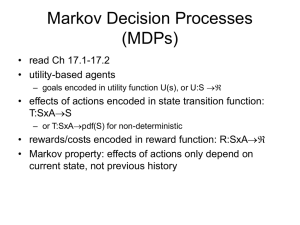

Machine Learning RCC, 16th June 2011

Outline
• Introduction to Reinforcement Learning
• Overview of the field
• Model-based BRL
• Model-free RL

References
• ICML-07 Tutorial – P. Poupart, M. Ghavamzadeh, Y. Engel
• Reinforcement Learning: An Introduction – Richard S. Sutton and Andrew G. Barto

Machine Learning
• Unsupervised Learning
• Reinforcement Learning
• Supervised Learning

Definitions
• State
• Action
• Reward £££££
• Policy
• Reward function

Markov Decision Process
• Transition Probability
• Policy
• Reward function
[Figure: states x0, x1; actions a0, a1; rewards r0, r1]

Value Function

Optimal Policy
• Assume one optimal action per state

Value Iteration
Unknown

Reinforcement Learning
• RL Problem: Solve MDP when reward/transition models are unknown
• Basic Idea: Use samples obtained from agent’s interaction with environment

Model-Based vs Model-Free RL
• Model-Based: Learn a model of the reward/transition dynamics and derive value function/policy
• Model-Free: Directly learn value function/policy

RL Solutions
• Value Function Algorithms
– Define a form for the value function
– Sample state-action-reward sequence
– Update value function
– Extract optimal policy
• SARSA, Q-learning
• Actor-Critic
– Define a policy structure (actor)
– Define a value function (critic)
– Sample state-action-reward
– Update both actor & critic
• Policy Search Algorithm
– Define a form for the policy
– Sample state-action-reward sequence
– Update policy
• PEGASUS
– (Policy Evaluation-of-Goodness And Search Using Scenarios)

Online - Offline
• Offline
– Use a simulator
– Policy fixed for each ‘episode’
– Updates made at the end of episode
• Online
– Directly interact with environment
– Learning happens step-by-step

Model-Free Solutions
1. Prediction: Estimate V(x) or Q(x,a)
2. Control: Extract policy
• On-Policy
• Off-Policy

Monte-Carlo Predictions

| | Leave car park | Get out of city | Motorway | Enter Cambridge |
|---|---|---|---|---|
| State Value | -90 | -83 | -55 | -11 |
| Reward | -13 | -15 | -61 | -11 |
| Updated | -100 | -87 | -72 | -11 |

Temporal Difference Predictions

| | Leave car park | Get out of city | Motorway | Enter Cambridge |
|---|---|---|---|---|
| State Value | -90 | -83 | -55 | -11 |
| Reward | -13 | -15 | -61 | -11 |
| Updated | -96 | -70 | -72 | -11 |

Advantages of TD
• Don’t need a model of reward/transitions
• Online, fully incremental
• Proved to converge given conditions on step-size
• “Usually” faster than MC methods

From TD to TD(λ)
[Figure legend: State, Reward, Terminal state]

SARSA & Q-learning
TD-Learning
• On-Policy: Estimate value function for current policy (SARSA)
• Off-Policy: Estimate value function for optimal policy (Q-Learning)

GP Temporal Difference
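
The "Updated" figures in the Monte-Carlo and Temporal Difference prediction slides for the driving example (Leave car park, Get out of city, Motorway, Enter Cambridge) can be reproduced with a few lines of Python. This is only a sketch under assumptions that are not stated on the slides: a step size of 1, no discounting (gamma = 1), and a value of 0 after the final state. These settings happen to reproduce the slide numbers. The function names are hypothetical and chosen for illustration.

```python
# Driving example from the slides: current value estimates and observed rewards.
states = ["Leave car park", "Get out of city", "Motorway", "Enter Cambridge"]
values = [-90.0, -83.0, -55.0, -11.0]   # current estimates V(x)
rewards = [-13.0, -15.0, -61.0, -11.0]  # reward observed on leaving each state


def monte_carlo_targets(rewards, gamma=1.0):
    """Full return from each state to the end of the episode."""
    targets = []
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        targets.append(g)
    return targets[::-1]


def td0_targets(values, rewards, gamma=1.0):
    """One-step target r + gamma * V(next state).

    Assumption: the value after the last state (terminal) is 0.
    """
    next_values = values[1:] + [0.0]
    return [r + gamma * v for r, v in zip(rewards, next_values)]


def update(values, targets, alpha=1.0):
    """Move each estimate towards its target by step size alpha (assumed 1 here)."""
    return [v + alpha * (t - v) for v, t in zip(values, targets)]


mc_updated = update(values, monte_carlo_targets(rewards))
td_updated = update(values, td0_targets(values, rewards))

for name, mc, td in zip(states, mc_updated, td_updated):
    print(f"{name:16s}  MC: {mc:7.1f}  TD: {td:7.1f}")
```

The Monte-Carlo target needs the whole episode before any state can be updated, while the TD target only needs the next reward and the current estimate of the next state, which is what makes TD usable online and step by step.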