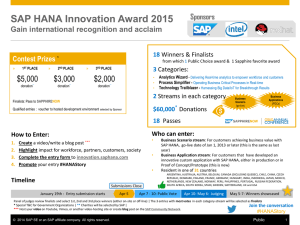

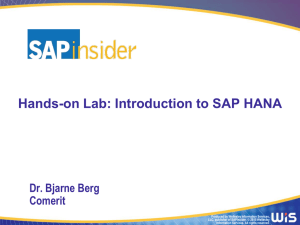

Best practices to ensure efficient data

models, fast data activation, and

performance of your SAP NetWeaver

BW 7.3 data warehouse

Dr. Bjarne Berg

COMERIT

© Copyright 2013

Wellesley Information Services, Inc.

All rights reserved.

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

1

In This Session …

•

This is a advanced technical presentation intended for

developers with significant experience with SAP BW in a

hands-on role

•

We will look at many EDW technical design options and the

pros and cons of some of the new design features in HANA

and BW 7.3

•

During the presentation we will look at many real examples

from 5 real implementations and explore what can be

learned from these

2

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

3

Data Design The Use of Layered Scalable Architecture (LSA)

SAP BW 7.3 SP-3 has a set of 10

templates to help build a layered

data architecture for large-scale

data warehousing

The LSA consists logically of:

Acquisition layer

Harmonization/quality layer

Propagation layer

Business transformation layer

Reporting layer

Virtualization layer

4

EDW Design Vs. Evolution

An organization has two fundamental choices:

1.

Build a new well architected EDW

2.

Evolve the old EDW or reporting system

Both solutions are feasible, but organizations

that selects an evolutionary approach should

be self-aware and monitor undesirable add-ons

and ‘workarounds”.

Failure to break with the past can be

detrimental to an EDW’s long-term success…

5

Data Design - Real Example of LSA Implementation

This company

implemented a full LSA

Architecture and also

partitioned the

Infoproviders for faster

data loads and faster

query performance.

While this architecture

has benefits, there are

significant issues around

data volumes and the

Total cost of Ownership

when changes are made

to the data model(s)

6

Data Design Example of LSA Simplification In HANA

Since many of the benefits

sought by the LSA

architecture are inherent in

HANA, significant

simplifications can be

made to the data design

and data flows

This design has a dramatically

smaller cost of ownership

(fewer objects to maintain,

design and change) than the

traditional LSAs

7

EDW - Complex Layered Architectures

•

•

This BPC on BW system was experiencing

substantial load performance issues

Some of this was due to underlying SAP BW

configuration, while some was due to the

technical configuration of the data store

architecture and data flow inside SAP BW

Consolidation

Cube

(OC_CON)

Consolidation Processes:

1) Clearing

2) Load

3) Foreign Exchange

4) Eliminations

5) Optimizations

BPC Staging

Cube

(BPC_C01)

GL Summary

Cube

(FIGL_C03)

Production Issues included:

1) Dependent jobs not running

sequentially, i.e., load from

Summary cube to Staging cube is

sometimes executed before the

summary cube data is loaded and

activated, resulting in zero

records in the staging cube.

2) Long latency with 6 layers of

PSA, DSOs, and InfoCubes

before consolidation processes

can be executed.

Conformed

Reportable

DSO

Write

Optimized

DSO

FIGL_D21

FIGL_D20

FIGL_D17

FIGL_D14

FIGL_D18

FIGL_D15S

FIGL_D13S

FIGL_D10S

FIGL_D08

FIGL_D11S

R/3 3.1i

EU

ECC 4.7

ASIA

Persistent Staging Area (PSA)

ECC 6.0

AsiaPacific

ECC 6.0

NorthAmerica

ECC 4.7

LatinAmerica

8

Fixes to Complex EDW Architecture

•

•

The fix to this system included removing the conformed DSO

layer, with BEx flags for data stores that are never reported on.

Also, the BPC staging cube served

little practical purpose since the data is

Consolidation Processes:

1) Clearing

2) Load

already staged in the GL Summary cube

3) Foreign Exchange

4) Eliminations

and the logic can be maintained in the

5) Optimizations

load from this cube directly to the

consolidation cube.

Consolidation

Cube

(OC_CON)

GL Summary

Cube

(FIGL_C03)

Long-term benefits included

reduced data latency, faster

data activation, less data

replication, smaller system

backups as well as simplified

system maintenance.

Write

Optimized

DSO

FIGL_D15S

FIGL_D13S

FIGL_D10S

FIGL_D08

FIGL_D11S

R/3 3.1i

EU

ECC 4.7

ASIA

Persistent Staging Area (PSA)

ECC 6.0

AsiaPacific

ECC 6.0

NorthAmerica

ECC 4.7

LatinAmerica

9

EDW Data Design - Use of MultiProvider Hints in BW

Problem: To reduce data volume in each InfoCube,

data is partitioned by Time period.

2002

2003

2004

2005 2006

2007

2008

A query must now search in all InfoProviders to find

the data. This is very slow.

Solution: We can add “hints” to guide the query execution. In the

RRKMULTIPROVHINT table, you can specify one or several

characteristics for each MultiProvider, which are then used to

partition the MultiProvider into BasicCubes.

•

If a query has restrictions on this characteristic, the OLAP processor is

already checked to see which part of the cubes can return data for the query.

The data manager can then completely ignore the remaining cubes.

An entry in RRKMULTIPROVHINT only makes sense if a few attributes of this

characteristic (that is, only a few data slices) are affected in the majority of, or

the most important, queries (SAP Notes: 911939. See also: 954889 and 1156681).

10

EDW Data Design - Semantic Partitioned Objects (SPO)

•

•

•

When data stores and InfoCubes are allowed to grow over time, the data load

and query performance suffers

Normally objects should be physically partitioned when the numbers of records

exceed 100 – 200 million

However, this may be different depending on the size of your hardware and

the type of database you use

In SAP NetWeaver BW 7.3 we get an option to create a Semantic Partitioned

Object (SPO) through wizards

You can partition based on fields such as calendar year, region, country, etc.

11

Data Design - Semantic Partitioned Objects (cont.)

•

When an SPO is created, a reference structure keeps track of the

partitions. The structure is placed in the MultiProvider for querying.

SPO Wizards create all Data Transfer Processes (DTP),

transformations, filters for each data store, and a process chain

12

•

•

•

In this example, many of the

InfoCubes are very large and not

partitioned

Several have over 100 million

records and one is approaching

500 million

In this system SPOs in BW 7.3 can

be very helpful. For BW 7.0 many

of these cubes can be physically

partitioned with hints on the

MultiProviders

Millions

InfoCube Design - Size

425

400

375

350

325

300

275

250

225

200

175

150

125

100

75

50

25

-

InfoCubes Number of Records

InfoCubes should be performance tuned if the number of records exceeds 100

million and partitioned before they are approaching 200+ million records. This

creates faster loads, better query performance, and easier management.

13

InfoCube Design - Use of Line Item Dimensions

•

•

Line item dimensions are basically

fields that are transaction oriented

Once flagged as a line item dimension,

the field is actually stored in the fact

table and has no table joins

This may result in improvements to query speeds for cubes not

in BWA or HANA

Explore the use of line item dimensions for fields that

are frequently conditioned in queries. This model

change can yield faster queries.

14

InfoCube Design — High Cardinality Flags

•

High-Cardinality flag for large InfoCubes with more than 10 million rows

InfoCube

FIUC_C03

ZGAT_C01

FIIF_C02

FIGL_C01

•

•

•

Number of rows

12,859,780

20,793,573

68,090,967

156,738,973

Entries in dimension

compared for F table

37%

46%

102%

88%

There are currently 11 InfoCubes with a ratio of more than 30% of the records in

the dimensions vs. fact table

SAP recommends for Indexing and performance reasons to flag these as “highcardinality” dimensions. However, it has minor impact to smaller cubes.

In this example, there were four medium and large InfoCubes that are not

following the basic design guidelines, and subsequently had slow performance

Many companies should redesign large InfoCubes with high-cardinality to

take advantage of the standard performance enhancements available.

15

DSO Design and Locks on Large Oracle Tables

Additionally, 101 DSO objects

were flagged as being reportable.

This resulted in System IDs (SIDs)

being created during activation.

Combined, these resulted in

frequent locks on the Oracle

database and failed parallel

activation jobs

Millions

In this example, many of the very

large DSOs are not partitioned,

and several objects have over 250

million records

425

400

375

350

325

300

275

250

225

200

175

150

125

100

75

50

25

-

DSO Number of Records

Partition DSOs. The lock on very large DSOs during parallel loads are well

known and SAP has issued several notes on the topic: 634458 'ODS object:

Activation fails - DEADLOCK' and 84348 'Oracle deadlocks, ORA-00060.'

16

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

17

An Example of a - BW System Review

Database:

Oracle version 11.2g

BW system:

BW version 7.3

Operation systems: HP-UNIX; Linux for BWA; AIX 6.4 for three app servers

Area

Value

Trend

Active Users

106

steady

Avg. Availability per Week

100 %

up

DB Size

Last Month DB Growth

3514.24 GB steady

131.88 GB up

In this section, we take a look at a real example of a BW Implementation

and explore what we can learn from it.

18

System Background

•

•

There were over 600

InfoProviders in the system

The system had been in

production for 6 years and

has was upgraded in 2012

InfoProviders

400

353

350

300

250

200

150

113

101

95

100

•

Most InfoCubes followed

standard development

guidelines, but some had

abnormalities such as

InfoCubes feeding DSOs.

50

39

11

0

9

10

0

Structured design review sessions should be undertaken as part of

every project to assure that this design did not continue.

19

Faster Data Load and Design Options — Activation

•

During activation, SAP NetWeaver BW 7.0 has to lookup in the

NIRV table to see if the object already exists

This can be a slow process

•

In SAP NetWeaver BW 7.0 we may buffer the number ranges to

compare the data load with records in-memory

This speeds up data activation

•

However, in SAP NetWeaver BW 7.3, the data activation is

changed from single lookups to package fetch of the active table,

resulting in faster activation and less locks on the lookup tables

This activation method results in 15-30% faster data activation

20

Example: Data Activation and Options

• During

activation, there was limited use

of buffering of number ranges for

dimensions and InfoObjects, even

when the number of entries were large.

Table

/BIC

/BI0

InfoObject

SGOBJKEY

SCO_ITEM_NO

SDOC_NUMBER

SAC_DOC_NO

SREF_DOC_NO

SBILL_NUM

SBA_BELNR

SCO_DOC_NO

SMAT_DOC

STCTSTEPUID

Buffer range

(thousands) level (thousands)

12,792

18,699

87,423

93,099

65,184

71,713

16,814

19,693

15,321

18,663

10,389

14,704

9,433

13,421

11,468

12,282

10,427

12,040

9,951

11,793

Rows

Buffer range

(thousands) level (thousands)

FIGL_C012 138,286

163,271

FIGL_C01

FIGL_C016

47,005

53,133

FICP_C053

10,172

29,655

FICP_C05

FICP_C059

6,268

18,808

3,281

15,740

FICP_C02 FICP_C021

FICP_C013

9,149

46,308

3,804

64,388

FICP_C01 FIAR_C014

FIAP_C017

19,139

25,844

69,264

79,758

FIIF_C02 FIIF_C022

InfoCube Dimension

Rows

This resulted in many reads on the

NRIV table that slowed down data

activation and process chains

(see SAP notes: 857998, 141497, 179224 and 504875)

Start buffering of number ranges of dimensions and

InfoObjects or use 7.3 data activation instead (‘packet fetch’)

21

More Data Load Ideas

•

In BW 7.3 for data transformations, the option “Read

from DataStore” for a faster data lookup is also available

•

Additionally, the use of navigational attributes as

sources in Masterdata transformations reduce overhead

for lookups

Combined, this may lead to an additional 10-20%

improvement

The 7.3 initial load runtime option “Insert only” and the “Unique

data records only” prevents all lookups during activation and

can dramatically improve data loads when used correctly

22

More 7.3 Performance and Cockpit Capabilities

•

BW 7.3 monitors and cockpit capabilities also include:

Monitor of database usage and object sizes (i.e., InfoCubes, DSOs)

Query usage statistics are more visible (similar to RSRT, RSRV, RSTT)

We can see more of the use of SAP NetWeaver BW Accelerator and sizes

Monitor for the actual use of OLAP/MDX Cache and hit ratios

You can now selectively delete internal statistics in RSDDSTATWHM by

date through the updated RSDDSTAT_DATA_DELETE ABAP program

There is also a MDX Editor for coding and syntax assistance

Solution Manager has been

updated to take advantage of

these new monitors.

23

BW 7.3 Performance and Monitoring

•

Additionally BW 7.3 monitors include:

DEAMON update information (i.e., RDA capacity status, usage)

A performance monitoring workbench for performance trends

Process chain monitoring (transaction: RSPCM) with error and

active chain monitoring, user specific displays, and

performance threshold monitoring (i.e., for SLAs)

In SAP NetWeaver BW 7.3, the Near Line Storage (NLS) has been

enhanced to include archiving, support for write optimized DSOs

24

ETL Options for EDWs

In SAP NetWeaver BW 7.3 you can

create generic delta extraction for

the Universal Data (UD) and

Database Connect (DB) options,

as well as for flat files

• Additionally, you can use the

new DataSource adapter “Web

Service Pull” to load data from

external Web services

You can even create generic Web services delta loads and load the

new data straight into the staging area of SAP NetWeaver BW 7.3

• While Web services does not fully support hierarchies yet, there is

integration of hierarchies into the standard process flow such as

transformation and DTPs, as well as being able to load hierarchies from

flat files using a new DataSource

•

25

The 7.3 DataFlow Generation Wizard

• SAP

NetWeaver BW 7.3 has a new, step-bystep wizard that allows you to generate data

flows from flat files or existing data sources

•A

great benefit is that the

wizards work against any

InfoProvider; i.e., you can use

the wizards to create loads from

DSOs to DSOs or InfoCubes

This wizard reduces the number or manual steps needed to load data. It also

simplifies the development process and makes ETL work much easier.

26

Example of Poor EDW Data Load Design

•

•

On an average daily data

extraction, transformation,

and load process takes

44.8 hours if run sequentially

A substantial amount of the

time is spent on data

transformation (51%) and

lookups are often done on

large DSOs without secondary

indexes.

ETL Statistics (average per day)

44.8

Total Load time (sequential) - hrs

11.1

- Time spent on source extraction - hrs

0.5

- Time spent on error filtering - hrs

22.9

- Time spent on transformations - hrs

10.4

- Time spent on target - hrs

371,407,881

Number of records extracted from source

125,102,791

Number of records written to target

100%

24.7%

1.1%

51.1%

23.1%

33.7%

•

Of the 371 million records extracted from

the source, only 33.7% are written to disk

•

This is due to lack of ability to do delta

processing for some files and also a

substantial amount of transform and

lookup logic in some of the ABAP rules

For this System, Developers should revisit extractor design

for lookups on source system instead of inside BW

27

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

28

Database Performance (non-HANA systems)

•

Database statistics are used by the

database optimizer to route queries.

Outdated statistics leads to

performance degradation.

•

Outdated indexes can lead to very poor search performance in all

queries where conditioning is used (i.e., mandatory prompts)

The current sampling rates for this example were too low, and

statistics should only be run after major data loads, and could be

scheduled weekly

•

For many systems, database statistics are outdated and may cause database

performance to perform significantly poorer than otherwise would be the case.

Sampling should often be changed and process chains may be re-scheduled.

29

Database Design for EDW: B-Tree Indexes on Large Objects

•

InfoCubes that are not flagged as high-cardinality use a Bitmap

index instead of a classical b-tree index for the joins.

•

This type of index does not get “unbalanced” since it uses

pointers instead of “buckets”

•

When updating these large InfoCubes, dropping and recreating

Indexes in the process chain can be very time consuming and

actually take longer than the inserts

•

It can also result in locks when the objects are very large (100

million+ records) and when attempting to do this in parallel (see

ORA-0060)

Rebuilding bitmap indexes in load processing for large objects

should not be a default answer for all designs. Any process

chains that do that, may need to be revisited.

30

Aggregates Are Not Needed with BW and HANA (or BWA)

•

•

•

•

At this company, there are 11 aggregates

in the system

Four are related to the cube ZIR_C01

and seldom used and (two has never

been used by any query)

The 7 other aggregates are used only

by the statistical cubes.

Every day, 1.9 million records are

inserted into the aggregates and take

35.6 minutes of processing time

Infocube

0TCT_C01

0TCT_C21

0TCT_C01

0TCT_C01

0TCT_C21

0TCT_C22

0TCT_C23

Number of records in

Aggregate

895,070

420,314

313,540

188,875

87,771

82,051

69,725

Delete unused aggregates. By reducing the data volume in the

underlying statistical cubes (cleanup), the remaining aggregates

will reduce in size and processing time.

31

The OLAP Memory Cache Size Utilization

•

The OLAP Cache is by default 100 MB for local and 200 MB

for global use

•

The system at this company was consuming no more than

80MB on average

This means that most queries were re-executing the same

data (good hit ratio of over 90%)

•

32

OLAP Cache — Turned Off

•

At one client, the OLAP cache was turned off for 131 out of

690 queries (excluding 4 planning queries in BW-IP)

•

The cache was also turned off for 24 out of 256 InfoCubes

•

The OLAP Cache mode for many of their queries could also

have been stored as “Binary Large Objects (BLOB),” that

could speed up caching and very large reads

For most companies queries are using CKF and

sums and sorts extensively, the cache read mode

for most queries should be turned on

33

Broadcast to Pre-Fill the Cache

•

•

This company’s Java Stack did not

communicate properly with SAP NetWeaver

BW, and multiple logons were required

As a result, broadcasting could not be used

until the connectivity was set up correctly

Set up Java connectivity ASAP and use the Broadcasting feature to prefill

the MDX cache (OLAP Universes) for BI analytical processing intensive

functions such as CKF, Sorts, Exceptions, Conditions

34

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

35

HybridProvider and Real-Time Data

•

•

The “HybridProvider” (HP) is new in SAP NetWeaver BW 7.3. The core idea is to

link the historical data inside BW with real-time data.

There are two ways of implementing an HP:

HP based on a DSO

HP based on a Virtual InfoCube

Real-Time Data

Acquisition

HybridProvider

Transaction

Data

Indexing

ECC

and

External

Systems

BWA based

InfoCube

Direct

reads

Virtual

InfoCube

RDA

Real-Time

Data

Acquisition

Query

(real time)

Direct

Access

36

Option 1: The DSO-Based HybridProvider for EDWs

Real-time data is in the DSO and historical data in the SAP NetWeaver BW

Accelerator-based InfoCube

The DSO use real-time data acquisition (RDA) to load data

SAP NetWeaver BW automatically creates a process chain for the

HybridProvder’s data flow

The process chain is executed for every closed request

Real-Time Data

Acquisition

HybridProvider

Transaction

Data

Indexing

ECC

and

External

Systems

BWA based

InfoCube

Direct

reads

RDA

Real-Time

Data

Acquisition

Query

(real time)

Direct

Access

Virtual

InfoCube

This solution provides for really fast queries, but delta

logic has to be custom designed

37

The DSO-Based HybridProvider for EDW (cont.)

•

This solution provides for really fast queries, but delta logic has to

be custom designed and may be complex. However, the solution

allows for high-frequency updates and very rapid query response.

This is a good option if you

have a low volume of new

records and a high number

of queries or operational

dashboards

38

Option 2: The Virtual Cube-Based HybridProvider for EDW

Data is read in real-time from SAP ECC, while historical data is read from SAP

NetWeaver BW Accelerator

The difference depends on how often SAP NetWeaver BW Accelerator is loaded

Non-complex data logic can be applied

DTP is permitted if you do not filter the data set

Real-Time Data

Acquisition

HybridProvider

Transaction

Data

Indexing

ECC

and

External

Systems

BWA based

InfoCube

Direct

reads

RDA

Real-Time

Data

Acquisition

Query

(real time)

Direct

Access

Virtual

InfoCube

Warning: Virtual cubes with many users may place high-stress on the ERP system

39

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

40

Database Growth and Cleanup - Example

The database has grown between

12 and 344 GB each month for

the last year

Three months of the year saw

data, logs, and PSA cleaned. Data

volume declined between 63 and

275 Gb those months

•

•

Oracle DB (Mb)

3600

3500

3400

3300

3200

3100

3000

2900

2800

2700

2600

2500

Oracle DB (Mb) Growth

The database has grown by

732Gb (26%) in the last year,

and the growth is uneven.

400

300

200

100

0

Feb-2010 Mar-2010

-100

-200

-300

Apr-2010 May-2010 Jun-2010

Jul-2010

Aug-2010 Sep-2010

Oct-2010

Nov-2010 Dec-2010

Jan-2011

Feb-2011

Schedule “housekeeping”

jobs. Better management of

cleanup would result in more

predictable patterns.

(i.e. we found PSA data that had 10

months of load history).

41

BW Data Reduction – Statistical Cubes

•

In our example, there were many statistical cubes with significant volume and

no real benefits. During the BW upgrade, most of these were not cleared and

are now creating poor system performance. For example:

0TCT_C02 has 408 million rows; others also had millions of rows

Stats are collected for over 1,900 objects, queries, InfoProviders,

templates, and workbooks (could be reduced significantly)

There are 8 aggregates with over 12 million rows on the stats cubes

Creating aggregates on stats cubes inserts 1.9 million rows and took 35.6

minutes for refresh each night

High-cardinality flags are set for small cube with only one million rows

(0TCT_C21)

Use RSDDSTAT and select “Delete Data” for old stats and also

schedule periodic jobs using standard process chains.

42

EDW on SAP HANA: 12 Cleaning Pre-Steps

1. Clean the Persistent Staging Area (PSA) for data already loaded to DSOs

2. Delete the Aggregates (summary tables). They will not be needed again.

3. Compress the E and F tables in all InfoCubes. This will make InfoCubes

much smaller.

4. Remove data from the statistical cubes (they starts with the technical

name of 0CTC_xxx). These contain performance information for the BW

system running on the relational database. You can do this using the

transaction RSDDSTAT or the program RSDDSTAT_DATA_DELETE to

help you.

5. Look at log files, bookmarks and unused BEx queries and templates

(transaction RSZDELETE).

6. Remove as much as possible of the DTP temporary storage, DTP error

logs, and temporary database objects. Help and programs to do this

is found in SAP Notes 1139396 and 1106393.

43

EDW on SAP HANA: 12 Cleaning Pre-Steps

7) For write-optimized DSOs that push data to reportable

DSOs (LSA approach), remove data in the writeoptimized DSOs. It is already available in higher level

objects.

8) Migrate old data to Near-Line Storage (NLS) on a small server.

This will still provide access to the data for the few users who

infrequently need to see this old data. You will also be able to query

it when BW is on HANA, but it does not need to be in-memory.

7) Remove data in unused DSOs, InfoCubes and files used for staging

in the BW system. This include possible reorganization of

masterdata text and attributes using process type in RSPC

44

EDW on SAP HANA: 12 Cleaning Pre-Steps

10) You may also want to clean up background information stored in the table

RSBATCHDATA. This table can get very big if not managed. You should

also consider archiving any IDocs and clean the tRFC queues. All of this

will reduce size of the HANA system and help you fit the system tables on

the master node.

11) In SAP Note 706478, SAP provides some ideas on how to keep the basis

tables from growing too fast too fast in the future, and if you are on Service

Pack 23 on BW 7.0, or higher, you can also delete unwanted masterdata

directly (see SAP Note: 1370848).

12) Finally, you can use the program RSDDCVER_DIM_UNUSED to delete any

unused dimension entries in your InfoCubes to reduce the overall

system size.

45

PSA Cleanup

•

For this company there were over 1.6 billion rows in the PSA

AGE of PSA

<1 MONTH

1-3 MONTHS

3-6 MONTHS

6-12 MONTH

1-2 YEARS

Total

•

•

Number of Records

591,774,063

738,204,015

144,476,834

173,651,469

35,421,883

1,683,528,264

Percent

35%

44%

9%

10%

2%

100%

Estimated

Gb

14.9

18.6

3.6

4.4

0.9

42.3

An estimated 29Gb could be freed up if data older than one month is

removed

A formal retention policy that is communicated and enforced should be

implemented

Create standard practices for PSA cleanup and

schedule regular jobs that take care of this in the future

46

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

47

Looking Inside SAP HANA — In-Memory Computing Engine

Metadata

Authorization

Transaction

Manager

Manager

Manager

Relational

Engine

SQL Script

SQL Parser

-Row Store

-Column Store

Calculation

Disk Storage

Data

Volumes

Log

Session

Manager

MDX

Engine

Volumes

Load

Controller

Replication Server

Inside the Computing Engine of SAP HANA, we have many different components

that manage the access and storage of the data. This includes MDX and SQL

access, as well as Load Controller (LC) and the Replication Server.

BusinessObjects Data Services

48

Moving EDW to SAP HANA – Automated tool 1

SAP has a checklist tool for

SAP NetWeaver BW powered by

HANA (thanks Marc Bernard).

In this tool, SAP provided

automatic check programs for

both the 3.5 version and the 7.x

version of BW. These are found

in SAP Note: 1729988.

In version 2.x of this tool,

hundreds of checks are done

automatically in the BW system.

This includes platform checks

on database and application

There are even basis checks for support packs, ABAP/JAVA

and system information.

stacks, Unicode, BW releases, and add-ons to your system.

49

Moving EDW to SAP HANA – Automated tool 1

The idea of the checklist tool

from SAP is that you run it

several times throughout the

project.

Once before you start, then

periodically as you resolve

issues and upgrade

requirements, and then finally

when the system has been

migrated to HANA.

The checklist tool also has specific checks for the HANA system that can help

you identify any issues before turning over the system to end users..

50

Moving EDW to SAP HANA – Automated tool 2

SAP has released a new ABAP based tool that generates a report significantly

better sizing fro SAP BW than using just the QuickSizer above. This program

takes into consideration existing database compression, different table types

and also include the effects of non-active data on the HANA system.

The higher precision

you run the estimate at

the longer the program

is going to run.

With 14 parallel

processors and 8Tb

data warehouse, it is

not unusual to see 4575 minutes run time.

To increase speed,

you can also

suppress analysis

tables with less

than 1 MB size.

51

Moving EDW to SAP HANA – Automated tool 2

Since timeouts are common when

running the sizing program, you

can temporarily change the

parameter in rdisp/max_wprun_time

to 0 in BW transaction RZ11.

Finally, you estimate the growth for

the system as a percentage, or as

absolute growth.

After you have downloaded and installed the program, and-selected the parameters

above, you can go to SE38 and run SDF/HANA_BW_SIZING as a background job.

The output is stored in the file you specified and the file can now be email emailed

to hardware vendors for sizing input and hardware selection.

This program is attached to SAP Note: 1736976 on SAP Marketplace

52

Moving EDW to SAP HANA – Automated tool 3

Many experienced developers are

not aware that moving BW to

HANA can in some cases result in

slower transformations during

data loads.

a. Select for all entries (SFAE)

statements without HANA DB hints

--> add hints

b. Select * --> specify fields to select

c. Database access in the field routines

--> move to start routine

d. Loops which do not assign field

symbols --> use field symbols

e. Selects from master data tables -->

Use the read master data rule

f. Selects from DSOs --> Use the read

DSO rule

g. Direct updates to BW object tables -> Do not update tables directly

53

Hardware Options for EDW on SAP HANA

There are currently 7 different

certified HANA hardware vendors

with 13 different products.

Memory

Hardware

128GB

Cisco C260

X

Cisco C460

256GB

X

X

X

X+

X

Dell R910

X

X

X

Hitachi CB 2000

X

X

X

X

X

X

X

NEC Express 5800

Fujitsu RX 600 S5

X

Fujitsu RX 900 S2

HP DL 580 G7

X

X

X

HP DL 980 G7

X+

X+

X

X

X

X+

HP BL 680

X

X

X

IBM x3690 X5

X

X

X

X

X

IBM x3950 X5

1024GB

X

Cisco B440

Some boxes can be used as single nodes

with others are intended for scale-out

solutions for large multi-node systems

512GB

X+

54

A HANA Hardware Example

In this box, we are

see the inside of an

IBM x3950 HANA

system.

The system

basically consists

of memory, disk,

processors and

network cards

The hardware vendor will install, connect and to a health check on your system

before handing it over to you. A 3-year service plan is also normally required.

55

What We’ll Cover …

•

•

•

•

•

•

•

•

Introductions

EDW Data Design and Data Modeling

Data Loading and Fast Activations

Tips and Tricks for Faster Query Times

Real-Time and Near-Time reporting for the EDW

EDW Reduction and Cleanup

In Memory Options with HANA

Wrap-Up

56

SAP BW Roadmap

Source: SAP May 2013,

https://scn.sap.com/docs/DOC-36273

BW 7.4 SP05 Should be released this fall. BW 7.3 SP09 is already available

57

Additional Resources

•

An Introduction to SAP HANA

•

SAP NetWeaver 7.4 on SAP Community Network

•

http://scn.sap.com/docs/DOC-35096

LSA Templates and Architecture in SAP NetWeaver BW 7.3

•

Dr. Berg and Penny Silvia, SAP Press 2013, 2nd Edition

www.sdn.sap.com/irj/scn/weblogs?blog=/pub/wlg/24800

Roadmap – SAP NetWeaver BW 7.3

www.sdn.sap.com/irj/sdn/bw-73?rid=/library/uuid/300347b59bcf-2d10-efa9-8cc8d89ee72c

58

7 Key Points to Take Home

•

SAP BW has come a long way since version 7.0 and there are

significant new capabilities for EDW in later releases

•

Do not rely on old ‘knowledge’ – Big Data is changing everything!

•

Near-time and Real-time EDWs are gradually becoming the norm

•

Start a long-term plan for moving to HANA (you will do it eventually)

•

Costs of EDWs are too high due to complex data logic, design and

end of useful life of relational databases for high data volumes

•

All queries in your future EDW should run under 20 seconds, and

50% of these should be under 10 seconds.

•

Design is not evolutionary, it is deliberately created.

59

Your Turn!

How to contact me:

Dr. Bjarne Berg

Bberg@Comerit.com

Please remember to complete your session evaluation

60

Disclaimer

SAP, R/3, mySAP, mySAP.com, SAP NetWeaver®, Duet®, PartnerEdge, and other SAP products and services mentioned herein as well as their

respective logos are trademarks or registered trademarks of SAP AG in Germany and in several other countries all over the world. All other product and

service names mentioned are the trademarks of their respective companies. Wellesley Information Services is neither owned nor controlled by SAP.

61