Presentation - SNOW Workshop


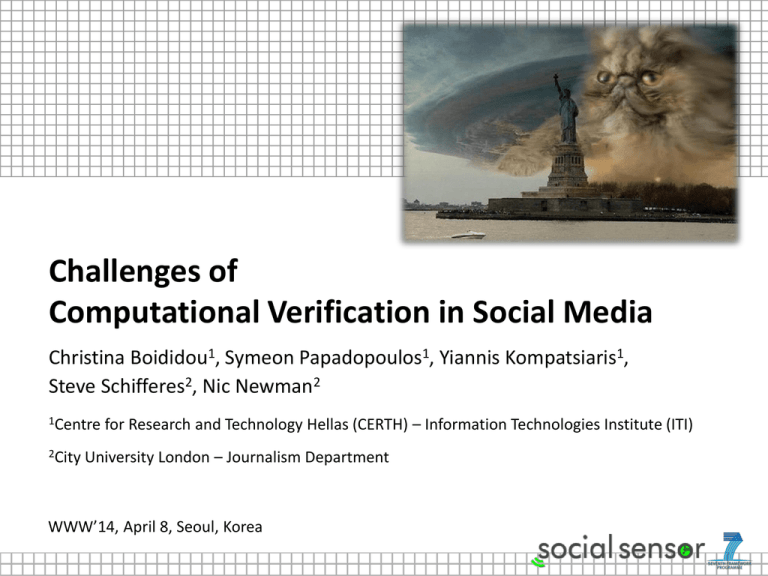

Challenges of Computational Verification in Social Media

Christina Boididou¹, Symeon Papadopoulos¹, Yiannis Kompatsiaris¹, Steve Schifferes², Nic Newman²
¹ Centre for Research and Technology Hellas (CERTH) – Information Technologies Institute (ITI)
² City University London – Journalism Department
WWW’14, April 8, Seoul, Korea

How trustworthy is Web multimedia?
• A real photo, captured in April 2011 by the WSJ, was heavily tweeted during Hurricane Sandy (29 Oct 2012).
• It was tweeted by multiple sources and retweeted many times.
• Original online at: http://blogs.wsj.com/metropolis/2011/04/28/weatherjournal-clouds-gathered-but-no-tornado-damage/

Disseminating (real?) content on Twitter
• Twitter is the platform of choice for sharing newsworthy content in real time.
• The pressure to air stories very quickly leaves very little room for verification.
• Even well-reputed news providers often fall for fake news content.
• Here, we examine the feasibility and challenges of verifying shared media content with the help of a machine learning framework.

Related: Web & OSN Spam
• Web spam is a relatively old problem, in which the spammer tries to “trick” search engines into treating a low-quality webpage as high-quality (Gyongyi & Garcia-Molina, 2005).
• Spam has revived in the age of social media. For instance, spammers promote irrelevant links using popular hashtags (Benevenuto et al., 2010; Stringhini et al., 2010).
These works mainly focus on characterizing or detecting sources of spam (websites, Twitter accounts) rather than the spam content itself.
Z. Gyongyi and H. Garcia-Molina. Web spam taxonomy. In First International Workshop on Adversarial Information Retrieval on the Web (AIRWeb), 2005.
F. Benevenuto, G. Magno, T. Rodrigues, and V. Almeida. Detecting spammers on Twitter. In Collaboration, Electronic Messaging, Anti-Abuse and Spam Conference (CEAS), volume 6, 2010.
G. Stringhini, C. Kruegel, and G. Vigna. Detecting spammers on social networks. In Proceedings of the 26th Annual Computer Security Applications Conference, pages 1–9. ACM, 2010.

Related: Diffusion of Spam
• In many cases, real and fake content show different propagation patterns, e.g., during the major earthquake in Chile (Mendoza et al., 2010).
• Using a few nodes of the network as “monitors”, one could try to identify the sources of fake rumours (Seo et al., 2012).
Still, such methods are very hard to use in real-time settings or very soon after an event starts, because they rely on propagation data that has not yet accumulated.
M. Mendoza, B. Poblete, and C. Castillo. Twitter under crisis: Can we trust what we RT? In Proceedings of the First Workshop on Social Media Analytics, pages 71–79. ACM, 2010.
E. Seo, P. Mohapatra, and T. Abdelzaher. Identifying rumors and their sources in social networks. In SPIE Defense, Security, and Sensing, 2012.

Related: Assessing Content Credibility
• Four types of features are considered: message, user, topic and propagation (Castillo et al., 2011).
• Tweets with images are classified as fake or real using a machine learning approach (Gupta et al., 2013). The reported accuracy of ~97% grossly overestimates the accuracy to be expected in real-world use.
C. Castillo, M. Mendoza, and B. Poblete. Information credibility on Twitter. In Proceedings of the 20th International Conference on World Wide Web, pages 675–684. ACM, 2011.
A. Gupta, H. Lamba, P. Kumaraguru, and A. Joshi. Faking Sandy: characterizing and identifying fake images on Twitter during Hurricane Sandy. In Proceedings of the 22nd International Conference on World Wide Web Companion, pages 729–736, 2013.

Goals/Contributions
• Distinguish between fake and real content shared on Twitter using a supervised approach.
• Provide more realistic estimates of automatic verification performance.
• Explore methodological issues in evaluating classifier performance.
• Create reusable resources:
  – a corpus of fake (and real) tweets, including images
  – an open-source implementation

Methodology
• Corpus creation
  – Topsy API
  – Near-duplicate image detection
• Feature extraction
  – Content-based features
  – User-based features
• Classifier building & evaluation
  – Cross-validation
  – Independent photo sets
  – Cross-dataset training

Corpus Creation
• Define a set of keywords K around an event of interest.
• Use the Topsy API (keyword-based search) and keep only the tweets T that contain images.
• Using independent online sources, define a set of fake images I_F and a set of real images I_R.
• Select the subset T_C ⊂ T of tweets that contain any of the images in I_F or I_R.
• Use near-duplicate visual search (VLAD+SURF) to extend T_C with tweets that contain near-duplicates of these images.
• Manually check that the returned near-duplicates indeed correspond to images in I_F or I_R.

Features

| # | Content feature | # | User feature |
|---|---|---|---|
| 1 | Length of the tweet | 1 | Username |
| 2 | Number of words | 2 | Number of friends |
| 3 | Number of exclamation marks | 3 | Number of followers |
| 4 | Number of quotation marks | 4 | Ratio of followers to friends |
| 5 | Contains a happy/sad emoticon | 5 | Number of times the user was listed |
| 6 | Number of uppercase characters | 6 | Whether the user’s status contains a URL |
| 7 | Number of hashtags | 7 | Whether the user is verified |
| 8 | Number of mentions | | |
| 9 | Number of pronouns | | |
| 10 | Number of URLs | | |
| 11 | Number of sentiment words | | |
| 12 | Number of retweets | | |

Training and Testing the Classifier
• Care should be taken to ensure that no knowledge from the training set enters the test set.
• This is NOT the case when using standard cross-validation.

The Problem with Cross-Validation
• Training/test tweets are selected at random. Because there are multiple tweets per reference image, tweets that share a reference image can end up in both the training and the testing set.
[Figure: one of the reference fake images and its multiple tweets, split at random between the training set and the testing set]

Independence of Training-Test Set
• Training/test tweets are constrained to correspond to different reference images.
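The leakage problem behind the last three slides can be illustrated with a small Python sketch. It is our illustration, not the authors' implementation. It builds a hypothetical toy corpus in which every reference image is reused by three tweets, then compares a random per-tweet split with a split grouped by reference image. The image IDs and the helper names (`random_split`, `grouped_split`, `shared_images`) are assumptions made for this example.

```python
import random

# Hypothetical toy corpus of (tweet_id, reference_image_id, label) tuples.
# Every reference image is reused by three tweets, as described in the slides.
IMAGES = [("IR1", "real"), ("IR2", "real"), ("IR5", "real"),
          ("IF1", "fake"), ("IF2", "fake"), ("IF3", "fake")]
TWEETS = [(f"{img}_t{k}", img, label) for img, label in IMAGES for k in range(1, 4)]


def random_split(tweets, test_fraction=0.3, seed=0):
    """Standard random split: each tweet is assigned regardless of its image."""
    shuffled = list(tweets)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def grouped_split(tweets, test_fraction=0.3, seed=0):
    """Split by reference image: all tweets of one image land on the same side."""
    image_ids = sorted({t[1] for t in tweets})
    random.Random(seed).shuffle(image_ids)
    n_test_images = max(1, round(len(image_ids) * test_fraction))
    test_ids = set(image_ids[:n_test_images])
    train = [t for t in tweets if t[1] not in test_ids]
    test = [t for t in tweets if t[1] in test_ids]
    return train, test


def shared_images(train, test):
    """Reference images present in both sets, i.e. knowledge leaking into the test set."""
    return {t[1] for t in train} & {t[1] for t in test}


if __name__ == "__main__":
    for name, split in (("random", random_split), ("grouped", grouped_split)):
        train, test = split(TWEETS)
        print(f"{name}: {len(train)} train, {len(test)} test, "
              f"shared images: {sorted(shared_images(train, test))}")
```

In this toy setting, the random split puts 5 of the 18 tweets in the test set. Every image has 3 tweets, so at least one image necessarily appears on both sides. The grouped split keeps each image entirely in one set. The sketch does not stratify by label, so with few images its test set could contain only one class. A real evaluation would also balance fake and real images.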
[Figure: reference images IR1, IR2 and IR5 with their tweets (TR11–TR13, TR21–TR23, TR51–TR53), assigned by reference image to either the training set or the testing set]

Cross-dataset Training-Testing
• In the most unfavourable case, the dataset used for training refers to a different event than the one used for testing.
• This simulates the real-world scenario of a breaking story, where no prior information is available to news professionals.
• Variants:
  – Different event, same domain
  – Different event, different domain (very challenging!)

Evaluation
• Datasets:
  – Hurricane Sandy
  – Boston Marathon bombings
• Evaluation of two sets of features (content/user)
• Evaluation of different classifier settings

Dataset – Hurricane Sandy
A natural disaster that struck the USA between October 22 and 31, 2012. Fake images and content, such as sharks in New York and a flooded Statue of Liberty, went viral.
Keywords: Hurricane Sandy, Hurricane, Sandy
Hashtags: #hurricaneSandy, #hurricane, #Sandy

Dataset – Boston Marathon Bombings
The bombings occurred on 15 April 2013 during the Boston Marathon, when two pressure cooker bombs exploded at 2:49 pm EDT, killing three people and injuring an estimated 264 others.
Keywords: Boston Marathon, Boston bombings, Boston suspect, manhunt, watertown, Tsarnaev, 4chan, Sunil Tripathi
Hashtags: #bostonMarathon, #bostonbombings, #bostonSuspect, #manhunt, #watertown, #Tsarnaev, #4chan, #prayForBoston

Dataset Statistics

| | Hurricane Sandy | Boston Marathon |
|---|---|---|
| Tweets with other image URLs | 343939 | 112449 |
| Tweets with fake images | 10758 | 281 |
| Tweets with real images | 3540 | 460 |

Prediction Accuracy (1)
• 10-fold cross-validation results using different classifiers: ~80%

Prediction Accuracy (2)
• Independent training and testing sets (different reference images) from the Hurricane Sandy dataset: ~75%
• Hurricane Sandy for training and Boston Marathon for testing: ~58%

Sample Results
• Real tweet, classified as real: "My friend's sister's Trampolene in Long Island. #HurricaneSandy"
• Real tweet, classified as fake: "23rd street repost from @wendybarton #hurricanesandy #nyc"
• Fake tweet, classified as fake: "Sharks in people's front yard #hurricane #sandy #bringing #sharks #newyork #crazy http://t.co/PVewUIE1"
• Fake tweet, classified as real: "Statue of Liberty + crushing waves. http://t.co/7F93HuHV #hurricaneparty #sandy"

Conclusion
• Challenges
  – Data collection: (a) fake content is often removed (either by the user or by the OSN administrators); (b) API limitations make collection very difficult after an event has taken place.
  – Classifier accuracy: purely content-based classification can only be of limited use, especially when applied to a different event. However, separate classifiers could be built for certain types of incidents, cf. the use of AIDR for the recent Chile earthquake.
• Future work
  – Extend features: (a) geographic location of the user (w.r.t. the location of the incident), (b) time the tweet was posted
  – Extend dataset: more events, more fake examples

Thank you!
• Resources:
  – Slides: http://www.slideshare.net/sympapadopoulos/computationalverification-challenges-in-social-media
  – Code: https://github.com/socialsensor/computational-verification
  – Dataset: https://github.com/MKLab-ITI/image-verification-corpus (help us make it bigger!)
• Get in touch: @sympapadopoulos / papadop@iti.gr, @CMpoi / boididou@iti.gr

Sample Fake and Real Images in Sandy
• Fake pictures shared on social media
• Real pictures shared on social media
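Most content-based features on the Features slide are simple counts over the tweet text. The Python sketch below computes eight of them for one of the sample tweets. The regular expressions for hashtags, mentions and URLs, and the set of quote characters, are assumptions made for illustration. The authors' open-source implementation may tokenize differently. Left out are the features that need lexicons (emoticons, pronouns, sentiment words) or platform metadata (retweet count), and all user-based features.

```python
import re

# Regex approximations chosen for this sketch (assumptions, not the authors' rules).
HASHTAG_RE = re.compile(r"#\w+")
MENTION_RE = re.compile(r"@\w+")
URL_RE = re.compile(r"https?://\S+")
QUOTE_CHARS = "\"\u201c\u201d"  # straight and curly double quotes


def content_features(text):
    """Compute a subset of the content-based features listed on the Features slide."""
    return {
        "length": len(text),
        "num_words": len(text.split()),
        "num_exclamation_marks": text.count("!"),
        "num_quotation_marks": sum(text.count(q) for q in QUOTE_CHARS),
        "num_uppercase": sum(ch.isupper() for ch in text),
        "num_hashtags": len(HASHTAG_RE.findall(text)),
        "num_mentions": len(MENTION_RE.findall(text)),
        "num_urls": len(URL_RE.findall(text)),
    }


if __name__ == "__main__":
    tweet = ("Sharks in people's front yard #hurricane #sandy #bringing "
             "#sharks #newyork #crazy http://t.co/PVewUIE1")
    for name, value in content_features(tweet).items():
        print(f"{name}: {value}")
```

In a full pipeline, these per-tweet dictionaries would be combined with user-based features into one feature vector per tweet before training a classifier.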