Reflections on Real (Thermodynamic) Entropy, Disorder and Statistical Information Entropy (Lecture IV)

Institute of Engineering Thermophysics

Tsinghua University

Beijing, China, June 20, 2013

Prof. M. Kostic

Mechanical Engineering

NORTHERN ILLINOIS UNIVERSITY www.kostic.niu.edu

Slide 1

Some Challenges in Thermoscience Research and Application Potentials

Energy Ecology Economy

Tsinghua University, XJTU, and HUST

China 2013: Beijing, Xi’an, Wuhan, June 14-28, 2013


Slide 2

Hello:

Thank you for the opportunity to present a holistic, phenomenological reasoning of some challenging issues in Thermo-science.

Discussions are informal and not finalized yet.

Thus, respectful, open-minded arguments and brainstorming are desired for better comprehension of tacit and often elusive thermal phenomena.

Slide 3

Among the distinguished invitees were five keynote speakers from China and seven international keynote speakers: three from the USA and one each from Japan, the United Kingdom, Singapore, and Spain; they included four Academicians and six university presidents/vice-presidents.

It has been my great pleasure and honor to meet Prof. ZY Guo and other distinguished colleagues, and even more so to visit again and meet friends now!

Slide 4

Slide 5

Slide 6

$Q_{cal} = Q_{rev} + W_{loss} = Q_{rev} + Q_{diss}$

Entropy, the thermal displacement property, $dS = dQ_{rev}/T$ (or $dQ_{cal}/T$) with J/K unit, is “a measure” of thermal dynamic-disorder or thermal randomness, and may be expressed as being related to the logarithm of the number of “all thermal, dynamic microstates”, or to their logarithmic probability or uncertainty, that correspond to, or are consistent with, the given thermodynamic macrostate. Note that the meanings of all relevant adjectives are deeply important to reflect reality, and as such this is a metaphoric description for real systems.
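A minimal numerical sketch of the relation $Q_{cal} = Q_{rev} + W_{loss}$ and $dS = dQ_{cal}/T$ may help here. The function name and the numbers are illustrative assumptions (not from the lecture), and the temperature is assumed constant during the heat addition.

```python
def entropy_change(q_rev, w_loss, temperature):
    """Entropy change Q_cal / T [J/K] at constant absolute temperature T [K].

    q_rev  : reversibly transferred heat [J]
    w_loss : work potential dissipated to heat, W_loss = Q_diss [J]
    """
    if temperature <= 0:
        raise ValueError("absolute temperature must be positive")
    q_cal = q_rev + w_loss  # Q_cal = Q_rev + W_loss = Q_rev + Q_diss
    return q_cal / temperature


# Hypothetical case: 900 J of reversible heat plus 100 J of dissipated work at 300 K
ds_total = entropy_change(900.0, 100.0, 300.0)
ds_transfer = entropy_change(900.0, 0.0, 300.0)  # reversible part only
ds_generated = ds_total - ds_transfer            # part due to dissipation
```

The split into a transferred and a generated part is only bookkeeping: the system entropy responds to the total caloric heat, whatever its origin.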

Slide 7

Persistent misconceptions:

Persistent misconceptions have existed for many years in different fields of science. They are sometimes encountered in the scientific and, especially, the popular-science literature.

The Entropy (2nd) Law misconceptions are:

1. The first misconception: Entropy is a measure of any disorder.

2. The second misconception: Entropy (2nd) Law is valid only for closed systems.

3. The third misconception: Entropy (2nd) Law is valid for inanimate, not for living (animate) systems.

© M. Kostic <www.kostic.niu.edu> 2009 January 10-12 Slide 8

The Boltzmann constant is a dimensionless conversion factor:

Temperature is the (random kinetic) thermal energy of a representative (micro-thermal) particle (with the Boltzmann constant being the conversion factor between micro-thermal energy and macro-temperature), … thus entropy is the ratio between the macro (multi-particle) thermal energy and a representative particle thermal energy, thus dimensionless. For an average (representative) thermal particle, the unit for the Boltzmann constant, $k_B$, is:

$$k_B = \frac{E_{th\_particle}}{E_{T\_eq\_particle}} \left[\frac{J/\text{particle}}{K}\right] = \left[\frac{J}{K \cdot \text{particle}}\right] = \frac{E_{th\_AllPrt}/Number}{E_{T\_eq\_particle}} = [J/J] = [1]$$

The thermal energy used for entropy is without its ‘useful’ part or work potential, and thus includes the pressure-volume influence. In that regard, the entropy of a single particle without thermal interactions is zero (no random thermal motion), but also infinity (as if in infinite volume, taking forever to thermally interact).
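The unit argument above can be illustrated with a short sketch: if temperature is expressed as an energy per representative particle ($k_B T$), then entropy becomes a pure number ($S/k_B$). The function names and the sample values are assumptions for illustration only; the value of $k_B$ is the exact SI one.

```python
K_B = 1.380649e-23  # Boltzmann constant [J/K], exact in the 2019 SI


def thermal_energy_scale(t_kelvin):
    """Return k_B*T [J]: temperature expressed as energy per representative particle."""
    return K_B * t_kelvin


def dimensionless_entropy(s_joule_per_kelvin):
    """Return S/k_B: entropy expressed as a pure number."""
    return s_joule_per_kelvin / K_B


# Hypothetical case: 1000 J of heat received at 300 K
q, t = 1000.0, 300.0
s = q / t                              # entropy in J/K
s_dimless = dimensionless_entropy(s)   # same entropy as a pure number
ratio = q / thermal_energy_scale(t)    # macro thermal energy / particle energy scale
```

Both routes give the same number, which is the sense in which $k_B$ acts as a conversion factor between the two temperature scales.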

Slide 9

…thus thermal & mechanical energies are coupled

Slide 10

Importance of Sadi Carnot's treatise of reversible heat-engine cycles for Entropy and the 2nd Law definitions:

Carnot's ingenious reasoning of limiting, reversible engine cycles allowed others to prove that entropy is conserved in ideal cycles (Clausius Equality, the definition of entropy); that entropy cannot be destroyed, since that would imply super-ideal cycles, more efficient than reversible ones; and that entropy is always irreversibly generated (overall increased) due to dissipation of any work potential to heat (Clausius Inequality) in irreversible cycles.

These results are easily extended to all reversible and irreversible processes, and generalized as the 2nd Law of Thermodynamics.

Slide 11

Thermal energy versus Internal energy concepts in Thermodynamics:

The entropy is related to internal thermal energy (obvious for incompressible substances), but is more subtle for compressible gases due to coupling of internal thermal energy (transferred as heat, $T\,dS$) and internal elastic-mechanical energy (transferred as work, $P\,dV$). Entropy is NOT related to any other internal energy type but thermal (unless the former is converted/dissipated to thermal in a process).

Slide 12

Disorder versus Spreading/Dispersal as statistical metaphorical-concepts of entropy:

The three terms are qualitative and metaphorical concepts related to each other, and they have to relate to the random, complex thermal motion and complex thermal interactions of the material structure, like its heat capacity and temperature, among others.

Only for simple ideal gases (with all internal energy consisting of random thermal motion and elastic collisions) could entropy be correlated with statistical and probabilistic modeling; it has to be, and is, measured for any and all real substances (regardless of their structure) as phenomenologically defined by Clausius ($dS = dQ_{rev}/T_{abs}$). Thus entropy and the Second Law are well defined in classical Thermodynamics.

Slide 13

Disorder versus Spreading/Dispersal as statistical metaphorical-concepts of entropy:

The simplified simulations (analytical, statistical, numerical, etc.) should not take precedence over phenomenological reality and reliable observations, but to the contrary:

Substance is more important than formalism!

‘Extreme’ judgments based on simulations are usually risky, particularly if detached from reality checks or made in an attempt to suppress reality.

Slide 14

A system form and/or functional order/disorder:

A system's form- and/or function-related order or disorder is not thermal-energy order/disorder; the former is not the latter, and thus it is not related to Thermodynamic entropy.

Entropy is always generated (due to ‘energy dissipation’) during production of form/function order or disorder, including information, i.e., during any process of creating or destroying, i.e., transforming, any material structure.

Expanding entropy to any disorder type or information is unjustified, misleading and plain wrong.

Slide 15

Disorder versus Spreading/Dispersal as statistical metaphorical-concepts of entropy:

There is a “strange propensity” of some authors involved with simplified statistical interpretation of complex, random natural phenomena to make unjustified statements that their analyses are true descriptions of natural phenomena and that the phenomenological definitions are deficient and misleading, or even worse, that the natural phenomena are a subset of a more general statistical theory; for example, that information entropy is more general than thermodynamic entropy, the latter being a subset of the former. Some “promoters” of statistical descriptions of entropy become so detached from physical reality that they appear unaware of it.

Slide 16

Entropy and Disorder …

$S = S(T, V)$, not of other types of disorder:

If $T_{left} = T_{right}$ and $V_{left} = V_{right}$, then $S_{left} = S_{right}$.

Entropy refers to the dynamic thermal-disorder of a system's microstructure (which gives rise to temperature, heat capacity, entropy and thermal energy). It does not refer to form- nor functional-disorder of the macrostructure. For example, the same bricks, whether ordered or piled (see above), at the same temperature have the same entropy (the same Thermodynamic state)!

Slide 17

Disorder versus Spreading/Dispersal as statistical metaphorical-concepts of entropy:

Since entropy is directly related to the random thermal motion of a system's micro (atomic and molecular) structure, it is suitable for statistical analysis, particularly of simple system structures, like ideal gases, consisting of completely randomized particle motion in thermal equilibrium, without any particle interactions other than elastic, random collisions of material point-like particles.

For more complex, thus all real, systems, the thermal motion and interactions are much more complex; thus the statistical analysis is metaphorical only and cannot be quantitatively reduced to physical entropy, the latter being well-defined and measured in the laboratory for all substances of practical interest.

Slide 18

Entropy Generation (Production), $S_{gen}$

Entropy Generation (Production) is always irreversible, in one direction only, occurring during a process within a system and stored as the entropy property. Entropy cannot be destroyed under any circumstances, since that would imply spontaneous heat transfer from lower to higher temperature, or a higher efficiency than that of the ideal Carnot cycle engine.
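The one-directional character of entropy generation can be checked with a small sketch for heat exchanged between two reservoirs. The function name and the numbers are hypothetical; the reservoirs are assumed large enough to stay at constant temperature.

```python
def entropy_generation(q, t_source, t_sink):
    """Entropy generated [J/K] when heat q [J] leaves a reservoir at
    t_source [K] and enters a reservoir at t_sink [K]."""
    if t_source <= 0 or t_sink <= 0:
        raise ValueError("absolute temperatures must be positive")
    # Sink gains q/t_sink, source loses q/t_source
    return q / t_sink - q / t_source


# Spontaneous direction: 1000 J from 500 K to 300 K
s_hot_to_cold = entropy_generation(1000.0, 500.0, 300.0)

# Reversed direction would require negative generation, i.e. entropy destruction
s_cold_to_hot = entropy_generation(1000.0, 300.0, 500.0)
```

A negative result in the second case is exactly the "destruction of entropy" that the statement above rules out.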

Slide 19

Dissecting The Second Law of Thermodynamics:

It Could Be Challenged But Not Violated

Carnot: 1824, Heat Engine, Reversibility

Clausius: 1850, NO Heat from cold to hot; 1865, Entropy

Kelvin-Planck: 1848, Abs. Temperature; 1865, NO Work from single reservoir

Gibbs: 1870’s, Entropy, Chem. Potential, Phys. Chemistry

… with some updates

Presented at:

Royal Institute of Technology - KTH

KTH Department of Energy Technology, Stockholm, Sweden, 22 May 2012


Slide 20

Sadi Carnot’s far-reaching treatise of heat engines was not noticed in his time and is not fully recognized even nowadays.

In 1824 Carnot gave a full and accurate reasoning of heat engine limitations, almost two decades before the equivalency between work and heat was experimentally established by Joule in 1843.

Sadi Carnot laid ingenious foundations for the Second Law of Thermodynamics before the First Law of energy conservation was known and long before Thermodynamic concepts were established.

Slide 21

Fig. 1: Similarity between an ideal heat engine (HE) and a water wheel (WW) (instead of heat, entropy is conserved).

Slide 22

Carnot Efficiency …

$$\eta_{Ct} = \left.\frac{W_{netOUT}}{Q_{IN}}\right|_{Max,\,Rev.} = f_c(T_H, T_L) \quad \text{(qualitative function)}$$

“The motive power of heat is independent of the agents employed to realize it; its quantity is fixed solely by the temperatures of the bodies between which is effected, finally, the transfer of the caloric.”
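Carnot's statement leaves the function of the two temperatures unspecified. As a sketch, assuming the simplest choice of absolute temperature scale discussed with the Carnot ratio equality ($Q/Q_{Ref} = T/T_{Ref}$), the maximum efficiency takes the familiar form $1 - T_L/T_H$; the function name and the sample temperatures are illustrative.

```python
def carnot_efficiency(t_high, t_low):
    """Maximum (reversible) efficiency W_netOUT/Q_IN between two reservoirs,
    assuming the absolute temperature scale where Q_L/Q_H = T_L/T_H."""
    if not (t_high >= t_low > 0):
        raise ValueError("require t_high >= t_low > 0 (absolute temperatures)")
    return 1.0 - t_low / t_high


eta_example = carnot_efficiency(600.0, 300.0)      # hypothetical reservoirs
eta_single_reservoir = carnot_efficiency(300.0, 300.0)
```

Note that the result depends only on the two temperatures, not on the working medium, and that it vanishes for a single-temperature reservoir.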

Slide 23

Fig. 2: Heat-engine ideal Carnot cycle. Note thermal and mechanical expansions and compressions (the former is needed for net-work out, while the latter is needed to provide reversible heat transfer).

Slide 24

[Figure: heat-engine cycle with $Q_H$, $Q_L$, $W_C$; if reversed, the directions of $Q_H$, $Q_L$, and $W_C$ are reversed. Eq. (2)]

Fig. 3: Reversible Heat-engine (solid lines) and Refrigeration Carnot cycle (dashed lines, reversed directions). Note, $W_H = W_L = 0$ if heat transfer is with phase change (compare Fig. 2).

Slide 25

For $T = T_{Any}$:

$$\frac{Q(T_1)}{Q(T_2)} = \frac{Q}{Q_{Ref}} = \left.\frac{f(T_1)}{f(T_2)}\right|_{f(T)=T} = \frac{T_1}{T_2}, \qquad \text{i.e.,} \quad \frac{T_{1|Any}}{T_{2|Ref}} = \frac{Q_{1|Any}}{Q_{2|Ref}}$$

The Carnot ratio equality above is much more important than it appears at first. Actually, it is probably the most important equation in Thermodynamics and among the most important equations in natural sciences.

Fig. 5: For fixed $T_H$, $T_{Ref}$, $Q_H$, and $Q_{Ref}$, $Q(T)$ is proportional to $Q_{Ref}$ (efficiency is an intensive property) and is an increasing (positive) function of $T$ for a given $T_{Ref}$ (thus absolute temperature).
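The Carnot ratio equality can be read as an operational definition of temperature: the unknown temperature follows from the ratio of reversible heats exchanged with the unknown and the reference reservoirs. A minimal sketch, assuming the simplest functional $f(T) = T$ and, as an illustrative reference, the triple point of water at 273.16 K (the names and numbers are assumptions):

```python
T_REF = 273.16  # K, illustrative reference (triple point of water)


def temperature_from_heat_ratio(q, q_ref, t_ref=T_REF):
    """Absolute temperature from the Carnot ratio equality Q/Q_Ref = T/T_Ref.

    q     : heat exchanged reversibly at the unknown temperature [J]
    q_ref : heat exchanged reversibly at the reference temperature [J]
    """
    if q <= 0 or q_ref <= 0 or t_ref <= 0:
        raise ValueError("heats and reference temperature must be positive")
    return t_ref * q / q_ref


# Hypothetical reversible engine exchanging twice the reference heat
t_unknown = temperature_from_heat_ratio(200.0, 100.0)
```

Any other positive, increasing $f(T)$ would give a different but equally consistent scale, which is the point made about the choice of functional.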

Slide 26

“Definition” of Temperature, Mass, etc…

$$\frac{Q(T_1)}{Q(T_2)} = \frac{Q}{Q_{Ref}} = \left.\frac{f(T_1)}{f(T_2)}\right|_{f(T)=T} = \frac{T_1}{T_2}, \qquad \text{i.e.,} \quad \frac{T_{1,Any}}{T_{2,Ref}} = \frac{Q_{1,Any}}{Q_{2,Ref}}$$

$$F = \left.\frac{d}{dt}\left[f(m)\,V\right]\right|_{f(m)=m} = \frac{d}{dt}(mV)$$

The Carnot ratio equality above defines the Temperature vs. Heat-flux correlation, the way the Newton Law defines the Force vs. Momentum-flux correlation. The two simplest non-zero, positive-definite functionals are chosen; however, others are also possible. Similarly, Einstein’s theory of relativity could have been correlated with a similar but different functional, resulting in a similar and equally coherent theory!

Slide 27

Clausius (In)Equality

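$$W_{Irr} = Q_{Irr} < Q_{Rev} = W_{Rev}$$

$$\left.\oint \frac{dQ}{T}\right|_{Irr} < \left.\oint \frac{dQ}{T}\right|_{Rev} = 0 \quad \text{Eq. (10)}, \qquad \text{or} \qquad \oint \frac{dQ}{T} = -S_{Gen} \le 0 \quad \text{(any cycle; Clausius Inequality)}$$

Note: $S_{Gen(Cycle)} = S_{out} - S_{in} \ge 0$, but $\Delta S_{cycle} = S_{in} + S_{Gen} - S_{out} = 0$ (any cycle).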
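The Clausius (in)equality can be illustrated by summing $Q_i/T_i$ over the heat exchanges of a cycle. This is a sketch with hypothetical numbers; heat into the working medium is taken as positive, and each exchange is assumed to occur at a constant boundary temperature.

```python
def clausius_sum(exchanges):
    """Sum of Q_i/T_i over a cycle.

    exchanges: iterable of (q, t) pairs, with q > 0 for heat into the
    working medium [J] at absolute boundary temperature t [K].
    """
    return sum(q / t for q, t in exchanges)


def cycle_entropy_generation(exchanges):
    """S_Gen = S_out - S_in = -(sum of Q_i/T_i) for a complete cycle."""
    return -clausius_sum(exchanges)


# Reversible Carnot cycle: Q_H/T_H = Q_L/T_L
reversible = [(1000.0, 600.0), (-500.0, 300.0)]

# Irreversible cycle: more heat rejected, hence less net work out
irreversible = [(1000.0, 600.0), (-700.0, 300.0)]

s_gen_rev = cycle_entropy_generation(reversible)
s_gen_irr = cycle_entropy_generation(irreversible)
w_rev = sum(q for q, _ in reversible)      # net work of the reversible cycle
w_irr = sum(q for q, _ in irreversible)    # net work of the irreversible cycle
```

The irreversible cycle delivers less work and rejects more entropy than it receives, while the entropy of the working medium itself returns to its initial value in both cases.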

Slide 28

Fig. 7: Heat engine ideal Carnot cycle between two heat reservoirs at different temperatures ($T_H > T_L$ and $W > 0$) (left), and with a single-temperature heat reservoir ($T_H = T_L$ and $W = 0$, ideal reversible cycle) (right).

Low-temperature thermal compression is needed (critical), not the mechanical (isentropic) compression, to realize the work potential between the two heat reservoirs at different temperatures, due to internal thermal energy transfer via heat ($W = Q_H - Q_L > 0$). The isentropic expansion and compression are needed to provide the temperature for reversible heat transfer, while the net thermal expansion-compression provides the net-work out of the cycle.

Slide 29

Therefore, …

... the so-called “waste cooling-heat” in power cycles (like in thermal power plants) is not waste but very useful heat, necessary for thermal compression of the cycling medium (steam-into-condensate, for example), without which it would not be possible to produce mechanical work from heat (i.e., from thermal energy).

Slide 30

Fig. 8: The significance of Carnot’s reasoning of reversible cycles is in many ways comparable with Einstein’s relativity theory in modern times. The Carnot Ratio Equality is much more important than it appears at first. It is probably the most important equation in Thermodynamics and among the most important equations in natural sciences.

Slide 31

Heat Transfer Is Unique and Universal:

Heat transfer is a spontaneous irreversible process where all organized (structural) energies are disorganized or dissipated as thermal energy, with irreversible loss of energy potential (from high to low temperature) and overall entropy increase.

Thus, heat transfer and thermal energy are unique and universal manifestations of all natural and artificial (man-made) processes, … and thus … are vital for more efficient cooling and heating in new and critical applications, including energy production and utilization, environmental control and cleanup, and biomedical applications.

Slide 32

REVERSIBILITY AND IRREVERSIBILITY: ENERGY TRANSFER AND DISORGANIZATION, RATE AND TIME, AND ENTROPY GENERATION

Net-energy transfer is in one direction only, from higher to lower potential (energy-forcing-potential), and the process cannot be reversed.

Thus all real processes are irreversible in the direction of decreasing energy-forcing-potential, like pressure and temperature (forced displacement of mass-energy).

Slide 33

Quasi-equilibrium Process: in the limit, an energy transfer process with infinitesimal potential difference (still from higher to infinitesimally lower potential, P).

Then, if the infinitesimal potential difference is reversed in direction, P+dP → P-dP, with infinitesimally small external energy, since dP→0, the process will be reversed too. Such a process is characterized by infinitesimal entropy generation and, in the limit, by no energy degradation (no further energy disorganization) and no entropy generation, thus achieving a limiting reversible process.
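The limiting argument can be made concrete for the case where the potential is temperature. The sketch below (hypothetical function name and numbers) evaluates the entropy generated when a fixed amount of heat crosses a shrinking temperature gap; the reservoirs are assumed to stay at constant temperature.

```python
def s_gen_across_gap(q, t_hot, delta_t):
    """Entropy generated [J/K] when heat q [J] flows from t_hot [K]
    to t_hot - delta_t [K]."""
    t_cold = t_hot - delta_t
    if t_cold <= 0:
        raise ValueError("the lower temperature must remain positive")
    return q / t_cold - q / t_hot


# Shrinking temperature differences for 1000 J leaving a 400 K source
gaps = [100.0, 10.0, 1.0, 0.1]
values = [s_gen_across_gap(1000.0, 400.0, d) for d in gaps]
```

The generated entropy stays positive for every finite gap but decreases towards zero with the gap, which is the sense in which the reversible process is a limit rather than a real process.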

Slide 34

Local-Instant & Quasi-Equilibrium:

At an instant of (frozen) time, a locality around a point in space may be considered as in ‘instant-local equilibrium’ (including inertial forces), with instantaneous local properties well-defined, regardless of non-uniformity.

Quasi-equilibrium is due to very small energy fluxes, caused by very small gradients and/or very high impedances, so that changes are infinitely slow, for all practical purposes appearing as equilibrium with virtually net-zero energy exchange.

Slide 35

REVERSIBILITY –Relativity of Time:

Therefore, the changes are ‘fully reversible’ and, along with their rate of change and time, totally irrelevant (no irreversible, permanent change), as if nothing is effectively changing (no permanent effect on the surroundings or universe).

Time is irrelevant, as if it does not exist, since it could be reversed or forwarded at will and at no ‘cost’ (no permanent change); thus, relativity of time.

Real time cannot be reversed; it is a measure of permanent changes, like irreversibility, which is in turn measured by entropy generation.

In this regard, time and the entropy generation of the universe have to be related.

Slide 36

The 2nd Law Definition …

Non-equilibrium cannot be spontaneously created.

All natural spontaneous, or over-all, processes (proceeding by themselves and without interaction with the rest of the surroundings) between systems in non-equilibrium have an irreversible, forced tendency towards common equilibrium, and thus irreversible loss of the original work potential (a measure of non-equilibrium), by converting (dissipating) other energy forms into thermal energy (and degrading the latter to lower temperature), accompanied by an increase of entropy (randomized equi-partition of energy per absolute temperature level).

The 2nd Law is more than thermo-mechanical (heat-work) energy conversion; it is about energy processes in general:

Forcing due to non-equilibrium has a tendency towards equilibrium.

Slide 37

The 2nd Law “Short” Definition:

• The useful energy (non-equilibrium work potential) cannot be created from within equilibrium alone or otherwise; it can only be forcefully transferred between systems (ideally conserved) and irreversibly dissipated towards equilibrium into thermal energy, thus generating entropy.

The 2nd Law is more than thermo-mechanical (heat-work) energy conversion; it is about energy processes in general:

Forcing due to non-equilibrium has a tendency towards equilibrium.

Force or Forcing is a process of exchanging useful energy (forced displacement), with net-zero exchange at forced equilibrium.

Slide 38

Issues and Confusions …

• There are many puzzling issues surrounding the Second Law and other concepts in Thermodynamics, including subtle definitions and ambiguous meanings of very fundamental concepts.

• Further confusions are produced by attempts to generalize some of those concepts with similar but not the same concepts in other disciplines, like Thermodynamic entropy versus other types of (quasi & statistical) entropies.

Thermodynamic ENTROPY [J/K] is related to thermal energy transfer & generation per absolute temperature; it is a physical concept, not a statistical construct (which is only a limited ‘description’ tool) as argued by some.

Slide 39

Local Creation of Nonequilibrium …

… It should not be confused with local increase/decrease of non-equilibrium and/or ‘organized structures’ at the expense of ‘over-all’ non-equilibrium transferred from elsewhere. Non-equilibrium is always “destroyed” by spontaneous and irreversible conversion (dissipation) of other energy forms into thermal energy, always and everywhere accompanied by entropy generation (randomized equi-partition of energy per absolute temperature level).

$$\frac{\partial}{\partial t}\left(\frac{\partial S_{Gen}}{\partial m}\right) = \frac{1}{T}\,\frac{\partial}{\partial t}\left(\frac{\partial Q_{Gen}}{\partial m}\right) \ge 0 \quad \text{(local entropy generation rate)}$$

Over-all entropy increases without exception on any time and space scales.

Slide 40

Definition of Entropy

"Entropy is ‘an integral measure’ of (random) thermal energy redistribution (stored as property, due to heat transfer or irreversible heat generation) within a system mass and/or space (during system expansion), per absolute temperature level. Entropy is increasing from orderly crystalline structure at zero absolute temperature (zero reference) during reversible heating (entropy transfer) and entropy generation during irreversible energy conversion, i.e. energy degradation or random equi-partition within system material structure and space." (by M. Kostic)

Thermodynamic ENTROPY [J/K] is THE specific thermo-physical concept, NOT a statistical concept, i.e., the S=k log(W) is ONLY a SIMPLIFIED/very-limited ‘construct’ not to be extrapolated …

Slide 41

Entropy …

… entropy of a system for a given state is the same, regardless of whether it is reached by reversible heat transfer or by irreversible heat or irreversible work transfer (entropy is a state function, while entropy generation is process dependent). Once generated it cannot be destroyed (irreversible change), but only transferred.

$$dS = \frac{dQ}{T} = \frac{dQ_{rev} + dQ_{gen}}{T} \qquad \text{or} \qquad S = \int \frac{dQ}{T} + S_{ref}$$

However, the source entropy will decrease to a smaller extent over higher potential, thus resulting in overall entropy generation for the two interacting systems.

Note: $Q_{Irr} = Q_{gen} = W_{Loss} = W_{Loss(W)} + W_{Loss(Q_{Irr})}$ (i.e., work-potential loss due to unrestricted or throttling expansion, and due to irreversible heat transfer at finite $dT$).

Slide 42

Note: $Q_{Irr} = Q_{Gen} = W_{Loss} = T_{Ref}\,S_{Gen}$ (any)

Slide 43

… Entropy …

We could consider a system's internal thermal energy and entropy as being accumulated from the absolute zero level, by disorganization of organized/structural or higher-level energy potential, with the corresponding entropy generation.

Thus entropy as a system property is associated with its thermal energy and temperature (but also space, since mechanical and thermal energies are coupled and equi-partitioned for an ideal gas, $PV = N k_B T$: unrestricted expansion is work-potential loss to thermal energy, as is heat transfer at finite temperature difference).
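As a sketch of the coupling noted above, the entropy generated by unrestricted (free) expansion of an ideal gas into a larger volume can be evaluated from the standard isothermal ideal-gas result, $S_{Gen} = nR\ln(V_2/V_1)$, with the lost work potential taken as $T_{Ref}\,S_{Gen}$. The function names and the numbers are illustrative assumptions.

```python
import math

R = 8.314462618  # molar gas constant [J/(mol K)]


def free_expansion_entropy_generation(n_mol, v1, v2):
    """Entropy generated [J/K] when n_mol of ideal gas expands unrestrictedly
    from volume v1 to v2 (same units) with no heat or work exchange."""
    if n_mol <= 0 or v1 <= 0 or v2 < v1:
        raise ValueError("require n_mol > 0 and v2 >= v1 > 0")
    return n_mol * R * math.log(v2 / v1)


def lost_work_potential(t_ref, s_gen):
    """Work potential lost [J], W_Loss = T_Ref * S_Gen."""
    return t_ref * s_gen


# Hypothetical case: 1 mol doubling its volume at 300 K
s_gen = free_expansion_entropy_generation(1.0, 1.0, 2.0)
w_loss = lost_work_potential(300.0, s_gen)
```

The same work could have been extracted by a reversible isothermal expansion; in the unrestricted case it is dissipated, and the entropy increase is generated rather than transferred.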


…thus thermal & mechanical energies are coupled

Slide 44

Entropy Summary

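Thus, entropy transfer is due to reversible heat transfer and could be either positive or negative (thus entropy is over-all conserved while reversibly transferred).

However, entropy generation is always positive and always due to irreversibility.

Thus entropy is Over-ALL increased (The Second Law):

$$\frac{\partial}{\partial t}\left(\frac{\partial S_{Gen}}{\partial m}\right) = \frac{1}{T}\,\frac{\partial}{\partial t}\left(\frac{\partial Q_{Gen}}{\partial m}\right) \ge 0 \quad \left[\frac{W}{K \cdot kg}\right] \quad \text{(local entropy generation rate)}$$

$$\Delta S_{Over\text{-}ALL} = \underbrace{\Delta S_{Tr}}_{\text{conserved}\ (=0)} + \sum_{\Delta t}\sum_{\Delta m} S_{Gen} \ge 0 \quad \left[\frac{J}{K}\right] \quad \text{(over-all entropy change)}$$

Without exception, for all $dm$ and all $dt$.

Slide 45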

“The Second Law of Thermodynamics is considered one of the central laws of science, engineering and technology.

For over a century it has been assumed to be inviolable by the scientific community.

Over the last 10-20 years, however, more than two dozen challenges to it have appeared in the physical literature, more than during any other period in its 150-year history.”

Second Law Conference: Status and Challenges, with Prof. Sheehan in San Diego, CA, June 2011

© M. Kostic <www.kostic.niu.edu> Slide 46

The Second Law Symposium has been a unique gathering of unorthodox physicists and inventors (to avoid using a stronger word).

Slide 47

Living and Complex Systems

Many creationists (including evolutionists and information scientists) make claims that evolution violates the Second Law.

Although biological and some other systems may and do create local non-equilibrium and order (BUT only at the expense of elsewhere!), the net change in entropy for all involved systems is positive (due to its unavoidable irreversible local generation) and conforms to the Laws of Nature and the Second Law for non-equilibrium open systems.

It may appear that the created non-equilibrium structures are self-organizing from nowhere, from within an equilibrium (thus violating the 2nd Law), due to the lack of proper observations and ‘accounting’ of all mass-energy flows, the latter possibly in ‘stealth’ form or at a rate undetectable at our state of technology and comprehension (as the history of science has taught us many times).

Slide 56

Crystal ‘self-formation’…

It may appear that the created non-equilibrium structures are self-organizing from nowhere, from within an equilibrium (thus violating the 2nd Law), due to the lack of proper ‘observations’ at our state of technology and comprehension (as the history of science has taught us many times).

… and Plant Cells growth

Slide 57

Nature often defies our intuition

• Without friction, a clock would not work, you could not walk, birds could not fly, and fish could not swim.

• Friction can make the flow go faster

• Roughening the surface can decrease drag

• Adding heat to a flow may lower its temperature, and removing heat from a flow may raise its temperature

• Infinitesimally small causes can have large effects (tipping point)

• Symmetric problems may have non-symmetric solutions

Slide 58 www.kostic.niu.edu

YES! Miracles are possible!

It may look like ‘perpetuum mobile’, but miracles are real too …

Things and Events are both MORE and also LESS complex than how they appear and how we ‘see’ them: it is natural simplicity in real complexity.

… we could not comprehend energy conservation until the 1850s (mechanical energy was escaping “without being noticed how”)

… we may not comprehend new energy conversions now, and wrongly believe they are not possible (“cold fusion” seems impossible for now … ?)

… Let us keep our eyes and our minds ‘open’ …

Slide 59

YES! Miracles are possible!

… but there are NO ideal ‘Things and Events’ …

‘Things and Events’ are both MORE and also LESS complex than how they appear and how we ‘see’ them: it is natural simplicity in real complexity.

… there are no ideal things: no ideal rigid body, no ideal gas, no perfect elasticity, no adiabatic boundary, no frictionless/reversible process, no perfect equilibrium, no steady-state process …

… there are always processes, energy in transfer or motion; all things ARE energy in motion, with unavoidable process irreversibilities. However, in the limit, an infinitesimally slow process with negligible irreversibility ‘appears’ as instant reversible equilibrium; thus, everything is relative with regard to different space and time scales.

… Let us keep our eyes and our minds ‘open’ …

Slide 60

All processes are transient …

All processes are transient (work and heat transfer, and entropy production, in time) and degradative/dissipative; even Eulerian steady-state processes (space-wise) are transient in Lagrangian form (system-wise, from input to output),

… but equilibrium processes and even quasi-static (better, quasi-equilibrium) processes are sustainable/reversible.

The existence in space and transformations in time are manifestations of perpetual mass-energy forced displacement processes: with net-zero mass-energy transfer in equilibrium (equilibrium process) and non-zero mass-energy transfer in non-equilibrium (active process) towards equilibrium.

Slide 61

If we are unable to observe …

• If we are unable to measure something, it does not mean it does not exist (it could be sensed or measured with more precise instruments, or on a longer time scale, or in similar but stronger processes; $mc^2$ always, but often not measurable).

• So-called "self-organizing" processes appear as entropy increasing processes, since we are unable to comprehend or to observe/measure the entropy change within, or of the affecting, boundary environment for such open processes.

Miracles remain miracles only until they are comprehended and understood!

Slide 62

Simulation and Reality …

• Entropy is a measure of thermal-energy metaphorical-disorder (with ‘strings’ attached) , not a measure of any-form disorder.

• Einstein is quoted as having stated: “Since mathematicians explained ‘Theory of Relativity’, I do not understand it any more.”

• Similarly, after statisticians explained ‘Entropy’, I do not understand it any more.

Slide 63

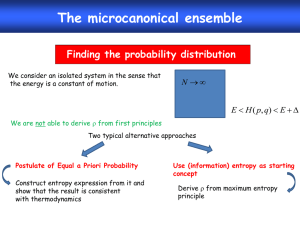

Statistical Interpretation Is Important as Metaphoric Only

• Again, a statistical interpretation is important as metaphoric only: the sum of the probabilities of the possible discrete microstates, the $p_i$'s, that could occur during the "random fluctuations" of a given macrostate. The qualifiers ‘possible’, ‘could occur’, and ‘consistent random fluctuations’ (thus thermal), and the statement taken as a whole, have deep meanings, and could not be evaluated for any real system, but only scaled for the trivial one(s).

Slide 64

Granted, there are some benefits, BUT …

Granted, there are some benefits from simplified statistical descriptions for better understanding the randomness of thermal motion and related physical quantities, but the limitations should be stated so that the real physics is not overlooked or, worse, discredited. Phenomenological thermodynamics has supremacy due to logical reasoning based on the fundamental laws, without regard to the system's complex dynamic structure and even more complex interactions. The fundamental laws and physical phenomena could not be caused and governed by mathematical modeling and calculation outcomes, as suggested by some, but the other way around.

Slide 65

Thank you! Any Questions?

Slide 66

Appendices

Stretching the mind further …

www.kostic.niu.edu

Slide 67

Entropy Logarithmic Law:

• $dS = C_{th}\,dT/T$, then $S - S_{ref} = C_{th} \ln(T/T_{ref})$, i.e., proportional to the logarithm of T (thermal motion), or of W, the number of thermal microstates (which depends on thermal motion) that are consistent with, or correspond to, a macrostate.

• Many other processes/phenomena are governed by $C\,dX/X$ and thus by a Logarithmic Law.

• $C_{th}$ is the thermal capacity of any reversible heating process, or the isochoric thermal capacity $C_v$ otherwise.
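The logarithmic law above can be sketched numerically, assuming a constant thermal capacity over the temperature range (a simplification; the function name and the values are illustrative):

```python
import math


def entropy_rise(c_th, t, t_ref):
    """S - S_ref = C_th * ln(T / T_ref) for constant thermal capacity C_th [J/K]."""
    if t <= 0 or t_ref <= 0:
        raise ValueError("absolute temperatures must be positive")
    return c_th * math.log(t / t_ref)


# Hypothetical body with C_th = 1000 J/K heated in two equal-ratio steps
step_1 = entropy_rise(1000.0, 400.0, 200.0)  # 200 K -> 400 K
step_2 = entropy_rise(1000.0, 800.0, 400.0)  # 400 K -> 800 K
total = entropy_rise(1000.0, 800.0, 200.0)   # 200 K -> 800 K
```

Equal temperature ratios give equal entropy increments, and the increments add up along the heating path, as expected for a state function following a $C\,dX/X$ law.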

Slide 68

Entropy and Random Thermal Motion

• Since entropy is directly related to the random thermal motion of a system's micro (atomic and molecular) structure, it is suitable for statistical analysis, particularly of simple system structures, like ideal gases, consisting of completely randomized particle motion in thermal equilibrium, without any particle interactions other than elastic, random collisions of material point-like particles. For more complex, thus all real, systems, the thermal motion and interactions are much more complex; thus the statistical analysis is metaphorical only and cannot be quantitatively reduced to physical entropy, the latter being well-defined and measured in the laboratory for all substances of practical interest.

Slide 69

Just because we could scale entropy …

• Just because we could scale entropy using a statistical description of statistically random thermal motion of simple system particulate structure, the latter related to both, the thermal energy and thermodynamic temperature , thus entropy , it does not mean that entropy is a simple statistical concept and not physical quantity of its own right. Actually , the statistical representation is so simple and so limited, that without knowing the result upfront, the scaling would be impossible but for trivially simple and fully randomized mono-atomic ideal gas structure.

Slide 70

statistical analysis is ‘going so far’

• The interpretation of the statistical analysis goes so far as to forget about the phenomena it is trying to describe, presenting entropy as a spatial particle arrangement and/or as simplified statistics of the positions and momenta of particles without other realistic interactions, as if entropy were a measure of statistical randomness without reference to thermal energy, or to energy in general. Both are physically inappropriate!

Slide 71

The real entropy, as defined and measured

The real entropy, as defined and measured, is related to the thermal energy and thermodynamic temperature, $dS = dQ/T$, not to other forms of internal energy. Boltzmann's metaphorical entropy description, $S = k\log W$, refers to a logarithmic measure of the number of possible microscopic states (or microstates), W, of a system in thermodynamic equilibrium, consistent with its macroscopic entropy state (thus the ‘equivalent’ number of thermal, dynamic microstates). This is really a far-fetched qualitative description that transfers all the real complexity to W (the number of relevant thermal, dynamic microstates), with deep meaning in the relevant adjectives: the equivalent number of microstates consistent with the well-defined macrostate.

Slide 72

not a number of all possible spatial distributions

• This is not the number of all possible spatial distributions of micro-particles within the system volume, as often graphically depicted.

• For example, microstates with all molecules in one half or one quarter of the system volume, and similar, are inappropriate to count, since they are not consistent with the macrostate, nor is it physically possible for all molecules to force themselves into one half of the volume, leaving a vacuum in the other half. That would be a quite different macrostate with virtually null probability (not equiprobable)!
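To give a sense of how small "virtually null" is, here is a rough sketch under an explicitly simplified assumption: each molecule is placed independently and uniformly in the volume, which is the idealized statistical picture and not a model of real interactions. The function name and the molecule counts are hypothetical choices for illustration. The probability of finding all N molecules in a given volume fraction f is then f^N, so its base-10 logarithm is N log10(f).

```python
import math


def log10_prob_all_in_fraction(n_molecules, fraction=0.5):
    """log10 of the probability that all molecules sit in one volume fraction.

    Assumes independent, uniformly placed molecules (idealized picture only).
    Working with the logarithm avoids floating-point underflow for large N.
    """
    return n_molecules * math.log10(fraction)


# Assumed illustrative molecule counts; the last is roughly one mole.
for n in (10, 100, 6.022e23):
    print(f"N = {n:g}: log10(probability) = {log10_prob_all_in_fraction(n):.4g}")
```

Already for a hundred molecules the probability is below 10^-30, and for a mole the exponent itself is of order 10^23.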

Slide 73

Randomness is Statistical

The microstate of a very simple, ideal system could be described by the positions and momenta of all the atoms.

In principle, all the physical properties of the system are determined by its microstate. The Gibbs, von Neumann (quantum), Shannon, and other probabilistic entropy descriptions are also statistical, like Boltzmann's.

Actually, they all reduce to the latter for a fully randomized large system in equilibrium, since the logarithmic probability of all discrete microstates, when equiprobable with $p_i = 1/W$, results in Boltzmann's logarithmic value, i.e.:

$-\sum_i p_i \log p_i = \log W$
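The reduction to Boltzmann's logarithmic value for equiprobable microstates can be verified directly. This is a minimal sketch; the number of microstates `w` and the skewed comparison distribution are arbitrary assumptions chosen only for illustration.

```python
import math


def shannon_entropy(probabilities):
    """Return -sum(p_i * log(p_i)) in natural-log units, skipping zero terms."""
    return -sum(p * math.log(p) for p in probabilities if p > 0.0)


w = 1000  # assumed number of discrete microstates (illustrative)

# Equiprobable case: p_i = 1/W for every microstate.
uniform = [1.0 / w] * w

# A non-uniform distribution over the same W microstates, for comparison.
skewed = [0.5] + [0.5 / (w - 1)] * (w - 1)

print(f"uniform: {shannon_entropy(uniform):.6f}, log(W): {math.log(w):.6f}")
print(f"skewed:  {shannon_entropy(skewed):.6f}")
```

The equality holds only for the equiprobable case; any other distribution over the same W microstates gives a smaller value.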

Slide 74

Statistical Interpretation Is Important as Metaphoric Only

• Again, a statistical interpretation is important as metaphoric only: the sum of the probabilities of possible discrete microstates, the $p_i$'s, that could occur during the "random fluctuations" of a given macrostate. The qualifiers "possible", "could occur", and "consistent random fluctuations" (thus thermal), and the statement taken as a whole, have deep meanings; the sum could not be evaluated for any real system, but only scaled for the trivial one(s).

Slide 75

Granted, there are some benefits, BUT …

Granted, simplified statistical descriptions do help in understanding the randomness of thermal motion and related physical quantities, but their limitations should be stated so that the real physics is not overlooked or, worse, discredited. Phenomenological thermodynamics has supremacy because it reasons logically from the fundamental laws, without regard to the system's complex dynamic structure and its even more complex interactions. The fundamental laws and physical phenomena are not caused or governed by mathematical models and calculation outcomes, as some suggest; it is the other way around.

Slide 76

U,T & S are subtle and elusive, but …

• Energy, temperature, and entropy are subtle and elusive, but they are well defined and precisely measured as physical quantities, and used as such. They should be further refined and explained for what they are, and not misrepresented as something they are not. Any new approach should be correlated with existing knowledge, and its limitations clearly and objectively presented.

Slide 77

To repeat again …

$Q_{cal} = Q_{rev} + W_{loss} = Q_{rev} + Q_{diss}$

Entropy, the thermal displacement property, $dS = dQ_{rev}/T$ (or $dQ_{cal}/T$), with J/K unit, is a measure of thermal dynamic disorder or thermal randomness, and may be expressed as being related to the logarithm of the number of “all thermal, dynamic microstates”, or to their logarithmic probability or uncertainty, that correspond to, or are consistent with, the given thermodynamic macrostate. Note that the meanings of all relevant adjectives are deeply important to reflect reality, and as such the description is metaphoric for real systems.

Slide 78

Thank you! Any questions?

Slide 79