Ch3

advertisement

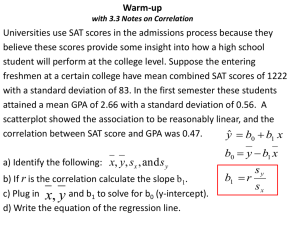

Finding Areas with Calc 1. Shade Norm • Consider WISC data from before: N (100, 15). Suppose we want to find % of children whose scores are above 125 • Specify window: X[55,145]12 and Y[-.008, .028].01 • Press 2nd VARS (DISTR), then choose Draw and 1: ShadeNorm(. • Compete command ShadeNorm(125, 1E99, 100, 15) or (0,85, 100,15). • If using Z scores instead of raw scores, the mean 0 and SD 1 will be understood so ShadeNorm (1,2) will give you area from z-score of 1.0 to 2.0. How does this compare to the 68-95-99.7 rule? 2. NormalCDF • Advantage- quicker, disadvantage- no picture. • 2nd Vars (DISTR) choose 2: normalcdf(. • Complete command (125, 1E99, 100, 15) and press enter. You get .0477 or approx. 5%. • If you have Z scores: normalcdf (-1, 1) = .6827 aka 68%...like our rule! InvNorm • InvNorm calculates the raw or Z score value corresponding to a known area under the curve. • 2nd Vars (DISTR), choose 3: invNorm(. • Complete the command invNorm (.9, 100, 15) and press Enter. You get 119.223, so this is the score corresponding to 90th percentile. • Compare this with command invNorm (.9) you get 1.28. This is the Z score. Bivariate Relationships What is Bivariate data? When exploring/describing a bivariate (x,y) relationship: Determine the Explanatory and Response variables Plot the data in a scatterplot Note the Strength, Direction, and Form Note the mean and standard deviation of x and the mean and standard deviation of y Calculate and Interpret the Correlation, r Calculate and Interpret the Least Squares Regression Line in context. Assess the appropriateness of the LSRL by constructing a Residual Plot. 3.1 Response Vs. Explanatory Variables • Response variable measures an outcome of a study, explanatory variable helps explain or influences changes in a response variable (like independent vs. dependent). • Calling one variable explanatory and the other response doesn’t necessarily mean that changes in one CAUSE changes in the other. • Ex: Alcohol and Body temp: One effect of Alcohol is a drop in body temp. To test this, researches give several amounts of alcohol to mice and measure each mouse’s body temp change. What are the explanatory and response variables? Scatterplots • Scatterplot shows the relationship between two quantitative variables measured on the same individuals. • Explanatory variables along X axis, Response variables along Y. • Each individual in data appears as the point in the plot fixed by the values of both variables for that individual. • Example: Interpreting Scatterplots • Direction: in previous example, the overall pattern moves from upper left to lower right. We call this a negative association. • Form: The form is slightly curved and there are two distinct clusters. What explains the clusters? (ACT States) • Strength: The strength is determined by how closely the points follow a clear form. The example is only moderately strong. • Outliers: Do we see any deviations from the pattern? (Yes, West Virginia, where 20% of HS seniors take the SAT but the mean math score is only 511). Association Introducing Categorical Variables Calculator Scatterplot Student 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 Beers 5 2 9 8 3 7 3 5 3 5 4 6 5 7 1 4 BAC 0.1 0.03 0.19 0.12 0.04 0.09 0.07 0.06 0.02 0.05 0.07 0.1 0.08 0.09 0.01 0.05 50 5 • Enter the Beer consumption in L1 and the BAC values in L2 • Next specify scatterplot in Statplot menu (first graph). X list L1 Y List L2 (explanatory and response) • Use ZoomStat. • Notice that their are no scales on the axes and they aren’t labeled. If you are copying your graph to your paper, make sure you scale and label the Axis (use Trace) • Correlation Caution- our eyes can be fooled! Our eyes are not good judges of how strong a linear relationship is. The 2 scatterplots depict the same data but drawn with a different scale. Because of this we need a numerical measure to supplement the graph. r • The Correlation measures the direction and strength of the linear relationship between 2 variables. Z Z x y • Formula- (don’t need to memorize or use): r = • In Calc: Go to Catalog (2nd, zero button), go to DiagnosticOn, enter, enter. You only have to do this ONCE! Once this is done: • Enter data in L1 and L2 (you can do calc-2 var stats if you want the mean and sd of each) • Calc, LinReg (A + Bx) enter n 1 Interpreting r • The absolute value of r tells you the strength of the association (0 means no association, 1 is a strong association) • The sign tells you whether it’s a positive or a negative association. So r ranges from -1 to +1 • Note- it makes no difference which variable you call x and which you call y when calculating correlation, but stay consistent! • Because r uses standardized values of the observations, r does not change when we change the units of measurement of x, y, or both. (Ex: Measuring height in inches vs. ft. won’t change correlation with weight) • values of -1 and +1 occur ONLY in the case of a perfect linear relationship , when the variables lie exactly along a straight line. Examples 1. Correlation requires that both variables be quantitative 2. Correlation measures the strength of only LINEAR relationships, not curved...no matter how strong they are! 3. Like the mean and standard deviation, the correlation is not resistant: r is strongly affected by a few outlying observations. Use r with caution when outliers appear in the scatterplot 4. Correlation is not a complete summary of two-variable data, even when the relationship is linear- always give the means and standard deviations of both x and y along with the correlation. 3.2- least squares regression Text The slope here B = .00344 tells us that fat gained goes down by .00344 kg for each added calorie of NEA according to this linear model. Our regression equation is the predicted RATE OF CHANGE in the response y as the explanatory variable x changes. The Y intercept a = 3.505kg is the fat gain estimated by this model if NEA does not change when a person overeats. • We can use a regression line to predict the response y for a specific value of the explanatory variable x. Prediction LSRL • In most cases, no line will pass exactly through all the points in a scatter plot and different people will draw different regression lines by eye. • Because we use the line to predict y from x, the prediction errors we make are errors in y, the vertical direction in the scatter plot • A good regression line makes the vertical distances of the points from the line as small as possible • Error: Observed response - predicted response LSRL Cont. Equation of LSRL • Example 3.36: The Sanchez household is about to install solar panels to reduce the cost of heating their house. In order to know how much the panels help, they record their consumption of natural gas before the panels are installed. Gas consumption is higher in cold weather, so the relationship between outside temp and gas consumption is important. • • About how much gas does the regression line predict that the family will use in a month that averages 20 degree-days per day? • • • Positive, linear, and very strong 500 cubic feet per day How well does the least-squares line fit the data? Residuals • The error of our predictions, or vertical distance from predicted Y to observed Y, are called residuals because they are “left-over” variation in the response. One subject’s NEA rose by 135 calories. That subject gained 2.7 KG of fat. The predicted gain for 135 calories is Y hat = 3.505- .00344(135) = 3.04 kg The residual for this subject is y - yhat = 2.7 - 3.04 = -.34 kg Residual Plot • The sum of the least-squares residuals is always zero. • The mean of the residuals is always zero, the horizontal line at zero in the figure helps orient us. This “residual = 0” line corresponds to the regression line • Residuals Listresiduals on listed Calc If you want to get all your in L3 highlight L3 (the name of the list, on the top) and go to 2nd- stat- RESID then hit enter and enter and the list that pops out is your resid for each individual in the corresponding L1 and L2. (if you were to create a normal scatter plot using this list as your y list, so x list: L1 and Y list L3 you would get the exact same thing as if you did a residual plot defining x list as L1 and Y list as RESID as we had been doing). This is a helpful list to have to check your work when Examining Residual Plot • Residual plot should show no obvious pattern. A curved pattern shows that the relationship is not linear and a straight line may not be the best model. • Residuals should be relatively small in size. A regression line in a model that fits the data well should come close” to most of the points. • A commonly used measure of this is the standard deviation of the residuals, given by: s For the NEA and fat gain data, S = 7.663 .740 14 residuals n2 2 Residual Plot on Calc • Produce Scatterplot and Regression line from data (lets use BAC if still in there) • Turn all plots off • Create new scatterplot with X list as your explanatory variable and Y list as residuals (2nd stat, resid) • Zoom Sta R squared- Coefficient of determination If all the points fall directly on the least-squares line, r squared = 1. Then all the variation in y is explained by the linear relationship with x. So, if r squared = .606, that means that 61% of the variation in y among individual subjects is due to the influence of the other variable. The other 39% is “not explained”. r squared is a measure of how successful the regression was in explaining the response Facts about LeastSquares regression • The distinction between explanatory and response variables is essential in regression. If we reverse the roles, we get a different least-squares regression line. • There is a close connection between corelation and the slope of the LSRL. Slope is r times Sy/Sx. This says that a change of one standard deviation in x corresponds to a change of 4 standard deviations in y. When the variables are perfectly correlated (4 = +/- 1), the change in the predicted response y hat is the same (in standard deviation units) as the change in x. • The LSRL will always pass through the point (X bar, Y Bar) • r squared is the fraction of variation in values of y explained by the x variable 3.3 Influences • Correlation r is not resistant. Extrapolation is not very reliable. One unusual point in the scatterplot greatly affects the value of r. LSRL also not resistant. • A point extreme in the x direction with no other points near it pulls the line toward itself. This point is influential. Lurking VariablesBeware! • Example: A college board study of HS grads found a strong correlation between math minority students took in high school and their later success in college. News articles quoted the College Board saying that “math is the gatekeeper for success in college”. • But, Minority students from middle-class homes with educated parents no doubt take more high school math courses. They are also more likely to have a stable family, parents who emphasize education, and can pay for college etc. These students would likely succeed in college even if they took fewer math courses. The family background of students is a lurking variable that probably explains much of the relationship between math courses and college success. Beware correlations based on averages • Correlations based on averages are usually too high when applied to individuals. • Example: if we plot the average height of young children against their age in months, we will see a very strong positive association with correlation near 1. But individual children of the same age vary a great deal in height. A plot of height against age for individual children will show much more scatter and lower correlation than the plot of average height against age. Chapter Example: Corrosion and Strength Consider the following data from the article, “The Carbonation of Concrete Structures in the Tropical Environment of Singapore” (Magazine of Concrete Research (1996):293-300 which discusses how the corrosion of steel(caused by carbonation) is the biggest problem affecting concrete strength: x= carbonation depth in concrete (mm) y= strength of concrete (Mpa) x y 8 20 20 30 35 40 50 22.8 17.1 21.5 16.1 13.4 12.4 11.4 55 65 9.7 6.8 Define the Explanatory and Response Variables. Plot the data and describe the relationship. Corrosion and Strength Strength (Mpa) There is a strong, negative, linear relationship between depth of corrosion and concrete strength. As the depth increases, the strength decreases at a constant rate. Depth (mm) Strength (Mpa) Corrosion and Strength Depth (mm) The mean depth of corrosion is 35.89mm with a standard deviation of 18.53mm. The mean strength is 14.58 Mpa with a standard deviation of 5.29 Mpa. Find the equation of the Least Squares Regression Line (LSRL) that models the relationship between corrosion and strength. Strength (Mpa) Corrosion and Strength Depth (mm) y=24.52+(-0.28)x strength=24.52+(-0.28)depth r=-0.96 Corrosion and Strength Strength (Mpa) y=24.52+(-0.28)x strength=24.52+(-0.28)depth r=-0.96 Depth (mm) What does “r” tell us? There is a Strong, Negative, LINEAR relationship between depth of corrosion and strength of concrete. What does “b=-0.28” tell us? For every increase of 1mm in depth of corrosion, we predict a 0.28 Mpa decrease in strength of the concrete. Corrosion and Strength Use the prediction model (LSRL) to determine the following: What is the predicted strength of concrete with a corrosion depth of 25mm? strength=24.52+(-0.28)depth strength=24.52+(-0.28)(25) strength=17.59 Mpa What is the predicted strength of concrete with a corrosion depth of 40mm? strength=24.52+(-0.28)(40) strength=13.44 Mpa How does this prediction compare with the observed strength at a corrosion depth of 40mm? Residuals Note, the predicted strength when corrosion=40mm is: predicted strength=13.44 Mpa The observed strength when corrosion=40mm is: observed strength=12.4mm • The prediction did not match the observation. • That is, there was an “error” or “residual” between our prediction and the actual observation. • RESIDUAL = Observed y - Predicted y • The residual when corrosion=40mm is: • residual = 12.4 - 13.44 • residual = -1.04 Assessing the Model Is the LSRL the most appropriate prediction model for strength? r suggests it will provide strong predictions...can we do better? To determine this, we need to study the residuals generated by the LSRL. Make a residual plot. Look for a pattern. If no pattern exists, the LSRL may be our best bet for predictions. If a pattern exists, a better prediction model may exist... Residual Plot residuals Construct a Residual Plot for the (depth,strength) LSRL. depth(mm) There appears to be no pattern to the residual plot...therefore, the LSRL may be our best prediction model. Coefficient of Determination 93.75% of the variability in predicted strength can be explained by the LSRL on depth. Strength (Mpa) We know what “r” tells us about the relationship between depth and strength....what about r2? Depth (mm) Summary When exploring a bivariate relationship: Make and interpret a scatterplot: Strength, Direction, Form Describe x and y: Mean and Standard Deviation in Context Find the Least Squares Regression Line. Write in context. Construct and Interpret a Residual Plot. Interpret r and r2 in context. Use the LSRL to make predictions... Examining Relationships Regression Review Regression Basics When describing a Bivariate Relationship: Make a Scatterplot Strength, Direction, Form Model: y-hat=a+bx Interpret slope in context Make Predictions Residual = Observed-Predicted Assess the Model Interpret “r” Residual Plot Reading Minitab Output Regression Analysis: Fat gain versus NEA The regression equation is FatGain = ****** + ******(NEA) Predictor Constant NEA Coef 3.5051 -0.0034415 S=0.739853 R-Sq = 60.6% SE Coef 0.3036 0.00074141 T 11.54 -4.04 P 0.000 0.000 RSq(adj)=57.8% Regression equations aren’t always as easy to spot as they are on your TI-84. Can you find the slope and intercept above? Outliers/Influential Points Age at Fi rst Word and Gesel l Score Child Age Score 1 1 15 m onths 95 2 2 26 m onths 71 3 3 10 m onths 83 4 4 9 m onths 91 5 5 15 m onths 102 6 6 20 m onths 87 7 7 18 m onths 93 8 8 11 m onths 100 9 9 8 m onths 104 10 10 20 m onths 94 11 11 7 m onths 113 12 12 9 m onths 96 13 13 10 m onths 83 14 14 11 m onths 84 15 15 11 m onths 102 16 16 10 m onths 100 17 17 12 m onths 105 18 18 42 m onths 57 19 19 17 m onths 121 20 20 11 m onths 86 21 21 10 m onths 100 Does the age of a child’s first word predict <new > his/her mental ability? Consider the following data on (age of first word, Gesell Adaptive Score) for 21 children. Influential? Does the highlighted point markedly affect the equation of the LSRL? If so, it is “influential”. Test by removing the point and finding the new LSRL. Explanatory vs. Response The Distinction Between Explanatory and Response variables is essential in regression. Switching the distinction results in a different least-squares regression line. Note: The correlation value, r, does NOT depend on the distinction between Explanatory and Response. Correlation The correlation, r, describes the strength of the straight-line relationship between x and y. Ex: There is a strong, positive, LINEAR relationship between # of beers and BAC. There is a weak, positive, linear relationship between x and y. However, there is a strong nonlinear relationship. r measures the strength of linearity... Coefficient of Determination The coefficient of determination, r2, describes the percent of variability in y that is explained by the linear regression on x. 71% of the variability in death rates due to heart disease can be explained by the LSRL on alcohol consumption. That is, alcohol consumption provides us with a fairly good prediction of death rate due to heart disease, but other factors contribute to this rate, so our prediction will be off somewhat. Cautions Correlation and Regression are NOT RESISTANT to outliers and Influential Points! Correlations based on “averaged data” tend to be higher than correlations based on all raw data. Extrapolating beyond the observed data can result in predictions that are unreliable. Correlation vs. Causation Consider the following historical data: Coll ecti on 1 Year Ministers Rum 1 1860 63 8376 2 1865 48 6406 3 1870 53 7005 4 1875 64 8486 5 1880 72 9595 6 1885 80 10643 7 1890 85 11265 8 1895 76 10071 9 1900 80 10547 10 1905 83 11008 <new > There is an almost perfect linear 12 1915 140 18559 relationship between x and y. (r=0.999997) x = # Methodist Ministers in New England y = # of Barrels of Rum Imported to Boston CORRELATION DOES NOT IMPLY CAUSATION! 11 1910 105 13885 Summary