Multicollinearity in Regression Principal Components Analysis



Standing Heights and Physical Stature Attributes Among Female Police Officer Applicants

S.Q. Lafi and J.B. Kaneene (1992). “An Explanation of the Use of Principal Components Analysis to Detect and Correct for Multicollinearity,” Preventive Veterinary Medicine, Vol. 13, pp. 261-275.

Data Description

• Subjects: 33 females applying for police officer positions

• Dependent Variable: Y ≡ Standing Height (cm)

• Independent Variables:
  ◦ X1 ≡ Sitting Height (cm)
  ◦ X2 ≡ Upper Arm Length (cm)
  ◦ X3 ≡ Forearm Length (cm)
  ◦ X4 ≡ Hand Length (cm)
  ◦ X5 ≡ Upper Leg Length (cm)
  ◦ X6 ≡ Lower Leg Length (cm)
  ◦ X7 ≡ Foot Length (inches)
  ◦ X8 ≡ BRACH = 100·X3/X2 (forearm length as a percentage of upper arm length)
  ◦ X9 ≡ TIBIO = 100·X6/X5 (lower leg length as a percentage of upper leg length)
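Because X8 and X9 are computed from X2, X3, X5, and X6, they carry almost no independent information, which foreshadows the collinearity found below. As a quick check, the plain-Python sketch that follows recomputes both indices for applicants 1-5 of the data table. The helper names `brach` and `tibio` are illustrative, not from the source. Since the recorded values are rounded to one decimal, each recomputed value should agree with its recorded value within 0.05.

```python
def brach(forearm_cm: float, upper_arm_cm: float) -> float:
    """Illustrative helper: BRACH = 100 * X3 / X2."""
    return 100.0 * forearm_cm / upper_arm_cm


def tibio(lower_leg_cm: float, upper_leg_cm: float) -> float:
    """Illustrative helper: TIBIO = 100 * X6 / X5."""
    return 100.0 * lower_leg_cm / upper_leg_cm


# (X2, X3, X5, X6, recorded X8, recorded X9) for applicants 1-5 in the data table
ROWS = [
    (31.8, 28.1, 40.3, 38.9, 88.4, 96.5),
    (32.4, 29.1, 43.3, 42.7, 89.8, 98.6),
    (33.6, 29.5, 43.7, 41.1, 87.8, 94.1),
    (31.0, 28.2, 43.7, 40.6, 91.0, 92.9),
    (32.1, 27.3, 38.1, 39.6, 85.0, 103.9),
]

# Track the largest gap between a recomputed index and its recorded value.
max_gap = 0.0
for x2, x3, x5, x6, x8, x9 in ROWS:
    b, t = brach(x3, x2), tibio(x6, x5)
    max_gap = max(max_gap, abs(b - x8), abs(t - x9))
    print(f"BRACH {b:7.3f} (recorded {x8}),  TIBIO {t:7.3f} (recorded {x9})")

# Recorded values are rounded to 0.1, so every gap should be at most 0.05.
print(f"largest gap: {max_gap:.4f}")
```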

Data

| ID | Y | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 165.8 | 88.7 | 31.8 | 28.1 | 18.7 | 40.3 | 38.9 | 6.7 | 88.4 | 96.5 |
| 2 | 169.8 | 90.0 | 32.4 | 29.1 | 18.3 | 43.3 | 42.7 | 6.4 | 89.8 | 98.6 |
| 3 | 170.7 | 87.7 | 33.6 | 29.5 | 20.7 | 43.7 | 41.1 | 7.2 | 87.8 | 94.1 |
| 4 | 170.9 | 87.1 | 31.0 | 28.2 | 18.6 | 43.7 | 40.6 | 6.7 | 91.0 | 92.9 |
| 5 | 157.5 | 81.3 | 32.1 | 27.3 | 17.5 | 38.1 | 39.6 | 6.6 | 85.0 | 103.9 |
| 6 | 165.9 | 88.2 | 31.8 | 29.0 | 18.6 | 42.0 | 40.6 | 6.5 | 91.2 | 96.7 |
| 7 | 158.7 | 86.1 | 30.6 | 27.8 | 18.4 | 40.0 | 37.0 | 5.9 | 90.8 | 92.5 |
| 8 | 166.0 | 88.7 | 30.2 | 26.9 | 17.5 | 41.6 | 39.0 | 5.9 | 89.1 | 93.8 |
| 9 | 158.7 | 83.7 | 31.1 | 27.1 | 18.1 | 38.9 | 37.5 | 6.1 | 87.1 | 96.4 |
| 10 | 161.5 | 81.2 | 32.3 | 27.8 | 19.1 | 42.8 | 40.1 | 6.2 | 86.1 | 93.7 |
| 11 | 167.3 | 88.6 | 34.8 | 27.3 | 18.3 | 43.1 | 41.8 | 7.3 | 78.4 | 97.0 |
| 12 | 167.4 | 83.2 | 34.3 | 30.1 | 19.2 | 43.4 | 42.2 | 6.8 | 87.8 | 97.2 |
| 13 | 159.2 | 81.5 | 31.0 | 27.3 | 17.5 | 39.8 | 39.6 | 4.9 | 88.1 | 99.5 |
| 14 | 170.0 | 87.9 | 34.2 | 30.9 | 19.4 | 43.1 | 43.7 | 6.3 | 90.4 | 101.4 |
| 15 | 166.3 | 88.3 | 30.6 | 28.8 | 18.3 | 41.8 | 41.0 | 5.9 | 94.1 | 98.1 |
| 16 | 169.0 | 85.6 | 32.6 | 28.8 | 19.1 | 42.7 | 42.0 | 6.0 | 88.3 | 98.4 |
| 17 | 156.2 | 81.6 | 31.0 | 25.6 | 17.0 | 44.2 | 39.0 | 5.1 | 82.6 | 88.2 |
| 18 | 159.6 | 86.6 | 32.7 | 25.4 | 17.7 | 42.0 | 37.5 | 5.0 | 77.7 | 89.3 |
| 19 | 155.0 | 82.0 | 30.3 | 26.6 | 17.3 | 37.9 | 36.1 | 5.2 | 87.8 | 95.3 |
| 20 | 161.1 | 84.1 | 29.5 | 26.6 | 17.8 | 38.6 | 38.2 | 5.9 | 90.2 | 99.0 |
| 21 | 170.3 | 88.1 | 34.0 | 29.3 | 18.2 | 43.2 | 41.4 | 5.9 | 86.2 | 95.8 |
| 22 | 167.8 | 83.9 | 32.5 | 28.6 | 20.2 | 43.3 | 42.9 | 7.2 | 88.0 | 99.1 |
| 23 | 163.1 | 88.1 | 31.7 | 26.9 | 18.1 | 40.1 | 39.0 | 5.9 | 84.9 | 97.3 |
| 24 | 165.8 | 87.0 | 33.2 | 26.3 | 19.5 | 43.2 | 40.7 | 5.9 | 79.2 | 94.2 |
| 25 | 175.4 | 89.6 | 35.2 | 30.1 | 19.1 | 45.1 | 44.5 | 6.3 | 85.5 | 98.7 |
| 26 | 159.8 | 85.6 | 31.5 | 27.1 | 19.2 | 42.3 | 39.0 | 5.7 | 86.0 | 92.2 |
| 27 | 166.0 | 84.9 | 30.5 | 28.1 | 17.8 | 41.2 | 43.0 | 6.1 | 92.1 | 104.4 |
| 28 | 161.2 | 84.1 | 32.8 | 29.2 | 18.4 | 42.6 | 41.1 | 5.9 | 89.0 | 96.5 |
| 29 | 160.4 | 84.3 | 30.5 | 27.8 | 16.8 | 41.0 | 39.8 | 6.0 | 91.1 | 97.1 |
| 30 | 164.3 | 85.0 | 35.0 | 27.8 | 19.0 | 47.2 | 42.4 | 5.0 | 79.4 | 89.8 |
| 31 | 165.5 | 82.6 | 36.2 | 28.6 | 20.2 | 45.0 | 42.3 | 5.6 | 79.0 | 94.0 |
| 32 | 167.2 | 85.0 | 33.6 | 27.1 | 19.8 | 46.0 | 41.6 | 5.6 | 80.7 | 90.4 |
| 33 | 167.2 | 83.4 | 33.5 | 29.7 | 19.4 | 45.2 | 44.0 | 5.2 | 88.7 | 97.3 |

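Standardizing the Predictors

$$X^*_{ij} = \frac{X_{ij} - \bar{X}_j}{\sqrt{\sum_{i=1}^{n} (X_{ij} - \bar{X}_j)^2}} = \frac{X_{ij} - \bar{X}_j}{\sqrt{(n-1)S_j^2}}, \qquad i = 1, \ldots, 33; \quad j = 1, \ldots, 9$$

$$X^* = \begin{bmatrix} X^*_{11} & X^*_{12} & \cdots & X^*_{19} \\ X^*_{21} & X^*_{22} & \cdots & X^*_{29} \\ \vdots & \vdots & \ddots & \vdots \\ X^*_{33,1} & X^*_{33,2} & \cdots & X^*_{33,9} \end{bmatrix}$$

$$X^{*\prime}X^* = R = \begin{bmatrix} 1 & r_{12} & \cdots & r_{19} \\ r_{21} & 1 & \cdots & r_{29} \\ \vdots & \vdots & \ddots & \vdots \\ r_{91} & r_{92} & \cdots & 1 \end{bmatrix}, \qquad r_{jk} = \frac{\sum_{i=1}^{n} (X_{ij} - \bar{X}_j)(X_{ik} - \bar{X}_k)}{\sqrt{\sum_{i=1}^{n} (X_{ij} - \bar{X}_j)^2 \, \sum_{i=1}^{n} (X_{ik} - \bar{X}_k)^2}}$$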
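A minimal NumPy sketch of the unit-length scaling above, run on synthetic stand-in data rather than the applicant measurements. It shows that dividing each centered column by the square root of its sum of squares, which equals √(n−1)·S_j, makes X*'X* exactly the correlation matrix R. The function name `unit_length_scale` is illustrative.

```python
import numpy as np


def unit_length_scale(X: np.ndarray) -> np.ndarray:
    """Center each column, then divide by sqrt(sum of squared deviations) = sqrt(n-1) * S_j."""
    Xc = X - X.mean(axis=0)
    return Xc / np.sqrt((Xc ** 2).sum(axis=0))


# Synthetic stand-in data (not the applicant data): 33 rows, 4 predictors.
rng = np.random.default_rng(0)
X = rng.normal(loc=[85.0, 32.0, 28.0, 18.0], scale=[2.5, 1.6, 1.3, 0.9], size=(33, 4))

X_star = unit_length_scale(X)
R = X_star.T @ X_star  # with this scaling, X*'X* is the correlation matrix

print(np.round(R, 4))
```

The same `R` comes out of `np.corrcoef(X, rowvar=False)`. The explicit product is shown because the later slides build everything from X*'X*.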

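Correlation Matrix of Predictors and Its Inverse

R

| | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 |
|---|---|---|---|---|---|---|---|---|---|
| X1 | 1.0000 | 0.1441 | 0.2791 | 0.1483 | 0.1863 | 0.2264 | 0.3680 | 0.1147 | 0.0212 |
| X2 | 0.1441 | 1.0000 | 0.4708 | 0.6452 | 0.7160 | 0.6616 | 0.1468 | -0.5820 | -0.0984 |
| X3 | 0.2791 | 0.4708 | 1.0000 | 0.5050 | 0.3658 | 0.7284 | 0.4277 | 0.4420 | 0.4406 |
| X4 | 0.1483 | 0.6452 | 0.5050 | 1.0000 | 0.6007 | 0.5500 | 0.3471 | -0.1911 | -0.0988 |
| X5 | 0.1863 | 0.7160 | 0.3658 | 0.6007 | 1.0000 | 0.7150 | -0.0298 | -0.3882 | -0.4099 |
| X6 | 0.2264 | 0.6616 | 0.7284 | 0.5500 | 0.7150 | 1.0000 | 0.2821 | 0.0026 | 0.3434 |
| X7 | 0.3680 | 0.1468 | 0.4277 | 0.3471 | -0.0298 | 0.2821 | 1.0000 | 0.2445 | 0.3971 |
| X8 | 0.1147 | -0.5820 | 0.4420 | -0.1911 | -0.3882 | 0.0026 | 0.2445 | 1.0000 | 0.5082 |
| X9 | 0.0212 | -0.0984 | 0.4406 | -0.0988 | -0.4099 | 0.3434 | 0.3971 | 0.5082 | 1.0000 |

R⁻¹

| | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 |
|---|---|---|---|---|---|---|---|---|---|
| X1 | 1.52 | -3.48 | 3.15 | 0.41 | 13.15 | -13.28 | -0.62 | -3.41 | 10.21 |
| X2 | -3.48 | 436.47 | -390.31 | -1.26 | -83.83 | 77.01 | 1.18 | 425.55 | -62.66 |
| X3 | 3.15 | -390.31 | 353.99 | -0.07 | 91.67 | -87.90 | -1.25 | -382.59 | 68.23 |
| X4 | 0.41 | -1.26 | -0.07 | 2.46 | 4.89 | -5.40 | -0.81 | -0.49 | 4.57 |
| X5 | 13.15 | -83.83 | 91.67 | 4.89 | 817.17 | -807.75 | -2.21 | -76.90 | 603.81 |
| X6 | -13.28 | 77.01 | -87.90 | -5.40 | -807.75 | 801.94 | 2.65 | 71.74 | -597.88 |
| X7 | -0.62 | 1.18 | -1.25 | -0.81 | -2.21 | 2.65 | 1.77 | 1.12 | -2.49 |
| X8 | -3.41 | 425.55 | -382.59 | -0.49 | -76.90 | 71.74 | 1.12 | 417.39 | -58.24 |
| X9 | 10.21 | -62.66 | 68.23 | 4.57 | 603.81 | -597.88 | -2.49 | -58.24 | 448.37 |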

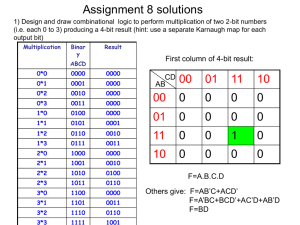

Variance Inflation Factors (VIFs)

• VIF measures the extent to which a regression coefficient’s variance is inflated by correlations among the set of predictors.

• VIF_j = 1/(1 − R_j²), where R_j² is the coefficient of multiple determination when X_j is regressed on the remaining predictors.

• Values > 10 are often considered problematic.

• VIFs can be obtained as the diagonal elements of R⁻¹.
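The two definitions in the bullets above should agree. Here is a minimal NumPy sketch that computes VIFs both ways on synthetic predictors. One column mimics BRACH as a rounded ratio of two others, to show how such a derived variable drives its VIF far past 10. The function names and the simulated data are assumptions made for illustration.

```python
import numpy as np


def vif_from_correlation(R: np.ndarray) -> np.ndarray:
    """VIF_j as the j-th diagonal element of R^{-1}."""
    return np.diag(np.linalg.inv(R))


def vif_from_auxiliary_regressions(X: np.ndarray) -> np.ndarray:
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing X_j on the other predictors."""
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, X[:, j], rcond=None)
        resid = X[:, j] - A @ coef
        r2_j = 1.0 - resid @ resid / np.sum((X[:, j] - X[:, j].mean()) ** 2)
        vifs[j] = 1.0 / (1.0 - r2_j)
    return vifs


# Synthetic predictors (hypothetical): x8 mimics BRACH as a rounded ratio of x3 to x2.
rng = np.random.default_rng(1)
x1 = rng.normal(85.0, 2.5, 33)
x2 = rng.normal(32.0, 1.6, 33)
x3 = rng.normal(28.0, 1.3, 33)
x8 = np.round(100.0 * x3 / x2, 1)
X = np.column_stack([x1, x2, x3, x8])

vif_a = vif_from_correlation(np.corrcoef(X, rowvar=False))
vif_b = vif_from_auxiliary_regressions(X)
print(np.round(vif_a, 2))
print(np.round(vif_b, 2))
```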

VIFs

| Predictor | VIF |
|---|---|
| X1 | 1.52 |
| X2 | 436.47 |
| X3 | 353.99 |
| X4 | 2.46 |
| X5 | 817.17 |
| X6 | 801.94 |
| X7 | 1.77 |
| X8 | 417.39 |
| X9 | 448.37 |

Not surprisingly, X2, X3, X5, X6, X8, and X9 are problems: X8 is computed from X2 and X3, and X9 is computed from X5 and X6 (see the definitions of X8 and X9).

Regression of Y on [1|X*]

$$E(Y_i) = \beta_0 + \beta_1 X^*_{i1} + \cdots + \beta_9 X^*_{i9}, \qquad E(\mathbf{Y}) = \beta_0 \mathbf{1} + X^* \boldsymbol{\beta}$$

Regression Statistics

| Statistic | Value |
|---|---|
| Multiple R | 0.944825 |
| R Square | 0.892694 |
| Adjusted R Square | 0.850704 |
| Standard Error | 1.890412 |
| Observations | 33 |

ANOVA

| Source | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 9 | 683.7823 | 75.9758 | 21.2600 | 0.0000 |
| Residual | 23 | 82.1941 | 3.5737 | | |
| Total | 32 | 765.9764 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 164.5636 | 0.3291 | 500.0743 | 0.0000 | 163.8829 | 165.2444 |
| X1* | 11.8900 | 2.3307 | 5.1015 | 0.0000 | 7.0686 | 16.7114 |
| X2* | 4.2752 | 39.4941 | 0.1082 | 0.9147 | -77.4246 | 85.9751 |
| X3* | -3.2845 | 35.5676 | -0.0923 | 0.9272 | -76.8616 | 70.2927 |
| X4* | 4.2764 | 2.9629 | 1.4433 | 0.1624 | -1.8528 | 10.4057 |
| X5* | -9.8372 | 54.0398 | -0.1820 | 0.8571 | -121.6270 | 101.9525 |
| X6* | 25.5626 | 53.5337 | 0.4775 | 0.6375 | -85.1802 | 136.3055 |
| X7* | 3.3805 | 2.5166 | 1.3433 | 0.1923 | -1.8255 | 8.5865 |
| X8* | 6.3735 | 38.6215 | 0.1650 | 0.8704 | -73.5211 | 86.2682 |
| X9* | -9.6391 | 40.0289 | -0.2408 | 0.8118 | -92.4453 | 73.1670 |

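Note the surprising negative coefficients for X3*, X5*, and X9*, and the very large standard errors (roughly 36 to 54) for X2*, X3*, X5*, X6*, X8*, and X9*, the six predictors with the largest VIFs.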
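The sketch below refits Y on [1|X*] from the 33 rows of the data table, using unit-length scaling and NumPy's least squares. It also computes VIFs from the raw data. The raw data are a better source than the printed, rounded R: R is nearly singular, so inverting a 4-decimal copy of it would give unreliable VIFs. Because every column of X* has mean zero, the intercept is exactly Ȳ = 5430.6/33 = 164.5636, as in the output above. The other printed numbers are expected to match the table up to rounding, but that is an assumption, not something the sketch verifies.

```python
import numpy as np

# Columns: Y, X1, ..., X9 for the 33 applicants, transcribed from the data table.
DATA = """
165.8 88.7 31.8 28.1 18.7 40.3 38.9 6.7 88.4 96.5
169.8 90.0 32.4 29.1 18.3 43.3 42.7 6.4 89.8 98.6
170.7 87.7 33.6 29.5 20.7 43.7 41.1 7.2 87.8 94.1
170.9 87.1 31.0 28.2 18.6 43.7 40.6 6.7 91.0 92.9
157.5 81.3 32.1 27.3 17.5 38.1 39.6 6.6 85.0 103.9
165.9 88.2 31.8 29.0 18.6 42.0 40.6 6.5 91.2 96.7
158.7 86.1 30.6 27.8 18.4 40.0 37.0 5.9 90.8 92.5
166.0 88.7 30.2 26.9 17.5 41.6 39.0 5.9 89.1 93.8
158.7 83.7 31.1 27.1 18.1 38.9 37.5 6.1 87.1 96.4
161.5 81.2 32.3 27.8 19.1 42.8 40.1 6.2 86.1 93.7
167.3 88.6 34.8 27.3 18.3 43.1 41.8 7.3 78.4 97.0
167.4 83.2 34.3 30.1 19.2 43.4 42.2 6.8 87.8 97.2
159.2 81.5 31.0 27.3 17.5 39.8 39.6 4.9 88.1 99.5
170.0 87.9 34.2 30.9 19.4 43.1 43.7 6.3 90.4 101.4
166.3 88.3 30.6 28.8 18.3 41.8 41.0 5.9 94.1 98.1
169.0 85.6 32.6 28.8 19.1 42.7 42.0 6.0 88.3 98.4
156.2 81.6 31.0 25.6 17.0 44.2 39.0 5.1 82.6 88.2
159.6 86.6 32.7 25.4 17.7 42.0 37.5 5.0 77.7 89.3
155.0 82.0 30.3 26.6 17.3 37.9 36.1 5.2 87.8 95.3
161.1 84.1 29.5 26.6 17.8 38.6 38.2 5.9 90.2 99.0
170.3 88.1 34.0 29.3 18.2 43.2 41.4 5.9 86.2 95.8
167.8 83.9 32.5 28.6 20.2 43.3 42.9 7.2 88.0 99.1
163.1 88.1 31.7 26.9 18.1 40.1 39.0 5.9 84.9 97.3
165.8 87.0 33.2 26.3 19.5 43.2 40.7 5.9 79.2 94.2
175.4 89.6 35.2 30.1 19.1 45.1 44.5 6.3 85.5 98.7
159.8 85.6 31.5 27.1 19.2 42.3 39.0 5.7 86.0 92.2
166.0 84.9 30.5 28.1 17.8 41.2 43.0 6.1 92.1 104.4
161.2 84.1 32.8 29.2 18.4 42.6 41.1 5.9 89.0 96.5
160.4 84.3 30.5 27.8 16.8 41.0 39.8 6.0 91.1 97.1
164.3 85.0 35.0 27.8 19.0 47.2 42.4 5.0 79.4 89.8
165.5 82.6 36.2 28.6 20.2 45.0 42.3 5.6 79.0 94.0
167.2 85.0 33.6 27.1 19.8 46.0 41.6 5.6 80.7 90.4
167.2 83.4 33.5 29.7 19.4 45.2 44.0 5.2 88.7 97.3
"""

data = np.array([line.split() for line in DATA.strip().splitlines()], dtype=float)
y, X = data[:, 0], data[:, 1:]

# Unit-length scaling of the predictors, as defined on the standardization slide.
Xc = X - X.mean(axis=0)
X_star = Xc / np.sqrt((Xc ** 2).sum(axis=0))

# OLS of Y on [1 | X*].
A = np.column_stack([np.ones(len(y)), X_star])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
resid = y - A @ coef
n, k = A.shape
s2 = resid @ resid / (n - k)                          # residual mean square
se = np.sqrt(s2 * np.diag(np.linalg.inv(A.T @ A)))    # coefficient standard errors
r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

# VIFs as the diagonal of R^{-1}, with R built from the raw data.
vif = np.diag(np.linalg.inv(X_star.T @ X_star))

names = ["Intercept"] + [f"X{j}*" for j in range(1, 10)]
for name, b, e in zip(names, coef, se):
    print(f"{name:>9}: {b:10.4f}   SE {e:9.4f}")
print(f"R^2 = {r2:.6f}")
print("VIFs:", np.round(vif, 2))
```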

Principal Components Analysis

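Using a statistical or matrix computer package, decompose the p × p correlation matrix R into its p eigenvalues and eigenvectors:

$$X^{*\prime}X^* = R = \sum_{j=1}^{p} \lambda_j \mathbf{v}_j \mathbf{v}_j' = V L V', \qquad \text{where } \lambda_j = j^{\text{th}} \text{ eigenvalue and } \mathbf{v}_j = \begin{bmatrix} v_{j1} \\ v_{j2} \\ \vdots \\ v_{jp} \end{bmatrix} = j^{\text{th}} \text{ eigenvector}$$

$$V = \begin{bmatrix} \mathbf{v}_1 & \mathbf{v}_2 & \cdots & \mathbf{v}_p \end{bmatrix}, \qquad L = \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_p \end{bmatrix}$$

subject to: $\mathbf{v}_j'\mathbf{v}_j = 1$ and $\mathbf{v}_j'\mathbf{v}_k = 0$ for $j \neq k$.

Condition index: $\eta_j = \sqrt{\lambda_{\max} / \lambda_j}$, where $\lambda_{\max} = \max_{i = 1, \ldots, p} \lambda_i$.

Principal components: $W = X^* V$

While the columns of X* are highly correlated, the columns of W are uncorrelated: $W'W = V'X^{*\prime}X^*V = V'RV = L$.

The λ_j's represent the variance corresponding to each principal component (λ_j is the sum of squares of the centered column W_j).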
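A minimal sketch of the decomposition above, using `numpy.linalg.eigh` on synthetic data with one nearly duplicated column. `eigh` returns eigenvalues in ascending order, so the sketch re-sorts them to match L (largest first). It then forms W = X*V and computes the condition indices. The function name `principal_components` is illustrative.

```python
import numpy as np


def principal_components(X_star: np.ndarray):
    """Eigen-decompose R = X*'X* and return (eigenvalues, V, W, condition indices), largest first."""
    R = X_star.T @ X_star
    eigvals, V = np.linalg.eigh(R)          # eigh returns ascending eigenvalues for symmetric R
    order = np.argsort(eigvals)[::-1]       # reorder so lambda_1 >= ... >= lambda_p
    eigvals, V = eigvals[order], V[:, order]
    W = X_star @ V                          # principal components W = X* V
    cond_index = np.sqrt(eigvals.max() / eigvals)
    return eigvals, V, W, cond_index


# Synthetic data with one nearly duplicated column (hypothetical, for illustration only).
rng = np.random.default_rng(2)
X = rng.normal(size=(33, 5))
X[:, 4] = X[:, 3] + 0.01 * rng.normal(size=33)
Xc = X - X.mean(axis=0)
X_star = Xc / np.sqrt((Xc ** 2).sum(axis=0))

lam, V, W, ci = principal_components(X_star)
print("eigenvalues:      ", np.round(lam, 4))
print("condition indices:", np.round(ci, 1))
```

The checks worth running are the ones the slide states: V'V = I, W'W = L (uncorrelated components), and the eigenvalues summing to p because the diagonal of R is all ones.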

Police Applicants Height Data - I

V (column j is the eigenvector v_j)

| | v1 | v2 | v3 | v4 | v5 | v6 | v7 | v8 | v9 |
|---|---|---|---|---|---|---|---|---|---|
| X1 | 0.1853 | 0.1523 | 0.8017 | 0.2782 | -0.3707 | -0.2327 | 0.1754 | -0.0005 | 0.0104 |
| X2 | 0.4413 | -0.2348 | -0.0986 | -0.2312 | -0.2551 | -0.3191 | -0.3973 | 0.5850 | -0.1414 |
| X3 | 0.3934 | 0.3336 | -0.1642 | 0.2336 | 0.1239 | -0.3183 | -0.4953 | -0.5205 | 0.1397 |
| X4 | 0.4182 | -0.0813 | 0.0284 | -0.2063 | 0.5765 | -0.3703 | 0.5529 | 0.0009 | 0.0040 |
| X5 | 0.4125 | -0.3000 | -0.0121 | 0.3508 | 0.0559 | 0.4669 | 0.0250 | 0.1487 | 0.6106 |
| X6 | 0.4645 | 0.1011 | -0.2518 | 0.1658 | -0.2697 | 0.3798 | 0.2786 | -0.1539 | -0.6040 |
| X7 | 0.2141 | 0.3577 | 0.3790 | -0.5862 | 0.2139 | 0.4811 | -0.2484 | 0.0009 | -0.0022 |
| X8 | -0.0852 | 0.5467 | -0.0498 | 0.4536 | 0.3674 | 0.0367 | -0.0418 | 0.5738 | -0.1352 |
| X9 | 0.0474 | 0.5261 | -0.3320 | -0.2685 | -0.4396 | -0.1027 | 0.3445 | 0.1089 | 0.4521 |

L = diag(3.6304, 2.4427, 1.0145, 0.7656, 0.6109, 0.3024, 0.2322, 0.0009, 0.0005) (all off-diagonal elements are 0.0000)
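Applying the condition-index formula η_j = √(λ_max/λ_j) to the eigenvalues in L gives the values quoted later for W8 and W9. A small plain-Python sketch follows. λ8 and λ9 are printed with only one significant digit, so their condition indices are approximate.

```python
import math

# Eigenvalues lambda_1, ..., lambda_9 as reported in L above (rounded to 4 decimals).
EIGENVALUES = [3.6304, 2.4427, 1.0145, 0.7656, 0.6109, 0.3024, 0.2322, 0.0009, 0.0005]

lam_max = max(EIGENVALUES)
condition_indices = [math.sqrt(lam_max / lam) for lam in EIGENVALUES]

for j, (lam, ci) in enumerate(zip(EIGENVALUES, condition_indices), start=1):
    flag = "  <-- above 10" if ci > 10 else ""
    print(f"W{j}: lambda = {lam:.4f}, condition index = {ci:6.1f}{flag}")

# The eigenvalues of a p x p correlation matrix sum to its trace, p = 9.
print("sum of eigenvalues:", round(sum(EIGENVALUES), 4))
```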

Police Applicants Height Data - II

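VLV'

| | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 |
|---|---|---|---|---|---|---|---|---|---|
| X1 | 1.0000 | 0.1441 | 0.2791 | 0.1483 | 0.1863 | 0.2263 | 0.3680 | 0.1147 | 0.0212 |
| X2 | 0.1441 | 1.0000 | 0.4708 | 0.6452 | 0.7160 | 0.6617 | 0.1468 | -0.5820 | -0.0985 |
| X3 | 0.2791 | 0.4708 | 1.0000 | 0.5051 | 0.3658 | 0.7284 | 0.4277 | 0.4420 | 0.4406 |
| X4 | 0.1483 | 0.6452 | 0.5051 | 1.0000 | 0.6007 | 0.5500 | 0.3471 | -0.1911 | -0.0988 |
| X5 | 0.1863 | 0.7160 | 0.3658 | 0.6007 | 1.0000 | 0.7150 | -0.0298 | -0.3882 | -0.4098 |
| X6 | 0.2263 | 0.6617 | 0.7284 | 0.5500 | 0.7150 | 1.0000 | 0.2821 | 0.0026 | 0.3434 |
| X7 | 0.3680 | 0.1468 | 0.4277 | 0.3471 | -0.0298 | 0.2821 | 1.0000 | 0.2445 | 0.3971 |
| X8 | 0.1147 | -0.5820 | 0.4420 | -0.1911 | -0.3882 | 0.0026 | 0.2445 | 1.0000 | 0.5083 |
| X9 | 0.0212 | -0.0985 | 0.4406 | -0.0988 | -0.4098 | 0.3434 | 0.3971 | 0.5083 | 1.0000 |

R

| | X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | X9 |
|---|---|---|---|---|---|---|---|---|---|
| X1 | 1.0000 | 0.1441 | 0.2791 | 0.1483 | 0.1863 | 0.2264 | 0.3680 | 0.1147 | 0.0212 |
| X2 | 0.1441 | 1.0000 | 0.4708 | 0.6452 | 0.7160 | 0.6616 | 0.1468 | -0.5820 | -0.0984 |
| X3 | 0.2791 | 0.4708 | 1.0000 | 0.5050 | 0.3658 | 0.7284 | 0.4277 | 0.4420 | 0.4406 |
| X4 | 0.1483 | 0.6452 | 0.5050 | 1.0000 | 0.6007 | 0.5500 | 0.3471 | -0.1911 | -0.0988 |
| X5 | 0.1863 | 0.7160 | 0.3658 | 0.6007 | 1.0000 | 0.7150 | -0.0298 | -0.3882 | -0.4099 |
| X6 | 0.2264 | 0.6616 | 0.7284 | 0.5500 | 0.7150 | 1.0000 | 0.2821 | 0.0026 | 0.3434 |
| X7 | 0.3680 | 0.1468 | 0.4277 | 0.3471 | -0.0298 | 0.2821 | 1.0000 | 0.2445 | 0.3971 |
| X8 | 0.1147 | -0.5820 | 0.4420 | -0.1911 | -0.3882 | 0.0026 | 0.2445 | 1.0000 | 0.5082 |
| X9 | 0.0212 | -0.0984 | 0.4406 | -0.0988 | -0.4099 | 0.3434 | 0.3971 | 0.5082 | 1.0000 |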
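The comparison above can be reproduced from the printed numbers. The NumPy sketch below rebuilds VLV' from the 4-decimal V and L and compares it with the printed R. It also checks that the printed eigenvectors are orthonormal. Because every input is rounded, agreement is expected only to about the third decimal; the 0.005 tolerance in the check is an assumption chosen with that in mind.

```python
import numpy as np

# Eigenvectors (columns v1..v9) and eigenvalues as printed above, rounded to 4 decimals.
V = np.array([
    [ 0.1853,  0.1523,  0.8017,  0.2782, -0.3707, -0.2327,  0.1754, -0.0005,  0.0104],
    [ 0.4413, -0.2348, -0.0986, -0.2312, -0.2551, -0.3191, -0.3973,  0.5850, -0.1414],
    [ 0.3934,  0.3336, -0.1642,  0.2336,  0.1239, -0.3183, -0.4953, -0.5205,  0.1397],
    [ 0.4182, -0.0813,  0.0284, -0.2063,  0.5765, -0.3703,  0.5529,  0.0009,  0.0040],
    [ 0.4125, -0.3000, -0.0121,  0.3508,  0.0559,  0.4669,  0.0250,  0.1487,  0.6106],
    [ 0.4645,  0.1011, -0.2518,  0.1658, -0.2697,  0.3798,  0.2786, -0.1539, -0.6040],
    [ 0.2141,  0.3577,  0.3790, -0.5862,  0.2139,  0.4811, -0.2484,  0.0009, -0.0022],
    [-0.0852,  0.5467, -0.0498,  0.4536,  0.3674,  0.0367, -0.0418,  0.5738, -0.1352],
    [ 0.0474,  0.5261, -0.3320, -0.2685, -0.4396, -0.1027,  0.3445,  0.1089,  0.4521],
])
lam = np.array([3.6304, 2.4427, 1.0145, 0.7656, 0.6109, 0.3024, 0.2322, 0.0009, 0.0005])

# Correlation matrix R as printed (rows and columns X1..X9).
R = np.array([
    [1.0000,  0.1441, 0.2791,  0.1483,  0.1863, 0.2264,  0.3680,  0.1147,  0.0212],
    [0.1441,  1.0000, 0.4708,  0.6452,  0.7160, 0.6616,  0.1468, -0.5820, -0.0984],
    [0.2791,  0.4708, 1.0000,  0.5050,  0.3658, 0.7284,  0.4277,  0.4420,  0.4406],
    [0.1483,  0.6452, 0.5050,  1.0000,  0.6007, 0.5500,  0.3471, -0.1911, -0.0988],
    [0.1863,  0.7160, 0.3658,  0.6007,  1.0000, 0.7150, -0.0298, -0.3882, -0.4099],
    [0.2264,  0.6616, 0.7284,  0.5500,  0.7150, 1.0000,  0.2821,  0.0026,  0.3434],
    [0.3680,  0.1468, 0.4277,  0.3471, -0.0298, 0.2821,  1.0000,  0.2445,  0.3971],
    [0.1147, -0.5820, 0.4420, -0.1911, -0.3882, 0.0026,  0.2445,  1.0000,  0.5082],
    [0.0212, -0.0984, 0.4406, -0.0988, -0.4099, 0.3434,  0.3971,  0.5082,  1.0000],
])

R_rebuilt = V @ np.diag(lam) @ V.T   # V L V'
print(np.round(R_rebuilt, 4))
print("max |VLV' - R| =", np.abs(R_rebuilt - R).max())
```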

Regression of Y on [1|W]

$$E(\mathbf{Y}) = \gamma_0 \mathbf{1} + W \boldsymbol{\gamma}$$

Regression Statistics

| Statistic | Value |
|---|---|
| Multiple R | 0.944825 |
| R Square | 0.892694 |
| Adjusted R Square | 0.850704 |
| Standard Error | 1.890412 |
| Observations | 33 |

ANOVA

| Source | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 9 | 683.7823 | 75.9758 | 21.2600 | 0.0000 |
| Residual | 23 | 82.1941 | 3.5737 | | |
| Total | 32 | 765.9764 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 164.5636 | 0.3291 | 500.0743 | 0.0000 | 163.8829 | 165.2444 |
| W1 | 12.1269 | 0.9922 | 12.2227 | 0.0000 | 10.0744 | 14.1793 |
| W2 | 4.5224 | 1.2096 | 3.7389 | 0.0011 | 2.0202 | 7.0245 |
| W3 | 7.6160 | 1.8769 | 4.0578 | 0.0005 | 3.7334 | 11.4985 |
| W4 | 4.9552 | 2.1605 | 2.2935 | 0.0313 | 0.4858 | 9.4246 |
| W5 | -3.5819 | 2.4185 | -1.4810 | 0.1522 | -8.5850 | 1.4213 |
| W6 | 3.2973 | 3.4376 | 0.9592 | 0.3474 | -3.8139 | 10.4085 |
| W7 | 6.8268 | 3.9230 | 1.7402 | 0.0952 | -1.2885 | 14.9422 |
| W8 | 1.4226 | 64.0508 | 0.0222 | 0.9825 | -131.0766 | 133.9219 |
| W9 | -27.5954 | 87.0588 | -0.3170 | 0.7541 | -207.6903 | 152.4995 |

Note that W8 and W9 have very small eigenvalues and very small t-statistics. Their condition indices are 63.5 and 85.2, both well above 10.
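Why the W regression reproduces the X* fit exactly, and why W8 and W9 get such large standard errors, can be sketched on synthetic data. W = X*V is an invertible rotation of X*, so the fitted values (and hence R², the ANOVA, and s) are identical, with γ = V'β. Because W'W = L is diagonal and W is centered, SE(γ_j) = s/√λ_j. This is consistent with the reported SE for W1, since 1.8904/√3.6304 ≈ 0.992. The simulated data and the helper `fit` are illustrative assumptions.

```python
import numpy as np

# Synthetic data (hypothetical): the last predictor nearly duplicates the third.
rng = np.random.default_rng(3)
n, p = 33, 4
X = rng.normal(size=(n, p))
X[:, 3] = X[:, 2] + 0.05 * rng.normal(size=n)
y = 160.0 + X @ np.array([3.0, 1.0, 2.0, 0.5]) + rng.normal(scale=2.0, size=n)

# Unit-length scaling, eigen-decomposition (largest eigenvalue first), and W = X* V.
Xc = X - X.mean(axis=0)
X_star = Xc / np.sqrt((Xc ** 2).sum(axis=0))
lam, V = np.linalg.eigh(X_star.T @ X_star)
lam, V = lam[::-1], V[:, ::-1]
W = X_star @ V


def fit(design: np.ndarray):
    """OLS of y on [1 | design]; returns coefficients, fitted values, residual mean square."""
    A = np.column_stack([np.ones(n), design])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    fitted = A @ coef
    s2 = np.sum((y - fitted) ** 2) / (n - A.shape[1])
    return coef, fitted, s2


beta, fit_x, s2_x = fit(X_star)
gamma, fit_w, s2_w = fit(W)

# Because W'W = L is diagonal and W is centered, SE(gamma_j) = s / sqrt(lambda_j).
se_gamma = np.sqrt(s2_w / lam)
print("gamma:", np.round(gamma[1:], 3))
print("SE:   ", np.round(se_gamma, 3))
```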

Reduced Model

• Remove the last 2 principal components because of their small, insignificant t-statistics and high condition indices.

• Let V(g) be the p × g matrix of the eigenvectors for the g retained principal components (p = 9, g = 7).

• Let W(g) = X*V(g).

• Then regress Y on [1|W(g)].

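V(g)

| | v1 | v2 | v3 | v4 | v5 | v6 | v7 |
|---|---|---|---|---|---|---|---|
| X1 | 0.1853 | 0.1523 | 0.8017 | 0.2782 | -0.3707 | -0.2327 | 0.1754 |
| X2 | 0.4413 | -0.2348 | -0.0986 | -0.2312 | -0.2551 | -0.3191 | -0.3973 |
| X3 | 0.3934 | 0.3336 | -0.1642 | 0.2336 | 0.1239 | -0.3183 | -0.4953 |
| X4 | 0.4182 | -0.0813 | 0.0284 | -0.2063 | 0.5765 | -0.3703 | 0.5529 |
| X5 | 0.4125 | -0.3000 | -0.0121 | 0.3508 | 0.0559 | 0.4669 | 0.0250 |
| X6 | 0.4645 | 0.1011 | -0.2518 | 0.1658 | -0.2697 | 0.3798 | 0.2786 |
| X7 | 0.2141 | 0.3577 | 0.3790 | -0.5862 | 0.2139 | 0.4811 | -0.2484 |
| X8 | -0.0852 | 0.5467 | -0.0498 | 0.4536 | 0.3674 | 0.0367 | -0.0418 |
| X9 | 0.0474 | 0.5261 | -0.3320 | -0.2685 | -0.4396 | -0.1027 | 0.3445 |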
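The reduced model can be sketched the same way: keep the first g columns of V, form W(g) = X*V(g), and regress Y on [1|W(g)]. Because the W columns are centered and mutually orthogonal, dropping components leaves the retained γ estimates unchanged; only s² and the standard errors move. The reduced fit that follows shows this too (for example, 12.1269 versus 12.1268 for W1, a rounding difference). The sizes, seed, and helper `ols` below are illustrative assumptions on synthetic data.

```python
import numpy as np

# Synthetic data (hypothetical): 5 predictors, the last nearly a sum of the first two.
rng = np.random.default_rng(4)
n, p, g = 33, 5, 3                       # keep g of the p principal components
X = rng.normal(size=(n, p))
X[:, 4] = X[:, 0] + X[:, 1] + 0.01 * rng.normal(size=n)
y = 160.0 + X[:, :4] @ np.array([2.0, 1.0, 0.5, 1.5]) + rng.normal(size=n)

# Unit-length scaling and eigen-decomposition, largest eigenvalue first.
Xc = X - X.mean(axis=0)
X_star = Xc / np.sqrt((Xc ** 2).sum(axis=0))
lam, V = np.linalg.eigh(X_star.T @ X_star)
lam, V = lam[::-1], V[:, ::-1]


def ols(design: np.ndarray) -> np.ndarray:
    """OLS coefficients for y on [1 | design]."""
    A = np.column_stack([np.ones(n), design])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


gamma_full = ols(X_star @ V)   # all p components
V_g = V[:, :g]                 # V(g): eigenvectors of the retained components
W_g = X_star @ V_g             # W(g) = X* V(g)
gamma_g = ols(W_g)             # reduced model: Y on [1 | W(g)]
beta_g = V_g @ gamma_g[1:]     # back on the X* scale

print("full gamma:   ", np.round(gamma_full, 4))
print("reduced gamma:", np.round(gamma_g, 4))
print("beta(g):      ", np.round(beta_g, 4))
```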

Reduced Regression Fit


Regression Statistics

| Statistic | Value |
|---|---|
| Multiple R | 0.944575 |
| R Square | 0.892223 |
| Adjusted R Square | 0.862045 |
| Standard Error | 1.817195 |
| Observations | 33 |

ANOVA

| Source | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 7 | 683.4215 | 97.6316 | 29.5657 | 0.0000 |
| Residual | 25 | 82.5549 | 3.3022 | | |
| Total | 32 | 765.9764 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 164.5636 | 0.3163 | 520.2229 | 0.0000 | 163.9121 | 165.2151 |
| W1 | 12.1268 | 0.9537 | 12.7151 | 0.0000 | 10.1625 | 14.0910 |
| W2 | 4.5224 | 1.1627 | 3.8895 | 0.0007 | 2.1277 | 6.9170 |
| W3 | 7.6160 | 1.8042 | 4.2213 | 0.0003 | 3.9002 | 11.3317 |
| W4 | 4.9551 | 2.0768 | 2.3859 | 0.0249 | 0.6777 | 9.2324 |
| W5 | -3.5819 | 2.3249 | -1.5407 | 0.1360 | -8.3701 | 1.2063 |
| W6 | 3.2972 | 3.3044 | 0.9978 | 0.3279 | -3.5084 | 10.1028 |
| W7 | 6.8268 | 3.7711 | 1.8103 | 0.0823 | -0.9398 | 14.5934 |

Transforming Back to X-scale

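$$\hat{\boldsymbol{\beta}}^{(g)} = V^{(g)} \hat{\boldsymbol{\gamma}}^{(g)}, \qquad s^2\{\hat{\boldsymbol{\beta}}^{(g)}\} = s^2 \, V^{(g)} \left(L^{(g)}\right)^{-1} V^{(g)\prime}$$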

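s² = 3.3022

| Component | γ̂(g) |
|---|---|
| W1 | 12.1268 |
| W2 | 4.5224 |
| W3 | 7.6160 |
| W4 | 4.9551 |
| W5 | -3.5819 |
| W6 | 3.2972 |
| W7 | 6.8268 |

| Predictor | β̂(g) | StdErr |
|---|---|---|
| X1* | 12.1779 | 2.0639 |
| X2* | -0.4583 | 2.0549 |
| X3* | 1.3113 | 2.3006 |
| X4* | 4.3866 | 2.8275 |
| X5* | 6.8020 | 1.7926 |
| X6* | 9.1146 | 1.8993 |
| X7* | 3.3197 | 2.4118 |
| X8* | 1.8268 | 1.4407 |
| X9* | 2.6829 | 1.9731 |

V{β̂(g)}

| | X1* | X2* | X3* | X4* | X5* | X6* | X7* | X8* | X9* |
|---|---|---|---|---|---|---|---|---|---|
| X1* | 4.2598 | -0.1779 | -0.6883 | 1.0454 | -0.8386 | -0.0887 | -1.8757 | -0.4214 | 0.9289 |
| X2* | -0.1779 | 4.2228 | 3.6089 | -2.2379 | -1.9307 | -2.4561 | -0.1330 | -1.0423 | -0.7562 |
| X3* | -0.6883 | 3.6089 | 5.2928 | -2.3318 | -1.3892 | -2.9496 | -0.3347 | 1.1128 | -2.2031 |
| X4* | 1.0454 | -2.2379 | -2.3318 | 7.9948 | -1.6401 | -0.1911 | -2.6329 | 0.1667 | 1.9223 |
| X5* | -0.8386 | -1.9307 | -1.3892 | -1.6401 | 3.2135 | 2.3480 | 1.4626 | 0.7180 | -1.1223 |
| X6* | -0.0887 | -2.4561 | -2.9496 | -0.1911 | 2.3480 | 3.6074 | 0.1090 | -0.1452 | 1.7520 |
| X7* | -1.8757 | -0.1330 | -0.3347 | -2.6329 | 1.4626 | 0.1090 | 5.8170 | -0.1949 | -1.7317 |
| X8* | -0.4214 | -1.0423 | 1.1128 | 0.1667 | 0.7180 | -0.1452 | -0.1949 | 2.0755 | -1.2055 |
| X9* | 0.9289 | -0.7562 | -2.2031 | 1.9223 | -1.1223 | 1.7520 | -1.7317 | -1.2055 | 3.8931 |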
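The back-transformation can be checked numerically from the reported inputs alone. This NumPy sketch plugs V(g) (the first seven columns of V), the retained eigenvalues, γ̂(g), and s² = 3.3022 into the two formulas for β̂(g) and its variance-covariance matrix. The results should reproduce the β̂(g) and StdErr columns above up to the rounding of the inputs; the 0.01 tolerance used to check this is an assumption.

```python
import numpy as np

# Reported inputs: V(g) = first g = 7 columns of V, retained eigenvalues, gamma-hat(g), s^2.
V_g = np.array([
    [ 0.1853,  0.1523,  0.8017,  0.2782, -0.3707, -0.2327,  0.1754],
    [ 0.4413, -0.2348, -0.0986, -0.2312, -0.2551, -0.3191, -0.3973],
    [ 0.3934,  0.3336, -0.1642,  0.2336,  0.1239, -0.3183, -0.4953],
    [ 0.4182, -0.0813,  0.0284, -0.2063,  0.5765, -0.3703,  0.5529],
    [ 0.4125, -0.3000, -0.0121,  0.3508,  0.0559,  0.4669,  0.0250],
    [ 0.4645,  0.1011, -0.2518,  0.1658, -0.2697,  0.3798,  0.2786],
    [ 0.2141,  0.3577,  0.3790, -0.5862,  0.2139,  0.4811, -0.2484],
    [-0.0852,  0.5467, -0.0498,  0.4536,  0.3674,  0.0367, -0.0418],
    [ 0.0474,  0.5261, -0.3320, -0.2685, -0.4396, -0.1027,  0.3445],
])
lam_g = np.array([3.6304, 2.4427, 1.0145, 0.7656, 0.6109, 0.3024, 0.2322])
gamma_g = np.array([12.1268, 4.5224, 7.6160, 4.9551, -3.5819, 3.2972, 6.8268])
s2 = 3.3022

beta_g = V_g @ gamma_g                                   # beta-hat(g) = V(g) gamma-hat(g)
cov_beta = s2 * V_g @ np.diag(1.0 / lam_g) @ V_g.T       # s^2 V(g) L(g)^{-1} V(g)'
se_beta = np.sqrt(np.diag(cov_beta))

for j, (b, se) in enumerate(zip(beta_g, se_beta), start=1):
    print(f"X{j}*: beta = {b:8.4f}, SE = {se:.4f}")
```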

Comparison of Coefficients and SEs

| Term | Original Model: Coefficient | Original Model: Standard Error | Principal Components: β̂(g) | Principal Components: StdErr |
|---|---|---|---|---|
| Intercept | 164.5636 | 0.3291 | | |
| X1* | 11.8900 | 2.3307 | 12.1779 | 2.0639 |
| X2* | 4.2752 | 39.4941 | -0.4583 | 2.0549 |
| X3* | -3.2845 | 35.5676 | 1.3113 | 2.3006 |
| X4* | 4.2764 | 2.9629 | 4.3866 | 2.8275 |
| X5* | -9.8372 | 54.0398 | 6.8020 | 1.7926 |
| X6* | 25.5626 | 53.5337 | 9.1146 | 1.8993 |
| X7* | 3.3805 | 2.5166 | 3.3197 | 2.4118 |
| X8* | 6.3735 | 38.6215 | 1.8268 | 1.4407 |
| X9* | -9.6391 | 40.0289 | 2.6829 | 1.9731 |