Slide 5 - Department of Electrical Engineering & Computer Science

advertisement

COSC 6114

Prof. Andy Mirzaian

TOPICS

General Overview

Introduction

Fundamentals

Duality

Major Algorithms

Open Problems

2D Linear Programming

O(n log n) time by computation of feasible region

O(n) time Randomized Incremental Method

O(n) time Deterministic Prune-&-Search Method

Smallest Enclosing Disk

References:

•

•

•

•

•

[M. de Berge et al] chapter 4

[CLRS] chapter 29

[Edelsbrunner ‘87] chapter 10

Lecture Notes 4, 7-13

Reference books on “Optimization” listed on the course web-site

General Overview

The LP Problem

maximize c1 x1 c 2 x 2 c d x d

subject to:

a 11 x1 a 12 x 2 a 1 d x d b1

a 21 x1 a 22 x 2 a 2 d x d b 2

a n 1 x1 a n 2 x 2 a nd x d b n

Applications

•

The most widely used Mathematical Optimization Model.

•

Management science (Operations Research).

•

Engineering, technology, industry, commerce, economics.

•

Efficient resource allocation:

– Airline transportation,

– Communication network – opt. transmission routing,

– Factory inventory/production control,

– Fund management, stock portfolio optimization.

•

Approximation of hard optimization problems.

•

...

Example in 2D

max

x1 = 46/7

x2 = 59/7

x2

x1 + 8x2

subject to:

(1)

(2)

(3)

(4)

(5)

x1

x2

–3x1 + 4x2

4x1 – 3x2

x1 + x2

optimum

basic

constraints

(3)

3

2

14

25

15

(5)

(1)

Feasible

Region

(4)

(2)

x1

Example in 3D

maximize

z

z

Optimum

(x,y,z)=(0,0,3)

subject to:

x y z 3

y2

y

x0

y0

z0

x

History of LP

3000-200 BC: Egypt, Babylon, India, China, Greece: [geometry & algebra]

Egypt: polyhedra & pyramids.

India: Sulabha suutrah (Easy Solution Procedures) [2 equations, 2 unknowns]

China: Jiuzhang suanshu (9 Chapters on the Mathematical Art)

[Precursor of Gauss-Jordan elimination method on linear equations]

Greece: Pythagoras, Euclid, Archimedes, …

825 AD: Persia: Muhammad ibn-Musa Alkhawrazmi (author of 2 influential books):

“Al-Maqhaleh fi Hisab al-jabr w’almoqhabeleh” (An essay on Algebra and equations)

“Kitab al-Jam’a wal-Tafreeq bil Hisab al-Hindi” (Book on Hindu Arithmetic).

originated the words algebra & algorithm for solution procedures of algebraic systems.

Fourier [1826], Motzkin [1933]

[Fourier-Motzkin elimination method on linear inequalities]

Minkowski [1896], Farkas [1902], De la Vallée Poussin [1910], von Neumann [1930’s],

Kantorovich [1939], Gale [1960] [LP duality theory & precursor of Simplex]

George Dantzig [1947]: Simplex algorithm.

Exponential time in the worst case, but effective in practice.

Leonid Khachiyan [1979]: Ellipsoid algorithm.

The first weakly polynomial-time LP algorithm: poly(n,d,L).

Narendra Karmarkar [1984]: Interior Point Method.

Also weakly polynomial-time. IPM variations are very well studied.

Megiddo-Dyer [1984]: Prune-&-Search method.

O(n) time if the dimension is a fixed constant. Super-exponential on dimension.

LP in matrix form:

Canonical Form:

maximize

subject to:

cT

x

Ax b

x ( x1 , x 2 , , x d )

T

c

T

( c1 , c 2 , , c d )

A ( a ij )

i 1 .. n

j 1 .. d

b ( b1 , b 2 , , b n )

T

We assume all vectors are column vectors.

Transpose (T) if you need a row vector.

LP feasible region

a i 1 x 1 a i 2 x 2 a id x d b i

a i 1 x 1 a i 2 x 2 a id x d b i

hyper -plane in

half -space in

d

F = { xd | Ax b }

d

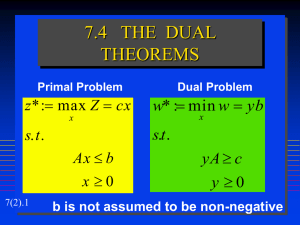

Primal Dual LPs:

Primal:

maximize cT x

subject to: Ax b

xT

(canonical form)

n

Dual:

minimize yT b

subject to: yT A = cT

y0

(standard form)

y

A

cT

d

b

Primal-Dual LP Correspondence

PRIMAL

DUAL

max cTx

min yTb

Constraint: aiT x bi

ajT x bj

akT x = bk

(aiT = i-th row of constraint matrix A)

Variable:

xi 0

xj 0

xk unrestricted

Variable:

yi 0

yj 0

yk unrestricted

Constraint: yTAi ci

yTAj cj

yTAk = ck

(Ai = i-th column of constraint matrix A)

FACT: Dual of the Dual is the Primal.

Example 1: Primal-Dual

PRIMAL:

max 16 x1 - 23 x2

+

subject to:

3 x1 + 6 x2

-9 x1 + 8 x2

5 x1 + 12 x2

- 9 x3 + 4 x4

+ 17 x3 - 14 x4

+ 21 x3 + 26 x4

x10,

DUAL:

43 x3 + 82 x4

x20,

x40

min 239 y1 + 582 y2 - 364 y3

subject to:

3 y1

6 y1

-9 y1

4 y1

- 9 y2

+ 8 y2

+ 17 y2

- 14 y2

y10,

+ 5 y3

+ 12 y3

+ 21 y3

+ 26 y3

y30

16

-23

= 43

82

=

239

582

-364

Example 2: The Diet Problem

This is one of the earliest Primal-Dual LP formulations. Dantzig attributes it to:

[G. J. Stigler, “The cost of subsistence,” J. Farm Econ. 27, no. 2, May 1945, 303-314].

Primal: A homemaker has n choices of food to buy,

and each food has some of each of m nutrients:

Given:

aij = amount of the ith nutrient in a unit of the jth food, i=1..m, j=1..n,

ri = yearly minimum requirement of the ith nutrient, i=1..m,

cj = cost per unit of the jth food, j=1..n.

Determine: xj = yearly consumption of the jth food, j=1..n.

Objective: Minimize total food purchasing cost cTx for the homemaker.

Constraints: Meet yearly nutritional requirement (Ax r)

with nonnegative amount of each food (x 0).

Primal:

min cTx subject to: Ax r , x 0.

Example 2: The Diet Problem

Primal: min cTx subject to:

Ax r , x 0.

max rTy subject to:

ATy c , y 0.

Dual:

Dual interpretation:

A pill-maker wishes to market pills containing each of the m nutrients,

yi = the price per unit of nutrient i, i=1..m.

He wishes to be competitive with the price of real food, and

maximize his profit from the sale of pills for an adequate diet.

m

The dual constraints

a

ij

yi c j

j 1..n

i1

express the fact that the cost in pill form of all the nutrients in the jth food

is no greater than the cost of the jth food itself.

The objective function rTy is simply the pill maker’s profit for an adequate diet.

Example 3: Network Max Flow

Flow Network: N=(V,E,s,t,c)

V = vertex set

E = directed edge set

s = source vertex

t = terminal vertex

c: E + edge capacities

find max flow f: E

see also

AAW

+ satisfying flow conservation & capacity constraints

Assume flow is 0 on non-edges.

maximize v

subject to:

Maximize Net flow out of source

Sy fxy - Sy fyx =

fe ce

fe 0

v

for x=s

-v

for x=t

0

for xV - {s,t}

for e E

for e E

Flow Conservation Law

Flow Capacity Constraints

Flow Non-negativity Constraints

Example 3: Network Max Flow

A: vertex-edge incidence matrix

Rows indexed by vertices V, columns by edges E.

For edge e=(i,j)E : Aie = +1, Aje = -1, Ake = 0 kV-{i,j}.

e

j

0

i

e

+1

0

A=

j

-1

0

i

fe

ce

Example 3: Network Max Flow

Primal : (Max s-t Flow) :

maximize

s.t. :

j

v

v

Af - v

0

e

row s

row t

(flow concervati o n)

fe

ce

i

other rows

0 f c

(capacity constraint s)

Dual : (Min s-t Cut) :

minimize

c eσ e

j

e E

s.t. :

π i - π j σ ij 0 ( i , j) E

- πs πt

1

σ

0

e

i

e

ce

LP: Fundamental Facts

LP optimal solution set

Feasible region F = { xd | Ax b } is the intersection of n half-spaces.

F is a convex polyhedron (possibly empty or unbounded).

Convexity: local optimality global optimality.

A supporting hyper-plane is one that does not intersect the interior of F.

A face of F is the intersection of F with some supporting hyper-plane.

We consider F itself as a (full dimensional) face of F. is also a face of F.

Vertex 0 dimensional face. Edge (bounded) 1 dimensional face.

optimum objective value z* = max { cTx : xF}

supporting hyper-plane cTx = z*

optimum face

F* = { x : cTx = z* , xF}

(may not exist or be unbounded)

F* interior(F) =

F is pointed if it doesn’t contain any line. Hence, F= or has a vertex (i.e., rank(A)=d).

F is pointed every face of F is pointed.

F* & pointed F* contains a vertex of F (an optimal vertex).

[In that case, we can focus on searching for an optimal vertex.]

For further details see the upcoming slides.

Separation Lemma

The Hahn-Banach Separation Theorem:

Consider any non-empty closed convex set P and a point b in n .

bP there is a hyper-plane that separates b from P, i.e.,

hyper-plane yTx = for some yn and ,

such that yTb < and yTz zP.

Proof:

Consider the closest point pP to point b.

Take the hyper-plane through p and orthogonal to segment bp.

b

p

P

Extreme Points

FACT:

A non-empty closed convex set P has an extreme point

if and only if

P does not contain any line.

Proof (): Suppose P contains a line L.

We must prove that no point xP is an extreme point of P.

Let L’ be the line parallel to L that passes through x.

CLAIM: P contains L’.

This claim implies x is not an extreme point of P. (Why?)

Refutation of the Claim leads to a contradiction as follows:

Suppose there is a point b L’ – P.

By the Separation Lemma, there is a hyperplane H that separates b from P.

H intersects L’ (at some point between x and b).

H must also intersect line L (which is parallel to L’).

That would contradict the fact that H separates b from P L.

b

x

This contradiction proves the Claim.

L’

L

H

Extreme Points

FACT:

A non-empty closed convex set P has an extreme point

if and only if

P does not contain any line.

Proof (): Suppose P does not contain any line.

We apply induction on the dimension.

If P is a single point, then it is an extreme point.

Now suppose dim(P) > 0.

Since P does not contain any line,

P is a proper subset of aff(P) (affine hull of P).

So, there is a point b aff(P) – P.

By the Separation Lemma,

there is a hyperplane H separating b from P in aff(P).

Move H parallelwise toward P until H is tangent to P.

Let f = HP.

dim(f) dim(H) < dim(P).

f is a non-empty closed convex set that contains no line.

So, by the induction hypothesis, f must have an extreme point x.

x is an extreme point of P also. (Why?)

H

f

P

Extreme Points

The Krein-Milman Theorem:

A closed bounded convex set is equal to the convex hull of its extreme points.

Proof:

By convexity & closedness, the given set P contains the convex hull of its extreme points.

The converse, i.e., every point xP can be represented as a convex combination of

extreme points of P, is proved by induction on the dimension.

If x is an extreme point of P, we are done. Otherwise, draw a line L through x.

Let yz be the maximal segment of L in P.

x is a convex combination of the boundary points y and z (as shown).

By induction, y & z can be represented as convex combinations of extreme points of the

lower dimensional closed bounded convex sets PH1 and PH2, respectively.

Hence, x is a convex combination of extreme points of P (details for exercise).

H2

P

H1

x

y

L

z

Farkas Lemma

Farkas Lemma:

Let And, bn. Then exactly one of the following must hold:

(1) xd :

Ax b.

(2) yn :

yTA = 0, yTb < 0, y0.

Proof:

[(1) & (2) cannot both hold] Else: 0 = 0x = (yTA)x = yT(Ax) yTb < 0.

[ (1) (2)]: P = { zn | z=Ax+s, xd , sn , s0 } is convex & non-empty(0P).

(1)

bP

y,: yTb < yTz zP

by Separation Lemma

y,: yTb < yT(Ax+s) s 0,x

y,: yTb < (yTA)x+ yTs s 0,x

y,: yTb < yTs s 0, & yTA = 0

if yTA0, take x=(-1- yTs) ATy ||yTA||-2

y,: yTb < yTs s 0, & yTA = 0, & y 0

if yi <0, take si= - (1+||)/yi & sj=0 for ji

y,: yTb < 0 yTs s 0, & yTA = 0, & y 0

if >0, take s = (/2)y ||y||-2

y: yTb < 0, yTA = 0, y 0

(2)

Farkas Lemma

Farkas Lemma (alternative versions): Let And, bn.

1)

xd : Ax b

yn : yTA = 0, yTb < 0, y0.

2)

xd : Ax b, x 0

yn : yTA 0, yTb < 0, y0.

3)

xd : Ax = b

yn : yTA = 0, yTb < 0.

4)

xd : Ax = b, x 0

yn : yTA 0, yTb < 0.

Convex Cones, Sets & Polyhedra

Minkowski sum (of vector sets): P+Q = {x+y | xP, yQ}

Convex Cone: C ( d), 0 C, C + C C, and 0: C C.

Finitely Generated Convex Cones and Convex Sets:

Let V = {v1, … ,vm} and R = {r1, … ,rk}

CH(V) = {1v1+2v2+ … +mvm | Si i=1,i0 }

cone(R) = {m1r1+m2r2+ … +mkrk | mi0 }

cone(R)={0} if R=.

Extreme rays of the cone are shown bold.

0

CH(V) + cone(R):

+

=

Recession Cone

of the polyhedron

polyhedron

Caratheodory’s Theorem

Let X be a non-empty set in d.

(a) xcone(X) & x0 if and only if

x= m1x1+m2x2+ … +mkxk for some m1, … ,mk > 0

and for some linearly independent x1, … ,xk X.

(Hence, k d.)

(b) xCH(X)

if and only if

x= m1x1+m2x2+ … +mkxk for some m1, … ,mk > 0, Si mi =1.

and for some affinely independent x1, … ,xk X.

(I.e., x2-x1, … ,xk-x1 are linearly independent. Hence, k d+1.)

x1

cone(X)

x2

x1

CH(X)

X

x

x

x2

x3

0

(a)

(b)

Caratheodory’s Theorem - Proof

(a) Consider a minimal k such that

x = m1x1+m2x2+ … +mkxk ,

mi >0, xiX, i=1..k.

(xi) are linearly dependent r1, … ,rk : at least one ri >0 &

0 = r1x1+r2x2+ … +rkxk.

So, x = (m1 – r1) x1+ (m2 – r2) x2+ … + (mk – rk) xk .

Take = min { mi / ri | ri >0}.

All coefficients remain non-negative and at least one becomes 0.

This contradicts minimality of k.

cone(Y)

Y

d+1

to cone(Y) where

Y = CH(X){1} = { (x,1) d+1 | xCH(X) }.

(b) Apply proof of (a) in

x

CH(X)

Polyhedra - Theorem

Fundamental Theorem of Polyhedra [Minkowski-Weyl]:

A subset P d is the intersection of a finite set of half-spaces:

P = { xd | Ax b }

for some A nd, b n

if and only if

P is the sum of convex hull of a finite set of points plus

conical combination of a finite set of vectors:

P = CH(V) + cone(R)

for some V dm , R dm’

= { x d | x = V + Rm, m0, 0, Si i = 1 }.

If P is pointed, columns of V are vertices of P, columns of R are extreme rays of P.

P is a polytope P is bounded R=, V = vertex set of P.

Corollary: A polytope can be viewed in 2 equivalent ways:

1) as intersection of half spaces (defined by its facets),

2) as convex hull of its vertices (cf. Krein-Milman Theorem).

Polyhedra – Example

P

x

2

2

P x

| - x 1 - x 2 0 , - x 1 1, - x 1 - 3 x 2 2

x1

1

-1

x2

- 1 λ1 3

1 λ 2 - 1

V

0 μ 1

, μ i 0, λ i 0,

1 μ 2

λ i 1

R

x2

P

v2

V = (v1 , v2)

R = ( r1 , r2)

r2

x1

r1

v1

Polyhedra

– Proof

Proof of ():

[Assume polyhedron P is pointed.]

Let Q = { x d | x = V + Rm, m0, 0, Si i = 1 } where columns vi of V are

extreme points of P, and columns ri of R are extreme rays of P.

We claim Q = P:

Q P: xQ implies x =y + z for some y=V, 0, Si i = 1, and z= Rm, m0.

So yP, since y is a convex combination of extreme points of P.

Also, z is a conic combination of extreme rays of P, so y+z P. So xP.

Q P: WLOG assume P. If xQ, then (,m) mm’ such that

v1 1 + v2 2 + …+ vm m + r1 m1 + r2 m2 +… + rm’ mm’ x

1 + 2 + …+

m

1

0, m 0.

So, by Farkas Lemma (,o)d

such that i: Tvi o 0, Tri 0, Tx o > 0.

Now consider the LP max {Ty | yP}.

For all rays ri of P, Tri 0. Hence, the LP has a bounded optimum.

So, the optimum must be achieved at an extreme point of P.

But for every extreme point vi of P we have Tx > -o Tvi.

So, Tx > -o max {Ty | yP}. (The hyperplane Ty -o separates x from P.)

Therefore, xP.

[What happens if P is not pointed? See exercise 7.]

Polyhedra

– Proof

Proof of ():

Q is the projection onto x of the polyhedron

{ (x,,m) dmm’ | x - V – Rm 0, m0, 0, Si i = 1 }.

Hence, Q is also a polyhedron

(i.e, intersection of a finite number of half-spaces).

Bases & Basic Solutions

Bases & Basic Solutions:

Basis B:

Any set of d linearly independent rows of A.

B dd and det(B) 0.

N = remaining non-basic rows of A.

xTB

B

bB

Basic Feasible Solution (BFS):

If xB is feasible, i.e., NxB bN .

N

bN

Each BFS corresponds to a vertex of the

feasible polyhedron.

A

Basic Solution:

BxB = bB xB = B-1 bB .

b

Fundamental Theorem of LP

For any instance of LP exactly one of the following three possibilities holds:

(a) Infeasible.

(b) Feasible but no bounded optimum.

(c) Bounded optimum.

[Note: Feasible polyhedron could be unbounded even if optimum is bounded.

It depends on the direction of the objective vector.]

Moreover, if A has full rank (i.e., basis), then every nonempty face of

the feasible polyhedron contains a BFS, and this implies:

(1) feasible solution BFS.

(2) optimum solution optimum solution that is a BFS.

Proof: If there is a basis, the basic cone

contains the feasible region but does not

contain any line. So the feasible region does

c

not contain any line, hence it is pointed. So every

non-empty face of it (including the optimal face,

if non-empty) is pointed, and thus contains a vertex.

(For details see exercise 4.)

LP Duality Theorem

Primal: max { cTx | Ax b }

Dual:

min { yTb | yTA = cT, y 0 }

Weak Duality: x Primal feasible & y Dual feasible cTx yTb.

Proof: cTx = (yTA)x = yT(Ax) yTb.

Corollary:

(1) Primal unbounded optimum Dual infeasible

(2) Dual unbounded optimum Primal infeasible

(3) Primal & Dual both feasible both have bounded optima.

[Note: Primal & Dual may both be infeasible. See Exercise 5.]

Strong Duality: If x & y are Primal/Dual feasible solutions, then

x & y are Primal/Dual optima cTx = yTb.

Proof: By Farkas Lemma. See Exercise 8.

Example 1

P:

max -18 x1 27 x2

subject to:

-4 x1 + 5 x2 8

x1 + x2 7

x10,

Optimum Solution for P:

x1 = 3,

x2 = 4

opt objective value =

-18*3 + 27*4 = 54

D:

min 8 y1 + 7 y2

subject to:

-4 y1 + y2 -18

5 y1 + y2 27

x2 0

y10, y2 0

Optimum Solution for D:

y1 = 5,

y2 = 2

opt objective value =

8*5 + 7*2 = 54

Example 2: The Diet Problem

Primal: min cTx subject to: Ax r , x 0.

Dual:

[Homemaker]

max rTy subject to: ATy c , y 0.

[Pill Maker]

Week Duality:

For every primal feasible x and dual feasible y:

cTx rTy.

Interpretation: the pill maker cannot beat the real food market

for an adequate diet.

Strong Duality:

min {cTx | Ax r , x 0} = max { rTy | ATy c , y 0}.

Interpretation: homemaker’s minimum cost food budget is

equal to pill maker’s maximum profit for an adequate diet.

LP Optimization Feasibility

Dual:

minimize yT b

subject to: yT A = cT

y0

Primal:

maximize cT x

subject to: Ax b

Is there (x,y) that satisfies:

bT y - cT x 0

AT y

y

Ax b

= c

0

Complementary Slackness

Primal:

maximize cT x

subject to: aTi x bi , i=1..n

xj 0 , j=1..d

Dual:

minimize yT b

subject to: ATj y cj , j=1..d

yi 0 , i=1..n

Complementary Slackness Condition (CSC):

yi (bi - aTi x ) = 0 , i=1..n

xj (ATj y - cj ) = 0 , j=1..d

FACT: Suppose x & y are Primal-Dual feasible.

Then the following statements are equivalent:

(a) x and y are Primal-Dual optimal,

(b) cTx = yTb,

(c) x & y satisfy the CSC.

Example 3: Max-Flow Min-Cut

Primal : (Max s-t Flow) :

Dual : (Min s-t Cut) :

maximize

minimize

s.t. :

v

v

Af - v

0

c eσ e

e E

row s

s.t. :

row t

π i - π j σ ij 0 ( i , j) E

- πs πt

other rows

1

σ

0 f c

0

See AAW for animation:

=1

=0

f = c , =1

C

S

(v

=0

=0

net out-flow)

f=0,=0

t

C

Min-Max Saddle-Point Property

FACT:

For all functions f : XY :

max min f (x,y)

xX

yY

min max f (x,y) .

yY

xX

Proof:

Suppose LHS = f(x1,y1) and RHS = f(x2,y2).

Then, f(x1,y1) ≤ f(x1,y2) ≤ f(x2,y2) .

x1

x2

y1

y2

Min-Max Saddle-Point Property

Function f has the Saddle-Point Property (SPP)

if it has a saddle-point, i.e., a point (x0, y0) XY such that

xX f (x , y0)

≤

f(x0 , y) yY .

f (x0 , y0) ≤

FACT: f has the SPP if and only if

max

xX

min

yY

f (x,y) =

min

yY

max f (x,y) .

xX

Duality via Lagrangian

P* = max {cT x | Ax b }

x

D* = min { yT b | ATy = c }

y0

Lagrangian: L(x,y) = cT x + yT(b – Ax) = yT b + xT(c – ATy)

Fact:

P* = max min L(x,y) ,

x

y0

D* = min max L(x,y) .

y0

x

Proof: See Exercise 9.

Weak Duality : max min L(x,y) min max L(x,y)

x

y0

y0

x

P* D* .

Strong Duality : P or D feasible Lagrangian has SPP.

max min L(x,y) = min max L(x,y)

x

y0

y0

P* = D* .

x

Dantzig – Khachiyan – Karmarkar

George Dantzig [1947]: Simplex algorithm.

Exponential time in the worst case,

but effective in practice.

Leonid Khachiyan [1979]: Ellipsoid algorithm.

The first weakly polynomial-time LP algorithm:

poly(n,d,L).

Narendra Karmarkar [1984]: Interior Point Method.

Also weakly polynomial-time.

IPM variations are very well studied.

Dantzig: Simplex Algorithm

Pivots along the edges of the feasible polyhedron,

monotonically improving the objective value.

c

Each BFS corresponds to a

vertex of the feasible polyhedron.

Simplex Pivot:

A basic row leaves the basis and

a suitable row enters the basis.

A non-degenerate pivot moves the BFS

along an edge of the feasible polyhedron

from one vertex to an adjacent one.

Pivot Rule:

Decides which row leaves & which row

enters the basis (i.e., which edge of the

feasible polyhedron to follow next).

Center of Gravity

A set of points K d is called a convex body

if it is a full dimensional, closed, bounded, convex set.

FACT: [Grünbaum 1960]

Let point c be the center of gravity of a convex body K and H be an

arbitrary hyperplane passing through point c.

Suppose H divides K into two bodies K+ and K– .

Then the volumes vol(K+) and vol(K–) satisfy:

max{

vol ( K

c

K–

) , vol ( K

-

) } 1 - 1 -

)

d

1

d 1

1 -

K+

H

)

1

vol ( K ) .

-

lim 1 - 1 d

)

)

d

1

d 1

)

d

1

d 1

)

1 - 1

e

Iterative Central Bisection

FACT: Suppose the feasible set is a convex body F which is

contained in a ball C0 of radius R and contains a ball C1 of radius r.

Suppose we start with (the center of) the larger ball and iteratively

perform central bisection until center of gravity of the remaining

convex body falls within F.

The number of such iterations is O(d log R/r).

Proof:

vol(C0) = vdRd

vol(C1) = vdrd

vd = volume of the unit ball in d .

With each iteration,

the volume drops by a constant factor.

# iterations = O( log vol(C0)/vol(C1) ).

C0

R

C1 r

F

Iterative Central Bisection

The # central bisection iterations O(d log R/r)

is polynomial in the bit-size of the LP instance.

However, each iteration involves computing the volume

or center of gravity of the resulting convex body.

This task seems to be hard even for LP instances.

We don’t know how to compute them in polynomial time.

So, we resort to approximation

1) approximate the center of gravity

2) approximate the convex body

What simple approximating “shape” to use?

Bounding Box, Ball, ellipsoid, …?

Ellipsoids

An ellipsoid is an affine transform of the Euclidean unit ball.

E

x

d

x

d

x

d

|

|

|

y

A

-1

d

: x z o Ay ,

x - zo )

1

2

y

x - z o ) AA ) x - z o ) 1

zo = center of ellipsoid E.

T

T

-1

A = a dd non-singular matrix.

y

x

E

0

y x z o Ay

zo

2

1

Ellipsoids

Example: A a 1

0

0

,

a2

0

z o

0

x1

2

E

x

2

2

x1

a

2

1

2

x2

2

a2

1

x2

a2

E

a1

x1

Ellipsoids

E

x

d

y

|

c

xopt

E

zo

d

: x z o Ay ,

x opt arg max

zo A

y

2

1

{ c x | x E }

x

T

T

A c

T

A c

vol ( E ) v d det( A )

( vd = volume of the unit ball in d .)

Löwner-John Ellipsoid

FACT 1: [K. Löwner 1934, Fritz John, 1948]

Let K be a convex body in d. Then

1. There is a unique maximum volume ellipsoid Ein(K) contained in

K.

2. K is contained in the ellipsoid that results from Ein(K) by

centrally expanding Ein(K) by a factor of d (independent of K).

3. If K is centrally symmetric, then the factor d in part (2) can be

improved to d .

Löwner-John Ellipsoid

FACT 2: [K. Löwner 1934, Fritz John, 1948]

Let K be a convex body in d. Then

1. There is a unique minimum volume ellipsoid Eout(K) that

contains K.

2. K contains the ellipsoid that results from Eout(K) by centrally

shrinking Eout(K) by a factor of d (independent of K).

3. If K is centrally symmetric, then the factor d in part (2) can be

improved to d .

Löwner-John metric approximation

Corollary: Any metric in d can be approximated by a Löwner-John

metric with distortion factor at most d .

Proof:

Let || . ||B denote an arbitrary metric norm with (centrally symmetric) unit ball B.

|| x ||B = min { | x B } for any x d .

Let ELJ = { xd | || A–1 x ||2 1 } be the min volume Löwner-John ellipsoid

that contains B.

The metric norm with ELJ as the unit ball is || x ||LJ = || A–1 x ||2 for any x d .

We have

Therefore,

E LJ

B

1 E .

LJ

d

|| x || LJ || x || B

1 E

LJ

d

d || x || LJ .

B

ELJ

Iterative Löwner-John Ellipsoidal Cut

T

T

c ( x opt - z o ) c ( x out - z o ) d c T ( x k - z o ) d c ( x opt - z o ) - d c ( x opt - x k )

T

T

c ( x opt - x k ) (1 - d1 ) c ( x opt - z 0 ) (1 - d1 ) c ( x opt - x k -1 )

T

T

T

xout

c ( x opt - x k )

T

T

c ( x opt

-1 / d

1

1e

d

- x k -1 )

xopt

xk

c ( x opt - x k )

T

c ( x opt - x 0 )

T

e

zo

-k /d

c

xk-1

Iterative Löwner-John Ellipsoidal Cut

c ( x opt - x k )

T

c ( x opt - x k )

after

T

c ( x opt - x 0 )

T

e

-k /d

k O d log

c ( x opt - x 0 )

T

iterations

# iterations is polynomial in the bit-size of the LP instance.

However, each iteration is expensive and involves computing

Löwner-John Ellipsoid of a convex polytope.

What next?

Approximate the convex body by an ellipsoid.

Khachiyan: Ellipsoid Method

Circumscribed ellipsoid volume reduction by cuttings.

Ek

Ek+1

vol ( E k 1 )

zk+1

zk

vol ( E k )

# iterations = O(d2 log R/r)

Time per iteration = O(d n)

-

e

1

2 ( d 1 )

The New York Times, November 7, 1979

Continued on next slide.

Karmarkar: Interior Point Method

Logarithmic Barrier and Central Path

maximize cTx + m i log si

subject to Ax+s=b, s > 0

m=

m=1

m=1

m=

m=0.01

Central path

IPM: Primal-Dual Central Path

(P)

(D)

max cTx

s.t. Ax+s=b, s 0

min bTy

s.t. ATy=c, y 0

Optimality Conditions on (x,s,y):

Ax+s=b, s 0

ATy=c, y 0

si yi = 0 i

Primal-Dual Central Path

Ax+s=b, s > 0

ATy=c, y > 0

si yi = m i

(Primal feasibility)

(Dual feasibility)

(Complementary Slackness)

parameterized by m > 0:

(Primal strict feasibility)

(Dual strict feasibility)

(Centrality)

IPM: Primal-Dual Central Path

Central Path:

Ax+s=b, s > 0

ATy=c, y > 0

si yi = m i

Algorithmic Framework:

Assume both (P) and (D) have strictly feasible solutions. Then, for any m > 0, the

above non-linear system of equations has a unique solution (x(m), s(m), y(m)).

These points form the Primal-Dual Central Path { (x(m), s(m), y(m)) | m (0, ) }.

lim m0 (x(m), s(m), y(m)) = (x*, s*, y*) is optimal solution of the Primal-Dual LP.

Update approximate solution to the system while iteratively reducing m towards 0.

Open Problem

Can LP be solved in strongly polynomial time?

Weakly Polynomial:

(i)

Time = poly(n,d,L), [L = bit-length of input coefficients]

(ii) Bit-length of each program variable = poly(n,d,L).

Strongly Polynomial:

(i)

Time = poly(n,d) [i.e., independent of input coefficient sizes]

(ii) Bit-length of each program variable = poly(n,d,L).

[ Steve Smale, “Mathematical Problems for the Next Century’’,

Mathematical Intelligencer, 20:7–15, 1998]

lists 18 famous open problems and

invites the scientific community to solve them in the 21st century.

Problem 9 on that list is the above open problem on LP.

Hirsch’s Conjecture [1957]

Hirsch’s Conjecture:

Diameter of any d-polyhedron with n facets satisfies

D(d,n) n – d.

That is, any pair of its vertices are connected by a path

of at most n - d edges.

Best known upper-bound [Kalai, Kleitman 1992]:

D(d,n) nlog d + 2

For a recent survey on this topic click here.

This conjecture was disproved by Francisco Santos in June 2010.

Click here to see Santos’s paper.

LP in dimension 2

2D Linear Programming

Objective function = c1 x + c2 y,

Feasible region F

cT =(c1 , c2)

c

optimum

F

y

x

2D Linear Programming

Feasible region F is the intersection of n half-planes.

F is (empty, bounded or unbounded) convex polygon with

n vertices.

F can be computed in O(n log n) time by divide-&-conquer

(See Lecture-Slide 3).

If F is empty, then LP is infeasible.

Otherwise, we can check its vertices, and its possibly up to

2 unbounded edges, to determine the optimum.

The latter step can be done by binary search in O(log n) time.

If objective changes but constraints do not, we can update the optimum

in only O(log n) time. (We don’t need to start from scratch).

Improvement Next:

Feasible region need not be computed to find the optimum vertex.

Optimum can be found in O(n) time both randomized & deterministic.

2D LP Example: Manufacturing with Molds

2D LP Example: Manufacturing with Molds

The Geometry of Casting: Is there a mold for an n-faceted 3D polytope P such that

P can be removed from the mold by translation?

Lemma: P can be removed from its mold with a single translation in direction d

d makes an angle 90 with the outward normal of all non-top facets of P.

f

d

P

f’

mold

(f ‘) = - (f)

Corollary: Many small translations possible Single translation possible.

2D LP Example: Manufacturing with Molds

The Geometry of Casting: Is there a mold for an n-faceted 3D polytope P such that

P can be removed from the mold by translation?

z

d=(x,y,1)

f

d

z=1

y

P

f’

mold

(f ‘) = - (f)

x

(f) = ( x(f) , y(f) , z(f) ) outward normal to facet f of P.

dT.(f) ≤ 0 non-top facet f of P

x(f) . x + y(f) . y + z(f) ≤ 0 f

n-1 constraints

THEOREM: The mold casting problem can be solved in O(n log n) time.

(This will be improved to O(n) time on the next slides.)

Randomized Incremental

Algorithm

Randomization

Random(k): Returns an integer i 1..k, each with equal probability 1/k.

[Use a random number generator.]

Algorithm RandomPermute (A)

Input:

O(n) time

Array A[1..n]

Output: A random permutation of A[1..n] with each

of n! possible permutations equally likely.

for k n downto 2 do Swap A[k] with A[Random(k)]

end.

This is a basic “initial” part of many randomized incremental algorithms.

2D LP: Incremental Algorithm

Method: Add constraints one-by-one, while maintaining the current optimum vertex.

Possible Outcomes:

H(i)

c

v

r

e

H(j)

H(k)

Infeasible

Unbounded

Non-unique optimum

Unique optimum

Input: (H, c), H = { H(1), H(2), … , H(n)} n half-planes, c = objective vector

Output: Infeasible: (i,j,k), or

Unbounded: r, or

Optimum: v = argmaxx { cTx | x H(1)H(2)…H(n) }.

Define: C(i) = H(1)H(2)…H(i) , for i = 1..n

v(i) = optimum vertex of C(i) , for i=2..n.

Note: C(1) C(2) … C(n).

cTv(i) = max { cTx | x C(i) }

2D LP: Incremental Algorithm

LEMMA: (1) v(i-1) H(i) v(i-1) C(i) v(i) v(i-1).

(2) v(i-1) H(i)

(2a) C(i) = , or

(2b) v(i) L(i) C(i-1), L(i) =bounding-line of H(i).

c

H(i)

C(i-1)

v(i)=v(i-1)

v(i-1)

v(i-1)

L(i)

L(i)

H(i)

H(i)

C(i-1)

C(i-1)

v(i)

H(k)

H(j)

(1)

(2a)

(2b)

2D LP: Incremental Algorithm

Algorithm PreProcess (H,c)

1.

2.

3.

4.

O(n) time

min { angle between c and outward normal of H(i) | i=1..n }

H(i) = most restrictive constraint with angle

Swap H(i) with H(1) (L(1) is bounding-line of H(1)).

If (parallel) H(j) with angle - and H(1)H(j)= then return “infeasible”.

If L(1) H(j) is unbounded for all H(j) H, then

r most restrictive L(1) H(j) over all H(j) H

return (“unbounded”, r )

If L(1) H(j) is bounded for some H(j)H, then

Swap H(j) with H(2) and return “bounded”.

c

L(1)

L(1)

H(j)

L(1)

r

H(1)

H(j)

H(1)

H(1)

(1)

v(2)

(2)

(3)

(4)

H(2)

Randomized Incremental 2D LP Algorithm

Input:

Output:

1.

(H, c),

H = { H(1), H(2), … , H(n)} n half-planes, c = objective vector

Solution to max { cTx | x H(1)H(2)…H(n) }

if PreProcess(H,c) returns (“unbounded”, r) or “infeasible”

then return the same answer

(* else bounded or infeasible *)

2.

v(2) vertex of H(1) H(2)

3.

4.

5.

RandomPermute (H[3..n])

for i 3..n do

6.

7.

8.

9.

end.

if v(i-1) H(i) then v(i) v(i-1)

else v(i) optimum vertex p of L(i)(H(1)…H(i-1))

if p does not exist then return “infeasible”

end-for

return (“optimum”, v(n))

(* 1D LP *)

Randomized Incremental 2D LP Algorithm

THEOREM: 2D LP Randomized Incremental algorithm has the following complexity:

Space complexity = O(n)

Time Complexity: (a) O(n2) worst-case

(b) O(n) expected-case.

Proof of (a):

Line 6 is a 1D LP with i-1constraints and takes O(i) time.

n

Total time over for-loop of lines 4-8:

O (i ) O ( n

i3

2

).

Randomized Incremental 2D LP Algorithm

1

if v(i - 1) H(i)

0

otherwise

Proof of (b): Define 0/1 random variables X ( i )

for i 3 .. n .

n

T O ( n ) O ( i ) * X ( i )

Lines 5-7 take O( i*X(i) +1) time. Total time is

i3

Expected time:

linearity of

expectation

n

E[ T ] O ( n ) E[ O (i ) * X (i ) ]

O (n )

n i3

O (i ) * E[ X (i ) ]

i3

E [ X(i) ]

Backwards

Analysis

Pr [ v(i- 1) H(i) ] 2 /(i- 2 )

“Fix” C(i) = { H(1), H(2)} {H(3), …, H(i)}

Random : C(i-1) = C(i) – {H(i)}

random

v(i) is defined by 2 H(j)’s. The probability that one of them is H(i) is 2/(i-2).

This does not depend on C(i). Hence, remove the “Fix” assumption.

n

Therefore

:

E [T ]

O (n)

i3

O (i ) *

2

i - 2

O ( n ).

Randomized Incremental LP in d dimension

Randomized Incremental algorithm can be used (with minor modifications)

in d dimensions, recursively.

For example, for 3D LP line 6 becomes a 2D LP with i-1 constraints.

In general, it will be a (d-1) dimensional LP.

Define: T(n,d) = running time of algorithm for n constraints & d variables.

T ( n , d ) O ( dn )

[ T ( i - 1, d

- 1) di ]

i

This would be exponential in both n and d.

Expected time (by backwards analysis):

1

X (i )

0

if v(i - 1) H(i)

otherwise

E [ X(i) ] Pr [ v(i- 1 ) H(i) ] d/(i-d) .

n

E [ T ( n , d )] O ( dn )

E [ T ( i - 1 , d - 1 )] *

i d 1

E [ T ( n , d )] O ( d ! ( n - d ))

d

i-d

for n > d .

[proof on next slide]

Randomized Incremental LP in d dimension

n

T(n,d) cdn

id 1

Solution :

d

i-d

T(i - 1 ,d - 1 )

for n

> d

d -1

T(n, d) c(n - d) 3 d! Fd - d O(d! (n - d))

where

Fd

1

i!

(1)

i0

Proof :

Basis

By induction

on n :

(n d 1) :

d -1

T(d 1, d) cd(d 1) d T(d, d- 1)

Step

by iterative

expansion

G ( d -1 )

G (d)

Induction

cd!

i0

i2

3 cd! Fd - cd .

i!

(n > d 1) :

n

T(n, d) cdn

i d 1

d

i- d

c(i - d) 3(d - 1)! Fd - 1 - (d - 1)

cdn 3 cd! Fd - 1 (n - d) - cd(d - 1)(n - d)

1

cdn 3 c(n - d) d! Fd - cd(d - 1)(n - d)

(d - 1)!

cdn 3 c(n - d) d! Fd - 3 c(n - d) d - cd(d - 1)(n - d)

c(n - d) 3 d! Fd - d - cd (n - d - 1)

2

c(n - d) 3 d! Fd - d .

[For nd, T(n,d) = O(dn2), by Gaussian elimination.]

Randomized Incremental

Algorithm for

Smallest Enclosing Disk

Smallest Enclosing Disk

Input: A set P={p1, p2, … , pn } of n points in the plane.

Output: Smallest enclosing disk D of P.

Lemma: Output is unique.

Incremental Construction:

pi+1

P[1..i] = {p1, p2, … , pi }

D(i)= smallest enclosing disk of P[1..i] .

D(i+1)

Lemma: (1) pi D(i-1) D(i) = D(i-1)

pi

(2) pi D(i-1) pi lies on the boundary of D(i).

D(i)=D(i-1)

Smallest Enclosing Disk

LEMMA:

Let P and R be disjoint point sets in the plane. pP, R possibly empty.

Define MD(P, R) = minimum disk D such that P D & R D ( D = boundary of D).

(1)

(2)

(3)

If MD(P, R) exists, then it’s unique,

p MD(P-{p}, R) MD(P,R) = MD(P-{p}, R),

p MD(P-{p}, R) MD(P, R) = MD(P-{p}, R {p}).

Proof: (1) If non-unique smaller such disk:

(2) is obvious.

(3)

D(0) MD(P-{p}, R)

D(1) MD(P, R)

D() (1-) D(0) + D(1)

0 1

As goes from 0 to 1, D() continuously deforms from D(0) to D(1) s.t.

D(0) D(1) D().

p

p D(1) – D(0) by continuity smallest *, 0 < * 1 s.t.

p D(*)

D()

p D(*).

P D(*) & R D(*)

R

*=1 by uniqueness.

Therefore, p is on the boundary of D(1).

D(0)

D(1)

Smallest Enclosing Disk

Algorithm MinDisk (P[1..n])

1.

RandomPermute(P[1..n])

2.

D(2) smallest enclosing disk of P[1..2]

3.

for i 3..n do

4.

if pi D(i-1) then D(i) D(i-1)

5.

else D(i) MinDiskWithPoint (P[1..i-1] , pi)

6.

return D(n)

Procedure MinDiskWithPoint (P[1..j],q)

1.

RandomPermute(P[1..j])

2.

D(1) smallest enclosing disk of p1 and q

3.

for i 2..j do

4.

if pi D(i-1) then D(i) D(i-1)

5.

else D(i) MinDiskWith2Points (P[1..i-1] , q, pi)

6.

return D(j)

Procedure MinDiskWith2Points (P[1..j],q1,q2)

1.

D(0) smallest enclosing disk of q1 and q2

2.

for i 1..j do

3.

if pi D(i-1) then D(i) D(i-1)

4.

else D(i) Disk (q1, q2, pi)

5.

return D(j)

Smallest Enclosing Disk

THEOREM:

The smallest enclosing disk of n points in the plane can be computed in

randomized O(n) expected time and O(n) space.

Proof: Space O(n) is obvious.

MinDiskWith2Points (P,q1,q2) takes O(n) time.

MinDiskWithPoint (P,q) takes time:

n

T O ( n ) O ( i ) * X ( i )

where

E [ X ( i )] 2 / i

analysis)

i2

(by backwards

n

E[T ] O (n )

2

O (i) * i

1

X (i )

0

if p i D (i) - D ( i - 1)

otherwise

O ( n ).

i2

“Fix” P[1..i] = {p1, … , pi}

P[1..i-1] = {p1, … , pi} - {pi}

P[1..i]

backwards

one of these is pi with prob. 2/i

q

Apply this idea once more: expected running time of MinDisk is also O(n).

2D LP

Prune-&-Search

2D LP: Megiddo(83), Dyer(84): Prune-&-Search

Original LP: min { ax + by |

ai x + bi y + ci 0 , i=1..n }

STEP 1: Transform so that the objective hyper-plane becomes horizontal.

(a,b) (0,0), so WLOG b 0.

Coordinate transform:

Y = ax + by

i = ai – (a/b) bi

X=x

i = bi /b

min { Y | i X + i Y + ci 0 , i=1..n }

Y

F

feasible

region

optimum

X

2D LP: Prune-&-Search

min { Y | i X + i Y + ci 0 , i=1..n }

STEP 2: Partition the constraints (the index set [1..n])

I0 = { i | i = 0 } vertical line constraints determine feasible x-interval

I- = { i | i < 0 }

I+ = { i | i > 0 }

Y

STEP 3:

U1 X U 2

U 1 max

U 2 min

STEP 4: Define

-

i -

F

ci

i

ci

i

i

i

| i I0 , i 0

X

| i I0 , i > 0

, i -

U1

ci

i

So constraint

s in I become :

Y iX i

i I

constraint

s in I - become :

Y iX i

i I-

U2

2D LP: Prune-&-Search

STEP 5: Define

F ( X ) min ( i X i ) ,

i I

F- ( X ) max ( i X i )

i I -

F+

FSo, the transformed constraints are: F-(X) Y F+ (X).

Since we want to minimize Y, our objective function is F-(X).

STEP 6: Our new (transformed) problem is:

F- ( X )

minimize

s.t.

F- ( X ) F ( X )

U1 X U 2

F+

F

Y

FX

U1

optimum

U2

2D LP: Prune-&-Search

STEP 7: An Evaluation Stage:

7(a): Given X [U1 , U2 ] , we can determine in O(n) time:

F-(X) = max { i X + i | i I- }

F+(X) = min { i X + i | i I+ }

f- (L) (X) = left slope of F-(X) at X

f- (R) (X) = right slope of F-(X) at X

f+ (L) (X) = left slope of F+(X) at X

f+ (R) (X) = right slope of F+(X) at X

Note: if only one i I- achieves the “max” in F-(X), then

f- (L) (X) = f- (R) (X) = i . Similarly with F+(X).

X’

X

F-

2D LP: Prune-&-Search

STEP 7: An Evaluation Stage:

7(b): In O(n) time we can determine for X [U1 , U2 ], whether:

X achieves the minimum of F-(X) and is feasible (hence optimum).

X is infeasible & there is no solution to the LP

X is infeasible and we know which side (left/right) of X the feasible region F lies.

X is feasible and we know which side of X the optimum solution lies.

In the latter two cases, shrink the interval [U1 , U2 ] accordingly.

OR

F-(X)

F+(X)

X

F to

the left

F to

the right

infeasible

X

f- (L) (X) > f+ (L) (X)

X

f- (R) (X) < f+ (R) (X)

X

f- (L) (X) f+ (L) (X) & f- (R) (X) f+ (R) (X)

F = ; LP is infeasible

2D LP: Prune-&-Search

METHOD:

A series of evaluation stages .

Prune at least a fixed fraction (1-) of the constraints in each stage.

These constraints won’t play a role in determining the optimum vertex.

After the k-th stage, # constraints remaining k n.

At most log n stages.

Total time:

O

k

α

n

k0

log α n

O

1

1- α

n)

O ( n ).

2D LP: Prune-&-Search

METHOD: (continued)

= 3/4 is achievable.

Which X to select to evaluate then prune?

Pair up constraints in I+ (do the same for I- ).

Suppose i,j I+ are paired up.

Xij

Y jX j

Y iX i

U1

Xij

U1

U2

U2

Xij

U1

eliminate

neither one

U2

2D LP: Prune-&-Search

METHOD: (continued)

Next evaluation point:

X* = median of the Xij’s of the non-eliminated pairs. O(n) time.

Half of the Xij’s will be on the wrong side of X*.

We will eliminate one line from at least half of the pairs.

So, at least ¼ of the lines will be eliminated in this stage.

At most = ¾ of the lines remain.

THEOREM:

A 2-variable LP with n constraints can be solved in O(n) time

in the worst-case by Megiddo-Dyer’s Prune-&-Search method.

GENERALIZATION: Megiddo[84] showed d dim. LP can also be

solved by this technique in O(n) time assuming d is a fixed constant.

[However, the hidden constant in O(n) is super-exponential in d.]

Extensions & Applications

The Smallest Enclosing Disk:

min max

x,y

(x i - x ) (yi - y)

2

2

1 i n

z

minimize

2

2

z (x i - x ) (yi - y)

s.t.

for i 1 .. n

A Quadratic

Program.

See Exercise 16.

THEOREM [Megiddo]:

The smallest enclosing disk can be solved in O(n) time

in the worst-case by the Prune-&-Search method.

See Lecture Notes 7-13 for more on this topic.

Exercises

1.

A Non-Linear Primal Dual Example: [Rochat, Vecten, Fauguier, Pilatte, 1811]

We are given 3 points A,B,C in general position in the plane.

Primal: Consider any equilateral triangle PQR whose 3 sides

pass through A,B,C, respectively. (See Figure below.)

Let H denote the altitude of the equilateral triangle PQR.

Find PQR to maximize H.

Dual:

Consider any point S, with L as the sum of the lengths of the 3

segments L = SA + SB + SC. Find S to minimize L.

[The optimum S is called the Steiner point of ABC.]

R

Prove the following claims:

a) For any primal-dual feasible solutions H L.

b) At optimality max H = min L.

c) At optimality SA QR, SB RP, SC PQ,

where means perpendicular.

A

S

B

Q

C

P

2.

LP Primal Dual Example: Give the dual of the following LP instance:

max

5 x1 + 12 x2

subject to:

-2 x1 + 5 x2

11 x1 - 4 x2

-4 x1 + 6 x2

8 x1 - 3 x2

x10,

3.

- 6 x3 + 9 x4 + 4 x5

+ 13 x3 +

+ 5 x3 +

+ 14 x3 +

- 7 x3 +

x3 0,

6 x4 + 7 x5 =

8 x4 - 2 x5

2 x4 + 7 x5

9 x4 - 14 x5

x40,

x50

53

31

43

61

Fractional Knapsack Problem (FKP):

FKP is the LP max { vTx | wTx W, 0xi1, i=1..n }, where

vT=(v1 ,…, vn ) and wT =(w1 ,…, wn ) are vectors of item values and weights,

and W is the knapsack capacity. Assume these are all positive reals.

The real variable xi , 0xi1, is the selected fraction of the i-th item, for i=1..n.

a) Give the dual of FKP.

b) Use the Duality Theorem to show that a simple greedy algorithm

obtains optimal primal-dual solutions. [Hint: sort vi / wi , for i=1..n.]

4. Polyhedral Vertices versus Basic Solutions:

Consider the (possibly empty) polyhedron F = { xd | Ax b } for some And,

bn, d n. Assume rank(A)=d (i.e., A contains d linearly independent rows).

Show the following facts:

a) F has a basic solution (not necessarily feasible).

b) The cone defined by the constraints corresponding to a basis is pointed (i.e.,

does not contain any line).

c) F is pointed.

d) Every non-empty face of F has a vertex.

e) Every vertex of F corresponds to at least one basic feasible solution.

f) A vertex could correspond to more than one basic feasible solution.

g) Every vertex of F is the unique optimum LP solution for some

linear objective vector and with F as the feasible region.

5. Give LP Primal-Dual examples where:

a) Both the Primal and the Dual are infeasible.

b) The Primal is unbounded and the Dual is infeasible.

c) Both the Primal and the Dual have bounded optima.

6.

Farkas Lemma (Alternative versions):

Prove the remaining 3 versions of Farkas Lemma.

7.

Fundamental Theorem of Polyhedra [Minkowski-Weyl] when P is not pointed:

a) Example 1: Consider the non-pointed polyhedron P={(x1, x2)T2 | x11, -x11}.

Show that P={ x 2 | x = V + Rm, m0, 0, Si i = 1 }, where V consists of the

2 columns v1=(1,0)T, v2=(-1,0)T, and R consists of the 2 columns r1=(0,1)T,

r2=(0,-1)T. [Note that v1 and v2 are not extreme points of P.]

b) Example 2: Show the alternative representation (according to the Theorem)

for the non-pointed polyhedron P = {(x1, x2, x3)T3 | x1+x2 -x31, -x1+x2 -x31}.

c) Prove the () part of the Theorem when P is not pointed.

[Our referenced book [Ziegler, “Lectures on Polytopes”] contains a proof using

the Fourier-Motzkin elimination method.]

8.

Strong Duality Theorem:

Prove the Strong Duality Theorem using Farkas Lemma.

[Hint: = min{yTb : yTA=c, y0} implies the system {yTb – < 0, yTA - c = 0, y0}

has no solution in y. Reformulate this as F.L.(2) using an extra variable y0.

What is F.L.(1)?]

9.

LP Duality via Lagrangian: Consider the primal-dual pair of linear programs

(Primal) P* = max { cTx | Ax < b }

(Dual)

D* = min { bTy | ATy = c and y > 0 }

and their Lagrangian

L(x,y) = cTx + yT (b – Ax) = bTy + xT (c – ATy) .

Define the functions

p(x) = miny>0 L(x,y) and

d(y) = maxx L(x,y).

That is, for any x, p(x) is obtained by a y > 0 that minimizes the Lagrangian (for the

given x). Similarly, for any y > 0, d(y) is obtained by an x that maximizes the

Lagrangian (for the given y).

c x

p(x)

-

T

a) Show that

b y

d ( y)

T

b) Show that P* = maxx p(x)

D* = miny>0 d(y)

if Ax b

otherwise

,

if A y c

.

otherwise

T

= maxx miny>0 L(x,y) and

= miny>0 maxx L(x,y).

c) Show that if at least one of the Primal or the Dual LP has a feasible point, then

the Lagrangian has the Saddle Point Property, i.e.,

maxx miny>0 L(x,y) = miny>0 maxx L(x,y).

[Can you prove this without assuming the LP Strong Duality Theorem?]

10. In the restricted version of the mold casting problem we insist that the object is

removed from its mold using a vertical translation (perpendicular to the top facet).

(a) Prove that in this case only a constant number of top facets are possible.

(b) Give a linear time algorithm that determines all such possible top facets.

11. Let H be a set of at least 3 half-planes. A half-plane hH is called redundant if its

removal does not change H (the intersection of half-planes in H).

Prove that h is redundant if and only if h’h’’ h, for some h’,h’’H – {h}.

12. Prove that RandomPermute(A[1..n]) is correct, i.e., each of the n! permutations

of A can be the output with equal probability 1/n!.

13. Show how to implement MinDisk using a single routine MinDiskWithPoints(P,R)

that computes MD(P,R) as defined before. Your algorithm should compute only a

single random permutation during the entire computation.

14. Show the “bridge finding” problem in Kirkpatrick-Seidel’s O(n log h) time 2D CH

algorithm can be solved in O(n) time by Megiddo-Dyer’s technique.

15. Minimum Area Annulus:

An annulus is the portion of the plane contained between two concentric circles.

Let a set P of n points in the plane be given.

Give an efficient algorithm to find the minimum area annulus that contains P.

[Hint: This can be solved by an LP. We want to find a center (x,y) and two radii R and r

to minimize R2 – r2 subject to the 2n constraints r2 (x-xi)2 + (y – yi)2 R2 .

Now instead of the variables x,y,r,R, use the variables x, y, w x2 +y2 - R2 and z x2 +y2 - r2.

Also ensure that the LP solution satisfies x2 +y2 = w + R2 = z + r2 max{w,z}. How?]

16. Minimum Enclosing Disk in 2D:

Show this problem can be solved in O(n) time by prune-&-search.

[Hint: First show the restricted problem where the disk center lies on a given line can be solved

in O(n) time. Then show it can be decided in O(n) time on which side of this line the center of

the (unrestricted) minimum enclosing disk lies. Then apply prune-&-search to this scheme.]

17. Maximum Inscribed Sphere:

Let P d be a convex polytope, given as intersection of n half-spaces.

Give a formulation of the problem to determine the largest sphere inscribed in P.

You don’t need to solve the problem; just give a good formulation of it.

[The center of this sphere is called the Chebyshev center of the polytope.]

END