
Linear Programming and Approximation

Seminar in Approximation Algorithms

Linear Programming

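Linear objective function and linear constraints:
$\min\{c^T x : Ax \ge b\}$

Canonical form:
$\min\{c^T x : Ax \ge b,\ x \ge 0\}$

Standard form:
$\min\{c^T x : Ax = b,\ x \ge 0\}$

Conversions between the forms:
$\sum_{j=1}^n a_j x_j \ge b \iff \sum_{j=1}^n a_j x_j - s = b,\ s \ge 0$
$\sum_{j=1}^n a_j x_j = b \iff \sum_{j=1}^n a_j x_j \ge b$ and $\sum_{j=1}^n a_j x_j \le b$
Unbounded $x_j$: $x_j = x_j^+ - x_j^-$, with $x_j^+, x_j^- \ge 0$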
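Here is a minimal Python sketch of the two mechanical conversions above. It assumes the LP is given as plain lists, with `A` as a list of rows. The helper names `canonical_to_standard` and `split_free_variable` are hypothetical and exist only for this illustration.

```python
def canonical_to_standard(A, b, c):
    """min{c^T x : Ax >= b, x >= 0}  ->  min{c'^T x' : A'x' = b, x' >= 0}.

    Appends one surplus variable s_i >= 0 per row, so that row i becomes
    sum_j a_ij x_j - s_i = b_i. Surplus variables cost nothing.
    """
    m = len(A)
    A_std = [list(row) + [-1 if k == i else 0 for k in range(m)]
             for i, row in enumerate(A)]
    c_std = list(c) + [0] * m
    return A_std, list(b), c_std


def split_free_variable(A, c, j):
    """Replace an unbounded x_j by x_j^+ - x_j^- with x_j^+, x_j^- >= 0.

    Column j is reused for x_j^+; the column of x_j^- (the negated column j)
    is appended at the end, with negated cost.
    """
    A_new = [list(row) + [-row[j]] for row in A]
    c_new = list(c) + [-c[j]]
    return A_new, c_new


# The example LP of the next slide, in canonical form.
A = [[1, -1, 3], [5, 2, -1]]
b = [10, 6]
c = [7, 1, 5]
A_std, b_std, c_std = canonical_to_standard(A, b, c)
# x = (2, 1, 3) with surpluses s = Ax - b = (0, 3) satisfies A_std x' = b
x_prime = [2, 1, 3, 0, 3]
print([sum(a * v for a, v in zip(row, x_prime)) for row in A_std])  # [10, 6]
```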

Linear Programming – Example

$\min\ 7x_1 + x_2 + 5x_3$
s.t.
$x_1 - x_2 + 3x_3 \ge 10$
$5x_1 + 2x_2 - x_3 \ge 6$
$x_1, x_2, x_3 \ge 0$

$x = (2,1,3)$ is a feasible solution.
$7 \cdot 2 + 1 + 5 \cdot 3 = 30$ is an upper bound on the optimum.

Lower Bound

How can we find a lower bound?

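For example, since $x \ge 0$ and every coefficient on the left is at least the matching coefficient on the right:
$7x_1 + x_2 + 5x_3 \ge x_1 - x_2 + 3x_3 \ge 10$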


Lower Bound

Another example:
$7x_1 + x_2 + 5x_3 \ge (x_1 - x_2 + 3x_3) + (5x_1 + 2x_2 - x_3) \ge 16$


Lower Bound

$\min\ 7x_1 + x_2 + 5x_3$
s.t.
$x_1 - x_2 + 3x_3 \ge 10 \qquad (y_1)$
$5x_1 + 2x_2 - x_3 \ge 6 \qquad (y_2)$
$x_1, x_2, x_3 \ge 0$

Assign a non-negative coefficient $y_i$ to every primal inequality such that, coefficient by coefficient,
$y_1(x_1 - x_2 + 3x_3) + y_2(5x_1 + 2x_2 - x_3) \le 7x_1 + x_2 + 5x_3$
Then $10y_1 + 6y_2$ is a lower bound on the optimum.
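Here is a minimal Python sketch of this certificate check for the example LP. The function name `lower_bound` is hypothetical. It accepts multipliers y only if they are non-negative and, for every variable, the combined coefficient does not exceed the objective coefficient. Exactly this condition makes $10y_1 + 6y_2$ a valid lower bound for all $x \ge 0$.

```python
# The example LP: min c^T x s.t. Ax >= b, x >= 0
A = [[1, -1, 3], [5, 2, -1]]
b = [10, 6]
c = [7, 1, 5]


def lower_bound(y):
    """Return b^T y = 10*y1 + 6*y2 if the multipliers y give a valid lower bound,
    i.e. y >= 0 and, for every variable x_j, sum_i y_i * a_ij <= c_j.
    Return None if y is not a valid certificate."""
    if any(yi < 0 for yi in y):
        return None
    for j, cj in enumerate(c):
        if sum(y[i] * A[i][j] for i in range(len(A))) > cj:
            return None
    return sum(yi * bi for yi, bi in zip(y, b))


print(lower_bound([1, 0]))  # 10, the first example
print(lower_bound([1, 1]))  # 16, the second example
print(lower_bound([3, 0]))  # None: 3 * 3 = 9 exceeds the coefficient 5 of x3
```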

LP Duality

The problem of finding the best lower bound is a

linear program

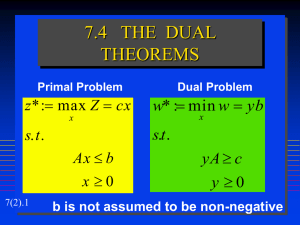

Primal

Dual

min

7 x1 x 2 5 x 3

max

10 y 1 6 y 2

s .t .

x1 x 2 3 x 3 10

s .t .

y1 5 y 2 7

5 x1 2 x 2 x 3 6

y1 2 y 2 1

x1 , x 2 , x 3 0

3 y1 y 2 5

y1 , y 2 0

LP Duality

Primal: $\min\{c^T x : Ax \ge b,\ x \ge 0\}$
Dual: $\max\{b^T y : A^T y \le c,\ y \ge 0\}$

For every feasible x and y: $c^T x \ge b^T y$
Thus, $\mathrm{Opt}(\text{primal}) \ge \mathrm{Opt}(\text{dual})$.
The dual of the dual is the primal.
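Here is a small sketch of why the dual of the dual is the primal. It assumes both problems stay in the canonical form $\min\{c^T x : Ax \ge b,\ x \ge 0\}$, represented as a triple `(c, A, b)`. The dual $\max\{b^T y : A^T y \le c,\ y \ge 0\}$ equals $-\min\{(-b)^T y : (-A^T) y \ge -c,\ y \ge 0\}$, so it is again such a triple. The function `dual` is a hypothetical helper.

```python
def transpose(M):
    return [list(col) for col in zip(*M)]


def dual(c, A, b):
    """Dual of min{c^T x : Ax >= b, x >= 0}, rewritten in the same canonical form.

    max{b^T y : A^T y <= c, y >= 0} = -min{(-b)^T y : (-A^T) y >= -c, y >= 0},
    so the dual is described by the triple (-b, -A^T, -c).
    """
    return ([-bi for bi in b],
            [[-a for a in row] for row in transpose(A)],
            [-cj for cj in c])


c = [7, 1, 5]
A = [[1, -1, 3], [5, 2, -1]]
b = [10, 6]
d = dual(c, A, b)             # the dual of the example, as a canonical-form triple
print(dual(*d) == (c, A, b))  # True: the dual of the dual is the primal
```

Only the sign of the optimal value changes in this rewriting, which is why the triple representation closes up after two steps.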

Example


$x = (7/4, 0, 11/4)$ and $y = (2, 1)$ are feasible solutions, and
$7 \cdot \frac{7}{4} + 0 + 5 \cdot \frac{11}{4} = 10 \cdot 2 + 6 \cdot 1 = 26$
Thus, by weak duality, x and y are optimal.
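The optimality claim can be checked mechanically. This sketch uses exact `fractions.Fraction` arithmetic to verify three things for the example above: primal feasibility, dual feasibility, and equality of the two objective values.

```python
from fractions import Fraction as F

A = [[1, -1, 3], [5, 2, -1]]
b = [10, 6]
c = [7, 1, 5]
x = [F(7, 4), F(0), F(11, 4)]
y = [F(2), F(1)]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


# Primal feasibility: Ax >= b and x >= 0
primal_ok = all(dot(row, x) >= bi for row, bi in zip(A, b)) and min(x) >= 0
# Dual feasibility: A^T y <= c and y >= 0 (columns of A are rows of A^T)
columns = [list(col) for col in zip(*A)]
dual_ok = all(dot(col, y) <= cj for col, cj in zip(columns, c)) and min(y) >= 0

# Equal objective values certify optimality of both (weak duality).
print(primal_ok, dual_ok, dot(c, x), dot(b, y))  # True True 26 26
```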

Max Flow vs. Min s,t-cut

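Path(s,t): the set of directed paths from s to t, $P_1, \dots, P_m$.

Max Flow:
$\max \sum_{i=1}^m f_i$
s.t.
$\sum_{P_i : e \in P_i} f_i \le c_e \quad \forall e \in E$
$f_i \ge 0$

Dual:
$\min \sum_{e \in E} c_e d_e$
s.t.
$\sum_{e \in P_i} d_e \ge 1 \quad \forall P_i \in \mathrm{Path}(s,t)$
$d_e \ge 0 \quad \forall e \in E$

Implicit: $d_e \le 1$
Min s,t-cut: $d_e \in \{0,1\}$
$\mathrm{Opt}(\text{Dual}) = \mathrm{Opt}(\text{Min } s,t\text{-cut})$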
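The slides describe max flow through the path LP. As a swapped-in illustration (not the slides' method), the sketch below runs the Edmonds-Karp augmenting-path algorithm on a small, made-up directed graph. It then reads off a minimum s,t-cut from the final residual graph. The flow value equals the cut capacity, in line with $\mathrm{Opt}(\text{Dual}) = \mathrm{Opt}(\text{Min } s,t\text{-cut})$ and LP duality. The function name `max_flow_min_cut` is hypothetical.

```python
from collections import deque


def max_flow_min_cut(n, edges, s, t):
    """Edmonds-Karp max flow on vertices 0..n-1; edges is a list of (u, v, capacity).

    Returns (flow value, edges of a minimum s,t-cut, capacity of that cut).
    """
    residual = [[0] * n for _ in range(n)]
    for u, v, cap in edges:
        residual[u][v] += cap
    flow = 0
    while True:
        # BFS for a shortest augmenting path in the residual graph.
        parent = [-1] * n
        parent[s] = s
        queue = deque([s])
        while queue and parent[t] == -1:
            u = queue.popleft()
            for v in range(n):
                if parent[v] == -1 and residual[u][v] > 0:
                    parent[v] = u
                    queue.append(v)
        if parent[t] == -1:
            break  # no augmenting path left: the flow is maximum
        # Bottleneck capacity along the path, then push it.
        bottleneck, v = float("inf"), t
        while v != s:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = t
        while v != s:
            residual[parent[v]][v] -= bottleneck
            residual[v][parent[v]] += bottleneck
            v = parent[v]
        flow += bottleneck
    # The last BFS found every vertex reachable from s in the residual graph;
    # the original edges leaving that set form a minimum cut.
    reachable = {v for v in range(n) if parent[v] != -1}
    cut = [(u, v, cap) for u, v, cap in edges if u in reachable and v not in reachable]
    return flow, cut, sum(cap for _, _, cap in cut)


# Hypothetical example graph: s = 0, t = 3.
edges = [(0, 1, 3), (0, 2, 2), (1, 2, 1), (1, 3, 2), (2, 3, 3)]
print(max_flow_min_cut(4, edges, 0, 3))  # (5, [(0, 1, 3), (0, 2, 2)], 5)
```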

LP-duality Theorem

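Theorem:
$\mathrm{Opt}(\text{primal})$ is finite $\iff$ $\mathrm{Opt}(\text{dual})$ is finite.
If $x^*$ and $y^*$ are optimal, then
$\sum_{j=1}^n c_j x_j^* = \sum_{i=1}^m b_i y_i^*$

Conclusion: the decision version of LP is in $\mathrm{NP} \cap \mathrm{coNP}$.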

Weak Duality Theorem

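If x and y are feasible, then
$\sum_{j=1}^n c_j x_j \ge \sum_{i=1}^m b_i y_i$

Proof:
$$\sum_{j=1}^n c_j x_j \ge \sum_{j=1}^n \Big(\sum_{i=1}^m a_{ij} y_i\Big) x_j = \sum_{i=1}^m \Big(\sum_{j=1}^n a_{ij} x_j\Big) y_i \ge \sum_{i=1}^m b_i y_i$$
The first inequality uses dual feasibility ($\sum_i a_{ij} y_i \le c_j$) together with $x \ge 0$. The second uses primal feasibility ($\sum_j a_{ij} x_j \ge b_i$) together with $y \ge 0$.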

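Complementary Slackness Conditions

Let x and y be feasible. Then x and y are optimal if and only if both of the following hold:

Primal conditions:
$\forall j$, either $x_j = 0$ or $\sum_{i=1}^m a_{ij} y_i = c_j$

Dual conditions:
$\forall i$, either $y_i = 0$ or $\sum_{j=1}^n a_{ij} x_j = b_i$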
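Here is a short sketch that checks both sets of conditions for the running example. The helper name `complementary_slackness` is hypothetical. The optimal pair from the Example slide satisfies both sets. The feasible but non-optimal $x = (2,1,3)$, whose cost is 30, violates both sets when paired with the feasible dual $y = (2,1)$.

```python
from fractions import Fraction as F

A = [[1, -1, 3], [5, 2, -1]]
b = [10, 6]
c = [7, 1, 5]


def complementary_slackness(x, y):
    """Return (primal conditions hold, dual conditions hold) for feasible x, y of
    min{c^T x : Ax >= b, x >= 0} and max{b^T y : A^T y <= c, y >= 0}."""
    m, n = len(A), len(c)
    primal = all(x[j] == 0 or sum(A[i][j] * y[i] for i in range(m)) == c[j]
                 for j in range(n))
    dual = all(y[i] == 0 or sum(A[i][j] * x[j] for j in range(n)) == b[i]
               for i in range(m))
    return primal, dual


print(complementary_slackness([F(7, 4), F(0), F(11, 4)], [2, 1]))  # (True, True)
print(complementary_slackness([2, 1, 3], [2, 1]))                  # (False, False)
```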

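Complementary Slackness Conditions – Proof

By the duality theorem, $\sum_j c_j x_j = \sum_i b_i y_i$, so both inequalities in the weak duality chain hold with equality:
$$\sum_{j=1}^n c_j x_j = \sum_{j=1}^n \Big(\sum_{i=1}^m a_{ij} y_i\Big) x_j = \sum_{i=1}^m \Big(\sum_{j=1}^n a_{ij} x_j\Big) y_i = \sum_{i=1}^m b_i y_i$$
The first equality gives
$$\sum_{j=1}^n \Big(c_j - \sum_{i=1}^m a_{ij} y_i\Big) x_j = 0$$
Every term has $c_j - \sum_{i=1}^m a_{ij} y_i \ge 0$ and $x_j \ge 0$, so every term is zero.
Either $x_j = 0$ or $\sum_{i=1}^m a_{ij} y_i = c_j$.
Similar arguments, applied to the last equality, work for y.
For the other direction, read the argument upwards. If the conditions hold, the chain above holds with equality, so $c^T x = b^T y$, and by weak duality both solutions are optimal.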

Algorithms for Solving LP

Simplex [Dantzig 47]

Ellipsoid [Khachiyan 79]

Interior point [Karmarkar 84]

Open question: Is there a strongly polynomial time algorithm for LP?

Integer Programming

NP-hard

Branch and Bound

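IP: $\min\{c^T x : Ax \ge b,\ x \in \mathbb{N}^n\}$

LP relaxation: $\min\{c^T x : Ax \ge b,\ x \ge 0\}$

$\mathrm{Opt}(LP) \le \mathrm{Opt}(IP)$

Integrality gap: $\mathrm{Opt}(IP)/\mathrm{Opt}(LP)$, taken in the worst case over all instances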

Using LP for Approximation

Finding a good lower bound: $\mathrm{Opt}(LP) \le \mathrm{Opt}(IP)$

When the analysis compares against Opt(LP), the integrality gap is the best approximation ratio we can hope for.

Techniques:

Rounding

– Solve the LP relaxation and then round the solution.

Primal Dual

– Find a feasible dual solution y and a feasible integral primal solution x such that $c^T x \le r \cdot b^T y$.

Minimum Vertex Cover

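LP relaxation and its dual:

(P) $\min \sum_{u \in V} c_u x_u$
s.t. $x_u + x_v \ge 1 \quad \forall e = (u,v) \in E$
$x_u \ge 0 \quad \forall u \in V$

(D) $\max \sum_{e \in E} y_e$
s.t. $\sum_{e : u \in e} y_e \le c_u \quad \forall u \in V$
$y_e \ge 0 \quad \forall e \in E$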
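The sketch below makes $\mathrm{Opt}(LP) \le \mathrm{Opt}(IP)$ and the integrality gap concrete on a unit-cost triangle. This instance is chosen for illustration and does not come from the slides. Opt(IP) is found by brute force. Opt(LP) = 3/2 is certified by a primal solution and a dual solution of equal value, rather than by an LP solver. The gap on this instance is 4/3. On the complete graph $K_n$ with unit costs the ratio is $(n-1)/(n/2)$, which approaches 2.

```python
from fractions import Fraction as F
from itertools import product

# Unit-cost triangle (an illustrative instance, not from the slides).
V = [0, 1, 2]
E = [(0, 1), (1, 2), (0, 2)]


def opt_ip():
    """Opt(IP) by brute force over all 0/1 vectors with x_u + x_v >= 1 on every edge."""
    return min(sum(x) for x in product([0, 1], repeat=len(V))
               if all(x[u] + x[v] >= 1 for u, v in E))


# x_u = 1/2 is feasible for (P); y_e = 1/2 is feasible for (D), since every
# vertex lies on two edges. Both have value 3/2, so Opt(LP) = 3/2 by weak duality.
x_lp = [F(1, 2)] * len(V)
y_lp = [F(1, 2)] * len(E)
assert all(x_lp[u] + x_lp[v] >= 1 for u, v in E)
assert all(sum(y for y, e in zip(y_lp, E) if u in e) <= 1 for u in V)
assert sum(x_lp) == sum(y_lp)

opt_lp = sum(x_lp)
print(opt_ip(), opt_lp, opt_ip() / opt_lp)  # 2 3/2 4/3
```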

Solving the Dual

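Algorithm:

1. Solve (D).

2. Define $C_D = \{u : \sum_{e : u \in e} y_e = c_u\}$, the vertices whose dual constraint is tight.

Analysis (let $x(C)$ denote the indicator vector of a vertex set C):

$C_D$ is a cover: $\forall e = (u,v),\ x(C_D)_u + x(C_D)_v > 0$.
Otherwise neither endpoint of e is tight. Then $y_e$ could be increased while keeping y feasible, which contradicts the optimality of y.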

Solving the Dual

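$C_D$ is a 2-approximation. For every $u \in C_D$, $c_u = \sum_{e : u \in e} y_e$, so
$$\sum_{u \in V} c_u\, x(C_D)_u = \sum_{u \in V} x(C_D)_u \sum_{e : u \in e} y_e = \sum_{e \in E} y_e \sum_{u \in e} x(C_D)_u \le 2 \sum_{e \in E} y_e \le 2\, c^T x^*$$
Here $x^*$ is an optimal (integral) cover. The last step is the weak duality theorem.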
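Here is a sketch of step 2. It assumes a dual solution y is already available. Hand-computed optimal duals stand in for an LP solver, and `tight_cover` is a hypothetical name. On the unit-cost path a-b-c, every feasible dual has value at most $c_b = 1$. The two optimal duals below yield different covers, and both covers stay within the factor 2.

```python
from fractions import Fraction as F


def tight_cover(costs, edges, y):
    """C_D: vertices whose dual constraint sum_{e : u in e} y_e <= c_u is tight."""
    load = {u: 0 for u in costs}
    for (u, v), ye in zip(edges, y):
        load[u] += ye
        load[v] += ye
    return {u for u in costs if load[u] == costs[u]}


# Path a - b - c with unit costs. Opt(D) = 1, and both dual vectors below
# are optimal (hand-computed, standing in for "Solve (D)").
costs = {'a': 1, 'b': 1, 'c': 1}
edges = [('a', 'b'), ('b', 'c')]
for y in ([F(1, 2), F(1, 2)], [F(1), F(0)]):
    C = tight_cover(costs, edges, y)
    cost = sum(costs[u] for u in C)
    assert all(u in C or v in C for u, v in edges)  # C_D is a cover
    assert cost <= 2 * sum(y)                        # C_D costs at most 2 * Opt(D)
    print(sorted(C), cost)
# ['b'] 1
# ['a', 'b'] 2
```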

Rounding

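Algorithm:

1. Solve (P); let $x^*$ be an optimal solution.

2. Define $C_P = \{u : x_u^* > 0\}$.

Analysis:

$\forall e = (u,v),\ x(C_P)_u + x(C_P)_v > 0$. Otherwise $x_u^* = x_v^* = 0$, and $x^*$ is not feasible.

Due to the complementary slackness conditions (with y an optimal dual solution), $x_u^* > 0$ implies that u is tight. Hence $C_P \subseteq C_D$ and $x(C_P) \le x(C_D)$.

Conclusion: $x(C_P)$ is a 2-approximation.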

Rounding

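Algorithm:

1. Solve (P); let $x^*$ be an optimal solution.

2. Define $C_{1/2} = \{u : x_u^* \ge \tfrac{1}{2}\}$.

Analysis:

$\forall e = (u,v),\ x(C_{1/2})_u + x(C_{1/2})_v > 0$. Otherwise $x_u^*, x_v^* < \tfrac{1}{2}$, and $x^*$ is not feasible.

Clearly, $x(C_{1/2}) \le x(C_P)$, since $C_{1/2} \subseteq C_P$.

Conclusion: $x(C_{1/2})$ is a 2-approximation.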
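Here is a sketch of the threshold rounding. It assumes an optimal $x^*$ is given. On the unit-cost triangle, $x^* = (\tfrac12, \tfrac12, \tfrac12)$ is optimal because the dual $y_e = \tfrac12$ has the same value 3/2. The instance also shows that the factor 2 can be attained: the rounded cover costs 3 against an LP value of 3/2. The name `round_half` is hypothetical.

```python
from fractions import Fraction as F


def round_half(x_star):
    """C_{1/2} = {u : x*_u >= 1/2} for an optimal solution x* of (P), given as a dict."""
    return {u for u, xu in x_star.items() if xu >= F(1, 2)}


# Unit-cost triangle: x* = (1/2, 1/2, 1/2) is optimal for (P).
costs = {0: 1, 1: 1, 2: 1}
edges = [(0, 1), (1, 2), (0, 2)]
x_star = {0: F(1, 2), 1: F(1, 2), 2: F(1, 2)}

C = round_half(x_star)
lp_value = sum(costs[u] * x_star[u] for u in costs)
cost = sum(costs[u] for u in C)
assert all(u in C or v in C for u, v in edges)  # C_{1/2} is a cover
assert cost <= 2 * lp_value                       # c_u <= 2 c_u x*_u for u in C_{1/2}
print(sorted(C), cost, lp_value)  # [0, 1, 2] 3 3/2
```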

The Geometry of LP

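Claim:

Let $x_1, x_2$ be feasible solutions and $0 \le \lambda \le 1$.

Then $\lambda x_1 + (1-\lambda) x_2$ is a feasible solution, since $A(\lambda x_1 + (1-\lambda) x_2) = \lambda A x_1 + (1-\lambda) A x_2 \ge \lambda b + (1-\lambda) b = b$ (and likewise for $x \ge 0$).

Definition:

x is a vertex if there are no feasible $x_1 \ne x_2$ and $0 < \lambda < 1$ such that $x = \lambda x_1 + (1-\lambda) x_2$.

Theorem:

If the optimum is finite, there exists an optimal solution $x^*$ which is a vertex.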

Half Integrality

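Theorem: If x is a vertex of (P), then $x \in \{0, \tfrac{1}{2}, 1\}^n$.

Proof:

Let $x^*$ be a vertex such that $x^* \notin \{0, \tfrac{1}{2}, 1\}^n$. Define
$V^+ = \{v : \tfrac{1}{2} < x_v^* < 1\}$ and $V^- = \{v : 0 < x_v^* < \tfrac{1}{2}\}$.
Let $\varepsilon > 0$ be the shortest distance from a coordinate $x_v^*$, $v \in V^+ \cup V^-$, to $\{0, \tfrac{1}{2}, 1\}$.

$x'_v = x_v^* + \varepsilon$ if $v \in V^+$; $x'_v = x_v^* - \varepsilon$ if $v \in V^-$; $x'_v = x_v^*$ otherwise.

$x''_v = x_v^* - \varepsilon$ if $v \in V^+$; $x''_v = x_v^* + \varepsilon$ if $v \in V^-$; $x''_v = x_v^*$ otherwise.

Both solutions are feasible, and $x' \ne x''$ because $V^+ \cup V^-$ is non-empty. Since $x^* = \tfrac{1}{2}(x' + x'')$, $x^*$ is not a vertex, which is a contradiction.

Conclusion: for every vertex $x^*$, $\forall j,\ x_j^* \in \{0, \tfrac{1}{2}, 1\}$.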

Half Integrality

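Algorithm:

Construct the following bipartite graph $G' = (V' \cup V'', E')$:

$V' = \{u' : u \in V\}$

$V'' = \{u'' : u \in V\}$

$E' = \{(u', v''), (v', u'') : (u,v) \in E\}$

Find a minimum-weight vertex cover $C'$ in $G'$, where $u'$ and $u''$ both get weight $c_u$.
(This can be done by using max-flow.)

$C = \{u : u' \in C' \text{ or } u'' \in C'\}$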
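Here is a sketch of the construction for unit costs only. With unit weights, a minimum-weight cover of $G'$ is a minimum-cardinality cover. König's theorem obtains one from a maximum matching, and a maximum matching is itself a unit-capacity max-flow. The sketch swaps in Kuhn's augmenting-path matching instead of an explicit max-flow. Vertex $u'$ is encoded as `(u, 1)` and $u''$ as `(u, 2)`, and the function names are hypothetical. The guarantee follows from two facts. Doubling an optimal cover of G gives a cover of $G'$ of size $2 \cdot \mathrm{Opt}$, so $|C'| \le 2 \cdot \mathrm{Opt}$. Mapping back can only merge vertices, so $|C| \le |C'|$.

```python
def min_vertex_cover_bipartite(left, adj):
    """Minimum vertex cover of a bipartite graph with unit weights (König's theorem).

    left: list of left vertices; adj[u]: list of right neighbours of left vertex u.
    """
    match_right = {}  # right vertex -> left vertex it is matched to

    def augment(u, seen):
        # Kuhn's augmenting-path search starting at left vertex u.
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                if v not in match_right or augment(match_right[v], seen):
                    match_right[v] = u
                    return True
        return False

    for u in left:
        augment(u, set())

    # Z = vertices reachable from unmatched left vertices by alternating paths
    # (left -> right along any edge, right -> left along the matching edge).
    matched_left = set(match_right.values())
    z_left = {u for u in left if u not in matched_left}
    z_right = set()
    stack = list(z_left)
    while stack:
        u = stack.pop()
        for v in adj[u]:
            if v not in z_right:
                z_right.add(v)
                w = match_right.get(v)
                if w is not None and w not in z_left:
                    z_left.add(w)
                    stack.append(w)
    # König: (left minus Z) together with (right intersect Z) is a minimum cover.
    return (set(left) - z_left) | z_right


def double_cover_rounding(V, E):
    """Build G' (u' -> (u, 1), u'' -> (u, 2)), cover it, and map the cover back to C."""
    left = [(u, 1) for u in V]
    adj = {(u, 1): [] for u in V}
    for u, v in E:
        adj[(u, 1)].append((v, 2))  # edge (u', v'')
        adj[(v, 1)].append((u, 2))  # edge (v', u'')
    C_prime = min_vertex_cover_bipartite(left, adj)
    C = {u for u in V if (u, 1) in C_prime or (u, 2) in C_prime}
    return C_prime, C


C_prime, C = double_cover_rounding([0, 1, 2], [(0, 1), (1, 2), (0, 2)])
print(len(C_prime), sorted(C))  # 3 [0, 1, 2]
```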

Primal Dual

Construct an integral feasible solution x and a feasible dual solution y such that $c^T x \le r \cdot b^T y$.

Weak duality theorem: $b^T y \le \mathrm{Opt}(LP)$

$c^T x \le r \cdot \mathrm{Opt}(LP) \le r \cdot \mathrm{Opt}(IP)$

Hence x is an r-approximation.

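Greedy $H(\Delta)$-Approximation

Algorithm:

$C \leftarrow \emptyset$

While there exists an uncovered edge:

– $u \leftarrow \arg\min_{v \in V} \frac{c_v}{\deg_G(v)}$

– $C \leftarrow C \cup \{u\}$

– Remove u and its incident edges from G

Here $\deg_G(v)$ is the degree of v in the current graph G, $\Delta$ is the maximum degree, and $H(\Delta) = 1 + \tfrac{1}{2} + \dots + \tfrac{1}{\Delta}$.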
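Here is a direct Python sketch of the greedy rule. The name `greedy_vertex_cover` and the tie-breaking by vertex name are choices made here, not taken from the slides. Consider a star whose center costs 2 and whose three leaves cost 1 each. The center has ratio 2/3, the leaves have ratio 1, so the center is picked first.

```python
def greedy_vertex_cover(costs, edges):
    """Greedy rule from the slide: repeatedly take the vertex minimizing
    c_v / deg(v) in the current graph, then delete it and its incident edges."""
    remaining = {frozenset(e) for e in edges}
    C = set()
    while remaining:  # some edge is still uncovered
        deg = {}
        for e in remaining:
            for v in e:
                deg[v] = deg.get(v, 0) + 1
        # Only vertices with positive current degree appear in deg;
        # ties are broken by the vertex's string form to keep runs deterministic.
        u = min(deg, key=lambda v: (costs[v] / deg[v], str(v)))
        C.add(u)
        remaining = {e for e in remaining if u not in e}
    return C


star = [('c', 'l1'), ('c', 'l2'), ('c', 'l3')]
print(greedy_vertex_cover({'c': 2, 'l1': 1, 'l2': 1, 'l3': 1}, star))  # {'c'}
```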

Primal Dual Schema

A modified version of the primal-dual method for solving LP.

Construct an integral primal solution and a feasible dual solution simultaneously.

We impose the primal complementary slackness conditions, while relaxing the dual conditions.

Relaxed Dual Conditions

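Primal: $\forall j$, either $x_j = 0$ or $\sum_{i=1}^m a_{ij} y_i = c_j$

Relaxed dual (for some $\beta \ge 1$): $\forall i$, either $y_i = 0$ or $\sum_{j=1}^n a_{ij} x_j \le \beta\, b_i$

Claim: If x and y are feasible and satisfy the above conditions, then
$\sum_{j=1}^n c_j x_j \le \beta \sum_{i=1}^m b_i y_i$

Proof:
$$\sum_{j=1}^n c_j x_j = \sum_{j=1}^n \Big(\sum_{i=1}^m a_{ij} y_i\Big) x_j = \sum_{i=1}^m \Big(\sum_{j=1}^n a_{ij} x_j\Big) y_i \le \beta \sum_{i=1}^m b_i y_i$$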

Vertex Cover

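Algorithm:

$C \leftarrow \emptyset$; $y \leftarrow 0$

For every edge $e = (u,v)$ do:

– $y_e \leftarrow \min\{c_u - \sum_{e' : u \in e'} y_{e'},\ c_v - \sum_{e' : v \in e'} y_{e'}\}$

– $C \leftarrow C \cup \arg\min\{c_u - \sum_{e' : u \in e'} y_{e'},\ c_v - \sum_{e' : v \in e'} y_{e'}\}$

Output C

(We can construct C at the end.)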
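Here is a sketch of the algorithm above in Python. The name `primal_dual_vertex_cover` is hypothetical, and the graph is assumed simple. The running sums $\sum_{e : u \in e} y_e$ are kept in a dictionary, so each edge is processed in constant time. On the weighted triangle below, the result is a cover of cost 5 against a dual value of 3, within the guaranteed factor 2. Cost 5 is also the optimum for this instance.

```python
def primal_dual_vertex_cover(costs, edges):
    """Primal-dual schema from the slide: for each edge e = (u, v), raise y_e by the
    smaller residual cost of its endpoints and add the endpoint that becomes tight."""
    paid = {u: 0 for u in costs}  # paid[u] = sum of y_e over edges e at u
    y = {}
    C = set()
    for u, v in edges:
        slack_u = costs[u] - paid[u]
        slack_v = costs[v] - paid[v]
        y[(u, v)] = min(slack_u, slack_v)
        paid[u] += y[(u, v)]
        paid[v] += y[(u, v)]
        C.add(u if slack_u <= slack_v else v)  # the argmin endpoint is now tight
    return C, y


costs = {'a': 3, 'b': 2, 'c': 4}
edges = [('a', 'b'), ('b', 'c'), ('a', 'c')]
C, y = primal_dual_vertex_cover(costs, edges)
cost = sum(costs[u] for u in C)
print(sorted(C), cost, sum(y.values()))  # ['a', 'b'] 5 3
```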

Vertex Cover

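At the end there are no uncovered edges or overpacked vertices, so $x = x(C)$ and y are feasible.

x and y satisfy the relaxed complementary slackness conditions with $\beta = 2$, so x is a 2-approximate solution:

– Primal: $u \in C \Rightarrow \sum_{e : u \in e} y_e = c_u$

– Relaxed dual: $\forall e = (u,v)$, either $y_e = 0$ or $x_u + x_v \le 2$

$$\sum_{u \in V} c_u x_u = \sum_{u \in V} \Big(\sum_{e : u \in e} y_e\Big) x_u = \sum_{e \in E} y_e \sum_{u \in e} x_u = \sum_{e = (u,v) \in E} y_e (x_u + x_v) \le 2 \sum_{e \in E} y_e$$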

Generalized Vertex Cover

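LP relaxation and its dual:

(P) $\min \sum_{u \in V} c_u x_u + \sum_{e \in E} c_e x_e$
s.t. $x_u + x_v + x_e \ge 1 \quad \forall e = (u,v) \in E$
$x_t \ge 0 \quad \forall t \in V \cup E$

(D) $\max \sum_{e \in E} y_e$
s.t. $\sum_{e : u \in e} y_e \le c_u \quad \forall u \in V$
$0 \le y_e \le c_e \quad \forall e \in E$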