GENI Connections


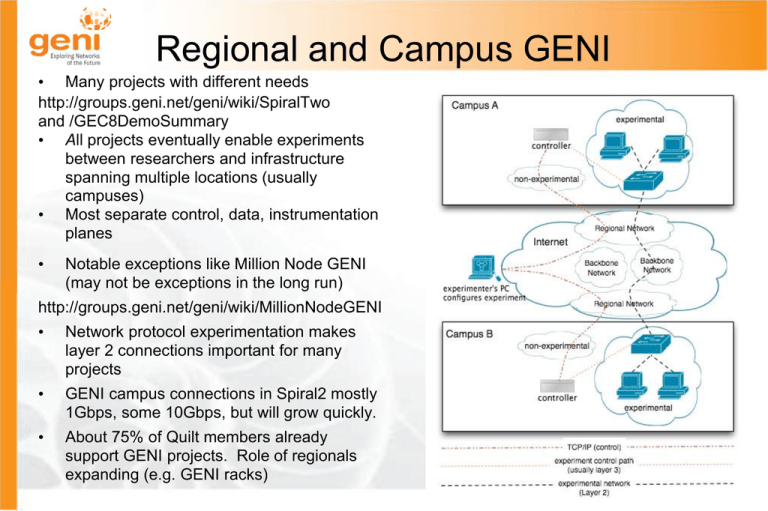

Regional and Campus GENI
• Many projects with different needs: http://groups.geni.net/geni/wiki/SpiralTwo and /GEC8DemoSummary
• All projects eventually enable experiments between researchers and infrastructure spanning multiple locations (usually campuses)
• Most separate control, data, and instrumentation planes
• Notable exceptions like Million Node GENI (may not be exceptions in the long run): http://groups.geni.net/geni/wiki/MillionNodeGENI
• Network protocol experimentation makes layer 2 connections important for many projects
• GENI campus connections in Spiral 2 are mostly 1 Gbps, some 10 Gbps, but will grow quickly
• About 75% of Quilt members already support GENI projects. Role of regionals expanding (e.g. GENI racks)

Nationwide Meso-scale Prototype
Current plans for locations & equipment
[Map of planned sites]
• OpenFlow campuses: Stanford, U Washington, Wisconsin U, Indiana U, Rutgers, Princeton, Clemson, Georgia Tech
• WiMAX campuses: Stanford, UCLA, UC Boulder, Wisconsin, Rutgers, NYU Polytech, UMass, Columbia
• OpenFlow Backbones and ShadowNet sites (in the order labeled on the map): Seattle, Salt Lake City, Sunnyvale, Denver, New York City, Houston, Chicago, Los Angeles, Atlanta, Salt Lake City, Kansas City, Washington, DC, Atlanta
• Equipment: Arista 7124S Switch, Toroki LightSwitch 4810, HP ProCurve 5400 Switch, Juniper MX240 Ethernet Services Router, NEC WiMAX Base Station, NEC IP8800 Ethernet Switch

Meso-Scale in the backbones
• Internet2 and NLR installing 5 OpenFlow switches in each backbone (HP ProCurve and possibly a second vendor)
  – Internet2 MX-960 connections
• NLR 3-node backbone live for GEC8 demo
• ProtoGENI installing additional nodes (2 or more) in Internet2 and integrating prototype OpenFlow
• GENI layer 2 data planes starting to appear across backbones, regionals and campuses
  – ION, FrameNet and custom network engineering "stitching" VLANs
  – ORCA, iGENI demonstrating dynamic VLANs at GECs
  – NLR and I2 10 Gbps layer 2 interconnect at Atlanta
• ShadowNet: installing 3 Juniper M7i routers for measurements in I2 PoPs this year
• Find updates through GENI wiki Spiral Two page
(http://groups.geni.net/geni/wiki/SpiralTwo)
Drawings courtesy I2, NLR

MesoScale in the Regionals
• Plain old IP
• Tunneling above layer 2 (GRE, OpenVPN)
• Layer 2
  – Single VLAN (802.1Q tagged)
  – VLAN translation (supported in ION, not Sherpa/FrameNet)
  – Layer 2 tunneling (MPLS, QinQ) (DRAGON, OpenFlow)
  – Direct fiber to NLR or I2 backbone (ION, FrameNet)
  – Access via FrameNet/ION procedures (duration, bandwidth limits, first-come first-served)
• More info (how-tos, track connections, references): http://groups.geni.net/geni/wiki/ConnectivityHome
[Figure: site BBN snippet]

MesoScale in the Regionals (cont)
• International
  – GpENI
  – iGENI (Starlight)
  – K-GENI (Korea/Indiana only)
  – ORBIT (Australia only)
  – PrimoGENI (Brazil)
  – Others brewing
• Mobile/Wireless (over L2 or IP at regional)
  – WiMAX
  – OpenFlow WiFi (OpenRoads)
  – Offloading/Application Migration (e.g. Android phones), University of Wisconsin
  – Million Node GENI
Drawings courtesy iGENI

DRAGON
• MAX, GpENI, TIED projects (may be others)
• Dynamic resource allocation via GMPLS-enabled control plane
• MAX supports sliced edge compute resources (myPLC) and network virtualization with a GENI Aggregate Manager
• Layer 2 Ethernet switches with dedicated 1 GbE and 10 GbE connections
• Layer 3 IP (management, control and general connectivity)
• Programmable network hardware: NetFPGA card installed in rack-mounted PC
  o acts as OpenFlow switch, programmable router, packet generator, etc.
  o offers advanced measurement capabilities
Drawings courtesy iGENI

ProtoGENI and ORCA
• ProtoGENI backbone (5 locations in I2)
  – Current nodes on 10 GE dedicated connections to Infinera GENI wave
  – Moving to 1 GE ION links between nodes in August (stay tuned)
  – Campuses access via IP, ION or dedicated layer 2 (through regional)
• ORCA clearinghouse uses NLR backbone in Spiral 2
  – Campuses connect via FrameNet, iGENI, and regional optical networks
  – Dynamic VLAN allocation
  – ORCA broker plans to eventually support many mixed connection types
Drawings courtesy iGENI

OpenFlow Deployment Roadmap

Clemson OpenFlow Example
• Multiple layer 2 & 3 connections
• VLAN 3711 connects BBN and Clemson via NLR, SoX and NoX.

Application (Experiment) Example

LLDP workaround
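
Supplementary sketch: 802.1Q tagging for the Clemson example

Several slides above rely on single tagged VLANs, for example VLAN 3711 between BBN and Clemson. As an illustration only, the sketch below packs and unpacks the 4-byte IEEE 802.1Q tag that carries such a VLAN ID on the wire. The function names `build_vlan_tag` and `parse_vlan_tag` are hypothetical helpers written for this note; they are not part of any GENI, ION, or FrameNet tooling, and the priority and DEI values are assumed to be zero.

```python
import struct

# EtherType value (TPID) that marks a frame as carrying an 802.1Q tag.
TPID_8021Q = 0x8100


def build_vlan_tag(vlan_id, priority=0, dei=0):
    """Return the 4-byte 802.1Q tag (TPID + TCI) for a VLAN ID.

    Hypothetical helper for illustration; priority and DEI default to 0.
    """
    if not 1 <= vlan_id <= 4094:      # 0 and 4095 are reserved values
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be in 0..7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    # TCI layout: 3 bits priority, 1 bit DEI, 12 bits VLAN ID.
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def parse_vlan_tag(tag):
    """Decode a 4-byte 802.1Q tag into its priority, DEI and VLAN ID fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {
        "priority": tci >> 13,
        "dei": (tci >> 12) & 0x1,
        "vlan_id": tci & 0x0FFF,
    }


# The VLAN ID from the Clemson example slide.
tag = build_vlan_tag(3711)
fields = parse_vlan_tag(tag)
print(tag.hex(), fields)
```

A tag like this sits between the source MAC address and the original EtherType of an Ethernet frame. VLAN translation, as listed for ION, amounts to rewriting the 12-bit VLAN ID field at a network boundary, while QinQ adds a second, outer tag in front of this one.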