Slides 13

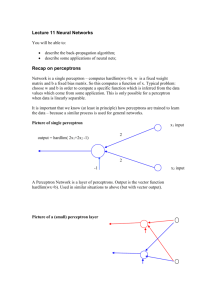

Machine Learning: Connectionist

- McCulloch-Pitts Neuron
- Perceptrons
- Multilayer Networks
- Support Vector Machines
- Feedback Networks
- Hopfield Networks

Uses

- Classification
- Pattern Recognition
- Memory Recall
- Prediction
- Optimization
- Noise Filtering

Artificial Neuron

- Input signals, $x_i$
- Weights, $w_i$
- Activation level, $\sum_i w_i x_i$
- Threshold function, $f$

Neural Networks

- Network Topology
- Learning Algorithm
- Encoding Scheme

McCulloch-Pitts Neuron

- Output is either +1 or -1.
- Computes the weighted sum of its inputs.
- If the weighted sum is >= 0, outputs +1, else -1.
- Can be combined into networks (multilayers)
- Not trained
- Computationally complete

Example

Perceptrons (Rosenblatt)

- Similar to the McCulloch-Pitts neuron
- Single layer
- Hard-limited threshold function: +1 if the weighted sum is >= t, -1 otherwise
- Can use the sign function if a bias is included
- Allows for supervised training (Perceptron Training Algorithm)

Perceptron Training Algorithm

- Adjusts weights by using the difference between the actual output and the expected output in a training example.
- Rule: $\Delta w_i = c\,(d_i - O_i)\,x_i$
- $c$ is the learning rate
- $d_i$ is the expected output
- $O_i$ is the computed output, $\operatorname{sign}(\sum_i w_i x_i)$.
- Example: Matlab nnd4pr function

Perceptron (Cont'd)

- Simple training algorithm
- Not computationally complete
- Counter-example: XOR function
- Requires the problem to be linearly separable
- Threshold function is not continuous (continuity is needed for more sophisticated training algorithms)

Generalized Delta Rule

- Conducive to finer granularity in the error measurement
- Form of gradient descent learning: consider the error surface, the map of the error vs. the weights. The rule takes a step closer to a local minimum by following the gradient.
- Uses the learning parameter, $c$

Generalized Delta Rule (cont'd)

The threshold function must be continuous. We use a sigmoid function, $f(x) = 1/(1 + e^{-\lambda x})$, instead of a hard limit function. The sigmoid function is continuous, but approximates the hard limit function.
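
To make the approximation concrete, here is a minimal Python sketch of the sigmoid and its slope. The function names and the sample values of $\lambda$ are illustrative assumptions, not part of the slides. Note that $1/(1 + e^{-\lambda x})$ ranges over (0, 1), so the step it approaches is a 0/1 step; matching the +1/-1 hard limit used earlier would need a rescaling that is not shown here.

```python
import math


def sigmoid(x, lam=1.0):
    """Logistic threshold f(x) = 1 / (1 + e^(-lam * x))."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def sigmoid_slope(x, lam=1.0):
    """Derivative f'(x) = lam * f(x) * (1 - f(x)); exists for every x."""
    fx = sigmoid(x, lam)
    return lam * fx * (1.0 - fx)


def hard_limit(x):
    """0/1 step (assumed here) that the sigmoid approaches as lam grows."""
    return 1.0 if x >= 0 else 0.0


if __name__ == "__main__":
    # Larger lam squeezes the transition around x = 0, so the curve
    # looks more and more like the step while staying differentiable.
    for lam in (1.0, 5.0, 50.0):
        row = [round(sigmoid(x, lam), 3) for x in (-1.0, -0.1, 0.1, 1.0)]
        print(f"lambda={lam}: {row}")
```

With $\lambda = 1$ the slope reduces to $f(x)(1 - f(x))$, which is the $O_i(1 - O_i)$ factor that appears in the update rule.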
The rule is:

$$\Delta w_{ki} = c\,(d_i - O_i)\,f'\Big(\sum_k w_{ki} x_k\Big)\,x_k = c\,(d_i - O_i)\,O_i\,(1 - O_i)\,x_k$$

where $w_{ki}$ is the weight on the connection from input $k$ to node $i$, and the second form uses the sigmoid with $\lambda = 1$, for which $f'(x) = f(x)(1 - f(x))$.

- Hill-climbing algorithm
- $c$ determines how much the weight changes in a single step

Multilayer Network

Since a single-layer perceptron network is not computationally complete, we allow for a multilayer network where the output of each layer is the input for the next layer (except for the final layer, the output layer). The first layer, whose input comes from the external source, is the input layer. All layers between the input layer and the output layer are called hidden layers.

Training an ML Network

How can we train a multilayer network? Given a training example, the output layer can be trained like a single-layer network by comparing the expected output to the actual output and adjusting the weights on the lines going into the output layer accordingly. But how can the hidden layers (and the input layer) be trained?

Training an ML Network (cont'd)

The solution is to assign a certain amount of blame, delta, to each neuron in a hidden layer (or the input layer) based on its contribution to the total error. The blame is used to adjust the weights. The blame for a node in the hidden layer (or the input layer) is calculated by using the blame values for the next layer.

Backpropagation

To train a multilayer network we use the backpropagation algorithm. First we run the network on a training example. Then we compare the expected output to the actual output to calculate the error. The blame (delta) is attributed to the non-output-layer nodes by working backward, from the output layer to the input layer. Finally the blame is used to adjust the weights on the connections.

Backpropagation (cont'd)

For output nodes:

$$\Delta w_{ki} = c\,(d_i - O_i)\,O_i\,(1 - O_i)\,x_k$$

For hidden and input nodes:

$$\Delta w_{ki} = -c\,O_i\,(1 - O_i)\,\sum_j \big(-\mathrm{delta}_j\,w_{ij}\big)\,x_k$$

where, for an output node $j$,

$$\mathrm{delta}_j = (d_j - O_j)\,O_j\,(1 - O_j)$$

or, for a hidden node $j$,

$$\mathrm{delta}_j = -O_j\,(1 - O_j)\,\sum_k \big(-\mathrm{delta}_k\,w_{jk}\big)$$

Example - NETtalk

NETtalk is a neural net for pronouncing English text.
The input consists of a sliding window of seven characters. Each character may be one of 29 values (26 letters, two punctuation characters, and a space), for a total of 203 input lines. There are 26 output lines (21 phonemes and 5 to encode stress and syllable boundaries). There is a single hidden layer of 80 units.

NETtalk (cont'd)

- Uses backpropagation to train
- Requires many passes through the training set
- Results comparable to ID3 (60% correct)
- The hidden layers serve to abstract information from the input layers

Competitive Learning

- Can be supervised or unsupervised, the latter usually for clustering
- In Winner-Take-All learning for classification, one output node is considered the "winner." The weight vector of the winner is adjusted to bring it closer to the input vector that caused the win.
- Kohonen Rule: $\Delta W^{t} = c\,(X^{t-1} - W^{t-1})$
- Don't need to compute $f(x)$; the weighted sum is sufficient

Kohonen Network

- Can be used to learn prototypes
- Inductive bias in terms of the number of prototypes originally specified
- Start with random prototypes
- Essentially measures the distance between each prototype and the data point to select the winner
- Reinforces the winning node by moving it closer to the input data
- Self-organizing network

Support Vector Machines

- Form of supervised competitive learning
- Classifies data to be in one of two categories by finding a hyperplane (determined by the support vectors) between the positive and negative instances
- Classifies elements by computing the distance from a data point to a hyperplane as an optimization problem
- Requires training and linearly separable data; otherwise, it doesn't converge.
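
The distance computation mentioned in the Support Vector Machines bullets can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the hyperplane `w`, `b` and the sample points are hand-picked hypothetical values, and the optimization that an SVM uses to find the separating hyperplane is not shown.

```python
import math


def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w . x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


def classify(w, b, x):
    """Label a point +1 or -1 by which side of the hyperplane it lies on."""
    return 1 if signed_distance(w, b, x) >= 0 else -1


def margin(w, b, points):
    """Smallest distance from any point to the hyperplane."""
    return min(abs(signed_distance(w, b, x)) for x in points)


if __name__ == "__main__":
    # Hypothetical, linearly separable toy data (not from the slides).
    w, b = (1.0, 1.0), -3.0
    positives = [(3.0, 3.0), (4.0, 2.0)]
    negatives = [(0.0, 0.0), (1.0, 1.0)]
    for x in positives + negatives:
        print(x, classify(w, b, x), round(signed_distance(w, b, x), 3))
    print("margin:", round(margin(w, b, positives + negatives), 3))
```

The points closest to the hyperplane, the ones that set the margin, play the role of the support vectors in this toy setup.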