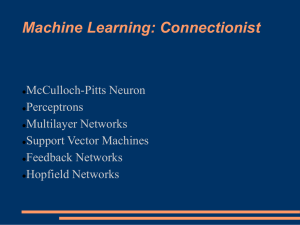

ARTIFICIAL NEURAL NETWORKS: MULTILAYER FEEDFORWARD NEURAL NETWORKS
Module 3

AIM
To familiarize students with the concept of the perceptron.

INSTRUCTIONAL OBJECTIVES
This session is designed to:
1. Differentiate between the single-layer perceptron (SLP) and the multilayer perceptron (MLP).
2. Understand the structure of an MLP.
3. Understand how an MLP works.

LEARNING OUTCOMES
At the end of this session, you should be able to:
1. Know the history of ANNs.
2. Write perceptron learning algorithms.
3. Design neural networks for different kinds of data.

DISADVANTAGES OF SINGLE LAYER PERCEPTRON
• Linear Separability Limitation:
  • An SLP can only solve problems that are linearly separable; it cannot handle data that is not linearly separable.
  • Example: The XOR problem. The class 1 points (0,1) and (1,0) lie on one diagonal of the unit square and the class 0 points (0,0) and (1,1) lie on the other, so no single straight line separates the two classes.
• Limited Representation Power:
  • SLPs have limited representation capabilities and can only represent simple linear functions.
  • Example: In a regression task where the relationship between the input features and the target variable is nonlinear, a single-layer perceptron cannot adequately capture this relationship.
• Inability to Learn Complex Patterns:
  • Single-layer perceptrons cannot learn hierarchical patterns or features from the data.
  • Example: Image recognition often involves learning hierarchical features such as edges, textures, and high-level structures.
• Lack of Generalization:
  • SLPs may overfit the training data and fail to generalize to unseen data.
  • Example: In a text classification task, a single-layer perceptron may memorize the training examples rather than learn the underlying patterns, leading to poor performance on new, unseen documents.
• Limited Task Scope:
  • SLPs are primarily suitable for simple linear classification problems and are not suited to more diverse tasks.
  • Example: For tasks such as natural language processing (NLP) or computer vision, where the data exhibits complex patterns, a single-layer perceptron is too simplistic.

MULTILAYER FEEDFORWARD NETWORKS
• Multilayer feedforward neural networks, also known as multilayer perceptrons (MLPs), are a fundamental type of artificial neural network consisting of an input layer, one or more hidden layers, and an output layer.
• They are built from multiple layers of artificial neurons (units), and information flows in one direction only: from the input layer, through the hidden layers, to the output layer.
• Each connection between neurons has an associated weight that is adjusted during the learning process.

MLP ARCHITECTURE
[Figure: MLP with an input layer, a first hidden layer, a second hidden layer, and an output layer]

COMPONENTS OF MLP
• Input Layer: Receives the initial data or features. Each node in this layer represents one input feature.
• Hidden Layers:
  • These intermediate layers perform most of the network's computation.
  • Each neuron in a hidden layer is connected to every neuron in the previous and subsequent layers.
  • The number of hidden layers and the number of neurons in each layer are design choices that depend on the complexity of the problem.
• Output Layer: Produces the final results or predictions. The number of nodes in this layer depends on the task (for example, one node for binary classification, or one node per class for multi-class classification).

HIDDEN UNIT REPRESENTATIONS
• Trained hidden units can be seen as newly constructed features that make the target concept linearly separable in the transformed space.
• In many real domains, hidden units can be interpreted as representing meaningful features such as vowel detectors or edge detectors.
• However, the hidden layer can also become a distributed representation of the input, in which no individual unit is easily interpretable as a meaningful feature.

CONNECTION AND COMPUTATION
• Each neuron in a layer is connected to every neuron in the subsequent layer (fully connected).
• Each connection has an associated weight, which determines the strength of the connection.
• Each neuron computes a weighted sum of the inputs it receives, applies an activation function to the sum, and passes the result to the next layer.

NEURON COMPUTATION
• Weighted Sum:
  • Each neuron takes the inputs from the previous layer, multiplies them by the corresponding weights, and sums these weighted inputs.
  • The weighted sum for neuron j in layer l is computed as:
    $z_j^{(l)} = \sum_{i} w_{ji}^{(l)} a_i^{(l-1)} + b_j^{(l)}$
    where $a_i^{(l-1)}$ is the output of neuron i in layer l-1, $w_{ji}^{(l)}$ is the weight of the connection from that neuron to neuron j, and $b_j^{(l)}$ is the bias of neuron j.
• Activation Function:
  • After the weighted sum is computed, an activation function f is applied to introduce nonlinearity: $a_j^{(l)} = f(z_j^{(l)})$.
  • Common activation functions include ReLU (Rectified Linear Unit), Sigmoid, Tanh, and others. The choice of activation function affects the network's ability to learn and its performance.

TRAINING AND LEARNING
• Initialization:
  • Initialize the weights and biases of the network, usually randomly.
  • The weights determine the strength of the connections between neurons, and the biases give each neuron an adjustable threshold.
• Forward Propagation:
  • Input data is fed forward through the network, layer by layer.
  • Each neuron computes the weighted sum of its inputs and passes the result through an activation function.
• Error Computation:
  • Calculate the difference between the predicted output and the actual output (the error).
  • Commonly used loss functions include Mean Squared Error and Cross-Entropy.
• Backpropagation:
  • Propagate the error backward through the network to calculate the gradients of the loss function with respect to the weights.
  • The gradients give the direction and magnitude of the weight adjustments needed to reduce the error.
• Gradient Descent:
  • Update the weights using the calculated gradients so as to reduce the error.
  • Optimization algorithms such as Stochastic Gradient Descent (SGD), Adam, and RMSprop adjust the weights incrementally.
• Iterative Process:
  • Repeat these steps for multiple epochs, or until the model converges and the error stops decreasing.

[Figure: Training and learning]

ACTIVATION FUNCTIONS
[Figure]

SOLVE THE PROBLEM USING SIGMOID ACTIVATION FUNCTION – CALCULATE OUTPUTS
[Figure: worked problem]

• A great tool for visualizing networks: http://playground.tensorflow.org/

Self-Assessment Questions
1. A single-layer perceptron can learn ___________.
(a) Linear boundary (b) Non-linear boundary (c) Both (d) Depends on the data
2. The sigmoid activation function is used at the output layer for:
(a) Binary classification (b) Multi-class classification (c) Both (d) None
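The linear-separability limitation listed under the disadvantages of the single-layer perceptron can be checked with a short script. This is a minimal sketch that assumes the classic perceptron learning rule with a step activation (threshold at 0), zero initial weights, a learning rate of 1, and an epoch limit of 100. The function and variable names are illustrative. On AND, which is linearly separable, the rule reaches a pass with zero training errors. On XOR it keeps cycling until the epoch limit.

```python
# Perceptron learning rule with a step activation, applied to two truth tables.

def step(z):
    """Threshold activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Apply the perceptron rule and return (weights, bias, converged).

    samples: list of ((x1, x2), target) pairs with targets in {0, 1}.
    converged is True only if some epoch made no classification mistakes.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for (x1, x2), target in samples:
            y = step(w[0] * x1 + w[1] * x2 + b)
            delta = target - y          # 0 when the prediction is correct
            if delta != 0:
                errors += 1
                # Move the decision boundary toward the misclassified point
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
        if errors == 0:                 # a full pass with no mistakes
            return w, b, True
    return w, b, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("XOR", XOR)]:
    w, b, ok = train_perceptron(data)
    print(name, "converged" if ok else "did not converge", w, b)
```

With zero initial weights, the learning rate only rescales the weights and bias without changing which samples are misclassified, so `lr=1.0` keeps the arithmetic exact.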
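The neuron computation (a weighted sum plus bias, followed by an activation) and the idea that hidden units construct features that make the target linearly separable can be illustrated with a small 2-2-1 network with sigmoid activations that computes XOR. The weights below are hypothetical, hand-picked values chosen so that the first hidden unit behaves like OR and the second like NAND. They were not learned, and a trained network may find a different internal representation.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: maps any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One neuron: weighted sum z = sum_i w_i * a_i + b, then sigmoid activation."""
    z = sum(w * a for w, a in zip(weights, inputs)) + bias
    return sigmoid(z)


def forward(x):
    """2-2-1 MLP with hand-picked (hypothetical) weights that computes XOR."""
    # Hidden layer: two new features built from the raw inputs
    h1 = neuron(x, [20.0, 20.0], -10.0)    # close to 1 if x1 OR x2
    h2 = neuron(x, [-20.0, -20.0], 30.0)   # close to 1 unless x1 AND x2 (NAND)
    # Output layer: a single neuron computing AND of the hidden features
    return neuron([h1, h2], [20.0, 20.0], -30.0)


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    y = forward(x)
    print(x, f"{y:.4f}", "->", round(y))
```

In the hidden-unit space (h1, h2), the four inputs map to roughly (0, 1), (1, 1), (1, 1) and (1, 0). The output neuron therefore only needs one straight line (an AND), which a single neuron can represent.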
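The training steps (initialization, forward propagation, error computation, backpropagation, gradient descent, and iteration over epochs) can be sketched end to end in plain Python. This is a minimal illustration under several assumptions that do not come from the slides: a 2-4-1 network with sigmoid activations in every layer, a squared-error loss, plain per-sample gradient descent, a learning rate of 0.5, 5000 epochs, and a fixed random seed. Convergence on XOR is not guaranteed for every seed, because small networks can get stuck in poor local minima.

```python
import math
import random


def sigmoid(z):
    """Logistic sigmoid, written to avoid overflow in math.exp for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def train_xor_mlp(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-hidden-1 sigmoid MLP on XOR with per-sample gradient descent.

    Returns (predict, losses), where losses[k] is the mean squared error
    over the four XOR patterns after epoch k.
    """
    rng = random.Random(seed)
    # 1. Initialization: small random weights, zero biases
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # 2. Forward propagation: weighted sum + sigmoid, layer by layer
        h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    losses = []
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(x)
            # 3-4. Error and backpropagation for E = 0.5 * (y - t)**2,
            # using sigmoid'(z) = s * (1 - s) where s = sigmoid(z)
            delta_out = (y - t) * y * (1.0 - y)
            delta_hid = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            # 5. Gradient descent: step each parameter against its gradient
            for j in range(hidden):
                W2[j] -= lr * delta_out * h[j]
                W1[j][0] -= lr * delta_hid[j] * x[0]
                W1[j][1] -= lr * delta_hid[j] * x[1]
                b1[j] -= lr * delta_hid[j]
            b2 -= lr * delta_out
        # 6. Iterate: record this epoch's mean squared error
        losses.append(sum((forward(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA))

    def predict(x):
        return forward(x)[1]

    return predict, losses


predict, losses = train_xor_mlp()
print(f"MSE after first epoch: {losses[0]:.4f}, after last epoch: {losses[-1]:.4f}")
for x, t in XOR_DATA:
    print(x, "target", t, "output", round(predict(x), 3))
```

The hidden-layer deltas are computed before any weight is updated, so every gradient in a step uses the same weights. Optimizers such as Adam or RMSprop would change only the update lines; the forward and backward passes stay the same.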