Lecture 11 Neural Networks

advertisement

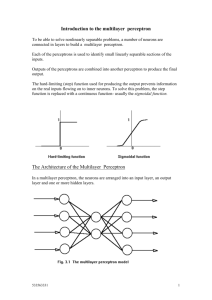

Lecture 11 Neural Networks You will be able to: describe the back-propagation algorithm; describe some applications of neural nets; Recap on perceptrons Network is a single perceptron – computes hardlim(wx+b). w is a fixed weight matrix and b a fixed bias matrix. So this computes a function of x. Typical problem: choose w and b in order to compute a specific function which is inferred from the data values which come from some application. This is only possible for a perceptron when data is linearly separable. It is important that we know (at least in principle) how perceptrons are trained to learn the data – because a similar process is used for general networks. Picture of single perceptron x1 input 2 output = hardlim( 2x1+2x2 -1) 2 -1 A Perceptron Network is a layer of perceptrons. Output is the vector function hardlim(wx+b). Used in similar situations to above (but with vector output). Picture of a (small) perceptron layer x2 input A Multilayer Perceptron Network is a combination of several layers of perceptrons. Picture of multilayer perceptron network This network computes hardlim(w2(hardlim(w1x+b1))+b2) . We can build multilayer perceptrons – and we can rig them to do calculations. There is no obvious training method to use. The definitive work was "Perrceptrons:.."Minsky and Papert (1969) We now want to generalise from the perceptron to other kinds of neuron and we want to be able to train them. Inputs remain pretty much as before (input neurons, weights on links etc. Calculate wx+b and input to the transfer function.) We do however allow other transfer functions. We still have input patterns x with desired output vector tx. We still define error vector e as e =tx – net(x). We give it lots of samples to train on, and if it works fine. If not we try altering the hidden layer size or using other transfer functions. Will this approach work (how do we know a Neural Network will give a good enough answer)? No matter what the mapping we can model it with such a network provided we have enough neurons in the hidden layer. (How do we decide we have enough?). Algorithms for training a neural network. Recall how we trained the perceptron. new w = old w + e x T. new b = old b + e. The weight update rule is sometimes called the delta rule new w = old w + w and in this case w=e x T. There is a more sophisticated version of the delta rule which uses a learning rate (< 1) to control the "step length". Here w= e x T. Altering the learning rate can make your NN learn faster – or become unstable if set too high. It is one of the things you can alter in matlab. The basic idea behind the delta rule is that the weights are tweaked in the direction which reduces the error. You can see this by doing a few calculations for the perceptron. If the network output is bigger than we want then the weight is altered to reduce the network output. If the network output is smaller than we want then the weight changes are such as to increase the network output. In principle the same ideas apply to the more complicated muti-layer feed forward network – but there are some difficulties. The first point is that the effect that a change in weight has on the transfer function value of the neuron it's going into needs further analysis – it is linked to the formula for the transfer function. The formulas for updating become a bit more complicated. x1 w1 x2 w2 wi xi S wn xn Artificial Neuron O F (S ) The second complicating factor is that there is no obvious choice for the error value that we need to correct for a hidden neuron – in other words we have no obvious way of telling how far out the middle layer is. We are fine at the output layer – we can calculate the error exactly as before. The solution to the problem is to use the Back-propagation algorithm in order to change the weights in a way which we hope improves the learning. We first of all choose a set of weights and biases randomly. The start place can affect the outcomes – which is why we sometimes randomise and try again if a net doesn’t train well. [Back-propagation was discovered in the late 60's but ignored until the mid-eighties when it was rediscovered. See "Parallel and distributed processing" Rumelhart and McClelland (1986).] Then we proceed in a similar way to the adapt process for perceptrons: take the first input x and feed it into the network to get the output value net(x) [This is the forward pass]; calculate the error at the output neurons – as before e =tx – net(x); now make an estimate of how much error we attribute to each of the neurons in the hidden layer [this is the backward pass – we go backwards layer by layer]; error gets taken back in bigger amounts along the edges which have more effect. now update all weights and biases using the appropriate rule [this will depend on what transfer function the neuron is using]; move on and process the next input vector in the same way; .... when all input vectors have been processed we have completed one epoch; if the global error target is reached stop – otherwise process another epoch. It is possible to give general formulas – but these depend on sophisticated mathematics. We will not give the specific formulas here. Looking at the picture will help: The global error target is usually measured by sum of squares of the errors or the mean square error. So we are actually just trying to find a minimum point on a graph: (much simplified) picture What can go wrong?? – we can find local minimum not global minimum. Adding a momentum term can fix this. Application to driving a car. ALVINN (Autonomous Land Vehicle In a Neural Network) is a perception system which learns to control the NAVLAB vehicles by watching a person drive. [Pomerleau, 1993 Neural Network Perception for Mobile Robot Guidance, Kluwer] ALVINN's architecture consists of a single hidden layer back-propagation network. The input layer of the network is a 30x32 unit two dimensional "retina" which receives input from the vehicles video camera. Each input unit is fully connected to a layer of five hidden units which are in turn fully connected to a layer of 30 output units. The output layer is a linear representation of the direction the vehicle should travel in order to keep the vehicle on the road. [Very limited driving situation – very simple and no negotiation with traffic] 5 minutes training gives enough data to train the net. Training glitch Necessary to "bodge" the training data to cope with error situations. This was done by "rotating the view" to simulate off line situations. MANIAC [Jochem, Pomerleau and Thorpe, Maniac: a next generation neurally based autonomous road follower, Proc of Int. Conf on Intelligent Autonomous Systems: IAS-3] The use of artificial neural networks in the domain of autonomous vehicle navigation has produced promising results. ALVINN [Pomerleau, 1993] has shown that a neural system can drive a vehicle reliably and safely on many different types of roads, ranging from paved paths to interstate highways. ALVINN could be applied to many different road types – but each version could only deal with one type of road. Maniac uses ALVINN subnets and combines them to be able to cope with multiple road types. Handwriting recognition [Le Cun 1989 Handwritten digit recognition, IEEE Communications Magazine 27 (11):41-46 ] Identifies digit via 16x16 input, 3 hidden layers and distributed output covering 10 digits. Hidden layers have 768,192,30 neurons respectively. Not all edges used – training impossible if they were (idea of feature extraction) Ignored confusing outputs i.e output confusing if two outputs fire. This meant 12% of test data rejected – but what was left was 99% correct . Acceptable to the client. Implemented in hardware and put into use by client. Machine Printed Character Recognition This is one of the applications found on a database of commercial applications held by The Pacific Northwest National Lab in USA at http://www.emsl.pnl.gov:2080/proj/neuron/neural/products/ Here is the entry for Machine Printed Character Recognition Audre Recognition Systems o Application: optical character recognition o Product: Audre Neural Network Caere Corporation o Application: optical character recognition o Product: OmniPage 6.0 and 7.0 Pro for Windows o Product: OmniPage 6.0 Pro for MacOS o Product: AnyFax OCR engine o Product: FaxMaster o Product: WinFax Pro 3.0 (from Delrina Technology Inc.) Electronic Data Publishing, Inc o Application: optical character recognition Synaptics o Application: check reader o Product: VeriFone Oynx NETtalk A discussion about NETtalk can be found at: http://psy.uq.oz.au/CogPsych/acnn96/case7.html The NETtalk network was part of a larger system for mapping English words as text into the corresponding speech sounds. NETtalk was configured and trained in a number of different ways. This study considers only the network that was trained on "continuous informal speech". [Interesting project – appealed to people possibly for other than academic value. (Authors played tape where the learning net sounded pretty baby-like!)] The NETtalk network was a feedforward multi-layer perceptron (MLP) with three layers of units and two layers of weighted connections, trained using the back-propagation algorithm. No feedback mechanism was used. There were 203 units in the input layer, 80 hidden units, and 26 output units (203-8026 MLP). Input to the network represented a sequence of seven consecutive characters from a sample of English text. The task of the network was to map these to a representation of a single phoneme corresponding to the fourth character in the sequence. Phonemes corresponding to a complete sample of text were produced by mapping each of the consecutive sequences of seven characters in the sample to a phoneme. Clearly this requires a few filler characters at the beginning and the end of the text sample. The network learned a training sequence until it was deemed to have generalized sufficiently, after which the connection weights were fixed. [A lot of domain knowledge is needed to be able to set this network up – and was it successful?] Network input encoding Network inputs were required to represent a sequence of seven characters selected from a set of 29, comprising the letters of the Roman alphabet plus three punctuation marks. These were encoded as seven sets of 29 input units, where for each set of 29 units a character was represented as a pattern with one unit "on" and each of the others "off". Network output encoding Network outputs consist of 26 units to represent a single phoneme. Each output unit was used to represent an "articulatory feature" of which most phonemes had about three. The articulatory features correspond to actions or positions Portfolio Exercise Draw a pixel representation of what Alvin might see if the decision is to go straight ahead and represent the output pattern expected. Repeat for the situation where the output is telling Alvin to turn to the right.