Group 10

advertisement

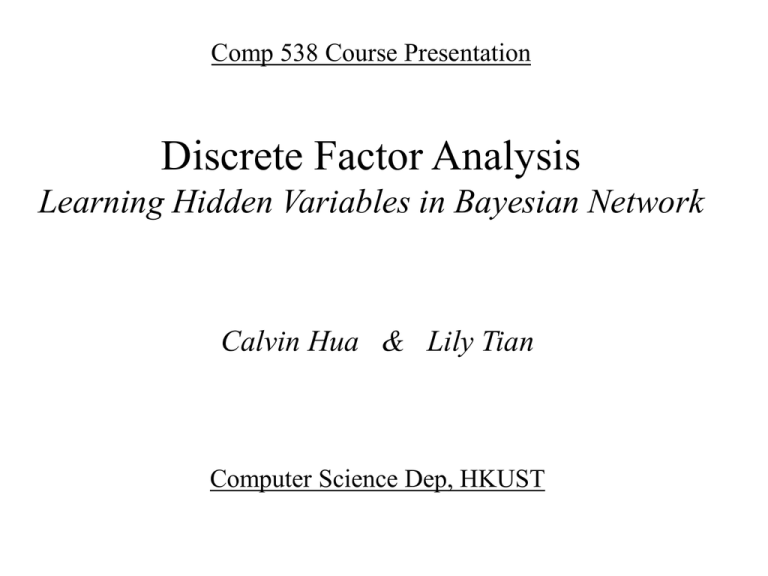

Comp 538 Course Presentation Discrete Factor Analysis Learning Hidden Variables in Bayesian Network Calvin Hua & Lily Tian Computer Science Dep, HKUST Objectives and Outlines • Objective: - Present a space of BN topologies with hidden variables(or factors) and a method for rapidly learning an appropriate topology from data. • Outline: -Motivating example -Methodology * Finding the topology * Constructing the factors - Some Results & Evaluation • Q&A Motivating Example Observable Variables: N •H- Hives •N-Nasal Congestion •C-Cough S H •S-Sore Throat Question: 1. What independencies are encoded? 2. Is the direction of each edge unique? C Motivating Example (Cond.) •More compact V •Inference easier A •Hidden variables used to explain dependencies and independencies H N C S Our Work DATA SET MODEL OUR WORK DO INFERENCE Task: 1. How to find the topology given data(structure)? 2. How to construct the factors(parameters)? Learning Factor Structure • Finding the topology – Decide which observable variables each factor should cover – Decide what factors to use • Constructing the factors – Determine highly probable number of values per factor – Determine highly probable conditional dependencies between factors and observable variables Algorithm-Finding the topology • Step1: – Introduce a link between two variables when they are dependent – Label each link with the probability that those two variables are dependent • Step2: – Extract cliques in the graph • Step3: – Perform a greedy search for the log n cliques Algorithm-Step1 (Cond.) • How to test whether two variables are dependent or not? – Using Chi-Squared Test n r (x i 1 i x)( yi y ) n x y Algorithm-Step2(Cond.) • Principles of extracting cliques Iterating through the variables, in each iteration we do the following : – Adding a variable to an existing clique if the variable is dependent on all other variables in that clique. Algorithm-Step3(Cond.) • Perform a greedy search for log n cliques – By maximizing the sum of the labels represented in the set of cliques. – Labels: the probability that those variables are dependent. Algorithm-Constructing the factors • Initialization • Calculate the most probable assignment of the nth instance, I, to the values of each factor given the first n-1 instances: (1) Choose a random order of factors (2) Iterate over the factors (details later) Algorithm-Constructing the factors (Cond.) • Task: –Choose the number of values for each factor –Choose the conditional probabilities •Note: –FL(Factor Learning) can do so rapidly by approximating the normative Bayesian method for learning hidden variables –The normative way should consider all possible numbers of values and all possible assignments of hidden variable values to the instances in the data set(Cooper & Herskovits, 1992; Cooper, 1994) Algorithm-Step 2 (Cond.) 1. 2. 3. Compute P(Vij | I ,Vi1,Vi2 ,...)for each value, Vij , of the ith factor Calculate the probability of a new value for the ith factor, P(Vio | I ,Vi1,Vi2 ,...) - Label the instance with the factor value with the maximum probability - Update the estimated prior probabilities of the ith factor’s values and the estimated conditional probabilities of the observable values given the factor’s value Note: In all cases where probabilities must be estimated from frequencies we use the following formula: P(Vi aij ) freqij j ni io Some Results and Evaluation Association tested by FL M-Math M P-Physics G P C-Chemistry G-Geology C Note: In this figure, the arc’s denote the dependencies between any pair of variables Some Results and Evaluation 0.7,0.3 A A0 : 0.2,0.8 A : 0.8,0.2 A : 0.2,0.8 1 0 A : 0.8,0.2 1 M P 0.6,0.4 A0 B0 : 0.1,0.8 A B : 0.2,0.8 0 1 A1 B0 : 0.3,0.7 A B : 0 . 9 , 0 . 1 1 1 C A-Analytic ability M2 M2-Memory A0 : 0.2,0.8 A : 0.8,0.2 1 G M-Math P-Physics C-Chemistry G-Geology Some Results and Evaluation • Characteristics of factor structure 1. There are log n hidden variables, called factors. 2. Hidden variables can interact to influence observable variables. 3. It can support polynomial time probabilistic inference. 4. The resulting network captures conditional independencies among the observable variables.