Correlation, Simple and Multiple Regression

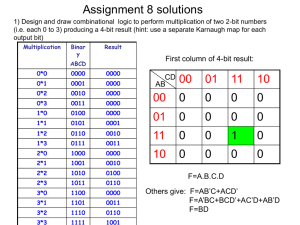

Slide 1: Multiple Regression Analysis: Wrap-Up
More Extensions of MRA

Slide 2: Contents for Today
• Probing interactions in MRA:
  • Reliability and interactions
  • Enter Method vs. Hierarchical
  • Patterns of interactions/effects coefficients
• Polynomial Regression
• Interpretation issues in MRA
• Model Comparisons

Slide 3: Recall our continuous variable interaction
Job satisfaction as a function of Hygiene and Caring Atmosphere.
The regression of job satisfaction on hygiene has a steeper slope when people perceived an (otherwise) caring atmosphere.

Slide 4: Simple slopes of satisfaction on hygiene for three levels of caring atmosphere
[Figure: "Interaction of Care x Basics on Satisfaction". Y-axis: Satisfaction Level (0 to 8). X-axis: Low/High Basics (Low X, High X). Lines: Low value of care, Mean of care, High value of care.]

Plotted values:

| | Low Basic | High Basic |
|---|---|---|
| Low Care | 3.464 | 5.036 |
| Mean of Care | 4.192 | 6.258 |
| High Care | 4.575 | 6.902 |

Slide 5: Developing equations to graph
Using Cohen et al.'s notation:
1) Choose a high, medium, and low value for Z and solve the following:

$$Y' = (A + B_2 Z) + (B_1 + B_3 Z)X$$

Example: a low value of Z [caring atmosphere] might be -1.57 (after centering):

$$Y' = [5.23 + 0.3721(-1.57)] + [0.5165 + 0.0472(-1.57)]X$$

$$Y' = 4.646 + 0.442X$$

2) Next, solve for two reasonable (but extreme) values of X.
Example: doing so for -2 and +2 for basics gives us 3.76 and 5.53.
3) Repeat for the medium and high values of Z.

Slide 6: More on centering…
First, some terms (two continuous variables with an interaction):

$$Y' = \beta_0 + \beta_1 X + \beta_2 Z + \beta_3 XZ$$

Assignment of X and Z is arbitrary.
What do $\beta_1$ and $\beta_2$ represent if $\beta_3 = 0$? What if $\beta_3 \neq 0$?
Full regression equation for our example (centered):

$$Y' = 5.225 + .516X + .372Z + .047XZ$$

where X = hygiene (cpbasic) and Z = caring atmosphere (cpcare).

Slide 7: Even more on centering
We know that centering helps us with multicollinearity issues. Let's examine some other properties, first turning to p. 259 of the reading…
Note the regression equation above graph A, then the one above graph B.

Slide 8: Why does this (eq. slide 6) make sense? Or does it?
Our regression equation:

$$Y' = \beta_0 + \beta_1 X + \beta_2 Z + \beta_3 XZ$$

Rearranging some terms:

$$Y' = \beta_1 X + \beta_3 XZ + \beta_0 + \beta_2 Z$$

Then factor out X:

$$Y' = (\beta_1 + \beta_3 Z)X + (\beta_0 + \beta_2 Z)$$

The right-hand term in parentheses is the intercept; the left-hand term in parentheses is the slope. We then solve this equation at different values of Z.

Slide 9: Since the regression is "symmetric"…
Our regression equation:

$$Y' = \beta_0 + \beta_1 X + \beta_2 Z + \beta_3 XZ$$

We can rearrange the terms differently:

$$Y' = \beta_2 Z + \beta_3 XZ + \beta_0 + \beta_1 X$$

Then factor out Z:

$$Y' = (\beta_2 + \beta_3 X)Z + (\beta_0 + \beta_1 X)$$

The right-hand term in parentheses is the intercept; the left-hand term in parentheses is the slope. We then solve this equation at different values of X.

Slide 10: Are the simple slopes different from 0?
This may be a reasonable question. If so, start from the simple-slope form of the equation:

$$Y' = (\beta_1 + \beta_3 Z)X + (\beta_0 + \beta_2 Z)$$

And solve for a chosen Z value (e.g., one standard deviation below the mean: -1.57):

$$Y' = [5.23 + 0.3721(-1.57)] + [0.5165 + 0.0472(-1.57)]X$$

$$Y' = 4.646 + 0.442X$$

The simple slope is 0.442. Next we need to obtain an error term. The standard error is given by:

$$SE_{B \text{ at } Z} = \sqrt{SE^2_{B_{11}} + 2Z\,\mathrm{cov}_{B_{13}} + Z^2\,SE^2_{B_{33}}}$$

Slide 11: How to solve…
Under "Statistics", request the covariance matrix for the regression coefficients. For our example:

Coefficient Covariances

| | b1 (cpbasic) | b2 (cpcare) | b3 (cbasxcar) |
|---|---|---|---|
| b1 (cpbasic) | 0.00187830 | -0.00053855 | 0.00015193 |
| b2 (cpcare) | -0.00053855 | 0.00047930 | 0.00011203 |
| b3 (cbasxcar) | 0.00015193 | 0.00011203 | 0.00031912 |

Note: I reordered these, as SPSS didn't provide them in order; I also added b1, b2, etc.
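
The standard-error formula and the coefficient covariances above can be checked numerically. What follows is a minimal Python sketch, not part of the original slides. It assumes the rounded coefficients reported in this deck (5.2251, 0.5165, 0.3721, 0.0472) and takes plus or minus 1.5735, one standard deviation of the centered caring-atmosphere score, as the low and high values of Z. The function name `simple_slope` is hypothetical.

```python
import math

# Rounded coefficients of the centered model Y' = b0 + b1*X + b2*Z + b3*XZ,
# as reported in this deck (assumed here, not re-estimated).
b0, b1, b2, b3 = 5.2251, 0.5165, 0.3721, 0.0472

# Entries of the coefficient covariance matrix needed by the formula.
var_b1 = 0.00187830     # variance of b1 (cpbasic)
cov_b1_b3 = 0.00015193  # covariance of b1 and b3
var_b3 = 0.00031912     # variance of b3 (cbasxcar)

SD_Z = 1.5735  # standard deviation of centered caring atmosphere


def simple_slope(z):
    """Return (intercept, slope, SE of slope, t) for Y on X at Z = z."""
    intercept = b0 + b2 * z
    slope = b1 + b3 * z
    se = math.sqrt(var_b1 + 2 * z * cov_b1_b3 + z ** 2 * var_b3)
    return intercept, slope, se, slope / se


for label, z in [("Low", -SD_Z), ("Medium", 0.0), ("High", SD_Z)]:
    intercept, slope, se, t = simple_slope(z)
    print(f"{label:6s} Z={z:8.4f}  intercept={intercept:.4f}  "
          f"slope={slope:.4f}  SE={se:.4f}  t={t:.2f}")
```

Because the inputs are rounded, the results should agree with the hand calculation that follows only to about three decimal places.
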
$$SE_{B \text{ at } Z} = \sqrt{0.0018783 + 2(-1.5735)(0.00015193) + (-1.5735)^2(0.00031912)} = \sqrt{0.002190286} = 0.0468$$

$$t_{1038} = \frac{0.442}{0.0468} = 9.444$$

Slide 12: Simple Slope Table
Simple slopes, intercepts, test statistics and confidence intervals

| Level of Z (z_cpcare) | Value of Z | Slope | Intercept | SE of simple slope | t | p | 95% CI Low | 95% CI High |
|---|---|---|---|---|---|---|---|---|
| Low | -1.5735 | 0.4423 | 4.6396 | 0.0468 | 9.4502 | 0.0000 | 0.3504 | 0.5341 |
| Medium | 0.0000 | 0.5165 | 5.2251 | 0.0433 | 11.9172 | 0.0000 | 0.4314 | 0.6015 |
| High | 1.5735 | 0.5907 | 5.8106 | 0.0561 | 10.5303 | 0.0000 | 0.4806 | 0.7008 |

Compare to the original regression table:

| | B | Std. Error | Beta | t | Sig. | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|
| (Constant) | 5.2251 | 0.0286 | | 182.6190 | 0.0000 | 5.1690 | 5.2813 |
| x_cpbasic | 0.5165 | 0.0433 | 0.3413 | 11.9172 | 0.0000 | 0.4314 | 0.6015 |
| z_cpcare | 0.3721 | 0.0219 | 0.4981 | 16.9960 | 0.0000 | 0.3291 | 0.4151 |
| xz_cbasxcar | 0.0472 | 0.0179 | 0.0650 | 2.6399 | 0.0084 | 0.0121 | 0.0822 |

Slide 13: A visual representation of the regression plane
The centering thing again…

Slide 14: Using SPSS to get simple slopes
When an interaction is present, bx = ? Knowing this, we can "trick" SPSS into computing simple slope test statistics for us.

Uncentered Descriptives

| | Mean | Std. Deviation | N |
|---|---|---|---|
| satis | 5.26 | 1.175 | 1042 |
| pbasic | 4.131144 | .7766683 | 1042 |
| pcare | 5.621881 | 1.5735326 | 1042 |
| basxcar | 24.0355 | 9.20924 | 1042 |

Centered Descriptives

| | Mean | Std. Deviation | N |
|---|---|---|---|
| satis | 5.26 | 1.175 | 1042 |
| cpbasic | -.0007 | .77667 | 1042 |
| cpcare | .0032 | 1.57353 | 1042 |
| cbasxcar | .8107 | 1.61918 | 1042 |

When the predictors are centered, the coefficient for X is the "middle" simple slope (the slope at the mean of Z). So…

Slide 15: Using SPSS to get simple slopes (cont'd)
If we add 1 standard deviation to centered Z, so that Z = 0 falls 1 standard deviation below the mean (and the mean sits 1 standard deviation above zero), the coefficient for X becomes the simple slope at 1 standard deviation below the mean:

    * Shift centered care up by 1 SD so that zero falls 1 SD below the mean.
    compute cpbasic = pbasic - 4.131875.
    compute cpcare = pcare - 5.61866 + 1.573532622.
    compute cbasxcar = cpbasic * cpcare.
    execute.
    TITLE 'regression w/interaction centered'.
    REGRESSION
      /DESCRIPTIVES MEAN STDDEV CORR SIG N
      /MISSING LISTWISE
      /STATISTICS COEFF OUTS CI BCOV R ANOVA COLLIN TOL ZPP
      /CRITERIA=PIN(.05) POUT(.10)
      /NOORIGIN
      /DEPENDENT satis
      /METHOD=ENTER cpbasic cpcare cbasxcar
      /SCATTERPLOT=(*ZRESID ,*ZPRED )
      /SAVE PRED .

This code gets us…

Slide 16: This…

| | B | Std. Error | Beta | t | Sig. | Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 4.6396 | 0.0479 | | 96.7988 | 0.0000 | 4.5456 | 4.7337 |
| cpbasic | 0.4423 | 0.0468 | 0.2922 | 9.4502 | 0.0000 | 0.3504 | 0.5341 |
| cpcare | 0.3721 | 0.0219 | 0.4981 | 16.9960 | 0.0000 | 0.3291 | 0.4151 |
| cbasxcar | 0.0472 | 0.0179 | 0.0610 | 2.6399 | 0.0084 | 0.0121 | 0.0822 |

Dependent Variable: satis

And, setting the zero-point to one standard deviation above the mean (subtracting 1 SD from centered care):

    compute cpcare = pcare - 5.61866 - 1.573532622.

Gives us…

| | B | Std. Error | Beta | t | Sig. | Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 5.8106 | 0.0414 | | 140.3721 | 0.0000 | 5.7294 | 5.8918 |
| cpbasic | 0.5907 | 0.0561 | 0.3903 | 10.5303 | 0.0000 | 0.4806 | 0.7008 |
| cpcare | 0.3721 | 0.0219 | 0.4981 | 16.9960 | 0.0000 | 0.3291 | 0.4151 |
| cbasxcar | 0.0472 | 0.0179 | 0.0976 | 2.6399 | 0.0084 | 0.0121 | 0.0822 |

Slide 17: Choice of levels of Z for simple slopes
• +/- 1 standard deviation
• Range of values
• Meaningful cutoffs
[Figure: "Interaction of Care x Basics on Satisfaction". Y-axis: Satisfaction Level (0 to 8). X-axis: Low/High Basics (Low X, High X). Lines: Low value of care, Mean of care, High value of care.]

Slide 18: Wrapping up CV Interactions
• Interaction term (highest order) is invariant:
  • Assumes all lower-level terms are included
• Upper limits on correlations governed by $r_{xx}$
• Crossing point of regression lines
  • For Hygiene: -10.9 (centered)
  • For Caring atmosphere: -7.9
• If your work involves complicated interaction hypotheses, examine Aiken & West (1991).
• Section 7.7 not covered, but a good discussion
  • Cannot interpret β weights using the method discussed here

Slide 19: Polynomial Regression (10,000 Ft)
[Figure: four panels titled "Polynomial 2nd Power", "Polynomial 3rd Power", "Polynomial 4th Power" and "Polynomial to the 5th Power". Each plots a data series with a fitted polynomial trend line over X from -3 to 3; legend labels include X+X^2+X^3+X^4, Y and Y'.]

Slide 20: Predicting job satisfaction from IQ

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .365 | .134 | .111 | 2.10543 |

a. Predictors: (Constant), IQ. b. Dependent Variable: JobSat.

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 25.952 | 1 | 25.952 | 5.855 | .020 |
| Residual | 168.448 | 38 | 4.433 | | |
| Total | 194.400 | 39 | | | |

a. Predictors: (Constant), IQ. b. Dependent Variable: JobSat.

Slide 21: Continued

| | B | Std. Error | Beta | t | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | -0.8432 | 3.1763 | | -0.2655 | 0.7921 | -7.2734 | 5.5869 |
| IQ | 0.0720 | 0.0297 | 0.3654 | 2.4196 | 0.0204 | 0.0118 | 0.1322 |

Dependent Variable: JobSat

It's all good; let's inspect our plot of standardized residuals against standardized predicted values.

Slide 22: Ooops!

Slide 23: Next step…
• Center X
• Square X
• Add X² to prediction equation

    * Center IQ at its mean, then square the centered score.
    compute c_IQ=IQ-106.20.
    compute IQsq=c_IQ**2.
    execute.
    * The linear term entered is uncentered IQ, matching the output tables.
    REGRESSION
      /DESCRIPTIVES MEAN STDDEV CORR SIG N
      /MISSING LISTWISE
      /STATISTICS COEFF OUTS CI R ANOVA COLLIN TOL ZPP
      /CRITERIA=PIN(.05) POUT(.10)
      /NOORIGIN
      /DEPENDENT JobSat
      /METHOD=ENTER IQ IQsq
      /SCATTERPLOT=(*ZRESID ,*ZPRED ) .

Slide 24: Results

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .944 | .891 | .886 | .75534 |

a. Predictors: (Constant), IQsq, IQ. b.
Dependent Variable: JobSat.

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 173.290 | 2 | 86.645 | 151.867 | .000 |
| Residual | 21.110 | 37 | .571 | | |
| Total | 194.400 | 39 | | | |

a. Predictors: (Constant), IQsq, IQ. b. Dependent Variable: JobSat.

Slide 25: And both predictors are significant

| | B | Std. Error | Beta | t | Sig. | Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | -6.5099 | 1.1928 | | -5.4575 | 0.0000 | -8.9269 | -4.0930 |
| IQ | 0.1455 | 0.0116 | 0.7385 | 12.5294 | 0.0000 | 0.1219 | 0.1690 |
| IQsq | -0.0171 | 0.0011 | -0.9472 | -16.0700 | 0.0000 | -0.0192 | -0.0149 |

Slide 26: Interpretation Issues & Model Comparison
Linearity vs. Nonlinearity
• Nonlinear effects well-established?
• Replicability of nonlinearity
• Degree of nonlinearity
Interpretation Issues
• Regression coefficients are context specific
• Assumption that we are testing "the" model
• β-weights vs. b-weights
• Replication
• Strength of relationship
Model Comparison
• We may sometimes wish to determine whether one model is a significantly better predictor than another (where different variables are used)
• E.g., which of two sets of predictors best predicts relapse?

Slide 27: Strength of relationship: My test is sooo valid!
[Figure: "Example of 'Artificial' Correlation". X-axis: Predictor (0 to 10). Y-axis: Outcome (0 to 10). Series Y with a linear trend line; R² = 0.4499.]
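
Returning to the quadratic model from slide 25: the fitted equation can be evaluated directly to see the shape of the curve. The sketch below is an illustration in Python, not part of the original slides. It assumes, following the COMPUTE and METHOD=ENTER statements on slide 23, that IQsq is the squared centered score (IQ - 106.20)² and that the linear term is uncentered IQ. The coefficients are the rounded values from the coefficient table, and the names `predicted_jobsat` and `iq_at_peak` are hypothetical.

```python
# Rounded coefficients from the quadratic model's coefficient table.
B0, B_IQ, B_IQSQ = -6.5099, 0.1455, -0.0171
IQ_MEAN = 106.20  # centering constant used in the COMPUTE statement


def predicted_jobsat(iq):
    """Predicted JobSat, assuming IQsq = (IQ - 106.20)**2 and uncentered IQ."""
    return B0 + B_IQ * iq + B_IQSQ * (iq - IQ_MEAN) ** 2


# Setting dY'/dIQ = B_IQ + 2 * B_IQSQ * (IQ - IQ_MEAN) to zero gives the
# IQ value at which the fitted curve turns over.
iq_at_peak = IQ_MEAN - B_IQ / (2 * B_IQSQ)

for iq in (90.0, 100.0, iq_at_peak, 120.0):
    print(f"IQ = {iq:6.1f}  predicted JobSat = {predicted_jobsat(iq):.2f}")
```

With these rounded coefficients the turning point falls a few IQ points above the centering value, and predicted job satisfaction falls off on either side of it, which is the curvature the negative squared term captures.
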